Score Your Way to Clinical Reasoning Excellence: SCALENEo Online Serious Game in Physiotherapy Education

Abstract

1. Introduction

2. Materials and Methods

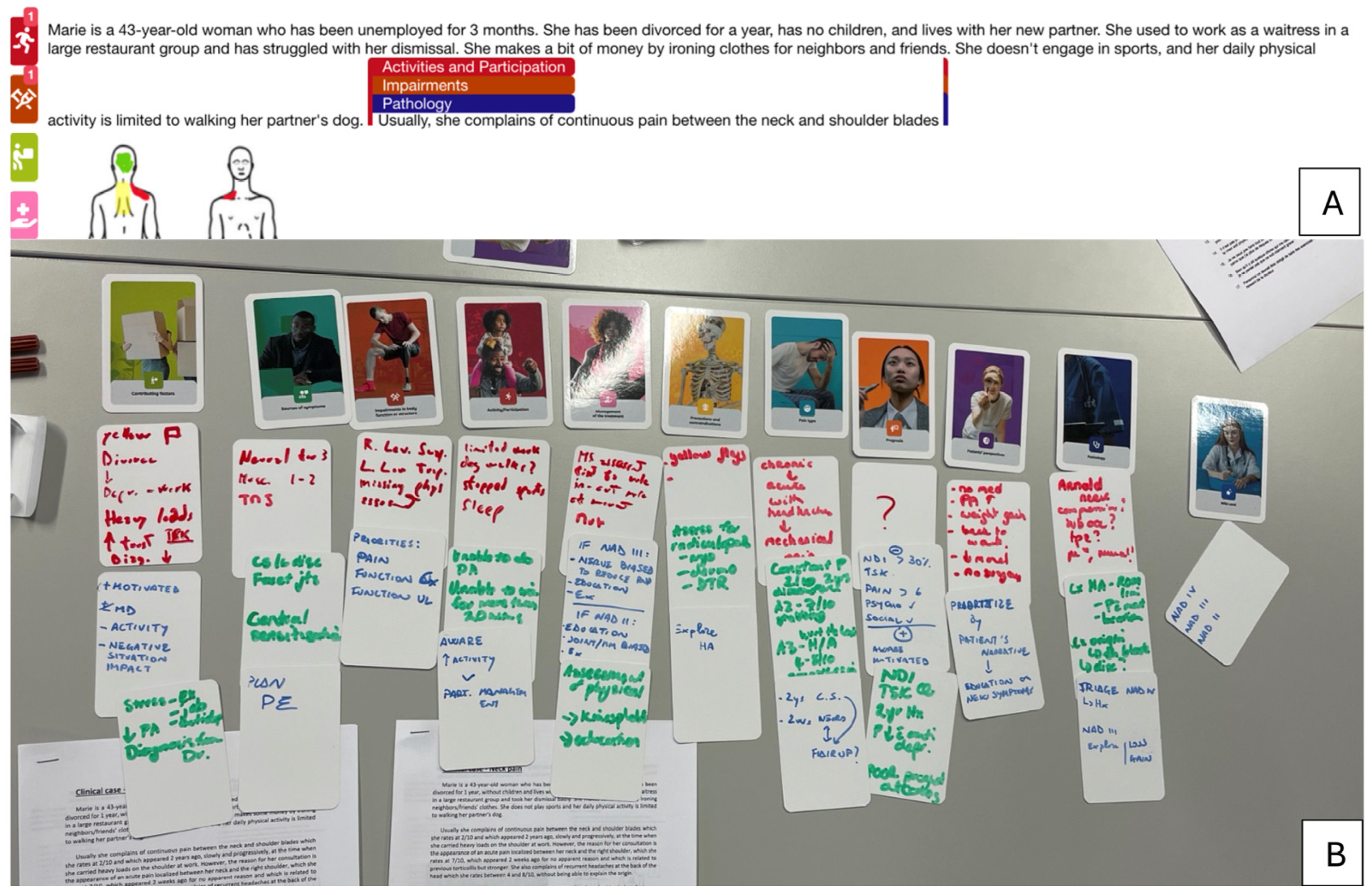

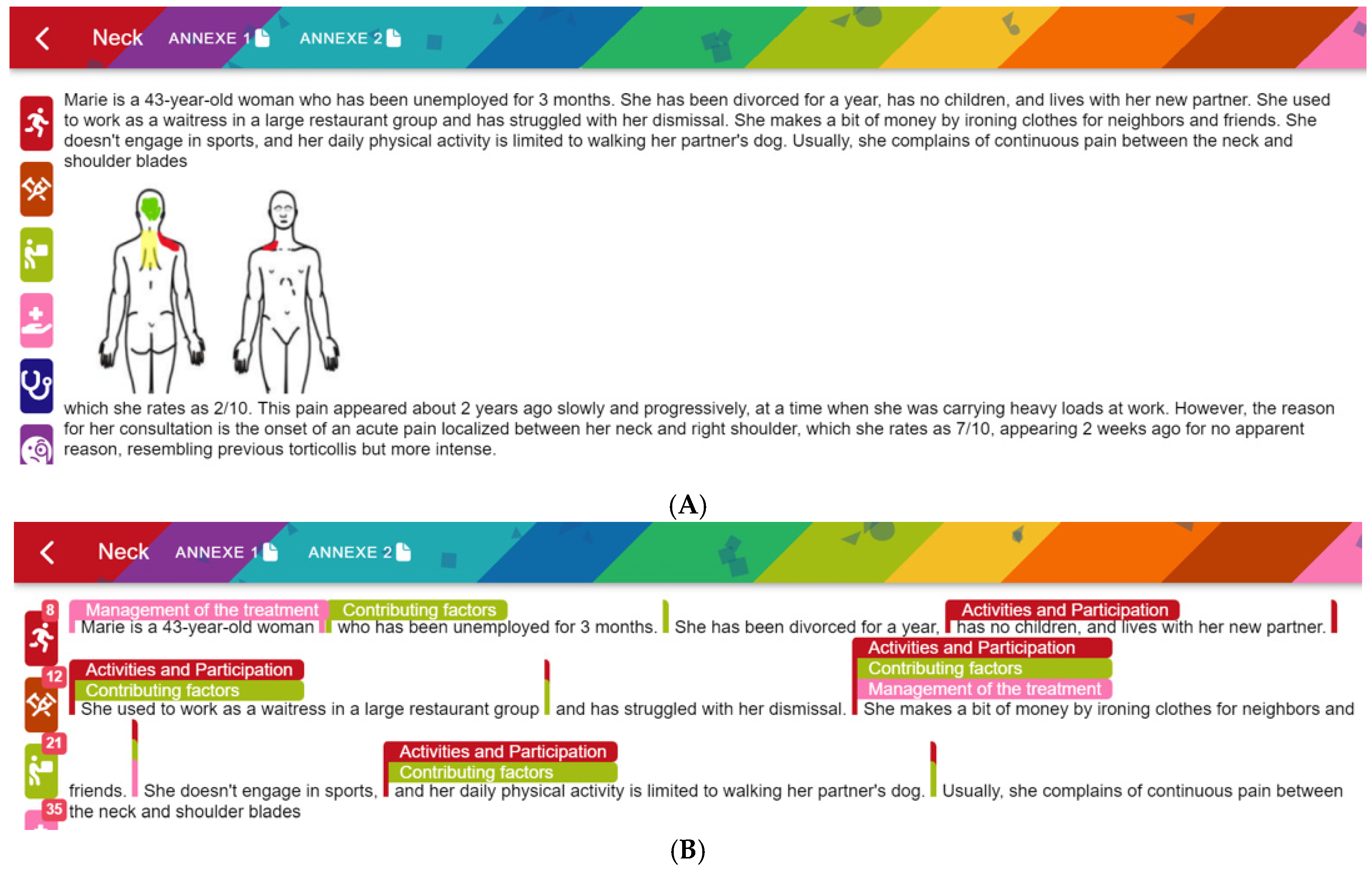

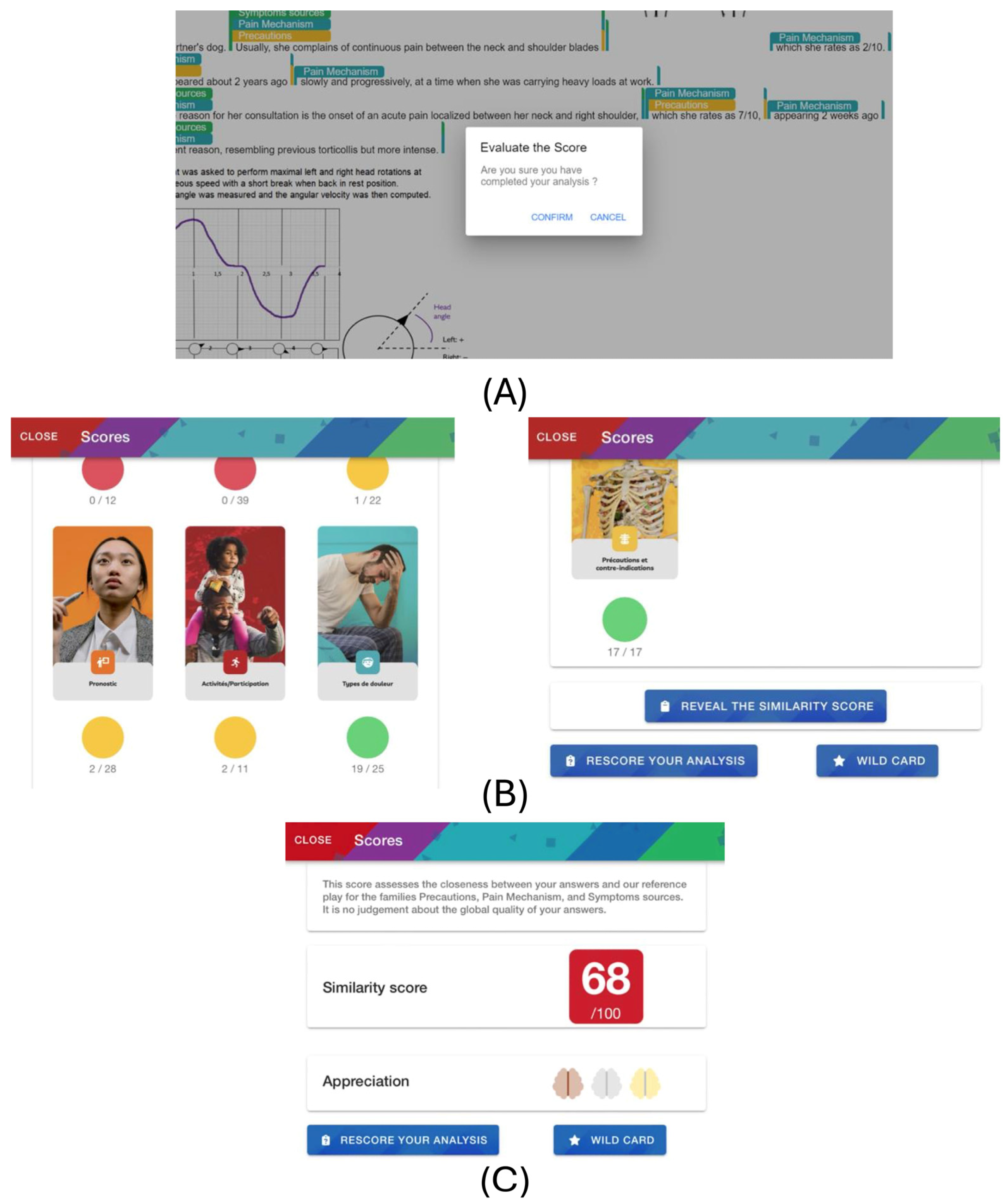

2.1. Online Game

2.2. Classifying Card Elements

- Green if the game card and the reference card are both empty, or if all the significant elements are found and more than 50% of the reference card elements are correctly identified. The 50% threshold is a parameter that could be lowered or raised, for example according to the players' experience.

- Yellow if all the significant elements are found and the percentage of correctly identified items is below the threshold of 50%.

- Red if significant elements have been missed or classified in the wrong family card, or incorrectly marked as relevant.
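The three color rules above can be sketched in Python. This is a minimal illustration under stated assumptions, not the game's actual implementation: the `Card` class, the `classify_card` function, and the representation of a card as a set of elements plus a subset flagged as significant are hypothetical. The sketch reads "missed", "wrong family card", and "incorrectly marked" as any mismatch between the flagged sets of the two cards. The rules do not say what happens at exactly 50%, or when only the reference card is empty, so the sketch treats both cases as yellow; this is also an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Card:
    """Hypothetical card model: all elements, plus those flagged significant."""
    elements: frozenset = frozenset()
    significant: frozenset = frozenset()


def classify_card(game: Card, reference: Card, threshold: float = 0.5) -> str:
    """Return 'green', 'yellow', or 'red' for one game card."""
    # Green: both cards are empty.
    if not game.elements and not reference.elements:
        return "green"

    # Red: a significant element is missing from this game card (missed or
    # placed in another family card), or an element is flagged in the game
    # card but not in the reference card.
    missed = reference.significant - game.significant
    wrongly_flagged = game.significant - reference.significant
    if missed or wrongly_flagged:
        return "red"

    # Fraction of reference elements that the player identified.
    # Assumption: an empty reference card gives a fraction of 0.
    if reference.elements:
        found = len(game.elements & reference.elements)
        fraction = found / len(reference.elements)
    else:
        fraction = 0.0

    # Green only when strictly above the threshold (assumption for ties).
    return "green" if fraction > threshold else "yellow"


reference = Card(frozenset({"a", "b", "c", "d"}), frozenset({"a"}))
game = Card(frozenset({"a", "b", "c"}), frozenset({"a"}))
print(classify_card(game, reference))
```

Passing a different `threshold` value mirrors the remark that the 50% limit is a tunable parameter, for instance a higher value for experienced players.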

2.3. Total Score

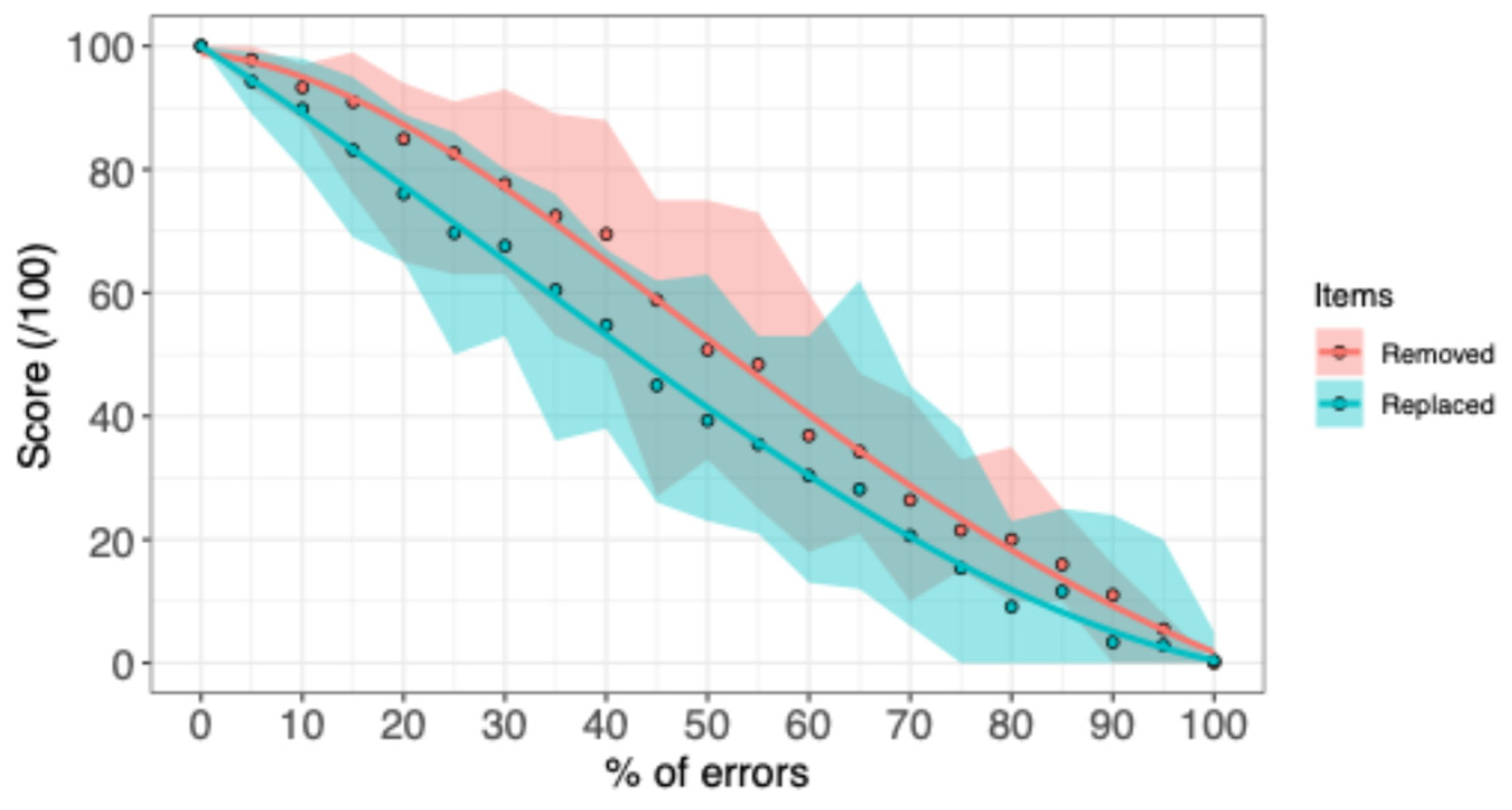

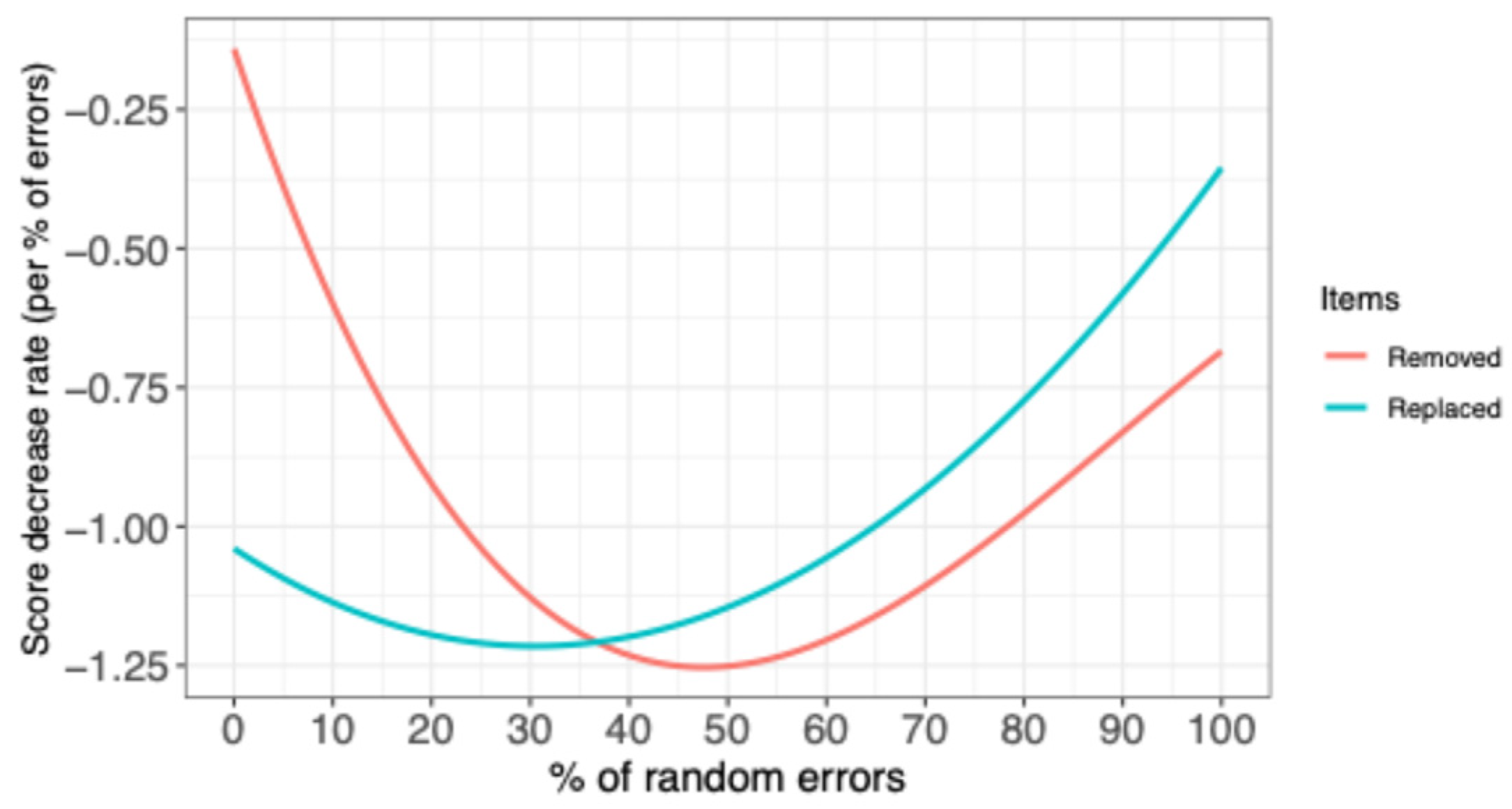

2.4. Score Evolution vs. Number of Errors

3. Results

4. Discussion

4.1. Feedback in CR Assessment

4.2. Scoring CR

4.3. Limitations

4.4. Perspectives

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| CR | Clinical Reasoning |
| SCALENEo | Smart ClinicAL rEasoning iN physiothErapy |
| SCT | Script Concordance Test |
| SG | Serious Game |

Appendix A

| Error (%) | Average Score | Score Standard Deviation | Min | Max |
|---|---|---|---|---|
| 0 | 100 | 0 | 100 | 100 |
| 5 | 97.80 | 2.07 | 93 | 100 |
| 10 | 93.30 | 2.47 | 88 | 97 |
| 15 | 90.95 | 5.38 | 76 | 99 |
| 20 | 84.95 | 7.54 | 65 | 94 |
| 25 | 82.65 | 6.70 | 63 | 91 |
| 30 | 77.65 | 7.88 | 63 | 93 |
| 35 | 72.45 | 9.26 | 53 | 89 |
| 40 | 69.50 | 9.49 | 49 | 88 |
| 45 | 58.80 | 11.28 | 27 | 75 |
| 50 | 50.75 | 11.65 | 33 | 75 |
| 55 | 48.40 | 14.20 | 25 | 73 |
| 60 | 36.85 | 11.54 | 18 | 60 |
| 65 | 34.25 | 8.42 | 21 | 47 |
| 70 | 26.40 | 7.69 | 10 | 43 |
| 75 | 21.50 | 4.49 | 15 | 33 |
| 80 | 20 | 6.01 | 10 | 35 |
| 85 | 15.95 | 4.33 | 10 | 25 |
| 90 | 11 | 4.42 | 0 | 16 |
| 95 | 5.50 | 3.10 | 0 | 8 |
| 100 | 0 | 0 | 0 | 0 |

| Error (%) | Average Score | Score Standard Deviation | Min | Max |
|---|---|---|---|---|
| 0 | 100 | 0 | 100 | 100 |
| 5 | 94.25 | 2.71 | 89 | 99 |
| 10 | 89.80 | 4.94 | 80 | 98 |
| 15 | 83.15 | 6.83 | 69 | 95 |
| 20 | 76.05 | 7.97 | 65 | 89 |
| 25 | 69.70 | 8.77 | 50 | 86 |
| 30 | 67.60 | 6.68 | 53 | 80 |
| 35 | 60.45 | 9.83 | 36 | 76 |
| 40 | 54.75 | 8.43 | 38 | 67 |
| 45 | 45.00 | 9.88 | 26 | 62 |
| 50 | 39.30 | 10.42 | 23 | 63 |
| 55 | 35.45 | 8.99 | 21 | 53 |
| 60 | 30.35 | 10.20 | 13 | 53 |
| 65 | 28.15 | 11.88 | 12 | 62 |
| 70 | 20.60 | 10.42 | 6 | 45 |
| 75 | 15.35 | 8.59 | 0 | 38 |
| 80 | 9.10 | 5.25 | 0 | 23 |
| 85 | 11.60 | 7.35 | 0 | 25 |
| 90 | 3.35 | 6.11 | 0 | 24 |
| 95 | 2.85 | 5.21 | 0 | 20 |
| 100 | 0.40 | 1.19 | 0 | 5 |

| Relevant/Significant Item in Game Card | Relevant/Significant Item in Reference Card: Present | Relevant/Significant Item in Reference Card: Not Present |
|---|---|---|
| Present | good/bad | wrong |
| Not present | missed | empty |
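The four cells of the table above map onto plain set operations. The following Python sketch is only an illustration: the function name `compare_items` and the representation of each card as a set of item labels are assumptions, and the sketch does not try to separate "good" from "bad" within the matched cell, since the table alone does not define that distinction.

```python
def compare_items(game_items: set, reference_items: set) -> dict:
    """Sort items into the cells of the game-card vs. reference-card table."""
    return {
        # Present in both cards: the "good/bad" cell.
        "matched": game_items & reference_items,
        # Present in the game card only: the "wrong" cell.
        "wrong": game_items - reference_items,
        # Present in the reference card only: the "missed" cell.
        "missed": reference_items - game_items,
        # Neither card holds any item: the "empty" cell.
        "empty": not game_items and not reference_items,
    }


cells = compare_items({"a", "b"}, {"b", "c"})
print(sorted(cells["matched"]), sorted(cells["wrong"]), sorted(cells["missed"]))
```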

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hage, R.; Dierick, F.; Da Natividade, J.; Daniau, S.; Estievenart, W.; Leteneur, S.; Servotte, J.-C.; Jones, M.A.; Buisseret, F. Score Your Way to Clinical Reasoning Excellence: SCALENEo Online Serious Game in Physiotherapy Education. Educ. Sci. 2025, 15, 1077. https://doi.org/10.3390/educsci15081077