Abstract

This paper develops the argument that, in the application of AI to improve the system of governance for higher education, machine learning will be more effective in some areas than others. To make that assertion more systematic, a classificatory taxonomy of types of decisions is necessary. This paper draws upon the classification of decision processes as either symbolic or sub-symbolic. Symbolic approaches focus on whole system design and emphasise logical coherence across sub-systems, while sub-symbolic approaches emphasise localised decision making with distributed engagement, at the expense of overall coherence. AI, especially generative AI, is argued to be best suited to working at the sub-symbolic level, although there are exceptions when discriminative AI systems are designed symbolically. The paper then uses Beer’s Viable System Model to identify whether the decisions necessary for viability are best approached symbolically or sub-symbolically. The need for leadership to recognise when a sub-symbolic system is failing and requires symbolic intervention is a specific case where human intervention may be necessary to override the conclusions of an AI system. The paper presents an initial analysis of which types of AI would support which functions of governance best, and explains why ultimate control must always rest with human leaders.

1. Introduction

In the last five years, artificial intelligence (AI) has received much attention from university scholars, national governments, and international organisations. Most publications focus on the use of AI in teaching and learning, and on the extent to which it will disrupt the assessment systems of higher education (Chen et al., 2020; Chu et al., 2022; Crompton & Burke, 2023; Chang et al., 2024; Huesca et al., 2025). There is also a growing literature on the governance of AI in higher education. Less has been written about the role of AI in higher education governance, although some general discussions of AI and education governance have appeared (see, e.g., Filgueiras, 2024).

Governance is very important in higher education, as it provides the context in which stakeholders such as students, academics, and administrators operate. The Chartered Governance Institute UK & Ireland describes governance as “the framework by which organisations are directed and controlled. It identifies who can make decisions, who has the authority to act on behalf of the organisation and who is accountable for how an organisation and its people behave and perform” (Chartered Governance Institute UK & Ireland, 2024, para. 1). It also identifies key components of corporate management, including transparency, responsibility, risk management, and ethical conduct. It is on the basis of these principles of good governance that we will evaluate the role of AI in higher education governance.

There has been a growing interest in the promotion of good governance, with initiatives such as the Governance Assessment Framework (GAF) (UN-Habitat, 2020). The publication introducing the GAF suggested that the framework could be extended to various levels of governance, beyond the original case studies, where the focus was on metropolitan regions. However, time and resources have not yet been made available to develop a framework that is specific to the higher education sector. Discussion of the decisions to be made to secure the good governance of higher education must, therefore, proceed at a rather speculative and abstract level.

In this paper, a first attempt is made to classify the kinds of problems that can best be addressed with the different forms of AI, as well as the kinds of problems that need to be addressed in the pursuit of better governance in higher education. Although the matching of the type of support provided by AI to the problems faced in the governance of higher education must remain a political issue and not a technical one, it is our conviction that this analysis is the necessary starting point for evaluating the potential impact of AI on the governance of higher education.

This paper is guided by the following research questions: What is the nature of AI, and how does it make decisions? What are the possible areas of application of AI in higher education governance? Under which conditions could the adoption of AI lead to better governance, and under which conditions could it lead to worse governance? To address these questions, we use Donald Broadbent’s (1993) classification of decisions to identify whether decision-making is concentrated in named individuals or groups or is diffused more widely, and Stafford Beer’s (2009) Viable System Model to identify the types of decisions that any large and successful institution must make. In this way, we identify a comprehensive range of governance processes where AI could be introduced. We also identify a very limited range of processes that are unlikely to be improved by AI. This article is intended to be interpretive in nature. It uses documentary analysis as its basic approach, supplemented by secondary analysis of the literature. Below, we provide a detailed account of the materials and methods.

2. Materials and Methods

Documents are an established, appealing source of data in interpretive research. Like other analytical methods, documentary analysis scrutinises data to elicit meaning, gain understanding, and develop situated knowledge (Corbin & Strauss, 2008). Atkinson and Coffey (2011) argue that while it is tempting for the social scientist to treat documentary materials as secondary data (to help cross-check observational or oral accounts, or to provide some descriptive and historical context), documents should be treated as data in their own right. They should be given due weight and appropriate analytical attention. In contemporary times, as Coffey (2013) points out, we should also include electronic and digital resources among the ways in which documentary materials are produced and consumed. In this study, documents relating to the definition and promotion of good governance were collected online, specifically from the websites of the Chartered Governance Institute UK & Ireland and of UN-Habitat.

In this paper, the literature serves as another important source of data. Although the findings in the literature have already been processed by their original authors, they can serve as ingredients for a new line of research. This is because secondary analysis is not merely descriptive but constructive. The idea is shared by Maxwell (2009, p. 223): ‘It incorporates pieces that are borrowed from elsewhere, but the structure, the overall coherence, is something that you build, not something that exists ready-made’ (original emphasis). Metaphorically, Becker (2007, p. 142) likens the use of literature to a woodworking project:

Other people have worked on your problem or problems related to it and have made some of the pieces you need. You just have to fit them in where they belong. Like the woodworker, you leave space, when you make your portion of the argument, for the other parts you know you can get. You do that, that is, if you know that they are there to use. (original emphasis)

The analogy from Becker points to the strategic use of literature (e.g., as ‘modules’) in constructing arguments in our own paper. It also stresses the intrinsic value of literature as an essential component of research rather than just the basis for it. In this paper, existing literature is selected and used based on its potential contribution to understanding the nature of AI and the processes of institutional governance.

Overall, this is a conceptual analysis of how AI might be used in higher education governance. To provide a mental map, we start by recognising that there are various forms of AI and modes of deployment. We then discuss how the different forms match certain types of decisions, drawing upon the work of Broadbent. Afterwards, we use the Viable System Model to identify which types of decisions are needed for the maintenance of a higher education institution. In these ways, we develop an interpretive process that allows for understanding how to match the forms of AI to the types of decisions and the implications for higher education institutions.

3. Types of AI and Modes of Deployment

AI has been employed for technical purposes for a very long time (Cristianini, 2016; Filgueiras, 2024). Table 1 summarises the key types and their modes of deployment. The various modes of deployment of AI are described in the text.

Table 1.

AI and modes of deployment.

Initially, the most successful applications employed discriminative or conditional models. These models are used in cases where there is a clear criterion for classifying cases, and where a set of cases that have already been decided by human operators is available. AI using a discriminative model might be shown a number of images of a product at the end of the production line, together with information as to whether a human inspector had passed the product or failed it at quality control (examining for surface blemishes after a polishing process, for example). Although such processes are not without problems, AI of this sort has been successfully employed in identifying pre-cancerous cells, selecting areas of interest in the sky to search for pulsars or exoplanets, in visual recognition programs, as well as a host of quality control applications. In the higher education context, there are many areas of decision-making that might be improved by the application of such techniques. For example, selection procedures for admitting students or appointing members of faculty would appear well-suited to such applications, where a preliminary screening of applicants could be supported by AI. Properly deployed and well-trained, such systems can greatly improve the performance of human decision-makers, both in the speed with which they can make decisions and in their overall consistency.

There are two important caveats to take into account in such cases. The first is that such systems can never perform better than the data used to train them. If there are biases in the original data, they will remain in the operation of the system as a whole (Vicente & Matute, 2023). The second is that such models can produce a categorical decision (such as pass/fail in quality assurance) taking multiple variables into account, but it may not be clear to the users of the system exactly how that decision has been arrived at; this works against transparency, which is one of the principles of good governance.

In the worst cases, those two shortcomings may work together to produce meaningless results. Bergstrom and West (2017) describe a study that purports to identify a disposition toward criminality from certain facial features, derived from a comparison of photographs of criminals and of the general population. Bergstrom and West argue that what the AI system concerned is actually doing is distinguishing between people who are smiling and people who are not. Whether or not Bergstrom and West are correct in this specific case, the danger is clear when, as in this study, the photographs of criminals were taken from standard ID photographs while the other photographs were taken at random from the internet: people are less likely to be smiling in passport and other ID photos than in photos in general.

The lesson to be drawn from these considerations is that AI is unlikely to be a panacea, even in cases where its application might be appropriate. Above all, it is not guaranteed to be impartial simply because it is a machine. Using AI to sift applicants for a position is unlikely to be optimal if the system is trained on data from previous appointments where the decisions were biased. Concern that AI systems may simply perpetuate gender or racial discrimination is well-founded.
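To make the first caveat concrete, the following Python sketch trains about the simplest possible discriminative model on a set of past screening decisions. Everything in it is hypothetical: the records, the two-group split, and the `train` function, which stands in for a real classifier by memorising the majority decision made for each combination of features. Because the invented historical decisions shortlisted qualified applicants from group B less often, the model learns to reject them.

```python
from collections import Counter, defaultdict

# Hypothetical historical screening records: (qualified, group, shortlisted).
# The invented past decisions are biased: qualified applicants from group "B"
# were shortlisted far less often than equally qualified applicants from "A".
HISTORY = (
    [(True, "A", True)] * 90 + [(True, "A", False)] * 10
    + [(True, "B", True)] * 40 + [(True, "B", False)] * 60
    + [(False, "A", False)] * 50 + [(False, "B", False)] * 50
)


def train(records):
    """Toy discriminative model: for each (qualified, group) combination,
    predict whichever decision human screeners made most often."""
    counts = defaultdict(Counter)
    for qualified, group, shortlisted in records:
        counts[(qualified, group)][shortlisted] += 1
    return {features: c.most_common(1)[0][0] for features, c in counts.items()}


model = train(HISTORY)
print(model[(True, "A")])  # True: qualified group-A applicants are shortlisted
print(model[(True, "B")])  # False: the historical bias is reproduced
```

A real classifier would generalise rather than memorise, but the lesson carries over: the model has no notion of what a fair decision would be, only of what past decisions were. Dropping the group column would not necessarily help, since other features can act as proxies for it.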

However, the cases that we have been describing here are relatively straightforward: where discriminative models are employed, training is supervised by humans, there are clear criteria for the classification involved, and success or failure is fairly apparent. The recent rise in interest in AI has mainly focused on the application of generative models, or generative AI. Generative AI is designed to identify patterns in its training data and then generate new text, images, or other output that follows similar patterns. There is no clear criterion of success, and success or failure is not immediately apparent. Since generative AI systems are essentially tasked with creating something new on each occasion, they are prone to occasional hallucination and are not completely reliable. For example, when an AI system using a large language model creates a sentence, its goal is to produce a pattern of words that resembles the many patterns of words it has used as training data (Domingos, 2015). It is not its goal to produce a true statement. Consequently, generative AI is highly likely to produce clichés, and some of those clichés may not be true. This shortcoming of generative AI is in addition to the basic features that it shares with discriminative AI, namely lack of transparency and possible bias.
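The point that a language model aims at familiar patterns rather than at truth can be illustrated with a drastically simplified stand-in: a bigram model, which only records which word has followed which in its training text. Modern large language models use neural networks conditioned on far longer contexts, so this is an analogy, not a description of how they work. The two-sentence corpus below is invented for illustration.

```python
from collections import defaultdict

# Hypothetical two-sentence training corpus.
CORPUS = ["the library opens at nine", "the exam starts at ten"]


def bigram_table(sentences):
    """Record which words have been seen following each word.
    "<s>" and "</s>" mark the start and end of a sentence."""
    table = defaultdict(set)
    for sentence in sentences:
        words = ["<s>"] + sentence.split() + ["</s>"]
        for current, following in zip(words, words[1:]):
            table[current].add(following)
    return table


def generate_all(table, max_len=10):
    """Enumerate every sentence the bigram model could produce,
    following only word transitions that occurred in training."""
    results = []

    def walk(path):
        if len(path) > max_len:
            return
        for nxt in sorted(table[path[-1]]):
            if nxt == "</s>":
                results.append(" ".join(path[1:]))
            else:
                walk(path + [nxt])

    walk(["<s>"])
    return results


outputs = generate_all(bigram_table(CORPUS))
for sentence in outputs:
    print(sentence, "(seen in training)" if sentence in CORPUS else "(new combination)")
```

Every word pair in every output was seen during training, so each sentence is locally fluent, yet two of the four outputs, such as “the library opens at ten”, are recombinations that nothing in the corpus supports. Nothing in the model distinguishes these from the sentences it was actually given.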

There are many operations where generative AI can be useful. Reviewing and summarising specific policies employed by many institutions, preparing quasi-legal documents such as the terms of reference and constitutions of specialised committees, and preparing audience-friendly text for websites could all be assisted by generative AI. However, unless and until the issues of reliability can be resolved, generative AI has huge potential for causing institutions reputational damage and should be employed only in circumstances where human supervision can be ensured.

This section has highlighted the negative aspects of AI. This focus is appropriate under a precautionary principle, since AI carries the possibility of disaster when it is applied badly. But it is worth emphasising that there are many cases where AI, especially discriminative AI, has been used with great success to improve decision-making. It works best where there are clear criteria for the decisions to be made, and where success and failure are also distinct.

That leads us to the conclusion that there are some types of decisions to which AI is particularly well-suited, and that good governance might best be served by identifying those decisions and using AI most intensively, or exclusively, where it is likely to be successful. To this purpose, we move to look at a classification of decisions offered by the British psychologist Donald Broadbent.

4. Symbolic and Sub-Symbolic Decision-Making Systems

Writing at a time when computers were only just beginning to have an impact on the way that institutions functioned, and when computers and the internet were being introduced into higher education institutions, Broadbent was prescient in identifying two kinds of decision-making systems, which he described as symbolic and sub-symbolic. He argued that this was a difference in the kind of decisions to be made, and that the difference would be present whether the decisions were made by human beings or by machines. In other words, both kinds of decision-making system must be in place for an institution to function, and this holds regardless of whether the decisions are made by people or by computers.

Broadbent illustrated the difference with reference to the design of a fire alarm system. The physical implementation of the fire alarm system could be designed by an individual engineer. That engineer would have a clear synoptic representation of the whole system in mind; the approach would be symbolic. From that overall idea of the system, the location of bells and klaxons, of buttons to raise the alarm, of signs on doorways to indicate escape routes, and so on, could all be derived; the design would be serial, with each element depending on the functioning of other elements. The key information could be assessed by a limited number of people; it would be local.

In contrast with the design of the physical implementation of the system, if a fire alarm system is to be effective, some knowledge has to be widely understood. Each person in the building has to know what to do if they see smoke or flames. They need to know what to do if they hear the alarm. The performance of each individual must be independent, not dependent on the actions of others. These aspects of the system must be parallel and distributed. Nobody needs a symbolic representation of the system; they simply need to know what to do. It is procedural, and responsibility is shared more widely. These different aspects are illustrated in Table 2.

Table 2.

Characteristics of symbolic and sub-symbolic systems.

In general, symbolic systems are concerned with design and require a representation that encompasses the whole institution so that radical changes can be made. In contrast with this, sub-symbolic systems are concerned with small incremental changes producing improvements in efficiency, without challenging the overall structure of the institution and its operations. Symbolic systems permit, although they do not necessarily demand, revolutionary change, while sub-symbolic systems are evolutionary.

In the operation of a higher education institution, sub-symbolic approaches might be used to refine student recruitment, to place advertising in the most effective places, to re-organise student open days for maximum effect, and so on. Incremental changes can be made to improve the current functioning of the organisation, each of which can be considered on its own merits, without having an overall picture of how the institution operates as a whole. In contrast with this, a decision to close a department, or to focus all research efforts on a particular strategic direction, does require a symbolic understanding, a sense of how the different parts of the institution impact each other, and, indeed, how the system could be re-designed.

Writing at the beginning of the revolution that information and communication technology brought to higher education, Broadbent was mainly thinking about the distinction between digital and analogue computers, which represented the two approaches he identified. He also held on to the hope that artificial neural networks, the technology on which most current AI operates, would result in a blurring between the two types, or “getting the best of both worlds”. That may have been a reasonable hope when the development of artificial neural networks was in its infancy, but we believe that it was mistaken. We think, as we have argued above, that AI is sub-symbolic. It might be argued that discriminative AI is symbolic, in the sense that it incorporates a clear model of what is needed for success, and that decisions are made within an overall plan. However, the point is that discriminative AI cannot change the design and cannot shift the criteria for success. Some research promotes the idea that generative AI is capable of changing the overall design and of being truly creative (see e.g., Zhang et al., 2023; Yu et al., 2025), but it should be noted that it is precisely at that point that it is in greatest danger of hallucinating and making more serious mistakes.

In summary, then, our argument is that all forms of AI use sub-symbolic reasoning, seeking evolutionary changes that improve the efficiency of operations within present structures. To identify where such reasoning can support governance, and where it cannot, we turn to Stafford Beer’s Viable System Model, which helps us understand the practical process of decision-making in an institution.

5. Decisions to Be Made in the Viable System

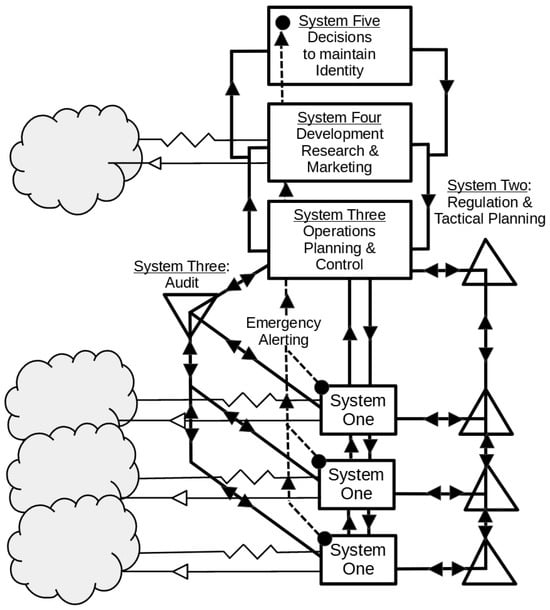

Stafford Beer was one of the leading figures in the science of effective organisation (Hilder, 1995). Beer (2009) argued that every viable system needs to incorporate five functions, or five systems, in order to be viable, as shown in Figure 1.

Figure 1.

Stafford Beer’s viable system model. Source: Figure by authors.

System One (the square symbols labelled ‘One’ in Figure 1) is the operation or collection of operations that are the reason for the institution’s existence in the first place. Presumably, in higher education, that would be the classroom, lecture theatre, research laboratory, or seminar series. In Figure 1, to the left of System One, is a fluffy cloud representing the external environment. Each System One has two connections to the environment, represented by the arrow symbol for an amplifier and the zig-zag symbol of an attenuator (or a filter). System One needs information about the environment, so that it can respond, but it does not need information about everything in the environment, only that which is relevant to its operations. That is quite an important distinction, but also quite a dangerous one, and we will return to it later. An AI system, and specifically a discriminative AI system, may be an excellent way of scanning the environment for changes and filtering the relevant ones.

The arrow symbol of the amplifier represents the notion that when System One operates on its environment, it wishes to produce the biggest effect possible for the minimum effort.

Within limits, System One will be able to respond to variations in the environment, recruiting more or fewer students depending on the demand for particular subjects, or shifting the focus of research to meet the needs of sponsors. However, System One’s flexibility is limited, and any variation that it cannot cope with will be passed on to System Two, which deals with regulation and tactical planning, and which might be able to draw on additional resources to cope with variation in the short term.

Any variation that System Two cannot cope with will be passed on to System Three, which, coordinating the operation of various System Ones, may be able to shift some resources from one class to another, or from a research project to teaching. In addition, System Three handles planning and control of operations, and may therefore be able to recruit additional staff to meet the variation that could not be handled in any other way.

Perhaps more importantly, because System Three has access to data from all of the System Ones (i.e., can aggregate data across classrooms, courses, or departments), it may be able to identify trends that are invisible in each System One. A case in point might be a system that could integrate information across a whole institution. Suppose an institution with 20,000 students had a drop-out rate of five per cent per year (or 1000 students per year), and an AI system could identify an intervention that would reduce that rate from five per cent to four per cent. That could make a major difference to the institution, reducing the number of drop-outs by 200 each year, and the institution would have clear evidence for implementing that intervention. But the individual teacher, even if he or she taught a class of 50 students, would not see a change in behaviour that was obviously different from random variation, and would therefore be unlikely to adopt the intervention as an individual.
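The arithmetic behind this example can be made explicit. The sketch below assumes, purely for illustration, that each student drops out independently with the stated probability, so that the yearly count follows a binomial distribution; real drop-out is not independent in this way, so the figures indicate orders of magnitude only.

```python
import math


def expected_and_sd(n, p):
    """Mean and standard deviation of the yearly drop-out count, assuming
    n students who each drop out independently with probability p."""
    return n * p, math.sqrt(n * p * (1 - p))


for label, n in [("whole institution", 20_000), ("class of 50", 50)]:
    before, sd = expected_and_sd(n, 0.05)  # five per cent drop-out rate
    after, _ = expected_and_sd(n, 0.04)    # four per cent after the intervention
    print(f"{label}: {before:.1f} -> {after:.1f} expected drop-outs; "
          f"reduction = {(before - after) / sd:.2f} standard deviations")
```

Under these assumptions, the institution-wide fall from 1000 to 800 drop-outs is roughly six and a half standard deviations of chance variation, whereas in a class of 50 the expected fall from 2.5 to 2 drop-outs is about a third of one standard deviation, which is invisible in any single year.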

Each system must, therefore, deal with two kinds of questions: it must monitor trends and changes in the environment and adapt to those changes as best it can, which is essentially a generative function; and it must decide which variation it can handle and which variation it should pass on to the next system, which is essentially a discriminative function. Since AI systems are optimal for only one kind of decision, either generative or discriminative, the risk of relying on AI is that an AI system is likely to keep adapting to trends when it should recognise that those trends are passing outside its range of competence and pass the variation on to the next higher level.
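The escalation logic of Systems One to Five can be sketched as a chain in which each level first makes a discriminative judgement (can this variation be absorbed here?) and passes the variation on if not. The capacities below are hypothetical numbers, and reducing a disturbance to a single magnitude is a simplification that the Viable System Model itself does not make; the sketch only shows where the pass-it-on decision sits.

```python
from dataclasses import dataclass


@dataclass
class Level:
    name: str
    capacity: float  # hypothetical: largest variation this level can absorb


# Hypothetical capacities; the Viable System Model itself supplies no numbers.
CHAIN = [
    Level("System One", 5.0),
    Level("System Two", 15.0),
    Level("System Three", 40.0),
    Level("System Four", 100.0),
]


def route(variation: float) -> str:
    """Pass a disturbance up the chain until some level can absorb it.

    The test `variation <= level.capacity` is the discriminative step:
    deciding whether the variation lies within this level's competence.
    Whatever no lower level can absorb reaches System Five, the last resort.
    """
    for level in CHAIN:
        if variation <= level.capacity:
            return level.name
    return "System Five"


for size in (3.0, 25.0, 500.0):
    print(size, "->", route(size))
```

The risk described in the text corresponds to a system that skips the capacity check and simply adapts, so that a disturbance of size 500 is absorbed, badly, at System One instead of reaching System Five.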

Any variation that System Three cannot handle will be passed on to System Four, which is responsible for development, research and marketing. System Four, like System One, has connections to the environment, but in the case of System Four, it is the future environment. It is the function of System Four to scan the environment for possible future changes and to select those variations that are relevant for planning. Again, an AI system might well be a relevant part of responding to variation in the environment. But in this case, the danger of overlooking an important change in the environment is both more serious and more likely. A sub-symbolic system might be very valuable in scanning trends in applications, highlighting slow, long-term shifts towards one subject area and away from another. But it may be very bad at identifying a change that is absolutely different in kind, such as a pandemic. To incorporate such a new possibility and change the model through which the environment is filtered requires symbolic reasoning. What is at stake is the ability of the system to identify and absorb the greater variety of stimuli that constantly assail organisations in the real world. The danger of relying excessively on AI in this process is that it risks missing the occurrence of a new and distinct type of threat to the institution, because it focuses on the familiar.
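One partial safeguard, in the spirit of passing variation up the line, is to have a trend-following system flag observations it cannot explain rather than quietly re-fitting to them. The sketch below fits a straight-line trend to hypothetical yearly application numbers and escalates when a new figure lies more than `k` residual standard deviations from the forecast; the data, the linear model, and the threshold `k = 3` are all assumptions chosen for illustration.

```python
import statistics

# Hypothetical yearly applications to one subject: a slow upward trend.
APPLICATIONS = [1000, 1012, 1019, 1033, 1041, 1050, 1062, 1070]


def fit_trend(ys):
    """Ordinary least-squares line through the points (0, ys[0]), (1, ys[1]), ..."""
    xs = range(len(ys))
    x_bar, y_bar = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def check_next(history, observed, k=3.0):
    """Absorb the new figure if it fits the trend; otherwise escalate it."""
    slope, intercept = fit_trend(history)
    residuals = [y - (intercept + slope * x) for x, y in enumerate(history)]
    spread = statistics.stdev(residuals)
    forecast = intercept + slope * len(history)
    return "escalate" if abs(observed - forecast) > k * spread else "absorb"


print(check_next(APPLICATIONS, 1082))  # continues the familiar trend
print(check_next(APPLICATIONS, 400))   # a sudden collapse, far outside it
```

Such a check can notice only that the numbers have left their familiar range. It cannot say what kind of change has occurred, it will miss a change of kind that leaves the numbers looking ordinary, and it cannot redesign the filter through which the environment is seen; those remain the symbolic tasks that the argument reserves for people.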

This caveat about the limits of reliance on AI, discussed here in relation to System Four, is also relevant to System One, although it perhaps represents rather less of a danger there. This is because System One can pass any variation that it cannot handle up the line to Systems Two, Three and Four. System Four is more limited in its options, and a mistake at that level is more likely to create existential problems for the institution.

System Five is the last resort, to which System Four must pass any variation that it cannot cope with. These decisions are those that relate to the identity of the institution. For example, in the face of a pandemic, the institution must decide between “We are the kind of institution that insists on all students being present for all lectures, and we need to reorganise our spaces and spacing on campus to accommodate the new conditions” and “We are the kind of institution that offers all classes online, and must make provision accordingly to have online classes”. System Four, in monitoring the environment, might identify opportunities for short courses, research serving commercial or government interests, and so on, but System Five must decide which of those activities are appropriate to the status and dignity of the specific institution.

The decisions that are to be addressed by System Five are not clearly defined, and the criteria for success are not clear either. It follows that the problems that face System Five are those that are least amenable to treatment by AI; they are problems that need symbolic thinking. But if those questions are settled with clarity, the parameters within which Systems One to Four operate are much clearer, and those systems can more easily be managed with the help and support of AI.

Stafford Beer was not only interested in the operation of viable systems when they were working optimally; he was also interested in the pathology of institutions. He took a fairly dim view of universities, suggesting that they were particularly poor at addressing issues at the level of System Five. He would have taken an even dimmer view of attempts to overcome this shortcoming by employing a sub-symbolic AI system to deal with symbolic questions of identity.

This brief review of the types of decisions that are necessary for ensuring that a system is viable suggests that most activities in the governance of higher education can, with advantage, be supported by AI. The notable exception to this is decisions that relate to the identity of the institution and the selection of activities that are deemed appropriate for the institution. The resolution of such difficulties may require consultation across the institution, but in any event, there is a requirement for clear and decisive leadership that cannot be artificial in any way.

But if AI is a possible support at all other levels, it should be managed with three caveats in mind. First, there is always the danger that AI, acting on past precedents and prior design, will miss something important in the environment that should be taken into account. Second, the use of poor and biased training data can detrimentally affect the operation of AI, and it may then prove as flawed as the humans it supports, if not more so. For both these reasons, the implementation of AI needs to be undertaken under very direct human supervision, so that any missteps can be corrected before harm is done. Third, and perhaps most importantly for good governance, AI is not by its nature transparent, and considerable work needs to be done at the human–machine interface to make sure that an appropriate level of transparency is achieved.

6. Conclusions

Current commentary on the use of AI in higher education shows excessive optimism. We suggest that it is important to distinguish between the kinds of decisions that AI can make and those that it cannot make. Recognising the difference and the decision-making processes of all viable systems allows us to develop a more balanced view of the role of AI in higher education governance. In this paper, we first addressed the kinds of decisions that AI is best suited to, considering the ways in which AI operates. We then went on to look at a classification of decisions, namely the symbolic and the sub-symbolic, offered by Donald Broadbent, which he argued could be applied to decisions made by machines as well as by humans. We believe that there is a good match between those sets of considerations and that Broadbent identified that machines were better at sub-symbolic reasoning.

We then went on to use Stafford Beer’s Viable System Model to identify which kinds of institutional decisions were necessary and at which level in an institution they occurred. We concluded that at the level of System Five, where leadership is concerned with the identity of the institution, and with selecting policies and activities that accord with that identity, there was no place for AI. These are essentially moral questions that AI cannot help with.

But in every other area of activity, we believe that it is appropriate to employ AI to ensure the most efficient use of resources in reaching institutional goals. However, from the previous considerations, we conclude that such use of AI should be firmly supervised by people who understand its shortcomings and the potential risks of employing it. Beyond the inherent risk that AI will treat extraordinary circumstances as though they were routine, good governance requires transparency; here there is a distinct opposition between good governance and AI, and considerable work needs to be done to ensure that the operations of AI are, indeed, transparent.

There are two implications for university policy. First, both approaches, symbolic and sub-symbolic, are necessary. A symbolic approach is needed when planning actions in advance, by forming a model of the world and changing it heuristically to find a way to achieve the goals of the university. A sub-symbolic approach is needed to make incremental adjustments to the system that has been adopted. Both approaches can be useful and successful in the right circumstances. Second, leaders must be able to understand and control AI. A deep understanding of AI in the governance of higher education requires recognition of which approach is appropriate. There will also be emergent situations where the university needs to deal with the unexpected. It is at such crucial moments that a good sense of which approach is right for which circumstances is essential. AI is best at supporting a sub-symbolic approach; effective governance requires that there are leaders who can recognise when a symbolic approach is needed and can take control away from any AI system in order to implement it.

The current study emphasises the importance of leaders understanding and distinguishing between these approaches in order to manage AI systems effectively. It highlights the need for leaders to balance incremental improvements against revolutionary change, to ensure that AI is used appropriately in support of both symbolic and sub-symbolic processes, and to recognise when a shift from one approach to the other is necessary. Future research could use this study as an entry point for exploring the boundary and transition between the two approaches in applying AI to improve the system of governance for higher education. Of central importance will be the attitudes of university leaders: whether they regard AI as a technical field outside their central concerns, or whether they recognise their responsibility to maintain oversight and control of the AI systems that support their decision-making. Empirical studies of how university leaders envisage the role of AI in governance would be important for developing sound policy. In addition, our understanding of the role of AI in higher education governance would benefit significantly from empirical research on the use (and misuse) of various types of AI at each of the five levels of institutional decision-making.

Author Contributions

Conceptualisation, X.L.; methodology, D.A.T.; validation, B.L.; formal analysis, X.L.; investigation, D.A.T.; resources, B.L.; data curation, X.L.; writing—original draft preparation, X.L. and D.A.T.; writing—review and editing, B.L.; supervision, B.L.; project administration, D.A.T.; funding acquisition, X.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Fundamental Research Funds for the Central Universities, Ministry of Finance, China, grant number 1233100006, and Seed Funding Programme, Institute of International and Comparative Education, Beijing Normal University, grant number 2023IICEZS14.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Atkinson, P. A., & Coffey, A. (2011). Analysing documentary realities. In D. Silverman (Ed.), Qualitative research: Issues of theory, method and practice (3rd ed., pp. 77–92). SAGE Publications.

- Becker, H. (2007). Writing for social scientists: How to start and finish your thesis, book, or article (2nd ed.). University of Chicago Press.

- Beer, S. (2009). Think before you think: Social complexity and knowledge of knowing. Wavestone Press.

- Bergstrom, C., & West, J. (2017). Case study: Criminal machine learning. Available online: https://callingbullshit.org/case_studies/case_study_criminal_machine_learning.html (accessed on 12 April 2025).

- Broadbent, D. (1993). Planning and opportunism. The Psychologist, 6(2), 54–60.

- Chang, C.-Y., Chen, I.-H., & Tang, K.-Y. (2024). Roles and research trends of ChatGPT-based learning. Educational Technology & Society, 27(4), 471–486.

- Chartered Governance Institute UK & Ireland. (2024). Factsheet: What is governance? Available online: https://www.cgi.org.uk/resources/information-library/factsheets/factsheets/what-is-governance/ (accessed on 5 April 2025).

- Chen, L., Chen, P., & Lin, Z. (2020). Artificial intelligence in education: A review. IEEE Access, 8, 75264–75278.

- Chu, H.-C., Hwang, G.-H., Tu, Y.-F., & Yang, K.-H. (2022). Roles and research trends of artificial intelligence in higher education: A systematic review of the top 50 most-cited articles. Australasian Journal of Educational Technology, 38(3), 22–42.

- Coffey, A. (2013). Analysing documents. In U. Flick (Ed.), The SAGE handbook of qualitative data analysis (pp. 367–379). SAGE Publications.

- Corbin, J. M., & Strauss, A. L. (2008). Basics of qualitative research: Techniques and procedures for developing grounded theory (3rd ed.). SAGE Publications.

- Cristianini, N. (2016). Intelligence reinvented. New Scientist, 232(3097), 37–41.

- Crompton, H., & Burke, D. (2023). Artificial intelligence in higher education: The state of the field. International Journal of Educational Technology in Higher Education, 20, 22.

- Domingos, P. (2015). The master algorithm: How the quest for ultimate machine learning will remake our world. Basic Books.

- Filgueiras, F. (2024). Artificial intelligence and education governance. Education, Citizenship and Social Justice, 19(3), 349–361.

- Hilder, T. (1995). The viable system model. Available online: http://www.users.globalnet.co.uk/~rxv/orgmgt/vsm.pdf (accessed on 5 April 2025).

- Huesca, G., Elizondo-García, M. E., Aguayo-González, R., Aguayo-Hernández, C. H., González-Buenrostro, T., & Verdugo-Jasso, Y. A. (2025). Evaluating the potential of generative artificial intelligence to innovate feedback processes. Education Sciences, 15(4), 505.

- Maxwell, J. A. (2009). Designing a qualitative study. In L. Bickman & D. J. Rog (Eds.), The SAGE handbook of applied social research methods (pp. 214–253). SAGE Publications.

- UN-Habitat. (2020). Governance assessment framework for metropolitan, territorial and regional management. UN-Habitat.

- Vicente, L., & Matute, H. (2023). Humans inherit artificial intelligence biases. Scientific Reports, 13(1), 15737.

- Yu, C., Zheng, P., Peng, T., Xu, X., Vos, S., & Ren, X. (2025). Design meets AI: Challenges and opportunities. Journal of Engineering Design, 36(5–6), 637–641.

- Zhang, C., Wang, W., Pangaro, P., Martelaro, N., & Byrne, D. (2023, June 19–21). Generative image AI using design sketches as input: Opportunities and challenges. 15th Conference on Creativity and Cognition (pp. 254–261), Virtual.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).