Validation of a Spanish-Language Scale on Data-Driven Decision-Making in Pre-Service Teachers

Abstract

1. Introduction

2. Literature Review

2.1. Socioformation and Sustainable Social Development

2.2. Data-Driven Decision-Making (DDDM) in Teacher Education

2.3. Relevance of DDDM: Political Context, Implementation Challenges, and the Role of Teacher Education

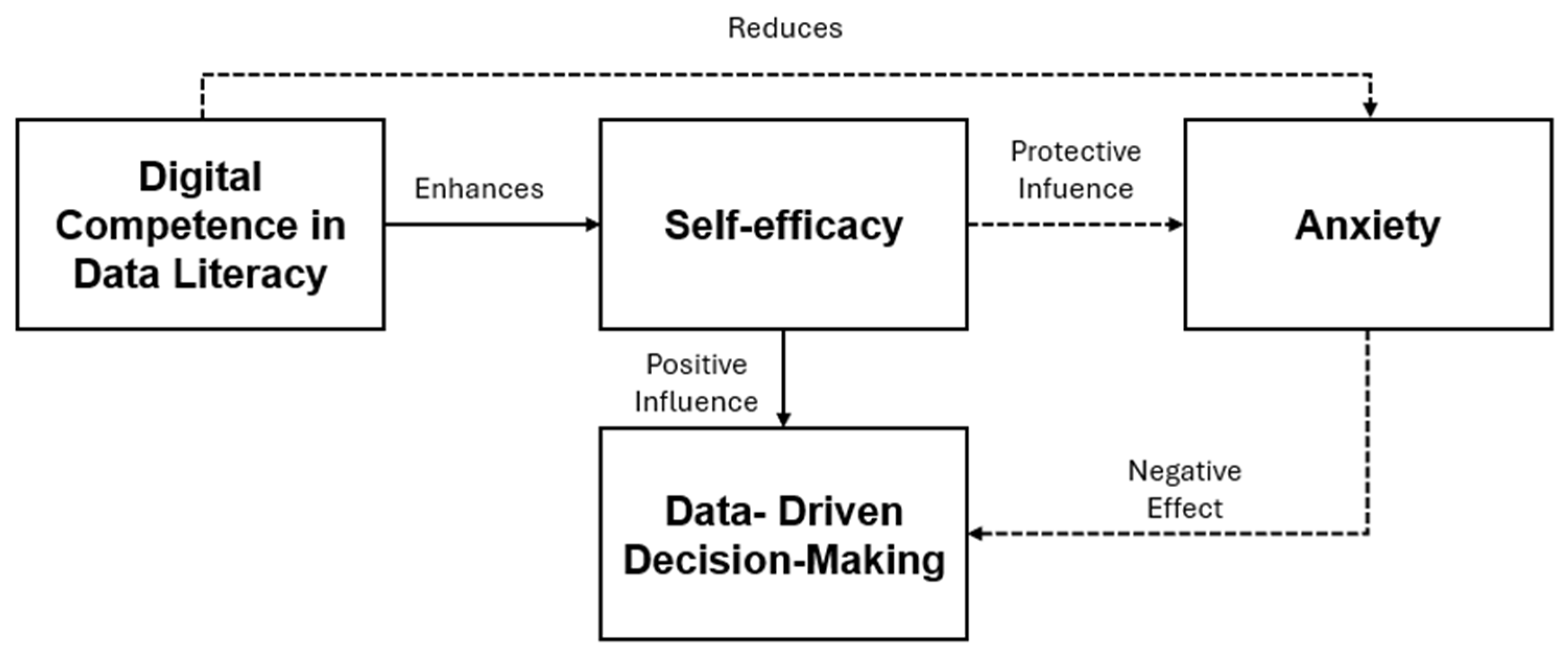

2.4. Self-Efficacy, Digital Competence, and Anxiety in DDDM

3. Materials and Methods

3.1. Participants

3.2. Materials

3.2.1. Data-Driven Decision-Making Efficacy and Anxiety Inventory (3D-MEA)

3.2.2. Beliefs About Basic ICT Competencies in Information and Data Literacy

3.3. Procedures

3.3.1. Translation Process

3.3.2. Content Validity

3.3.3. Pilot Test

3.3.4. Data Collection for Psychometric Validation

3.4. Data Analysis

4. Results

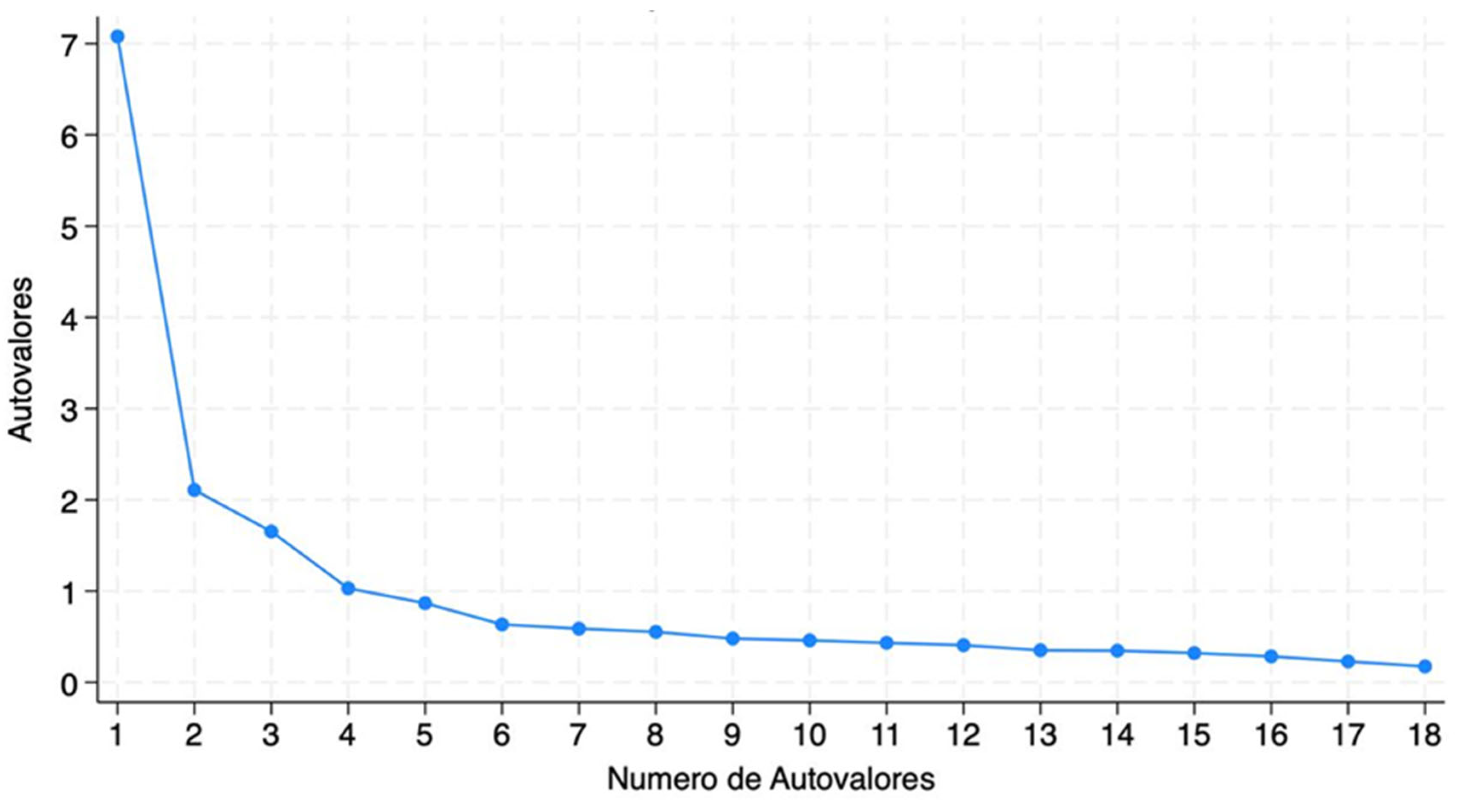

4.1. Exploratory Factor Analysis (EFA)

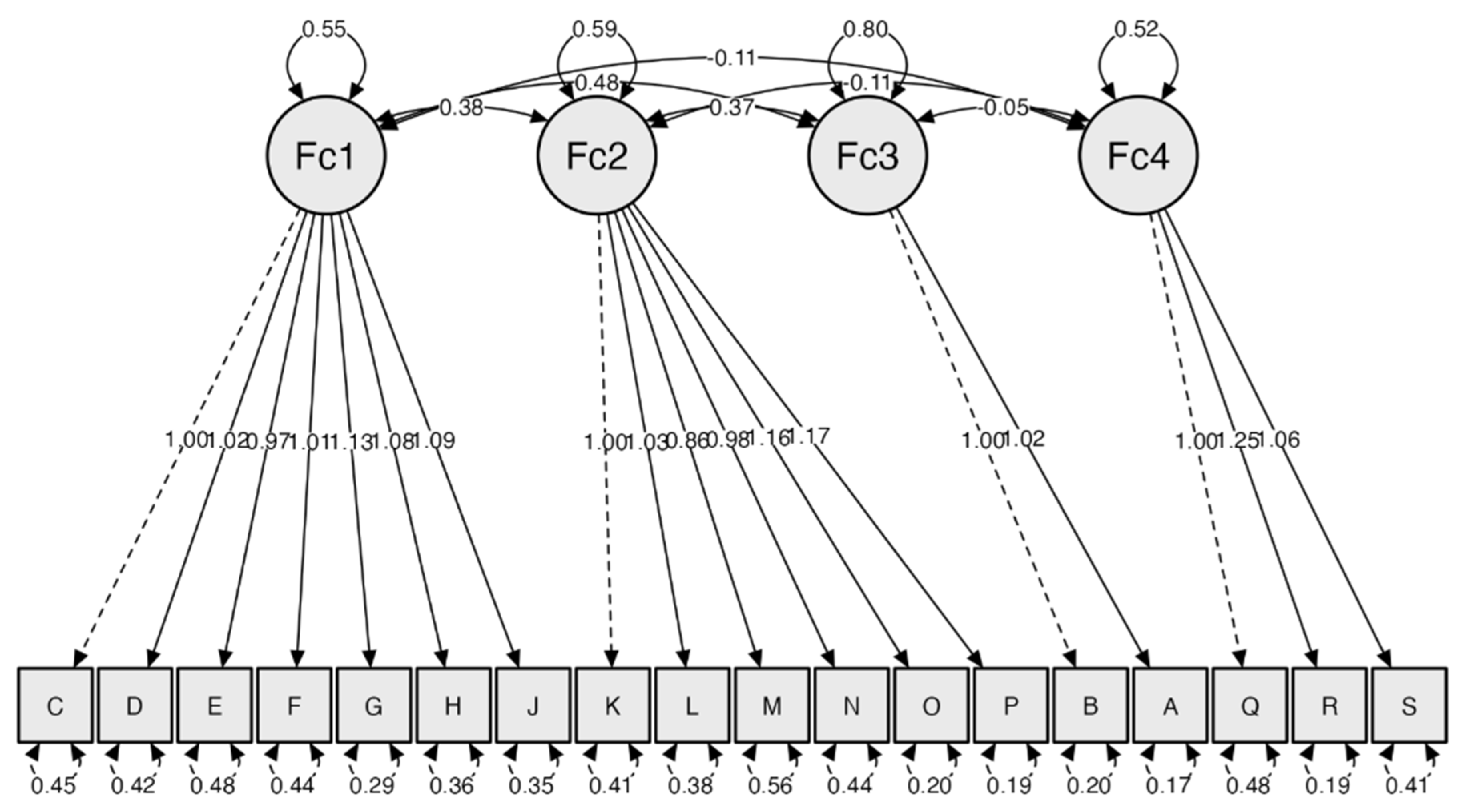

4.2. Confirmatory Factor Analysis (CFA)

4.3. Measurement Invariance Across Gender and Country

5. Discussion

Limitations and Future Directions

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Item |
|---|
| 1. I am confident that I know what types of data or reports I need to evaluate group performance. |
| 2. I am confident that I know what types of data or reports I need to evaluate student performance. |
| 3. I am confident in my ability to understand assessment reports. |
| 4. I am confident in my ability to interpret subtest or content area scores to determine students’ strengths and weaknesses in a specific area. |
| 5. I am confident that I can use data to identify students with special learning needs. |
| 6. I am confident that I can use data to identify gaps in students’ understanding of curricular concepts. |
| 7. I am confident that I can use assessment data to provide students with specific feedback on their performance or progress. |
| 8. I am confident that I can use data to group students with similar learning needs for instruction. |
| 9. I am confident in my ability to use data to guide my selection of specific interventions to address students’ learning gaps. |
| 10. I can identify and use appropriate sources in digital environments based on my information needs. |
| 11. I can use my search strategies in digital environments. |
| 12. I am critical of information, sources, and data in digital environments. |
| 13. I can securely store digital information and data. |
| 14. I can retrieve the information I have stored. |
| 15. I can retrieve information I have stored from different environments. |
| 16. I feel intimidated by statistics. |
| 17. I worry about feeling or seeming “stupid” when it comes to making data-based decisions. |
| 18. I am intimidated by the process of linking data analysis with my teaching practice. |

Appendix B

| Dimension | Chile | Spain |
|---|---|---|
| Program duration | 5-year undergraduate teacher education programs (10 semesters), leading to a professional teaching degree. | 4-year undergraduate degree (Grado) for Primary or Early Childhood Education. For Secondary Education, a university degree plus a 1-year Master’s is required. |
| Admission criteria | Regulated by Law 20.903, including minimum scores in national exams (PAES), NEM (high school GPA), or ranking of high school achievement. | Admission is based on the results of the national university entrance exam (EBAU), with no specific national-level criteria for teacher education programs. |
| Curriculum structure | Includes disciplinary training, pedagogy, and progressive practicum experiences beginning in early semesters. | Focuses on theoretical foundations and mandatory practicum, typically concentrated in later semesters or during the Master’s program. |
| National diagnostic assessment | Mandatory Evaluación Nacional Diagnóstica (END) in the second and final years, aligned with the national standards for initial teacher education. | No national diagnostic exam. Internal assessments are managed by each university. |
| Professional certification | The teaching degree grants eligibility to work as a teacher. Entry into the public education system requires participation in national or municipal competitions. | The degree (Grado or Master) grants eligibility, but public school teachers must pass national competitive examinations (oposiciones) based on standardized content. |
| Competency frameworks | Governed by the 2023 Initial Teacher Education Standards (Estándares Orientadores), which include general references to digital skills and data use. | Based on the Marco de Competencia Digital Docente (Instituto Nacional de Tecnologías Educativas y de Formación del Profesorado, n.d.), which explicitly incorporates data-informed decision-making, learning analytics, and digital fluency. |
| Data use training | No standardized national approach. Some teacher education programs include content related to data use, evaluation, and technologies, but implementation varies across institutions. | Data use is explicitly addressed through the digital competence framework of the National Institute of Educational Technologies and Teacher Training and integrated into state-level policies for teacher training and professional performance. |

References

- Abraham, J., Ferfolja, T., Sickel, A., Power, A., Curry, C., Fraser, D., & Mackay, K. (2021). Development and validation of a scale to explore pre-service teachers’ sense of preparedness, engagement and self-efficacy in classroom teaching. Australian Journal of Teacher Education, 46(1), 1–23.

- Alaqeel, A. M. (2024). Examining self-efficacy and anxiety in data-driven decision-making practices among learning disabilities teachers in Saudi Arabia: A mixed methods study. Cogent Education, 11(1), 2434775.

- Ambuehl, B., & Inauen, J. (2022). Contextualized measurement scale adaptation: A 4-step tutorial for health psychology research. International Journal of Environmental Research and Public Health, 19(19), 12775.

- Bandura, A. (1977). Self-efficacy: Toward a unifying theory of behavioral change. Psychological Review, 84(2), 191–215.

- Bandura, A. (1997). Self-efficacy: The exercise of control. W. H. Freeman.

- Betancur-Chicué, V., Gómez-Ardila, S. E., Cárdenas-Rodríguez, Y. P., Hernández-Gómez, S. A., Galindo-Cuesta, J. A., & Cadrazco-Suárez, M. A. (2023). Instrumento para la identificación de competencias digitales docentes: Validación de un instrumento basado en el DigCompEdu en la Universidad de la Salle, Colombia. Revista Prisma Social, 41, 27–46. Available online: https://revistaprismasocial.es/article/view/4970 (accessed on 10 May 2025).

- Brinkhuis, M. J. S., & Maris, G. (2019). Tracking ability: Defining trackers for measuring educational progress. In B. Veldkamp, & C. Sluijter (Eds.), Theoretical and practical advances in computer-based educational measurement (pp. 161–173). Springer.

- Busco, C., González, F., & Aránguiz, M. (2023). Factors that favor or hinder the acquisition of a digital culture in large organizations in Chile. Frontiers in Psychology, 14, 1153031.

- Caycho-Rodríguez, T., Vilca, L. W., Cervigni, M., Gallegos, M., Martino, P., Calandra, M., Rey Anacona, C. A., López-Calle, C., Moreta-Herrera, R., Chacón-Andrade, E. R., Lobos-Rivera, M. E., del Carpio, P., Quintero, Y., Robles, E., Panza Lombardo, M., Gamarra Recalde, O., Buschiazzo Figares, A., White, M., & Burgos-Videla, C. (2022). Cross-national measurement invariance of the purpose in life test in seven Latin American countries. Frontiers in Psychology, 13, 974133.

- Centro de Perfeccionamiento, Experimentación e Investigaciones Pedagógicas. (n.d.-a). National diagnostic assessment of initial teacher education. Ministry of Education of Chile. Available online: https://cpeip.cl/Categoria/evaluacion-nacional-diagnostica-de-la-formacion-inicial-docente/ (accessed on 24 May 2025).

- Centro de Perfeccionamiento, Experimentación e Investigaciones Pedagógicas. (n.d.-b). Guiding standards for initial teacher education. Ministry of Education of Chile. Available online: https://www.cpeip.cl/estandares-formacion-docente/ (accessed on 24 May 2025).

- Conn, C. A., Bohan, K. J., Bies-Hernandez, N. J., Powell, P. J., Sweeny, S. P., Persinger, L. L., & Persinger, J. D. (2022). Expected data literacy knowledge and skills for early career teachers: Perspectives from school and district personnel. Teaching and Teacher Education, 111, 103607.

- Costello, A. B., & Osborne, J. W. (2005). Best practices in exploratory factor analysis: Four recommendations for getting the most from your analysis. Practical Assessment, Research & Evaluation, 10(7), 1–9.

- Cruz-Vargas, J., Pérez-González, M., & Rodríguez-López, A. (2023). Implications for the quality of initial teacher training: The influence of socioformative processes. Mediterranean Journal of Social Sciences, 14(2), 101–110.

- Datnow, A., & Park, V. (2019). Professional collaboration with purpose: Teacher learning towards equitable and excellent schools. Routledge.

- Dunn, K. E., Airola, D. T., & Hayakawa, T. (2020). Pre-service teachers’ efficacy, anxiety, and concerns about data and the new idea of anchored judgment. Current Issues in Education, 21(1), 1–25. Available online: http://cie.asu.edu/ojs/index.php/cieatasu/article/view/1856 (accessed on 24 May 2025).

- Dunn, K. E., Airola, D. T., Lo, W. J., & Garrison, M. (2013a). Becoming data driven: The influence of teachers’ sense of efficacy on concerns related to data-driven decision making. Journal of Experimental Education, 81(2), 222–241.

- Dunn, K. E., Airola, D. T., Lo, W. J., & Garrison, M. (2013b). What teachers think about what they can do with data: Development and validation of the data-driven decision-making efficacy and anxiety inventory. Contemporary Educational Psychology, 38(1), 87–98.

- Ertmer, P. A., Ottenbreit-Leftwich, A. T., Sadik, O., Sendurur, E., & Sendurur, P. (2012). Teacher beliefs and technology integration practices: A critical relationship. Computers & Education, 59(2), 423–435.

- Etikan, I., Abubakar, S., & Sunusi, R. (2016). Comparison of convenience sampling and purposive sampling. American Journal of Theoretical and Applied Statistics, 5(1), 1–4.

- Fabrigar, L. R., MacCallum, R. C., Wegener, D. T., & Strahan, E. J. (1999). Evaluating the use of exploratory factor analysis in psychological research. Psychological Methods, 4(3), 272–299.

- Fischer, C., Pardos, Z. A., Baker, R. S., Williams, J. J., Smyth, P., Yu, R., Slater, S., Baker, R., & Warschauer, M. (2020). Mining big data in education: Affordances and challenges. Review of Research in Education, 44(1), 130–160.

- Flodén, A., Stadtler, M., Jones Collazo, S. E., Mone, T., Ash, R., & Fridlund, B. (2020). Cross-cultural adaptation and psychometric validation of the Flodén ATODAI instrument in the North American context. BMC Nursing, 19, 55.

- Floyd, F. J., & Widaman, K. F. (1995). Factor analysis in the development and refinement of clinical assessment instruments. Psychological Assessment, 7(3), 286–299.

- Gaftandzhieva, S., Hussain, S., Hilčenko, S., Doneva, R., & Boykova, K. (2023). Data-driven decision making in higher education institutions: State-of-play. International Journal of Advanced Computer Science and Applications, 14(6), 397–405.

- García-Martín, J., Rico, R., & García-Martín, M. A. (2023). Self-perception of digital competence and the use of digital resources among teachers from Spain and the United States. Behavioral Sciences, 13(7), 559.

- Gálvez-de-la-Cuesta, M. d. C., Gertrudix-Barrio, M., & García-García, F. (2020). Datos abiertos y educación: Formación de docentes en la sociedad digital. Páginas de Educación, 13(2), 1–20.

- Gálvez-Nieto, J. L., Salvo-Garrido, S., Domínguez-Lara, S., Polanco-Levicán, K., & Mieres-Chacaltana, M. (2023). Psychometric properties of the teachers’ sense of efficacy scale in a sample of Chilean public school teachers. Frontiers in Psychology, 14, 1272548.

- González-Medina, I., Gavín-Chocano, Ó., Pérez-Navío, E., & Maldonado Berea, G. A. (2025). Trajectories of digital teaching competence: A multidimensional PLS-SEM study in university contexts. Information, 16(5), 373.

- Gummer, E. S., & Mandinach, E. B. (2015). Building a conceptual framework for data literacy. Teachers College Record, 117(4), 1–22.

- Hair, J. F., Black, W. C., Babin, B. J., & Anderson, R. E. (2014). Multivariate data analysis (7th ed.). Cengage Learning.

- Hamilton, V., Onder, Y., Andzik, N. R., & Reeves, T. D. (2022). Do data-driven decision-making efficacy and anxiety inventory scores mean the same thing for pre-service and in-service teachers? Journal of Psychoeducational Assessment, 40(4), 482–498.

- Hu, L., & Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling: A Multidisciplinary Journal, 6(1), 1–55.

- Instituto Nacional de Tecnologías Educativas y de Formación del Profesorado. (n.d.). Competencia digital docente. Available online: https://intef.es/competencia-digital-educativa/competencia-digital-docente/ (accessed on 10 May 2025).

- Javier-Aliaga, D., Paredes-Aguirre, M., Quispe-Apaza, C., & López-Meneses, E. (2024). Self-efficacy and digital competence in teachers: Impact on the perceived usefulness of ICTs in educational settings. Education and Information Technologies. Advance online publication.

- Jimerson, J. B. (2014). Thinking about data: Exploring the development of mental models for “data use” among teachers and school leaders. Studies in Educational Evaluation, 42, 5–14.

- Jimerson, J. B., & Wayman, J. C. (2015). Professional learning for using data: Examining teacher needs & supports. Teachers College Record, 117(4), 1–36.

- Kristanti, S., & Febriana, T. (2021). Adapting the Trait Emotional Intelligence Questionnaire–Short Form (TEIQue–SF) into Indonesian language and culture. Journal of Educational, Health and Community Psychology, 10(4), 320–337.

- Laakasuo, M., Palomäki, J., Abuhamdeh, S., Salmela, M., & Salmela, M. (2022). Psychometric analysis of the flow short scale translated to Finnish. Scientific Reports, 12, 20067.

- Lee, J., Alonzo, D., Beswick, K., Abril, J. M. V., Chew, A. W., & Zin Oo, C. (2024). Dimensions of teachers’ data literacy: A systematic review of literature from 1990 to 2021. Educational Assessment, Evaluation and Accountability, 36, 145–200.

- Li, C.-H. (2016). Confirmatory factor analysis with ordinal data: Comparing robust maximum likelihood and diagonally weighted least squares. Behavior Research Methods, 48(3), 936–949.

- Luo, Y., Wang, Y., Zhang, Y., & Li, X. (2024). Development and validation of the self-volume management behaviour questionnaire for patients with chronic heart failure. ESC Heart Failure, 11, 1076–1085.

- Mandinach, E. B., & Gummer, E. S. (2016). What does it mean for teachers to be data literate: Laying out the skills, knowledge, and dispositions. Teaching and Teacher Education, 60, 366–376.

- Mandinach, E. B., & Jackson, S. (2012). Transforming teaching and learning through data-driven decision making. Corwin Press.

- Mandinach, E. B., & Schildkamp, K. (2021). Misconceptions about data-based decision making in education: An exploration of the literature. Studies in Educational Evaluation, 69, 100842.

- Martínez-Iñiguez, J. E., Tobón, S., & Soto-Curiel, J. A. (2021). Ejes claves del modelo educativo socioformativo para la formación universitaria en el marco de la transformación hacia el desarrollo social sostenible. Formación Universitaria, 14(1), 53–66.

- Merjovaara, O., Eklund, K., Nousiainen, T., Karjalainen, S., Koivula, M., Mykkänen, A., & Hämäläinen, R. (2024). Early childhood pre-service teachers’ attitudes towards digital technologies and their relation to digital competence. Education and Information Technologies. Advance online publication.

- Milkova, E., Moldoveanu, M., & Krcil, T. (2025). Sustainable education through information and communication technology: A case study on enhancing digital competence and academic performance of social science higher education students. Sustainability, 17(10), 4422.

- Miller-Bains, K. L., Cohen, J., & Wong, V. C. (2022). Developing data literacy: Investigating the effects of a pre-service data use intervention. Teaching and Teacher Education, 109, 103569.

- Mokkink, L. B., Terwee, C. B., Patrick, D. L., Alonso, J., Stratford, P. W., Knol, D. L., Bouter, L. M., & De Vet, H. C. W. (2010). The COSMIN checklist for assessing the methodological quality of studies on measurement properties of health status measurement instruments: An international Delphi study. Quality of Life Research, 19(4), 539–549.

- Neugebauer, S. R., Morrison, D., Karahalios, V., Harper, E., Jones, H., Lenihan, S., Oosterbaan, F., & Tindall, C. (2020). A collaborative model to support K-12 pre-service teachers’ data-based decision making in schools: Integrating data discussions across stakeholders, spaces, and subjects. Action in Teacher Education, 43(1), 85–101.

- Norris, M., & Lecavalier, L. (2010). Evaluating the use of exploratory factor analysis in developmental disability psychological research. Journal of Autism and Developmental Disorders, 40, 8–20.

- O’Connor, B., & Park, M. (2023). Exploring the influence of collaborative data-based decision making among teachers in professional learning communities on teaching practice. Disciplinary and Interdisciplinary Science Education Research, 5, 17.

- Oguguo, B., Ezechukwu, R., Nannim, F., & Offor, K. (2023). Analysis of teachers in the use of digital resources in online teaching and assessment in COVID times. Innoeduca. International Journal of Technology and Educational Innovation, 9(1), 81–96.

- Ortiz-Mallegas, S., Carrasco-Aguilar, C., Luzón-Trujillo, A., & Torres-Sánchez, M. M. (2025). New teacher associations: Comparative analysis of teachers’ political participation in Chile and Spain. SAGE Open, 15(1).

- Padilla-Hernández, A. L., Gámiz-Sánchez, V. M., & Romero-López, M. A. (2019). Niveles de desarrollo de la competencia digital docente: Una mirada a marcos recientes del ámbito internacional. Innoeduca. International Journal of Technology and Educational Innovation, 5(2), 140–150.

- Penfield, R. D., & Giacobbi, P. R., Jr. (2004). Applying a score confidence interval to Aiken’s item content-relevance index. Measurement in Physical Education and Exercise Science, 8(4), 213–225.

- Putnick, D. L., & Bornstein, M. H. (2016). Measurement invariance conventions and reporting: The state of the art and future directions for psychological research. Developmental Review, 41, 71–90.

- Reeves, T. D., Onder, Y., & Abdi, B. (2020). Validation of the data-driven decision-making efficacy and anxiety inventory (3D-MEA) with U.S. pre-service teachers. Mid-Western Educational Researcher, 32(4), 286–303. Available online: https://scholarworks.bgsu.edu/mwer/vol32/iss4/2 (accessed on 10 May 2025).

- Reise, S. P., Waller, N. G., & Comrey, A. L. (2000). Factor analysis and scale revision. Psychological Assessment, 12(3), 287–297.

- Ried, L., Eckerd, S., & Kaufmann, L. (2022). Social desirability bias in PSM surveys and behavioral experiments: Considerations for design development and data collection. Journal of Purchasing and Supply Management, 28(1), 100743.

- Ritoša, A., Danielsson, H., Sjöman, M., Almqvist, L., & Granlund, M. (2020). Assessing school engagement: Adaptation and validation of “Engagement versus disaffection with learning: Teacher report” in the Swedish educational context. Frontiers in Education, 5, 521972.

- Romero, C., & Ventura, S. (2020). Educational data mining and learning analytics: An updated survey. Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery, 10(3), e1355.

- Roy Sadradín, D., Céspedes Carreño, C., Chacana Yordá, C., & Vera Carreño, H. (2025). La competencia digital y la autoeficacia en docentes de educación superior: El rol de las variables de edad y género. European Public & Social Innovation Review, 10(1), 1–20. Available online: https://epsir.net/index.php/epsir/article/view/1413 (accessed on 10 May 2025).

- Rubach, C., & Lazarides, R. (2021). Addressing 21st-century digital skills in schools: Development and validation of an instrument to measure teachers’ basic ICT competence beliefs. Computers in Human Behavior, 118, 106636.

- Salas-Rodríguez, F., Lara, S., & Martínez, M. (2021). Spanish version of the teachers’ sense of efficacy scale: An adaptation and validation study. Frontiers in Psychology, 12, 714145.

- Sandoval-Ríos, F., Gajardo-Poblete, C., & López-Núñez, J. A. (2025). Role of data literacy training for decision-making in teaching practice: A systematic review. Frontiers in Education, 10, 1485821.

- Scherer, R., Siddiq, F., & Teo, T. (2015). Becoming more specific: Measuring and modeling teachers’ perceived usefulness of ICT in the context of teaching and learning. Computers and Education, 88, 202–214.

- Schildkamp, K., & Kuiper, W. (2010). Data-informed curriculum reform: Which data, what purposes, and promoting and hindering factors. Teaching and Teacher Education, 26(3), 482–496.

- Schildkamp, K., van der Kleij, F. M., Heitink, M. C., Kippers, W. B., & Veldkamp, B. P. (2020). Formative assessment: A systematic review of critical teacher prerequisites for classroom practice. International Journal of Educational Research, 103, 101602.

- Shamsuddin, F., & Abdul Razak, A. Z. (2023). Development of a model for data-driven decision making: Critical skills for school leaders. Malaysian Journal of Social Sciences and Humanities, 8(12), e002614.

- Skaalvik, E. M., & Skaalvik, S. (2010). Teacher self-efficacy and teacher burnout: A study of relations. Teaching and Teacher Education, 26(4), 1059–1069.

- Sorkos, G., & Hajisoteriou, C. (2021). Sustainable intercultural and inclusive education: Teachers’ efforts on promoting a combining paradigm. Pedagogy, Culture and Society, 29(4), 517–536.

- Stevens, J. (2002). Applied multivariate statistics for the social sciences (4th ed.). Lawrence Erlbaum Associates.

- Stiggins, R. (2001). Student-involved classroom assessment (3rd ed.). Prentice Hall.

- Tan, H., Zhao, K., & Dervin, F. (2022). Experiences of and preparedness for intercultural teacherhood in higher education: Non-specialist English teachers’ positioning, agency and sense of legitimacy in China. Language and Intercultural Communication, 22(1), 68–84.

- Tobón, S., & Lozano-Salmorán, E. F. (2024). Socioformative pedagogical practices and academic performance in students: Mediation of socioemotional skills. Heliyon, 10, e34898.

- UNESCO. (2020a). Education for sustainable development: A roadmap. United Nations Educational, Scientific and Cultural Organization. Available online: https://unesdoc.unesco.org/ark:/48223/pf0000374802 (accessed on 10 May 2025).

- UNESCO. (2020b). Evaluation of the Latin American laboratory for the assessment of quality in education. Available online: https://unesdoc.unesco.org/ark:/48223/pf0000374760 (accessed on 10 May 2025).

- Valenzuela, L. Á., Ocaña, Y. J., Soto, C. V., Cotrina, J. C., & Fuster-Guillén, D. (2023). E-government and its development in the region: Challenges. International Journal of Professional Business Review, 8(1), e0939.

- Van Audenhove, L., Korica, M., Taddeo, M., & Colpaert, J. (2024). Data literacy in the new EU DigComp 2.2 framework: How DigComp defines competences on artificial intelligence, internet of things and data. Information and Learning Sciences, 125(1/2), 60–74.

- Velandia Rodríguez, C. A., Mena-Guacas, A. F., Tobón, S., & López-Meneses, E. (2022). Digital teacher competence frameworks evolution and their use in Ibero-America up to the year the COVID-19 pandemic began: A systematic review. International Journal of Environmental Research and Public Health, 19(19), 12628.

- Villeneuve, A., & Bouchamma, Y. (2023). Data-driven decision making using local multi-source data: Analysis of a teacher-researcher’s professional practice. Teaching and Teacher Education, 132, 104198.

- Vuorikari, R., Kluzer, S., & Punie, Y. (2022). DigComp 2.2: The digital competence framework for citizens (EUR 31006 EN). Publications Office of the European Union.

- Walker, D. A., Reeves, T. D., & Smith, T. J. (2018). Confirmation of the data-driven decision-making efficacy and anxiety inventory’s score factor structure among teachers. Journal of Psychoeducational Assessment, 36(5), 477–491.

- Yoshizawa, L. (2022). The imposition of instrumental research use: How school and district practitioners enact their state’s evidence requirements. American Educational Research Journal, 59(6), 1157–1193.

| Participants (N = 512) | Values | Total n (%) |
|---|---|---|
| Age (n = 511) | <20 | 63 (12%) |
| | 20–29 | 388 (76%) |
| | 30–39 | 38 (7%) |
| | 40–49 | 15 (3%) |
| | 50–59 | 6 (1%) |
| | ≥60 | 1 (0.2%) |
| Gender | Female | 296 (57.81%) |
| | Male | 211 (41.21%) |
| | Other | 5 (0.98%) |
| University | Chilean | 306 (59.77%) |
| | Spanish | 206 (40.23%) |
| Academic Level | First year | 92 (17.97%) |
| | Second year | 167 (32.62%) |
| | Third year | 80 (15.62%) |
| | Fourth year | 94 (18.36%) |
| | Fifth year | 32 (6.25%) |
| | Postgraduate | 47 (9.18%) |

| Item Code | Subscale | Sufficiency | Clarity | Coherence | Relevance |
|---|---|---|---|---|---|
| IT01 | Identification | 0.75 | 0.78 | 0.77 | 0.95 |
| IT02 | | 0.80 | 0.74 | 0.86 | 0.81 |
| IT03 | Interpretation and Application | 0.76 | 0.79 | 0.90 | 0.78 |
| IT04 | | 0.93 | 0.94 | 0.90 | 0.93 |
| IT05 | | 0.93 | 0.79 | 0.87 | 0.78 |
| IT06 | | 0.75 | 0.78 | 0.77 | 0.78 |
| IT07 | | 0.89 | 0.86 | 0.94 | 0.83 |
| IT08 | | 0.80 | 0.78 | 0.88 | 0.73 |
| IT09 | | 0.73 | 0.86 | 0.78 | 0.94 |
| IT10 | | 0.93 | 0.90 | 0.77 | 0.88 |
| IT11 | Technology | 0.95 | 0.87 | 0.77 | 0.75 |
| IT12 | | 0.94 | 0.86 | 0.91 | 0.85 |
| IT13 | | 0.79 | 0.94 | 0.81 | 0.92 |
| IT14 | | 0.88 | 0.89 | 0.76 | 0.74 |
| IT15 | | 0.81 | 0.87 | 0.88 | 0.77 |
| IT16 | Anxiety | 0.90 | 0.74 | 0.87 | 0.90 |
| IT17 | | 0.77 | 0.84 | 0.79 | 0.92 |
| IT18 | | 0.91 | 0.81 | 0.89 | 0.79 |
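The coefficients in the table above range between 0 and 1 and the reference list cites Penfield and Giacobbi (2004), so they are plausibly Aiken's V content-validity indices, although the table itself does not name the statistic. Under that assumption, the following minimal sketch shows how one such coefficient could be computed from expert ratings. The function name `aikens_v`, the five ratings, and the 1–4 rating scale are hypothetical and are not taken from the study.

```python
def aikens_v(ratings, lo, hi):
    """Aiken's V for one item and one criterion.

    V = sum(r_i - lo) / (n * (hi - lo)), where lo and hi are the lowest
    and highest possible ratings and n is the number of judges.
    """
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r < lo or r > hi for r in ratings):
        raise ValueError("rating outside the scale bounds")
    n = len(ratings)
    return sum(r - lo for r in ratings) / (n * (hi - lo))


# Hypothetical example: five judges rate an item's clarity on a 1-4 scale.
example_ratings = [4, 3, 4, 4, 3]
v_clarity = aikens_v(example_ratings, lo=1, hi=4)
print(f"Aiken's V = {v_clarity:.2f}")
```

V equals 1 when every judge gives the highest rating and 0 when every judge gives the lowest, which is why values close to 1 are read as stronger expert agreement on the item.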

| Item | Mean | Standard Deviation | Skewness | Kurtosis |
|---|---|---|---|---|
| 1. Confío en que sé qué tipos de datos o informes necesito para evaluar el rendimiento del grupo. | 3.47 | 0.95 | −0.27 | 2.81 |
| 2. Confío en que sé qué tipos de datos o informes necesito para evaluar el rendimiento de los estudiantes. | 3.59 | 0.95 | −0.48 | 2.98 |
| 3. Confío en mi capacidad para comprender informes de evaluación. | 3.82 | 0.88 | −0.63 | 3.32 |
| 4. Confío en mi habilidad para interpretar puntajes de subpruebas o áreas de contenido para determinar las fortalezas y debilidades de los estudiantes en un área específica. | 3.69 | 0.94 | −0.35 | 2.79 |
| 5. Confío en que puedo utilizar datos para identificar a estudiantes con necesidades especiales de aprendizaje. | 3.59 | 1.02 | −0.47 | 2.82 |
| 6. Confío en que puedo utilizar datos para identificar brechas en la comprensión de los estudiantes en cuanto a conceptos curriculares. | 3.49 | 0.98 | −0.41 | 2.90 |
| 7. Confío en que puedo utilizar los datos de evaluación para proporcionar retroalimentación específica a los estudiantes sobre su rendimiento o progreso. | 3.81 | 0.94 | −0.67 | 3.36 |
| 8. Confío en que puedo utilizar los datos para agrupar a los estudiantes con necesidades de aprendizaje similares para la enseñanza. | 3.66 | 0.93 | −0.45 | 2.96 |
| 9. Confío en mi capacidad para utilizar datos para guiar mi selección de intervenciones específicas para abordar las brechas en la comprensión de los estudiantes. | 3.54 | 0.95 | −0.49 | 3.26 |
| 10. Puedo identificar y utilizar fuentes adecuadas en entornos digitales en función de mis necesidades de información. | 3.76 | 0.93 | −0.43 | 2.85 |
| 11. Puedo utilizar mis estrategias de búsqueda en entornos digitales. | 4.02 | 0.89 | −0.83 | 3.63 |
| 12. Soy crítico/a con la información, las fuentes y los datos en entornos digitales. | 4.01 | 0.92 | −0.83 | 3.49 |
| 13. Puedo almacenar información y datos digitales de forma segura. | 4.01 | 0.90 | −0.67 | 2.98 |
| 14. Puedo recuperar la información que tengo almacenada. | 3.91 | 0.98 | −0.73 | 3.12 |
| 15. Puedo recuperar información que tengo almacenada de distintos entornos. | 3.81 | 0.98 | −0.54 | 2.73 |
| 16. Me siento intimidado/a por las estadísticas. | 2.77 | 1.24 | 0.26 | 2.13 |
| 17. Me preocupa sentirme o parecer “tonto” cuando se trata de tomar decisiones basadas en datos. | 2.94 | 1.36 | 0.90 | 1.79 |
| 18. Me intimida el proceso de vincular el análisis de datos con mi práctica de enseñanza. | 2.99 | 1.23 | −0.08 | 2.13 |

| Item | F1 loading (Interpretation and Application) | F2 loading (Technology) | F3 loading (Identification) | F4 loading (Anxiety) |
|---|---|---|---|---|
| 1 | 0.5878 | 0.4442 | 0.8784 | −0.1135 |
| 2 | 0.5830 | 0.4221 | 0.8289 | −0.0862 |
| 3 | 0.6576 | 0.4493 | 0.5311 | −0.2403 |
| 4 | 0.6855 | 0.4522 | 0.5608 | −0.1606 |
| 5 | 0.7089 | 0.3451 | 0.3804 | −0.0503 |
| 6 | 0.7235 | 0.3682 | 0.4351 | −0.1181 |
| 7 | 0.7827 | 0.4679 | 0.5639 | −0.2019 |
| 8 | 0.7654 | 0.4233 | 0.4758 | −0.1707 |
| 9 | 0.7518 | 0.4544 | 0.5276 | −0.1758 |
| 10 | 0.6009 | 0.5762 | 0.3567 | −0.2359 |
| 11 | 0.5969 | 0.6385 | 0.3694 | −0.1529 |
| 12 | 0.4898 | 0.5391 | 0.2790 | −0.1244 |
| 13 | 0.4643 | 0.7463 | 0.3441 | −0.1152 |
| 14 | 0.4119 | 0.8391 | 0.3901 | −0.1418 |
| 15 | 0.4310 | 0.8460 | 0.4326 | −0.1585 |
| 16 | −0.0923 | −0.1239 | 0.0034 | 0.6943 |
| 17 | −0.1694 | −0.1723 | −0.1230 | 0.8315 |
| 18 | −0.1485 | −0.0762 | −0.0962 | 0.7529 |

| Factors | F1 (Interpretation and Application) | F2 (Technology) | F3 (Identification) | F4 (Anxiety) |
|---|---|---|---|---|
| F1 | 1 | | | |
| F2 | 0.5812 | 1 | | |
| F3 | 0.6289 | 0.4728 | 1 | |
| F4 | −0.2163 | −0.1966 | −0.1325 | 1 |

| Factors | Cronbach’s Alpha (criterion > 0.70) | Variance | KMO | Bartlett’s test (p) | RMSEA | CFI | TLI | SRMR |
|---|---|---|---|---|---|---|---|---|
| F1 | 0.888 | 0.33 | 0.896 | <0.001 | 0.085 | 0.900 | 0.881 | 0.066 |
| F2 | 0.855 | 0.26 | | | | | | |
| F3 | 0.857 | 0.22 | | | | | | |
| F4 | 0.802 | 0.11 | | | | | | |

| Item | Factor | Standardized Loading | Standard Error | z-Value | p |
|---|---|---|---|---|---|
| 1 | Identification | 0.912 | 0.016 | 58.497 | <0.001 |
| 2 | | 0.895 | 0.016 | 54.592 | <0.001 |
| 3 | Interpretation and Application | 0.742 | 0.024 | 30.683 | <0.001 |
| 4 | | 0.760 | 0.021 | 35.859 | <0.001 |
| 5 | | 0.720 | 0.023 | 31.811 | <0.001 |
| 6 | | 0.746 | 0.020 | 37.054 | <0.001 |
| 7 | | 0.841 | 0.016 | 51.201 | <0.001 |
| 8 | | 0.800 | 0.019 | 42.362 | <0.001 |
| 9 | | 0.806 | 0.019 | 42.526 | <0.001 |
| 10 | Technology | 0.768 | 0.022 | 35.545 | <0.001 |
| 11 | | 0.789 | 0.020 | 39.236 | <0.001 |
| 12 | | 0.661 | 0.029 | 23.013 | <0.001 |
| 13 | | 0.749 | 0.022 | 34.520 | <0.001 |
| 14 | | 0.894 | 0.014 | 66.132 | <0.001 |
| 15 | | 0.899 | 0.012 | 78.151 | <0.001 |
| 16 | Anxiety | 0.720 | 0.027 | 26.983 | <0.001 |
| 17 | | 0.900 | 0.026 | 34.666 | <0.001 |
| 18 | | 0.765 | 0.029 | 26.629 | <0.001 |
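The standardized loadings in the table above can be summarized per factor with two conventional indices: average variance extracted (AVE, the mean squared loading) and composite reliability (CR). Neither index is reported in the table, so the sketch below is only an illustration of the arithmetic and not the authors' analysis. It assumes the usual Fornell–Larcker formulas with uncorrelated residuals, and the function names are hypothetical.

```python
# Standardized loadings copied from the CFA table above, grouped by factor.
loadings = {
    "Identification": [0.912, 0.895],
    "Interpretation and Application": [0.742, 0.760, 0.720, 0.746, 0.841, 0.800, 0.806],
    "Technology": [0.768, 0.789, 0.661, 0.749, 0.894, 0.899],
    "Anxiety": [0.720, 0.900, 0.765],
}


def average_variance_extracted(lams):
    """AVE: mean of the squared standardized loadings."""
    return sum(lam * lam for lam in lams) / len(lams)


def composite_reliability(lams):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of residual variances).

    Assumes standardized loadings, so each residual variance is 1 - loading^2,
    and assumes uncorrelated residuals.
    """
    squared_sum = sum(lams) ** 2
    residual_sum = sum(1 - lam * lam for lam in lams)
    return squared_sum / (squared_sum + residual_sum)


summary = {
    factor: (average_variance_extracted(lams), composite_reliability(lams))
    for factor, lams in loadings.items()
}

for factor, (ave, cr) in summary.items():
    print(f"{factor}: AVE = {ave:.3f}, CR = {cr:.3f}")
```

Because both indices depend only on the loadings, they can be recomputed directly from the table without access to the raw responses.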

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sandoval-Ríos, F.; Cabezas-Orellana, C.; López-Núñez, J.A. Validation of a Spanish-Language Scale on Data-Driven Decision-Making in Pre-Service Teachers. Educ. Sci. 2025, 15, 789. https://doi.org/10.3390/educsci15070789
