1. Introduction

The landscape of reading has undergone a profound transformation in the 21st century, shifting from predominantly paper-based formats to an increasingly digital and interconnected information space (Delgado et al., 2018; Turner et al., 2020). Marked by the proliferation of digital texts, multimodal platforms, and algorithmic information curation, this shift presents both unprecedented opportunities and complex challenges for readers (Yen et al., 2018). While traditional models of reading comprehension have provided valuable insights into how readers construct meaning, they often fail to account for the nonlinear, multimodal, and rapidly evolving nature of digital reading environments (Paris & Hamilton, 2009; Raphael et al., 2009). Specifically, these models emphasize recall and text-to-text connections, often overlooking the metacognitive questioning strategies required for deep comprehension and critical engagement in digital spaces (Pressley, 2002b).

Traditional comprehension frameworks tend to conceptualize reading as a linear process of extracting information from a static text (Gavelek & Bresnahan, 2009). They emphasize declarative knowledge (knowing that) and procedural knowledge (knowing how), through practices such as identifying main ideas or deploying repair strategies when comprehension breaks down. However, they frequently neglect conditional knowledge (knowing when and why to apply strategies), which is essential for navigating the nonlinear, multimodal, and algorithmically curated nature of digital information (Pearson & Cervetti, 2017). Moreover, such models often marginalize the reader’s active role, underestimating the importance of metacognitive self-questioning as a tool for higher-order comprehension and critical literacy (Cho & Afflerbach, 2017; Wilson & Smetana, 2011). Even highly proficient readers, including graduate students, frequently rely on surface-level strategies when engaging with digital texts, missing opportunities for deeper critical analysis (Adams et al., 2023). These limitations challenge the assumption that comprehension naturally leads to criticality without deliberate metacognitive support.

As digital environments become the dominant medium for information consumption, readers face evolving cognitive and sociocultural demands that fundamentally reshape how comprehension occurs (Cho et al., 2018; Leu et al., 2011). Digital reading is inherently networked, requiring the construction of meaning across multiple, nonlinear pathways that span sources, platforms, authors, and contexts (Coiro, 2021; Leu et al., 2018; Naumann, 2015). Algorithmic curation further complicates this terrain by privileging some perspectives while obscuring others (Nash, 2024). To navigate such information ecologies, readers must engage in ongoing metacognitive regulation, continually asking themselves: What is my reading goal? Which links are worth following? How does this hyperlinked information relate to the main argument? (Raphael et al., 2009). In this context, self-questioning becomes not just a cognitive support but questioning-as-thinking (Wilson & Smetana, 2011), or the active generation of purposeful questions to direct comprehension, interpretation, and critique.

Metacognitive strategies are increasingly recognized as essential tools for supporting online readers (Cho & Afflerbach, 2015; Cho et al., 2017; Uçak & Kartal, 2023; Zenotz, 2012). These intentional cognitive moves enable readers to integrate multimodal content, navigate nonlinear structures, and critically evaluate sources (Kiili & Leu, 2019). Without such regulation, digital reading is prone to fragmentation, superficiality, and cognitive overload (Schurer et al., 2023; Uçak & Kartal, 2023). While traditional models of metacognitive comprehension often emphasize monitoring at the level of a single text, digital literacy demands flexible, goal-driven strategies that enable readers to move across texts, assess credibility, and synthesize across modalities. In this reconceptualization, the internet becomes not simply a source of information, but a dynamic, deictic environment requiring sustained metacognitive engagement (Leu et al., 2018).

For educators, these shifts demand more than technological integration; they require a foundational rethinking of literacy instruction. Metacognitive strategies must be reimagined for digital contexts in ways that support students’ agency as readers, evaluators, and synthesizers of online content. While an emerging body of research explores the potential of metacognition to support digital reading (Cho & Afflerbach, 2015; Cho et al., 2017; Uçak & Kartal, 2023; Wu, 2014; Zenotz, 2012), much of this scholarship remains research-focused rather than instructionally applicable.

To address this gap, this paper introduces the Digital Metacognitive Question–Answer Relationship (dmQAR) Framework, a theoretical adaptation of Raphael’s (1982, 1984, 1986) widely used Question–Answer Relationship (QAR) model and the questioning-as-thinking framework (Wilson & Smetana, 2011). Grounded in Rosenblatt’s (1978) transactional theory of reading, which conceptualizes meaning-making as relational, dynamic, and context-dependent, dmQAR extends these insights into the digital domain. It maps metacognitive questioning strategies onto the architecture of digital environments, accounting for hypertext, multimodality, and algorithmic curation (Mertens, 2023). Offering explicit instructional strategies, scaffolds, and lesson adaptations, the framework is designed to help teachers embed structured metacognitive questioning into digital literacy curricula. Its goal is to cultivate reflective, independent, and critically engaged digital readers.

Guided by the following research questions, the paper integrates cognitive, sociocultural, and critical traditions in literacy studies:

How do digital literacy environments shape the need for metacognitive questioning strategies?

What types of self-questioning facilitate deeper comprehension and critical engagement with digital texts?

How can educators scaffold students’ metacognitive questioning skills to foster critical digital literacy?

The sections that follow outline the theoretical foundations of metacognitive questioning, present the dmQAR Framework, and explore implications for digital literacy instruction.

3. Theoretical Framework

To conceptualize metacognitive questioning for digital readers, we integrate three theoretical perspectives: metacognitive knowledge, experiences, and regulation (Ozturk, 2016); questioning-as-thinking; and critical literacy. Together, these frameworks illuminate how readers monitor cognition, interact dynamically with texts, and critique the sociopolitical forces shaping information in digital spaces.

3.1. Metacognitive Reading Practices in Digital Literacy

Metacognition, or thinking about thinking, is central to self-regulated learning and effective comprehension (Flavell, 1979). The literature most consistently divides metacognition into three concepts. The first, metacognitive skills, includes processes of self-regulation such as monitoring, evaluation, and reflection. These skills help readers recognize breakdowns in understanding and adjust strategies accordingly. The second, metacognitive knowledge, addresses knowledge of the self, the task, and appropriate strategies. Once readers develop regulation skills, they can engage this knowledge to modify their behavior. The third, metacognitive experiences, involves the affective and cognitive responses that accompany learning (Barzilai & Zohar, 2012, 2014, 2016). For example, recognizing when a multimodal element distracts from a reading goal, or when conflicting information across sources induces uncertainty, constitutes a metacognitive experience.

However, metacognition is not limited to knowledge or strategy. It also involves epistemic thinking, or one’s beliefs about knowledge and knowing (Hofer, 2016). Epistemic metacognition includes both the ability to regulate one’s learning and the influence of epistemological beliefs on that regulation (Mason et al., 2010). In digital reading, these beliefs shape how readers assess credibility. For instance, a reader’s view of Wikipedia as a “crowdsourced” resource may influence their trust in its content, just as a generative AI blurb in a search engine might prompt either skepticism or unwarranted acceptance. Engaging epistemic metacognition helps readers navigate the blurred boundaries between reliable and dubious online content (Barzilai & Zohar, 2014, 2016).

Since digital environments intensify metacognitive demands through their architecture and design (Marsh & Rajaram, 2019; Pae, 2020), epistemic awareness alone is not sufficient. Metacognitive abilities are effective only when enacted by a reader who is dispositionally inclined to be metacognitive (Kuhn, 2021). In digital contexts, this means being aware of the following: (1) online environments require intentional metacognitive engagement (Barzilai & Zohar, 2016), and (2) these environments are often structured to inhibit that very engagement (Mertens & Kohnen, 2022; Nash, 2025). Readers who recognize these challenges are more likely to deploy strategies like selective attention, goal-setting, and critical questioning to manage cognitive load and sustain purpose-driven engagement with digital content (Cho & Afflerbach, 2017).

Substantial scholarship has demonstrated that metacognitive interventions enhance digital reading. Studies show benefits for English learners (Uçak & Kartal, 2023), improvements in synthesizing across multiple sources (List & Lin, 2023), and increased strategic decision-making (Wu, 2014). Yet much of this research remains theoretical or exploratory, offering limited guidance for classroom instruction. As digital reading becomes a dominant mode of knowledge construction, educational research must prioritize pragmatic frameworks for explicitly teaching metacognitive regulation during digital engagement.

3.2. Critical Literacy and Digital Environments

Grounded in Freire and Macedo’s (1987) conception of reading as both cognitive and sociopolitical, critical literacy emphasizes the interrogation of power structures embedded in texts. This perspective is especially salient in digital environments where platform design, algorithmic curation, and commercial interests filter what readers see and how they see it (Lewison et al., 2015; Luke, 2012; Mertens & Kohnen, 2022). Digital spaces are often engineered to elicit specific behaviors (e.g., clicks, engagement, emotional reactions), many of which benefit platforms more than users (Nash, 2025). Readers must interrogate how and why certain texts appear, asking: Who benefits from this representation? What perspectives are excluded? How is my understanding being shaped by platform design or algorithmic filtering? (Janks et al., 2013).

Embedding critical literacy into digital reading instruction involves more than identifying bias. It requires cultivating metacognitive questioning habits that empower students to critique, resist, and transform digital discourse. Through the integration of metacognitive, transactional, and critical lenses, the dmQAR Framework supports the structured self-questioning practices necessary for navigating a digital information ecosystem shaped by sociotechnical forces.

3.3. Question–Answer Relationship and Questioning-As-Thinking

Questioning lies at the heart of reading comprehension, transforming readers from passive recipients into active constructors of meaning. Through questioning, readers engage in metacognitive skills (e.g., self-regulation), activate knowledge (e.g., task or strategy awareness), and experience cognitive dissonance or curiosity that can drive deeper engagement. In digital spaces, these dimensions of metacognitive questioning are essential for maintaining purposeful interaction with online content.

Raphael’s (1982, 1984, 1986) original Question–Answer Relationship (QAR) framework categorizes questions according to their relation to the text: “Right There” questions target explicit details, “Think and Search” questions synthesize across text segments, “Author and Me” questions require integration of textual and personal knowledge, and “On My Own” questions rely primarily on prior experience. QAR helps make the cognitive demands of questions visible and has been widely used to support strategic comprehension development (Raphael & Au, 2005; Wilson & Smetana, 2011).

However, while QAR provides a strong developmental scaffold for print environments, digital reading requires a more expansive approach. Readers must now synthesize information across hyperlinked documents, verify source credibility, interpret multimodal elements, and account for algorithmic filtering (Wineburg & McGrew, 2019; Coiro, 2011; Mertens & Kohnen, 2022). These additional layers necessitate dynamic, self-generated questioning rather than teacher-led prompts. In print-based classrooms, teachers scaffold attention and inference through guided questioning (Pressley, 2002a; Ness, 2016). Online, readers must internalize these questioning strategies and apply them independently across fragmented and interactive content. Without these self-questioning habits, readers are vulnerable to shallow engagement and misinformation (Cho & Afflerbach, 2017; Kiili & Leu, 2019).

In response, the dmQAR Framework extends Raphael’s original taxonomy to meet the epistemic, cognitive, and structural demands of digital reading. It retains the developmental progression from comprehension to critique but embeds this sequence within the realities of online engagement. The framework scaffolds questioning about hyperlinks, multimedia, source attribution, and ideological framing, equipping students to navigate today’s networked, multimodal texts with purpose, reflection, and critical awareness.

4. The Digital Metacognitive Question–Answer Relationship Framework

This framework adapts QAR (Raphael, 1982, 1984, 1986) and metacognitive questioning-as-thinking (Wilson & Smetana, 2011) into a flexible, developmentally sensitive model for scaffolding readers through the cognitive complexities of digital reading. The ability to engage in metacognitive questioning does not emerge spontaneously; rather, it develops progressively as readers gain experience in regulating comprehension and critically engaging with texts (Perkins, 1992). In an era where readers encounter a vast array of digital texts with varying formats, multimodal structures, credibility challenges, and algorithmic curation (Cho et al., 2018; Ness, 2016), purposeful questioning becomes essential for navigating and critically engaging with information.

However, traditional QAR frameworks, developed for static print environments and designed to occur after reading, do not fully address the demands of reading in digital spaces characterized by nonlinearity, hypertextuality, and fragmented authorship. The dmQAR Framework directly responds to these challenges by bridging basic comprehension and higher-order metacognitive processes, such as self-regulation, lateral validation, and critical synthesis, during online reading.

Designed to be adaptable across age groups and instructional contexts from primary to post-secondary, the dmQAR provides educators with a structured yet flexible guide to fostering purposeful, reflective, and critically engaged digital readers. Specifically, the dmQAR reinterprets each traditional QAR category through the lens of digital literacy demands. The following sections outline how foundational comprehension (Right There), relational analysis (Think and Search), critical evaluation (Author and Me), and reflexive application (On My Own) are reconfigured for online environments and describe practical instructional strategies to scaffold these metacognitive practices.

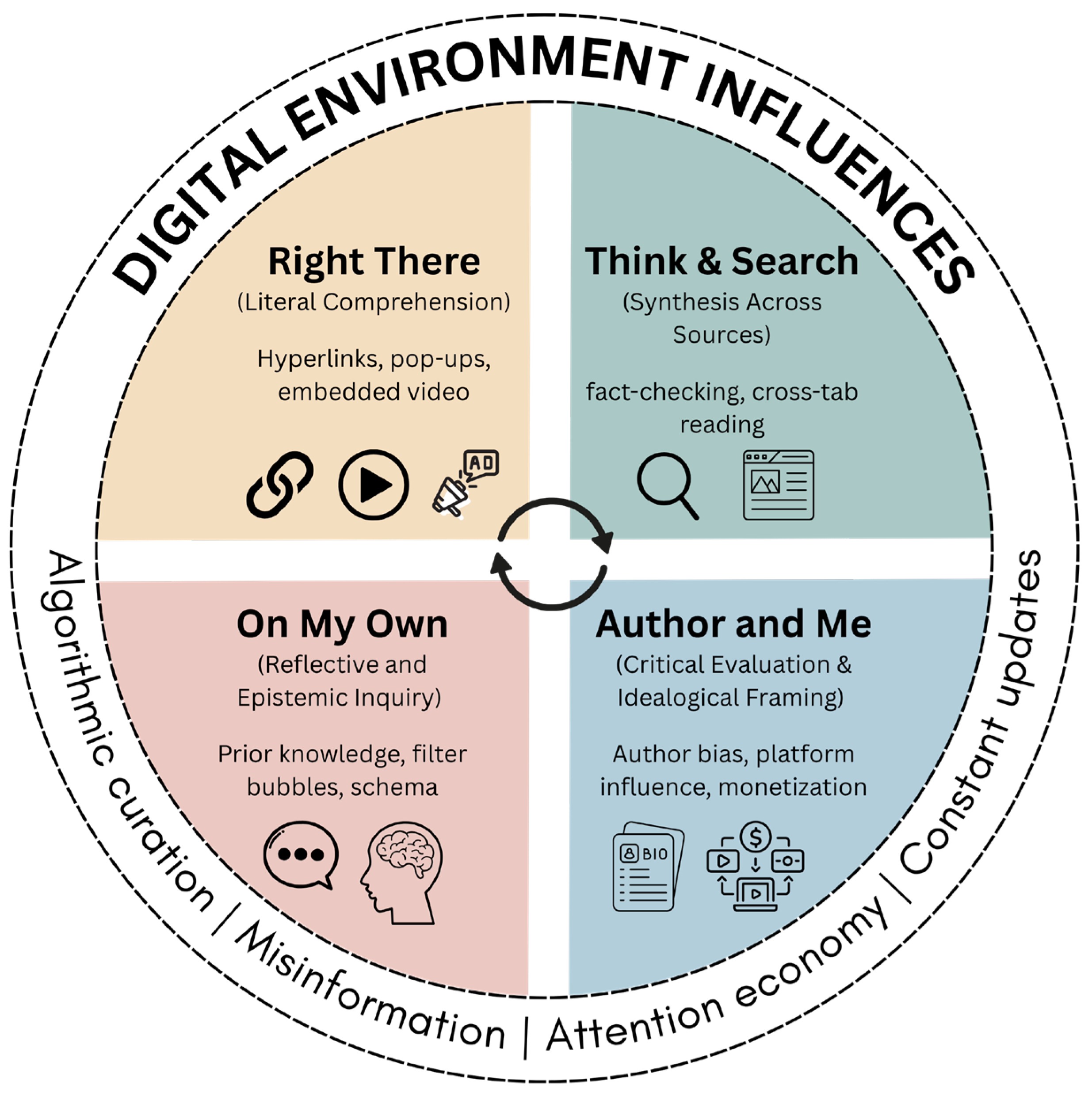

The original QAR framework divides question types into two broad categories: In the Text and In My Head. These general categories gave readers clues as to where information can be found when answering questions. However, because the digital environment algorithmically adapts to provide different experiences for different users, it can be challenging to delineate clearly whether an answer is to be found within a given text or within our minds. Since so much of what we encounter online is controlled by digital environments, the firm boundaries between these question types become nebulous. For this reason, dmQAR focuses on four categories: Right There, Think and Search, Author and Me, and On My Own. Though we present these as separate categories, we recognize that proficient digital readers ask a combination of each type of question as they read online. To support understanding and classroom application, the dmQAR is represented as a circular visual model (see Figure 1).

4.1. “Right There” During Online Reading

“Right There” questions traditionally ask readers to locate highly literal information within a passage. Often, these questions are designed to guide readers directly to the language of the text itself. However, in digital environments, surface-level retrieval is complicated by multimodal layering, hyperlinking, and variable credibility, requiring readers to attend both to textual content and digital affordances. For the purposes of the dmQAR Framework, “Right There” questions refer to information that can be found within a given webpage, without leaving the page, but that may require navigating multimodal features or discerning subtle credibility cues.

To support critical engagement even at this basic level, readers must interrogate digital texts more deliberately. Example questions include:

How did I locate this article/webpage?

Does this website/article meet my purpose for reading?

What can I learn from understanding the organization of this article/webpage?

What sources does this article cite, and are they reputable?

Does this text present multiple perspectives, or is it biased?

Whose perspective is included in this piece?

Is this content fact-based, opinion-based, or designed to persuade?

What is the main argument of this text?

How does this text construct its argument?

These questions move beyond surface retrieval to prompt students to consider how they were directed to the article, as well as its credibility, purpose, and the structure of single-page digital texts, thereby laying a foundation for deeper metacognitive regulation.

Educators can scaffold “Right There” engagement by implementing a variety of active strategies. Digital annotation tools such as Hypothes.is, Perusall, or Kami allow students to highlight key points, define unfamiliar terms, and insert marginal reflections as they read (Leu et al., 2011). Online discussion boards or collaborative Google Docs extend this engagement into social learning environments, where readers can compare main ideas and interpretations with peers, reinforcing active comprehension (Cho et al., 2017). Summarization exercises, in which students restate the main argument of a digital article in their own words, further ensure that comprehension is not passive but generative. Instructors can also model comprehension questioning by generating basic “Right There” prompts and gradually shifting this responsibility to students as they build fluency in self-monitoring comprehension (Behrman, 2006).

Importantly, comprehension strategies in digital reading must also address multimodal features. Videos, infographics, and interactive elements embedded within texts are increasingly common, and their integration can either reinforce or obscure meaning (Wilson & Smetana, 2011; Kiili & Leu, 2019). When engaging with video content, for example, students might be guided to take structured notes summarizing how audiovisual elements support (or conflict with) the central argument. When interacting with infographics, students might evaluate whether the visual data extends or distorts the accompanying text. Explicit instruction in cross-modal comprehension is thus essential for maintaining coherence across modes (Leu et al., 2011).

Ultimately, comprehension at the “Right There” level acts as a cognitive safeguard against fragmentation in digital environments. Without a strong foundation in basic meaning-making, students are less equipped to evaluate credibility, integrate sources, or engage critically with digital texts. Foundational comprehension strategies must therefore be explicitly taught and reinforced as the first step toward robust digital literacy.

4.2. “Think and Search” During Online Reading

In traditional print contexts, “Think and Search” questions required readers to piece together information from different parts of a single text. However, in digital environments, this skill must expand significantly: readers must evaluate fragmented, multimodal, and networked sources while making real-time credibility judgments. Limiting “Think and Search” to a single source is inadequate for the contemporary Internet reader. Online reading demands that readers synthesize information across hyperlinked documents, embedded media, and cross-platform contexts (Leu et al., 2011).

Unlike print texts that offer linear sequencing, digital texts often disperse meaning across links, videos, sidebars, and comment threads. Readers must not only locate explicit information but also assess how various elements (e.g., hyperlinks, visuals, related articles) construct meaning, shape arguments, or obscure key details (Cho et al., 2017; Coiro, 2021; Turner et al., 2020). Readers who cannot make these inferences may misinterpret implicit messages or miss critical relationships between ideas. Thus, “Think and Search” questions in online environments scaffold students’ ability to contextualize and critically synthesize information, bridging basic comprehension and higher-order analysis.

Effective “Think and Search” questioning in digital contexts encourages students to interrogate structural features, such as hyperlinking and multimodal integration. Hyperlinks often suggest authority or relatedness, but readers must ask: Why was this link included? What perspectives does it amplify or omit? Similarly, videos, infographics, and interactive tools embedded within articles can either enrich or manipulate meaning. Readers must determine whether these additions clarify the main argument or distract from it (Kiili & Leu, 2019).

Moreover, online content often contains surface indicators of credibility (e.g., citations, author bios, professional design) that may not reflect actual reliability. As Barzilai and Zohar (2012) caution, readers must learn to interrogate the structure of a digital text, not merely its surface features. Students should therefore be taught to ask the following questions:

How does following this hyperlink help me achieve my purpose online?

Does this hyperlink lead to a reputable source?

Does the linked content reinforce or challenge the main argument of the text?

What perspectives are absent in the sources being linked?

Who shared this resource, and why? What might they want me to believe?

To develop these skills, educators can engage students in targeted activities that foreground source comparison and credibility analysis. One effective practice is Comparing News Sources, where students examine how different media outlets frame the same event, noting shifts in language, tone, and ideological perspective. This exercise helps students detect bias, agenda-setting, and narrative shaping across sources.

Another critical instructional focus is Recognizing Sponsored Content. Many online texts blend advertising with editorial material, obscuring commercial interests behind apparent neutrality. This architecture of the internet requires that readers consistently approach text with an evaluative perspective while engaging in metacognitive practices. The features of online environments provide opportunities for engaging with complex, diverse, and unfamiliar forms of information; within this environment, metacognitive practices can help ensure that students are moving purposefully towards a learning goal (Barzilai & Ka’adan, 2017). Teaching students to identify native advertising, sponsored posts, and conflict-of-interest disclosures sharpens their ability to question the motivations underlying information presentation.

In sum, effective “Think and Search” questioning enables readers to move from isolated comprehension toward relational, contextualized understanding. However, interrogating links, structure, and source credibility naturally leads readers to deeper critical inquiries: not only how a text was constructed, but why. For this reason, “Think and Search” practices build a necessary bridge to “Author and Me” questioning, where readers evaluate the author’s purpose, perspective, and underlying assumptions.

4.3. “Author and Me” During Online Reading

“Author and Me” questions challenge readers to move beyond information retrieval toward making inferences about a text’s framing, purpose, and ideological positioning. In digital environments, this inferential reading is particularly essential, as persuasive bias, commercial agendas, and emotional framing are often obscured by platform architectures. Unlike traditional print texts, online content frequently lacks transparent sourcing, editorial review, or clear authorship (Leu et al., 2011), making critical questioning indispensable for evaluating credibility.

Digital texts, especially those circulating via social media, blogs, or opinion-based news outlets, are often designed to persuade, evoke emotional responses, or reinforce ideological worldviews. In contrast to journalistic standards historically associated with mainstream media, many digital sources operate without formal accountability structures (Metzger & Flanagin, 2013). Therefore, readers must be taught to interrogate not only what is presented, but how and why it is framed. Educators can cultivate these competencies through explicit instruction in source evaluation, fact-checking, and digital credibility assessment. Students should regularly engage in structured questioning practices such as:

How is this text or these texts meeting my reading purpose?

Who is the author of this text, and what are their credentials?

Who shared this text, and how does that sharing context shape interpretation?

How might the hosting platform’s design influence which perspectives are amplified?

Without such critical reading habits, students risk becoming passive consumers of misinformation and commercially motivated narratives, and more susceptible to confirmation bias (Kiili et al., 2008).

One key instructional strategy is lateral reading (Wineburg & McGrew, 2019). Skilled readers cross-reference claims by consulting multiple independent sources rather than trusting information at face value. The “Author and Me” questions a reader asks during reading help construct knowledge from multiple information sources by enabling the reader to “apply in a coordinated and adaptive fashion, a complex set of epistemic strategies such as evaluating source reliability, corroborating claims, and integrating information” (Barzilai & Ka’adan, 2017, p. 194) across sources. Practical classroom applications include verifying an author’s credentials, checking citations for accuracy, and corroborating claims through reputable fact-checking organizations, like Snopes or FactCheck.org. Students should practice the following:

Searching for the same claim across diverse sources.

Investigating whether cited studies are accurately represented.

Identifying financial sponsorships or conflicts of interest that might bias reporting.

Moreover, critical literacy practices must extend to examining how financial structures influence content production. Native advertising, clickbait headlines, and monetization strategies often obscure commercial intent (Lewison et al., 2002). Classroom activities can guide students to analyze who funds media outlets, what advertisements accompany particular articles, and how financial incentives shape journalistic framing.

Yet even when sources appear credible, students must recognize that digital texts are mediated through algorithmic curation and corporate platform structures (Coiro, 2021; Behrman, 2006). In working with the complex context and content of information sources, readers can engage with their own metacognitive knowledge about which epistemic strategy to apply (Barzilai & Ka’adan, 2017). For example, readers can critically reflect on how search engines, social media filters, and ownership consolidation privilege certain perspectives while marginalizing others. “Author and Me” questioning can help students step outside of individual texts to interrogate systemic structures by asking the following questions:

What political, economic, or social forces influence this text?

How does algorithmic curation affect what I encounter?

What ideological assumptions are embedded in this content?

Who benefits and who is disadvantaged by the way this issue is framed?

Building on these practices, educators can integrate exercises that analyze social media algorithms. Platforms tailor content based on users’ engagement histories, reinforcing existing beliefs and creating so-called filter bubbles. By comparing how the same social or political issue is presented across Twitter, Facebook, TikTok, and major news outlets, students can explore the following:

Who is speaking across platforms?

Who is absent from these narratives?

How do platform architectures amplify or distort issues differently?

Encouraging students to vary search terms, for example, comparing “climate change hoax” versus “climate change evidence,” also reveals how algorithms prioritize information differently depending on phrasing (Coiro, 2021). Such exercises foster awareness that access to information itself is curated, partial, and politically charged.

In brief, “Author and Me” questioning within the dmQAR Framework prepares readers not only to comprehend digital texts but also to interrogate the broader power structures that shape digital discourse. By embedding structured, critical questioning into literacy instruction, educators can equip students to challenge dominant narratives, seek out marginalized voices, and participate ethically and thoughtfully in digital spaces.

4.4. “On My Own” During Online Reading

“On My Own” questions traditionally ask readers to rely on their background knowledge rather than retrieving information directly from a text. In digital reading environments, this type of metacognitive questioning acquires heightened importance. According to schema theory, comprehension is an interactive process in which new information is interpreted through the lens of pre-existing cognitive frameworks (Anderson & Pearson, 1984). Readers who possess rich background knowledge are better equipped to make inferences, recognize implicit assumptions, and synthesize across fragmented digital sources (Bråten et al., 2011).

However, while prior knowledge can enable deeper inquiry, it can also constrain questioning in digital spaces. Algorithmic curation often reinforces confirmation bias by exposing readers predominantly to content that aligns with their existing beliefs (Wineburg & McGrew, 2019). Without broad, critical schemas, students may accept information at face value, misinterpret sources, or fail to recognize missing perspectives (Barzilai & Zohar, 2012). Thus, “On My Own” questioning highlights the critical role of activating and expanding prior knowledge during digital reading.

Consider, for example, a student with a strong foundation in climate science. Encountering a news article that oversimplifies or misrepresents scientific consensus, such a student may immediately ask: What key information is omitted? How does this portrayal compare with established scientific findings? Conversely, a student without this background may not recognize why skeptical sources position climate data in misleading ways (Mertens, 2023). These differences underscore the metacognitive necessity of equipping readers to recognize gaps, contradictions, and biases when engaging with digital texts.

To foster deeper critical engagement, educators must intentionally scaffold background knowledge. Pre-reading activities can introduce foundational concepts before students encounter complex texts. Comparative reading assignments can expose students to diverse perspectives on a single topic, helping them recognize ideological framing and expand their cognitive schemas. Guided questioning models can explicitly demonstrate how prior knowledge shapes inquiry, with teachers modeling how to activate and apply existing knowledge when evaluating online sources.

Beyond content scaffolding, reflective practices help students interrogate how their personal experiences shape interpretation. These practices matter because much of the digital reading people do is driven by curiosity rather than by formal schooling. Critical literacy involves not only identifying external bias but also examining one’s internalized beliefs and habits of attention. Activities that ask students to track their digital habits, such as analyzing the types of sources they engage with over a week, can reveal content patterns and cognitive blind spots. Key reflective questions include the following:

What types of voices am I most often exposed to?

How do my beliefs influence how I interpret information?

What important perspectives might I be missing?

Building on these reflections, students can be encouraged to ask relational questions when reading digitally:

What connections can I make between this issue and my own experiences?

What additional information would help me better understand this issue?

How might different communities or stakeholders interpret this information differently?

In sum, while strong background knowledge enhances relational and critical questioning, gaps in schema and algorithmic curation threaten to narrow students’ digital comprehension. The dmQAR Framework therefore emphasizes explicit instruction not only in digital text analysis but also in the cultivation, activation, and critical expansion of prior knowledge. “On My Own” questioning fosters readers who are not merely passive absorbers of digital content, but active interrogators of meaning-making, bias, and epistemological complexity in online environments.

5. Instructional Applications of dmQAR in Classrooms

To support classroom implementation, we integrate practitioner-based strategies drawn from research on digital comprehension, critical questioning, and metacognitive scaffolding (e.g., Cho et al., 2017; Wineburg & McGrew, 2019; Ness, 2016). The dmQAR Framework provides a structured approach for fostering self-questioning strategies aligned with the cognitive demands of digital reading. Because online spaces present new affordances and constraints compared to traditional print, metacognitive questioning must evolve to help students slow down, reflect, and engage critically with digital texts. Given the architecture of online spaces, specific question-and-answer relationships shift to accommodate the affordances and challenges of hypertextuality, multimodality, and algorithmic influence. While this framework scaffolds students in reflecting more deliberately during online reading, how it is implemented in classroom practice will significantly influence its impact. For the model to succeed, educators must combine explicit instruction, scaffolded inquiry, and blended use of digital and non-digital tools to support students’ critical engagement with digital texts.

While the framework is designed to support all learners, equitable implementation requires attention to disparities in device access, internet connectivity, and digital reading experience. Teachers may need to scaffold digital navigation and metacognitive questioning differently for students with limited prior exposure to online texts (Warschauer & Matuchniak, 2010).

5.1. Explicit Instruction and Teacher Modeling

One crucial foundation for developing metacognitive questioning is explicit instruction through teacher modeling. Traditional comprehension instruction has often relied on teacher-led questioning, where educators construct questions to guide students’ understanding. In this model, educators act as facilitators, helping students recognize patterns, identify textual gaps, and engage in structured discussion. By modeling these questioning strategies, teachers provide students with a cognitive roadmap for critically engaging with digital texts (Ness, 2016).

Think-alouds remain one of the most effective methods for explicitly teaching self-questioning (McKeown et al., 2009; Wilson & Smetana, 2011). Instructors can verbalize their thought processes while engaging with texts, modeling how to ask questions at different levels of the dmQAR Framework. Through real-time metacognitive modeling, teachers articulate comprehension gaps, evaluate textual reliability, and challenge biases (Wilkinson & Son, 2011). Think-alouds also highlight transferable questioning habits that apply across both digital and traditional literacy environments (Raphael & Au, 2005). For example, while reading a controversial news article, a teacher might include the following questions in their model:

Right There: “Does this webpage meet my purpose for learning?”

Think and Search: “I see that an expert is cited. Should I look up their credentials to verify credibility?”

Author and Me: “The author is making a strong claim. What evidence supports it?”

On My Own: “What is this article about? How does it intersect with what I already know or believe about the topic?”

Teachers would pose these questions while actively demonstrating online reading about a topic. By doing so, the teacher illustrates the interactive nature of online reading and the necessity of constant questioning to make progress toward a learning goal. After teacher think-alouds, engaging the class in collaborative think-alouds, where students take turns verbalizing their thought processes, further supports shared metacognition and reinforces the epistemic thinking that online reading requires. These practices enable students to internalize questioning strategies that they can later apply independently during digital reading.

Table 2 illustrates classroom-aligned applications of each dmQAR category, highlighting instructional strategies, tools, and learning outcomes.

5.2. Shifting Toward Student-Driven Questioning

While purposeful teacher-led questioning scaffolds student engagement with strategic digital reading, overuse of teacher-led instruction risks promoting passive learning (Pearson & Gallagher, 1983). To foster genuine cognitive autonomy, the dmQAR Framework suggests that instruction must gradually shift from teacher-led modeling to student-driven questioning. The goal is not merely for students to answer pre-constructed prompts, but to internalize a flexible habit of inquiry that persists across digital contexts (Pearson & Cervetti, 2017).

Student-generated questioning deepens comprehension, enhances engagement, and promotes self-regulated learning (Chin, 2006; McNamara & Magliano, 2009). This shift toward student agency aligns with broader calls to reevaluate traditional instructional and assessment structures in favor of practices that prioritize cognitive autonomy and authentic engagement (Crogman et al., 2023). Research suggests that, when students formulate their own questions, they monitor comprehension more effectively and engage more deeply with texts (Ness, 2016). This skill is particularly essential in digital environments, where algorithmic curation demands that readers independently identify gaps, biases, and credibility challenges (Cho & Afflerbach, 2017).

The dmQAR Framework supports this transition by scaffolding the process of independent questioning. Effective instructional strategies include collaborative questioning groups, where students co-construct questions about a digital text, refining their ability to detect bias, synthesize multiple sources, and assess credibility (King, 1994). For example, in a middle school classroom exploring media coverage of a current event, students might use the dmQAR structure to generate “Think and Search” questions that compare how different news outlets report on the same issue. Using graphic organizers, they examine variations in framing, highlight which perspectives are emphasized or excluded, and analyze how images or layout shape reader interpretation. A follow-up journal reflection invites students to connect these insights to their personal digital reading habits, reinforcing both metacognitive transfer and critical digital citizenship (Crogman et al., 2023).

Maintaining questioning logs, either digitally or on paper, further encourages students to reflect on their inquiries and build a personal repertoire of metacognitive questioning strategies.

5.3. Digital and Non-Digital Tools for Metacognition

While digital reading presents challenges, such as nonlinear navigation, multimodal distractions, and information overload, interactive digital tools can help scaffold metacognitive questioning practices. Annotation and collaborative discussion platforms, like Perusall, Hypothes.is, and Kami, enable students to engage in layered, social reading experiences that promote deeper questioning. These tools offer multiple affordances: embedding instructor-posed guiding questions, fostering peer-generated discussion, and supporting multimodal integration (Crogman et al., 2025). The last of these encourages students to critically analyze hyperlinks, embedded visuals, and video content alongside traditional textual elements.

For example, a high school teacher guiding students through an online article on climate change might use Hypothes.is to embed prompts that ask students to assess the credibility of cited sources, highlight persuasive language, and comment on embedded graphs. In response, students collaboratively annotate the article, debate reliability in the margins, and summarize multimodal features in threaded discussions. These shared annotations offer a trace of evolving comprehension and deepen awareness of how digital features influence interpretation.

However, digital scaffolding alone is insufficient for cultivating enduring metacognitive habits. Non-digital strategies, like reflection journals, provide an important complement to digital tools by offering opportunities for deliberate, extended questioning beyond the immediacy of online interaction. Research suggests that written reflection reinforces self-regulation, deepens inquiry, and enhances memory retention (Zimmerman & Schunk, 2011). After engaging with a complex digital text, students might reflect in journals:

Right There: “What claims were made? Were sources cited?”

Think and Search: “What perspectives were missing? How did different elements contribute to meaning?”

Author and Me: “What ideological assumptions are embedded in this content?”

On My Own: “How do my prior experiences shape my interpretation of this issue?”

Reflection journals allow students to track evolving interpretations over time and build metacognitive self-awareness that transcends individual reading sessions.

Similarly, Socratic seminars offer rich opportunities for collective inquiry into digital texts. In these dialogues, students pose and respond to open-ended questions, modeling the kinds of critical engagement and multi-perspective evaluation necessary for digital literacy (Chin, 2006). By facilitating discussions of digital news articles, social media debates, or multimedia texts, educators can nurture habits of flexible, dialogic questioning that transfer to independent digital engagement.

Educators are increasingly integrating immersive technologies to extend this reflective space (Crogman et al., 2025). By combining digital tools for scaffolding with non-digital practices for reflection and synthesis, educators ensure that metacognitive questioning becomes a durable cognitive habit rather than a medium-specific technique. This blended approach strengthens the dmQAR Framework, cultivating students’ ability to flexibly interrogate meaning across screen-based and traditional literacy contexts (Pearson & Cervetti, 2017).

Embedding both digital and non-digital scaffolds for metacognitive questioning, the dmQAR Framework promotes a flexible and transferable critical literacy. Students trained through explicit modeling, gradual autonomy, and multimodal inquiry develop not just comprehension, but the critical habits necessary for engaged, ethical participation in the digital information landscape. As the boundaries between reading, thinking, and communicating continue to evolve, equipping students with structured self-questioning strategies will be essential for fostering thoughtful, discerning, and resilient digital citizens.

6. Future Directions for Practice

This paper proposes and justifies the dmQAR Framework as a critical adaptation of the traditional QAR model for contemporary digital literacy instruction. In an era increasingly dominated by hyperlinked, multimodal, and algorithmically curated texts, the dmQAR Framework reconceptualizes comprehension as an active, inquiry-driven, and metacognitive process. Drawing from metacognitive theory (Flavell, 1979; Ozturk, 2016), transactional reading theory (Rosenblatt, 1978), and critical literacy (Freire & Macedo, 1987; Luke, 2012), the dmQAR systematically integrates self-regulated questioning into online reading practices.

The framework advances digital literacy pedagogy by providing structured scaffolds for moving students beyond surface-level comprehension toward deeper relational analysis, critical evaluation of sources, and reflexive engagement with digital architectures. Unlike traditional models, which assume meaning is constructed linearly from stable texts, the dmQAR reflects the nonlinearity and epistemological complexity of reading in networked digital environments (Coiro, 2021; Turner et al., 2020; Leu et al., 2018).

Practically, the dmQAR offers educators actionable pathways to teach metacognitive questioning across a range of instructional contexts. Strategies such as digital annotation, lateral reading practices, collaborative questioning, and reflection journals ensure that questioning becomes a transferable literacy habit rather than an isolated comprehension exercise. In doing so, this framework positions metacognitive questioning not as ancillary, but as foundational to critical participation in digital information ecosystems.

7. Future Research Directions

While this paper provides a theoretical and pedagogical foundation, the dmQAR Framework opens several critical avenues for future empirical research. First, future studies should investigate the instructional impact of the dmQAR on students’ digital comprehension, evaluation of source credibility, and resistance to misinformation. Experimental and quasi-experimental studies could compare students taught with traditional comprehension instruction to those receiving explicit dmQAR scaffolding, measuring outcomes such as critical questioning behaviors, integrative synthesis across digital sources, and susceptibility to biased or false information (Wineburg & McGrew, 2019; List & Lin, 2023).

Second, longitudinal research could track the development of autonomous metacognitive questioning habits over time. Do students who are initially scaffolded through dmQAR instruction continue to employ self-questioning strategies in independent digital reading? What factors sustain or inhibit the internalization of these metacognitive skills across contexts? Such research must also examine how the framework functions in multilingual classrooms, where students bring diverse linguistic repertoires, cognitive strategies, and culturally situated questioning practices that may shape digital comprehension and metacognitive engagement.

Third, given the proliferation of AI-enhanced reading platforms and adaptive annotation tools, research should examine how such technologies influence self-questioning practices. Tools like Perusall, Newsela, and AI-driven personal tutors increasingly embed questioning prompts within digital texts; however, it remains unclear whether these affordances foster deeper inquiry or promote overreliance on external cues (Ademola, 2024; Kiili & Leu, 2019). Future studies should explore how adaptive scaffolds interact with student-driven metacognitive development.

Fourth, as digital media continues to evolve toward hyper-modal and participatory platforms (e.g., TikTok, Instagram, Discord), research must investigate how self-questioning differs across media environments (Crogman et al., 2025). Does questioning about credibility and ideological framing function differently in video-based versus text-based or infographic-based environments? How can dmQAR strategies be tailored to meet the cognitive demands of emerging digital formats (Greenhow et al., 2020)?

Finally, equity-focused research is needed to ensure that dmQAR practices benefit all students, not just those with privileged access to devices, stable internet, or prior digital literacy experience. While prior work (e.g., Warschauer & Matuchniak, 2010) has illuminated longstanding gaps, pandemic-era data reveal even greater disparities in students’ digital reading skills (García & Weiss, 2020). Future studies should examine how dmQAR interventions can be designed for inclusivity, addressing access gaps and supporting historically marginalized learners’ critical digital participation.

8. Preparing Students for Future-Ready Literacy

As digital information environments become increasingly complex, nonlinear, and politically charged, ensuring that students develop the skills to interrogate, synthesize, and apply knowledge in meaningful ways is no longer optional. Rather, it is a fundamental prerequisite for participatory, democratic, and critical engagement. Literacy education must move beyond static comprehension models to embrace inquiry-based, socially reflexive, and critically evaluative practices.

The dmQAR Framework equips students with the structured tools necessary to actively interrogate the credibility, structure, purpose, and ideological positioning of digital texts. By embedding self-questioning at every stage of reading—across comprehension, synthesis, critique, and reflexive application—educators can foster students’ capacity to navigate information ecologies saturated with commercial agendas, misinformation, and algorithmic biases.

Future-ready literacy means cultivating readers who do not passively consume information but who actively question, contextualize, synthesize, and challenge the narratives that shape their digital worlds. By leveraging explicit instruction, collaborative inquiry, multimodal integration, and reflective metacognitive practices, the dmQAR Framework offers a pragmatic path forward for preparing students to think critically, read deeply, and act ethically in a dynamic digital age.