1. Introduction

Higher education in the 21st century is undergoing profound transformations, driven by globalization, technological advancements, and shifting student demographics (Johnson et al., 2015). These changes have catalyzed the rise of digital learning platforms, enabling universities to offer fully online programs (Lin & Chen, 2024). Such innovations have not only expanded access to education but also enhanced the flexibility of learning modalities, catering to diverse learner needs (Allen & Seaman, 2017).

In response to contemporary challenges and growing stakeholder expectations, universities are increasingly prioritizing the integration of innovative educational strategies (Altbach et al., 2009). Among these, artificial intelligence (AI) has emerged as a transformative tool with significant potential to reshape educational environments. By enhancing teaching quality and offering accessible, personalized learning experiences, AI is redefining traditional educational paradigms (Luckin et al., 2016; Cope & Kalantzis, 2015; Lin & Chen, 2024).

To examine the integration and reception of generative AI in higher education, this study employs two theoretical frameworks: the SAMR model and the Unified Theory of Acceptance and Use of Technology (UTAUT).

AI-powered pedagogical tools offer numerous advantages, such as precise assessment of student performance, identification of individual strengths and weaknesses, and the development of personalized learning experiences (Lin & Chen, 2024). These tools enhance not only students’ knowledge but also the practical application of their learning in real-world contexts (Roll & Wylie, 2016; Siemens & Long, 2011). Examples include chatbots, virtual assistants, and adaptive learning systems, which have demonstrated the ability to create interactive and engaging educational environments (Warschauer, 2004). Additionally, AI enables the automation of routine educational tasks, such as grading assignments and monitoring student engagement, freeing educators to focus on higher-order teaching activities (Barocas et al., 2023; Khan et al., 2022). Platforms like Bit.AI, Mendeley, and Coursera are also transforming academic research by analyzing large datasets, identifying trends, and generating in-depth insights (Amornkitpinyo et al., 2021).

However, the integration of AI into higher education is not without challenges. Ethical concerns have emerged surrounding tools like ChatGPT (ChatGPT-4), which may produce inaccurate or inappropriate outputs and unintentionally encourage practices such as plagiarism (Chen et al., 2020). Additional apprehensions include the potential erosion of human expertise in disciplines requiring nuanced and complex thinking, such as the humanities (Chen et al., 2020). Despite their capabilities, current AI models frequently struggle to comprehend the subtleties and complexities of advanced ideas (Bentaleb, 2023). Over-reliance on AI may also foster technological dependency, undermining the development of essential human skills like creativity, collaboration, and critical thinking (Siham & Sophia, 2024). Furthermore, issues related to data privacy and the security of personal information collected during educational activities necessitate the implementation of robust protection mechanisms (Siham & Sophia, 2024).

Research Questions

With these considerations in mind, our study seeks to address the following research questions:

How do students and teachers perceive the benefits and challenges associated with the integration of generative AI in higher education?

To what extent is generative AI perceived as a complementary tool or a threat to the human role of teachers in higher education?

What concerns do teachers and students have regarding the integration of generative AI into educational contexts?

2. Theoretical Frameworks

This study employs two theoretical frameworks to analyze the integration and perceptions of generative AI in higher education, the SAMR model and the Unified Theory of Acceptance and Use of Technology (UTAUT), complemented by ethical frameworks. Together, these perspectives provide a comprehensive lens for examining (a) the perceived benefits and challenges of generative AI among students and teachers, (b) concerns regarding its potential impact on the role of educators, and (c) the ethical implications of AI-driven learning technologies.

The SAMR (Substitution, Augmentation, Modification, Redefinition) model, represented as a ladder, is a four-level framework for selecting, utilizing, and evaluating technology in education. According to Puentedura (2006), the model serves as a tool to describe and categorize teachers’ use of classroom technology. It encourages educators to progress from lower to higher levels of technological integration, which, according to Puentedura, leads to enhanced teaching and learning experiences. The SAMR model (Puentedura, 2010) is widely employed to assess how technological advancements, including artificial intelligence, transform pedagogical practices, ranging from the mere substitution of traditional tasks to a profound redefinition of educational approaches. At the substitution level, digital technology replaces analog tools without introducing functional change (Puentedura, 2014). In contrast, augmentation represents a stage where technology serves as a direct tool substitute but enhances the task’s functionality (Hamilton et al., 2016). Modification involves a significant redesign of the task through technological integration (Hamilton et al., 2016). Finally, redefinition represents the highest level of the model, where technology enables the creation of novel tasks that were previously inconceivable (Hamilton et al., 2016). Through this model, we can examine how students and educators perceive AI’s role in enhancing or disrupting learning experiences in higher education.

The Unified Theory of Acceptance and Use of Technology (UTAUT) is a theoretical framework that explains technology adoption by identifying key determinants of behavioral intention and actual usage. Proposed by Venkatesh et al. (2003), the model integrates insights from multiple prior technology acceptance theories to provide a comprehensive understanding of user adoption. It posits that technology usage is primarily driven by four core constructs: performance expectancy, effort expectancy, social influence, and facilitating conditions. These constructs are moderated by age, gender, experience, and voluntariness of use, which influence their strength in predicting behavioral intention and use behavior.

Performance expectancy refers to the extent to which a person believes that using a system will improve job performance. This factor is considered the strongest predictor of behavioral intention and incorporates concepts such as perceived usefulness and extrinsic motivation (Venkatesh et al., 2003; Zhou & Yue, 2010).

Effort expectancy represents the perceived ease of use of a technology. It significantly influences a user’s initial intention to adopt a system, though its impact tends to decline as users gain more experience (Gupta et al., 2008; Chauhan & Jaiswal, 2016).

Social influence reflects the perceived pressure from important figures, such as colleagues or superiors, to use a technology. This factor is particularly strong in environments where technology adoption is mandatory, as individuals may comply due to external expectations rather than personal preference (Venkatesh et al., 2003; Venkatesh & Davis, 2000).

Facilitating conditions refer to the availability of organizational resources and technical support essential for technology adoption. While this factor plays a crucial role in the early stages of adoption, its influence gradually diminishes as users become more accustomed to the system (Venkatesh et al., 2003).

The moderating effects of age, gender, experience, and voluntariness of use refine the predictive power of the model. Age moderates all four core constructs, while gender moderates the effects of performance expectancy, effort expectancy, and social influence. Experience moderates the impact of effort expectancy, social influence, and facilitating conditions, whereas voluntariness of use moderates only the effect of social influence on behavioral intention (Venkatesh et al., 2003). This model is particularly useful for understanding variations in acceptance across different user demographics and institutional contexts.
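The moderator relationships described above can be summarized as a small lookup table. The following is a minimal sketch in Python that encodes only what this section states; the identifier names (`MODERATORS`, `moderators_of`) are illustrative assumptions and are not part of the UTAUT literature.

```python
# Which core constructs each moderator acts on, as described in this section.
# Identifier names are illustrative; only the relationships come from the text.
MODERATORS = {
    "age": {
        "performance_expectancy",
        "effort_expectancy",
        "social_influence",
        "facilitating_conditions",
    },
    "gender": {
        "performance_expectancy",
        "effort_expectancy",
        "social_influence",
    },
    "experience": {
        "effort_expectancy",
        "social_influence",
        "facilitating_conditions",
    },
    "voluntariness_of_use": {"social_influence"},
}


def moderators_of(construct):
    """Return the alphabetically sorted moderators acting on a core construct."""
    return sorted(
        moderator
        for moderator, constructs in MODERATORS.items()
        if construct in constructs
    )


if __name__ == "__main__":
    for construct in sorted(MODERATORS["age"]):
        print(construct, "<-", ", ".join(moderators_of(construct)))
```

Read this way, social influence is the only construct on which all four moderators act, which is one reason it is useful when comparing groups that differ in age, experience, and how voluntary their use of a tool is.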

Finally, ethical frameworks (Barocas et al., 2023) offer a structured approach to addressing key concerns related to bias, data privacy, academic integrity, and the potential erosion of essential human skills. These frameworks incorporate diverse philosophical perspectives (deontological ethics, consequentialism, virtue ethics, and justice-oriented ethics), providing a foundation for evaluating the societal and educational impact of AI technologies. They highlight critical issues such as algorithmic bias, fairness, and the responsible development and deployment of AI in educational settings.

3. Materials and Methods

The primary objective of this study is to examine the use and perceptions of generative artificial intelligence (GAI) technologies in teaching and learning among students and educators in Moroccan universities. A mixed-methods approach was employed, integrating quantitative and qualitative techniques to provide a comprehensive understanding of this phenomenon.

3.1. Quantitative Approach

Quantitative data were collected through a questionnaire adapted from Lin and Chen (2024). The original English version was translated into French to ensure participant comprehension. The online questionnaire featured closed-ended questions using a four-point Likert scale ranging from 1 (Strongly Disagree) to 4 (Strongly Agree). The questions focused on key topics, including the integration of AI technologies in higher education, potential risks associated with these technologies, and their perceived impact on teaching and learning.

The survey was distributed online to two participant groups: university students and undergraduate teachers. Participants were recruited via email invitations, and a convenience sampling method was used, selecting respondents based on their availability and willingness to participate. Before completing the survey, participants were provided with an informed consent form and a detailed document outlining this study’s purpose and instructions for completing the questionnaire.

The quantitative data were analyzed using appropriate statistical software. Descriptive statistics, such as means and standard deviations, were calculated to summarize the data. Additionally, statistical tests were conducted to explore relationships between variables and validate this study’s hypotheses.
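As an illustration of the descriptive step, the sketch below computes n, M, and SD for one questionnaire item per group. It is not the authors' analysis script: the statistical software used is not named in the text, the function name `describe` is hypothetical, and the response values are invented purely for illustration.

```python
from statistics import mean, stdev

# Four-point Likert scale: 1 = Strongly Disagree, 4 = Strongly Agree.
LIKERT_MIN, LIKERT_MAX = 1, 4


def describe(responses):
    """Return (n, mean, sample SD) for one item's Likert responses."""
    for response in responses:
        if not LIKERT_MIN <= response <= LIKERT_MAX:
            raise ValueError(f"response {response} is outside the 1-4 scale")
    return len(responses), mean(responses), stdev(responses)


if __name__ == "__main__":
    # Hypothetical responses, NOT data from this study.
    groups = {
        "students": [4, 3, 4, 2, 4, 3],
        "teachers": [2, 1, 3, 2, 4, 2],
    }
    for name, data in groups.items():
        n, m, sd = describe(data)
        print(f"{name}: n = {n}, M = {m:.2f}, SD = {sd:.2f}")
```

The sample standard deviation (n − 1 denominator) is used here, which is the usual convention when reporting SD for survey groups; that choice is an assumption about the reported values.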

3.2. Qualitative Approach

To enrich the quantitative data, a qualitative component was incorporated into the questionnaire. Each question included a comments section, enabling participants to elaborate on their responses. This approach aimed to capture deeper insights into participants’ perceptions and opinions, offering a more nuanced understanding of the data.

The qualitative responses were analyzed using Bardin’s (2001) content analysis framework, which consists of three main stages: pre-analysis, data coding, and processing of information. This method facilitated the identification of recurring themes and trends within the participants’ comments, providing valuable context to the quantitative findings.

3.3. Participants

This study’s sample consisted of two distinct groups, teachers and students, both recruited from a single public Moroccan university. The respondents came from six different faculties within this university, ensuring disciplinary diversity. This approach allowed for a balanced representation of participants while maintaining institutional consistency.

For the recruitment of respondents (teachers and students), we adopted a network-based dissemination approach, combining an initial selection with a gradual expansion of the sample through participants’ contacts.

The teacher group included 130 participants, with a nearly equal gender distribution of 64 males (49.2%) and 66 females (50.8%). In terms of age, 45.9% of teachers were between 26 and 35 years old, 21.5% were aged 36 to 45, 25.2% were aged 46 to 55, and 7.5% were 56 years or older. Regarding professional experience, 4.6% had less than 1 year of teaching experience, 17.2% had between 1 and 3 years, 33.3% had between 4 and 6 years, 36.2% had between 7 and 10 years, and 8.8% had more than 10 years of experience. In terms of specialization, 45.1% of the teachers were involved in languages and education sciences, 15.9% in mathematics, 11.5% in computer science, 13.2% in law, 12.1% in economics and management, and 2.3% in health sciences. This range of expertise among the teachers enriches the perspectives collected in the study.

The student group included 156 participants, with a gender distribution of 99 females (63.5%) and 57 males (36.5%). Most students (73.1%) were aged between 18 and 25 years, 25.1% were aged between 26 and 35 years, and 1.8% were aged between 36 and 45 years. Regarding their educational level, 69.3% were enrolled in undergraduate (license) programs, 28.1% were pursuing a master’s degree, and 2.7% were doctoral candidates. The disciplinary distribution showed that 49.2% of students were from social sciences, 22.4% from law and economics, 9.6% from natural sciences, 8.1% from engineering and technology, 7.2% from communication and information sciences, 1.9% from medicine and health sciences, 1.2% from arts and design, and 0.6% from agronomic and environmental sciences.

This diverse sample of teachers and students offers a comprehensive understanding of attitudes, experiences, and expertise across various demographic profiles, professional backgrounds, and academic disciplines. These characteristics provide a robust foundation for analyzing the integration and impact of generative AI in higher education.

4. Results

The quantitative analysis provides valuable insights into the research questions, highlighting the contributions of both student and teacher groups (see Table 1).

Table 1 presents a summary of participants’ perceptions regarding the potential of artificial intelligence (AI) technologies to replace teachers. The survey consisted of 11 items, with higher scores indicating stronger agreement. The table includes the number of participants (n), means (M), and standard deviations (SD) for both groups—students and teachers.

The results reveal significant differences between student and teacher perceptions regarding the use of generative artificial intelligence (GAI) technologies in educational contexts (Table 1). Students reported significantly higher mean scores across several survey items, reflecting a strong interest in and openness to the adoption of GAI. They perceive these technologies as innovative and practical tools capable of effectively addressing their academic needs.

Conversely, teachers demonstrated greater caution, raising concerns about ethical issues, the potential impact of GAI on the development of critical human skills, and the preservation of the relational and human dimensions of teaching. These contrasting perspectives underscore the importance of a balanced approach to integrating GAI technologies. Such an approach should account for students’ enthusiasm for these innovations while addressing teachers’ reservations to ensure the responsible and effective use of GAI in educational practice.

4.1. Integration and Perceptions of Generative AI in Education

4.1.1. Diverging Perceptions Between Students and Teachers

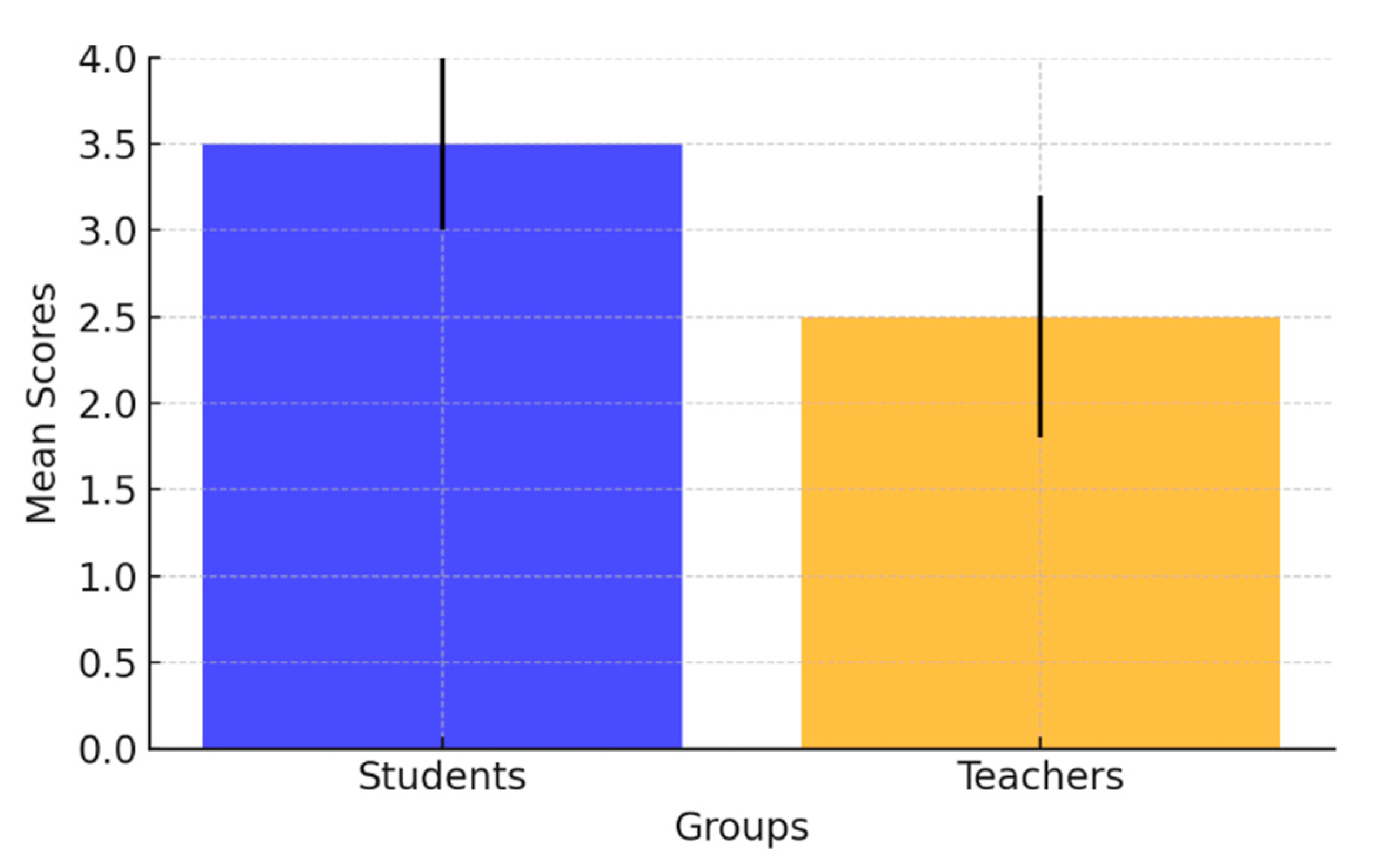

The findings (Figure 1) indicate that students report a significantly higher mean score for the integration of generative AI technologies into their learning practices than teachers (M = 2.4, SD = 1.39), t(216.19) = −8.96, p < 0.001. This suggests that students are more receptive to adopting these tools. This openness may reflect the younger generation’s familiarity with digital technologies, which they frequently use in various aspects of their daily lives. Their inclination to embrace generative AI can be attributed to its perceived convenience and accessibility, aligning with their digitally integrated lifestyles.

The analysis indicates that students (M = 3.38, SD = 0.88) were significantly more likely than teachers (M = 2.38, SD = 1.18) to believe that generative AI technologies could provide guidance for academic work as effectively as teachers (t(235) = −7.99, p < 0.001). Students highlighted the ability of AI to deliver immediate answers, reducing dependency on teacher availability and fostering greater autonomy and control over their learning process.
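The fractional degrees of freedom reported throughout this section are consistent with Welch's unequal-variance t-test, although the text does not name the test used. As a rough check under that assumption, the sketch below recomputes the statistic for this item from the reported means and standard deviations. It further assumes that all 130 teachers and 156 students answered the item and that differences are taken as teachers minus students (inferred from the sign of the reported values); the function name `welch_from_summary` is illustrative.

```python
from math import sqrt


def welch_from_summary(m1, sd1, n1, m2, sd2, n2):
    """Welch's t statistic and Welch-Satterthwaite df from summary statistics."""
    v1 = sd1 ** 2 / n1  # squared standard error of group 1's mean
    v2 = sd2 ** 2 / n2  # squared standard error of group 2's mean
    t = (m1 - m2) / sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


if __name__ == "__main__":
    # Group 1 = teachers, group 2 = students (assumed ordering and sample sizes).
    t, df = welch_from_summary(2.38, 1.18, 130, 3.38, 0.88, 156)
    print(f"t({df:.1f}) = {t:.2f}")
```

Under these assumptions the recomputed values land close to the reported t(235) = −7.99; small discrepancies in the degrees of freedom are expected because the published means and standard deviations are rounded.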

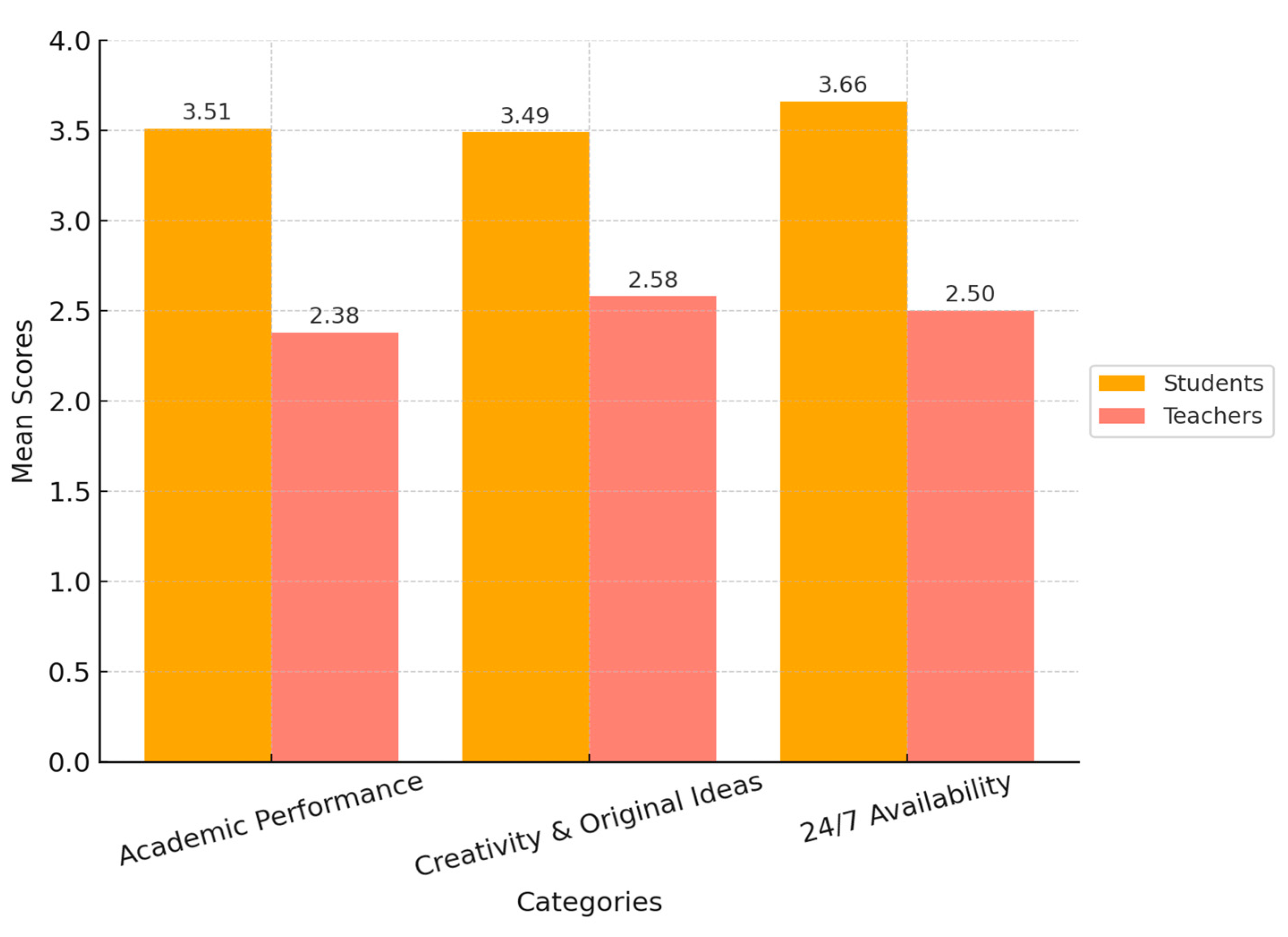

Regarding academic performance, students (M = 3.51, SD = 0.82) expressed a more favorable view than teachers (M = 2.38, SD = 1.17) (t(223.81) = −9.21, p < 0.001), emphasizing the potential of AI to improve results and simplify complex academic tasks (Figure 2). Similarly, when considering creativity and the generation of original ideas, students (M = 3.49, SD = 0.83) exhibited a more positive perspective compared to teachers (M = 2.58, SD = 1.24) (t(218) = −7.14, p = 0.002). These findings suggest that students recognize AI’s role in fostering innovation and generating novel insights.

4.1.2. Accessibility and Availability of AI

Students highly valued the continuous availability of AI technologies, with a mean score of M = 3.66 (SD = 0.76), compared to M = 2.50 (SD = 1.25) for teachers (t(230.87) = −7.58, p < 0.001) (Figure 2). This 24/7 accessibility was particularly appreciated by students who often study outside traditional hours, highlighting the capacity of digital tools to align with flexible learning schedules.

Qualitative findings support the quantitative data, further highlighting the differing perspectives of students and teachers. Students described AI as an invaluable tool for improving their academic experience. For example, one student remarked, “AI helps me generate new and creative ideas when I get stuck in my research. It gives me a different perspective”. Another student emphasized, “The immediate feedback that AI provides is very useful. It allows me to know directly where I’m going wrong and to rectify my mistakes without waiting for feedback from the teacher”. These testimonials reflect students’ enthusiasm for the efficiency and accessibility offered by AI technologies.

The results reveal a significant divergence between students’ and teachers’ perceptions of the claim that generative AI technologies, such as ChatGPT, allow students to ask questions they might hesitate to pose directly to their teachers. Students reported a high mean score (M = 3.43, SD = 0.38), emphasizing their agreement with AI’s role in facilitating communication and enabling them to express questions without fear of judgment. Teachers, however, reported a lower mean score (M = 2.56, SD = 1.19), indicating a less pronounced perception of this benefit. Statistical analysis confirms a significant difference between the two groups (t(230.85) = −8.15, p < 0.001). These findings underscore the potential of AI to reduce communicative barriers and promote student self-expression in educational contexts.

The qualitative data from students’ comments point in the same direction. Many students explained that they use AI to ask questions they are reluctant to raise in class due to various concerns. Some students mentioned that they fear being judged for not understanding basic concepts: “Sometimes, I feel like my teacher expects us to already know certain things, and I don’t want to look incompetent in front of everyone. With ChatGPT, I can ask without feeling embarrassed”. Others reported that they avoid asking sensitive or controversial questions in a classroom setting: “There are topics I am curious about, like the ethical implications of AI, but I don’t feel comfortable discussing them in class. With AI, I can explore these topics privately”. Several students also stated that they turn to AI for additional explanations when they struggle to understand course material: “I don’t always grasp concepts immediately in class, but I hesitate to ask too many questions. AI helps me get alternative explanations in a way that makes more sense to me”. Additionally, social anxiety plays a role in students’ reluctance to speak up: “Speaking in front of a large class is intimidating, even if I have a question. I prefer checking with AI first to see if I can find the answer on my own”.

In contrast, teachers expressed reservations about over-reliance on AI and its potential to undermine critical aspects of the learning environment. Teachers conveyed concerns about AI’s inability to nurture essential soft skills such as communication, problem-solving, and critical thinking, which they consider vital for students’ professional and personal growth. One teacher noted, “What AI produces may be useful, but it’s often a repetition of existing data. It lacks the originality and adaptability of a teacher who can adjust their methods according to the needs of the group”. Another added, “AI may give immediate answers, but it doesn’t allow students to develop essential human skills like communication, conflict management, and critical thinking”. A minority of teachers recognize the potential of AI as a complementary tool to enhance specific aspects of their work, such as creating tailored audiovisual aids, supporting formative assessments, and automating administrative tasks. These teachers highlight that AI can help streamline routine responsibilities, allowing educators to focus on tasks requiring human interaction. For example, one teacher remarked, “AI can help me create visual aids that are more interactive and tailored to my students’ needs, which is a real time-saver”.

The findings reveal a clear divergence in how students and teachers perceive the integration of AI in education. While students embrace AI for its convenience, creativity, and accessibility, teachers remain cautious, focusing on its limitations in fostering critical human skills. This highlights the importance of balancing AI’s potential benefits with strategies to preserve the relational and adaptive dimensions of education.

4.2. Generative AI as a Complementary Tool or a Threat to the Human Role of Teachers

4.2.1. AI’s Role in Replacing Educators

The findings reveal that both students and teachers exhibit limited support for the notion that generative AI could replace educators. Quantitatively, students reported a mean score of M = 2.21 (SD = 0.97), while teachers reported a mean score of M = 1.82 (SD = 1.02) (t(233.47) = −5.97, p < 0.001). These results underscore a shared belief in the irreplaceable role of human educators in providing emotional and personalized support to learners.

Qualitative insights further support this perspective. Teachers consistently highlighted the importance of understanding individual student needs and adapting to unforeseen circumstances. As one teacher stated, “AI can be a valuable tool, but it will never understand a student’s emotions or adapt to situations as varied as a human teacher can”. Similarly, students expressed skepticism regarding AI’s ability to replicate the human connection inherent in teaching. One student observed, “AI could help with my revisions, but no machine will ever replace a teacher in understanding me when I struggle with a subject”.

4.2.2. Concerns About the Impact on Soft Skills

A key concern raised by teachers is the potential negative impact of generative AI on the development of essential soft skills. Quantitative results reveal that teachers expressed significantly higher concern (M = 3.33, SD = 0.85) than students (M = 2.86, SD = 0.87) (t(218.84) = 7.69, p < 0.001). Teachers view these human-centric skills, including teamwork, problem-solving, and leadership, as critical in preparing students for real-world challenges.

Qualitative data further emphasize these concerns. Teachers noted that AI-generated content is often repetitive and decontextualized, failing to foster critical thinking or collaborative skills. One teacher remarked, “AI may give immediate answers, but it doesn’t allow students to develop essential human skills like communication, conflict management, and critical thinking”. These findings highlight the need for a balanced integration of AI into educational practices, ensuring that it complements rather than undermines the development of holistic, transferable skills.

4.2.3. The Relational Dimension of Learning

Teachers unanimously emphasized the critical role of human elements in the educational process, such as empathy, adaptability, and the ability to build meaningful connections with students. One teacher remarked, “AI can provide information and facilitate exercises, but interaction, motivation, and personalized support are irreplaceable human aspects”. These attributes are seen as vital for creating dynamic and supportive learning environments that cater to the diverse needs of students. Students also echoed these sentiments, emphasizing the unique value of teachers in motivating and supporting their learning. As one student noted, “Teachers are more than just sources of information. They motivate, support, and create a dynamic learning environment”. These perspectives highlight a shared understanding of the limitations of generative AI and the enduring importance of human interaction in education.

4.3. Considerations and Concerns in the Integration of Generative AI in Education

4.3.1. Detection of AI Use in Student Work

The findings highlight a significant disparity in confidence levels between teachers and students regarding their ability to detect AI-generated content in academic work. Quantitatively, teachers reported lower confidence (M = 2.46, SD = 1.16) compared to students (M = 2.96, SD = 0.83) (t(255.86) = −4.96, p < 0.001). This suggests that educators face substantial challenges in identifying AI-assisted outputs, raising concerns about the implications of undetected AI use for academic integrity.

These findings underscore the urgent need for advanced tools and tailored training programs to equip teachers with the skills necessary to effectively manage and monitor AI usage in educational settings. By addressing these challenges, educators can ensure that generative AI is integrated responsibly, safeguarding the validity of student assessments and upholding academic standards.

4.3.2. The Need to Develop Digital Literacy

Both teachers and students recognize the critical importance of developing digital literacy as a foundation for the ethical and effective use of AI technologies in education. Digital literacy is widely acknowledged as an essential skill for navigating the complex ethical and practical challenges associated with AI integration.

Teachers emphasize the necessity of ethical training and the thoughtful incorporation of AI into pedagogical practices. One teacher noted, “AI can be an excellent tool to complement my teaching, but it’s essential to acquire a solid understanding of digital tools to prevent them from becoming barriers to learning”. This statement reflects teachers’ broader concerns about the risks of over-reliance on AI and its potential misuse in educational contexts.

Similarly, students stress the need for education and training to ensure the critical and autonomous use of AI tools. One student remarked, “AI is great for helping me research and come up with ideas. But I think we need to be trained to use it effectively, because it doesn’t always answer the exact question we’re asking”. This perspective highlights students’ recognition of the limitations of AI and the importance of developing skills to evaluate and apply AI-generated content responsibly.

The findings of this study highlight that both teachers and students acknowledge the benefits of AI, particularly its ability to automate tasks and provide immediate feedback. However, both groups agree that AI cannot replace the human aspects of teaching, such as empathy, adaptability, and personal interaction, which remain indispensable to the learning process.

In addition, the development of digital literacy is identified as a critical priority by both groups. Mastery of digital tools is essential to ensure the responsible and effective integration of AI in education, avoiding dependency and fostering its optimal use. AI should be viewed as a complement to teaching, not as a substitute.

In conclusion, while AI offers significant potential for enhancing educational practices, its integration must be guided by a thoughtful and balanced approach that preserves the irreplaceable role of teachers and the human connection at the heart of education.

5. Discussion

The findings of this study reveal significant differences between students’ and teachers’ perceptions of the integration of AI technologies into the learning process. While students exhibit enthusiasm, viewing AI as a tool to foster autonomy and creativity, teachers express concerns regarding its potential impact on the development of essential human skills and the overall quality of teaching.

5.1. Integration and Perceptions of Generative AI in Education

The UTAUT (Unified Theory of Acceptance and Use of Technology) model by Venkatesh et al. (2003) offers a relevant framework for understanding differences between students and teachers regarding their openness to integrating artificial intelligence (AI) into educational practices. The model identifies four main factors influencing technology adoption: performance expectancy (perceived usefulness), effort expectancy (perceived ease of use), social influence (social norms), and facilitating conditions (Kamhi & Salahddine, 2020). These dimensions provide valuable insights into the findings of this study.

The results indicate that students show significantly higher openness to AI than teachers, reflecting their greater receptiveness to these technologies. This difference can be attributed to students’ familiarity with digital tools and their attraction to AI due to its perceived usefulness. Lin and Chen (2024) describe this tendency as characteristic of Generation Z, a cohort shaped by the omnipresence of the Internet and rapid technological innovations. Similarly, Chan and Tsi (2024) characterize students as “active problem solvers” and “independent learners” (Marshall & Wolanskyj-Spinner, 2020), enabling them to leverage generative AI tools to enhance their autonomy and customize their educational experiences.

AI provides students with unique opportunities, such as the ability to progress at their own pace while receiving immediate feedback. Nguyen et al. (2024) identify rapid feedback as a critical factor in boosting motivation and improving academic performance. This flexibility encourages deeper engagement with learning. Previous studies by Bíró (2014) and Borys and Laskowski (2013) further emphasize Generation Z’s preference for personalized and immediate feedback, highlighting the central role of AI in meeting these expectations.

In contrast, teachers express notable reservations about AI integration, particularly concerning the effort required to master new tools. This unfavorable perception of ease of use can reduce their willingness to adopt these technologies. These findings underscore the need for facilitating conditions, such as targeted training and support, to reduce perceived barriers and promote AI acceptance in teaching practices. Teachers also stress that, while AI offers advantages such as automating repetitive tasks, it cannot replace fundamental human aspects of teaching, including empathy, emotional support, and personalized adaptation to students’ needs.

Social norms, another key factor in the UTAUT model, also contribute to the differing perceptions of students and teachers (Kamhi & Salahddine, 2020). Students are immersed in an environment where technology integration is widely valued and supported, which promotes their adoption of AI. Teachers, however, may experience less social pressure to embrace these technologies or may fear that doing so could challenge their traditional pedagogical role. This difference in social influence helps explain the divergent attitudes between the two groups.

The UTAUT model thus provides a clear lens for interpreting the observed differences between students and teachers by highlighting factors such as perceived usefulness, ease of use, social norms, and facilitating conditions (Kamhi & Salahddine, 2020). Students view AI as an intuitive and valuable tool for enhancing their learning, while teachers face practical and cultural challenges that hinder its adoption. These findings highlight the importance of providing tailored training and support to encourage the broader integration of AI in education, while addressing the unique needs of both students and teachers.

5.2. AI as a Complement Rather Than a Replacement for Teachers

An important finding of this study is the shared consensus among students and teachers that AI cannot replace teachers. While both groups recognize AI as a valuable complementary tool, they agree that it cannot substitute for the human aspects of teaching. As Chan and Tsi (2024) observe, many teachers view AI as a useful resource for streamlining tasks such as creating visual aids or supporting formative assessments. However, they emphasize that human interaction remains indispensable in teaching. Qualitative data further reinforce this perspective, with teachers highlighting AI’s inability to adapt to students’ emotional and cognitive needs. One teacher noted, “AI can provide support in repetitive or technical tasks, but it cannot replace the empathy and flexibility required to respond to a student’s unique challenges”. This finding underscores the irreplaceable role of human educators in fostering meaningful connections and providing tailored guidance in the learning process.

This observation can be interpreted through the prism of the SAMR model (Puentedura, 2010), which assesses the impact of technologies on teaching practices. In this framework, AI is mainly perceived as operating at the levels of substitution and augmentation. For example, AI replaces certain administrative or repetitive tasks, such as correcting homework or monitoring student progress, which corresponds to the substitution level. It also enhances certain pedagogical functions, notably by providing rapid, personalized feedback to students, thus increasing the effectiveness of existing educational practices. However, reaching the redefinition level, which would imply a profound transformation of educational practices, remains limited.

5.3. The Need for Digital Literacy for Optimal Use of AI

While AI offers undeniable advantages, such as personalized learning and enhanced academic performance, as indicated by the findings of this study, its effective integration into educational practices necessitates AI literacy. This requires the implementation of targeted and comprehensive training programs designed to equip educators and learners with the skills, knowledge, and attitudes needed to understand, engage with, and utilize AI systems effectively and critically. According to Long and Magerko (2020), these competencies include the ability to interpret AI-generated content, recognize algorithmic biases, and assess the reliability of AI outputs.

Findings indicate that students often face difficulties in interacting efficiently with these tools, as AI-generated responses do not always accurately align with their queries. Without adequate training, they may misinterpret AI-generated information or rely on incomplete and unverified content, potentially leading to misconceptions rather than deep learning. Research conducted by Kim How et al. (2022), as well as Chan and Tsi (2024), highlights the crucial role of digital literacy in fostering responsible and effective AI usage. These studies emphasize the importance of training programs that help both students and educators refine their queries, critically assess AI-generated content, and utilize AI as a complement to human learning rather than a replacement.

Educators also caution against the uncritical adoption of AI, which could undermine students’ autonomy and creativity, thereby threatening the fundamental role of education in developing essential human competencies such as communication, conflict resolution, and critical thinking. As highlighted by Mallik and Gangopadhyay (2024), a balanced approach to AI is essential for enriching learning experiences without diminishing students’ active engagement. Excessive reliance on AI-generated responses could, in fact, weaken their ability to think independently and solve problems, which are crucial skills for their future success (Wood & Moss, 2024).

Given these risks, both educators and students stress the importance of integrating structured AI-focused training into educational curricula to ensure the responsible and effective use of AI tools. Such training programs should focus on developing critical evaluation skills by teaching students how to verify the accuracy of AI-generated content, identify biases, and cross-check multiple sources before relying on AI-produced information. They should also encourage best practices for AI usage by promoting a thoughtful and intentional approach where students regard AI as a learning aid rather than a substitute for intellectual effort. Additionally, addressing issues related to academic integrity and data protection is crucial, particularly regarding plagiarism, misinformation, and the responsible use of AI in research and academic work. Training should also cover query formulation, enabling students to pose precise and well-structured questions that yield relevant and reliable responses.

Without a structured approach to digital literacy, there is a genuine risk that AI will assume a disproportionate role in education, diverting learning from its core objectives and compromising academic integrity. As highlighted by previous studies, maintaining the educator’s role as a mentor while effectively integrating AI relies on strong digital literacy training. If students and educators fail to develop these essential skills, AI could become a barrier to learning rather than a tool for intellectual growth.

6. Conclusions

This study highlights the contrasting perceptions and uses of generative artificial intelligence technologies among students and teachers in Moroccan universities. While students adopt a largely positive stance, emphasizing the advantages in terms of autonomy, creativity and accessibility, teachers express significant reservations, notably about the potential impact of these technologies on essential human skills and traditional pedagogical dynamics.

The results show a greater acceptance of AI by students, who perceive these tools as levers for academic performance and innovation. Teachers, on the other hand, warn of the risks associated with over-reliance on AI, which could compromise the development of general and transferable skills such as critical thinking, collaboration and problem-solving. Both groups agree, however, that AI cannot replace the fundamental role of teachers, particularly in providing personalized support and managing learners’ emotional and cognitive needs.

This research makes a significant contribution by providing an in-depth understanding of the divergent attitudes towards AI in education, while identifying the opportunities and challenges associated with its integration. It highlights the importance of a balanced approach, where AI is used as a complement to, not a substitute for, traditional pedagogical practices. In addition, the need for enhanced digital literacy emerged as an essential condition for the responsible and effective integration of these tools, enabling the benefits to be maximized while minimizing the risks.

In conclusion, this study provides valuable insights for the development of educational policies and teacher training programs aimed at effectively integrating AI into higher education. It also paves the way for future research to explore the broader impacts of AI on pedagogical practices and the cultivation of learners’ skills, while ensuring that essential human values remain central to the educational process.

Author Contributions

All the authors have substantially contributed to the research and writing of the article. S.H.: Conceptualization; Data collection; Investigation; Methodology; Questionnaire translation; Questionnaire administration; Writing—original draft; Writing—review and editing. N.S.: Conceptualization; Data collection; Investigation; Methodology; Questionnaire translation; Questionnaire administration; Writing—original draft; Writing—review and editing. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Ethical review and approval were waived for this study as no personal or sensitive human data were collected.

Informed Consent Statement

Informed consent was obtained from all subjects involved in this study.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request, as we prioritize targeted access to ensure proper use of the data.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Allen, I. E., & Seaman, J. (2017). Digital learning compass: Distance education enrollment report 2017. Babson Survey Research Group, e-Literate, and WCET.

- Altbach, P. G., Reisberg, L., & Rumbley, L. E. (2009, July 5–8). Trends in global higher education: Tracking an academic revolution. A Report Prepared for the UNESCO 2009 World Conference on Higher Education, Paris, France.

- Amornkitpinyo, T., Yoosomboon, S., Sopapradit, S., & Amornkitpinyo, P. (2021). The structural equation model of actual use of cloud learning for higher education students in the 21st century. Journal of e-Learning and Knowledge Society, 17(1), 72–80.

- Bardin, L. (2001). L’analyse de contenu (Édition originale 1977). Presses Universitaires de France.

- Barocas, S., Hardt, M., & Narayanan, A. (2023). Fairness and machine learning: Limitations and opportunities. MIT Press.

- Bentaleb, M. (2023). Impacts of artificial intelligence on teaching in translation. Cahiers de Traduction, 1(28), 233–245.

- Bíró, G. I. (2014). Didactics 2.0: A pedagogical analysis of gamification theory from a comparative perspective with a special view to the components of learning. Procedia-Social and Behavioral Sciences, 141, 148–151.

- Borys, M., & Laskowski, M. (2013). Implementing game elements into didactic process: A case study. In V. Dermol, N. T. Širca, & G. Dakovic (Eds.), Active citizenship by knowledge management & innovation: Proceedings of the management, knowledge and learning international conference (pp. 819–824). ToKnowPress. Available online: https://bit.ly/3F9Obij (accessed on 22 December 2024).

- Chan, C. K. Y., & Tsi, L. H. Y. (2024). The AI revolution in education: Will AI replace or assist teachers in higher education? Journal of Educational Technology and Innovation, 10(2), 35–49.

- Chauhan, S., & Jaiswal, M. (2016). Determinants of acceptance of ERP software training in business schools: Empirical investigation using UTAUT model. The International Journal of Management Education, 14(3), 248–262.

- Chen, L., Chen, P., & Lin, Z. (2020). Artificial intelligence in education: A review. IEEE Access, 8, 75264–75278.

- Cope, B., & Kalantzis, M. (2015). Interpreting evidence-of-learning: Educational research in the era of big data. Open Review of Educational Research, 2(1), 218–239.

- Gupta, B., Dasgupta, S., & Gupta, A. (2008). Adoption of ICT in a government organization in a developing country: An empirical study. The Journal of Strategic Information Systems, 17(2), 140–154.

- Hamilton, E. R., Rosenberg, J. M., & Akcaoglu, M. (2016). Examining the substitution augmentation modification redefinition (SAMR) model for technology integration. TechTrends, 60(5), 433–441.

- Johnson, L., Becker, S. A., Estrada, V., & Freeman, A. (2015). NMC horizon report: 2015 higher education edition. The New Media Consortium.

- Kamhi, M., & Salahddine, A. (2020). L’acceptation technologique: Modèles d’intention. Centre des études doctorales: Droit, Économie et Gestion, Université Abdelmalek Essaâdi, ENCG de Tanger.

- Khan, S., Zaman, S. I., & Rais, M. (2022). Measuring student satisfaction through overall quality at business schools: A structural equation modeling: Student satisfaction and quality of education. South Asian Journal of Social Review, 1(2), 34–55.

- Kim How, R. P. T., Zulnaidi, H., & Abdul Rahim, S. S. (2022). The importance of digital literacy in quadratic equations, strategies used, and issues faced by educators. Contemporary Educational Technology, 14(3), ep372.

- Lin, H., & Chen, Q. (2024). Artificial intelligence (AI)-integrated educational applications and college students’ creativity and academic emotions: Students and teachers’ perceptions and attitudes. BMC Psychology, 12(1), 487.

- Long, D., & Magerko, B. (2020). What is AI literacy? Competencies and design considerations. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (pp. 1–16). Association for Computing Machinery.

- Luckin, R., Holmes, W., Griffiths, M., & Forcier, L. B. (2016). Intelligence unleashed: An argument for AI in Education. Pearson.

- Mallik, S., & Gangopadhyay, A. (2024). Proactive and reactive engagement of artificial intelligence methods for education: A review. Journal of Research in Innovative Teaching & Learning, 6(2023), 2397–7604.

- Marshall, A. L., & Wolanskyj-Spinner, A. (2020). COVID-19: Challenges and opportunities for educators and Generation Z learners. Mayo Clinic Proceedings, 95(6), 1135–1137.

- Nguyen, T. N. T., Lai, N. V., & Nguyen, Q. T. (2024). Artificial intelligence (AI) in education: A case study on ChatGPT’s influence on student learning behaviors. Educational Process: International Journal, 13(2), 105–121.

- Puentedura, R. (2010). SAMR and TPCK: Intro to advanced practice. Hippasus. Available online: http://hippasus.com/resources/sweden2010/SAMR_TPCK_IntroToAdvancedPractice.pdf (accessed on 24 December 2024).

- Puentedura, R. (2014). Building transformation: An introduction to the SAMR model [Blog post]. Available online: http://www.hippasus.com/rrpweblog/archives/2014/08/22/BuildingTransformation_AnIntroductionToSAMR.pdf (accessed on 29 December 2024).

- Puentedura, R. R. (2006). Transformation, technology, and education. Hippasus. Available online: http://hippasus.com/resources/tte/puentedura_tte.pdf (accessed on 23 December 2024).

- Roll, I., & Wylie, R. (2016). Evolution and revolution in artificial intelligence in education. International Journal of Artificial Intelligence in Education, 26(2), 582–599.

- Siemens, G., & Long, P. (2011). Penetrating the fog: Analytics in learning and education. EDUCAUSE Review, 46(5), 30–32.

- Siham, J., & Sophia, V. (2024). L’intelligence artificielle dans l’enseignement: Histoire et présent, perspectives et défis. Revue Dossiers de Recherches en Économie et Management des Organisations, 9(1), 118–128.

- Venkatesh, V., & Davis, F. D. (2000). A theoretical extension of the technology acceptance model: Four longitudinal field studies. Management Science, 46(2), 186–204.

- Venkatesh, V., Morris, M. G., Davis, G. B., & Davis, F. D. (2003). User acceptance of information technology: Toward a unified view. MIS Quarterly, 27(3), 425–478.

- Warschauer, M. (2004). Technology and social inclusion: Rethinking the digital divide. MIT Press.

- Wood, D., & Moss, S. H. (2024). Evaluating the impact of students’ generative AI use in educational contexts. Journal of Research in Innovative Teaching & Learning, 17, 2397–7604.

- Zhou, Q. C., & Yue, Y. R. (2010). Effect of replacing soybean meal with canola meal on growth, feed utilization and haematological indices of juvenile hybrid tilapia Oreochromis niloticus × Oreochromis aureus. Aquaculture Research, 41(7), 982–990.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).