Abstract

As large language models (LLMs) continue to evolve, their capacity to act as surrogates for humans is also improving. As increasing numbers of intelligent tutoring systems (ITSs) embrace the integration of LLMs for digital tutoring, questions are arising as to how effective they are and whether their hallucinatory behaviors diminish their perceived advantages. One critical question that is seldom asked is whether the availability, plurality, and relative weaknesses in the reasoning process of LLMs are contributing to the much-discussed digital divide and to equity and fairness concerns in online learning. In this paper, we present an experiment with database design theory assignments and demonstrate that while their capacity to reason logically is improving, LLMs are still prone to serious errors. We demonstrate that in online learning, and in the absence of a human instructor, LLMs could introduce inequity in the form of “wrongful” tutoring, which we call ignorant bias, and which could be devastatingly harmful for learners in increasingly popular digital learning settings. We also show that significant challenges remain for STEM subjects, especially for subjects for which sound and free online tutoring systems exist. Based on the set of use cases, we formulate a possible direction for an effective ITS for the online database learning classes of the future.

1. Introduction

The increased attention to diversity, equity, inclusion, and accessibility (DEIA) in learning is aimed at social fairness and justice Williamson and Kizilcec (2022). While the idea of equity is not new, it is recent in the context of technology-enhanced learning Ferro et al. (2021); J. Han and Geng (2023), and especially in the context of eLearning Littenberg-Tobias and Reich (2020), in the wake of the unfolding digital divide Mawasi et al. (2020). During the recent COVID-19 pandemic, new alarms were raised when serious equity lapses were observed in the online education that the entire student population of the world had to endure Ezra et al. (2021). In these moments of awakening, researchers discovered that equity concerns, and the rest of DEIA, were conspicuously missing from most learning analytics dashboards used by institutions Williamson and Kizilcec (2021). Naturally, investigations followed to understand how technology could mediate DEIA Higgins et al. (2023), and what can be done to counter the negative impacts in large-scale online learning S. Han et al. (2023); Khalil et al. (2023), which are the focus of this paper.

A rapidly emerging issue for the academic community is the concern over LLMs such as ChatGPT, Gemini, and CoPilot aiding in cheating and plagiarism Khalil and Er (2023), and the debate about how to address the existence and use of LLMs in education Joyner (2023); Rueda et al. (2023), and in assessment in particular Anders (2023). Research is in full swing to learn how to leverage their existence in learning Qureshi (2023); Seetharaman (2023) and in assessment Kiesler and Schiffner (2023); Li et al. (2023), and to understand the impact of the distrust they sow in instructor–student relationships Gorichanaz (2023) as a form of inequity. Debate is also raging about their impact on education, or at least on traditional education Lin et al. (2023). In this paper, our goal is not to debate the usefulness or the destructive capacities of LLMs in education, but to discuss LLMs’ own deficiencies, and the modalities of access to them, that introduce fairness and equity concerns in assessment, even when they are used as intelligent tutors.

While we focus mainly on various editions of ChatGPT (including 3.5, 4o, and 4o1) as a representative of one of the most advanced LLMs, we have also experimented with CoPilot and Gemini. Our goal in this paper is to show the subtle differences in a few use cases and extrapolate their capacities to a wider class of use cases to help formulate a recommendation on the nature of a possible database design tutoring system. Our hope is that the vision we project for a possible ITS will overcome the shortcomings of contemporary LLMs and address the equity and fairness concerns we raise, at least until they become more effective and able to take up these responsibilities.

2. Related Research

From the point of view of teaching the concepts of functional dependency theory and database normalization, the challenge is in designing a system that can get the point across, guide the students to learn the theories, and improve learning overall. Several online systems are effective to varying degrees. We note that, compared with tutoring systems for conceptual database modeling or database query languages, a tutoring system for functional dependency theory and database normalization is probably technically simpler to build. This is because these concepts are well defined, with established algorithms for computing them. As can be expected, there are several online normalization tools, or calculators, that do quite well in computing the various steps and components of dependency theory and normalization Ahmedi et al. (2012); Dongare et al. (2011); H. Kung and Tung (2006); H.-J. Kung and Tung (2006); Mitrovic (2002); Piza-Dávila et al. (2017); Soler et al. (2006); Stefanidis and Koloniari (2016); Wang and Stantic (2019); Yang (2011), and that do a passable job of explaining the steps to the students.

2.1. Contemporary Online Normalization Tutoring and Assessment Systems

Before we delve into LLM-based tutoring systems, it is instructive to review the traditional tutoring systems for database design assignments. There have been several attempts at building database normalization tools as surrogates for intelligent tutoring systems. However, only a handful of them have been designed for tutoring FD Calculator Team (2022); Wang and Stantic (2019), and none is designed for assessment, even though assessment is an integral component of learning. Essentially, assessment is left as an offline exercise. Furthermore, among the online normalization calculators, only the Griffith University Normalization Tool Wang and Stantic (2019), Tool for Database Design Springfield (2024), and Cho’s normal form calculator Cho (2017) are live.

For a learning tool for normalization theory to be effective, students must, before the theory of normalization is introduced, grasp the concepts of functional dependencies and inference rules so that they are able to derive the closure of a set of dependencies ($F^+$). This fundamental understanding serves as the foundation for following the notion of attribute closure ($X^+$), which can be used to compute candidate keys and covers, and to test for lossless-join decomposition.

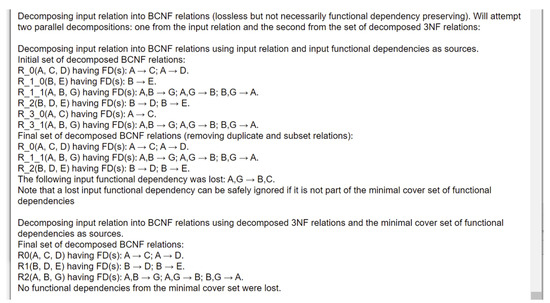
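Attribute closure, on which these computations rest, is a simple fixpoint iteration, and the implication test for a single FD follows directly from it ($X \to Y \in F^+$ exactly when $Y \subseteq X^+$). The following is a minimal Python sketch of the standard textbook algorithm, not the implementation of any tool cited here; it assumes single-letter attribute names, and the helpers `fd`, `closure`, and `implies`, as well as the example F, are hypothetical.

```python
from typing import FrozenSet, Iterable, List, Tuple

# Hypothetical encoding: an FD X -> Y is a pair of frozensets of single-letter attributes.
FD = Tuple[FrozenSet[str], FrozenSet[str]]


def fd(lhs: str, rhs: str) -> FD:
    """Build an FD from strings, e.g. fd("AB", "C") stands for AB -> C."""
    return frozenset(lhs), frozenset(rhs)


def closure(attrs: Iterable[str], fds: List[FD]) -> FrozenSet[str]:
    """Attribute closure X+: keep firing FDs whose LHS is covered until nothing changes."""
    result = set(attrs)
    changed = True
    while changed:
        changed = False
        for lhs, rhs in fds:
            if lhs <= result and not rhs <= result:
                result |= rhs
                changed = True
    return frozenset(result)


def implies(fds: List[FD], target: FD) -> bool:
    """X -> Y is in F+ exactly when Y is a subset of X+ (computed under F)."""
    lhs, rhs = target
    return rhs <= closure(lhs, fds)


# Illustrative F (hypothetical, not from the paper): A -> B and B -> C over R(A, B, C, D).
F = [fd("A", "B"), fd("B", "C")]
print(sorted(closure("A", F)))    # ['A', 'B', 'C']
print(implies(F, fd("AD", "C")))  # True
print(implies(F, fd("C", "A")))   # False
```

The same `closure` helper is enough to compute candidate keys and covers, which is why it is the natural first building block for a tutoring tool.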

While there are a few tools that could potentially help compute some of these, most are not comprehensive and often cover only a subset of computing needs. Even when they are comprehensive, they are not in a form that is suitable for a learning system. For example, for the set of functional dependencies over the scheme , the FD calculator developed by Arjo Chakravarty Chakravarty (2018) does not compute the normal forms. While it computes attribute and FD closures and minimal covers, it computes everything exhaustively and unnecessarily, without offering any insight or explanation. Similar concerns apply to the Tool for Database Design Springfield (2024), the interactive normalization tool Stefanidis and Koloniari (2016), and NORMIT Mitrovic (2002). An interesting FD calculator by Cho (2017) also generates explanations of all its derivations, as shown in Figure 1. However, it carries out all the computations in one go, in a non-interactive fashion. Unfortunately, it is also not being maintained.

Figure 1.

Raymond Cho’s RD Tool.

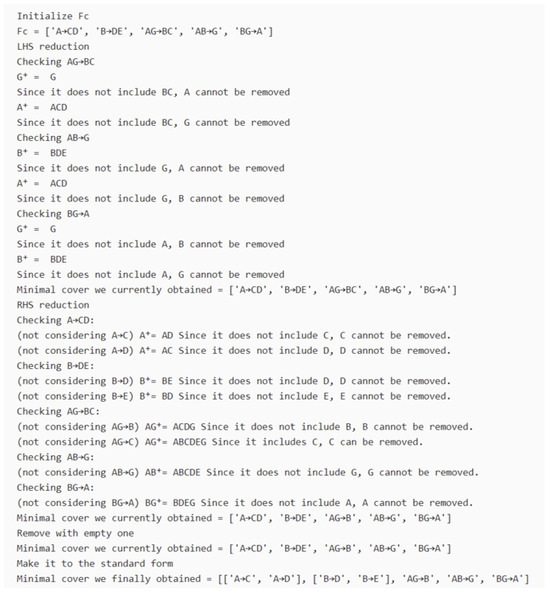

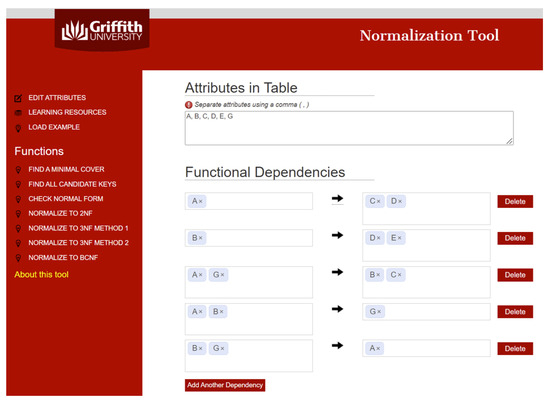

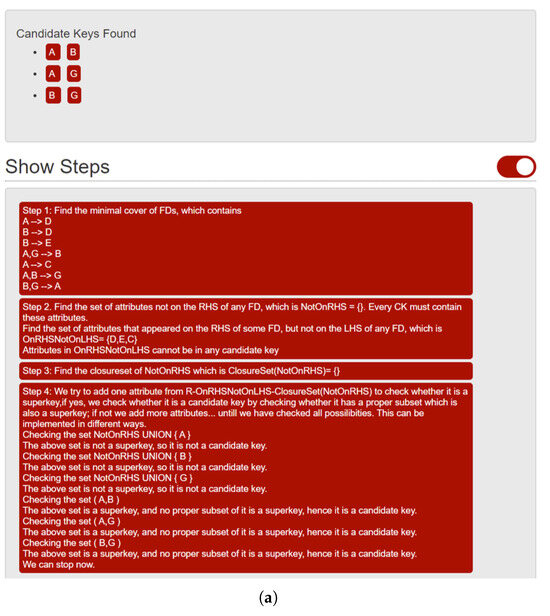

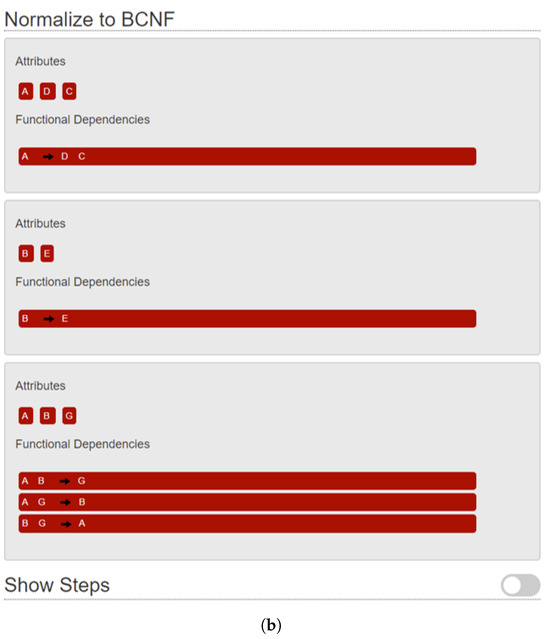

A similarly interesting system was FD Calculator (FD Calculator Team (2022)), which was more modular and generated explanations of its derivations (see Figure 2). However, none of these systems is open source, and no support is offered. This FD Calculator also appears to be non-functional. Furthermore, these tools are designed for direct online interaction by users, and no APIs are supported. All things considered, we believe the Griffith University Normalization Tool Wang and Stantic (2019) is one of the best normal form calculators. It also has a stronger commitment to maintenance, even though it does not offer all the functionality we desire. Figure 3 and Figure 4 show, respectively, the front-end of the Griffith tool, and its complete set of candidate key computations together with a normalization into BCNF. It can also optionally show the derivation steps (as shown in Figure 4a), but without any explanations. Like FD Calculator, it is modular and interactive.

Figure 2.

FD Calculator.

Figure 3.

Griffith University Normalization Tool front-end.

Figure 4.

Griffith University Normalization Tool. (a) Computing all candidate keys. (b) Computing BCNF decomposition.

It is important to note, for the sake of completeness and clarity, that while there are tutoring systems for many computer science (CS) subjects, there are significantly fewer systems for a first database course. The ones that are available split the topics across multiple tutoring systems (e.g., Röhm et al. (2020) for SQL, Jukic et al. (2020) for conceptual design, Kessler et al. (2019) for relational algebra, and Wang and Stantic (2019) for normalization). We are, however, unaware of a single comprehensive tutoring system for database teaching. Using multiple systems to teach a single subject introduces a substantial impedance mismatch, management hurdles, and potential equity issues. To address this complex set of issues, we have initiated Project 360 as a comprehensive database tutoring system. It is designed to include four interconnected and integrated tutoring systems: Conceptual Database Design (CoDD), Visual SQL (ViSQL), Relational Query Language (ReliQ), and Normalization and Database Design Theory (NoDD). As it happens, NoDD also covers everything the Griffith tool does, and much more, but in a substantially different way.

2.2. LLMs for Tutoring Database Design

The use of LLMs such as ChatGPT in learning is recent, and they are most likely here to stay despite their many shortcomings and controversies Vasconcelos and dos Santos (2023); Zhai (2023); Zheng (2023). However, how the community will adopt their use depends on policy decisions Lin et al. (2023); Vargas-Murillo et al. (2023) and on how learning technology adopts LLM-powered tools Rospigliosi (2023). As online education becomes increasingly popular, ChatGPT is also complicating, and adding to Anders (2023), the prevailing equity and fairness concerns in digital learning Ezra et al. (2021); McBroom et al. (2020).

We recognize the potential of LLMs such as ChatGPT and Gemini, and believe that their capacities can be successfully utilized in technology-assisted learning to accelerate learning outcomes, and they are already making their mark. We, however, identify a potential equity and fairness concern that such technologies introduce, about which not much is known, especially in the online learning sector. In self-paced, non-formal, and online learning settings, such as Udemy or Coursera, and even in many formal class settings, learners are basically left to their own devices. Thus, they are free to choose any tutoring and assistive technologies to learn, and the quality of such tools becomes an equity concern, particularly when affordability and access determine learning outcomes. This is the case even when lack of awareness and guidance prevents learners’ access to such technologies that are freely available.

We discuss the use of ChatGPT (all its versions), CoPilot, and Gemini as tutors for normalization theory in this paper, and the potential equity and fairness challenges they pose in the context of the online normalization calculators. As we discuss later in this paper, ChatGPT 3.5 (and even ChatGPT 4o) not only performs very poorly and would receive low or failing grades, but also teaches completely unfounded and incorrect theories that a novice learner will not be able to detect. The fairness and equity issue, then, is this: if a student performs poorly on a test, who is liable? The other question is: if a student does well by consulting online calculators such as the Normalization Tool Wang and Stantic (2019) or ChatGPT-4o1, is it fair to assign them better credit than students who were not aware of these tools and considered ChatGPT 3.5 or CoPilot to be more advanced tutors, with the ignorance of these LLMs remaining hidden? Students with better access to more knowledgeable instructors or peers, and to financial or technological resources, are unlikely to meet the same unwanted fate.

3. Use Cases: A First Database Course

In most college-level computer science and data science programs today, databases are a required component. As eLearning expands, online database teaching, tutoring, and learning have taken center stage, and improving their delivery and management warrants our utmost attention. Two recent studies point to issues of significant importance. The first study Pardos and Bhandari (2023), using a cohort of 77 students in two algebra courses, found that 70% of the hints generated by ChatGPT 3.5 were on par with human-generated hints, but that the learning gains were substantial and statistically significant only for the human-generated hints. The second study Carr et al. (2023); Clark and Jamil (2024) highlights the significant capabilities LLMs bring to bear in teaching and tutoring SQL as part of database courses. It appears to suggest that ChatGPT 3.5 actually outperforms many of the leading systems that are grounded in sophisticated theoretical foundations, such as Cosette Chu et al. (2017). In contrast, the results of our experiment with ChatGPT 3.5 as a functional dependency solver were far less impressive.

3.1. Background: Functional Dependency Theory and Database Normalization

A typical first database course includes three main topics: (i) conceptual modeling using a modeling tool such as entity relationship (ER) diagrams or the unified modeling language (UML), (ii) query languages such as SQL, relational algebra, and relational calculus, and (iii) database design theory using functional dependencies (FDs) and normalization. As stated earlier, in this article we focus on FD theory and its application in database design, on how LLMs, ChatGPT in particular, can be used in online learning to tutor and assess FD theory assignments, and on the concomitant pitfalls.

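Given a relation instance $r$ over the scheme $R$, and given $X, Y \subseteq R$, we say that the FD $X \to Y$ holds on $r$, or that $r$ satisfies $X \to Y$, if for all tuples $t_1, t_2 \in r$, $t_1[X] = t_2[X]$ implies $t_1[Y] = t_2[Y]$. Thus, for a relation instance over ,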

we say that

It so happens that FDs have logical implications, which can be derived using a set of inference rules known as Armstrong’s axioms, described as follows:

For any $X, Y, Z, W \subseteq R$, the following hold.

- Reflexivity: if $Y \subseteq X$, then $X \to Y$.

- Augmentation: if $X \to Y$, then $XZ \to YZ$.

- Transitivity: if $X \to Y$ and $Y \to Z$, then $X \to Z$.

- Union: if $X \to Y$ and $X \to Z$, then $X \to YZ$.

- Decomposition: if $X \to YZ$, then $X \to Y$ and $X \to Z$.

- Pseudotransitivity: if $X \to Y$ and $WY \to Z$, then $WX \to Z$.

These inference rules can be used to derive the implications of a given set of FDs, to reduce a given set of FDs to its minimum cover, to compute candidate keys, and to decompose schemes into third normal form (3NF) or Boyce–Codd Normal Form (BCNF) to maintain database consistency Silberschatz et al. (2020). Students in a database class solve assignments of the following form:

Let R be a relation with a scheme over the attributes , and the set of functional dependencies holds on R.

Our plan is to investigate whether an LLM could solve such assignments, and with what degree of accuracy. In the event that it cannot, we investigate how its technical inability introduces ignorant bias (discussed in Section 7), resulting in inadvertent inequity and unfairness in the learning ecosystem. In the sections to follow, we present several experiments that we conducted using ChatGPT 3.5, 4o, and 4o1, as well as CoPilot and Gemini.

3.2. ChatGPT 3.5 as a Functional Dependency Tutor

We conducted the following two experiments with ChatGPT 3.5, in which we attempted to solve two basic FD theory assignments.

3.2.1. Experiment 1

To initiate the conversation, we asked ChatGPT 3.5 the following question, in which we assigned a number from 1 to 3 to each of the FDs.

Suppose holds over relation . Use Armstrong’s axioms to derive .

ChatGPT 3.5 responded to this as follows:

To derive using Armstrong’s axioms, we’ll start by using the given functional dependencies and applying the axioms:


So, we’ve derived using Armstrong’s axioms and the given functional dependencies.

It turns out that the solution above is not correct; at the least, it is not how the proof should unfold. One of the correct solutions is as follows:

| Step | Justification | FD |
|---|---|---|
| 4. | Given FD 1 | |
| 5. | Decomposition of FD 4 | |
| 6. | Augmentation of FD 5 | |
| 7. | Decomposition of FD 4 | |
| 8. | Pseudotransitivity of FDs 7 and 2 | |
| 9. | Union of FDs 6 and 8 □ | |

What, then, did ChatGPT 3.5 do wrong? We cannot derive via augmentation in such a manner, even though it is derivable since , the attribute closure of . The most direct derivation would require steps such as the following:

| Step | Justification | FD |
|---|---|---|
| 4’. | Given FD 1 | |
| 5’. | Decomposition of FD 4’ | |
| 6’. | Augmentation of FD 5’ | |

Derivation steps aside, the conclusion was nonetheless correct. Similarly, the claim that we are able to derive using transitivity from and is theoretically wrong, even though the conclusion that is correct. The claim that and follow from via decomposition is also flatly wrong. is actually given, and is trivial, because of reflexivity. Finally, the suggestion that follows from and via transitivity is unfounded.
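The distinction drawn above, between a rule that is misapplied and a conclusion that happens to be true, can be checked mechanically. The following minimal Python sketch assumes FDs are encoded as pairs of single-letter attribute sets; the checker functions, the `RULES` table, `check_step`, and the example F are hypothetical illustrations, not the tooling used in our experiments. Each checker verifies only the syntactic shape of one rule (reflexivity, augmentation, transitivity, union, decomposition, or pseudotransitivity), while a separate closure test reports whether the conclusion is implied at all.

```python
from typing import Callable, Dict, FrozenSet, List, Tuple

# Hypothetical encoding: an FD X -> Y is a pair of frozensets of single-letter attributes.
FD = Tuple[FrozenSet[str], FrozenSet[str]]


def fd(lhs: str, rhs: str) -> FD:
    return frozenset(lhs), frozenset(rhs)


def closure(attrs, fds: List[FD]) -> FrozenSet[str]:
    """Attribute closure, used only to test whether a conclusion is implied at all."""
    result = set(attrs)
    changed = True
    while changed:
        changed = False
        for lhs, rhs in fds:
            if lhs <= result and not rhs <= result:
                result |= rhs
                changed = True
    return frozenset(result)


# Each checker tests only the syntactic shape of one rule: premises p, conclusion c.
def reflexivity(p: List[FD], c: FD) -> bool:
    return not p and c[1] <= c[0]  # Y subset of X  =>  X -> Y


def augmentation(p: List[FD], c: FD) -> bool:
    # X -> Y  =>  XZ -> YZ, for some Z
    if len(p) != 1:
        return False
    (x, y), (l, r) = p[0], c
    return x <= l and y <= r and (l - x) <= r and (r - y) <= l


def transitivity(p: List[FD], c: FD) -> bool:
    # X -> Y and Y -> Z  =>  X -> Z
    return len(p) == 2 and p[0][1] == p[1][0] and c == (p[0][0], p[1][1])


def union(p: List[FD], c: FD) -> bool:
    # X -> Y and X -> Z  =>  X -> YZ
    return len(p) == 2 and p[0][0] == p[1][0] and c == (p[0][0], p[0][1] | p[1][1])


def decomposition(p: List[FD], c: FD) -> bool:
    # X -> YZ  =>  X -> Y
    return len(p) == 1 and c[0] == p[0][0] and c[1] <= p[0][1]


def pseudotransitivity(p: List[FD], c: FD) -> bool:
    # X -> Y and WY -> Z  =>  WX -> Z; W must cover WY minus Y and may overlap Y.
    if len(p) != 2:
        return False
    (x, y), (wy, z) = p
    return y <= wy and c[1] == z and (x | (wy - y)) <= c[0] <= (x | wy)


RULES: Dict[str, Callable[[List[FD], FD], bool]] = {
    "reflexivity": reflexivity, "augmentation": augmentation,
    "transitivity": transitivity, "union": union,
    "decomposition": decomposition, "pseudotransitivity": pseudotransitivity,
}


def check_step(rule: str, premises: List[FD], conclusion: FD,
               given: List[FD]) -> Tuple[bool, bool]:
    """Return (rule applied correctly, conclusion implied by the given FDs)."""
    rule_ok = RULES[rule](premises, conclusion)
    implied = conclusion[1] <= closure(conclusion[0], given)
    return rule_ok, implied


# Illustrative F (hypothetical): A -> B and B -> C.
F = [fd("A", "B"), fd("B", "C")]
# A true conclusion with a wrong justification: augmentation cannot yield A -> C from A -> B.
print(check_step("augmentation", [fd("A", "B")], fd("A", "C"), F))  # (False, True)
print(check_step("transitivity", [fd("A", "B"), fd("B", "C")], fd("A", "C"), F))  # (True, True)
```

A result of `(False, True)` is exactly the situation discussed above: a correct conclusion reached through an invalid step, which a tutor should flag rather than accept.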

3.2.2. Experiment 2

Noticing the lapses in Experiment 1, we decided to test the might of ChatGPT 3.5 with a more involved assignment and see whether it could recognize its own mistakes. So, we posed the following question.

Lets assume that is a relation scheme, and is the set of dependencies that hold on it. Can you derive from F? How or why not?

Immediately, ChatGPT 3.5 sprang into action and produced the following proof.

To determine whether we can derive the functional dependency from the given set of functional dependencies , we need to use the Armstrong’s axioms and closure rules. The closure of a set of attributes X, denoted as , is the set of all attributes that are functionally determined by X under the given set of functional dependencies.

First, let’s calculate the closure of B with respect to the functional dependencies F:


In this case, we cannot derive from the given set of functional dependencies .

It was interesting to observe that ChatGPT 3.5 could sense that , and thus the implication is not provable. The right course of action is then to show either a counterexample, or that , which it was trying to do in a convoluted manner.

Next, we asked a follow-up question to build up our case, one on which we expected ChatGPT 3.5 to fail.

While the computation is correct, the explanations and the derivation process are not accurate, making it difficult to tell whether ChatGPT 3.5 computed the results with full cognition of the science, or whether it just happened because of the algorithm behind it. For example, a given dependency is redundant if and only if we can show that , i.e., .

Can you compute the canonical cover of F?

It responded as follows:

To compute the canonical cover of the set of functional dependencies , we need to follow these steps:

Let’s go through these steps:

Step 1: Remove extraneous attributes

For each functional dependency, we’ll check if any attribute on the right-hand side is extraneous and remove it if necessary.

Step 2: Remove redundant functional dependencies

In this step, we’ll remove any redundant functional dependencies from the set.

After going through these steps, the canonical cover of F is:

In this example, both and are not redundant. However, is. This is because . We therefore do not need to even consider left redundancy. However, is left-redundant because , and we are able to derive from via augmentation on its LHS with D, and then we should eliminate because it is redundant. One important ChatGPT 3.5 lapse is that it stated that we need to “Remove extraneous attributes on the right-hand side (RHS)” as one of the steps, which is totally incorrect. We need to remove extraneous attributes from the LHS, not RHS.
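For reference, one common way to compute a canonical cover is sketched below in Python. It is a minimal sketch under stated assumptions: single-letter attributes, hypothetical helper names, and an illustrative F rather than the one from the experiment. It first splits right-hand sides into single attributes, so that an extraneous right-hand-side attribute simply surfaces as a redundant FD, then removes extraneous left-hand-side attributes, drops redundant FDs, and finally merges FDs with identical left-hand sides via the union rule.

```python
from typing import FrozenSet, List, Tuple

# Hypothetical encoding: an FD X -> Y is a pair of frozensets of single-letter attributes.
FD = Tuple[FrozenSet[str], FrozenSet[str]]


def fd(lhs: str, rhs: str) -> FD:
    return frozenset(lhs), frozenset(rhs)


def closure(attrs, fds: List[FD]) -> FrozenSet[str]:
    result = set(attrs)
    changed = True
    while changed:
        changed = False
        for lhs, rhs in fds:
            if lhs <= result and not rhs <= result:
                result |= rhs
                changed = True
    return frozenset(result)


def canonical_cover(fds: List[FD]) -> List[FD]:
    # Step 1: split right-hand sides into single attributes (decomposition rule).
    work = [(lhs, frozenset({a})) for lhs, rhs in fds for a in sorted(rhs)]

    # Step 2: remove extraneous LHS attributes. B is extraneous in X -> A when A is
    # already in the closure of X - {B}. Testing against `work` is safe because each
    # reduction yields an equivalent set of FDs. Empty LHSs are never produced.
    reduced = []
    for lhs, rhs in work:
        for attr in sorted(lhs):
            smaller = lhs - {attr}
            if smaller and rhs <= closure(smaller, work):
                lhs = smaller
        reduced.append((lhs, rhs))

    # Step 3: drop exact duplicates, then drop every FD implied by the remaining ones.
    result = list(dict.fromkeys(reduced))
    i = 0
    while i < len(result):
        lhs, rhs = result[i]
        others = result[:i] + result[i + 1:]
        if rhs <= closure(lhs, others):
            result = others  # redundant: remove it and re-test the same index
        else:
            i += 1

    # Step 4: merge FDs that share a left-hand side (union rule).
    merged = {}
    for lhs, rhs in result:
        merged[lhs] = merged.get(lhs, frozenset()) | rhs
    return list(merged.items())


# Illustrative F (hypothetical, not the experiment's): {A -> BC, B -> C, A -> B, AB -> C}.
F = [fd("A", "BC"), fd("B", "C"), fd("A", "B"), fd("AB", "C")]
for lhs, rhs in canonical_cover(F):
    print("".join(sorted(lhs)), "->", "".join(sorted(rhs)))
# A -> B
# B -> C
```

In this example, A is extraneous in AB → C, which reduces it to B → C, and A → C is then dropped as redundant.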

The next logical step was to ask the following normalization question,

Could you decompose this scheme into 3NF?

to which ChatGPT 3.5 responded as follows.

To decompose a relation scheme into 3NF (Third Normal Form), we need to follow these general steps:

Let’s start by analyzing the given functional dependencies .

Step 1: Identify the Candidate Keys

In this case, we don’t have explicit information about the candidate keys. We’ll assume that A is a candidate key.

Step 2: Analyze Functional Dependencies

The given functional dependencies are:

Step 3: Check for 3NF Violations

The given functional dependencies do not violate 3NF, as they have a candidate key on the left-hand side and the right-hand side attributes are prime attributes.

Step 4: Decompose into 3NF

Since there are no 3NF violations, we don’t need to perform any decomposition. The given relation scheme with the functional dependencies is already in 3NF.

The analysis, conclusions, and explanations above are completely wrong, and do not make any sense. First, A is not a candidate key of R; is the only candidate key, since , but is, i.e., . Furthermore, a 3NF decomposition of R is , and , and not , because both and have a non-key on the LHS, and the RHS is not a prime attribute (the only prime attributes are A and D). Finally, in R, a transitive dependency between and exists, which is not allowed in 3NF and is the major issue in non-3NF schemes.
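The standard 3NF synthesis procedure produces a decomposition like the one above without any guesswork: one relation per FD of a canonical cover, plus a relation holding a candidate key if no relation already contains one. The following minimal Python sketch assumes its input FDs are already a canonical cover; `find_key`, `synthesize_3nf`, and the example schema are hypothetical and are not the R used in the experiment.

```python
from typing import FrozenSet, List, Tuple

# Hypothetical encoding: an FD X -> Y is a pair of frozensets of single-letter attributes.
FD = Tuple[FrozenSet[str], FrozenSet[str]]


def fd(lhs: str, rhs: str) -> FD:
    return frozenset(lhs), frozenset(rhs)


def closure(attrs, fds: List[FD]) -> FrozenSet[str]:
    result = set(attrs)
    changed = True
    while changed:
        changed = False
        for lhs, rhs in fds:
            if lhs <= result and not rhs <= result:
                result |= rhs
                changed = True
    return frozenset(result)


def find_key(schema: FrozenSet[str], fds: List[FD]) -> FrozenSet[str]:
    """Shrink the whole schema to one candidate key by greedily dropping attributes."""
    key = set(schema)
    for attr in sorted(schema):
        if closure(key - {attr}, fds) >= schema:
            key.discard(attr)
    return frozenset(key)


def synthesize_3nf(schema: str, cover: List[FD]) -> List[FrozenSet[str]]:
    """Textbook-style 3NF synthesis; `cover` is assumed to be a canonical cover."""
    r = frozenset(schema)
    relations: List[FrozenSet[str]] = []
    # One relation XY per FD X -> Y, unless an existing relation already contains it.
    for lhs, rhs in cover:
        rel = lhs | rhs
        if not any(rel <= existing for existing in relations):
            relations.append(rel)
    # A lossless join needs some relation to contain a candidate key of the schema.
    if not any(closure(rel, cover) >= r for rel in relations):
        relations.append(find_key(r, cover))
    # Remove any relation strictly contained in another one.
    return [rel for rel in relations if not any(rel < other for other in relations)]


# Illustrative schema (hypothetical): R(A, B, C, D) with canonical cover {A -> B, B -> C}.
for rel in synthesize_3nf("ABCD", [fd("A", "B"), fd("B", "C")]):
    print("".join(sorted(rel)))
# AB
# BC
# AD
```

Note that the key relation (AD here) is added precisely because neither AB nor BC contains a candidate key, which is the step that an analysis built on a wrong candidate key cannot get right.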

The final investigative question to ChatGPT 3.5 was

How about a BCNF decomposition?

and the response, which went downhill from the start, was as follows.

To decompose a relation scheme into BCNF (Boyce-Codd Normal Form), we follow a similar process to 3NF decomposition, with the additional requirement that all functional dependencies must have a superkey on the left-hand side.

Given relation scheme and the functional dependencies , let’s decompose it into BCNF.

Step 1: Identify the Candidate Keys

We’ll assume that A is a candidate key.

Step 2: Check for BCNF Violations

Step 3: Decompose into BCNF

We need to decompose the relation to eliminate the BCNF violation caused by . We’ll create two new relations:

Now, we have decomposed R into two relations in BCNF: and . Both of these relations are in BCNF, and the original relation R has been decomposed accordingly.

As expected, the entire decomposition and analysis in the above BCNF response are wrong, primarily because ChatGPT 3.5 did not correctly compute the candidate key, i.e., . The correct decomposition of R should be, again, , , and .
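For comparison, the usual BCNF decomposition loop repeatedly finds a nontrivial FD whose left-hand side is not a superkey of the relation and splits on it. The following minimal Python sketch tests every attribute subset of each relation against the closure under the original F, which is exponential but adequate for classroom-sized schemas; the function names and the example schema are hypothetical, not taken from the experiment.

```python
from itertools import combinations
from typing import FrozenSet, List, Optional, Tuple

# Hypothetical encoding: an FD X -> Y is a pair of frozensets of single-letter attributes.
FD = Tuple[FrozenSet[str], FrozenSet[str]]


def fd(lhs: str, rhs: str) -> FD:
    return frozenset(lhs), frozenset(rhs)


def closure(attrs, fds: List[FD]) -> FrozenSet[str]:
    result = set(attrs)
    changed = True
    while changed:
        changed = False
        for lhs, rhs in fds:
            if lhs <= result and not rhs <= result:
                result |= rhs
                changed = True
    return frozenset(result)


def find_violation(rel: FrozenSet[str], fds: List[FD]) -> Optional[FD]:
    """Find X -> Y in F+, within rel, that is nontrivial with X not a superkey of rel."""
    for size in range(1, len(rel)):
        for combo in combinations(sorted(rel), size):
            lhs = frozenset(combo)
            rhs = (closure(lhs, fds) & rel) - lhs
            if rhs and (lhs | rhs) != rel:
                return lhs, rhs
    return None


def bcnf_decompose(schema: str, fds: List[FD]) -> List[FrozenSet[str]]:
    """Split on violations until every relation is in BCNF."""
    result = [frozenset(schema)]
    i = 0
    while i < len(result):
        violation = find_violation(result[i], fds)
        if violation is None:
            i += 1
            continue
        lhs, rhs = violation
        rel = result[i]
        # The two parts share exactly X, and X -> XY holds, so each split is lossless.
        result[i:i + 1] = [lhs | rhs, rel - rhs]
    return result


# Illustrative schema (hypothetical): R(A, B, C, D) with F = {A -> B, B -> C}.
for rel in bcnf_decompose("ABCD", [fd("A", "B"), fd("B", "C")]):
    print("".join(sorted(rel)))
# BC
# AB
# AD
```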

3.3. Advanced LLMs as Database Design Tutors

Since ChatGPT 3.5 has recently been deprecated, we conducted another experiment on design theory using ChatGPT 4o and 4o1, CoPilot, and Gemini to observe how these more advanced LLMs perform. The examples used are as follows:

Let be a relation with scheme over the attributes , and the set of functional dependencies holds on .

- Q1:

- Q2:

and

Let’s assume that is a relation scheme, and is the set of dependencies that hold on it.

- Q3:

- Q4:

- Q5:

- Q6:

- Q7:

We posed questions through to ChatGPT 4o and 4o1, CoPilot, and Gemini. We briefly discuss the responses we received from them in the following sections. The ChatGPT 4o and 4o1 responses can be reviewed at https://chatgpt.com/share/678f3bf6-ac68-800b-9202-61f78a4fbe11 (accessed 22 January 2025). We did not try ChatGPT 4o1-mini as the efficiency of the LLM was not a focus of our experiment.

3.3.1. ChatGPT 4o and 4o1 Responses

ChatGPT 4o’s responses were, in general, much more refined and accurate than those of ChatGPT 3.5. In response to question , it correctly reached the following conclusion after a series of steps.

Step 4: Verify H is included

At this point, . Since H is not in and cannot be directly inferred from , .

For question , ChatGPT 4o correctly concluded that follows from , and can be derived using Armstrong’s axioms. Though the derivation includes some extraneous steps, the conclusion is accurate.

As for question , it used the attribute closure and concluded that since . In response to question , it correctly computed the canonical cover of as . It also computed the 3NF and BCNF decompositions correctly as for both in response to questions and . Unfortunately, in response to question , ChatGPT 4o computed the set of candidate keys of as and , which is a serious mistake. It did not recognize that is a subset of and thus cannot be a candidate key of . However, ChatGPT-4o1 did not make this mistake and correctly concluded that is the only candidate key of .
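The mistake described above, reporting a superset of a candidate key as another candidate key, can be caught with two closure tests per claimed key: is it a superkey, and does it stop being one when any single attribute is removed? The following minimal Python sketch uses hypothetical names and an illustrative schema, not the schema of questions Q1 to Q7.

```python
from typing import FrozenSet, Iterable, List, Tuple

# Hypothetical encoding: an FD X -> Y is a pair of frozensets of single-letter attributes.
FD = Tuple[FrozenSet[str], FrozenSet[str]]


def fd(lhs: str, rhs: str) -> FD:
    return frozenset(lhs), frozenset(rhs)


def closure(attrs, fds: List[FD]) -> FrozenSet[str]:
    result = set(attrs)
    changed = True
    while changed:
        changed = False
        for lhs, rhs in fds:
            if lhs <= result and not rhs <= result:
                result |= rhs
                changed = True
    return frozenset(result)


def is_superkey(attrs: FrozenSet[str], schema: FrozenSet[str], fds: List[FD]) -> bool:
    return closure(attrs, fds) >= schema


def is_candidate_key(attrs: FrozenSet[str], schema: FrozenSet[str], fds: List[FD]) -> bool:
    # Supersets of superkeys are superkeys, so minimality only needs the
    # single-attribute removals to be checked.
    return is_superkey(attrs, schema, fds) and not any(
        is_superkey(attrs - {a}, schema, fds) for a in attrs)


def audit_claimed_keys(claimed: Iterable[str], schema: str,
                       fds: List[FD]) -> List[FrozenSet[str]]:
    """Return every claimed key that is not actually a candidate key."""
    r = frozenset(schema)
    return [k for k in map(frozenset, claimed) if not is_candidate_key(k, r, fds)]


# Hypothetical claim in the style of the error above: both AD and ABD reported as keys.
F = [fd("A", "B"), fd("B", "C")]
bad = audit_claimed_keys(["AD", "ABD"], "ABCD", F)
print(["".join(sorted(k)) for k in bad])  # ['ABD']
```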

3.3.2. CoPilot Responses

Just to check how CoPilot would respond where ChatGPT 4o failed, we asked question to CoPilot, and the response and reasoning it produced were even worse. By using a series of attribute closure computations (i.e., all the subsets of the attributes ), it wrongly concluded as follows:

So, the candidate keys for the relation with the given functional dependencies are .

3.3.3. Gemini Responses

Gemini, like CoPilot, applied exhaustive attribute closure computations, but correctly reached the following conclusion.

The only candidate key for relation with the given functional dependencies is .

Note

In the interest of brevity and to avoid repetition, we assumed that ChatGPT-4o1, CoPilot, and Gemini would perform similarly on questions through ; this assumption may not be accurate, and testing it would be worthwhile for the sake of completeness. However, can be treated as a barometer of performance, since ChatGPT 4o and CoPilot both failed to generate the correct response to it and are thus already shown to be flawed.

4. Confronting ChatGPT 3.5’s Ignorance

Armed with ChatGPT 3.5’s failures discussed in the earlier section, it was time to confront it with surgically precise, probing questions. The goal here was to steer ChatGPT 3.5 onto the right track of thinking, by designing appropriate prompts, to see whether it was capable of re-evaluating and rectifying its mistakes. So, we proceeded with the conversations below.

4.1. Misconception About Candidate Keys

Since it made a fundamental mistake in computing the set of candidate keys of such a simple, trivial, and clean scheme, we decided to explore the reasons why. An additional reason was to see whether, if it computed the keys correctly, it could then also correct the 3NF and BCNF decompositions it had suggested earlier. The natural starting point was the following question, which was a bit different from before.

What are the candidate keys of ?

Though it now attempted a slightly different analysis, it made the computation even worse and computed keys that made no sense.

To determine the candidate keys of relation given the functional dependencies (the canonical cover of F), we’ll use closure to find the attributes that can determine all other attributes in the relation.

We’ll start by considering each attribute as a candidate key and calculate the closure for each.


There are multiple candidate keys for .

The conversation, derivations, and explanations above were among the strangest yet. This is because, even assuming A to be a candidate key, which it is not ( is), , , and cannot be candidate keys too, since these are supersets of A. The entire analysis was wrong.
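An exhaustive search over attribute subsets, like the one CoPilot and Gemini appeared to follow, yields the right answer only if minimality is enforced, which is exactly what broke down above. The following minimal Python sketch (hypothetical names, illustrative schemas) enumerates subsets by increasing size and skips any superset of a key already found, so a superset of A could never be reported alongside A.

```python
from itertools import combinations
from typing import FrozenSet, List, Tuple

# Hypothetical encoding: an FD X -> Y is a pair of frozensets of single-letter attributes.
FD = Tuple[FrozenSet[str], FrozenSet[str]]


def fd(lhs: str, rhs: str) -> FD:
    return frozenset(lhs), frozenset(rhs)


def closure(attrs, fds: List[FD]) -> FrozenSet[str]:
    result = set(attrs)
    changed = True
    while changed:
        changed = False
        for lhs, rhs in fds:
            if lhs <= result and not rhs <= result:
                result |= rhs
                changed = True
    return frozenset(result)


def candidate_keys(schema: str, fds: List[FD]) -> List[FrozenSet[str]]:
    """Enumerate all candidate keys by increasing size, pruning supersets of found keys."""
    r = frozenset(schema)
    keys: List[FrozenSet[str]] = []
    for size in range(1, len(r) + 1):
        for combo in combinations(sorted(r), size):
            cand = frozenset(combo)
            # A superset of an already-found key is a superkey but never minimal.
            if any(k <= cand for k in keys):
                continue
            if closure(cand, fds) >= r:
                keys.append(cand)
    return keys


# Illustrative schemas (hypothetical), one with a single key and one with two keys.
for schema, F in [("ABCD", [fd("A", "B"), fd("B", "C")]),
                  ("ABC", [fd("A", "B"), fd("B", "A")])]:
    print(schema, ["".join(sorted(k)) for k in candidate_keys(schema, F)])
# ABCD ['AD']
# ABC ['AC', 'BC']
```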

So, the gentle question or prompt to ChatGPT 3.5 was

I think the only candidate key of is . Am I wrong?

to which it responded as follows.

Let’s re-evaluate the candidate keys for relation given the functional dependencies .

A candidate key is a minimal set of attributes that can uniquely determine all other attributes in the relation.

Given functional dependencies:

Let’s try to determine the candidate keys using the closure approach for different combinations of attributes.

Therefore, you are correct. The only candidate key for based on the given functional dependencies F is . I apologize for the oversight in the initial response. Thank you for bringing it to my attention.

Even here, it is not clear whether ChatGPT 3.5 is constructing a plausible derivation merely as a language engineering task, rather than doing so logically. This is because in the last step, it chose to compute , and concluded that it contained all the attributes of , but did not expand beyond saying “ contains A (trivially)”. In reality, the attributes of are not trivially contained in . To see that contains , it is necessary to show the progression of containment via transitivity as follows:

So, the derivation and the explanation are not quite what was expected.
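The progression of containment called for above can be produced mechanically by logging each firing of the closure algorithm, which is the kind of explanation a tutor could show alongside its result. The following minimal Python sketch assumes single-letter attributes; `closure_with_trace`, `show`, and the example F are hypothetical, and the generated wording is only one possible form of explanation.

```python
from typing import FrozenSet, List, Tuple

# Hypothetical encoding: an FD X -> Y is a pair of frozensets of single-letter attributes.
FD = Tuple[FrozenSet[str], FrozenSet[str]]


def fd(lhs: str, rhs: str) -> FD:
    return frozenset(lhs), frozenset(rhs)


def show(attrs) -> str:
    return "".join(sorted(attrs))


def closure_with_trace(attrs: str, fds: List[FD]) -> Tuple[FrozenSet[str], List[str]]:
    """Compute X+ and record, in order, which FD contributed which attributes."""
    result = set(attrs)
    steps: List[str] = []
    changed = True
    while changed:
        changed = False
        for lhs, rhs in fds:
            new = rhs - result
            if lhs <= result and new:
                result |= new
                steps.append(f"{show(lhs)} -> {show(rhs)} adds {show(new)}, giving {show(result)}")
                changed = True
    return frozenset(result), steps


# Illustrative F (hypothetical): A -> B and B -> C; trace the closure of AD.
F = [fd("A", "B"), fd("B", "C")]
full, steps = closure_with_trace("AD", F)
for line in steps:
    print(line)
# A -> B adds B, giving ABD
# B -> C adds C, giving ABCD
```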

4.2. Revisiting 3NF Decomposition

Now that ChatGPT 3.5 knew which attribute set was the candidate key, our goal was to see whether it could revise its earlier incorrect 3NF decomposition.

4.2.1. First Attempt

So, we asked

Then, do you think your 3NF decomposition is still correct?

ChatGPT 3.5 was humbled, and responded as follows:

You’re absolutely correct, and I appreciate your keen observation. Given that the only candidate key for the relation with the functional dependencies is , let’s revisit the 3NF decomposition.

In the 3NF decomposition, we want to ensure that every non-prime attribute is fully functionally dependent on the candidate key(s). In the earlier response, we assumed A as the candidate key, which was incorrect.

Correct 3NF Decomposition

Since the correct candidate key is , let’s decompose the relation into 3NF accordingly.

The given functional dependencies are:

The candidate key is .

This decomposition ensures that each relation is in 3NF, with non-prime attributes fully functionally dependent on the candidate key .

Thank you for pointing out the oversight, and I appreciate your understanding.

Despite knowing that was the only key, ChatGPT 3.5 used a novice-like argument that merely checked the non-key-dependent FDs. It did not appear to have used the standard algorithm found in any textbook Silberschatz et al. (2020); had it done so, it would not have made the wrong derivation once again.

4.2.2. Last Attempt

As a final attempt, we posed the following comment,

I still think that your decomposition is not correct. Do you see why?

to which ChatGPT 3.5 finally provided a satisfactory answer.

You are absolutely correct, and I apologize for the oversight. Upon revisiting the decomposition, I can see the mistake. The decomposition I provided earlier is not in line with 3NF requirements, especially considering the correct candidate key . Let’s rectify this and create a proper 3NF decomposition.

Given the correct candidate key and the functional dependencies , let’s decompose into 3NF.

Correct 3NF Decomposition

The correct decomposition into 3NF would be as follows:

This decomposition ensures that each relation is in 3NF, and non-prime attributes are fully functionally dependent on the candidate key .

Thank you for your patience, and I appreciate your vigilance in ensuring the accuracy of the decomposition.

Even after all the prompting and prodding, the decomposition is primarily wordsmithing, not logical analysis. Nevertheless, we decided to move on.

4.3. Acknowledgement of Misconception

The ChatGPT 3.5 confession of ignorance came in the following way.

Exactly. Now why do you think you had trouble coming to this correct answer in the first place and needed my prompting more than once?

The final words from ChatGPT were as follows:

I appreciate your understanding and patience. Upon reflection, it’s clear that the initial difficulty in arriving at the correct 3NF decomposition stemmed from a combination of factors:

The iterative and collaborative nature of our conversation allowed for a deeper understanding of the problem and its solution. I appreciate your engagement and contributions, which ultimately resulted in a more accurate and informed response. Thank you for guiding me to the correct solution.

5. Merit of the ChatGPT Conversation

While the conversations for Experiment 1 (https://chat.openai.com/share/70d6b435-1ebe-4448-a550-fbf63d708ea3, accessed 2 November 2023) and Experiment 2 (https://chat.openai.com/share/78754043-9d66-4dc7-9e45-18c1ff5c8e18, accessed 2 November 2023) with ChatGPT 3.5, as well as the conversations with ChatGPT 4o and 4o1 (https://chatgpt.com/share/678f3bf6-ac68-800b-9202-61f78a4fbe11, accessed 22 January 2025), are available in their entirety at the respective OpenAI links, we considered it important to include sanitized versions in this article so that readers can fully comprehend the conversational dynamics, the tenor, and the perils these exchanges embodied. These conversations also reveal in detail how ChatGPT 3.5 failed repeatedly (as did ChatGPT 4o), despite all the prompts. From the perspective of a database instructor and researcher, one must wonder whether ChatGPT 3.5 is even familiar with FD theory and normalization algorithms, beyond its ability to construct logical sentences with apparent scientific rigor.

Based on the experimental evidence, our opinion is that all the LLMs are works in progress; some are more advanced than others, but they still contain flaws suggesting that they lack the capacity to reason, which is a concern when using them as tutors for, at the very least, FD theory and normalization. Ilya Sutskever (former OpenAI Chief Scientist) appears to suggest that next-token prediction (https://www.youtube.com/watch?v=YEUclZdj_Sc, accessed on 22 January 2025) is enough for artificial general intelligence, and other research on chain-of-thought reasoning Xiang et al. (2025) points toward reasonable deductive capabilities for LLMs; however, the evidence we have presented is not very reassuring. In contrast to the LLMs, the Griffith University Normalization Tool performs flawlessly, and we believe that using it, or a more robust incarnation of it, as the foundation for a database design tutoring system is prudent and recommended. We believe this is precisely the motivation behind the design of the normalization tutoring system by Jamil (2023); Jamil and Shawon (2023). The challenges that traditional design theory tutoring systems confront are generating human-like feedback and explaining the underlying concepts, tasks at which LLMs perform admirably. The natural question, then, is whether these two paradigms can be fused to develop the ultimate ITS for database design classes.

6. Discussion

Finally, it is important to acknowledge the psychological dimension of learning and of integrating LLMs for personalized learning and effective teaching. The disruptive entry of LLMs into education is both promising, given their potential to enhance learning, and concerning, as it may dismantle traditional educational scaffolding and inquiry-based learning Caccavale et al. (2024). Regardless of their long-term effects, LLMs will undoubtedly shape the educational landscape Shahzad et al. (2025), raising significant concerns about ethics, algorithmic bias, and privacy.

From a psychology of technology perspective, instructors weigh learning outcomes Lyu et al. (2024) and ease of instructional delivery, while learners adopt LLMs for time savings, independent study, knowledge provision, cognitive efficiency, and accessibility Niloy et al. (2024). As LLMs permeate classrooms worldwide, academic integrity issues arise Bin-Nashwan et al. (2023), and cheating becomes more difficult to detect Elkhatat (2023). Yet, evidence indicates a net gain from LLM usage Baek et al. (2024), particularly in self-learning and self-assessment through authentic feedback Mazzullo et al. (2023); Pozdniakov et al. (2024).

However, self-learning with LLMs, even when not sought as a shortcut, may expose learners to algorithmic biases and weaker models, leading to disparities in learning outcomes Bispo et al. (2024); Bispo and Santos (2024). Knowledge of effective prompt engineering therefore becomes essential for mitigating these biases and bridging the digital divide. As LLMs evolve from ChatGPT-3.5 to ChatGPT-4o1, both learners and instructors must remain aware of their limitations and pitfalls, especially in advanced domains such as database design theory, where traditional ITSs are scarce and objective comparisons remain challenging. More research is needed to establish baseline standards for the responsible and effective use of LLMs in such specialized areas.

7. Ignorant Bias, Matthew Effect, and Equity Concerns

Two serious issues emerge from the conversations with ChatGPT, CoPilot, and Gemini in the previous sections. First, ChatGPT 3.5 was seriously wrong in all cases, yet it constructed convincing arguments that were often nonsensical. A novice learner cannot spot these errors, and a student certainly cannot tease out the correct response without already having mastered the subject, which makes the idea of ChatGPT as a tutor moot. Second, if students in a database class are unaware of ChatGPT's ignorance, how will they use it as a tutor, and might they fail the class as a result? This leads to a further question: does ChatGPT's ignorance, which we call ignorant bias, introduce unfairness and inequity into the learning system?

The concept of ignorant bias loosely parallels the Matthew Effect Soares (2011); Walberg and Tsai (1983), which highlights how learners who already benefit from certain educational advantages experience compounding gains over time. In our context, discovering and affording a more capable LLM (e.g., ChatGPT-4o1 or later) places learners at a significantly higher position on the learning outcome spectrum. Simultaneously, it worsens the equity divide by disadvantaging those who must rely on a less “intelligent” LLM due to financial or other constraints.

Although ChatGPT-4o1 improved upon ChatGPT 3.5 and 4o, we believe that current LLMs introduce unfair bias and inequity into the learning ecosystem, at least in online learning setups for database design theory. In this environment, students are largely on their own, and a dedicated mentor or instructor is rarely available to guide or warn them. One concern is that students who cheat by asking ChatGPT to answer online test questions blur the distinction between themselves and legitimate, non-cheating learners who accepted ChatGPT's tutoring on this subject. The assessment system will most likely fail both groups, but it will do so for the wrong reasons.

Oddly, the fairness issue is that students who cheat by using one of the many online FD calculators, such as the Griffith Normalization Tool Wang and Stantic (2019), Cho's normal form calculator Cho (2017), and other tools Stefanidis and Koloniari (2016), which correctly compute all of these assignment responses, will succeed. The question, then, is how we can level the playing field for all learners, given both the existence of FD calculators and the not-so-great tutoring by LLMs such as ChatGPT 3.5 or 4o and CoPilot. The evolution of ChatGPT into a subscription model at 4o and 4o1 also introduces a financial barrier for economically disadvantaged students, who cannot pay for the benefit of the better-performing LLMs and are left with the poorly performing free versions (ChatGPT 4o or CoPilot).
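To make concrete what such calculators automate, the sketch below enumerates every candidate key of a relation and flags 3NF violations. We do not know how the cited tools are implemented; this brute-force Python version is our own hypothetical illustration, practical only for the small schemas typical of assignments. It tests only the given FDs, which suffices for checking the relation itself but not its projections onto fragments. The relations and function names are assumptions.

```python
from itertools import combinations


def closure(attrs, fds):
    """Attribute closure of attrs under fds (a list of (lhs, rhs) frozenset pairs)."""
    result = set(attrs)
    changed = True
    while changed:
        changed = False
        for lhs, rhs in fds:
            if lhs <= result and not rhs <= result:
                result |= rhs
                changed = True
    return frozenset(result)


def candidate_keys(attributes, fds):
    """All minimal attribute sets whose closure is the whole relation."""
    columns = sorted(set(attributes))
    full = frozenset(columns)
    fds = [(frozenset(lhs), frozenset(rhs)) for lhs, rhs in fds]
    keys = []
    # Enumerating by increasing size guarantees minimality: any superset
    # of a key found earlier is skipped.
    for size in range(1, len(columns) + 1):
        for combo in combinations(columns, size):
            candidate = frozenset(combo)
            if any(key <= candidate for key in keys):
                continue
            if closure(candidate, fds) == full:
                keys.append(candidate)
    return keys


def third_nf_violations(attributes, fds):
    """FDs X -> A (A not in X) where X is not a superkey and A is not prime."""
    full = frozenset(attributes)
    fds = [(frozenset(lhs), frozenset(rhs)) for lhs, rhs in fds]
    prime = frozenset().union(*candidate_keys(attributes, fds))
    violations = []
    for lhs, rhs in fds:
        for a in sorted(rhs - lhs):
            if closure(lhs, fds) != full and a not in prime:
                violations.append((",".join(sorted(lhs)), a))
    return violations


if __name__ == "__main__":
    # Hypothetical R(A, B, C, D) with F = {AB -> C, C -> D, D -> A}.
    F = [("AB", "C"), ("C", "D"), ("D", "A")]
    print(sorted("".join(sorted(k)) for k in candidate_keys("ABCD", F)))  # ['AB', 'BC', 'BD']
    print(third_nf_violations("ABCD", F))  # []
    print(third_nf_violations("ABC", [("A", "B"), ("B", "C")]))  # [('B', 'C')]
```

The first example has three candidate keys, so every attribute is prime and the relation is already in 3NF; the second shows a transitive dependency that a 3NF decomposition must remove.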

Fortunately, efforts are underway to design graphical conversational languages that accept student responses in machine-understandable formats for tutoring and assessment purposes. The idea is to have students express their cognitive understanding of the subject matter and use their creative abilities to respond to test questions, rather than simply copying and pasting solutions from FD calculators or LLMs, so that we can peek into students' thought processes for evaluation purposes Jamil (2023); Jamil and Shawon (2023). It remains to be seen whether the superior explanatory capabilities of LLMs can be combined with approaches such as NoDD, which we are designing within a conversational, chatbot-like ITS.

Although NoDD is still under development for tutoring and assessing database design assignments, we compared student submissions and a ChatGPT-4o1-generated solution to the same design theory tasks. In nearly all instances, ChatGPT outperformed the students. However, this comparison does not demonstrate whether an LLM-based tutoring and assessment system would surpass an intelligent tutoring system for database design theory in terms of learning outcomes, as no such ITS currently exists. Should NoDD become fully realized, we could objectively contrast LLM-based tutoring with a dedicated ITS for database design theory. Nonetheless, the equity challenges introduced by LLMs, as discussed in this article, will likely persist and demand ongoing attention.

8. Conclusions and Future Research

Our objective in this paper was to highlight a new and emerging equity and fairness concern in online learning: the use of LLMs such as ChatGPT. We explored how unfairness and inequity can arise when learners rely on these models. Our findings show that LLMs are far from infallible; in some instances, they can prove detrimental to students’ learning outcomes. Only learners who are well informed—whether by chance or through systemic support—are likely to avoid the pitfalls we have identified. Further research is required to fully understand the scope and impact of these inequities.

An intriguing question is whether ChatGPT or CoPilot can learn from the types of conversations described here, thereby improving over time. We plan to revisit these models with the same queries to gauge any evolution in their responses. Moreover, we have observed unauthorized use of ChatGPT by students in SQL and functional dependency assignments, raising fairness concerns for those who choose not to, or are unable to, use such tools. This reality prompts deeper pedagogical questions: how do we adapt teaching methods to recognize, and even encourage, the use of LLMs while still maintaining authentic assessment? Addressing these questions will guide our future research as we continue to examine the implications of LLM usage in educational settings.

We encourage ITS designers and end users (teachers and students) to recognize that database design theory relies on algorithmic reasoning and can be effectively encoded in a smart ITS. Consequently, student solutions can be systematically compared and evaluated to generate meaningful feedback. Both textual and structured tool-driven responses Jamil (2023); Jamil and Shawon (2023) can be analyzed for tutoring and grading purposes.
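As one illustration of such systematic comparison, the sketch below checks a student's answer to a canonical-cover exercise against the reference FDs: every FD on each side must follow, via attribute closure, from the other side, and any attributes that fail to follow become feedback messages. This is our own hypothetical example, not the feedback mechanism of NoDD or of Jamil (2023); the message wording, function names, and FD sets are assumptions.

```python
def closure(attrs, fds):
    """Attribute closure of attrs under fds (a list of (lhs, rhs) frozenset pairs)."""
    result = set(attrs)
    changed = True
    while changed:
        changed = False
        for lhs, rhs in fds:
            if lhs <= result and not rhs <= result:
                result |= rhs
                changed = True
    return frozenset(result)


def _fmt(attrs):
    return ",".join(sorted(attrs))


def compare_fd_sets(student, reference):
    """Return feedback messages; an empty list means the FD sets are equivalent."""
    student = [(frozenset(lhs), frozenset(rhs)) for lhs, rhs in student]
    reference = [(frozenset(lhs), frozenset(rhs)) for lhs, rhs in reference]
    feedback = []
    # Every FD the student wrote must follow from the reference set...
    for lhs, rhs in student:
        wrong = rhs - closure(lhs, reference)
        if wrong:
            feedback.append(f"Incorrect: {_fmt(lhs)} -> {_fmt(wrong)} does not follow from the given FDs")
    # ...and every reference FD must follow from the student's set.
    for lhs, rhs in reference:
        missing = rhs - closure(lhs, student)
        if missing:
            feedback.append(f"Missing: your answer does not imply {_fmt(lhs)} -> {_fmt(missing)}")
    return feedback


if __name__ == "__main__":
    F = [("A", "BC"), ("B", "C"), ("AB", "D")]  # hypothetical reference FDs
    print(compare_fd_sets([("A", "BD"), ("B", "C")], F))  # []
    for message in compare_fd_sets([("A", "B"), ("B", "C"), ("C", "A")], F):
        print(message)
    # Incorrect: C -> A does not follow from the given FDs
    # Missing: your answer does not imply A,B -> D
```

Because equivalence is decided by closure rather than by textual matching, a correct answer written in a different but equivalent form receives no spurious complaints.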

Should an LLM-based ITS be developed, we believe prompt engineering guidance could offset user inexperience, enabling more effective tutoring interactions. However, it would be essential to implement guardrails that prevent the system from failing to deliver the expected responses, as the challenges discussed in this article highlight.

Funding

This research was partially supported by an Institutional Development Award (IDeA) from the National Institute of General Medical Sciences of the National Institutes of Health under Grant P20GM103408, by National Science Foundation grant OIA 2019609, and by US Department of Energy grant DE-0011014.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The author declares no conflicts of interest.

References

- Ahmedi, L., Jakupi, N., & Jajaga, E. (2012, January 30–February 4). NORMALDB—A logic-based interactive e-learning tool for database normalization and denormalization. eLmL—International Conference on Mobile, Hybrid, and On-line Learning (pp. 44–50), Valencia, Spain.

- Anders, B. A. (2023). Is using ChatGPT cheating, plagiarism, both, neither, or forward thinking? Patterns, 4(3), 100694.

- Baek, C., Tate, T., & Warschauer, M. (2024). “ChatGPT seems too good to be true”: College students’ use and perceptions of generative AI. Computers and Education: Artificial Intelligence, 7, 100294.

- Bin-Nashwan, S. A., Sadallah, M., & Bouteraa, M. (2023). Use of ChatGPT in academia: Academic integrity hangs in the balance. Technology in Society, 75, 102370.

- Bispo, E. L., dos Santos, S. C., & De Matos, M. V. A. B. (2024). Equity issues derived from use of large language models in education. In New media pedagogy: Research trends, methodological challenges, and successful implementations (pp. 425–440). Springer.

- Bispo, E. L., Jr., & Santos, J. M. O. (2024). Equity and LLMs in computing education: A gradeless perspective under well-being lens. In Anais do i workshop uma tarde na urca: Encontro filosófico sobre informática na educação (urca 2024) (pp. 41–45). Sociedade Brasileira de Computação—SBC.

- Caccavale, F., Gargalo, C. L., Gernaey, K. V., & Krühne, U. (2024). Towards education 4.0: The role of large language models as virtual tutors in chemical engineering. Education for Chemical Engineers, 49, 1–11.

- Carr, N., Shawon, F., & Jamil, H. (2023, November 27–December 1). An experiment on leveraging ChatGPT for online teaching and assessment of database students. IEEE International Conference on Teaching, Assessment and Learning for Engineering (TALE 2023), Auckland, New Zealand.

- Chakravarty, A. (2018). Functional dependencies checker. Available online: https://arjo129.github.io/functionalDependencyCalculator/ (accessed on 20 December 2024).

- Cho, R. (2017). Tool for database design. Available online: http://raymondcho.net/RelationalDatabaseTools/RelationalDatabaseTools.html (accessed on 20 December 2024).

- Chu, S., Li, D., Wang, C., Cheung, A., & Suciu, D. (2017). Demonstration of the Cosette automated SQL prover. In S. Salihoglu, W. Zhou, R. Chirkova, J. Yang, & D. Suciu (Eds.), Proceedings of the 2017 ACM international conference on management of data, SIGMOD conference 2017, Chicago, IL, USA, May 14–19, 2017 (pp. 1591–1594). ACM.

- Clark, H., & Jamil, H. M. (2024, December 9–12). Mixing up Gemini and AST in explains for authentic SQL tutoring. IEEE International Conference on Teaching, Assessment and Learning for Engineering, TALE 2024 (pp. 1–8), Bengaluru, India.

- Dongare, Y. V., Dhabe, P. S., & Deshmukh, S. V. (2011). RDBNorma—A semi-automated tool for relational database schema normalization up to third normal form. arXiv, arXiv:1103.0633.

- Elkhatat, A. M. (2023). Evaluating the authenticity of ChatGPT responses: A study on text-matching capabilities. International Journal for Educational Integrity, 19(1), 15.

- Ezra, O., Cohen, A., Bronshtein, A., Gabbay, H., & Baruth, O. (2021). Equity factors during the COVID-19 pandemic: Difficulties in emergency remote teaching (ert) through online learning. Education and Information Technologies, 26(6), 7657–7681.

- FD Calculator Team. (2022). Fd calculator. Available online: http://functionaldependencycalculator.ml/ (accessed on 1 August 2022).

- Ferro, L. S., Sapio, F., Terracina, A., Temperini, M., & Mecella, M. (2021). Gea2: A serious game for technology-enhanced learning in STEM. IEEE Transactions on Learning Technologies, 14(6), 723–739.

- Gorichanaz, T. (2023). Accused: How students respond to allegations of using ChatGPT on assessments. arXiv, arXiv:2308.16374.

- Han, J., & Geng, X. (2023). University students’ approaches to online learning technologies: The roles of perceived support, affect/emotion and self-efficacy in technology-enhanced learning. Computers & Education, 194, 104695.

- Han, S., Jung, J., Ji, H., Lee, U., & Liu, M. (2023). The role of social presence in MOOC students’ behavioral intentions and sentiments toward the usage of a learning assistant chatbot: A diversity, equity, and inclusion perspective examination. In N. Wang, G. Rebolledo-Mendez, V. Dimitrova, N. Matsuda, & O. C. Santos (Eds.), Artificial intelligence in education. posters and late breaking results, workshops and tutorials, industry and innovation tracks, practitioners, doctoral consortium and blue sky—24th international conference, AIED 2023, Tokyo, Japan, July 3–7, 2023, proceedings (Vol. 1831, pp. 236–241). Springer.

- Higgins, E., Posada, J., Kimble-Brown, Q., Abler, S., Coy, A., & Hamidi, F. (2023). Investigating an equity-based participatory approach to technology-rich learning in community recreation centers. In A. Schmidt, K. Väänänen, T. Goyal, P. O. Kristensson, A. Peters, S. Mueller, J. R. Williamson, & M. L. Wilson (Eds.), Proceedings of the 2023 CHI conference on human factors in computing systems, CHI 2023, Hamburg, Germany, April 23–28, 2023 (pp. 443:1–443:18). ACM.

- Jamil, H. (2023, November 27–December 1). Online tutoring and plagiarism-aware authentic assessment of database design assignments. IEEE International Conference on Teaching, Assessment and Learning for Engineering (TALE 2023), Auckland, New Zealand.

- Jamil, H., & Shawon, F. (2023, November 26–28). Automatic and authentic eassessment of online database design theory assignments. International Conference on Web-Based Learning, ICWL 2023 (pp. 77–91), Sydney, Australia.

- Joyner, D. A. (2023). ChatGPT in Education: Partner or Pariah? XRDS: Crossroads, The ACM Magazine for Students, 29(3), 48–51.

- Jukic, N., Vrbsky, S., Nestorov, S., & Sharma, A. (2020). Erdplus. Available online: https://erdplus.com/ (accessed on 20 December 2024).

- Kessler, J., Tschuggnall, M., & Specht, G. (2019). RelaX: A webbased execution and learning tool for relational algebra. In T. Grust, F. Naumann, A. Böhm, W. Lehner, T. Härder, E. Rahm, & A. Heuer (Eds.), Datenbanksysteme für business, technologie und web (BTW 2019), 18. fachtagung des gi-fachbereichs “datenbanken und informationssysteme” (dbis), 4–8 März 2019, Rostock, Germany, proceedings (Vol. P-289, pp. 503–506). Gesellschaft für Informatik.

- Khalil, M., & Er, E. (2023). Will ChatGPT get you caught? Rethinking of plagiarism detection. In P. Zaphiris, & A. Ioannou (Eds.), Learning and collaboration technologies—10th international conference, LCT 2023, held as part of the 25th HCI international conference, HCII 2023, Copenhagen, Denmark, July 23–28, 2023, proceedings, part I (Vol. 14040, pp. 475–487). Springer.

- Khalil, M., Prinsloo, P., & Slade, S. (2023). Fairness, trust, transparency, equity, and responsibility in learning analytics. Journal of Learning Analytics, 10(1), 1–7.

- Kiesler, N., & Schiffner, D. (2023). Large language models in introductory programming education: ChatGPT’s performance and implications for assessments. arXiv, arXiv:2308.08572.

- Kung, H.-J., & Tung, H.-L. (2006). A web-based tool to enhance teaching/learning database normalization. Sais. Available online: https://aisel.aisnet.org/sais2006/43 (accessed on 20 December 2024).

- Kung, H., & Tung, H. (2006). An alternative approach to teaching database normalization: A simple algorithm and an interactive e-learning tool. Journal of Information Systems Education, 17(3), 315–326.

- Li, J., Gui, L., Zhou, Y., West, D., Aloisi, C., & He, Y. (2023). Distilling ChatGPT for explainable automated student answer assessment. arXiv, arXiv:2305.12962.

- Lin, S., Chung, H., Chung, F., & Lan, Y. (2023). Concerns about using ChatGPT in education. In Y. Huang, & T. Rocha (Eds.), Innovative technologies and learning—6th international conference, ICITL 2023, Porto, Portugal, August 28–30, 2023, proceedings (Vol. 14099, pp. 37–49). Springer.

- Littenberg-Tobias, J., & Reich, J. (2020). Evaluating access, quality, and equity in online learning: A case study of a MOOC-based blended professional degree program. The Internet and Higher Education, 47, 100759.

- Lyu, W., Wang, Y., Chung, T. R., Sun, Y., & Zhang, Y. (2024). Evaluating the effectiveness of LLMs in introductory computer science education: A semester-long field study. In D. Joyner, M. K. Kim, X. Wang, & M. Xia (Eds.), Proceedings of the eleventh ACM conference on learning@scale, l@s 2024, Atlanta, GA, USA, July 18–20, 2024 (pp. 63–74). ACM.

- Mawasi, A., Aguilera, E., Wylie, R., & Gee, E. (2020). Neutrality, “New” digital divide, and openness paradox: Equity in learning environments mediated by educational technology. In Interdisciplinarity in the learning sciences: Proceedings of the 14th international conference of the learning sciences, ICLS 2020, [Nashville, Tennessee, USA], online conference, June 19–23, 2020. International Society of the Learning Sciences.

- Mazzullo, E., Bulut, O., Wongvorachan, T., & Tan, B. (2023). Learning analytics in the era of large language models. Analytics, 2(4), 877–898.

- McBroom, J., Yacef, K., & Koprinska, I. (2020). Scalability in online computer programming education: Automated techniques for feedback, evaluation and equity. In A. N. Rafferty, J. Whitehill, C. Romero, & V. Cavalli-Sforza (Eds.), Proceedings of the 13th international conference on educational data mining, EDM 2020, fully virtual conference, July 10–13, 2020. International Educational Data Mining Society.

- Mitrovic, A. (2002, December 3–6). NORMIT: A web-enabled tutor for database normalization. International Conference on Computers in Education, ICCE 2002 (Vol. 2, pp. 1276–1280), Auckland, New Zealand.

- Niloy, A. C., Bari, M. A., Sultana, J., Chowdhury, R., Raisa, F. M., Islam, A., Mahmud, S., Jahan, I., Sarkar, M., Akter, S., Nishat, N., Afroz, M., Sen, A., Islam, T., Tareq, M. H., & Hossen, M. A. (2024). Why do students use ChatGPT? Answering through a triangulation approach. Computers and Education: Artificial Intelligence, 6, 100208.

- Pardos, Z. A., & Bhandari, S. (2023). Learning gain differences between ChatGPT and human tutor generated algebra hints. arXiv, arXiv:2302.06871.

- Piza-Dávila, H. I., Preciado, L. F. G., & Ortega-Guzmán, V. H. (2017). An educational software for teaching database normalization. Computer Applications in Engineering Education, 25(5), 812–822.

- Pozdniakov, S., Brazil, J., Abdi, S., Bakharia, A., Sadiq, S., Gašević, D., Denny, P., & Khosravi, H. (2024). Large language models meet user interfaces: The case of provisioning feedback. Computers and Education: Artificial Intelligence, 7, 100289.

- Qureshi, B. (2023). Exploring the use of ChatGPT as a tool for learning and assessment in undergraduate computer science curriculum: Opportunities and challenges. arXiv, arXiv:2304.11214.

- Röhm, U., Brent, L., Dawborn, T., & Jeffries, B. (2020). SQL for data scientists: Designing SQL tutorials for scalable online teaching. Proceedings of the VLDB Endowment, 13(12), 2989–2992.

- Rospigliosi, P. A. (2023). Artificial intelligence in teaching and learning: What questions should we ask of ChatGPT? Interactive Learning Environments, 31(1), 1–3.

- Rueda, M. M., Fernández-Cerero, J., Fernández-Batanero, J. M., & López-Meneses, E. (2023). Impact of the implementation of ChatGPT in education: A systematic review. Computers, 12(8), 153.

- Seetharaman, R. (2023). Revolutionizing medical education: Can ChatGPT boost subjective learning and expression? Journal of Medical Systems, 47(1), 61.

- Shahzad, T., Mazhar, T., Tariq, M. U., Ahmad, W., Ouahada, K., & Hamam, H. (2025). A comprehensive review of large language models: Issues and solutions in learning environments. Discover Sustainability, 6(1), 27.

- Silberschatz, A., Korth, H. F., & Sudarshan, S. (2020). Database system concepts (7th ed.). McGraw-Hill Book Company.

- Soares, J. A. (2011). The Matthew effect: How advantage begets further advantage. Contemporary Sociology: A Journal of Reviews, 40(4), 477–478.

- Soler, J., Boada, I., Prados, F., & Poch, J. (2006, October 24–26). A web-based problem-solving environment for database normalization. Simposio Internacional de Informatica Educativa, SIIE (2006), Leon, Spain.

- Springfield, I. (2024). Relational database tools. Available online: https://uisacad5.uis.edu/cgi-bin/mcrem2/database_design_tool.cgi (accessed on 20 December 2024).

- Stefanidis, C., & Koloniari, G. (2016, November 10–12). An interactive tool for teaching and learning database normalization. The 20th Pan-Hellenic Conference on Informatics (p. 18), Patras, Greece. Available online: http://dl.acm.org/citation.cfm?id=3003790 (accessed on 20 December 2024).

- Vargas-Murillo, A. R., de la Asuncion Pari-Bedoya, I. N. M., & de Jesus Guevara-Soto, F. (2023, June 9–12). The ethics of AI assisted learning: A systematic literature review on the impacts of ChatGPT usage in education. The 2023 8th International Conference on Distance Education and Learning, ICDEL 2023 (pp. 8–13), Beijing, China.

- Vasconcelos, M. A. R., & dos Santos, R. P. (2023). Enhancing STEM learning with ChatGPT and bing chat as objects to think with: A case study. arXiv, arXiv:2305.02202.

- Walberg, H. J., & Tsai, S.-L. (1983). Matthew effects in education. American Educational Research Journal, 20(3), 359–373.

- Wang, J., & Stantic, B. (2019). Facilitating learning by practice and examples: A tool for learning table normalization. In G. Eleftherakis, M. Lazarova, A. Aleksieva-Petrova, & A. Tasheva (Eds.), Proceedings of the 9th balkan conference on informatics, BCI 2019, Sofia, Bulgaria, September 26–28, 2019 (pp. 35:1–35:4). ACM.

- Williamson, K., & Kizilcec, R. F. (2021). Learning analytics dashboard research has neglected diversity, equity and inclusion. In C. Meinel, M. Pérez-Sanagustín, M. Specht, & A. Ogan (Eds.), L@s’21: Eighth ACM conference on learning@scale, virtual event, Germany, June 22–25, 2021 (pp. 287–290). ACM.

- Williamson, K., & Kizilcec, R. F. (2022, March 21–25). A review of learning analytics dashboard research in higher education: Implications for justice, equity, diversity, and inclusion. LAK 2022: 12th International Learning Analytics and Knowledge Conference (pp. 260–270), Online.

- Xiang, V., Snell, C., Gandhi, K., Albalak, A., Singh, A., Blagden, C., Phung, D., Rafailov, R., Lile, N., Mahan, D., Castricato, L., Franken, J.-P., Haber, N., & Finn, C. (2025). Towards system 2 reasoning in LLMs: Learning how to think with meta chain-of-thought. Available online: https://arxiv.org/abs/2501.04682 (accessed on 20 December 2024).

- Yang, F. (2011). A virtual tutor for relational schema normalization. ACM Inroads, 2(3), 38–42.

- Zhai, X. (2023). ChatGPT for next generation science learning. XRDS: Crossroads, The ACM Magazine for Students, 29(3), 42–46.

- Zheng, Y. (2023). ChatGPT for teaching and learning: An experience from data science education. arXiv, arXiv:2307.16650.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).