Applying Natural Language Processing Adaptive Dialogs to Promote Knowledge Integration During Instruction

Abstract

1. Introduction

- (1) How does consistent engagement in the NLP dialog compare to inconsistent engagement in influencing students’ KI scores across instruction?

- (2) How does the NLP adaptive dialog help students strengthen their integrated understanding of photosynthesis across instruction?

- (3) How does the NLP adaptive dialog support students to integrate ideas about photosynthesis across instruction?

- (4) How do the two rounds of guidance within the NLP dialog help students integrate their ideas?

2. Literature Review

2.1. Knowledge Integration Framework

2.2. NLP and Knowledge Integration Framework

2.3. Designing Automated Guidance in KI Framework

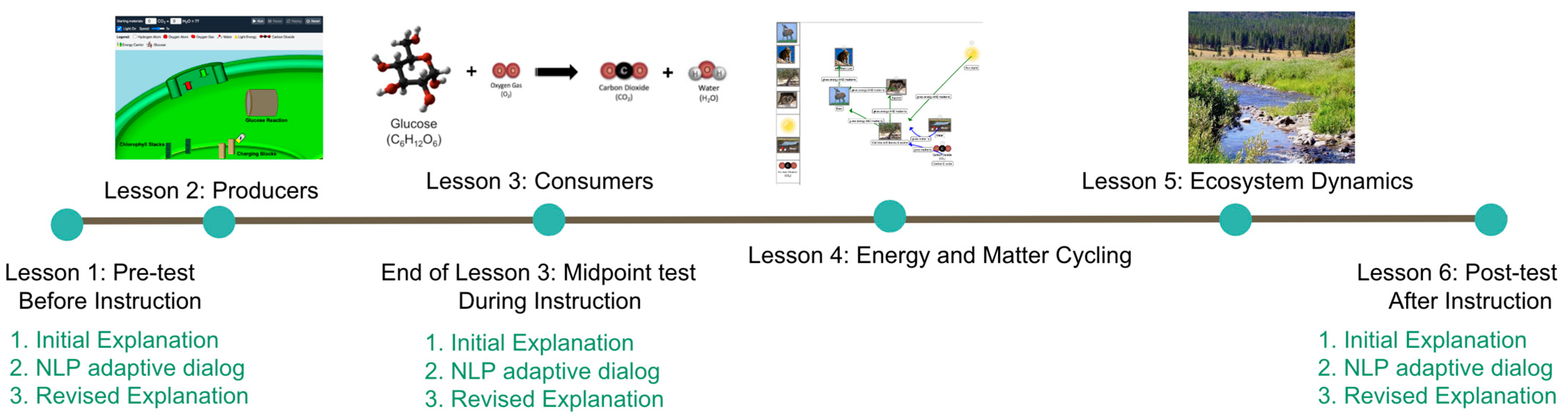

3. Curriculum Design

4. Methods

4.1. Participants

4.2. NLP Models

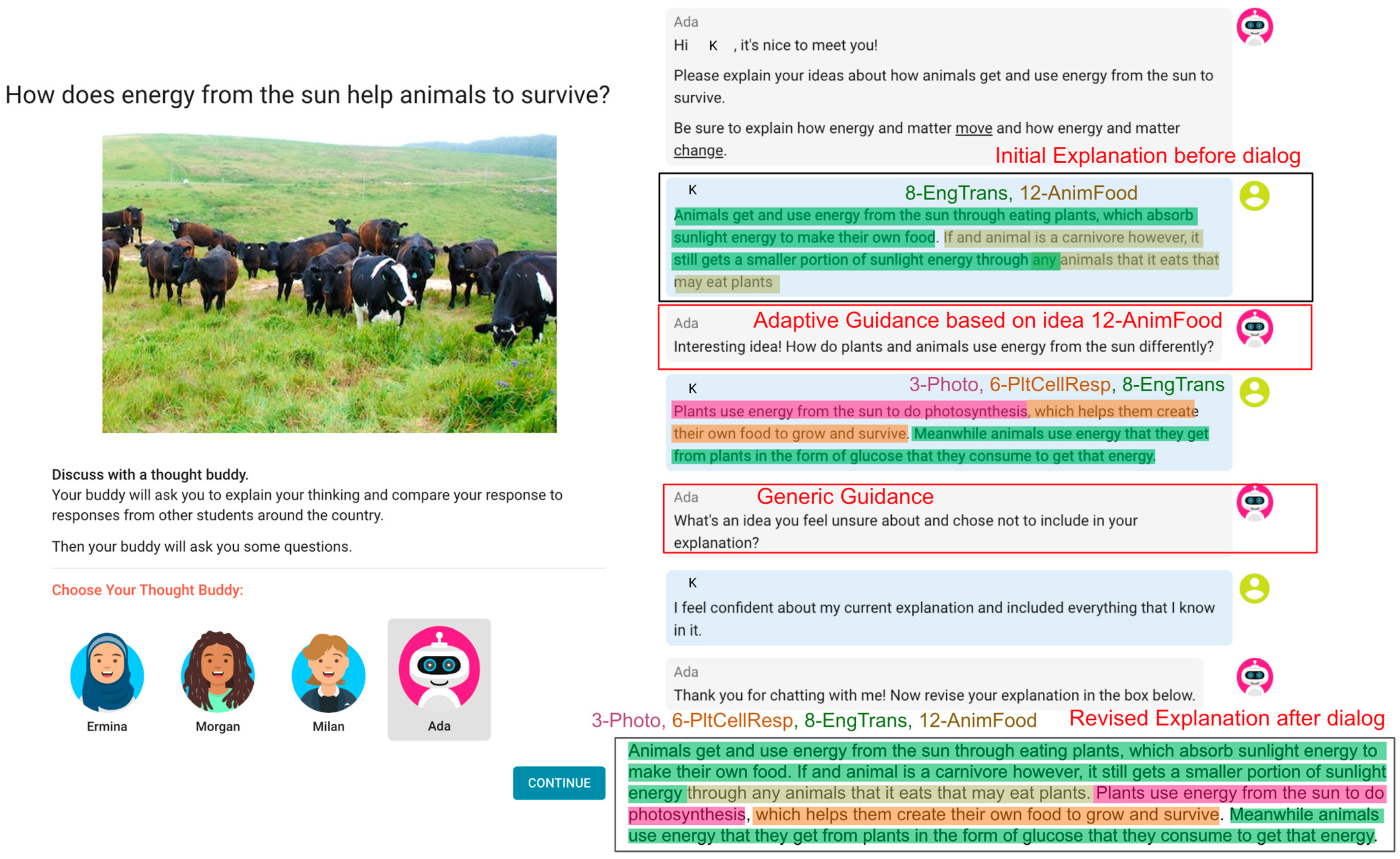

4.3. Guidance Design in the NLP Dialog

5. Data Preprocessing and Analysis

5.1. Data Preprocessing

5.2. Data Analysis

6. Results

6.1. How Did the NLP Dialog Engagement Affect Student Learning?

6.2. How Did the NLP Dialog Strengthen KI Scores Along with Instruction?

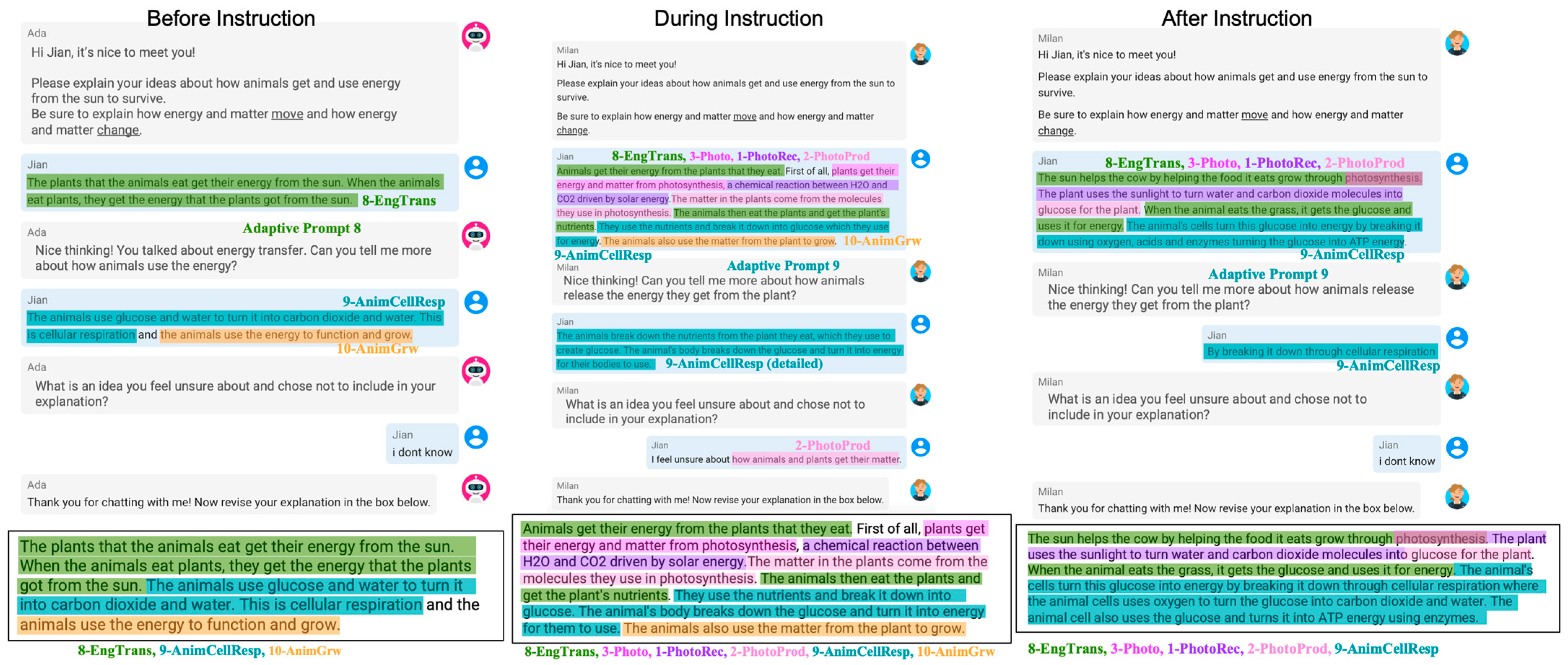

6.3. How Did the NLP Dialog Elicit Ideas Along with Instruction?

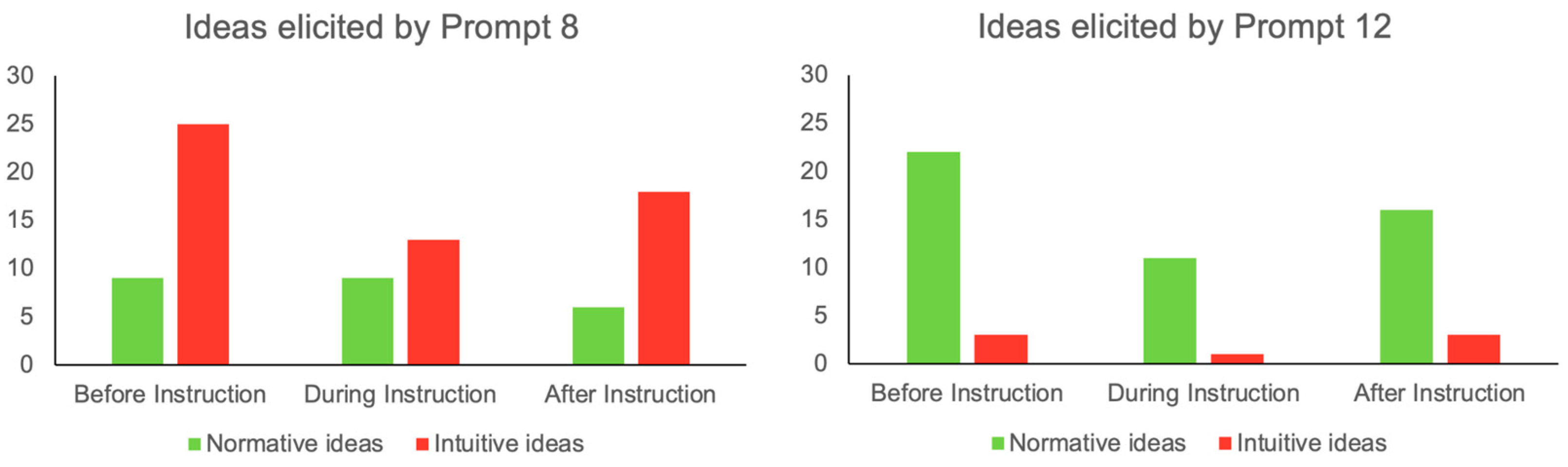

6.4. How Did the Two Rounds of Guidance Work in the NLP Dialog?

6.4.1. Round 1: Adaptive Guidance

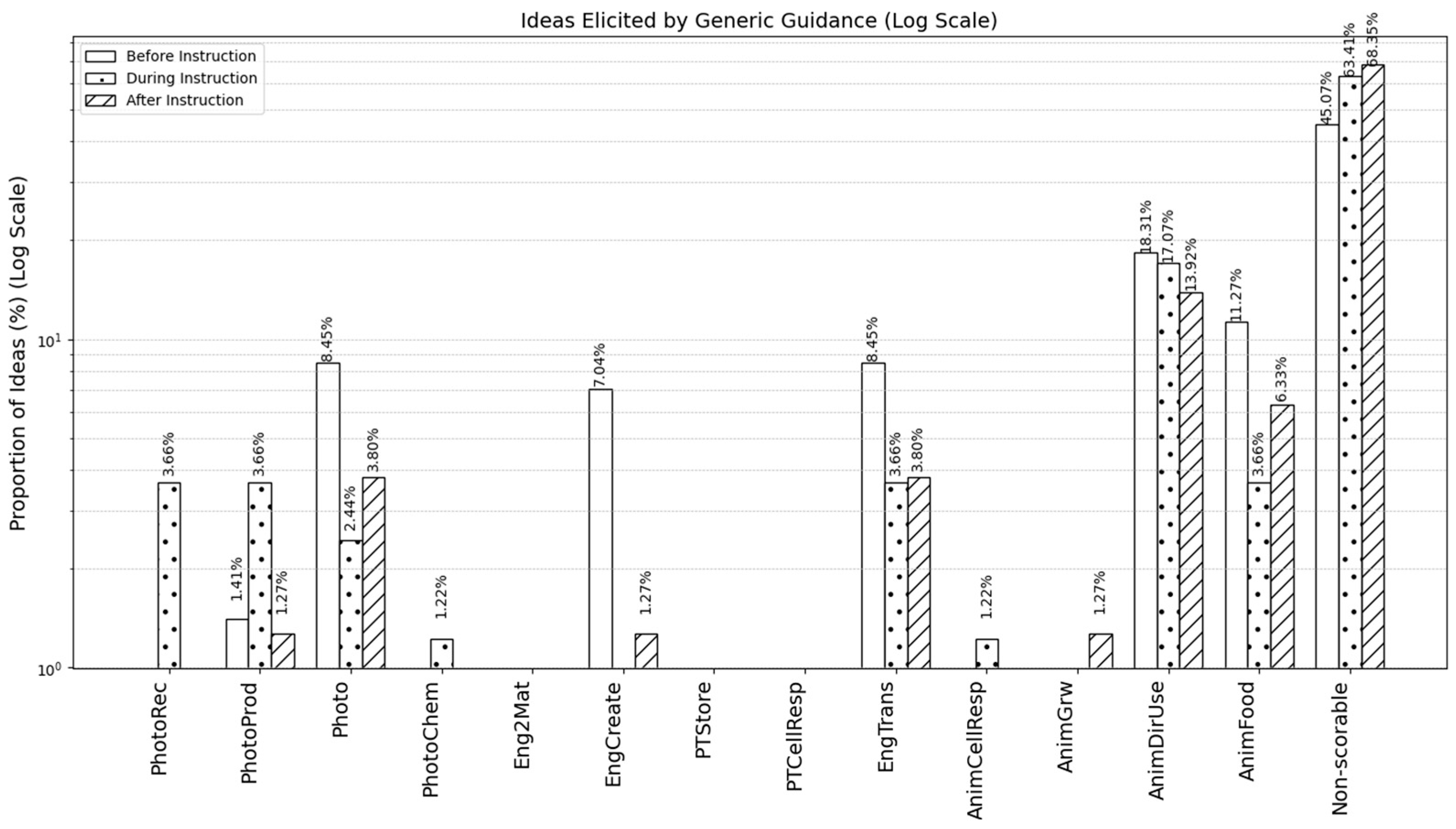

6.4.2. Round 2: Generic Reflection Guidance

7. Discussion

8. Limitations

9. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| KI | Description | Ideas and Descriptions | Adaptive Guidance |
|---|---|---|---|
| 1 | Irrelevant/off-topic | Off-topic ideas (e.g., I don’t know.) | Can you tell me more about this idea or another one in your explanation? I am still learning about student ideas to become a better thought partner. |
| 2 | No link: Incomplete/vague/inaccurate ideas | 4-EngCreate: Energy is created not transformed/transferred (e.g., chemical energy is created by plants) | Cannot be accurately detected. |
| | | 5-Eng2Mat: Plants transform (convert/change/turn) light energy into glucose/sugar/food OR turn/transform glucose into energy | Cannot be accurately detected. |
| | | 11-AnimDirUse: Animals directly use the Sun’s energy for vitamins and keeping warm | Interesting idea! Can animals live without sunlight? How does the animal use energy from the sun? |
| | | 12-AnimFood: Animals eat plants [focused on animals eat plants as food and NOT about energy such as sun grows plants which are energy source for animals] | Interesting idea! How do plants and animals use energy from the sun differently? |
| 3 | Partial link: Accurate idea(s), but isolated (conclusion only, explanation/evidence only) | 1-PhotoRec: CO2, H2O or both as reactants of photosynthesis | Nice thinking. You mentioned the inputs of photosynthesis. What are the outputs of this process? |
| | | 2-PhotoProd: glucose [or sugar or food] or oxygen as a product | Interesting idea about sugar as a product in this process. How are the products of photosynthesis useful for animals? |
| | | 3-Photo: plant uses energy from the sun to do photosynthesis | Nice thinking of photosynthesis. How are the products of photosynthesis useful for animals? |
| | | 3a-PhotoChem: Energy from the sun transforms into another type of energy [kinetic/chemical/usable] during photosynthesis | Interesting idea about how plants transform light energy to usable energy. How does the energy get to animals? |
| | | 6a-PltStore: Plants store energy in glucose | Cannot be accurately detected. |
| | | 6-PltCellResp: Plant releases energy from glucose/food for: growth, energy, repair, seed production | Cannot be accurately detected. |
| | | 8-EngTrans: Energy from the sun gets to animals when they eat plants | Nice thinking! You talked about energy transfer. Can you tell me more about how animals use the energy? |
| | | 9-AnimCellResp: Animal uses cellular respiration to release energy | Nice thinking! Can you tell me more about how animals release the energy they get from the plant? |
| | | 10-AnimGrw: Animal uses glucose/food for energy, repair, growth, to move | Cannot be accurately detected. |
| 4 | Single link: One scientifically complete and valid connection between ideas in KI level 3 | | |
| 5 | Multiple links: Two or more scientifically complete and valid connections between ideas in KI level 3 | | |

| Activity | Total N | Participated N ¹ | Completed N ² | Completed at All Three Time Points |
|---|---|---|---|---|
| Pre-test dialog | 162 | 146 | 134 | 79 |
| Pre-test revision | 162 | 162 | 162 | |
| Midpoint test dialog | 162 | 131 | 129 | |
| Midpoint test revision | 162 | 162 | 160 | |
| Post-test dialog | 162 | 116 | 116 | |
| Post-test revision | 162 | 162 | 159 | |
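
The counts in the participation table can be turned into rates with a few lines of arithmetic. The sketch below is illustrative only: the counts are copied from the table, while the dictionary layout and the `completion_rate` helper are assumptions introduced here, not part of the study's analysis pipeline.

```python
# Dialog counts copied from the participation table:
# (total N, participated N, completed N) at each time point.
DIALOG_COUNTS = {
    "pre-test": (162, 146, 134),
    "midpoint": (162, 131, 129),
    "post-test": (162, 116, 116),
}

# Students who completed the dialog at all three time points (from the table).
COMPLETED_ALL_THREE = 79
TOTAL_STUDENTS = 162


def completion_rate(completed: int, total: int) -> float:
    """Return the fraction of students who completed an activity."""
    if total <= 0:
        raise ValueError("total must be positive")
    return completed / total


if __name__ == "__main__":
    for name, (total, participated, completed) in DIALOG_COUNTS.items():
        print(
            f"{name} dialog: "
            f"{completion_rate(participated, total):.1%} participated, "
            f"{completion_rate(completed, total):.1%} completed"
        )
    print(
        "all three dialogs: "
        f"{completion_rate(COMPLETED_ALL_THREE, TOTAL_STUDENTS):.1%} completed"
    )
```

Reporting rates alongside raw counts makes the drop in dialog completion from pre-test to post-test easier to compare against the near-complete revision counts.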

| Guidance Is Assigned for | Before Instruction | During Instruction | After Instruction |
|---|---|---|---|
| Priority 1: Interesting idea! Can animals live without sunlight? How does the animal use energy from the sun? (Prompt 11, for idea 11-AnimDirUse) | 7 | 9 | 8 |
| Priority 2: Interesting idea! How do plants and animals use energy from the sun differently? (Prompt 12, for idea 12-AnimFood) ¹ | 16 | 9 | 14 |
| Priority 3: Nice thinking! Can you tell me more about how animals release the energy they get from the plant? (Prompt 9, for idea 9-AnimCellResp) | 2 | 10 | 13 |
| Priority 4: Nice thinking! You talked about energy transfer. Can you tell me more about how animals use the energy? (Prompt 8, for idea 8-EngTrans) | 35 | 25 | 20 |
| Priority 5: Interesting idea about how plants transform the light energy to the energy they can use. How does the energy get to animals? (Prompt 3a, for idea 3a-PhotoChem) | 1 | 3 | 3 |
| Priority 6: Nice thinking of photosynthesis. Why are the products of photosynthesis useful for animals? (Prompt 3, for idea 3-Photo) | 0 | 3 | 2 |
| Priority 7: Can you tell me more about this idea or another one in your explanation? I am still learning about student ideas to become a better thought partner. (Prompt Non, for Non-scorable ideas) | 3 | 4 | 3 |
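
The priority ordering in the guidance table suggests a simple selection rule: when a response contains several detected ideas, assign the prompt with the highest priority. The sketch below illustrates that rule under stated assumptions. The idea labels and prompt text are taken from the table, but the function name `select_guidance`, the list structure, and the choice to fall back to the Priority 7 prompt whenever no listed idea is detected are hypothetical and do not describe the actual system's implementation.

```python
from typing import Iterable

# (idea label, prompt) pairs in priority order, copied from the guidance table.
# Representing them as an ordered list is an assumption made for illustration.
PRIORITIZED_PROMPTS: list[tuple[str, str]] = [
    ("11-AnimDirUse",
     "Interesting idea! Can animals live without sunlight? "
     "How does the animal use energy from the sun?"),
    ("12-AnimFood",
     "Interesting idea! How do plants and animals use energy "
     "from the sun differently?"),
    ("9-AnimCellResp",
     "Nice thinking! Can you tell me more about how animals release "
     "the energy they get from the plant?"),
    ("8-EngTrans",
     "Nice thinking! You talked about energy transfer. "
     "Can you tell me more about how animals use the energy?"),
    ("3a-PhotoChem",
     "Interesting idea about how plants transform the light energy to the "
     "energy they can use. How does the energy get to animals?"),
    ("3-Photo",
     "Nice thinking of photosynthesis. "
     "Why are the products of photosynthesis useful for animals?"),
]

# Priority 7 prompt, used here (by assumption) when no listed idea is detected.
FALLBACK_PROMPT = (
    "Can you tell me more about this idea or another one in your explanation? "
    "I am still learning about student ideas to become a better thought partner."
)


def select_guidance(detected_ideas: Iterable[str]) -> str:
    """Return the prompt for the highest-priority idea among those detected."""
    detected = set(detected_ideas)
    for idea, prompt in PRIORITIZED_PROMPTS:
        if idea in detected:
            return prompt
    return FALLBACK_PROMPT


if __name__ == "__main__":
    # A response with both an energy-transfer idea and a direct-use idea
    # receives the Priority 1 prompt under this rule.
    print(select_guidance(["8-EngTrans", "11-AnimDirUse"]))
    # A response with no detected ideas receives the fallback prompt.
    print(select_guidance([]))
```

Keeping the priorities in a single ordered list means the ordering can be changed without touching the selection logic.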


© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, W. Applying Natural Language Processing Adaptive Dialogs to Promote Knowledge Integration During Instruction. Educ. Sci. 2025, 15, 207. https://doi.org/10.3390/educsci15020207
