What Worked for the U.S. Students’ Learning During the Pandemic? Cross-State Comparisons of Remote Learning Policies, Practices, and Outcomes

Abstract

1. Introduction

2. Literature Review

3. Data and Methods

3.1. State Policy and Funding Data

3.2. School Policy and Practice Data

3.3. Student Learning Survey and Assessment Data

3.4. Data Analysis Methods

4. Results

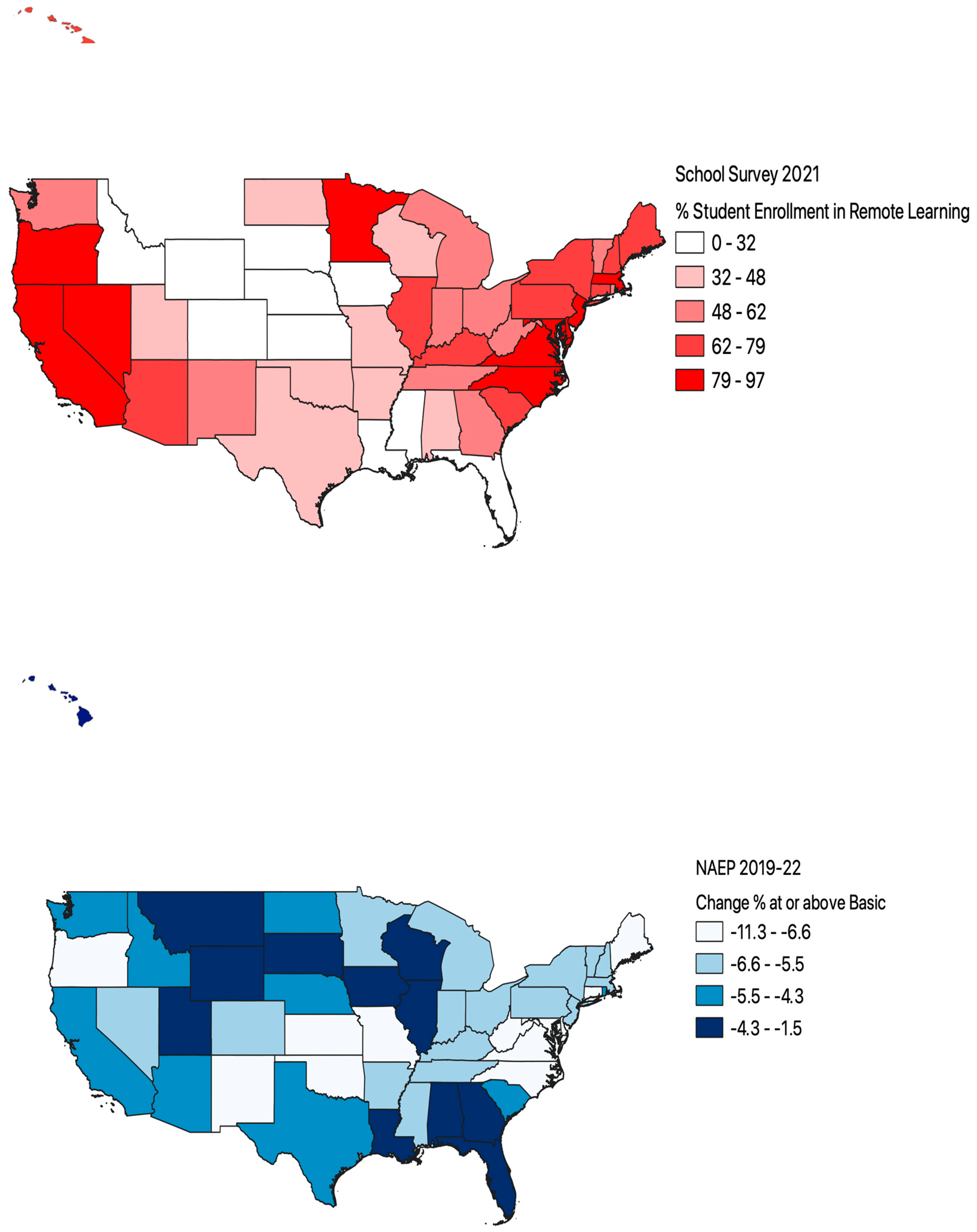

4.1. Remote Learning Trends

4.2. Academic Achievement Trends

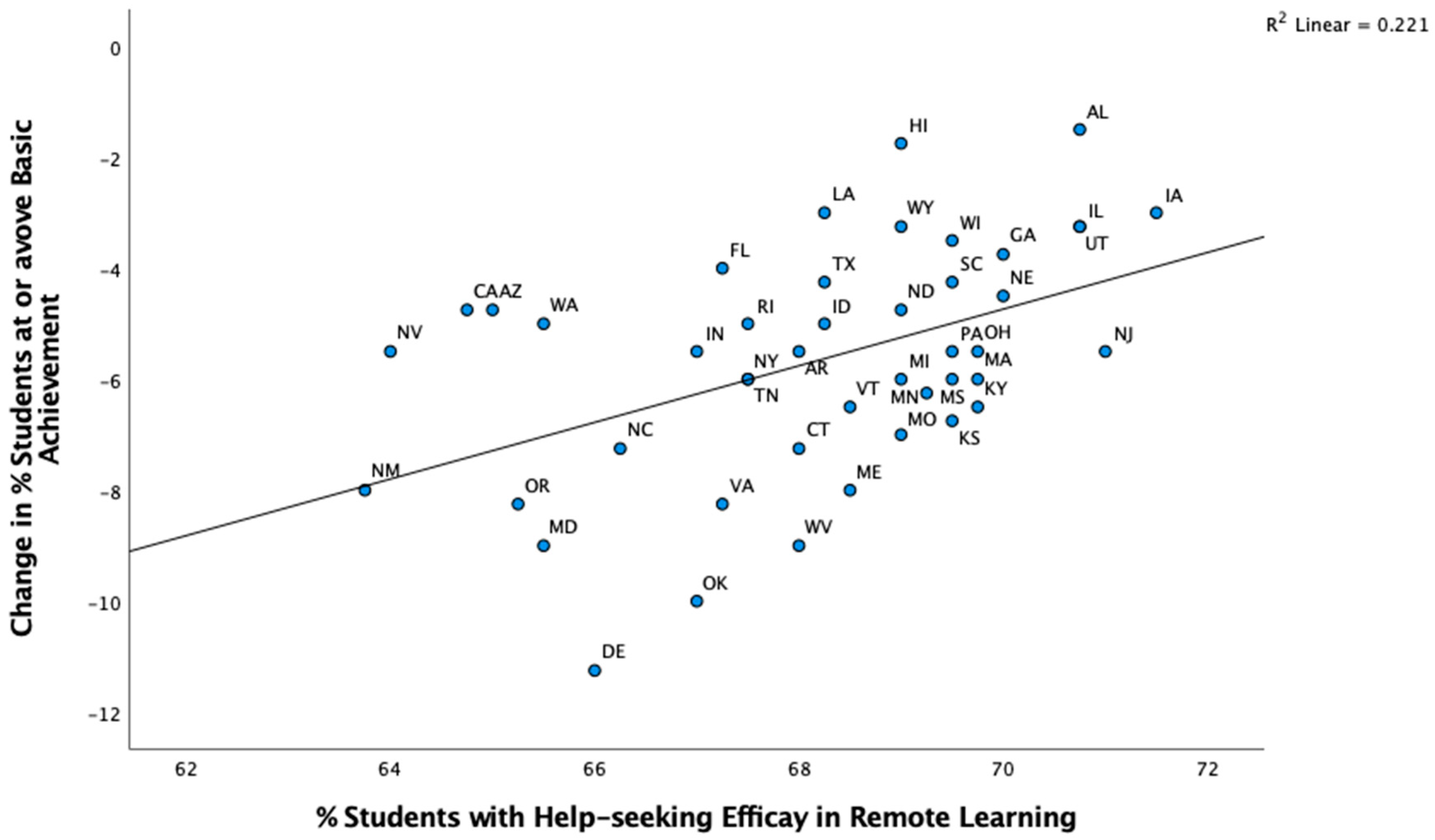

4.3. Association Between Remote Learning and Academic Achievement Trends

4.4. Case Study

5. Discussion

5.1. Summary of the Key Results

5.2. Limitations

5.3. Implications

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Description of Key Variables, Descriptive Statistics, and Data Sources

| | Remote Learning Enrollment | Quiet Learning Place at Home | Internet Access at Home | Digital Learning Devices at Home | Remote Learning Teacher Help Availability |
|---|---|---|---|---|---|
| Quiet Learning Place at Home | 0.36 * | | | | |
| Internet Access at Home | 0.10 | 0.27 * | | | |
| Digital Learning Devices at Home | 0.71 *** | 0.45 ** | −0.27 | | |
| Remote Learning Teacher Help | 0.47 ** | 0.50 *** | 0.16 | 0.37 * | |
| % Minority (Non-White) | 0.23 | −0.40 ** | −0.27 ^ | 0.07 | −0.31 * |
| % Poverty | −0.31 ^ | −0.55 *** | 0.22 | −0.50 *** | −0.29 |
| % Vaccination | 0.61 ** | 0.37 * | −0.20 | 0.57 *** | −0.31 * |
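The matrix above flags coefficients with significance symbols, but neither the symbol legend nor the number of observations is reproduced here. As a rough reading aid, the sketch below converts a correlation r into its t statistic using t = r·sqrt(n − 2)/sqrt(1 − r²). It assumes the entries are Pearson correlations and uses a hypothetical n = 50 (one observation per state); the function name and the sample size are illustrative assumptions, not details confirmed by the article.

```python
import math


def correlation_t_stat(r: float, n: int) -> float:
    """Return the t statistic for a Pearson correlation r from n observations.

    Uses t = r * sqrt(n - 2) / sqrt(1 - r^2), with n - 2 degrees of freedom.
    """
    if n <= 2:
        raise ValueError("n must be greater than 2")
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)


# ASSUMPTION: n = 50 is a placeholder (one row per state); the excerpt
# does not state the actual number of observations.
ASSUMED_N = 50

# Coefficients copied from the matrix above (markers omitted).
examples = [
    ("Quiet Learning Place vs. Remote Learning Enrollment", 0.36),
    ("Digital Learning Devices vs. Remote Learning Enrollment", 0.71),
    ("% Poverty vs. Quiet Learning Place", -0.55),
]

for label, r in examples:
    t = correlation_t_stat(r, ASSUMED_N)
    print(f"{label}: r = {r:+.2f}, t = {t:+.2f} (df = {ASSUMED_N - 2})")
```

Comparing each t value against a t table for n − 2 degrees of freedom gives an approximate check on the markers, valid only to the extent that the assumed n matches the study's actual sample.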

| Predictors | Control for Covariates | Reading and Math Combined (Grades 4 and 8 Combined) | Reading (Grade 4) | Reading (Grade 8) | Math (Grade 4) | Math (Grade 8) |
|---|---|---|---|---|---|---|
| Remote Learning Enrollment (School Reported) | No | −0.44 ** | −0.51 ** | 0.07 | −0.48 ** | −0.34 * |
| | Yes | −0.52 ** | −0.58 ** | −0.14 | −0.49 * | −0.41 ^ |
| Remote Learning Experience (Student Reported) | No | −0.32 * | −0.25 | −0.001 | −0.17 | −0.42 ** |
| | Yes | −0.39 * | −0.27 ^ | −0.16 | −0.11 | −0.46 ** |
| High-Speed Internet Access at Home | No | −0.17 | −0.01 | −0.41 ** | −0.08 | 0.12 |
| | Yes | −0.09 | −0.03 | −0.10 | −0.15 | −0.15 |
| Digital Learning Devices at Home | No | 0.00 | 0.02 | 0.10 | −0.06 | −0.19 |
| | Yes | −0.09 | −0.02 | 0.07 | 0.03 | 0.16 |
| Remote Learning Teacher Help Availability | No | −0.10 | 0.14 | −0.20 | 0.13 | −0.09 |
| | Yes | −0.08 | 0.19 | −0.21 | −0.09 | −0.15 |
| Remote Learning Help-Seeking Efficacy | No | 0.47 ** | 0.10 | 0.05 | 0.43 ** | 0.26 ^ |
| | Yes | 0.65 *** | 0.52 ** | 0.23 | 0.55 ** | 0.25 |
| Online Resources Search Efficacy | No | 0.32 * | 0.22 | 0.13 | 0.23 | 0.03 |
| | Yes | 0.30 ^ | 0.30 * | 0.10 | 0.28 ^ | 0.03 |
| Remote Learning Difficulty | No | −0.18 ^ | −0.22 | 0.01 | −0.40 ** | −0.34 ** |
| | Yes | −0.27 ^ | −0.21 | 0.00 | −0.59 ** | −0.33 * |

| Category | States | School Closure and Reopening Policies (Fall 2021) | Remote Learning Enrollment (School-Reported) | Remote Learning Experience (Student-Reported) | Remote Learning Teacher Help Availability | Remote Learning Help-Seeking Efficacy | Online Resources Search Efficacy | Academic Achievement Gain (NAEP Basic or Above) |
|---|---|---|---|---|---|---|---|---|
| Lower Remote Learning Rates and Smaller Learning Losses | Iowa | Full school reopening ordered | 26% | 57% | 59% | 72% | 62% | −3 pp |
| | Florida | Full school reopening ordered | 29% | 62% | 57% | 67% | 62% | −4 pp |
| Higher Remote Learning Rates and Larger Learning Losses | Delaware | Partial school closure ordered | 95% | 69% | 59% | 66% | 60% | −11 pp |
| | New Mexico | Partial school closure ordered | 63% | 66% | 59% | 58% | 64% | −8 pp |
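The contrast between the two case-study categories can be summarized with simple group averages. The sketch below uses only the school-reported enrollment and achievement-change values transcribed from the table above; the group keys (`lower_remote`, `higher_remote`) and the function name are hypothetical labels introduced for illustration. With two states per group, this is a descriptive summary only, not a statistical test.

```python
from statistics import mean

# Values transcribed from the case-study table above.
# Fields: state, group label (hypothetical key), school-reported remote
# learning enrollment (%), change in NAEP Basic-or-above rate (pp).
CASES = [
    ("Iowa", "lower_remote", 26, -3),
    ("Florida", "lower_remote", 29, -4),
    ("Delaware", "higher_remote", 95, -11),
    ("New Mexico", "higher_remote", 63, -8),
]


def group_means(cases):
    """Average enrollment and achievement change within each group."""
    summary = {}
    for group in sorted({row[1] for row in cases}):
        rows = [row for row in cases if row[1] == group]
        summary[group] = {
            "enrollment_pct": mean(row[2] for row in rows),
            "achievement_change_pp": mean(row[3] for row in rows),
        }
    return summary


summary = group_means(CASES)
for group, stats in summary.items():
    print(
        f"{group}: mean enrollment = {stats['enrollment_pct']}%, "
        f"mean achievement change = {stats['achievement_change_pp']} pp"
    )
```

The same pattern extends to the other columns (student-reported experience, teacher help availability, and the two efficacy measures) by adding fields to each tuple.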

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lee, J.; Seo, Y.S. What Worked for the U.S. Students’ Learning During the Pandemic? Cross-State Comparisons of Remote Learning Policies, Practices, and Outcomes. Educ. Sci. 2025, 15, 139. https://doi.org/10.3390/educsci15020139
