Chilean Teachers’ Knowledge of and Experience with Artificial Intelligence as a Pedagogical Tool

Abstract

1. Introduction

2. Conceptual Framework

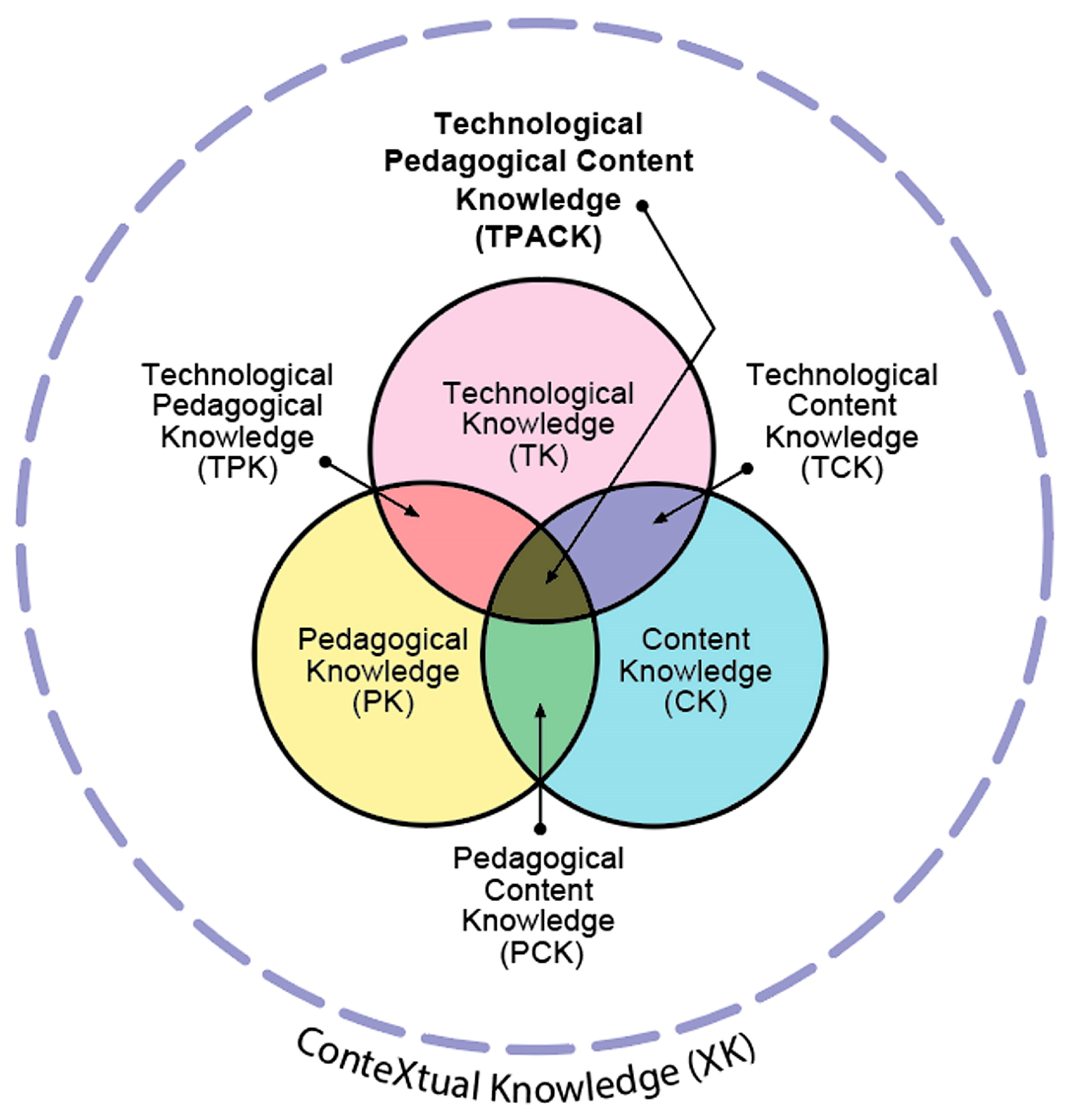

2.1. TPACK Framework

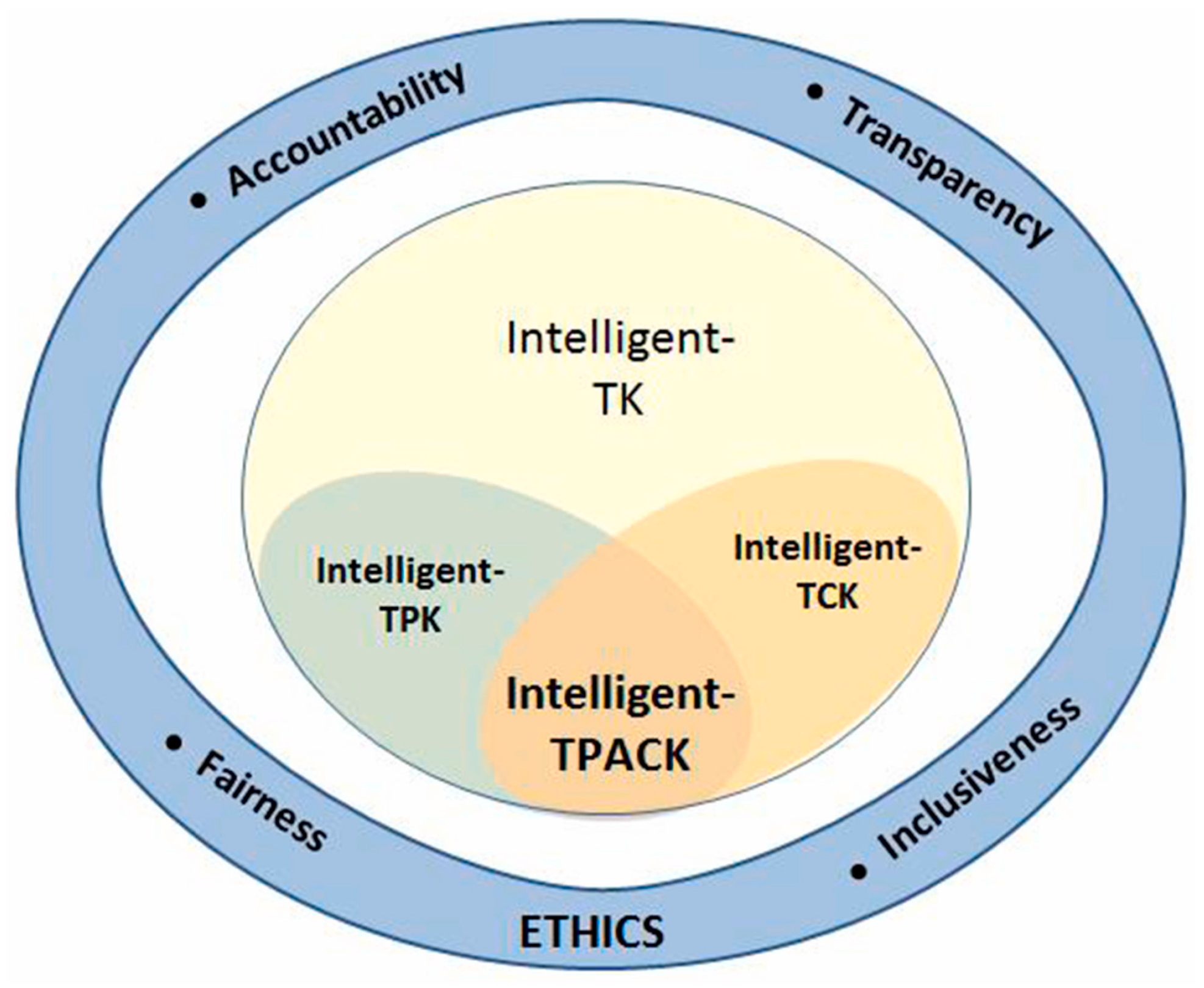

2.2. Intelligent-TPACK Framework

“Intelligent-TK tackles the knowledge to interact with AI-based tools and to use fundamental functionalities of AI-based tools. This component aims to measure teachers’ familiarization level with the technical capacities of AI-based tools. Intelligent-TPK addresses the knowledge of pedagogical affordances of AI-based tools, such as providing personal and timely feedback and monitoring students’ learning. Additionally, Intelligent-TPK evaluates teachers’ understanding of alerting (or notification) and how they interpret messages from AI-based tools. Intelligent-TCK focuses on the knowledge of field-specific AI tools. It assesses how well teachers incorporate AI tools to update their content knowledge. This component also addresses teachers’ understanding of particular technologies that are best suited for subject-matter learning in their specific field. Intelligent-TPACK is considered the core area of knowledge. It evaluates teachers’ professional knowledge to choose and use appropriate AI-based tools (e.g., intelligent tutoring systems) for implementing teaching strategies (e.g., monitoring and providing timely feedback) to achieve instructional goals in a specific domain. Ethics evaluates the teacher’s judgment regarding the use of AI-based tools. The evaluation focuses on transparency, fairness, accountability, and inclusiveness.”

3. Methods

3.1. Design and Research Questions

- What levels of technological, pedagogical, content, and ethical knowledge are reported by a sample of teachers from the Metropolitan Region (Chile) regarding the use of AI in education?

- Are there significant differences in teachers’ knowledge of AI according to sociodemographic, professional, and disciplinary variables such as gender, age, or subject taught?

- What professional teacher profiles emerge from the combination of technological, pedagogical, content, and ethical knowledge regarding the integration of AI in their professional practice?

3.2. Implementation of the Adapted Questionnaire

3.2.1. Population and Study Sample

3.2.2. Questionnaire Characteristics

3.2.3. Data Analysis Strategies

4. Results

4.1. What Levels of Technological, Pedagogical, Content, and Ethical Knowledge Are Reported by a Sample of Teachers from the Metropolitan Region (Chile) Regarding the Use of AI in Education?

4.2. Are There Significant Differences in Teachers’ Knowledge of AI According to Sociodemographic, Professional, and Disciplinary Variables Such as Gender, Age, or Subject Taught?

4.2.1. Group Differences Analysis

4.2.2. Correlation Analysis

4.2.3. Multiple Linear Regression

4.3. What Professional Teacher Profiles Emerge from the Combination of Technological, Pedagogical, Content, and Ethical Knowledge Regarding the Integration of AI in Their Professional Practice?

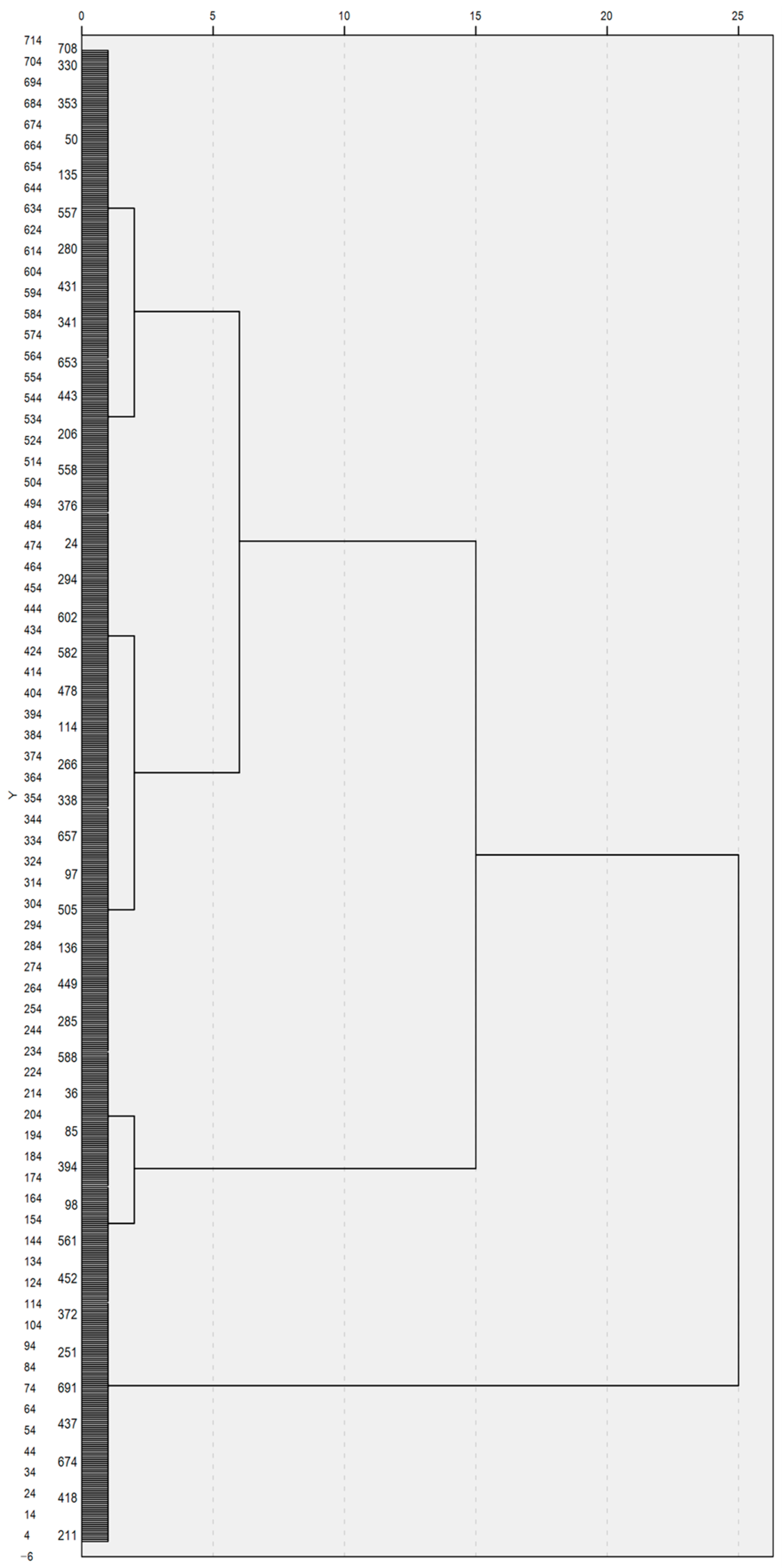

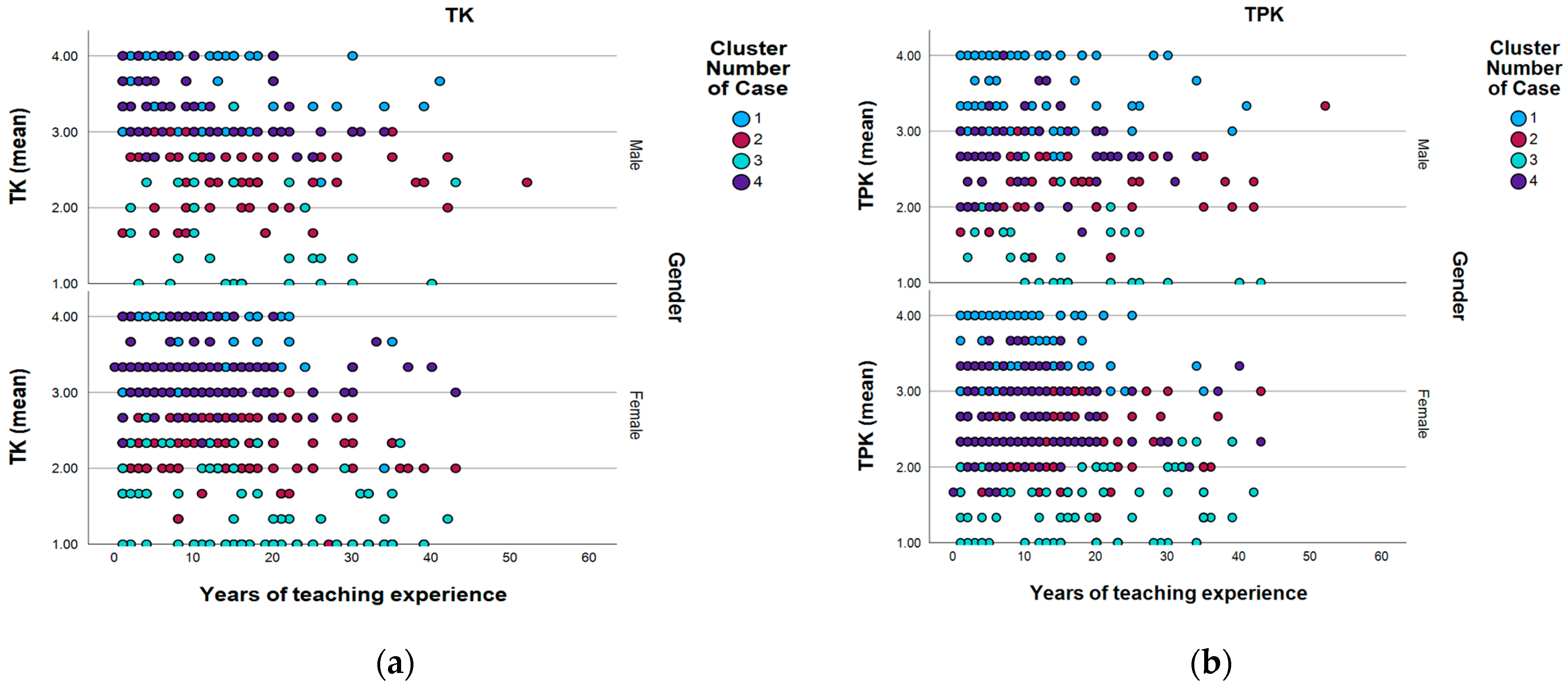

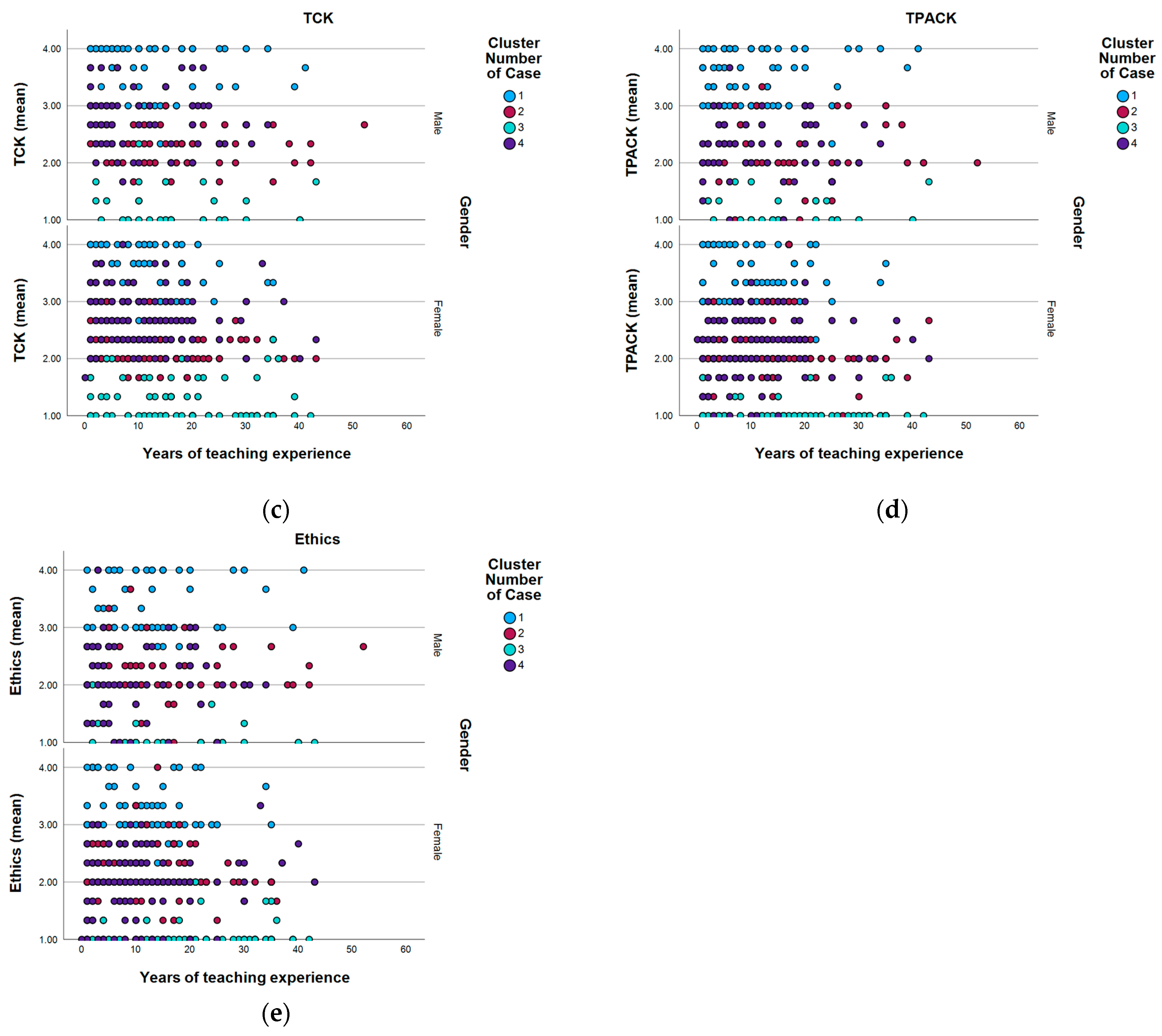

Cluster Analysis

5. Discussion and Conclusions

5.1. What Levels of Technological, Pedagogical, Content, and Ethical Knowledge Are Reported by a Sample of Teachers from the Metropolitan Region (Chile) Regarding the Use of AI in Education?

5.2. Are There Significant Differences in Teachers’ Knowledge of AI According to Sociodemographic, Professional, and Disciplinary Variables Such as Gender, Age, or Subject?

5.3. What Professional Teacher Profiles Emerge from the Combination of Technological, Pedagogical, Content, and Ethical Knowledge Regarding AI Integration in Their Professional Practice?

5.4. Key Implications

5.4.1. Implications for Public Policy

5.4.2. Implications for Future Research

5.5. Limitations and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| AI | Artificial Intelligence |

| AIED | Artificial Intelligence in Education |

| TPACK | Technological Pedagogical and Content Knowledge |

| TK | Technological Knowledge |

| TCK | Technological Content Knowledge |

| TPK | Technological Pedagogical Knowledge |

| PCK | Pedagogical Content Knowledge |

| STEM | Science, Technology, Engineering, and Mathematics |

Appendix A

Validation Procedures for the Adapted Questionnaire

- Preliminary Analysis

| Item | Min | Max | Mean | SD | Med | Skew | Kurt | CITC |

|---|---|---|---|---|---|---|---|---|

| TK1 | 1 | 3 | 1.95 | 0.582 | 2.0 | −0.001 | 0.157 | 0.664 |

| TK2 | 1 | 3 | 1.98 | 0.468 | 2.0 | −0.090 | 2.031 | 0.699 |

| TK3 | 1 | 3 | 1.88 | 0.504 | 2.0 | −0.243 | 0.945 | 0.616 |

| TK4 | 1 | 3 | 2.00 | 0.494 | 2.0 | 0.000 | 1.514 | 0.741 |

| TK5 | 1 | 3 | 2.02 | 0.468 | 2.0 | 0.090 | 2.031 | 0.824 |

| TPK1 | 1 | 4 | 2.90 | 0.484 | 3.0 | −1.626 | 6.373 | 0.529 |

| TPK2 | 2 | 4 | 2.90 | 0.484 | 3.0 | −0.274 | 1.389 | 0.561 |

| TPK3 | 2 | 4 | 2.98 | 0.517 | 3.0 | −0.040 | 1.078 | 0.548 |

| TPK4 | 2 | 4 | 2.93 | 0.407 | 3.0 | −0.582 | 3.317 | 0.254 |

| TPK5 | 2 | 4 | 2.90 | 0.484 | 3.0 | −0.274 | 1.389 | 0.529 |

| TPK6 | 1 | 4 | 2.83 | 0.490 | 3.0 | −1.720 | 4.655 | 0.485 |

| TPK7 | 1 | 4 | 2.88 | 0.453 | 3.0 | −2.187 | 7.855 | 0.260 |

| TCK1 | 2 | 4 | 2.98 | 0.604 | 3.0 | 0.008 | −0.068 | 0.673 |

| TCK2 | 2 | 4 | 2.76 | 0.576 | 2.0 | 0.039 | −0.286 | 0.781 |

| TCK3 | 2 | 4 | 2.79 | 0.565 | 2.0 | −0.026 | −0.134 | 0.765 |

| TCK4 | 2 | 4 | 2.79 | 0.520 | 2.0 | −0.268 | 0.098 | 0.664 |

| TPACK1 | 1 | 3 | 1.64 | 0.577 | 1.0 | 0.204 | −0.667 | 0.612 |

| TPACK2 | 1 | 3 | 1.79 | 0.645 | 1.0 | 0.228 | −0.585 | 0.700 |

| TPACK3 | 1 | 3 | 1.60 | 0.701 | 1.0 | 0.761 | −0.578 | 0.816 |

| TPACK4 | 1 | 3 | 1.76 | 0.532 | 1.0 | −0.192 | −0.127 | 0.563 |

| TPACK5 | 1 | 3 | 1.79 | 0.565 | 1.0 | −0.026 | −0.134 | 0.779 |

| TPACK6 | 1 | 3 | 1.60 | 0.701 | 1.0 | 0.761 | −0.578 | 0.744 |

| TPACK7 | 1 | 4 | 1.83 | 0.696 | 1.0 | 0.694 | 1.079 | 0.614 |

| ETHIC1 | 1 | 4 | 1.45 | 0.670 | 1.0 | 1.714 | 3.803 | 0.420 |

| ETHIC2 | 1 | 3 | 1.21 | 0.470 | 1.0 | 2.154 | 4.213 | 0.521 |

| ETHIC3 | 1 | 3 | 1.33 | 0.612 | 1.0 | 1.692 | 1.837 | 0.450 |

| ETHIC4 | 1 | 3 | 1.19 | 0.455 | 1.0 | 2.416 | 5.583 | 0.575 |

| Scale | k (Items) | Cronbach’s α | McDonald’s ω | CITC | Inter-Item r |

|---|---|---|---|---|---|

| TK | 5 | 0.874 | 0.876 | 0.616–0.824 | 0.48–0.74 |

| TPK | 7 | 0.740 | 0.683 | 0.254–0.561 | −0.06–0.65 |

| TCK | 4 | 0.868 | 0.871 | 0.664–0.781 | 0.53–0.74 |

| TPACK | 7 | 0.891 | 0.895 | 0.563–0.816 | 0.35–0.75 |

| Ethics | 4 | 0.691 | 0.685 | 0.420–0.575 | 0.30–0.60 |
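
The α values in the table above follow the usual variance-ratio definition of Cronbach’s alpha. The sketch below shows that computation on a small made-up response matrix; the function name `cronbach_alpha` and the `demo` data are illustrative assumptions and are not taken from the study or its software.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(rows[0])                      # number of items in the scale
    items = list(zip(*rows))              # transpose: one tuple per item
    item_var_sum = sum(variance(col) for col in items)   # sum of item variances
    total_var = variance([sum(r) for r in rows])         # variance of sum scores
    return (k / (k - 1)) * (1 - item_var_sum / total_var)


# Hypothetical data: 6 respondents x 3 items (not from the study).
demo = [
    [1.0, 2.0, 2.0],
    [2.0, 2.0, 3.0],
    [3.0, 3.0, 3.0],
    [2.0, 3.0, 2.0],
    [1.0, 1.0, 2.0],
    [3.0, 3.0, 4.0],
]
print(round(cronbach_alpha(demo), 3))
```

Sample variances (n − 1 denominator) are used for both the items and the sum score, so the ratio does not depend on that choice as long as it is applied consistently.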


- Verification of factorability


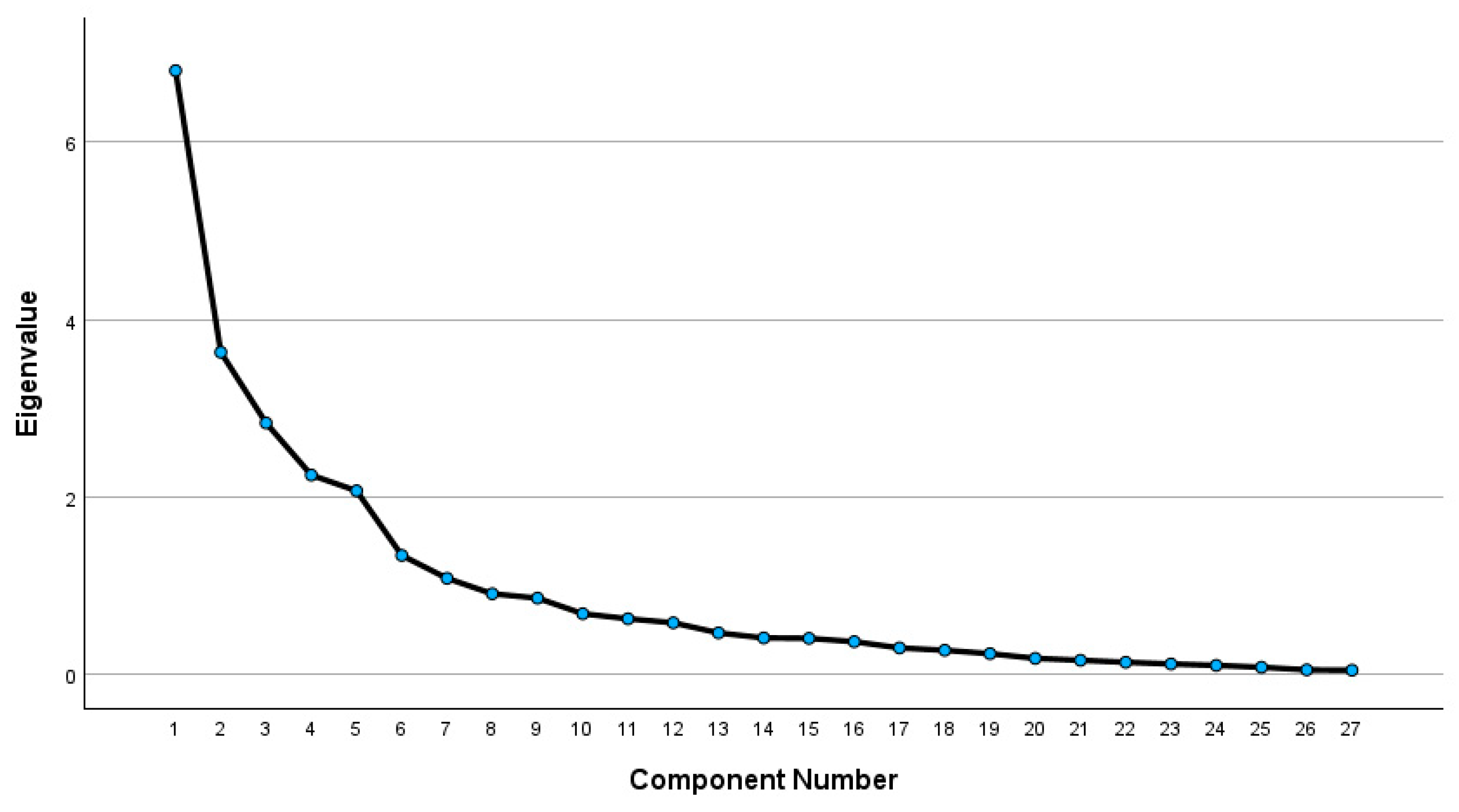

- Principal Components Analysis (PCA)

| Element | Result |

|---|---|

| Input matrix | Pearson correlations (listwise deletion) |

| Determinant | 4.29 × 10⁻¹⁰ |

| Sampling adequacy | KMO = 0.635 |

| Sphericity (Bartlett) | χ²(351) = 672.244, p < 0.001 |

| Extraction | PCA |

| Rotation | Varimax (orthogonal); Kaiser normalization |

| Retention criteria | Eigenvalue > 1 + Scree |

| Convergence | 19 iterations |

| Number of components retained | 7 |

| Explained variance | C1 16.44%, C2 13.90%, C3 11.33%, C4 9.85%, C5 8.51%, C6 7.12%, C7 6.99% |
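
As a consistency check on the factorability figures above, Bartlett’s statistic can be recomputed from the determinant of the correlation matrix with the standard formula χ² = −(n − 1 − (2p + 5)/6) · ln|R| and p(p − 1)/2 degrees of freedom. The sketch below is illustrative only: the function name is hypothetical, p = 27 is the item count from the tables in this appendix, and n = 42 is an assumed sample size chosen for illustration, since this appendix does not state the sample size.

```python
import math


def bartlett_sphericity(det_r, n, p):
    """Bartlett's test statistic and degrees of freedom from det(R)."""
    dof = p * (p - 1) // 2                               # p(p - 1) / 2
    chi2 = -(n - 1 - (2 * p + 5) / 6) * math.log(det_r)  # ln of the determinant
    return chi2, dof


# det(R) is taken from the table; n = 42 is an assumption, not a reported value.
chi2, dof = bartlett_sphericity(4.29e-10, n=42, p=27)
print(dof, round(chi2, 1))
```

With 27 items the degrees of freedom come out to 351, matching the reported χ²(351).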

| Component | Highest-Loading Items (Main) |

|---|---|

| C1–TPACK | TPACK5 (0.888), TPACK3 (0.835), TPACK6 (0.754), TPACK2 (0.752), TPACK4 (0.686), TPACK1 (0.632), TPACK7 (0.552) |

| C2–TK | TK5 (0.878), TK4 (0.840), TK2 (0.746), TK3 (0.741), TK1 (0.657) |

| C3–TCK (core) | TCK3 (0.862), TCK1 (0.827), TCK2 (0.802) |

| C4–TCK (extension) | TCK4 (0.620) |

| C5–TPK (group 1) | TPK1 (0.827), TPK5 (0.813), TPK6 (0.764), TPK2 (0.616) |

| C6–Ethics | E4 (0.750), E2 (0.726), E3 (0.707), E1 (0.502) |

| C7–TPK (group 2) | TPK4 (0.813), TPK7 (0.785), TPK3 (0.711) |

| Item | Extraction | Selected |

|---|---|---|

| TK1 | 0.643 | No |

| TK2 | 0.675 | No |

| TK3 | 0.650 | Yes |

| TK4 | 0.818 | Yes |

| TK5 | 0.835 | Yes |

| TPK1 | 0.773 | Yes |

| TPK2 | 0.801 | No |

| TPK3 | 0.747 | No |

| TPK4 | 0.729 | Yes |

| TPK5 | 0.832 | No |

| TPK6 | 0.708 | Yes |

| TPK7 | 0.810 | No |

| TCK1 | 0.764 | No |

| TCK2 | 0.812 | Yes |

| TCK3 | 0.803 | Yes |

| TCK4 | 0.705 | Yes |

| TPACK1 | 0.550 | No |

| TPACK2 | 0.736 | No |

| TPACK3 | 0.768 | Yes |

| TPACK4 | 0.567 | No |

| TPACK5 | 0.816 | Yes |

| TPACK6 | 0.743 | Yes |

| TPACK7 | 0.815 | No |

| E1 | 0.761 | Yes |

| E2 | 0.756 | No |

| E3 | 0.680 | Yes |

| E4 | 0.718 | Yes |

| Total | 27 items | 15 items |


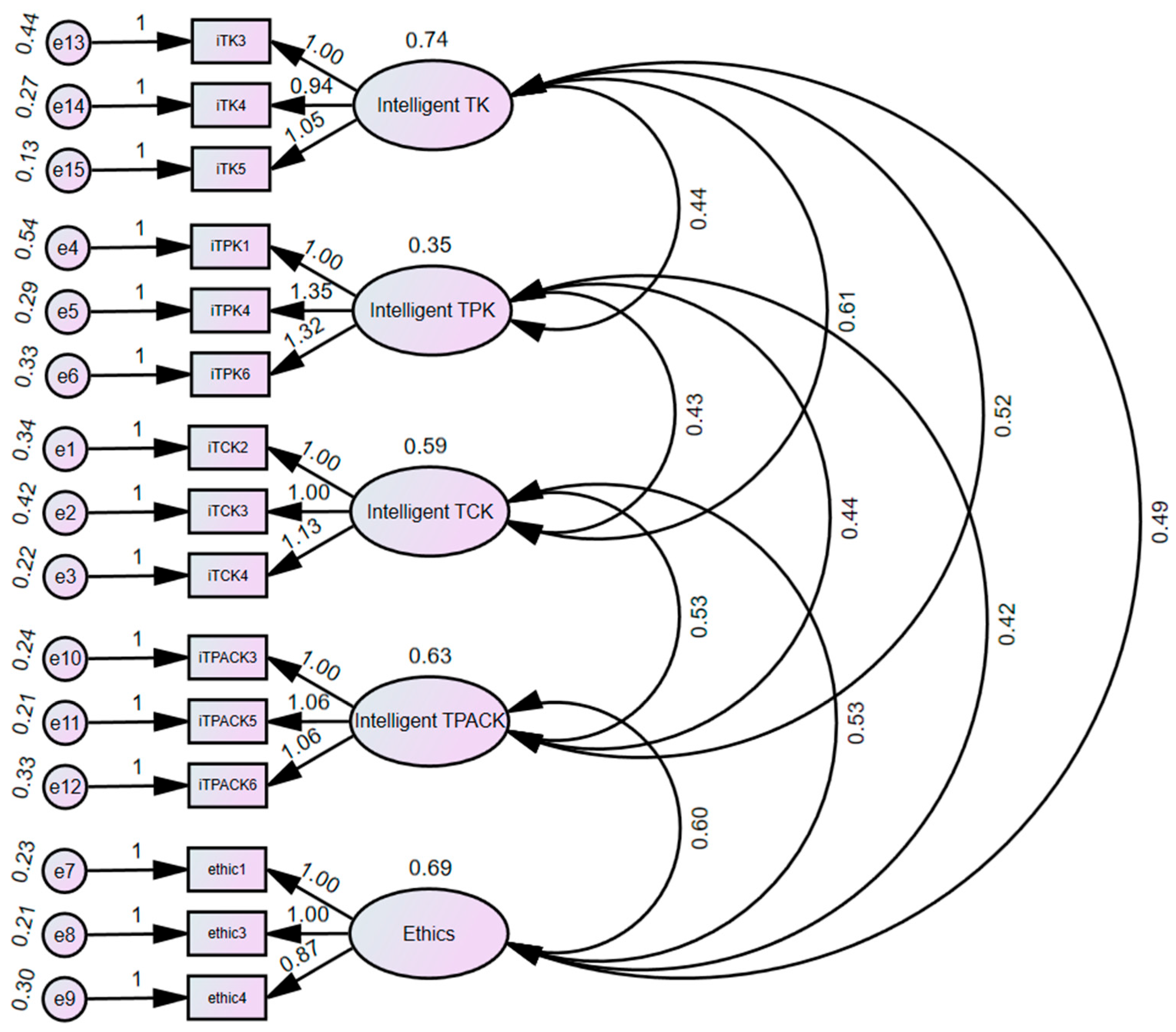

- Confirmatory Factor Analysis

| Construct | Std. Loadings (Min–Max) | CR | AVE |

|---|---|---|---|

| TK | 0.79–0.93 | 0.891 | 0.733 |

| TPK | 0.63–0.83 | 0.802 | 0.577 |

| TCK | 0.77–0.88 | 0.856 | 0.666 |

| TPACK | 0.83–0.88 | 0.888 | 0.726 |

| Ethics | 0.80–0.87 | 0.882 | 0.714 |
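
CR and AVE in the table above are conventionally derived from the standardized loadings of each construct: AVE is the mean squared loading, and CR is (Σλ)² / ((Σλ)² + Σ(1 − λ²)). The following sketch applies those formulas to a hypothetical set of loadings. The individual loadings behind the table are not reported here, so the input values and function names are assumptions for illustration only.

```python
def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings."""
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR from standardized loadings, with error variance 1 - loading^2."""
    total = sum(loadings)
    error = sum(1 - l * l for l in loadings)
    return total * total / (total * total + error)


# Hypothetical three-item construct (not values from the study).
hypothetical_loadings = [0.80, 0.80, 0.80]
ave = average_variance_extracted(hypothetical_loadings)
cr = composite_reliability(hypothetical_loadings)
print(round(ave, 3), round(cr, 3))
```

Commonly cited thresholds are AVE ≥ 0.50 and CR ≥ 0.70; every construct in the table exceeds both.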

Appendix B

Complementary Data

| Category | TK | TPK | TCK | TPACK | Ethics |

|---|---|---|---|---|---|

| Male | 2.67 (1.00) | 2.67 (1.00) | 2.67 (1.00) | 2.33 (1.00) | 2.33 (1.00) |

| Female | 2.67 (1.00) | 2.67 (1.00) | 2.67 (1.00) | 2.33 (1.00) | 2.33 (1.00) |

| Primary | 2.33 (1.00) | 2.33 (1.00) | 2.33 (1.00) | 2.00 (1.00) | 2.00 (1.33) |

| Secondary | 3.00 (1.00) | 2.67 (1.33) | 2.67 (1.00) | 2.33 (1.00) | 2.33 (1.33) |

| Public 1 | 2.33 (1.00) | 2.33 (1.00) | 2.33 (1.00) | 2.00 (1.00) | 2.00 (1.00) |

| Public 2 | 2.67 (1.00) | 2.67 (1.00) | 2.67 (1.00) | 2.33 (1.00) | 2.33 (1.00) |

| Private 1 | 2.67 (1.00) | 2.67 (1.00) | 2.67 (1.00) | 2.33 (1.00) | 2.33 (1.00) |

| Private 2 | 2.67 (1.00) | 2.67 (1.00) | 2.67 (1.00) | 2.33 (1.00) | 2.33 (1.00) |

| Private 3 | 3.00 (1.00) | 3.00 (1.00) | 3.00 (1.00) | 2.67 (1.00) | 2.67 (1.00) |

| 20–30 years old | 2.33 (1.00) | 2.33 (1.00) | 2.33 (1.00) | 2.00 (1.00) | 2.00 (1.00) |

| 30–40 years old | 3.00 (1.00) | 2.67 (1.33) | 2.67 (1.00) | 2.33 (1.00) | 2.33 (1.00) |

| 40–50 years old | 3.00 (1.00) | 2.67 (1.00) | 2.67 (1.00) | 2.33 (1.00) | 2.33 (1.00) |

| 50–60 years old | 3.00 (1.00) | 3.00 (1.00) | 3.00 (1.00) | 2.67 (1.00) | 2.67 (1.00) |

| 60–70 years old | 2.33 (1.00) | 2.33 (1.00) | 2.33 (1.00) | 2.00 (1.00) | 2.00 (1.00) |

| 70–80 years old | 2.50 (1.00) | 2.83 (1.00) | 2.33 (1.00) | 2.00 (1.00) | 2.33 (1.00) |

| Visual arts | 3.17 (0.42) | 2.67 (0.75) | 3.00 (0.83) | 2.50 (0.92) | 2.00 (0.42) |

| Biology | 3.33 (1.34) | 2.67 (1.34) | 2.67 (1.50) | 2.33 (1.33) | 2.33 (1.00) |

| Natural sciences | 2.33 (1.58) | 2.33 (1.00) | 2.50 (1.25) | 2.00 (1.17) | 2.00 (1.33) |

| Science for Citizenship | 4.00 (1.67) | 4.00 (1.50) | 4.00 (2.00) | 3.67 (2.00) | 2.67 (1.83) |

| Physical Education | 2.83 (1.00) | 2.67 (1.33) | 2.50 (1.25) | 2.33 (1.17) | 2.50 (1.25) |

| Philosophy | 3.33 (0.58) | 2.67 (0.67) | 3.00 (1.33) | 2.33 (1.00) | 2.67 (1.00) |

| Physics | 3.00 (1.33) | 3.00 (1.33) | 2.67 (1.00) | 2.67 (2.00) | 2.33 (1.33) |

| History | 2.67 (1.33) | 2.33 (1.25) | 2.33 (1.33) | 2.00 (1.33) | 2.00 (1.83) |

| English | 3.17 (1.33) | 2.67 (1.00) | 2.83 (1.33) | 2.33 (1.00) | 2.00 (1.00) |

| Indigenous Culture | 1.33 (2.42) | 1.50 (2.33) | 1.50 (2.00) | 1.33 (2.42) | 1.50 (2.50) |

| Communication | 2.33 (2.33) | 2.33 (1.33) | 2.33 (1.67) | 2.00 (1.67) | 2.00 (1.33) |

| Mathematics | 2.67 (1.00) | 2.67 (0.67) | 2.33 (1.00) | 2.00 (1.00) | 2.00 (1.67) |

| Music | 3.33 (0.50) | 3.00 (0.50) | 2.67 (0.33) | 2.33 (0.50) | 2.00 (0.67) |

| Counseling | 2.33 (1.67) | 2.00 (1.00) | 2.33 (1.33) | 2.00 (1.67) | 2.00 (1.00) |

| Chemistry | 3.33 (1.00) | 2.33 (0.83) | 2.67 (1.00) | 2.00 (0.67) | 2.00 (1.00) |

| Religion | 2.67 (1.00) | 2.33 (1.00) | 2.33 (0.67) | 2.33 (1.50) | 2.67 (1.50) |

| Technology | 2.67 (2.00) | 3.00 (1.00) | 2.33 (1.83) | 4.00 (1.33) | 2.67 (0.67) |

| Variable | TK | TPK | TCK | TPACK | ETHIC |

|---|---|---|---|---|---|

| Male | 2.85 (0.84) | 2.62 (0.83) | 2.58 (0.88) | 2.38 (0.90) | 2.28 (0.91) |

| Female | 2.57 (0.93) | 2.46 (0.80) | 2.37 (0.85) | 2.15 (0.86) | 2.01 (0.79) |

| Primary | 2.42 (0.92) | 2.37 (0.78) | 2.24 (0.81) | 2.06 (0.87) | 1.89 (0.80) |

| Secondary | 2.84 (0.86) | 2.62 (0.81) | 2.59 (0.87) | 2.34 (0.86) | 2.24 (0.84) |

| Public 1 | 2.70 (0.84) | 2.57 (0.79) | 2.50 (0.81) | 2.24 (0.83) | 2.10 (0.82) |

| Public 2 | 2.63 (0.91) | 2.45 (0.83) | 2.41 (0.93) | 2.28 (0.89) | 2.22 (0.90) |

| Private 1 | 2.57 (0.94) | 2.41 (0.83) | 2.34 (0.89) | 2.17 (0.92) | 2.01 (0.85) |

| Private 2 | 2.53 (1.08) | 2.56 (0.67) | 2.27 (0.90) | 2.04 (0.79) | 2.12 (0.88) |

| Private 3 | 2.90 (0.85) | 2.67 (0.80) | 2.69 (0.79) | 2.36 (0.88) | 2.21 (0.82) |

| 20–30 | 3.05 (0.73) | 2.72 (0.82) | 2.80 (0.84) | 2.39 (0.91) | 2.29 (0.87) |

| 30–40 | 2.95 (0.78) | 2.70 (0.75) | 2.68 (0.77) | 2.38 (0.79) | 2.27 (0.79) |

| 40–50 | 2.53 (0.94) | 2.36 (0.86) | 2.29 (0.87) | 2.12 (0.82) | 2.01 (0.83) |

| 50–60 | 2.32 (0.94) | 2.33 (0.73) | 2.23 (0.87) | 2.01 (0.89) | 1.95 (0.86) |

| 60–70 | 2.02 (0.82) | 2.26 (0.75) | 1.91 (0.72) | 2.10 (1.12) | 1.70 (0.81) |

| 70–80 | 2.50 (0.24) | 2.83 (0.71) | 2.33 (0.47) | 2.00 (0.00) | 2.33 (0.47) |

| Visual arts | 3.22 (0.27) | 2.72 (0.44) | 2.89 (0.50) | 2.56 (0.58) | 1.94 (0.53) |

| Biology | 3.14 (0.78) | 2.86 (0.85) | 2.85 (0.82) | 2.55 (0.85) | 2.35 (0.79) |

| Natural sciences | 2.58 (0.98) | 2.46 (0.84) | 2.45 (0.86) | 2.12 (0.89) | 2.00 (0.88) |

| Science for Citizenship | 3.11 (1.07) | 3.00 (1.26) | 2.94 (1.32) | 2.83 (1.35) | 2.33 (1.17) |

| Physical Education | 2.71 (0.87) | 2.69 (0.79) | 2.59 (0.75) | 2.51 (0.85) | 2.43 (0.79) |

| Philosophy | 3.13 (0.76) | 2.67 (0.83) | 2.80 (0.92) | 2.30 (0.90) | 2.47 (0.67) |

| Physics | 2.97 (0.84) | 2.71 (0.87) | 2.64 (0.96) | 2.30 (0.93) | 2.28 (0.87) |

| History | 2.57 (0.93) | 2.33 (0.85) | 2.27 (0.90) | 2.15 (0.87) | 2.08 (0.90) |

| English | 3.04 (0.78) | 2.79 (0.68) | 2.70 (0.75) | 2.42 (0.82) | 2.15 (0.73) |

| Indigenous Culture | 1.92 (1.42) | 2.00 (1.36) | 2.00 (1.41) | 1.92 (1.42) | 2.00 (1.41) |

| Communication | 2.35 (1.01) | 2.35 (0.86) | 2.26 (0.93) | 1.98 (0.83) | 1.94 (0.83) |

| Mathematics | 2.80 (0.71) | 2.61 (0.67) | 2.52 (0.72) | 2.17 (0.75) | 2.03 (0.83) |

| Music | 2.96 (0.56) | 2.73 (0.49) | 2.57 (0.56) | 2.51 (0.57) | 2.24 (0.47) |

| Counseling | 2.23 (0.85) | 2.01 (0.71) | 2.04 (0.75) | 2.00 (0.83) | 1.89 (0.78) |

| Chemistry | 3.02 (0.91) | 2.46 (0.75) | 2.48 (0.92) | 2.13 (0.79) | 2.17 (0.83) |

| Religion | 2.67 (0.58) | 2.52 (0.58) | 2.67 (0.60) | 2.48 (0.82) | 2.30 (0.84) |

| Technology | 2.79 (0.88) | 2.92 (0.77) | 2.69 (0.90) | 3.26 (0.92) | 2.75 (0.77) |

Appendix C

| AI-TK |

| TK3 I know how to initiate a task with AI tools using text or voice. |

| TK4 I have sufficient knowledge to use various AI tools. |

| TK5 I am familiar with AI tools and their technical capabilities. |

| AI-TPK |

| TPK1 I can understand the pedagogical contribution of AI tools to my teaching field. |

| TPK4 I know how to use AI tools to monitor my students’ learning. |

| TPK6 I can understand the notifications from AI tools to support my students’ learning. |

| AI-TCK |

| TCK2 I know various AI tools that are used by professionals in my subject. |

| TCK3 I can use AI tools to better understand the content of my subject. |

| TCK4 I know how to use AI tools specific to my subject. |

| AI-TPACK |

| TPACK3 In teaching my discipline, I know how to use different AI tools to provide real-time feedback. |

| TPACK5 I can teach lessons that appropriately combine teaching content, AI tools, and teaching strategies. |

| TPACK6 I can take a leadership role among my colleagues in integrating AI tools into a subject. |

| AI-ETHIC |

| E1 I can assess the extent to which AI tools consider my students’ individual differences during the teaching process (e.g., sex, gender, socioeconomic status, etc.). |

| E3 I can understand the justification for any decision made by an AI-based tool. |

| E4 I can identify who the developers responsible for the design and decision-making of AI-based tools are. |


- Wang, K. (2024). Pre-service teachers’ GenAI anxiety, technology self-efficacy, and TPACK: Their structural relations with behavioral intention to design GenAI-Assisted teaching. Behavioral Sciences, 14(5), 373. [Google Scholar] [CrossRef]

- Wang, Y., Nadler, E. O., Mao, Y., Adhikari, S., Wechsler, R. H., & Behroozi, P. (2021). Universe machine: Predicting galaxy star formation over seven decades of halo mass with zoom-in simulations. The Astrophysical Journal, 915(2), 116. [Google Scholar] [CrossRef]

- Williamson, B., & Eynon, R. (2020). Historical threads, missing links, and future directions in AI in education. Learning, Media and Technology, 45(3), 223–235. [Google Scholar] [CrossRef]

- Yan, L., Sha, L., Zhao, L., Li, Y., Martinez-Maldonado, R., Chen, G., Li, X., Jin, Y., & Gašević, D. (2024). Practical and ethical challenges of large language models in education: A systematic scoping review. British Journal of Educational Technology, 55, 90–112. [Google Scholar] [CrossRef]

- Yim, I. H. Y., & Su, J. (2025). Artificial intelligence (AI) learning tools in K-12 education: A scoping review. Journal of Computers in Education, 12, 93–131. [Google Scholar] [CrossRef]

- Zawacki-Richter, O., Marín, V. I., Bond, M., & Gouverneur, F. (2019). Systematic review of research on artificial intelligence applications in higher education—Where are the educators? International Journal of Educational Technology in Higher Education, 16(1), 1–27. [Google Scholar] [CrossRef]

- Zeng, Y., Wang, Y., & Li, S. (2022). The relationship between teachers’ information technology integration self-efficacy and TPACK: A meta-analysis. Frontiers in Psychology, 13, 1091017. [Google Scholar] [CrossRef]

- Zhang, K., & Aslan, A. B. (2021). AI technologies for education: Recent research & future directions. Computers and Education Artificial Intelligence, 2, 100025. [Google Scholar] [CrossRef]

- Zhao, J., Li, S., & Zhang, J. (2025). Understanding teachers’ adoption of AI technologies: An empirical study from Chinese middle schools. Systems, 13(4), 302. [Google Scholar] [CrossRef]

| Variables | Reference Population (N) | Reference Population (%) | Sample (N) | Sample (%) |
|---|---|---|---|---|
| Gender | | | | |
| Female | 46,235 | 66% | 464 | 65.2% |
| Male | 23,667 | 34% | 237 | 33.3% |
| Educational level | | | | |
| Primary | 29,205 | 42% | 290 | 40.9% |
| Secondary | 40,697 | 58% | 419 | 59.1% |
| School type | | | | |
| Public | 20,787 | 30% | 298 | 42% |
| Private | 49,115 | 70% | 411 | 58% |
| Geography | | | | |
| Urban | 67,840 | 97% | 658 | 92.8% |
| Rural | 2062 | 3% | 51 | 7.2% |
| Age (years old) | | | | |
| 20–30 | 6372 | 9% | 100 | 14.1% |
| 30–40 | 22,354 | 32% | 241 | 34.0% |
| 40–50 | 18,340 | 26% | 196 | 27.6% |
| 50–60 | 11,638 | 17% | 102 | 14.4% |
| 60–70 | 9449 | 14% | 68 | 9.6% |
| 70–80 | 1514 | 2% | 2 | 0.3% |
| 80+ | 235 | 0.3% | 0 | 0% |
| Total | 69,902 | 100% | 709 | 100% |

| Dimension | Items | Cronbach’s α | McDonald’s ω | Interpretation |
|---|---|---|---|---|
| TK | 3 | 0.886 | 0.891 | Very good |
| TPK | 3 | 0.793 | 0.805 | Acceptable |
| TCK | 3 | 0.849 | 0.855 | Very good |
| TPACK | 3 | 0.885 | 0.888 | Very good |
| Ethics | 3 | 0.882 | 0.883 | Very good |

| Dimension | Kolmogorov–Smirnov Z | Kolmogorov–Smirnov p-Value | Shapiro–Wilk W | Shapiro–Wilk p-Value | Skewness | Kurtosis |
|---|---|---|---|---|---|---|
| TK | 0.122 | <0.001 | 0.934 | <0.001 | −0.352 | −0.740 |
| TPK | 0.092 | <0.001 | 0.961 | <0.001 | −0.062 | −0.551 |
| TCK | 0.092 | <0.001 | 0.948 | <0.001 | 0.016 | −0.653 |
| TPACK | 0.128 | <0.001 | 0.934 | <0.001 | 0.330 | −0.653 |
| Ethics | 0.138 | <0.001 | 0.921 | <0.001 | 0.366 | −0.526 |

| Scale | Mdn | IQR (Q1–Q3) | Mean | SD | Skewness | Kurtosis | 95% CI (Mean) |
|---|---|---|---|---|---|---|---|
| TK | 2.67 | 1.33 | 2.67 | 0.91 | −0.352 | −0.740 | [2.60–2.73] |
| TPK | 2.33 | 1.00 | 2.52 | 0.81 | −0.062 | −0.551 | [2.46–2.58] |
| TCK | 2.33 | 1.00 | 2.45 | 0.86 | 0.016 | −0.653 | [2.38–2.51] |
| TPACK | 2.00 | 1.33 | 2.23 | 0.88 | 0.330 | −0.653 | [2.16–2.29] |
| Ethics | 2.00 | 1.33 | 2.10 | 0.84 | 0.366 | −0.526 | [2.04–2.16] |

| Dimension | Mann–Whitney U | Z Statistic | p-Value | Decision | r |
|---|---|---|---|---|---|
| Gender | | | | | |
| TK | 45,865.500 | −3.706 | <0.001 | Reject H0 | 0.14 |
| TPK | 48,928.000 | −2.492 | 0.013 | Do not reject H0 | 0.09 |
| TCK | 47,597.500 | −3.022 | 0.003 | Reject H0 | 0.11 |
| TPACK | 47,549.000 | −3.048 | 0.002 | Reject H0 | 0.12 |
| Ethics | 45,786.500 | −3.769 | <0.001 | Reject H0 | 0.14 |
| School level | | | | | |
| TK | 76,729.500 | 6.005 | <0.001 | Reject H0 | 0.23 |
| TPK | 70,448.500 | 3.646 | <0.001 | Reject H0 | 0.14 |
| TCK | 74,602.500 | 5.210 | <0.001 | Reject H0 | 0.20 |
| TPACK | 72,717.500 | 4.512 | <0.001 | Reject H0 | 0.17 |
| Ethics | 75,727.500 | 5.677 | <0.001 | Reject H0 | 0.21 |
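
The effect sizes r in the table above appear consistent with the conversion r = |Z| / √N described by Tomczak and Tomczak (2014). The following is a minimal sketch of that conversion, not the authors' own computation. The function name and the group sizes are assumptions: N = 701 is taken as the 464 female plus 237 male respondents in the sample table, and N = 709 as the full sample.

```python
import math


def effect_size_r(z: float, n: int) -> float:
    """Effect size r = |Z| / sqrt(N) for a rank-based test statistic."""
    return abs(z) / math.sqrt(n)


# Z statistics copied from the table; the sample sizes are assumptions.
gender_z = {"TK": -3.706, "TPK": -2.492, "TCK": -3.022,
            "TPACK": -3.048, "Ethics": -3.769}
level_z = {"TK": 6.005, "TPK": 3.646, "TCK": 5.210,
           "TPACK": 4.512, "Ethics": 5.677}

gender_r = {dim: round(effect_size_r(z, 701), 2) for dim, z in gender_z.items()}
level_r = {dim: round(effect_size_r(z, 709), 2) for dim, z in level_z.items()}

for dim in gender_z:
    print(f"{dim}: gender r = {gender_r[dim]:.2f}, school level r = {level_r[dim]:.2f}")
```

Under these assumptions the rounded values match the reported r column for both comparisons.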

| Dimension | H (Kruskal–Wallis) | df | p-Value | Decision | ε² |
|---|---|---|---|---|---|
| School type | | | | | |
| TK | 10.553 | 4 | 0.032 | Do not reject H0 | 0.009 |
| TPK | 10.534 | 4 | 0.032 | Do not reject H0 | 0.008 |
| TCK | 14.614 | 4 | 0.060 | Do not reject H0 | 0.014 |
| TPACK | 4.316 | 4 | 0.365 | Do not reject H0 | 0.000 |
| Ethics | 5.550 | 4 | 0.235 | Do not reject H0 | 0.001 |
| Age | | | | | |
| TK | 88.969 | 5 | <0.001 | Reject H0 | 0.119 |
| TPK | 34.602 | 5 | <0.001 | Reject H0 | 0.042 |
| TCK | 78.255 | 5 | <0.001 | Reject H0 | 0.104 |
| TPACK | 27.926 | 5 | <0.001 | Reject H0 | 0.033 |
| Ethics | 38.705 | 5 | <0.001 | Reject H0 | 0.048 |
| Subject | | | | | |
| TK | 59.841 | 16 | <0.001 | Reject H0 | 0.063 |
| TPK | 50.374 | 16 | <0.001 | Reject H0 | 0.048 |
| TCK | 35.987 | 16 | 0.003 | Reject H0 | 0.028 |
| TPACK | 71.778 | 16 | <0.001 | Reject H0 | 0.079 |
| Ethics | 45.154 | 16 | <0.001 | Reject H0 | 0.052 |

| Comparison | Z | p-Value | r |
|---|---|---|---|
| TK–TPK | −6.013 | <0.001 | 0.23 |
| TK–TCK | −9.920 | <0.001 | 0.37 |
| TK–TPACK | −14.748 | <0.001 | 0.55 |
| TK–Ethics | −16.141 | <0.001 | 0.61 |
| TPK–TCK | −3.623 | <0.001 | 0.14 |
| TPK–TPACK | −12.157 | <0.001 | 0.46 |
| TPK–Ethics | −15.121 | <0.001 | 0.57 |
| TCK–TPACK | −10.436 | <0.001 | 0.39 |
| TCK–Ethics | −13.022 | <0.001 | 0.49 |
| TPACK–Ethics | −6.394 | <0.001 | 0.24 |

| Dimension | Years of Teacher Experience: Spearman’s Rho | Years of Teacher Experience: p-Value | Age (Years Old): Spearman’s Rho | Age (Years Old): p-Value |
|---|---|---|---|---|
| TK | −0.330 | <0.001 | −0.349 | <0.001 |
| TPK | −0.218 | <0.001 | −0.202 | <0.001 |
| TCK | −0.308 | <0.001 | −0.321 | <0.001 |
| TPACK | −0.218 | <0.001 | −0.189 | <0.001 |
| Ethics | −0.218 | <0.001 | −0.219 | <0.001 |

| Dimension | df Regression | df Residual | F | p-Value |
|---|---|---|---|---|
| TK | 7 | 693 | 21.875 | <0.001 |
| TPK | 7 | 693 | 8.372 | <0.001 |
| TCK | 7 | 693 | 15.489 | <0.001 |
| TPACK | 7 | 693 | 8.944 | <0.001 |
| Ethics | 7 | 693 | 7.066 | <0.001 |

| Dimension | R | R² | Adjusted R² | Standard Error | Durbin–Watson | Interpretation |
|---|---|---|---|---|---|---|
| TK | 0.425 | 0.181 | 0.173 | 0.82738 | 1.635 | Medium |
| TPK | 0.279 | 0.078 | 0.069 | 0.78226 | 1.652 | Small |
| TCK | 0.368 | 0.135 | 0.127 | 0.80630 | 1.719 | Small |
| TPACK | 0.288 | 0.083 | 0.074 | 0.84628 | 1.612 | Small |
| Ethics | 0.315 | 0.100 | 0.090 | 0.80362 | 1.732 | Small |
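
As a worked illustration of how the model-summary figures relate to one another, the sketch below applies the standard identities for adjusted R² and the overall F statistic to the TK row. It starts from the rounded R² (0.181) and the degrees of freedom reported in the regression table (7 and 693), so it can only be expected to agree with the published values up to rounding. The function names are illustrative and do not come from the article.

```python
def adjusted_r2(r2: float, df_regression: int, df_residual: int) -> float:
    """Adjusted R^2 = 1 - (1 - R^2) * (n - 1) / df_residual, with n - 1 = df_reg + df_res."""
    total_df = df_regression + df_residual
    return 1.0 - (1.0 - r2) * total_df / df_residual


def overall_f(r2: float, df_regression: int, df_residual: int) -> float:
    """Overall F = (R^2 / df_regression) / ((1 - R^2) / df_residual)."""
    return (r2 / df_regression) / ((1.0 - r2) / df_residual)


# TK row: rounded R^2 from the model summary, df from the regression table.
tk_adjusted = adjusted_r2(0.181, 7, 693)
tk_f = overall_f(0.181, 7, 693)

print(f"TK adjusted R^2 ~ {tk_adjusted:.3f}")
print(f"TK overall F ~ {tk_f:.2f}")
```

Because the inputs are already rounded to three decimals, small discrepancies in the last digit are to be expected.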

| Predictor | Standardized β | p-Value | Tolerance | VIF | Interpretation |
|---|---|---|---|---|---|
| TK | | | | | |
| Age | −0.202 | 0.002 | 0.277 | 3.613 | Small-mod. |
| Years of experience | −0.168 | 0.009 | 0.288 | 3.475 | Small |
| Gender | −0.142 | <0.001 | 0.974 | 1.026 | Small |
| Teaching level | 0.270 | <0.001 | 0.860 | 1.163 | Small-mod. |
| School type | −0.016 | 0.656 | 0.866 | 1.155 | Negligible |
| Subject | −0.001 | 0.914 | 0.950 | 1.052 | Negligible |
| TPK | | | | | |
| Age | 0.000 | 0.998 | 0.277 | 3.613 | Negligible |
| Years of experience | −0.224 | 0.001 | 0.288 | 3.475 | Small-mod. |
| Gender | −0.084 | 0.023 | 0.974 | 1.026 | Small |
| Teaching level | 0.104 | 0.008 | 0.860 | 1.163 | Small |
| School type | −0.021 | 0.592 | 0.866 | 1.155 | Negligible |
| Subject | 0.002 | 0.949 | 0.950 | 1.052 | Negligible |
| TCK | | | | | |
| Age | −0.161 | 0.017 | 0.277 | 3.613 | Small |
| Years of experience | −0.141 | 0.032 | 0.288 | 3.475 | Small |
| Gender | −0.111 | 0.002 | 0.974 | 1.026 | Small |
| Teaching level | 0.116 | 0.002 | 0.860 | 1.163 | Small |
| School type | −0.033 | 0.392 | 0.866 | 1.155 | Negligible |
| Subject | −0.009 | 0.795 | 0.950 | 1.052 | Negligible |
| TPACK | | | | | |
| Age | 0.106 | 0.127 | 0.277 | 3.613 | Negligible |
| Years of experience | −0.294 | <0.001 | 0.288 | 3.475 | Moderate |
| Gender | −0.105 | 0.005 | 0.974 | 1.026 | Small |
| Teaching level | 0.128 | 0.001 | 0.860 | 1.163 | Small |
| School type | −0.020 | 0.602 | 0.866 | 1.155 | Negligible |
| Subject | 0.062 | 0.098 | 0.950 | 1.052 | Negligible |
| Ethics | | | | | |
| Age | −0.112 | 0.104 | 0.277 | 3.613 | Negligible |
| Years of experience | −0.096 | 0.155 | 0.288 | 3.475 | Negligible |
| Gender | −0.139 | <0.001 | 0.974 | 1.026 | Small |
| Teaching level | 0.160 | <0.001 | 0.860 | 1.163 | Small-mod. |
| School type | −0.035 | 0.367 | 0.866 | 1.155 | Negligible |
| Subject | 0.056 | 0.128 | 0.950 | 1.052 | Negligible |
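
The Tolerance and VIF columns above are linked by the usual identity VIF = 1 / tolerance. The short sketch below checks that relationship against the reported pairs. It is an illustration only: the helper name is hypothetical, and small gaps arise because the tolerances in the table are rounded to three decimals.

```python
def vif_from_tolerance(tolerance: float) -> float:
    """Variance inflation factor as the reciprocal of tolerance."""
    return 1.0 / tolerance


# (tolerance, reported VIF) pairs copied from the table; identical across outcomes.
reported = {
    "Age": (0.277, 3.613),
    "Years of experience": (0.288, 3.475),
    "Gender": (0.974, 1.026),
    "Teaching level": (0.860, 1.163),
    "School type": (0.866, 1.155),
    "Subject": (0.950, 1.052),
}

gaps = {name: abs(vif_from_tolerance(tol) - vif) for name, (tol, vif) in reported.items()}
max_gap = max(gaps.values())

for name, (tol, vif) in reported.items():
    print(f"{name}: 1/tolerance = {vif_from_tolerance(tol):.3f}, reported VIF = {vif}")
```

Age and years of experience show the highest VIF values in the table, which is in line with the two predictors being closely related.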

| Dimension | Cluster 1 | Cluster 2 | Cluster 3 | Cluster 4 |
|---|---|---|---|---|
| TK | 3.59 (0.47) | 2.28 (0.40) | 1.41 (0.58) | 3.19 (0.39) |
| TPK | 3.52 (0.42) | 2.31 (0.40) | 1.43 (0.46) | 2.70 (0.46) |
| TCK | 3.52 (0.48) | 2.21 (0.35) | 1.22 (0.34) | 2.71 (0.45) |
| TPACK | 3.37 (0.48) | 2.18 (0.61) | 1.09 (0.21) | 2.21 (0.49) |
| Ethics | 3.19 (0.55) | 2.16 (0.48) | 1.09 (0.24) | 1.93 (0.59) |
| N (%) | 151 (21.3%) | 203 (28.6%) | 139 (19.6%) | 216 (30.5%) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Alé, J.; Ávalos, B.; Araya, R. Chilean Teachers’ Knowledge of and Experience with Artificial Intelligence as a Pedagogical Tool. Educ. Sci. 2025, 15, 1268. https://doi.org/10.3390/educsci15101268