1. Introduction

When higher education leaders begin a new analytics initiative involving machine learning, stakeholders play a key role in determining which design to adopt when closing the loop to provide students with feedback [1,2]. These stakeholders may hold a range of views about the utility, efficacy, optimal design, and importance of such an initiative, and these views could affect its implementation and success. When evaluating a proposed project for potential adoption, leaders and managers interested in data-informed decision making need to understand the project’s value to the institution and its constituents to determine whether it warrants the resources required [3]. If the stakeholders who would use the analytics do not see the initiative’s value or its practicality for aiding their decision making and practice, they may not use the tools that eventually become available to them, undermining the institutional value proposition.

Therefore, in the present research, stakeholder voices became a key component of a human-centered design approach for a novel prescriptive analytics application. The overall initiative aims to support students who would benefit from receiving recommendations for tutoring and, relatedly, to support course redesign efforts by identifying where students struggle most. From a co-creation perspective, I intentionally involved stakeholders throughout the project’s design and development, guiding each step toward implementation [4]. This study explored one step of this process: assessing alignment with the institution’s core values.

This case study took place at a small, private, women-only institution of higher education in the northeastern United States. Recently, the institution was ranked highly among regional universities for advancing social mobility [5], and it is an emerging Hispanic-Serving Institution (HSI), underscoring its commitment to supporting its diverse student body. To help students succeed, the university leverages technology, including online applications, and provides extensive wraparound support for students through a unique holistic model [6]. This support extends the university’s on-campus services for more traditionally oriented undergraduates to the predominantly non-traditional students who attend the online arm of the university. To provide support that is both cost-effective and substantively effective, the institution values data-informed decision making and has a history of developing analytics applications to inform practice at an institutional level, such as retention efforts [7].

Educational leaders have shown interest in expanding analytics to inform teaching- and learning-related decisions [8], such as the prescriptive application considered in this study. This application is made possible by a data warehouse that aggregates data from multiple campus systems, including the adaptive learning system, the learning management system, the student information system, the online tutoring system, and the advising system, among others. The rich data available offer the opportunity to generate meaningful feedback for students on their educational progress and practice [9]. The proposed analytics application would be co-designed with institutional stakeholders to give students feedback that informs their on-the-fly decisions about whether to seek additional tutoring support at points in a course when they are struggling to learn the material.

Importantly, as context for this study, the institution has undertaken an in-depth and inclusive process to determine its core values. The university president initiated this process as part of a strategic effort to bring the online and on-campus components of the university into greater alignment (Email communication, 2021). A multifaceted, institution-wide discovery process involved a core committee of 15 representatives from across the campus, a campus-wide survey, and focus groups open to the community. After a five-month process, including data analysis, the core values were articulated in February 2022 [10]. Since then, various practices have intentionally moved the institution toward incorporating these values into daily discussions, conversations, events, awards, and student activities. Thus, a conversation about aligning the proposed prescriptive analytics initiative with institutional values proceeded from a common foundation, which is unusual in co-designed learning analytics initiatives. As Dollinger et al. [4] noted, “The current gap in the literature relating to participatory design in LA is not the lack of interventions, but rather the transparency of the researchers’… values… underpinning their decision-making” (p. 12). This study addresses this gap by exploring how stakeholders see their values as pertaining to this analytics initiative.

The research question that guided this study was as follows: How would institutionalizing a prescriptive analytics approach offering students support within courses align with institutional core values?

2. Literature Review

Prescriptive analytics, as investigated here, is an approach not yet widely developed or adopted in higher education. It presents an opportunity for research and development and, if executed well, a competitive advantage for institutions. Despite the promise of prescriptive analytics for comparing potential student outcomes in simulated worlds [11], learning analytics applications to date have typically focused on descriptive analytics, predictive analytics, or evaluation [12,13,14]. When it has been mentioned, prescriptive analytics has been recognized as an opportunity for institutions interested in making more effective use of existing data [15]. For example, educational leaders can now draw on student learning data aggregated in data warehouses from multiple campus systems to calculate potential outcomes using Bayesian network modeling. This approach facilitates the investigation of optimal decisions while accounting for uncertainty, particularly when combined with the knowledge mapping that accompanies adaptive learning system implementation [16]. Many opportunities exist for expanding the use of data and analytics applications and for building further capacity within higher education [17,18], and prescriptive analytics holds promise as one avenue for further investigation.

This study focuses on the alignment of institutional core values with the implementation of prescriptive analytics to support students. These values are a key component underpinning the realization of technological innovation in practice. The resources, processes, and values (RPV) change management framework describes an organization’s innovative capability as shaped by key elements, including the values that provide the context for institutional data-informed decision making [15,19]. When implementing a significant innovation with the potential to substantially change how an organization conducts its operations, leaders need to determine the necessary resources and to identify and enact processes for implementing the initiative. The organization’s values act as an umbrella over all parts of the project, affecting the overall success or failure of the initiative. As described in the Learning Analytics in Higher Education Adoption Model [20], organizational factors, such as those highlighted by RPV theory, play a key contextual role in analytics adoption efforts, leading to the user reflection, ongoing engagement, self-assessment, and action necessary for effective implementation.

Guided by RPV theory’s emphasis on the role of organizational values in shaping practical innovative capacity, I determined that assessing the project’s feasibility should involve stakeholders exploring whether and how the institution’s core values aligned with the proposed initiative. According to RPV theory, an organization’s values govern the standards used to judge the attractiveness of innovative opportunities [19]. These values determine how stakeholders engage with the project and whether the project supports the key performance indicators that matter to institutional decision makers. They also determine whether those administrators will see the project as aligned with the institution’s strategic direction, likely to succeed, and supported by the community.

This emphasis on values is combined here with an understanding of appropriate stakeholder involvement. In a learning analytics context, the simplified Orchestrating Learning Analytics (OrLA) framework identifies critical issues that require stakeholder communication for a successful analytics initiative involving applications to learning [1]. These include local issues, current practices, the affordances provided by the enhanced technology, the innovation itself, and ethics and privacy issues. These factors all feed into a cost–benefit analysis or, as described in the present study, a feasibility analysis. OrLA posits that stakeholders who provide input to this analysis could include practitioners (e.g., faculty and staff), students, system developers, researchers, and legal experts. Such stakeholder considerations are particularly salient for a study like the present one, which aims to close the loop and provide useful feedback to individuals (i.e., users).

In the present case, the institution initially created its online arm as a separate operation targeting a distinctive population of students (i.e., non-traditional students) who valued the additional flexibility of online learning and appreciated additional technological supports such as adaptive learning [8]. As the institution works to integrate what it has learned through this online initiative into the university’s main operations (a current strategic priority), conversations around values take on particular importance. Shared values across innovative and traditional modes of teaching and learning focus everyone on what matters most, moving past surface differences to the heart of what is important in the learning environment.

The present study, which focuses on core values, constitutes one component of a larger project investigating all three RPV framework components: resources, processes, and values. Because values alignment is central to determining the soundness and feasibility of the project overall, particularly in the context of Bay Path University’s evolution and merging of online and traditional educational modes, the present study focused on the values component of the model, highlighting the richness of the results connecting the proposed project to the institution’s core values. In line with the stakeholder groups that the OrLA model identifies as relevant to assessing a project’s feasibility, this project involved faculty, staff, students, systems personnel, and me, the researcher.

3. Methods

I took an action research approach, involving participants in a series of focus groups that each included a different mix of stakeholders [21]. In action research, participant voices drive the research. Thus, this methodology was appropriate for a study centered on human-oriented design processes [22]. The approach foregrounded stakeholder input into design direction and design decisions [23]. Action research often occurs in iterative cycles of investigation, and the individuals engaging with this study participated in two cycles. The core values data analyzed here are from the first action research cycle. The second cycle investigated the resources and processes needed in more depth and is reported elsewhere.

In action research, the researcher is an actor along with the participants. As such, reflecting on my role and how I might influence the results is important for ensuring study quality, particularly given my connections to the institution [24]. I have had several connections to the institution over time, including serving as a faculty member when these data were collected. I have also analyzed data from the institution for grant project assessments and other independent research. I had prior connections with some, but not all, participants in this study. No participants directly worked with or studied in my teaching area during data collection, and I have since left the institution to teach at another university. Thus, I conducted my analysis after leaving the setting, which gave me both insider knowledge of and an external perspective on the proposed project as I strove to engage in rigorous analysis to understand the participants’ perspectives.

Reflecting on my role as an insider action researcher during data collection, I recognize that my status as an invested community member may have increased my credibility with the participants. It also gave me insight into what kind of project would interest stakeholders, informing how the project was framed [25]. Although I endeavored to help participants share their views, it is possible that my dual role influenced what they shared in unknown ways. While my connection to the university may thus have influenced my engagement with the participants and the resulting analysis, I have endeavored to reflect on my practice and my role in the research to minimize such potential effects and instead amplify the participants’ voices in the results.

4. Data

The data analyzed come from a series of 10 focus groups that I conducted in Spring 2023 with 37 institutional stakeholders. Participants included 17 students, 7 faculty members, and 13 staff members, each of whom was paid a small stipend for participating (see Table 1).

Study participation was open to anyone at the institution, but the sample was primarily generated through purposeful sampling of people in different roles, with the goal of obtaining wide variation in the sample. Stakeholders involved with both online and on-campus education participated, so that potential differences between the student experience in these modalities could be identified. Students came from a variety of majors, including fields related to business, health, communications, and psychology. Faculty came from various departments, which are not identified here to preserve respondents’ anonymity, given the university’s small size.

Other characteristics were not systematically recorded, but the focus group discussions clarified many of them. Several participants were peer mentors, and several had disabilities; I specifically invited members of both groups because they were expected to have insightful perspectives on the topic under study. At least two participants were on the Core Values Committee, and two others were Core Values Ambassadors for the campus. Some students had used either online or on-campus tutoring, and others had not. Students ranged from first-year students to seniors; some were of traditional age, and others were not. Some lived on campus, others near the university, and still others in various states around the country.

Each participant attended one 90-minute first-round focus group concentrated on the core values. These 10 focus groups contained from two to six participants each. Six groups contained a mix of students and others, one contained a mix of faculty and staff, one contained only staff, and two contained only students. Groups were mostly of mixed composition, allowing participants to hear other viewpoints and spark new ideas. Even the all-staff and all-student groups included people from different functional areas or student backgrounds, providing a diversity of opinions. At the same time, these more homogeneous groups allowed for a deeper dive into the issues most salient to that type of participant.

5. Analysis

Focus groups were recorded on Zoom and transcribed with the assistance of Otter.ai. I reviewed and corrected the transcriptions, which began the process of immersing myself in the data. Corrections included items such as the names of programs or acronyms specific to the institution. Anonymized summaries of each focus group discussion were sent to that group’s participants as a member checking exercise, which involved participants in ensuring the accuracy of the participant voices quoted in the results and bolstered the trustworthiness of the findings overall [26]. After the members of each focus group reviewed their summary, the summaries of all focus groups were shared with all participants.

During the analysis, I coded by hand through multiple passes of printouts of sixteen pages of single-spaced transcribed data, a process through which I familiarized myself with the nuances of the data and sought patterns. (Other data from these focus groups were not analyzed for this study because they involved discussion that did not pertain directly to the core values.) After reviewing all transcriptions, I conducted an open coding cycle that identified the major topics discussed, using descriptive codes and in vivo codes based on statements by the focus group participants [27]. I followed this step with a second cycle of focused coding, looking for patterns specifically within each core value area identified by the institution [28]. I remained reflective about how my role as the researcher and sole coder might affect the results. To this end, I engaged in contemplation and memo writing, aiming to understand and minimize my potential impact on the results beyond my role as the focus group facilitator and the explanations of the proposed project that I provided to the participants. Consistent with this orientation, I foregrounded participant views in presenting the findings. My investment in improving practice at the institution was mirrored in the participant voices in a way that seems authentic to the institutional ethos. I undertook this reflexive practice to bolster the credibility of the findings, given that the study had a single coder. I categorized results thematically within each core value area and across all areas, as presented next.

6. Results

In addition to making specific observations about individual core values, some participants felt the project related to all core values. This was encapsulated in a theme of cross-cutting core relevance. For example, some participants saw the project aligning well with the core values overall, saying that “it really touches on most, if not all of them”, and “I do believe that the analytics line up with the core values 100%”. The project was also seen as supporting administrators’ efforts to foster student success:

I see it as such a benefit to the core values like because we’re taking, the administrators and everything at Bay Path are making every effort for those students to succeed. And you want to support them any way you can, throughout their whole journey. I think it’s amazing. I think it’s a good, very good idea. And it does, I think it matches great with the core values, all of them.

Another participant concluded: “But analytically speaking, I mean, to be honest, all this is gonna focus on these values, no matter what, you know, whichever avenues that you choose to take. On the end, they all come together as one anyways”. As these comments illustrate, there was wide support for the application of the core values within a prescriptive analytics initiative. This support for cross-cutting core relevance was found both among people who had been directly involved in the core values initiative and among those who had not.

This enthusiasm was countered by one person who expressed skepticism, saying:

You can’t interact with the instructor while you’re in class to ask questions, and whatnot. That’s the biggest hurdle for me, as somebody that does have issues in general, like, I will disclose, I do have ADHD, I have anxiety, I have depression. I have all those that all are also incorporated into my challenges with the courses. So lately, they’ve been making it very difficult with attending to the course load and everything. It’s not that I can’t get the material down, it’s just the fact that the time that I give and contribute to my courses is very difficult, especially when I don’t have a lot of energy. And I don’t think that with analytics itself, it will take that into account, because it’s just looking at the grades of the student. So you don’t really know the backstory behind the student as well, which I know that’s another concern with these things.

Another person expressed both skepticism and enthusiasm, demonstrating a complexity of feelings:

It doesn’t feel like the campus actually implements those. It feels like it’s being promoted but not implemented. Like while seeing learners come first, it doesn’t feel like it as a person of color on campus. We pledge to foster inclusion and belonging, but it doesn’t feel as though. So I feel like things like that. … This is aligned with these core values. I think that they’re really good qualifiers. I just wish that they were more implemented within the campus. Like I said before, it feels along the lines of marketing and feels like it’s being stated, but not actually done.

Thus, these and other findings below show a range of opinions, leading to another theme of the importance of multiple perspectives. This points to the importance of continuing to have diverse voices engaged in the next steps of the project.

The subsections below present results from the student, faculty, and staff discussions of each core value. In terms of prevalence, the core values of “our learners come first” and “committed to equity” were identified most frequently, typically with passionate comments. “We are a community that collaborates” and “innovation and excellence” were also mentioned quite frequently. The values of “respect and compassion” and “health and well-being” were mentioned less frequently, though sometimes with great passion. “Diversity makes us stronger” and “fostering inclusion and belonging” were mentioned by the fewest people. In what follows, I foreground the voices of the study participants to share their perspectives on the primary themes related to each core value.

6.1. Core Value: Our Learners Come First

The primary theme in the responses noting “our learners come first” was as follows: help students by making currently invisible patterns visible. Example quotes that illustrate this theme included the following:

There is so much about learners that should be taken into account—their background, their learning styles, where they are in their education (right out of high school or coming back to education), whether they work. Diversity incorporates a lot of different things, including gender and race, but also diversity in learning, such as neurodiverse learning, as well as diverse activities, such as multicultural events, academic gatherings that aren’t classes, and clubs or activities.

Our learners come first is really important to look at, because you’re trying to improve the experience for the population as a whole. But each individual needs to be looked at, because the data doesn’t necessarily reflect each individual’s needs, it reflects the needs of a larger group.

I think the first one, our learners come first, is huge… I think that kind of goes hand in hand with the predictive analytics if anything can help our learners learn better… coming at it from different directions kind of just looking at what the data shows and how we can use that to help them improve in any aspect of their learning is big.

6.2. Core Value: Diversity Makes Us Stronger

The primary theme in the responses around the core value of “diversity makes us stronger” was as follows: diversity should be reflected throughout. Example participant quotes included the following:

When we use analytics, we should “perhaps have a variety of them. Because having the diversity [in the modeling], that would be better able to address the diversity of our students”.

Another participant discussed not growing up in the United States and the difference in her education:

We don’t all learn the same. If we’re going to talk about diversity in America, we need to consider the students that we should include—that they might not learn the same, that they might need more support. … Those folks that do better with a visual understanding could benefit from a dashboard, but then I can see how the support from the faculty, it’s what actually takes it all the way. So we need to consider that not everyone comes from the same background set. Because of it, we don’t all learn the same. So if we’re going to be inclusive, and diversity is going to be claimed, then we need to account for everyone’s way of learning. We don’t all learn the same.

6.3. Core Value: We Are Committed to Equity

The following in vivo statement exemplified the primary theme in the responses noting the equity core value: “the more we try to embrace analytics, the less we rely on our biased judgements.” Example statements pertaining to this theme included the following:

I think that I have reservations that we can inadvertently bias ourselves. But I think the more we can try to incorporate analytics and embrace that spirit, the less we rely on some of our instinctive judgments, which you know, are inherently biased. And maybe it’s a better together kind of thing when you can combine your intuition and as much data as possible.

I think it kind of holds the campus and the university accountable with the core values saying that they’re committed to equity. Because analytics just make sure that we all have an equal chance and a fair chance at education and all that and it’s just as reflected for the professors and whatnot. So the core values will hold us accountable.

6.4. Core Value: Innovation and Excellence Drive Us

The primary theme in the responses around innovation and excellence was as follows: create an extra set of tools to support quality experiences. Examples of this theme included the following:

Even though prescriptive analytics may not be a perfect tool, that doesn’t mean it’s not a tool that can’t be used effectively. We just have to be very cognizant of how we’re using it, and of the other variables that are at play, and of how we assess what we’re doing. It’s like everything in life, everything in education—you need to take a moment, step back and reassess if what you’re doing is effective or not. So I think that does align with Bay Path’s core values in that it’s an innovation and excellence striving. We’re always striving to be better. Even though our tools are imperfect, we’re always striving for excellence.

It’s an extra set of tools for faculty and staff to use to support our learners and to support the experience, to support the quality of the courses that we’re delivering. It’s a consistent process, a consistent set of tools so that we’re all working together. It’s shared knowledge, hopefully, with the warehouse. So if we’re working collaboratively, that data is available to multiple people. And I think about the recommendation for tutoring, or being able to provide automated support, or recommendations—that’s in some ways the low hanging fruit and takes that off the plate of the faculty who can then also work on creating additional materials or reaching out to the student to meet via zoom or whatever they need to do.

6.5. Core Value: We Pledge to Foster Inclusion and Belonging

The primary theme in the responses pertaining to inclusion and belonging was as follows: validate students’ inclusion and feeling understood in their learning experiences. Example participant quotes included the following: “You’re considering that they need that to feel like they’re belonging and they can do this”.

Fostering inclusion and belonging stands out. If there are students that are struggling in certain areas, we can find a way to make them feel more included, and feel more understood in their course, and give them whatever tools they need.

6.6. Core Value: We Work Best as a Community That Collaborates

The primary theme for the core value of collaboration was as follows: this work necessarily brings different voices together to discuss better supporting students. Examples of this theme included the following:

I think of how, as I look at ways to utilize data, it requires collaboration in a lot of ways. So I’m reaching out to multiple staff and other stakeholders as we’re considering what data to use and how to use it, and how it can provide benefit as we work together in figuring out those things. That makes it more effective. That makes it better. I don’t know if we’re doing it right. We’re hitting on that core principle of collaboration and we’re again making things better for our students, for the university, for the community, for faculty, etc.

I think that it’s easy for everyone to get in their lane and do their own thing and their specialty. And it’s always so refreshing and exciting to talk to people in departments who don’t typically get to talk and see all the wonderful ideas and that different perspective that is so illuminating. But also it’s just kind of shocking sometimes when you realize that I’ve been looking, you know, at this in such a limited way and I think that I’m seeing all sides of it and I’m not even close.

There’s just no way one person can do or make use of such immense and intricate data. So, there’s the people that are tasked with collecting it, and the people who analyze it, and the people who use it, and so forth.

6.7. Core Value: We Treat Others with Respect and Compassion

The primary theme in the responses for respect and compassion was as follows: hold students’ experiences with respect to help them navigate their education and get help when needed. Examples of this theme included the following:

What’s been said drives them to the core value of we treat others with respect and compassion. What I’m learning from the course I am teaching right now and students who have shared personal experiences with me is that depending on upbringing, culture, family background, etc., some students can’t ask for help. Or if they do, it’s a sign of weakness in certain cultures. I think we have to understand that and respect that. Instead of saying, Oh, this student’s just lazy, they may be so overwhelmed that they don’t ask questions.

This had a direct response from another participant who said, “I like what the last speaker said about backgrounds because we also have a lot of veterans here and the military teaches: do not ask for help. It’s a weakness”.

Treating with respect and compassion stands out to me, because this is all about making Bay Path’s classes, both online and traditional, more easily navigated by the students, and just better in the institution, and also the way in which we learn. I think it’s going to lead to a better outcome overall, and I think that’s very compassionate.

6.8. Core Value: Health and Well-Being Matter

The primary theme around health and well-being was as follows: engage a mindset that reduces student anxiety, stress, and doubt. Examples of this theme from the participants included the following:

So the whole health and well-being thing, I think, if we can look at the data and analytics and help our learners learn better—I think I see a lot of anxiety and a lot of stress when it comes to especially around exams and stuff. So I think if we can help them learn better, we can help them get tutoring. Or I think by just focusing on that first bullet point [i.e., our learners come first] will help improve the last bullet point [i.e., health and well-being matter], because it will help them not be as stressed. It’ll help them be not so anxious, you know. So I think it’ll tie into how to have a more positive mindset. And to be, I got this, instead of going into something like a ball of nerves. So I think those two are hugely tied together. So I think by fixing one, you can help improve the other.

Which also goes with the health and well-being because they’re stressed out or kind of doubting themselves. Like, self-esteem, and so forth. But I think all of this, this whole idea could actually touch base on all of the core values. … It could help improve the health and well-being aspects because if you’re able to find ways to try to strategize on the academic levels and find out, you know, well, why are they needing a tutor? What aren’t they being given by the instructors? Or what’s not said? What is being said? In the end, after you figure all that out, because at that time, the student is stressed, they’re having anxiety. Sometimes it can fall into depression if it goes far enough. But their self-worth is extremely important. And I think it would all start if your ideas press through and find ways to combat it.

I know personally, health and well-being, they are very important things to me. I spend most of my time doing schoolwork, and I know that’s expected, this is college, but over 20 hours a week. And being able to figure out a way, even if it’s not the data that’s coming from me, getting data and figuring out a way to better, more efficiently manage how we study, and the strategies that are going to make this more efficient I think are going to, at least for me, immensely improve my health and well-being while attending college. Just over the amount of time and effort that gets put in, I think it’s going to be helpful.

7. Discussion

Across the focus groups, some participants discussed how they saw each core value supported by the proposed analytics initiative, leading to the identification of cross-cutting core relevance as a key finding. Most participants identified one, two, or a few core values they thought the proposed project exemplified. Some participants felt the project clearly related to all core values. However, a few participants expressed skepticism, leading to the key finding of the importance of multiple perspectives. Participants did not agree on which core values were most aligned or whether some were aligned at all, which should be considered when interpreting the results. This lack of consensus reinforces the emergent nature of such an initiative and the need for strong stakeholder involvement throughout any implementation, as suggested by the OrLA framework [1]. Still, although skeptics existed, they were a small minority. On balance, the voices describing how the project would enact and reinforce core values were much stronger than those questioning the connection.

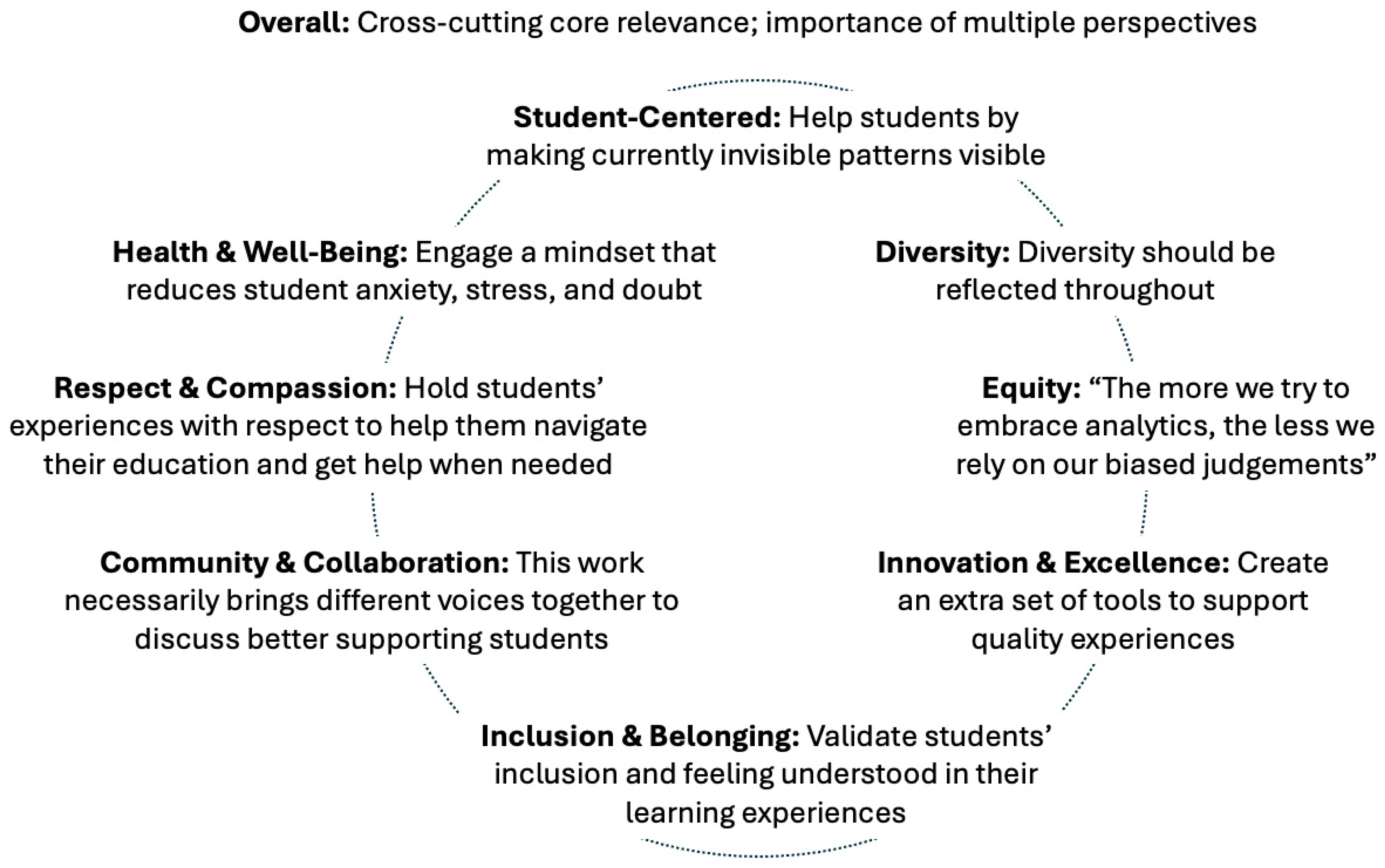

Within the set of core values, the most prevalent themes were as follows: (a) help students by making currently invisible patterns visible; (b) diversity should be reflected throughout; (c) “the more we try to embrace analytics, the less we rely on our biased judgements”; (d) create an extra set of tools to support quality experiences; (e) validate students’ inclusion and feeling understood in their learning experiences; (f) hold students’ experiences with respect to help them navigate their education and get help when needed; and (g) engage a mindset that reduces student anxiety, stress, and doubt (see Figure 1). These findings can serve as guideposts for this institution as it proceeds with this initiative. They may also benefit other institutions contemplating similar analytics initiatives, particularly when considering organizational context [20]. Aligning such initiatives with institutional values can direct available resources toward valued online support.

Of these themes, the most interesting and surprising result concerned “health and well-being matter”. Students, rather than faculty or staff, were the ones who emphasized this value. They saw it as an important core value relating to the potential for a prescriptive analytics initiative to support students by bolstering their self-worth. These students keenly recognized the anxiety and stress many current students face, connecting the potential for decreased anxiety to being directed to tutoring at opportune moments, when confusion about course material would otherwise drive stress high. Multiple students across different focus groups saw such a connection between health and well-being, including mental health, and the use of data to provide timely support recommendations through prescriptive analytics. Students sought the efficiency of support that analytics could provide, particularly for busy students juggling multiple responsibilities, including work, school, and home life. The idea that these students think prescriptive analytics can help them feel “I got this” at times when they would otherwise be “a ball of nerves” is a powerful testament to the humanistic side of data-informed decision making and a compelling argument for the value of such an initiative, particularly given the impacts of the COVID-19 pandemic on higher education [29]. This finding illustrates the importance of including a wide range of stakeholders in discussions of analytics project directions, and particularly of making sure students are involved, in line with the OrLA framework’s recommendations [1].

When interpreting these findings, it should be recognized that the institutional process that identified a common set of core values was a key feature facilitating this study. The potentially amorphous nature of shared values among a group as large as an institution could challenge those wishing to have such discussions on their campus. Although mission and vision statements could be starting places for such discussions [30], these differ from statements of values [10]. All participants knew these institutional values, so the values acted as a shared reference point for discussing the present project. This familiarity reduced the preparatory time needed for a productive group conversation about values because the set of potential values was finite and known.

8. Conclusions

These results demonstrate that people from across the campus, involved in both online and campus-based learning, see promise for improving student academic support through the strategic use of online technology that would deliver learning analytics-based recommendations to students about possible tutoring. Such a forward-thinking prescriptive application, using the increasing amount of data available for analysis in service of targeted student support, aligns clearly with each of this institution’s core values [10]. Despite the lack of consensus about the relevance of each core value, institutional stakeholders felt strongly that the project could clearly align with each value. This offers hope that such an application of online technology could help the institution affordably improve its instruction by extending existing strategic investments, which could provide more efficient and effective support to both traditional and non-traditional students.

This study reinforces the importance of stakeholder engagement in the design process of analytics initiatives. Specifically, this study explored the alignment between institutional and stakeholder values and the perceived value proposition of an analytics initiative. It extends prior participatory design work by making discussions of values explicit rather than hidden [4]. The results also emphasize the importance of involving the groups who will contribute to the design phase of the implementation early in the process. The lack of consensus about alignment with the institutional core values reflects the relative newness of analytics in higher education and the need to continue exploring the value of data to inform decisions [3]. The need for ongoing stakeholder involvement exists even at an institution that (a) espouses the value of data-informed decision making, (b) seeks out ways to implement this approach, and (c) has successfully done so in the past, including with displays of institutional data using Power BI and with analytics in the learning management system and the adaptive learning system [7]. By pursuing this action research in collaboration with faculty, staff, and students, this project contributes to understanding the contextual factors that facilitate co-designing a prescriptive analytics system.

These results will be summarized for the university’s administration in a feasibility report, along with the results of the second round of focus groups, which centered on the resources and processes necessary to implement this prescriptive analytics initiative. The report will highlight the themes that emerged and the participant voices that exemplify them. The lack of unanimity offers a cautionary note against being overly prescriptive in the approach itself.

Future administrators, data analysts, and researchers should aim to align analytics initiatives with the values espoused by the institution and its stakeholders. This alignment could be achieved through explicit values statements, as collectively crafted at the institution studied here, or potentially through values stated in or derived from the institutional mission and vision statements. The efficacy of this latter option would need to be explored in future research. The current findings support the argument that successful implementation of prescriptive analytics will likely be strengthened by such organizational contextual alignment [20]. Other learning analytics applications may be similarly strengthened by connecting to shared values, as little in the focus group discussions was unique to a prescriptive approach compared with other forms of predictive analytics.

Overall, when alignment between an institution’s core values and its implementation of prescriptive analytics is achieved, as seen in the present research, resources devoted to the analytics initiative will be more clearly targeted toward areas of importance to the institutional community. Academic leaders championing a prescriptive analytics initiative could expect values alignment to increase the likelihood of adoption and, therefore, the project’s usefulness, along with the long-term relevance and sustainability of such an approach.