University Students’ Perceptions of Peer Assessment in Oral Presentations

Abstract

1. Introduction

1.1. Peer Assessment: Experiences and Support Measures to Improve Its Effectiveness

1.2. Student Perceptions of Peer Assessment

- What are undergraduate students’ perceptions of the effectiveness of peer assessment in oral presentation tasks?

- To what extent does participation in peer assessment activities influence students’ perceived self-efficacy as assessors of their peers’ oral presentations?

2. Materials and Methods

2.1. Participants

2.2. Data Collection Procedure

2.3. Instruments

2.4. Data Analysis

3. Results

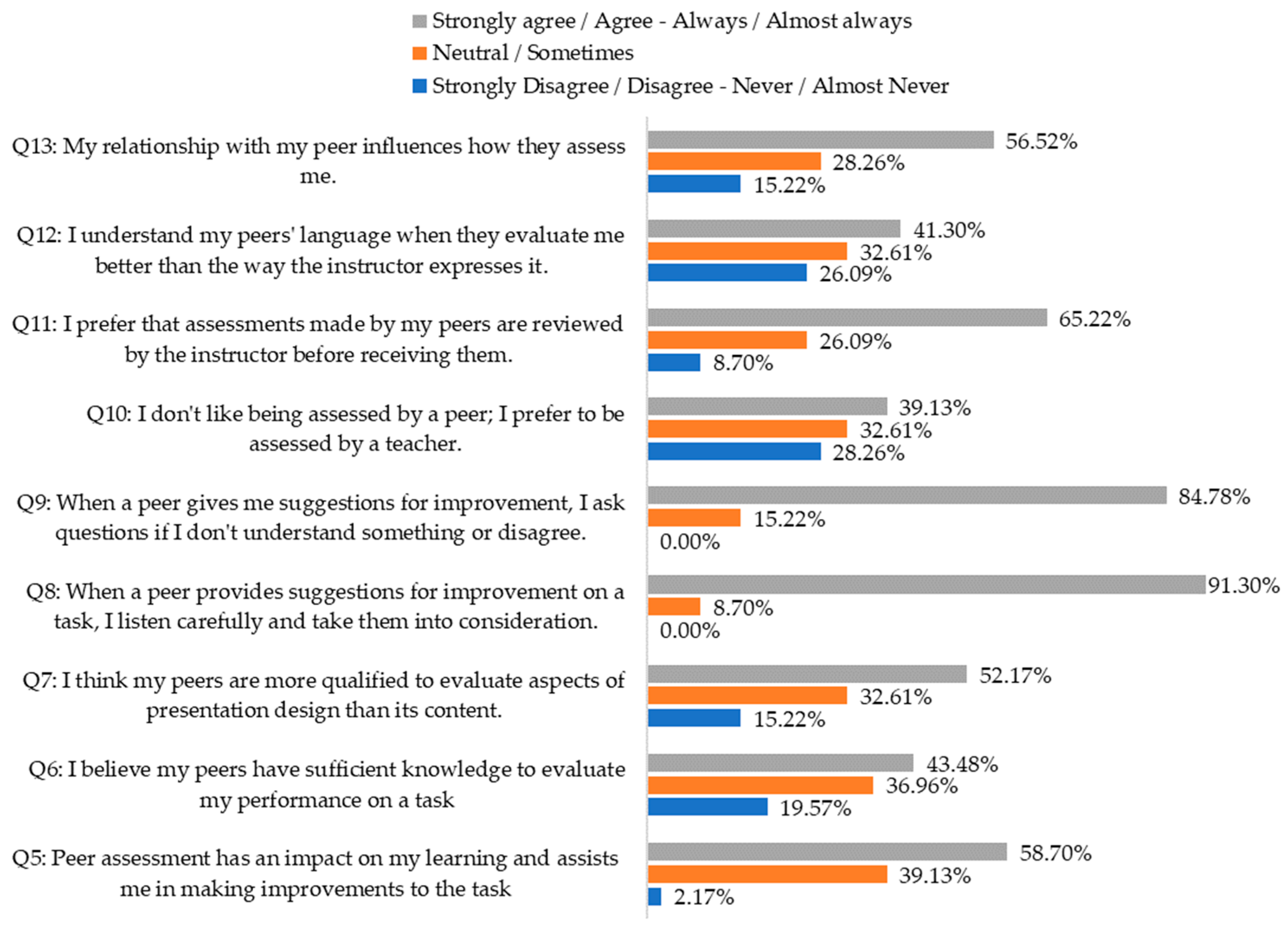

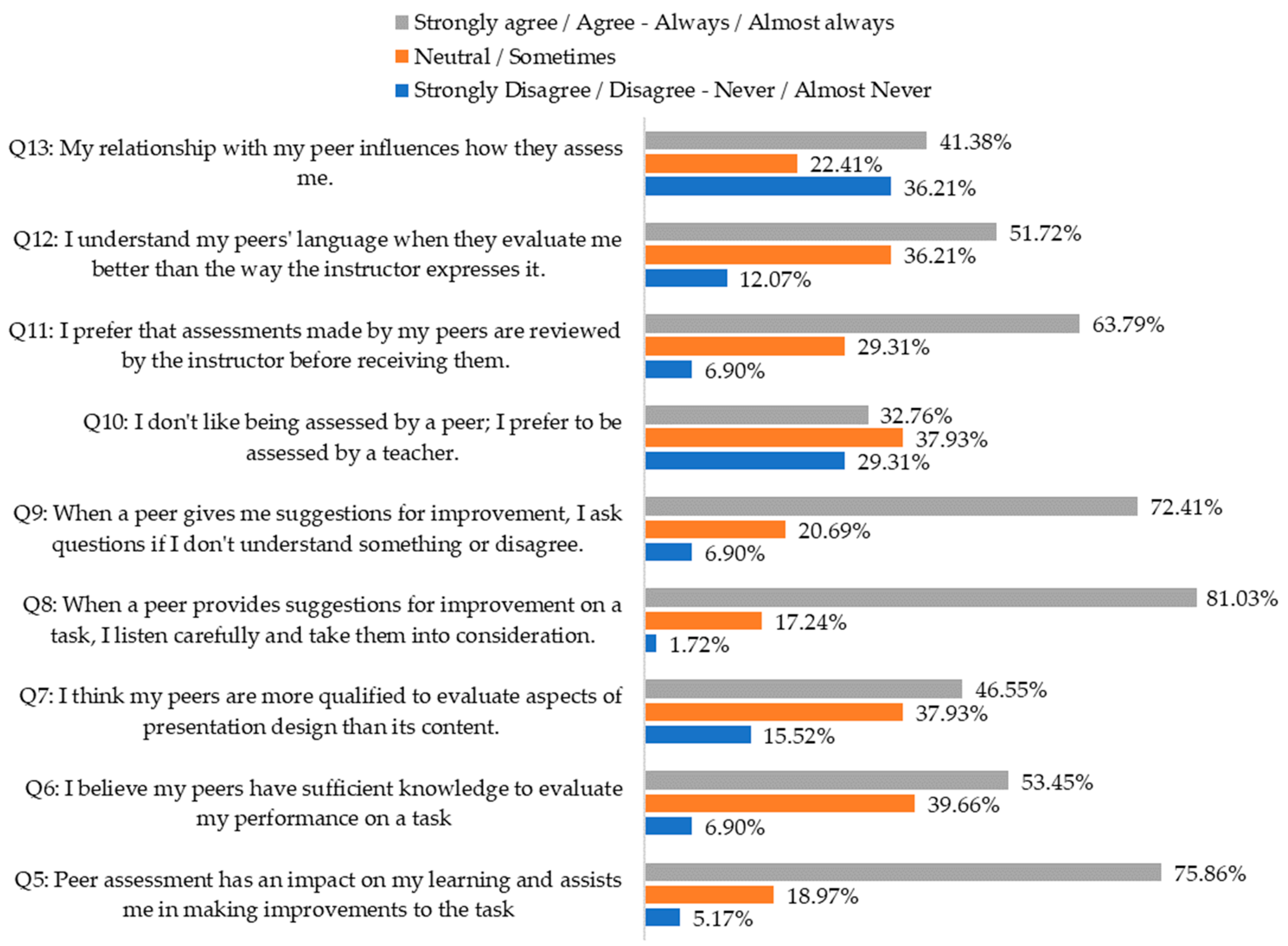

3.1. University Students’ Perceptions of Peer Assessment of Oral Presentations as Assessed Students, before and after a Peer Assessment Experience

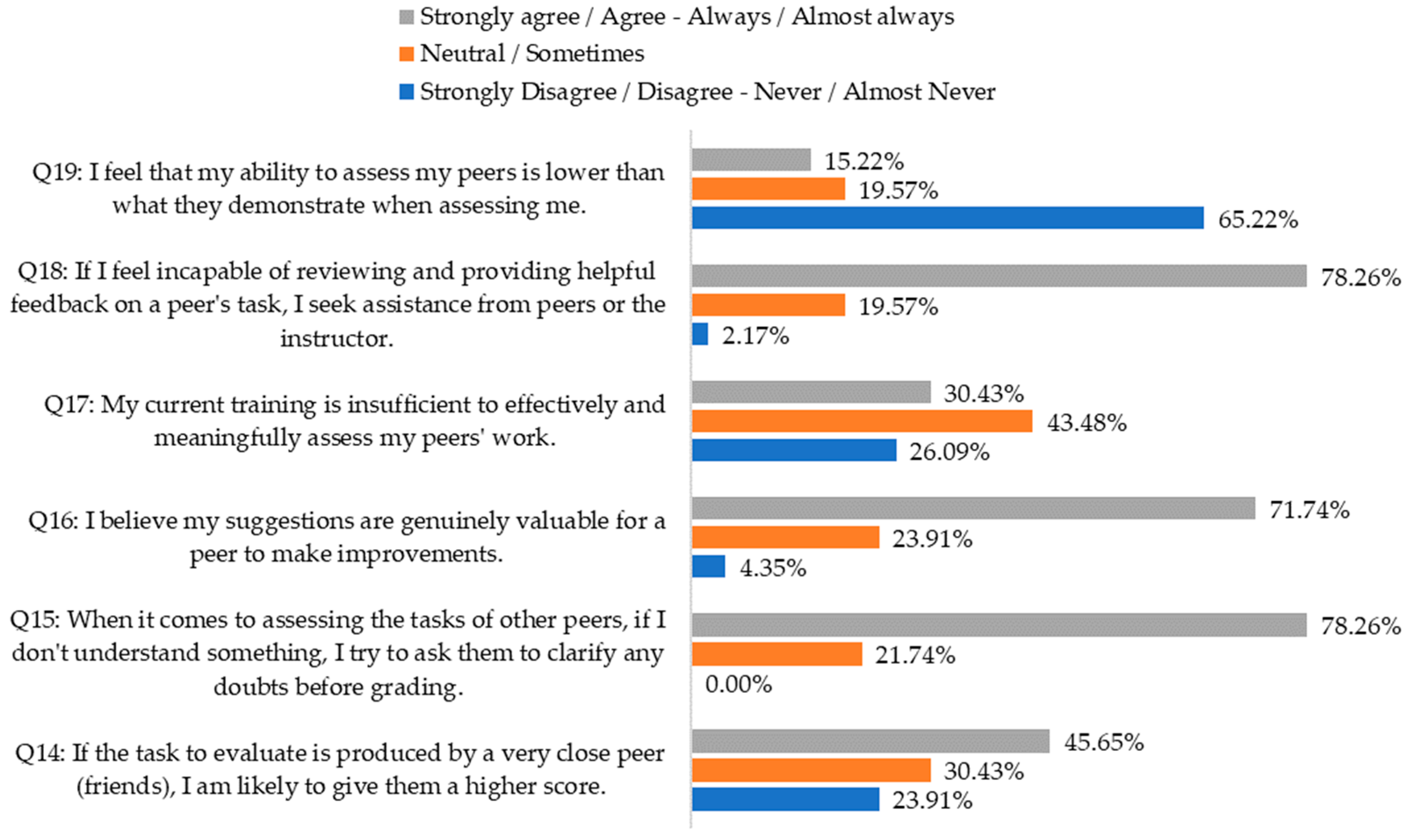

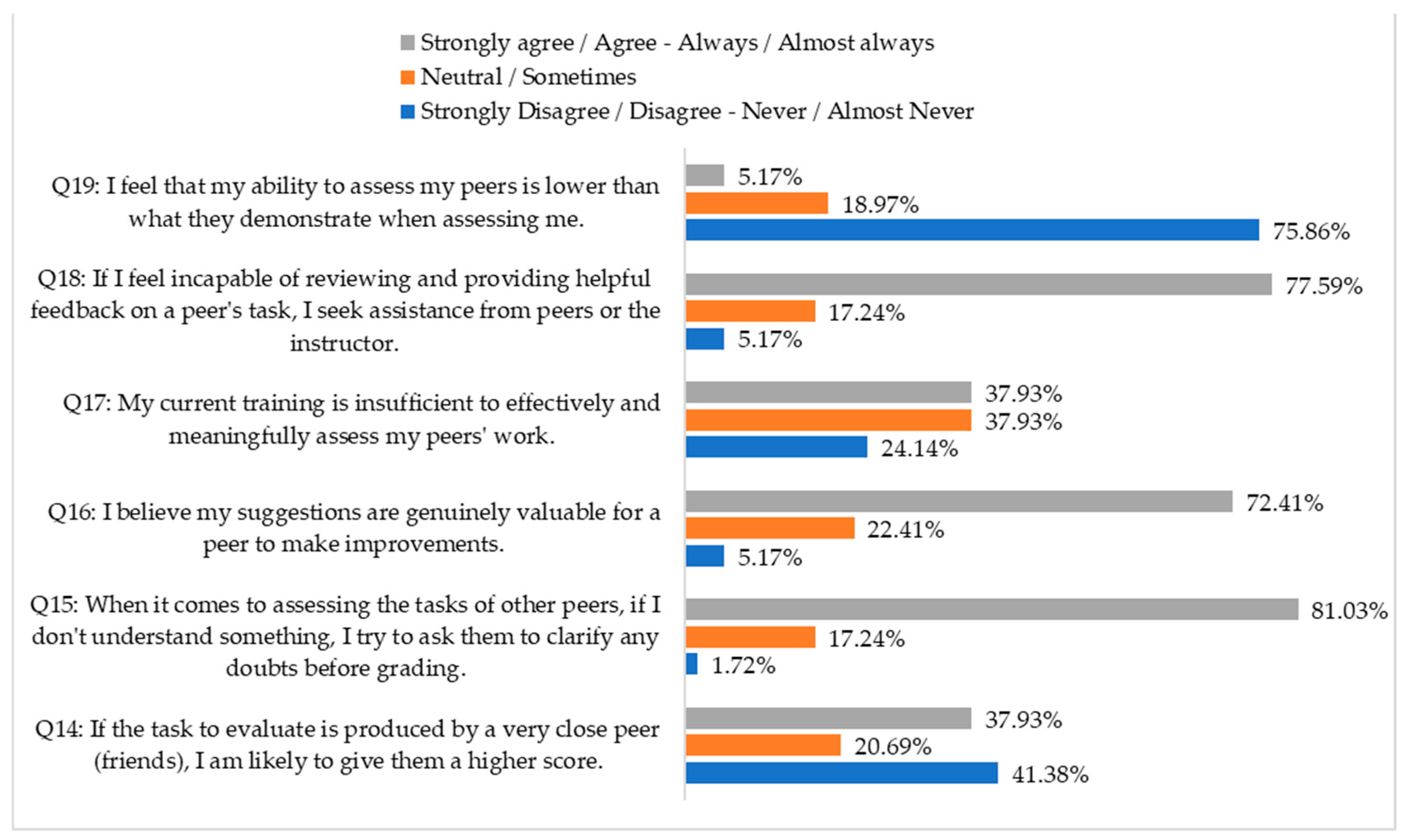

3.2. Self-Efficacy of University Students as Assessors of Oral Presentations before and after a Peer Assessment Experience

3.3. Differences in University Students’ Responses before and after Peer Assessment

| Items | Pre-test M | Pre-test SD | Post-test M | Post-test SD | DIF |
|---|---|---|---|---|---|
| Q5: Peer assessment has an impact on my learning and assists me in making improvements to the task. | 3.83 | 0.85 | 4.03 | 0.86 | 0.21 |
| Q6: I believe my peers have sufficient knowledge to evaluate my performance on a task. | 3.28 | 1.05 | 3.53 | 0.73 | 0.25 |
| Q7: I think my peers are more qualified to evaluate aspects of presentation design than its content. | 3.52 | 1.01 | 3.34 | 1.07 | −0.18 |
| Q8: When a peer provides suggestions for improvement on a task, I listen carefully and take them into consideration. | 4.33 | 0.63 | 4.19 | 0.78 | −0.14 |
| Q9: When a peer gives me suggestions for improvement, I ask questions if I don’t understand something or disagree. | 4.41 | 0.75 | 4.03 | 0.99 | −0.38 |
| Q10: I don’t like being assessed by a peer; I prefer to be assessed by a teacher. | 3.24 | 1.25 | 3.17 | 1.16 | −0.07 |
| Q11: I prefer that assessments made by my peers are reviewed by the instructor before receiving them. | 3.96 | 1.01 | 3.86 | 0.93 | −0.09 |
| Q12: I understand my peers’ language when they evaluate me better than the way the instructor expresses it. | 3.17 | 1.12 | 3.53 | 0.88 | 0.36 |
| Q13: My relationship with my peer influences how they assess me. | 3.54 | 1.22 | 3.00 | 1.26 | −0.54 * |
| Q14: If the task to evaluate is produced by a very close peer (friend), I am likely to give them a higher score. | 3.24 | 1.23 | 2.81 | 1.37 | −0.43 |
| Q15: When it comes to assessing the tasks of other peers, if I don’t understand something, I try to ask them to clarify any doubts before grading. | 4.26 | 0.80 | 4.19 | 0.78 | −0.07 |
| Q16: I believe my suggestions are genuinely valuable for a peer to make improvements. | 3.96 | 0.92 | 3.93 | 0.83 | −0.03 |
| Q17: My current training is insufficient to effectively and meaningfully assess my peers’ work. | 3.11 | 1.08 | 3.22 | 0.92 | 0.12 |
| Q18: If I feel incapable of reviewing and providing helpful feedback on a peer’s task, I seek assistance from peers or the instructor. | 4.22 | 0.84 | 4.19 | 0.91 | −0.03 |
| Q19: I feel that my ability to assess my peers is lower than what they demonstrate when assessing me. | 2.07 | 1.24 | 1.86 | 0.91 | −0.20 |
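The DIF column appears to be the post-test mean minus the pre-test mean. As a quick consistency check, the sketch below recomputes DIF from the rounded means in the table and flags any item that deviates from the reported value by more than 0.01. The names `REPORTED`, `mean_difference`, and `check_differences` are hypothetical, and the 0.01 tolerance assumes the authors derived DIF from unrounded means (which would explain why Q5, Q11, Q17 and Q19 differ from the rounded subtraction by 0.01). The sketch does not reproduce the significance test behind the asterisk on Q13, which this section does not specify.

```python
# Pre-test mean, post-test mean and reported DIF for items Q5-Q19,
# copied from the pre/post table (SD columns omitted).
REPORTED = {
    "Q5": (3.83, 4.03, 0.21),
    "Q6": (3.28, 3.53, 0.25),
    "Q7": (3.52, 3.34, -0.18),
    "Q8": (4.33, 4.19, -0.14),
    "Q9": (4.41, 4.03, -0.38),
    "Q10": (3.24, 3.17, -0.07),
    "Q11": (3.96, 3.86, -0.09),
    "Q12": (3.17, 3.53, 0.36),
    "Q13": (3.54, 3.00, -0.54),
    "Q14": (3.24, 2.81, -0.43),
    "Q15": (4.26, 4.19, -0.07),
    "Q16": (3.96, 3.93, -0.03),
    "Q17": (3.11, 3.22, 0.12),
    "Q18": (4.22, 4.19, -0.03),
    "Q19": (2.07, 1.86, -0.20),
}


def mean_difference(pre: float, post: float) -> float:
    """DIF as post-test mean minus pre-test mean, rounded to two decimals."""
    return round(post - pre, 2)


def check_differences(reported, tolerance=0.011):
    """Return {item: (recomputed, reported)} for items outside the tolerance.

    The tolerance is slightly above 0.01 to absorb floating-point error
    when comparing two-decimal values.
    """
    mismatches = {}
    for item, (pre, post, dif) in reported.items():
        recomputed = mean_difference(pre, post)
        if abs(recomputed - dif) > tolerance:
            mismatches[item] = (recomputed, dif)
    return mismatches
```

On these values, `check_differences(REPORTED)` returns an empty dictionary: every reported DIF agrees with the rounded means to within 0.01.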

4. Discussion and Conclusions

4.1. Practical Contributions

4.2. Limitations

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

- Dimension 1. Sociodemographic Identification Data.

- 1. Gender: Female, Male.

- Dimension 2. Peer Assessment Experiences.

- 2. How many times have you been assessed by a peer? None, Few, Several, Many.

- 3. Have you recently participated in peer assessment activities in the university context? Yes, No, I don’t remember.

- 4. If the answer to the previous question is affirmative, did the instructor take into account the peer’s grade when making the final assessment? Yes, No, I don’t remember.

- Dimension 3. Influential factors in peer assessment.

- Rate each question from 1 to 5 using the scale indicated for it:

- Scale for questions 5, 6, 7, 10, 11, 12, 13: 1 (strongly disagree), 2 (disagree), 3 (neutral), 4 (agree) and 5 (strongly agree).

- Scale for questions 8, 9: 1 (never), 2 (almost never), 3 (sometimes), 4 (almost always) and 5 (always).

- 5. Peer assessment has an impact on my learning and assists me in making improvements to the task (in the case of resubmission).

- 6. I believe my peers have sufficient knowledge to evaluate my performance on a task.

- 7. I think my peers are more qualified to evaluate aspects of presentation design than its content.

- 8. When a peer provides suggestions for improvement on a task, I listen carefully and take them into consideration.

- 9. When a peer gives me suggestions for improvement, I ask questions if I don’t understand something or disagree.

- 10. I don’t like being assessed by a peer; I prefer to be assessed by a teacher.

- 11. I prefer that assessments made by my peers are reviewed by the instructor before receiving them.

- 12. I understand my peers’ language when they evaluate me better than the way the instructor expresses it.

- 13. My relationship with my peer influences how they assess me.

- Dimension 4. Self-efficacy as an evaluator in peer assessment.

- Rate each question from 1 to 5 using the scale indicated for it:

- Scale for questions 14, 16, 17, 19: 1 (strongly disagree), 2 (disagree), 3 (neutral), 4 (agree) and 5 (strongly agree).

- Scale for questions 15, 18: 1 (never), 2 (almost never), 3 (sometimes), 4 (almost always) and 5 (always).

- 14. If the task to evaluate is produced by a very close peer (friend), I am likely to give them a higher score.

- 15. When it comes to assessing the tasks of other peers, if I don’t understand something, I try to ask them to clarify any doubts before grading.

- 16. I believe my suggestions are genuinely valuable for a peer to make improvements.

- 17. My current training is insufficient to effectively and meaningfully assess my peers’ work.

- 18. If I feel incapable of reviewing and providing helpful feedback on a peer’s task, I seek assistance from peers or the instructor.

- 19. I feel that my ability to assess my peers is lower than what they demonstrate when assessing me.
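Appendix A assigns each Likert item (questions 5 to 19) to one of two five-point response scales: agreement or frequency. A minimal sketch of coding raw responses into 1 to 5 scores under that assignment follows. The constant and function names are illustrative assumptions rather than the authors’ procedure, and no items are reverse-scored because the appendix does not say any were.

```python
# Response labels in ascending order; position + 1 gives the 1-5 code.
AGREEMENT = ["strongly disagree", "disagree", "neutral", "agree", "strongly agree"]
FREQUENCY = ["never", "almost never", "sometimes", "almost always", "always"]

# Scale used by each Likert item, following the Appendix A instructions.
ITEM_SCALE = {}
for q in (5, 6, 7, 10, 11, 12, 13, 14, 16, 17, 19):
    ITEM_SCALE[q] = AGREEMENT
for q in (8, 9, 15, 18):
    ITEM_SCALE[q] = FREQUENCY

# Dimension membership of the Likert items (Dimensions 3 and 4).
DIMENSIONS = {
    "Influential factors in peer assessment": range(5, 14),
    "Self-efficacy as an evaluator in peer assessment": range(14, 20),
}


def code_response(item: int, label: str) -> int:
    """Map a response label to its 1-5 code for the given item.

    Raises KeyError for an unknown item and ValueError for a label
    that does not belong to that item's scale.
    """
    scale = ITEM_SCALE[item]
    return scale.index(label.strip().lower()) + 1
```

The scale is stored per item rather than per dimension because both dimensions mix agreement items and frequency items (8 and 9 in Dimension 3; 15 and 18 in Dimension 4).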


| Number of Peer Assessment Participations by the Student | Gender | Count (%) | Total (%) |
|---|---|---|---|
| None/Few | Male | 15 (88.24%) | 53 (91.38%) |
| | Female | 38 (92.68%) | |
| Quite a few | Male | 2 (11.76%) | 5 (8.62%) |
| | Female | 3 (7.32%) | |
| Many | Male | 0 (0%) | 0 (0%) |
| | Female | 0 (0%) | |
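The percentages in the participation table are column percentages within each gender, and the Total column is the percentage of the whole sample (17 males and 41 females, 58 students). The sketch below recomputes them from the counts. It assumes the male None/Few count is 15, the only value consistent with the reported total (53 − 38) and with 88.24% of 17 males; `COUNTS` and `column_percentages` are hypothetical names.

```python
# Counts of prior peer assessment participations by gender.
COUNTS = {
    "None/Few": {"Male": 15, "Female": 38},
    "Quite a few": {"Male": 2, "Female": 3},
    "Many": {"Male": 0, "Female": 0},
}


def column_percentages(counts):
    """Percent of each gender, and of the whole sample, in each category."""
    genders = ["Male", "Female"]
    # Column totals per gender (17 males, 41 females here).
    gender_totals = {g: sum(row[g] for row in counts.values()) for g in genders}
    grand_total = sum(gender_totals.values())
    result = {}
    for category, row in counts.items():
        result[category] = {
            g: round(100 * row[g] / gender_totals[g], 2) for g in genders
        }
        # Share of the full sample falling in this category.
        result[category]["Total"] = round(100 * sum(row.values()) / grand_total, 2)
    return result
```

For example, `column_percentages(COUNTS)["None/Few"]` gives 88.24 for males, 92.68 for females and 91.38 overall, matching the table.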

| Dimensions | Items | Total | Description | Typology |
|---|---|---|---|---|
| Dimension 1. Sociodemographic Identification Data. | 1 | 1 | Aims to identify the gender of the participants. | Closed nominal dichotomous question. |
| Dimension 2. Peer Assessment Experiences. | 2, 3, 4 | 3 | Aims to determine the nature and amount of prior peer assessment experience of the participants. | Four-point Likert scale question (from none to many) (question 2) and closed nominal polytomous questions (questions 3 and 4). |
| Dimension 3. Influential Factors in Peer Assessment. | 5, 6, 7, 8, 9, 10, 11, 12, 13 | 9 | Aims to analyze whether knowledge and training influence peer assessment. | Closed nominal polytomous questions with five-point Likert scale response options (from strongly disagree to strongly agree and from never to always). |
| Dimension 4. Self-Efficacy as an Evaluator in Peer Assessment. | 14, 15, 16, 17, 18, 19 | 6 | Aims to understand how students perceive and use the suggestions for improvement made by their peers during peer assessment. | Closed nominal polytomous questions with five-point Likert scale response options (from strongly disagree to strongly agree and from never to always). |
| Total | | 19 | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gudiño, D.; Fernández-Sánchez, M.-J.; Becerra-Traver, M.-T.; Sánchez-Herrera, S. University Students’ Perceptions of Peer Assessment in Oral Presentations. Educ. Sci. 2024, 14, 221. https://doi.org/10.3390/educsci14030221
