1. Introduction

The most effective way of learning in a classroom is contested. Conventional didactic lectures remain the most common method of knowledge dissemination [1,2,3,4]. Rooted in a teacher-centred paradigm, the lecture involves the direct transmission of knowledge from instructor to students, typically through a structured, non-interactive format [5]. This method has been a mainstay in educational institutions for centuries, valued for its efficiency in covering extensive material within a limited timeframe. Its primary goal is to convey factual information and foundational concepts clearly and concisely, ensuring that students acquire the essential knowledge base required for further study [6].

In contrast, project-based learning (PBL) embodies a student-centred approach that emphasises active learning through engagement with real-world problems and collaborative projects [7]. PBL plays an important role in STEM education by shifting the focus from the teacher as the primary source of information to students as active participants in their learning journey through problem-based learning [8,9,10,11]. This interactive method, particularly in STEM subjects, encourages students to explore, inquire, and apply their knowledge to practical challenges, fostering critical thinking, creativity, and teamwork [12]. In a PBL environment, students are often given complex, open-ended tasks that require them to research, plan, execute, and present their findings. The teacher’s role becomes that of a facilitator or guide, providing support and resources while encouraging students to take ownership of their learning process [7,13,14].

In our previous study, we demonstrated that PBL was highly effective for the majority of biosciences students [12]. Most participants responded positively, finding the method engaging and conducive to deep learning. However, a notable minority expressed concerns regarding the formative assessment mode. Specifically, they highlighted unequal participation among group members, which led to perceptions of unfair outcomes. Some students felt that the lack of individual accountability within the group projects allowed certain members to contribute less while receiving the same grade as more active participants.

Building on these insights, our current study investigates the implementation of the same PBL approach in a Year 3 module of a biomedical science program, with a particular focus on enhancing fairness and accountability in group assessments. This iteration of the PBL method incorporated a structured peer assessment tool known as “Buddycheck” to address the previously identified issues of unequal participation [15].

1.1. Peer Assessment

Peer assessment, a process in which students evaluate each other’s contributions and performance qualitatively and/or quantitatively, has garnered significant attention in higher education [16,17,18]. This practice aligns with constructivist theories of learning, which posit that knowledge is constructed through interaction and collaboration. The theoretical underpinnings of peer assessment in group work are multifaceted, encompassing principles from educational psychology, collaborative learning theories, and assessment for learning paradigms. Peer assessment can also be beneficial within a community of practice, as discussed below.

1.2. Constructivist Learning Theory

Constructivist learning theory, as pioneered by Jean Piaget and Lev Vygotsky, emphasises that learning is an active, dynamic process where learners construct new knowledge by building upon their prior experiences. This theory highlights that knowledge is not passively received but actively created by the learner. Vygotsky’s concept of the zone of proximal development (ZPD) is a key aspect of this theory, emphasising that learners can achieve higher levels of understanding when assisted by more knowledgeable peers or instructors [19]. The ZPD suggests that peer interaction is crucial for fostering growth, as students often learn more effectively when working collaboratively with someone slightly more advanced than themselves.

In the context of peer–peer evaluation, Vygotsky’s theory becomes especially relevant. During peer assessment, students engage in reflective dialogue, offering and receiving constructive critique, which stimulates deeper understanding and cognitive development. This practice allows learners to function as both evaluators and recipients of feedback, which creates a rich, collaborative environment where learning is co-constructed through shared experiences and insights [20]. By taking on the role of both the assessor and the assessed, students internalise and reflect on their own knowledge, reinforcing their learning in a way that aligns with constructivist principles.

Furthermore, group PBL provides an ideal environment for this co-construction of knowledge. When students collaborate in PBL settings, they actively work together to solve complex, real-world problems, promoting higher-order thinking and reflection. Our previous study showed that such interactive processes in group work further support the constructivist view, demonstrating that learning is a communal endeavour in which shared efforts and experiences deepen understanding [12]. Through peer-to-peer engagement, learners develop not only academic skills but also critical thinking, problem solving, and collaboration, which are fundamental to lifelong learning.

1.3. Collaborative Learning Theory

Collaborative learning theory emphasises the social nature of learning, where knowledge is constructed through interaction and dialogue among learners. In this approach, students work together to solve problems, complete tasks, and create understanding, often in groups or teams [21]. Collaborative learning shifts the focus from individual performance to shared goals, encouraging learners to actively engage in each other’s cognitive processes.

Peer–peer evaluation plays a central role in this theory, as it fosters an environment of dialogue and reflection. When students assess each other’s work, they engage in meaningful discussions, offer constructive feedback, and reflect on both their own contributions and those of their peers. This reflective process is essential for developing critical thinking and evaluative skills [22,23,24]. Moreover, peer evaluation holds students accountable for their contributions and fosters mutual respect, as they become both assessors and learners [25].

Collaborative learning also promotes active participation and shared responsibility, making peer evaluation a key element in developing effective teamwork and communication skills [12,26]. By engaging in these activities, students learn how to give and receive feedback, articulate their ideas, and listen to differing perspectives, which are essential skills in academic and professional settings [18,21]. This collective approach not only enhances learning outcomes but also creates a more inclusive and engaging educational environment where students feel a stronger sense of ownership over their learning process.

1.4. Community of Practice Model

The community of practice (CoP) model, rooted in social learning theory, emphasises collaborative knowledge sharing and skill development within a group bound by shared interests and goals [27]. This model is particularly significant in PBL, where students engage in real-world projects that require collective problem solving and knowledge construction [28]. In a CoP, learners work together, leveraging each other’s strengths and experiences to achieve common objectives, which enhances their understanding and application of the subject matter. This collaborative approach not only creates a sense of belonging and mutual support but also facilitates deeper learning through active engagement and peer-to-peer interactions [29]. Project-based group learning embodies the CoP model by creating structured opportunities for students to collaborate, reflect, and iterate on their projects, thus promoting a continuous cycle of learning and improvement. Peer evaluation further enhances the CoP model in PBL by providing students with the opportunity to critique and learn from each other’s work. Through peer evaluation, students engage in reflective practice, receiving diverse perspectives that can uncover blind spots and highlight strengths in their projects [30]. This process encourages a culture of continuous feedback and improvement, reinforcing the collaborative nature of the CoP. It also empowers students to take ownership of their learning, as they become both evaluators and contributors to the collective knowledge of the group, driving higher levels of academic achievement and skill development essential for their academic and professional success.

1.5. Assessment for Learning (AfL)

Assessment for Learning (AfL) is an instructional approach that shifts the focus of assessment from mere evaluation to a tool for enhancing student learning. Instead of using assessments solely to measure outcomes, AfL integrates assessment into the learning process, helping students reflect, adjust, and improve their understanding [31]. The core idea is that assessment should guide both teachers and students in identifying gaps in learning and facilitating the growth necessary to achieve learning objectives.

Peer–peer evaluation fits seamlessly within the AfL framework, as it directly involves students in the assessment process. By taking part in peer evaluation, students engage more deeply with learning objectives and assessment criteria, gaining firsthand experience of what is expected of high-quality work. This participation helps them to develop critical self-regulation skills, as they monitor and assess both their own and their peers’ performance [15]. Through peer assessment, students can gain a clearer understanding of the subject matter by recognising the qualities of successful work and understanding what needs improvement.

Additionally, peer evaluation promotes the development of metacognitive skills, encouraging students to think about how they learn and how they can improve. As they assess their peers, students gain valuable insights into the steps needed to reach higher levels of achievement. This active involvement leads to a deeper understanding of the material and often results in improved academic performance [15].

1.6. Peer Assessment in Practice

Effective peer assessment in group learning is targeted and focuses on specific aspects of a peer’s performance and/or contribution, either in a formative or summative process [32]. It goes beyond simple praise or criticism, offering actionable suggestions that can guide improvement where applicable. However, the effectiveness of peer assessment in evaluating individual contributions to group work is debated [16]. Critics claim that peer assessment can result in unreliable and biased grading, including friendship marking, which causes overmarking, collusive marking, which prevents differentiation within groups, and parasite marking, where non-contributing students benefit from group marks [33,34]. Lejk and Wyvill (2001) suggest that peer-assessed marks often only slightly differ from equal allocation, failing to accurately represent individual contributions [35]. Peer assessment was also suggested to be insufficient for assessing individual efforts due to students’ reluctance to judge peers, especially friends, and fears of causing friction [36,37]. King and Behnke (2005) noted that in competitive grading systems, students might lower peers’ grades to improve their own standings, while unrestricted high grades can lead to inflated ratings [38].

Despite these challenges, peer assessment has been shown to enhance students’ learning experiences. Raban and Litchfield (2007) found that providing objective evidence of individual efforts enabled students to give more varied and accurate marks for group work [39]. This approach also increased acceptance of mark differentiation. Students prefer to base their evaluations on objective data, such as task allocation tables and interaction records [16]. Engaging with the feedback and scores from peer assessment encourages students to assess the work of others critically, helping them to identify strengths and weaknesses. This practice fosters analytical and evaluative skills, which are crucial for problem solving in group tasks [22]. More recently, summative peer assessment was shown to improve students’ academic performance [32]. Peer assessments in group work have also been shown to enhance student engagement by promoting active participation in modules with large cohorts [40]. Fair assessment of teamwork can promote peer collaboration and improve students’ ability to work effectively in teams [16]. However, bias and lack of fairness remain challenges in group peer assessment.

To address these issues, adjustments can be made to peer assessment practices. One approach is to base marks on the majority of peer scores, which dampens individual biases, and to exclude self-scores, which are often biased. Additionally, making comments formative and sharing them anonymously with individual students can improve the process by prompting self-reflection. Incorporating all activities, from group work to presentations, into the peer assessment helps scores reflect student contributions and participation more accurately. This helps ensure that non-contributing members do not receive undeserved high grades, while diligent contributors are appropriately rewarded. In the current project, we therefore used Buddycheck to mitigate the challenges and biases described above and to promote equity in marking.
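The aggregation idea above (follow the majority of peer scores, drop self-scores) can be sketched in a few lines of Python. This is a hypothetical illustration, not Buddycheck’s algorithm: it assumes ratings are stored as `ratings[rater][ratee]` and uses the median of each student’s peer scores as one way of following the majority view, so that a single unusually harsh or generous rater has little effect. The function name `peer_consensus` is illustrative.

```python
from statistics import median


def peer_consensus(ratings: dict[str, dict[str, float]]) -> dict[str, float]:
    """Return the median peer score received by each student.

    ratings[rater][ratee] is the score that `rater` gave to `ratee`.
    Self-ratings (rater == ratee) are skipped, so only teammates'
    scores count towards a student's consensus score.
    """
    received: dict[str, list[float]] = {}
    for rater, given in ratings.items():
        for ratee, score in given.items():
            if rater == ratee:
                continue  # exclude the self-score
            received.setdefault(ratee, []).append(score)
    # The median follows the majority view and resists a single outlier.
    return {student: median(scores) for student, scores in received.items()}
```

For example, if one member of a four-person team rates two teammates far lower than everyone else does, the medians of those two teammates are unchanged, and every self-score is ignored.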

1.7. Buddycheck

Buddycheck is a peer evaluation tool designed to ensure fairness and accountability in group work by allowing students to evaluate each other’s contributions within a group anonymously, both qualitatively and quantitatively. It emphasises equity by promoting objective evaluations and transparency, holding students accountable for their individual responsibilities and discouraging “social loafing” or “passenger behaviour” [16,41].

In the context of learning and pedagogy, “passenger behaviour” refers to students acting as passive recipients of information rather than active participants in the learning process. The metaphor likens such students to passengers who simply go along for the ride without engaging deeply with the material or contributing to the learning environment. It captures the difference between students working “as a group” and merely working “in a group” [42].

Utilising PBL with Buddycheck could mitigate passenger behaviour by enhancing students’ active participation through constructive criticism and formative feedback in peer evaluation [43]. Buddycheck supports continuous improvement and skill development, particularly in critical thinking and communication. It fosters group dynamics and collaborative learning, encouraging shared responsibility for group success. The tool aims to ensure reliability through standardised criteria and rubrics, is user-friendly, and integrates with learning management systems. Teachers can monitor and moderate assessments to maintain fairness and support students throughout the process.

In this study, we investigate whether the implementation of Buddycheck promotes equity, fairness, and enhanced participation in group work. We describe the design and implementation of Buddycheck in a summative group project in which students assess each other’s contributions within their group. Our findings show that student satisfaction increased, as evidenced by feedback collected on the group PBL task, and that overall project marks were higher after the introduction of Buddycheck in the academic year 2023/24 than in the preceding years. Positive student feedback suggests that the Buddycheck peer assessment system contributed substantially to achieving the module’s learning outcomes. This indicates that the structured peer evaluation process not only enhanced accountability and collaboration among students but also helped the educational goals to be met.

2. Methods

The PBL method was utilised in a final-year module, “Immunology in Health and Disease”. Initially, between 2019 and 2022, the PBL task was carried out in groups but without any peer assessment tool. In 2023, Buddycheck was first implemented and applied to the whole project, i.e., from the group work to the presentation itself. Note that the terms “peer assessment” and “peer evaluation” are used interchangeably throughout.

2.1. Structure

In this PBL approach, students were randomly divided into groups and tasked with creating a digital poster focused on a disease setting. The primary objective was to encourage collaborative study and knowledge consolidation as students integrated learning materials from the immunology module. Through peer tutoring, discussion, and reflective learning, group members shared their insights and knowledge. The final product was an interactive, accessible, and easily understood digital poster. Students then presented the poster as a group, which was assessed summatively. Whenever a student had accessibility issues or could not attend the presentation, they were able to record themselves (with or without video) and embed it onto the digital group poster.

Overall, there were two aims of implementing Buddycheck:

Enhance fairness and equity of the group work, as discussed below.

Improve students’ learning experience through enhanced participation in group activity.

2.2. Rationale

The rationale behind this project builds on our previous PBL task, in which students’ activity was not peer evaluated and was assessed formatively [12]. Here, the PBL task is carried out with peer evaluation and is summatively assessed. The objective was to deepen the surface knowledge acquired in lectures and seminars through the construction of a digital poster. Integrating the Buddycheck peer evaluation tool was crucial to enhance accountability, collaboration, and teamwork, and to counteract “passenger behaviour” [41]. This process aimed to enable a deeper understanding by encouraging students to make sense of what they had learned, thereby helping them to ‘create meaning and make ideas their own’ [44]. Within the cycle of knowledge construction, the project provided a setting in which the ‘learner observes a moderately complicated situation, makes connections, and builds up relationships to produce more sophisticated conceptions’ [45]. By synthesising their knowledge, students improved their ability to evaluate, organise, and filter material to produce digestible and accessible content. This approach also encouraged students to make their thinking visible, offering them the opportunity to ‘explicitly monitor their own learning, which encourages reflection and more accurately models the scientific process’ [46].

In addition to consolidating and evaluating their knowledge, the process of co-creation fostered a sense of community and cohort identity. Unlike the limited and pre-structured core laboratory group work, this task required open peer-to-peer dialogue and was undertaken outside of university contact hours. Students were prompted to manage their own time, organise meetings independently, and take ownership of their learning.

This PBL task was aimed at final-year (Year 3) students undertaking the immunology module. Constructing the poster directed students’ development towards the specific needs of higher education assessment [47]. The task also promoted an ethos of collegiality, which is especially pertinent to science subjects, where solving complex problems in isolation is challenging. As Li et al. (2020) highlight, PBL introduces students to the benefits of collaboration early in their scientific careers [48].

The Buddycheck peer evaluation system was used to allow students in each group to assess one another’s contribution and participation in the project. This peer-generated adjustment factor was then applied to the final group presentation grade to ensure fairness. For instance, if a student contributed less to the project, their final grade would be adjusted accordingly, preventing them from receiving the same mark as peers who put in more effort. This process helps to ensure that the final grades reflect individual contributions and maintain equity within the group.

2.3. Participants

In the 2023-24 academic year, a total of 103 students participated in the PBL project as part of the final-year Biomedical Science module, “Immunology in Health and Disease” (FHEQ level 6). Of these, 45 students completed the optional Buddycheck survey. It is important to note that this study did not collect or analyse demographic data, such as age, gender, ethnicity, or socio-economic background of the participants.

2.4. Student Activity

At the start of the module, students were introduced to the required coursework assignments. This included the group preparation and presentation of a scientific poster based on a current topical research article that fits within the content of the Immunology in Health and Disease module.

Students were given an introductory presentation outlining the requirements of the assignment alongside general pointers and guidance for success. This included highlighting the benefits of academic posters as an important scientific tool that facilitates rapid communication of scientific ideas, as well as the necessary real-world skills that will be developed by engaging with the assignment [49].

The importance of group work and teamwork was impressed on the students, not only as a principle of social constructivism and knowledge building but also as a mechanism to increase academic achievement compared with individualistic learning. These were highlighted as invaluable skills, useful throughout an academic career and valued by many future employers [50]. The students were given explicit guidance on what makes a successful scientific poster and which sections needed to be included. Students were also provided with a blank PowerPoint template on which to build their design and were given free rein over the visual design and scientific content of the poster. In addition, guidance was given on how to get the most out of teamwork, ranging from options such as regular weekly meetings and division of labour to advice on how to manage and resolve potential conflict.

Students were also introduced to peer evaluation and the use of Buddycheck as an educational tool. Guidance was given on how Buddycheck would be integrated within the assignment, and how this would adjust the group mark on an individual basis for each student based on the peer evaluation.

At the start of the module, the students were randomly assigned to fifteen groups of six or seven students, with each group assigned a current topical research article. During the first lecture, students were encouraged to meet their group members, while initial group communication was possible through the Canvas chat function. Students then worked until the assessment deadline to prepare their posters and presentations using their collaboration medium of choice.

2.5. Assessment and Feedback

A digital poster format was chosen to promote inclusivity [51]. For students with reasonable adjustments (for example, for anxiety) or absences, this format provided an option to record their narrated segment and embed it within the PowerPoint file, so that their teammates could play it back at the appropriate point during the presentation. Students were then given eight minutes to present their poster to two academic staff members, with two minutes devoted to questions. Using the marking criteria, the tutors awarded a group mark for each poster, which was entered into Canvas alongside feedback. Student groups were awarded a mark based on three criteria: visual impact (30%), scientific content (30%), and delivery of the poster (40%). The marking criteria are summarised in Table 1.
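As a worked example of how the three weighted criteria combine into one group mark, the sketch below assumes that each criterion is marked out of 100 and that the group mark is the weighted sum; the names `WEIGHTS` and `group_mark` are hypothetical. For instance, marks of 70, 60, and 65 for visual impact, scientific content, and delivery give 0.3 × 70 + 0.3 × 60 + 0.4 × 65 = 65.

```python
# Criterion weights as stated in the marking scheme (they sum to 1.0).
WEIGHTS = {"visual_impact": 0.30, "scientific_content": 0.30, "delivery": 0.40}


def group_mark(criterion_marks: dict[str, float]) -> float:
    """Combine per-criterion marks (each assumed to be out of 100) into one group mark."""
    if set(criterion_marks) != set(WEIGHTS):
        raise ValueError("expected exactly one mark per criterion: " + ", ".join(WEIGHTS))
    return sum(WEIGHTS[name] * mark for name, mark in criterion_marks.items())
```

Checking the keys first means a missing or misspelled criterion raises an error instead of silently lowering the mark.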

Following the presentations, students were invited through Buddycheck to participate in the peer evaluation process by answering predefined questions on five sections (keeping the team on track; interacting with teammates; related knowledge, skills, and abilities; expected quality; and contribution to the team’s work). Each student was also asked to score themselves, although this self-score played no part in the adjustment factor. Students were also asked to leave peer messages as part of the formative feedback process, which has been shown to enhance students’ learning [15,52]. Upon completion of the peer evaluation process, Buddycheck generated an individual adjustment factor for each student based on their contribution as perceived by their group peers. This adjustment factor was then used to adjust the group mark for each student, converting the assignment from a group assignment to an individual one. Buddycheck also emailed a comprehensive report to each student, including results from the peer evaluation in comparison with their self-evaluation scores, together with formative feedback and pointers on how to improve in the five evaluated sections. Students therefore received guidance not only from the tutors but also from their group via Buddycheck.

The tutor had complete oversight of the Buddycheck process before the adjusted marks and feedback reports were sent out to students. This enabled the tutor to check that the proposed adjustment factors were appropriate and to screen the peer messages. Any students with reasonable adjustments could also be withdrawn from Buddycheck at this point if the process would have negatively affected their grade. Buddycheck provided feedback labels that gave the tutor insight into the dynamics within each group. These highlighted high and low achievers, over- or under-confidence, conflict or tension, and attempts by individuals to skew the results. Buddycheck also gave students the option to send feedback directly to their tutor, including any concerns they had.

2.6. Buddycheck Settings

When setting up the Buddycheck peer evaluation, we decided to use the default peer evaluation questions. These are as follows: keeping the team on track; interacting with teammates; having related knowledge, skills, and abilities; expected quality; contributing to the team’s work; team satisfaction; team conflict.

The open question option was turned on to gather student feedback regarding the team and assessment in general. The peer messages option was also turned on to enable students to provide peer feedback messages for each group member.

The caps are the lower and upper limits on the adjustment factor, set at the start of the Buddycheck process; we used the default values of 0.5 and 1.05. Buddycheck uses its own algorithm to calculate the adjustment factor from the responses students provide. If any individual adjustment factor falls outside the range set by these caps, the system limits it to either 0.5 or 1.05, ensuring that adjustments stay within the defined boundaries (Table 2).
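To illustrate how the caps act, the sketch below computes a hypothetical adjustment factor for each student and clamps it to the range from 0.5 to 1.05. Because Buddycheck’s algorithm is not described here, the raw factor is an assumption: each student’s average peer rating (self-scores excluded) divided by the team’s average of those ratings. The function and parameter names are illustrative only.

```python
from statistics import mean

LOWER_CAP, UPPER_CAP = 0.5, 1.05  # default caps used in this study


def capped_adjustment_factors(peer_scores: dict[str, float],
                              lower: float = LOWER_CAP,
                              upper: float = UPPER_CAP) -> dict[str, float]:
    """Return one adjustment factor per student, clamped to [lower, upper].

    peer_scores maps each student to the average rating received from
    teammates (self-scores excluded). The raw factor, score / team average,
    is an illustrative assumption; Buddycheck's own formula may differ.
    """
    team_average = mean(list(peer_scores.values()))
    if team_average <= 0:
        raise ValueError("team average peer score must be positive")
    return {
        student: min(max(score / team_average, lower), upper)
        for student, score in peer_scores.items()
    }
```

With peer averages of 4, 4, 4, and 2, the raw factors are about 1.14 for the first three students and about 0.57 for the fourth; the upper cap trims the former to 1.05, while the latter lies inside the range and is left unchanged.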

Random groups of six or seven students for each poster/research article were created in Canvas and synced with Buddycheck. The Canvas group chat function was enabled to give students an instant means of group communication.

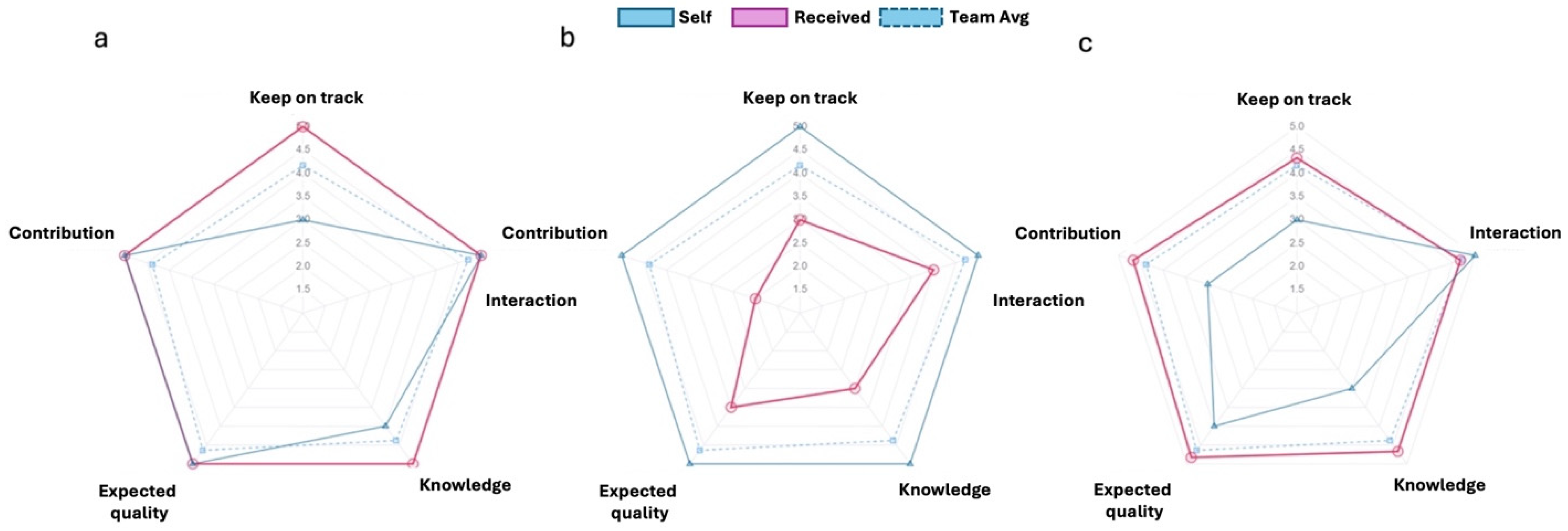

Students were given a week to complete the Buddycheck peer evaluation, after which the numerical results of the peer evaluation were applied. Student self-scores were excluded from the calculations, but students still received feedback comparing their self-score with their peers’ scores (Figure 1). The figure illustrates how students assess themselves and each other within their group. Self-scores are excluded from final grading because of potential bias, as students may either overrate (Figure 1b) or underrate (Figure 1c) themselves. To mitigate this bias, the scores given by the other group members are used to derive an adjustment factor, which is applied to the group mark to produce final marks (Table 2).

Where students underscore themselves (Figure 1c), Buddycheck would provide the following feedback after final marking is complete:

“Your self-ratings were significantly lower than your teammates. The members of your team have indicated that you were a highly effective team member. Please try not to minimize the value of your contributions to the team”.

Where students score themselves appropriately (Figure 1a), the feedback is:

“Congratulations! The members of your team have indicated that you were a highly effective team member. Keep up the good work!”
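The comparison behind these messages can be sketched as a simple classification of each student’s self-score against the score their peers gave them. The `tolerance` threshold and the function name `self_rating_category` are hypothetical; how Buddycheck decides that a self-rating differs “significantly” is not stated in this study.

```python
def self_rating_category(self_score: float, peer_score: float,
                         tolerance: float = 0.5) -> str:
    """Label a self-score relative to the consensus score from teammates.

    `tolerance` is a hypothetical threshold for a "significant" gap;
    it is not a documented Buddycheck setting.
    """
    gap = self_score - peer_score
    if gap > tolerance:
        return "overrated"    # self-score well above peers' view (cf. Figure 1b)
    if gap < -tolerance:
        return "underrated"   # self-score well below peers' view (cf. Figure 1c)
    return "appropriate"      # self-score close to peers' view (cf. Figure 1a)
```

The returned label could then be mapped to messages such as those quoted above; for example, a self-score of 3 against a peer score of 4.5 is labelled `"underrated"`.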

The weighting applied to the adjustment factor’s effect on final scores was set to 1 for both positive and negative adjustment factors. The ‘minimum grade is 0’ option was selected, as was the ‘grade cannot exceed maximum points’ option.
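The combined effect of these settings on an individual mark can be sketched as follows. The formula is an assumption made for illustration: the weighting is taken to scale how far the factor moves a mark away from the group mark, so with both weightings set to 1, as here, the individual mark is simply the group mark multiplied by the adjustment factor and then clamped to the range from 0 to the maximum points. Buddycheck’s exact calculation may differ, and `individual_mark` is a hypothetical name.

```python
def individual_mark(group_mark: float, factor: float, max_points: float = 100.0,
                    positive_weight: float = 1.0, negative_weight: float = 1.0) -> float:
    """Apply a peer adjustment factor to a group mark (illustrative formula).

    Assumption: the weighting scales the factor's deviation from 1, so with
    both weights at 1 (the setting used here) the result is group_mark * factor.
    """
    weight = positive_weight if factor >= 1 else negative_weight
    adjusted = group_mark * (1 + weight * (factor - 1))
    # Mirror the 'minimum grade is 0' and 'grade cannot exceed maximum points' options.
    return min(max(adjusted, 0.0), max_points)
```

For example, a group mark of 65 with a factor of 1.05 becomes 68.25, a factor of 0.5 halves it to 32.5, and a group mark of 98 with a factor of 1.05 is capped at 100.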

Once the tutor had screened the peer evaluation for the appropriateness of the scores and feedback, and any students with reasonable adjustments had been removed from the peer evaluation, the results could be applied to the group marks. This process enabled Buddycheck to adjust the group marks on an individual basis. An example of how a mark is adjusted using Buddycheck is given in Table 2.

Marks were released to students on Canvas alongside the release of the Buddycheck peer evaluation scores and feedback. This was accompanied by a general Canvas announcement to all students informing them how to access scores and feedback.

2.7. Student Feedback and Data Analysis

In this study, we conducted both qualitative and quantitative analyses. For the current PBL task, we collected qualitative student feedback using the default Buddycheck service evaluation form, which students voluntarily completed at the end of the activity. This form includes an open text box for additional comments, prompted by the question: “If you have anything else to say, please share it here”. The comments shared in the Results section are fully anonymous, and only the teacher had access to them. In the current PBL task, 45 of 103 students (44%) provided feedback on their experiences of engagement, participation, teamwork, communication, and collaboration within their group work. These areas were emphasised as key aspects for reflection throughout the task.

To facilitate a comparison with previous years’ PBL activities, we collected student feedback from an open-ended question, “Please share your experience working in your group while creating the poster”, which was part of an end-of-module survey and not a research-driven questionnaire. Two faculty members sorted through this feedback by searching for terms such as “group”, “group work”, “engage”, “team”, “teamwork”, and “equal”. Additionally, to ensure comprehensiveness, we individually read through the comments to identify those specifically focused on the PBL group activity and presentation. Student feedback was collected from the academic years 2021/22 and 2022/23, with approximately 30% of students sharing their responses. For the academic year 2023/24, the survey was not administered because a similar evaluation question was included in Buddycheck, which aligned closely with the format and purpose of the survey used in earlier years.

All feedback was then gathered and transferred into a spreadsheet for analysis. The data were reviewed, and the PBL task was evaluated in terms of its effectiveness across several key areas:

Fair and positive group dynamics: Assessing how equitably responsibilities were shared among group members and the overall cohesion and cooperation within the group.

Consolidation of knowledge: Evaluating the extent to which the PBL activity helped students integrate and deepen their understanding of the module content.

Developing communication skills: Determining the effectiveness of the activity in enhancing students’ abilities to communicate their ideas clearly and effectively, both within their groups and during the poster presentation.

2.8. Comparison to Previous Years

In this module, students’ project marks were analysed before and after the implementation of Buddycheck. A quantitative comparison was conducted between the results obtained in the same project (same module) in the academic years 2019/20–2022/23 (prior to the introduction of Buddycheck) and those from the year Buddycheck was implemented in 2023/24. Student results from these five years were retrieved from a school office database, anonymised, and then compared to determine whether students achieved higher grades following the introduction of Buddycheck in the PBL project presentation. The findings are presented as mean ± standard deviation. Using GraphPad PRISM (Version 10.1.0, Boston, USA), a Mann–Whitney (non-parametric) test was conducted to evaluate the statistical differences between the individual academic years “19–20,” “20–21,” “21–22,” and “22–23” in comparison to “23–24”, where a p value of less than 0.05 was considered statistically significant.
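The comparison above was run in GraphPad Prism; the Python sketch below only illustrates what a two-sided Mann–Whitney test of one earlier year against 2023/24 computes, together with the mean ± standard deviation summary. It is a minimal sketch under stated assumptions: it uses the normal approximation with a tie correction and no continuity correction, whereas Prism may report an exact p value for small samples, so p values need not match Prism's output. The function names and the marks are hypothetical placeholders, not the study's data; in the study, each of the four earlier years would be compared against 2023/24 in turn.

```python
import math
from collections import Counter
from statistics import mean, stdev


def rank_with_ties(values):
    """Return 1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of equal values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # convert 0-based positions to a 1-based rank
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return ranks


def mann_whitney(x, y):
    """Two-sided Mann-Whitney U test via the tie-corrected normal approximation.

    Returns (U, p), where U = min(U_x, U_y).
    """
    n1, n2 = len(x), len(y)
    combined = list(x) + list(y)
    ranks = rank_with_ties(combined)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u = min(u1, n1 * n2 - u1)

    n = n1 + n2
    tie_term = sum(t ** 3 - t for t in Counter(combined).values())
    variance = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if variance == 0:  # every mark identical: no evidence of a difference
        return u, 1.0
    z = (u - n1 * n2 / 2) / math.sqrt(variance)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return u, p


# Hypothetical marks for illustration only (not the study's data).
marks_by_year = {
    "22-23": [58, 62, 65, 55, 60, 63, 59, 61],
    "23-24": [66, 70, 64, 72, 68, 69, 71, 67],
}
reference = marks_by_year["23-24"]
for year, marks in marks_by_year.items():
    if year == "23-24":
        continue
    u, p = mann_whitney(marks, reference)
    print(f"{year}: {mean(marks):.1f} ± {stdev(marks):.1f} vs "
          f"{mean(reference):.1f} ± {stdev(reference):.1f}; U = {u:.0f}, p = {p:.4f}")
```

A rank-based test is used because it does not assume normally distributed marks; with larger cohorts, the normal approximation shown here is typically close to the exact result.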

3. Results and Discussion

This study builds on our previous PBL task, in which peer assessment was not used and the evaluation was formative. In the current iteration, peer assessment was integrated using the Buddycheck tool and applied summatively to enhance accountability, collaboration, and teamwork. The shift towards summative peer evaluation aimed to address “passenger behaviour” in group work, where some students contribute less to the overall project [41]. By implementing peer evaluation in PBL group work, we sought to deepen the knowledge students acquired through lectures and seminars by having them collaboratively construct digital posters. This approach promoted fair individual marking and enhanced students’ learning experience, as reflected in the student comments reported in the following sections.

3.1. Lack of Equal Participation from Previously Run PBL without Peer Evaluation or Buddycheck

In previous years, the PBL group work did not include peer evaluation, resulting in some dissatisfaction among students on the module. One student said,

“The group activity felt unproductive because not everyone was actively participating. The absence of peer evaluation may have contributed to this, as there was no accountability for each member’s contributions. It was unfair that some students did nothing but still received the same grade as the rest of us who put in the effort.”

Another student commented: “There was no accountability, and some students didn’t contribute as much as they could have, making the workload uneven”.

The students’ concerns about unequal participation point to a common challenge in group projects. When some members contribute less than others, it can lead to frustration and an imbalanced workload, potentially creating a negative group dynamic [12]. Students also reported poor communication within groups, possibly owing to the lack of accountability.

One student said, “Students didn’t engage much when reaching out to them”.

Another said, “…I had no problem with my team but students should be monitored individually to see if they are working or if they are slacking off and leaving their work for the rest of the team to do”.

Formalising equal participation can help mitigate these issues, promoting fairness and accountability. When equal participation is a formal requirement, students are likely to feel more compelled to engage fully with the project. This can enhance teamwork, as each member understands their role and responsibility within the group. Formal assessment of participation can drive better collaboration, as students recognise that their individual contributions will directly affect their grades [53].

Incorporating peer evaluation as a formal part of the grading process ensures that students are aware their contributions will be evaluated by their peers [54]. This can motivate students to participate more actively and equitably. Peer evaluation (e.g., Buddycheck) provides a structured way to monitor and evaluate each member’s involvement, making it clear that equal participation is a key component of the project’s success [43].

To address these concerns, we introduced Buddycheck, a peer evaluation tool that allows students to evaluate each other within their groups. This evaluation directly influences the final project mark, including the grade for the poster presentation. Its introduction notably improved the student experience, as the feedback below illustrates.

3.2. Fair and Positive Group Dynamics through Effective Teamwork

Students consistently noted the importance of equal input and mutual respect among group members.

One student remarked, “We all had an equal input into the poster and presentation. There was never any conflict and I would work with the team again in a heartbeat”. This sense of equality ensured that every member felt valued and responsible for the group’s success, fostering a collaborative atmosphere where diverse ideas were welcomed and considered. The absence of conflict further indicated that members were respectful and supportive, creating a positive and productive working environment [55].

Effective communication was a cornerstone of the positive group dynamics observed. One student stated, “No one was scared to speak up and everyone was very respectful to others”. Open communication channels within the group encouraged active participation and the free flow of ideas. When members felt safe to express their thoughts and concerns without fear of criticism, it promoted a constructive and inclusive dialogue. Respectful interactions helped maintain harmony and facilitated the exchange of constructive feedback.

Regular and productive meetings were indicative of a well-functioning group. A student noted, “Regular scheduled meetings were productive. People showed up to meetings with materials ready and eager to do good work and share thoughts”. This level of commitment and preparedness was essential for maintaining momentum and ensuring efficient task completion. When members came to meetings ready to contribute, it not only enhanced productivity but also demonstrated a collective dedication to the project’s success [55].

While varying levels of engagement were observed, a supportive group dynamic ensured that this did not negatively impact the overall outcome. As one student observed, “Some people did not engage as highly as the others but nobody did anything that would jeopardize the presentation or the group’s grade”. This adaptability and mutual support allowed groups to navigate different levels of participation without compromising the quality of the work. It highlighted the importance of understanding and accommodating individual differences while maintaining a focus on the group’s shared goals.

The statement, “We worked really well as a team, and everyone contributed equally”, exemplified balanced contribution and strong team cohesion. When all members actively participated and shared responsibilities, it led to a more integrated and cohesive final product. Equal contribution not only distributed the workload fairly but also ensured that the collective knowledge and skills of the group were fully utilised [56]. This also reflects key principles of the community of practice (CoP) model, where learning occurs through active participation, collaboration, and the sharing of knowledge among group members who work towards a common goal [57]. When all members contribute equally, it demonstrates the successful distribution of roles and tasks, a hallmark of an effective CoP, in which learning is co-constructed through interaction [58].

3.3. Consolidation of Knowledge through Teamwork

One student remarked, “I enjoyed this coursework, it helped me to develop my communication and teamwork skills and build on my scientific knowledge, be more creative”. This feedback underscores the multifaceted benefits of PBL. The collaborative nature of the project required students to articulate their ideas clearly and work cohesively with their peers. As a result, they not only deepened their understanding of scientific concepts but also honed their ability to communicate effectively and work as part of a team.

Another student noted the balanced contributions within the group: “Overall I really enjoyed working with this group, everyone put equal effort into organising meetings and making sure people were on track—each person was knowledgeable and didn’t have to ask people to do work at any point as everyone stayed on track!”. This comment highlights the importance of mutual respect and responsibility in the learning process. The equal effort from all members ensured a fair distribution of work and fostered an environment where knowledge was actively shared and reinforced [56]. Such an approach not only solidifies individual understanding but also enhances the collective competence of the group.

Positive team dynamics played a crucial role in the successful consolidation of knowledge. One student shared, “I loved my team members as they were so friendly. I enjoyed doing this poster presentation as all of my team members worked hard and we never got into disagreement. Everyone made time to meet, summarise, practice, go through each other’s parts and help each other. It was the best teamwork that I ever had”. The friendly and supportive atmosphere allowed students to freely share their insights and learn from one another [59]. Regular meetings and collaborative practice sessions ensured that everyone was well prepared and confident in their understanding of the material. This cooperative spirit not only facilitated deeper learning but also built a strong foundation for future collaborative endeavours.

Moreover, this comment suggests that “positive interdependence” was a key factor in facilitating effective collaboration and knowledge consolidation among students [60]. The positive influence of interdependence on student achievement has been widely documented in the literature [61,62,63]. Our students’ feedback highlights the importance of a sense of accomplishment in learning, likely influenced by both intrinsic motivation and the summative nature of the peer assessment and poster presentation, as engagement was low in previous assessments without peer assessment. In this context, the prospect of higher grades seems to have motivated students to engage more effectively in group work and learning. This observation aligns with previous findings [64,65] that rewards, such as grades, play a significant role in enhancing collaboration. However, it partially contrasts with Scager et al. (2016), who found that students valued the learning process itself, which led to greater collaborative effort, suggesting that intrinsic motivation may also drive successful teamwork [60].

The creative aspect of the project, such as designing a poster presentation, also played a significant role in knowledge consolidation [12]. The opportunity to creatively express scientific concepts helped students internalise and present their knowledge in a clear and engaging manner. By summarising and explaining complex information, students were able to reinforce their own understanding and contribute to the collective learning of the group.

3.4. Developing Communication Skills

One student noted, “Due to the fact that the whole task was assessed and everyone in the group knew they’d be assessed for their contribution, everyone worked equally as hard...No conflict had arisen, & when asked to do something, everyone would deliver and communicate well. Everyone got in contact very early, so we were able to make constant progress across the past 2 months”. This statement underscores how the peer-assessed PBL approach enhanced students’ communication abilities. Engaging in regular discussions and collaborative tasks required students to clearly articulate their ideas and listen actively to their peers, thereby refining their ability to convey complex information effectively.

Another student remarked, “Except from one person, everyone contributed to the project and made an effort to get the work done by specific times. It was easy working with my team, as everyone communicated efficiently regarding the meeting times. Everyone felt comfortable giving colleagues constructive feedback”. Efficient communication about logistical details, such as meeting times, facilitated smoother collaboration and ensured that the group stayed on track. Additionally, the comfort in providing constructive feedback indicates a mature communication environment where students could discuss and improve their work candidly and respectfully.

The comment, “Amazing group, everyone was accommodating and worked well, never ran into any situations everyone was communicative and productive”, highlights the importance of continuous and inclusive communication. When all group members actively participate and share information openly, it prevents misunderstandings and ensures that everyone is aligned with the group’s goals. This level of engagement fosters a productive and harmonious working environment.

A student noted, “We worked really well as a team, and everyone contributed equally. I would have liked to have more meetings to prepare and rehearse as a team, but we had good communication on group chats almost daily”. The use of digital communication tools facilitated regular interaction and coordination among team members, demonstrating how technology can enhance communication in group projects. Daily communication helped maintain a constant flow of information, enabling the group to address issues promptly and keep everyone informed about progress and changes.

Increased engagement in group learning is widely recognised as closely tied to enhanced communication among team members. A fundamental aspect of PBL is the need for effective, consistent communication, with all participants working towards a common goal, an inherent principle of the community of practice model. The summative peer assessment process likely reinforced this engagement further, sustaining active participation throughout the task. The positive interdependence discussed above may also have contributed to improved communication within the groups. This observation is consistent with findings from studies on group learning, which emphasise the importance of interdependence in promoting teamwork and communication [66,67,68,69]. However, van Gennip et al. (2010) reported that although collaboration and interdependence enhance group dynamics such as communication and information sharing, they do not necessarily result in deeper conceptual understanding or perceived learning gains [22]. Future research should therefore explore whether peer-assessed PBL tasks improve interpersonal and transferable skills, such as communication and teamwork, through a more detailed analysis of the learning outcomes associated with these group processes.

3.5. Assessment of Project Marks in the Module over Five Years

We compared the project marks of students who used the Buddycheck peer evaluation system in 2023–2024 with those from the previous four years, when Buddycheck was not implemented (Table 3). The data indicate a statistically significant improvement in project marks this year, coinciding with the introduction of Buddycheck. This improvement is consistent with positive feedback from students, who reported greater enjoyment of and engagement in group activities.

The comparative analysis of project marks revealed that the integration of Buddycheck corresponded with higher average scores. A recent meta-analysis reported that peer assessment improved students’ academic performance in formative assessments [17]. Peer assessment has also been shown to improve academic scores in summative collaborative group work [32], promoting “fairness” in marking [70] and reducing social loafing [71]. Constructivist learning theories suggest that active involvement in learning processes, such as through peer assessment, enhances students’ cognitive development and retention of information [72]. Together with the student feedback, the improved marks suggest that students engaged more deeply with the content and also developed essential skills such as teamwork, communication, and self-regulation.

4. Challenges in This PBL Task and Peer Assessment

From a pedagogical and theoretical perspective, one limitation of using Buddycheck (a peer evaluation tool) in PBL tasks is that students often underestimate or overestimate themselves and others. This phenomenon can be understood through the lens of social comparison theory, in which individuals assess their own abilities by comparing themselves with others, often leading to biased self-assessments and peer assessments [73]. Such biases, whether stemming from the Dunning–Kruger effect (overestimating one’s abilities) or imposter syndrome (underestimating one’s abilities), are difficult to avoid and can skew the accuracy of the assessments [74,75].

Self-evaluation marks from Buddycheck are not included in the final grade, and potential bias in the peer ratings is mitigated through a structured peer evaluation process. Peer evaluations encourage students to provide more balanced and reflective feedback, fostering a deeper understanding of their own and their peers’ contributions [15]. This approach aligns with constructivist learning theories, which emphasise the importance of active engagement and reflection in the learning process. By incorporating peer evaluations, the tool supports a more collaborative and self-regulated learning environment, helping to balance out individual biases and ensuring a fairer assessment of each student’s performance.

There were instances where we had to create groups larger than the intended size of 4–5 students. This led to some dissatisfaction among a minority of students. A student remarked, “7 people in a group for a project of this size is way too many in my opinion, would have much preferred around 5. It becomes difficult to organise a group that big”. This feedback highlights a limitation associated with the current group size configuration.

The primary reason for the larger groups of 6–7 students is the large cohort size: smaller groups would substantially increase the number of groups, making management and oversight more challenging. Logistical issues also arise when students join the course late or return from a year abroad or a professional/research placement, requiring their integration into existing groups. In future years, we will mitigate this by allocating additional groups for returning students.

A minority of students continued to neglect their tasks, possibly a habit carried over from previous activities in which tools such as Buddycheck or peer evaluation were not used. Several student comments highlighted this ongoing issue.

One student remarked, “The group work was challenging because it was difficult to get everyone to meet up at the same time, and people needed reminding to put their parts on the poster”. This reflects the logistical difficulties in coordinating schedules and ensuring consistent contributions from all group members [60].

Another student noted, “Not all group members finished their parts at the time the group agreed on, which left very limited time for recommending improvements by other members and checking for the overall flow of the poster”. This feedback underscores the negative impact of delayed contributions on the quality of the final product. When individual tasks are not completed on time, the group cannot collaboratively refine the project, which affects its cohesiveness and overall quality. This could also be due to “social loafing”, where members rely on others to complete the task [76]. Such “free riding” can undermine students’ experience of group learning.

To address these challenges, incorporating peer evaluation tools like Buddycheck in earlier semesters can be beneficial [77]. Educational theories on collaborative learning, such as Johnson and Johnson’s cooperative learning theory, emphasise the importance of individual accountability and positive interdependence in group work [78]. Peer evaluation enhances accountability by making students aware that their contributions will be reviewed by peers, motivating them to meet deadlines and participate actively, thereby reducing task neglect [79]. It ensures timely task completion, which is crucial for meaningful feedback and maintaining project quality. Consistent use of peer evaluation helps develop essential skills such as time management, collaboration, and constructive feedback, which are vital for academic and professional success. Additionally, it promotes collective responsibility, leading to more effective collaboration and a stronger commitment to group goals.

6. Future Directions

In future iterations of our teaching practice, we plan to reassess students in subsequent modules, incorporating insights from peer assessments conducted during the current project-based learning (PBL) module. This approach will encourage students to engage in self-reflection and enhance their performance in theoretical and practical aspects.

We also aim to assess the long-term impacts of the Buddycheck peer assessment tool on students’ skill development beyond group work. Specifically, we are interested in whether the insights gained through peer feedback contribute to the enhancement of critical soft skills, such as communication, teamwork, and self-regulation, in a broader academic or professional context within science subjects. By examining how students apply peer feedback in future courses or real-world settings, we hope to evaluate whether the formative and summative peer assessment process fosters continuous improvement and personal growth over time. This analysis may help inform the integration of peer assessment as a sustained pedagogical tool in skill development curricula.

Additionally, we intend to conduct a detailed analysis of intra-group scores, focusing on whether high- or low-achieving students display biases when evaluating peers of different achievement levels. For instance, we will examine whether low-achieving students grade low- and high-achieving peers differently, and vice versa.

Cultural factors can significantly influence the effectiveness of peer assessment by shaping group dynamics and perceptions of feedback. Communication styles vary, with some cultures favouring direct feedback while others prioritise politeness and harmony, potentially leading to reluctance to give critical assessments [80]. In hierarchical cultures, peer feedback may be undervalued compared with instructor feedback, whereas more egalitarian cultures tend to embrace it [81]. Group work norms, which vary with cultural emphasis on collaboration versus individual achievement, also affect engagement with peer assessment [82]. Additionally, gender dynamics and differing interpretations of feedback can introduce biases [83]. Considering these cultural nuances is essential to ensuring that peer assessment is both effective and equitable, and we intend to explore them in future work.

Another key area of interest is the role of self-assessment. While often biased, it remains crucial to investigate how students perceive their own contributions and how these self-assessments correlate with objective academic outcomes, such as exam performance. These findings could provide valuable insights into the types of support and training that may be beneficial to better prepare students for future academic and professional challenges, particularly in STEM. Future research should aim to address these questions by including larger, more diverse samples, employing longitudinal designs, and implementing strategies to minimise bias in peer evaluations.

7. Conclusions

The implementation of project-based learning in conjunction with the peer evaluation tool Buddycheck has proven to be an effective strategy in enhancing student engagement, accountability, and satisfaction in STEM education. By allowing students to assess each other’s contributions within small groups, Buddycheck introduced a fair and equitable grading system that reflected individual performance, thereby increasing student buy-in and participation. Additionally, the process facilitated knowledge consolidation and the development of crucial skills such as communication, further enriching the learning experience.

Our findings also indicate an improvement in overall marks following the introduction of peer evaluation, suggesting that this approach not only benefits student experience but also positively impacts academic outcomes. However, we acknowledge certain limitations in our current methodology. To address these, future iterations of this approach will incorporate peer observation and more comprehensive pedagogical strategies to refine and enhance the effectiveness of the peer evaluation process. Through these adjustments, we aim to further optimise the learning environment and continue improving student outcomes in PBL settings.