The Good and Bad of AI Tools in Novice Programming Education

Abstract

1. Introduction

1.1. Literature Review

1.2. Aims and Research Questions

2. Methodology

2.1. Participants

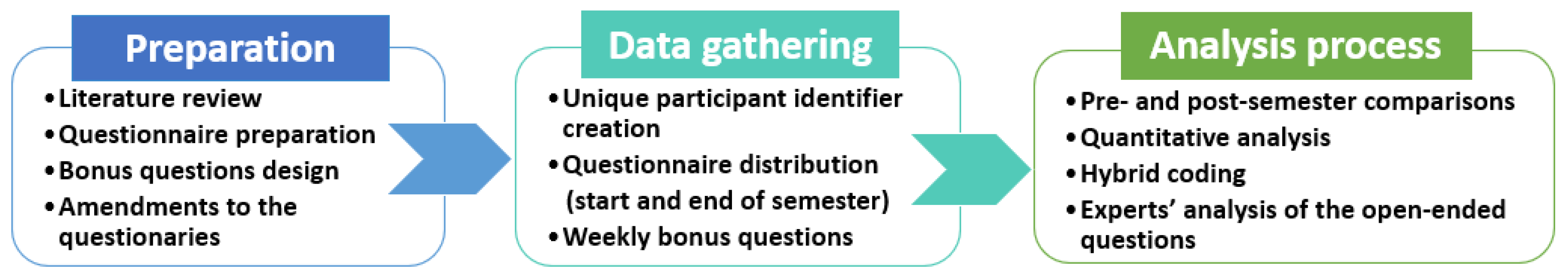

2.2. Procedure and Data Analysis

2.3. Data Analysis

3. Results

3.1. Familiarity with AI Tools

3.2. Dynamics of AI Tool Integration

3.3. Student Satisfaction with AI Tools

3.4. Common AI Tool Tasks and Prevalence

3.5. Benefits and Concerns of AI Tool Usage

4. Discussion

4.1. General Discussion

4.2. The Good

4.3. The Bad

5. Conclusions

6. Limitations

7. Future Research

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

- I feel familiar with AI tools usage (Likert scale from 1 to 5). (This question was given at the beginning of the course and again at the end of the course.)

- I feel comfortable with usage of AI tools in this assignment (Likert scale from 1 to 5). (This question was given only for assignments that required the use of AI tools.)

- Which tools did you use: ____________________

- I used AI tools during this assignment (yes/no). (This question was given only for assignments that did not require the use of AI tools.)

- Query language: I used only English, only Hebrew, both English and Hebrew, other language ____

- I was happy with the results provided by AI tools (Likert scale from 1 to 5).

- I am concerned that I may not have enough time to complete the assignment without the help of AI tools (Likert scale from 1 to 5).

- I used AI tools during this assignment for the following tasks ____________________ (Note: Tasks that the assignment itself required were excluded from the analysis of this question.)

- Provide a screenshot of the good prompt (a compulsory question in all assignments where students were asked to use AI tools).

- Provide a screenshot of the bad prompt (a compulsory question in all assignments where students were asked to use AI tools).

- Describe the benefits and concerns about using AI tools in your studies, personally. (This question was given in the middle of the course and again at the end of the course.)


| Response | Pre-Semester Frequency | Pre-Semester Percent | Post-Semester Frequency | Post-Semester Percent |
|---|---|---|---|---|
| strongly disagree (1) | 33 | 45.2 | 0 | 0 |
| disagree (2) | 16 | 21.9 | 0 | 0 |
| neutral (3) | 4 | 5.5 | 0 | 0 |
| agree (4) | 13 | 17.8 | 39 | 53.4 |
| strongly agree (5) | 7 | 9.6 | 34 | 46.6 |
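Each Percent value in the pre-/post-semester table above is the corresponding frequency divided by the number of respondents. The following Python sketch recomputes those percentages as a consistency check. It assumes that the respondent count N is the column total, which is 73 in both columns. The function name `to_percent` is hypothetical and is used only for illustration.

```python
# Minimal sketch: recompute the "Percent" columns of the pre/post familiarity
# table from its "Frequency" columns. Assumption: N is the column total
# (73 in both columns); percentages are rounded to one decimal place.

def to_percent(frequencies: dict[str, int]) -> dict[str, float]:
    """Convert raw response counts into percentages of the column total."""
    n = sum(frequencies.values())
    return {label: round(100 * count / n, 1) for label, count in frequencies.items()}


pre_semester = {
    "strongly disagree (1)": 33,
    "disagree (2)": 16,
    "neutral (3)": 4,
    "agree (4)": 13,
    "strongly agree (5)": 7,
}
post_semester = {
    "strongly disagree (1)": 0,
    "disagree (2)": 0,
    "neutral (3)": 0,
    "agree (4)": 39,
    "strongly agree (5)": 34,
}

# Print N and the recomputed percentages for each column.
for name, column in (("Pre-Semester", pre_semester), ("Post-Semester", post_semester)):
    print(name, sum(column.values()), to_percent(column))
```

With N = 73, the recomputed values match the published Percent columns. For example, 33 / 73 × 100 ≈ 45.2. The same check applies to the week-by-week tables, whose columns also sum to 73.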

| Response | Week 3 Frequency | Week 3 Percent | Week 7 Frequency | Week 7 Percent | Week 10 Frequency | Week 10 Percent |
|---|---|---|---|---|---|---|
| strongly disagree (1) | 0 | 0 | 0 | 0 | 0 | 0 |
| disagree (2) | 0 | 0 | 0 | 0 | 0 | 0 |
| neutral (3) | 4 | 5.5 | 3 | 4.1 | 2 | 2.7 |
| agree (4) | 34 | 46.6 | 33 | 45.2 | 31 | 42.5 |
| strongly agree (5) | 35 | 47.9 | 37 | 50.7 | 40 | 54.8 |

| Response | Week 2 Frequency | Week 2 Percent | Week 6 Frequency | Week 6 Percent | Week 9 Frequency | Week 9 Percent | Week 11 Frequency | Week 11 Percent |
|---|---|---|---|---|---|---|---|---|
| yes | 24 | 32.9 | 30 | 41.1 | 30 | 41.1 | 42 | 57.5 |
| no | 49 | 67.1 | 43 | 58.9 | 43 | 58.9 | 31 | 42.5 |

| Response | Week 3 Frequency | Week 3 Percent | Week 7 Frequency | Week 7 Percent | Week 10 Frequency | Week 10 Percent |
|---|---|---|---|---|---|---|
| strongly disagree (1) | 0 | 0 | 0 | 0 | 0 | 0 |
| disagree (2) | 3 | 4.1 | 1 | 1.4 | 0 | 0 |
| neutral (3) | 10 | 13.7 | 6 | 8.2 | 3 | 4.1 |
| agree (4) | 31 | 42.5 | 34 | 46.6 | 35 | 47.9 |
| strongly agree (5) | 29 | 39.7 | 32 | 43.8 | 35 | 47.9 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zviel-Girshin, R. The Good and Bad of AI Tools in Novice Programming Education. Educ. Sci. 2024, 14, 1089. https://doi.org/10.3390/educsci14101089

Zviel-Girshin R. The Good and Bad of AI Tools in Novice Programming Education. Education Sciences. 2024; 14(10):1089. https://doi.org/10.3390/educsci14101089

Chicago/Turabian Style: Zviel-Girshin, Rina. 2024. "The Good and Bad of AI Tools in Novice Programming Education" Education Sciences 14, no. 10: 1089. https://doi.org/10.3390/educsci14101089

APA Style: Zviel-Girshin, R. (2024). The Good and Bad of AI Tools in Novice Programming Education. Education Sciences, 14(10), 1089. https://doi.org/10.3390/educsci14101089