Validation of the University Student Engagement Questionnaire in the Field of Education

Abstract

1. Introduction

2. Student Engagement Questionnaire (SEQ)

- The analysis of the skills that university students must acquire during the learning process.

- The evaluation of the learning environment that educators establish in the classroom to facilitate the acquisition of these skills.

3. Materials and Methods

3.1. Participants

3.2. Instrument

3.3. Procedure

3.4. Data Analysis

4. Results

4.1. Descriptive Statistics

4.2. Internal Consistency Analysis

4.3. Exploratory Factor Analysis

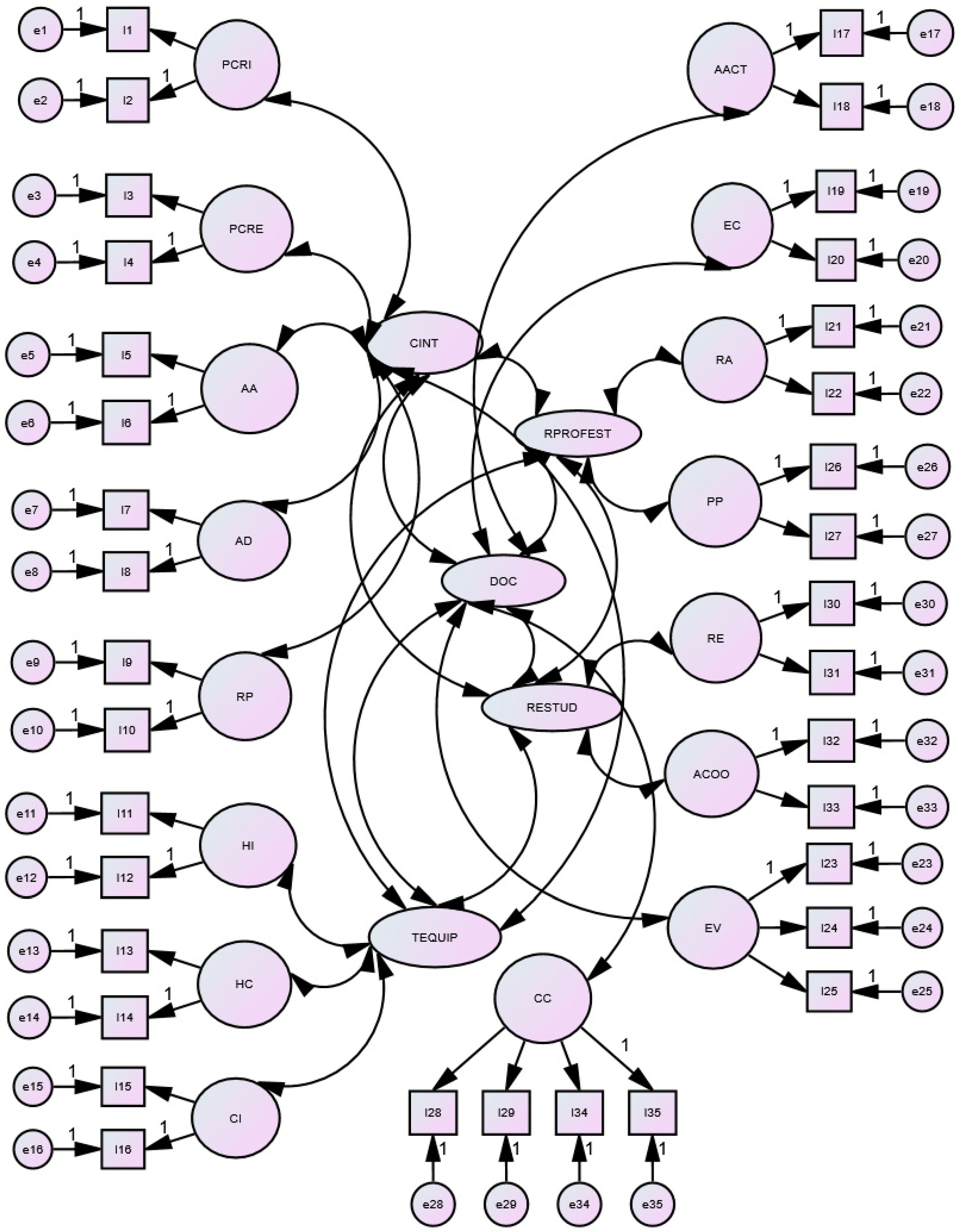

4.4. Confirmatory Factor Analysis

- Bentler’s CFI (Comparative Fit Index), which is based on an earlier index called the BFI, is corrected so that it cannot take values outside the range between 0 and 1. Values of about 0.95 or higher are generally taken to indicate that the model fits the data adequately. In this study, a value of 0.937 was obtained, which is close to this threshold and suggests an acceptable fit of the model to the data (see Table 8).

- The RMSEA (Root Mean Square Error of Approximation) estimates the model's lack of fit per degree of freedom. A value below 0.05 is taken to indicate a good fit, and this conclusion is strengthened when the 90% confidence interval (CI) lies between 0 and 0.05. The value obtained is 0.044, which is below 0.05 and therefore indicates a good fit. The 90% confidence interval, from 0.041 to 0.048, also lies entirely below 0.05, which further supports this conclusion (see Table 9 and Figure 1).
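
The CFI and RMSEA criteria above can be made concrete with their common textbook definitions. The Python sketch below is illustrative only and is not the authors' procedure: the function names are hypothetical, noncentrality is assumed to be estimated as max(χ² − df, 0), and the chi-square values in the usage lines are invented, since the article reports only the resulting indices. The last usage line applies the "whole interval below 0.05" rule to the 90% bounds reported in Table 9.

```python
import math


def cfi(chi2_model: float, df_model: int, chi2_null: float, df_null: int) -> float:
    """Bentler's Comparative Fit Index, bounded to the range [0, 1]."""
    # Estimated noncentrality of the tested model, truncated at zero
    d_model = max(chi2_model - df_model, 0.0)
    # Noncentrality of the independence (null) model, never smaller than d_model
    d_null = max(chi2_null - df_null, d_model)
    if d_null == 0.0:
        return 1.0  # neither model shows misfit, so fit is treated as perfect
    return 1.0 - d_model / d_null


def rmsea(chi2_model: float, df_model: int, n: int) -> float:
    """Root Mean Square Error of Approximation: misfit per degree of freedom.

    Uses N - 1 in the denominator; some programs use N instead.
    """
    return math.sqrt(max(chi2_model - df_model, 0.0) / (df_model * (n - 1)))


def ci_below_cutoff(lo90: float, hi90: float, cutoff: float = 0.05) -> bool:
    """True when the whole 90% CI of the RMSEA lies between 0 and the cutoff."""
    return 0.0 <= lo90 <= hi90 < cutoff


if __name__ == "__main__":
    # Hypothetical chi-square values (not reported in the article); N = 561
    print(round(cfi(300.0, 200, 3000.0, 230), 3))
    print(round(rmsea(300.0, 200, 561), 3))
    # 90% interval bounds reported in Table 9
    print(ci_below_cutoff(0.041, 0.048))
```

Because conventions differ between programs (for example, N versus N − 1 in the RMSEA denominator), small discrepancies from software output are to be expected.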

5. Discussion

- Diagnosis of students’ capacities at the beginning of the course, at the end of a specific timeframe, before or after an intervention, etc.

- Evaluation of teaching during an academic period, in order to establish new guidelines, etc.

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Items | Strongly Disagree | Disagree | Undecided | Agree | Strongly Agree |
|---|---|---|---|---|---|
| I have developed my capacity to judge alternative perspectives. | | | | | |
| I have become more willing to consider alternative perspectives. | | | | | |
| I have been motivated to use my own initiative. | | | | | |
| I have been challenged to come up with new ideas. | | | | | |
| I feel I am able to take responsibility for my own learning. | | | | | |
| I have greater confidence in my capacity to continue learning. | | | | | |
| In this subject I have learned to be more adaptable. | | | | | |
| I have become more willing to change my perspective and accept new ideas. | | | | | |
| I have improved my ability to use knowledge to solve problems in my field of study. | | | | | |
| I am able to offer information and different ideas to solve problems. | | | | | |
| I have developed my ability to communicate effectively with others. | | | | | |
| In this subject I have improved my ability to transmit ideas. | | | | | |
| I have learned to be an effective team member during group work. | | | | | |
| I feel confident dealing with a wide range of people. | | | | | |
| I feel confident using computer applications when necessary. | | | | | |
| I have learned more about using computers to present information. | | | | | |
| The teacher uses a variety of teaching methods. | | | | | |
| Students are given the opportunity to participate in classes. | | | | | |
| The teacher makes an effort to help understand the course material. | | | | | |
| The design of the course helps students understand its contents. | | | | | |
| The explanations given by the teacher are useful when I have difficulties with the learning materials. | | | | | |
| There is enough feedback on activities and tasks to ensure that we learn from the work we do. | | | | | |
| The subject uses a variety of evaluation methods. | | | | | |
| To do well when being evaluated in this subject you need to have good analytical skills. | | | | | |
| The evaluation assesses our understanding of the key concepts in this subject. | | | | | |
| There is good communication between the teacher and students. | | | | | |
| The teacher helps when asked. | | | | | |
| I am able to complete the programme requirements without feeling excessively stressed. | | | | | |
| The workload assigned is reasonable. | | | | | |
| I have a strong sense of belonging to my class group. | | | | | |
| I regularly work with peers in my classes. | | | | | |
| I have regularly discussed course ideas with other students outside of class. | | | | | |
| Discussing the course material with other students outside of class has helped me gain a better understanding of the subject. | | | | | |
| I can see how the subjects fit together to make a coherent study programme for my specialisation. | | | | | |
| The study programme for my specialisation is well-integrated. | | | | | |

| Categories | Variables |
|---|---|
| Student capacity | |
| Intellectual capacities | |
| Teamwork | |
| Teaching–learning environment variables | |
| Teaching | |
| Teacher–student relationship | |
| Relationship between students | |

| Item | Mean | Std. Dev. | N |
|---|---|---|---|
| 1. In this subject I develop my capacity to judge alternative perspectives. | 3.62 | 0.930 | 561 |
| 2. I have become more willing to consider alternative perspectives | 3.71 | 0.871 | 561 |
| 3. I have been motivated to use my own initiative | 3.76 | 0.887 | 561 |
| 4. I have been challenged to come up with new ideas | 3.52 | 0.926 | 561 |
| 5. I feel I am able to take responsibility for my own learning | 3.98 | 0.837 | 561 |
| 6. I have greater confidence in my capacity to continue learning | 3.69 | 0.888 | 561 |
| 7. In this subject I have learned to be more adaptable | 3.54 | 0.853 | 561 |
| 8. I have become more willing to change my perspective and accept new ideas. | 3.62 | 0.898 | 561 |
| 9. I have improved my ability to use knowledge to solve problems in my field of study | 3.55 | 0.885 | 561 |
| 10. I am able to offer information and different ideas to solve problems | 3.81 | 0.842 | 561 |
| 11. I have developed my ability to communicate effectively with others | 3.65 | 0.927 | 561 |
| 12. In this subject I have improved my ability to transmit ideas | 3.33 | 0.933 | 561 |
| 13. I have learned to be an effective team member during group work | 3.88 | 0.818 | 561 |
| 14. I feel confident dealing with a wide range of people | 3.70 | 0.886 | 561 |
| 15. I feel confident using computer applications when necessary | 3.68 | 1.032 | 561 |
| 16. I have learned more about using computers to present information | 3.50 | 0.986 | 561 |
| 17. The teacher uses a variety of teaching methods | 3.75 | 0.860 | 561 |
| 18. In this subject, students are given the opportunity to participate in classes | 4.19 | 0.861 | 561 |
| 19. The teacher makes an effort to help understand the course material | 4.19 | 0.725 | 561 |
| 20. The design of this subject helps students understand its contents | 3.73 | 0.890 | 561 |
| 21. The explanations given by the teacher are useful when I have difficulties with the learning materials | 3.88 | 0.823 | 561 |
| 22. There is enough feedback on activities and tasks to ensure that we learn from the work we do | 3.78 | 0.844 | 561 |
| 23. The subject uses a variety of evaluation methods | 3.62 | 0.804 | 561 |
| 24. To do well when being evaluated in this subject you need to have good analytical skills | 3.65 | 0.834 | 561 |
| 25. The evaluation assesses our understanding of the key concepts in this subject | 3.63 | 0.813 | 561 |
| 26. There is good communication between the teacher of this subject and their students | 4.11 | 0.762 | 561 |
| 27. The teacher of this subject helps when asked | 4.31 | 0.692 | 561 |
| 28. I am able to complete the programme requirements without feeling excessively stressed | 3.36 | 0.952 | 561 |
| 29. The workload assigned in this subject is reasonable | 3.73 | 0.770 | 561 |
| 30. I have a strong sense of belonging to my class group | 3.31 | 1.013 | 561 |
| 31. I regularly work with peers in my classes | 3.84 | 0.877 | 561 |
| 32. I have regularly discussed course ideas with other students outside of class | 2.67 | 1.150 | 561 |
| 33. Discussing the course material with other students outside of class has helped me gain a better understanding of the subject | 3.12 | 1.008 | 561 |
| 34. I can see how the subjects fit together to make a coherent study programme for my specialisation | 3.36 | 0.888 | 561 |
| 35. The study programme for my specialisation is well-integrated | 3.42 | 0.949 | 561 |

| Cronbach’s Alpha | Number of Items |
|---|---|
| 0.941 | 35 |
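
Coefficient alpha, reported above as 0.941 over 35 items, compares the sum of the individual item variances with the variance of the total score. A minimal Python sketch of the standard formula follows; the function name and the toy responses are hypothetical, since the item-level data are not published with the article.

```python
from statistics import pvariance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Coefficient alpha for a respondents-by-items matrix of scores.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    k = len(scores[0])  # number of items
    if k < 2:
        raise ValueError("alpha requires at least two items")
    # Variance of each item across respondents
    item_vars = [pvariance([row[j] for row in scores]) for j in range(k)]
    # Variance of each respondent's summed score
    total_var = pvariance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


if __name__ == "__main__":
    # Hypothetical 5-point Likert responses: 4 respondents x 3 items
    toy = [[4, 5, 4], [3, 3, 4], [2, 2, 3], [5, 4, 5]]
    print(round(cronbach_alpha(toy), 3))
```

Population variances are used throughout; since the same divisor appears in every variance, the ratio (and therefore alpha) is the same as with sample variances.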

| Test | Statistic | Value |
|---|---|---|
| Kaiser–Meyer–Olkin measure of sampling adequacy | | 0.942 |
| Bartlett’s test of sphericity | Approx. chi-squared | 9088.286 |
| | df | 595 |
| | Sig. | 0.000 |
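
The KMO value (0.942) and Bartlett's test (χ² = 9088.286, df = 595) reported above can be reproduced in outline from a correlation matrix. The sketch below assumes the usual textbook formulas (Bartlett: χ² = −(n − 1 − (2p + 5)/6)·ln|R| with p(p − 1)/2 degrees of freedom; KMO: squared correlations weighed against squared partial correlations obtained from R⁻¹). The function names and the 3-item correlation matrix are hypothetical, and statistical packages may differ in minor details. For the 35 SEQ items, p(p − 1)/2 gives exactly the 595 degrees of freedom in the table.

```python
import math


def invert_with_det(m: list[list[float]]) -> tuple[list[list[float]], float]:
    """Gauss-Jordan elimination with partial pivoting; returns (inverse, determinant)."""
    n = len(m)
    # Augment the matrix with the identity: [M | I]
    a = [list(map(float, row)) + [1.0 if i == j else 0.0 for j in range(n)]
         for i, row in enumerate(m)]
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det  # a row swap flips the sign of the determinant
        p = a[col][col]
        det *= p
        a[col] = [v / p for v in a[col]]
        for r in range(n):
            if r != col:
                f = a[r][col]
                a[r] = [vr - f * vc for vr, vc in zip(a[r], a[col])]
    return [row[n:] for row in a], det


def bartlett_sphericity(r: list[list[float]], n_obs: int) -> tuple[float, int]:
    """Bartlett's test that the correlation matrix R is an identity matrix."""
    p = len(r)
    _, det = invert_with_det(r)
    chi2 = -(n_obs - 1 - (2 * p + 5) / 6) * math.log(det)
    return chi2, p * (p - 1) // 2


def kmo(r: list[list[float]]) -> float:
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    p = len(r)
    s, _ = invert_with_det(r)  # inverse of the correlation matrix
    r2 = a2 = 0.0
    for i in range(p):
        for j in range(p):
            if i != j:
                r2 += r[i][j] ** 2
                # Squared partial correlation of items i and j given all other items
                a2 += s[i][j] ** 2 / (s[i][i] * s[j][j])
    return r2 / (r2 + a2)


if __name__ == "__main__":
    # Hypothetical 3-item correlation matrix, n = 100 respondents
    toy = [[1.0, 0.5, 0.5], [0.5, 1.0, 0.5], [0.5, 0.5, 1.0]]
    print(round(kmo(toy), 3), bartlett_sphericity(toy, 100))
    # Degrees of freedom for the 35 SEQ items
    print(35 * 34 // 2)
```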

| Component | Initial Total | Initial % of Variance | Initial Cumulative % | Extraction Total | Extraction % of Variance | Extraction Cumulative % | Rotation Total | Rotation % of Variance | Rotation Cumulative % |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 12.144 | 34.698 | 34.698 | 12.144 | 34.698 | 34.698 | 3.458 | 9.879 | 9.879 |
| 2 | 2.221 | 6.345 | 41.043 | 2.221 | 6.345 | 41.043 | 3.240 | 9.257 | 19.135 |
| 3 | 1.941 | 4.687 | 45.730 | 1.941 | 4.687 | 45.730 | 1.970 | 5.628 | 24.764 |
| 4 | 1.893 | 4.266 | 49.997 | 1.893 | 4.266 | 49.997 | 1.725 | 4.929 | 29.693 |
| 5 | 1.841 | 3.545 | 53.542 | 1.841 | 3.545 | 53.542 | 1.683 | 4.809 | 34.503 |
| 6 | 1.760 | 3.315 | 56.857 | 1.760 | 3.315 | 56.857 | 1.679 | 4.796 | 39.299 |
| 7 | 1.662 | 2.750 | 59.607 | 1.662 | 2.750 | 59.607 | 1.535 | 4.385 | 43.684 |
| 8 | 1.629 | 2.655 | 62.262 | 1.629 | 2.655 | 62.262 | 1.521 | 4.347 | 48.030 |
| 9 | 1.555 | 2.442 | 64.705 | 1.555 | 2.442 | 64.705 | 1.521 | 4.345 | 52.375 |
| 10 | 1.502 | 2.292 | 66.997 | 1.502 | 2.292 | 66.997 | 1.504 | 4.296 | 56.672 |
| 11 | 1.466 | 2.189 | 69.186 | 1.466 | 2.189 | 69.186 | 1.476 | 4.216 | 60.888 |
| 12 | 1.435 | 2.099 | 71.285 | 1.435 | 2.099 | 71.285 | 1.467 | 4.191 | 65.079 |
| 13 | 1.317 | 2.050 | 73.335 | 1.317 | 2.050 | 73.335 | 1.438 | 4.109 | 69.188 |
| 14 | 1.250 | 1.858 | 75.192 | 1.250 | 1.858 | 75.192 | 1.246 | 3.561 | 72.749 |
| 15 | 1.126 | 1.788 | 76.980 | 1.126 | 1.788 | 76.980 | 1.228 | 3.508 | 76.257 |
| 16 | 1.007 | 1.679 | 78.659 | 1.007 | 1.679 | 78.659 | 0.841 | 2.402 | 78.659 |
| 17 | 0.970 | 1.628 | 80.287 | | | | | | |
| 18 | 0.828 | 1.509 | 81.797 | | | | | | |
| 19 | 0.709 | 1.453 | 83.249 | | | | | | |
| 20 | 0.693 | 1.409 | 84.658 | | | | | | |
| 21 | 0.687 | 1.392 | 86.051 | | | | | | |
| 22 | 0.556 | 1.302 | 87.352 | | | | | | |
| 23 | 0.553 | 1.293 | 88.645 | | | | | | |
| 24 | 0.426 | 1.218 | 89.863 | | | | | | |
| 25 | 0.402 | 1.148 | 91.011 | | | | | | |
| 26 | 0.384 | 1.097 | 92.107 | | | | | | |
| 27 | 0.374 | 1.068 | 93.176 | | | | | | |
| 28 | 0.354 | 1.011 | 94.186 | | | | | | |
| 29 | 0.349 | 0.999 | 95.185 | | | | | | |
| 30 | 0.313 | 0.896 | 96.080 | | | | | | |
| 31 | 0.309 | 0.882 | 96.963 | | | | | | |
| 32 | 0.292 | 0.834 | 97.797 | | | | | | |
| 33 | 0.283 | 0.809 | 98.606 | | | | | | |
| 34 | 0.248 | 0.709 | 99.315 | | | | | | |
| 35 | 0.240 | 0.685 | 100.000 | | | | | | |

Initial = initial eigenvalues; Extraction = sums of squared loadings from the extraction; Rotation = sums of squared loadings from the rotation.
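
Because principal components are extracted from 35 standardized items, the total variance is 35, and each component's percentage of variance is its eigenvalue divided by 35, times 100. The Python sketch below (hypothetical function names) reproduces the first two rows of the table above to within rounding. It also counts eigenvalues greater than 1: retaining 16 components, with the 16th eigenvalue at 1.007 and the 17th at 0.970, appears consistent with this Kaiser criterion, although the surviving text does not state which retention rule was used.

```python
def variance_table(eigenvalues: list[float], n_items: int) -> list[tuple[float, float, float]]:
    """(eigenvalue, % of variance, cumulative %) for each component.

    With a correlation matrix, the total variance equals the number of items.
    """
    rows: list[tuple[float, float, float]] = []
    cumulative = 0.0
    for ev in eigenvalues:
        pct = ev / n_items * 100
        cumulative += pct
        rows.append((ev, pct, cumulative))
    return rows


def kaiser_retained(eigenvalues: list[float]) -> int:
    """Number of components whose eigenvalue exceeds 1 (Kaiser criterion)."""
    return sum(1 for ev in eigenvalues if ev > 1.0)


if __name__ == "__main__":
    # First two initial eigenvalues from the table; 35 SEQ items
    for ev, pct, cum in variance_table([12.144, 2.221], 35):
        print(f"{ev:.3f}  {pct:.3f}%  {cum:.3f}%")
    # Eigenvalues of components 15-18 as listed in the table
    print(kaiser_retained([1.126, 1.007, 0.970, 0.828]))
```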

| Item | Initial | Extraction |
|---|---|---|
| 1 | 1.000 | 0.805 |
| 2 | 1.000 | 0.770 |
| 3 | 1.000 | 0.731 |
| 4 | 1.000 | 0.848 |
| 5 | 1.000 | 0.907 |
| 6 | 1.000 | 0.700 |
| 7 | 1.000 | 0.781 |
| 8 | 1.000 | 0.740 |
| 9 | 1.000 | 0.711 |
| 10 | 1.000 | 0.835 |
| 11 | 1.000 | 0.753 |
| 12 | 1.000 | 0.686 |
| 13 | 1.000 | 0.819 |
| 14 | 1.000 | 0.907 |
| 15 | 1.000 | 0.873 |
| 16 | 1.000 | 0.795 |
| 17 | 1.000 | 0.705 |
| 18 | 1.000 | 0.745 |
| 19 | 1.000 | 0.741 |
| 20 | 1.000 | 0.758 |
| 21 | 1.000 | 0.743 |
| 22 | 1.000 | 0.720 |
| 23 | 1.000 | 0.795 |
| 24 | 1.000 | 0.813 |
| 25 | 1.000 | 0.740 |
| 26 | 1.000 | 0.777 |
| 27 | 1.000 | 0.790 |
| 28 | 1.000 | 0.807 |
| 29 | 1.000 | 0.803 |
| 30 | 1.000 | 0.767 |
| 31 | 1.000 | 0.851 |
| 32 | 1.000 | 0.844 |
| 33 | 1.000 | 0.787 |
| 34 | 1.000 | 0.835 |
| 35 | 1.000 | 0.844 |
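
In the communalities table, the initial value is 1.000 for every item because principal component analysis starts from all of each standardized item's variance; the extraction value is the share reproduced by the 16 retained components, that is, the sum of the item's squared loadings on them. The Python sketch below uses hypothetical loadings and hypothetical function names to show that calculation and to show that an orthogonal rotation leaves it unchanged (the rotation method is not stated in the surviving text). It also checks that the 35 reported communalities sum to about 27.53, roughly 78.6% of the total variance of 35, which matches the cumulative 78.659% in the variance table up to rounding.

```python
# Extraction communalities for items 1-35, copied from the table above
REPORTED_H2 = [
    0.805, 0.770, 0.731, 0.848, 0.907, 0.700, 0.781, 0.740, 0.711, 0.835,
    0.753, 0.686, 0.819, 0.907, 0.873, 0.795, 0.705, 0.745, 0.741, 0.758,
    0.743, 0.720, 0.795, 0.813, 0.740, 0.777, 0.790, 0.807, 0.803, 0.767,
    0.851, 0.844, 0.787, 0.835, 0.844,
]


def communalities(loadings: list[list[float]]) -> list[float]:
    """Communality of each item: sum of its squared loadings
    (rows = items, columns = retained components)."""
    return [sum(x * x for x in row) for row in loadings]


def percent_explained(h2: list[float]) -> float:
    """Share of total variance (one unit per standardized item) reproduced, in %."""
    return sum(h2) / len(h2) * 100


if __name__ == "__main__":
    # Hypothetical loadings of two items on two retained components
    unrotated = [[0.8, 0.3], [0.6, 0.0]]
    # Orthogonal rotation by an angle with cos = 0.6 and sin = 0.8
    c, s = 0.6, 0.8
    rotated = [[a * c - b * s, a * s + b * c] for a, b in unrotated]
    print(communalities(unrotated))
    print(communalities(rotated))  # equal up to floating-point rounding
    print(round(percent_explained(REPORTED_H2), 2))
```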

| Component | Item | Factor Loading |
|---|---|---|
| Component 1 | 26 | 0.81 |
| | 27 | 0.84 |
| Component 2 | 1 | 0.73 |
| | 2 | 0.75 |
| Component 3 | 28 | 0.34 |
| | 29 | 0.42 |
| | 34 | 0.80 |
| | 35 | 0.82 |
| Component 4 | 32 | 0.90 |
| | 33 | 0.80 |
| Component 5 | 9 | 0.38 |
| | 10 | 0.80 |
| Component 6 | 3 | 0.56 |
| | 4 | 0.82 |
| Component 7 | 23 | 0.75 |
| | 24 | 0.80 |
| | 25 | 0.60 |
| Component 8 | 30 | 0.69 |
| | 31 | 0.84 |
| Component 9 | 13 | 0.76 |
| | 14 | 0.33 |
| Component 10 | 21 | 0.58 |
| | 22 | 0.38 |
| Component 11 | 11 | 0.43 |
| | 12 | 0.52 |
| Component 12 | 15 | 0.85 |
| | 16 | 0.63 |
| Component 13 | 7 | 0.51 |
| | 8 | 0.56 |
| Component 14 | 5 | 0.88 |
| | 6 | 0.37 |
| Component 15 | 19 | 0.60 |
| | 20 | 0.66 |
| Component 16 | 17 | 0.27 |
| | 18 | 0.54 |

| Model | NFI Delta1 | RFI rho1 | IFI Delta2 | TLI rho2 | CFI |
|---|---|---|---|---|---|
| Default model | 0.895 | 0.850 | 0.938 | 0.907 | 0.937 |
| Saturated model | 1.000 | | 1.000 | | 1.000 |
| Independence model | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |

| Model | RMSEA | LO 90 | HI 90 | PCLOSE |
|---|---|---|---|---|
| Default model | 0.044 | 0.041 | 0.048 | 0.031 |
| Independence model | 0.162 | 0.159 | 0.164 | 0.000 |

| Component | Factor |
|---|---|
| Component 1 | Communication between the student and the teacher. |
| Component 2 | Stimulation of critical thinking. |
| Component 3 | Workload and time management according to the study programme. |
| Component 4 | Cooperation between students. |
| Component 5 | Acquisition of tools to solve problems. |
| Component 6 | Strengthening student initiative. |
| Component 7 | Teacher evaluation methods and assessments. |
| Component 8 | Sense of belonging and teamwork. |
| Component 9 | Integration in teams and appreciation of diversity. |
| Component 10 | Teacher explanations and feedback on the activities. |
| Component 11 | Development of assertive communication and transmission of information. |
| Component 12 | Effective use of IT. |
| Component 13 | Training of ability to adapt. |
| Component 14 | Strengthening of self-confidence and accountability in learning. |
| Component 15 | Teacher support and subject design. |
| Component 16 | Use of teaching methods by the teacher and creation of participatory spaces. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cebrián Cifuentes, S.; Guerrero Valverde, E. Validation of the University Student Engagement Questionnaire in the Field of Education. Educ. Sci. 2024, 14, 1047. https://doi.org/10.3390/educsci14101047
