1. Introduction

Generative AI (GenAI) can be defined as a “technology that (i) leverages deep learning models to (ii) generate human-like content (e.g., images, words) in response to (iii) complex and varied prompts (e.g., languages, instructions, questions)” [1] (p. 2). As GenAI continues to evolve rapidly, it will drive innovation and improvements in higher education in the next few years, but it will also create a myriad of new challenges [2,3]. Specifically, ChatGPT (Chat Generative Pre-Trained Transformer), a chatbot driven by GenAI, has been attracting headlines and has become the center of ongoing debate regarding the potential negative effects that it can have on teaching and learning. ChatGPT describes itself as a large language model trained to “generate humanlike text based on a given prompt or context. It can be used for a variety of natural language processing tasks, such as text completion, conversation generation, and language translation” [4] (p. 4). Given its advanced generative skills, one of the major concerns in higher education is that it can be used to reply to exam questions, write assignments, and draft academic essays without being easily detected by current versions of anti-plagiarism software [5].

Responses from higher education institutions (HEIs) to this emerging threat to academic integrity have been varied and fragmented, ranging from those that have rushed to implement full bans on the use of ChatGPT [6,7] to others that have started to embrace it by publishing student guidance on how to engage with AI effectively and ethically [8]. Nevertheless, most of the information provided by HEIs to students so far has been unclear or lacking in detail regarding the specific circumstances in which the use of ChatGPT is allowed or considered acceptable [9,10]. What is evident, however, is that most HEIs are currently in the process of reviewing their policies around the use of ChatGPT and its implications for academic integrity.

Meanwhile, a growing body of literature has started to document the potential challenges and opportunities posed by ChatGPT. Among the key issues with the use of ChatGPT in education, accuracy, reliability, and plagiarism are regularly cited [1,3]. Issues related to accuracy and reliability include relying on biased data (i.e., the limited scope of data used to train ChatGPT), having limited up-to-date knowledge (i.e., training stopped in 2021), and generating incorrect/fake information (e.g., providing fictitious references) [11]. It is also argued that the risk of overreliance on ChatGPT could negatively impact students’ critical thinking and problem-solving skills [3]. Regarding plagiarism, evidence suggests that essays generated by ChatGPT can bypass conventional plagiarism detectors [11]. ChatGPT can also successfully pass graduate-level exams, which could potentially make some types of assessments obsolete [1].

ChatGPT can also be used to enhance education, provided that its limitations (as discussed in the previous paragraph) are recognized. For instance, ChatGPT can be used as a tool to generate answers to theory-based questions and generate initial ideas for essays [3,12], but students should be mindful of the need to examine the credibility of generated responses. Given its advanced conversational skills, ChatGPT can also provide formative feedback on essays and become a tutoring system by stimulating critical thinking and debates among students [13]. The language editing and translation skills of ChatGPT can also contribute towards increased equity in education by somewhat leveling the playing field for students from non-English speaking backgrounds [1]. ChatGPT can also be a valuable tool for educators as it can help in creating lesson plans for specific courses, developing customized resources and learning activities (i.e., personalized learning support), carrying out assessment and evaluation, and supporting the writing process of research [14]. ChatGPT might also be used to enrich a reflective teaching practice by testing existing assessment methods to validate their scope, design, and capabilities beyond the possible use of GenAI, challenging academics to develop AI-proof assessments as a result and contributing to the authentic assessment of students’ learning achievements [15].

Overall, early studies have started to shed some light on the potential challenges and opportunities of ChatGPT for higher education, but more in-depth discussions are needed. We argue that the current discourse is highly focused on studying ChatGPT as an object rather than a subject. Given the advanced generative capabilities of ChatGPT, we would like to contribute to the ongoing discussion by exploring what ChatGPT has to say about itself regarding the challenges and opportunities that it represents for higher education. By adopting this approach, we hope to contribute to a more balanced discussion that accommodates the AI perspective using a ‘thing ethnography’ methodology [16]. This approach considers things not as objects but as subjects that possess a non-human worldview or perspective that can point to novel insights in research [17].

This study will examine ChatGPT’s perspective on the opportunities and challenges that it represents for academic purposes, as well as recommendations to mitigate challenges and harness potential opportunities. In doing so, we will aim to answer the following research questions:

What are the potential challenges for higher education posed by ChatGPT, and how can these be mitigated?

What are the potential opportunities and barriers to the integration of ChatGPT in higher education?

The structure of this paper is as follows. After this introduction, Section 2 explores the ‘thing ethnography’ methodology as a novel approach to engaging with GenAI and explains the steps followed in this study to collect ChatGPT’s views. Section 3 presents the results, and Section 4 discusses them in the context of existing literature to validate the non-human perspective. Lastly, a conclusion will answer the research questions and outline future research directions.

2. Method: Thing Ethnography Applied to ChatGPT

Ethnography refers to a form of social research that emphasizes the importance of studying first-hand what people do and say in particular contexts [18]. It involves an in-depth understanding of the world based on social relations and everyday practices. Traditionally, the focus of ethnography has been on human perspectives via qualitative methods such as observation and interviews. However, it is argued that as humans, “we have complex and intertwined relationships with the objects around us. We shape objects; and objects shape and transform our practices and us in return” [19] (p. 235). Recognizing this continual interplay between humans and objects necessitates research methodologies that grant both parties an equal role.

Recently, there has been a growing interest in moving away from a human-centered ethnographic approach towards one that seeks nonhuman perspectives, in a context where human perspectives are felt to offer only a partial understanding of the interdependent relations between humans and nonhumans [20]. By studying things as incorporated into practices, we learn about both people and objects at the same time. This approach provides the opportunity to reflect on ourselves by reflecting on things [21]. Relatively consolidated methods for exploring the distinct viewpoints, development paths, and possible worldviews of nonhuman entities include ‘thing ethnography’ [22]. Thing ethnography is an approach that allows access to and interpretation of things’ perspectives, enabling the acquisition of novel insights into their socio-material networks. Thing ethnography emerges at the intersection of data that things give access to and the analysis and interpretation that human researchers contribute [23]. Giaccardi et al. [19] contend that adopting a thing perspective can offer distinct revelations regarding the interplay between objects and human practices, leading to novel approaches for collectively framing and resolving problems alongside these entities.

In this shift towards thing ethnography, artificial intelligence (AI) plays a significant role as its unique capacities provide unprecedented access to nonhuman perspectives of the world [20]. The unique perspectives of mundane things such as kettles, cups, and scooters can now be accessed via the use of software and sensors [21,23]. For instance, by attaching autographers to kettles, fridges, and cups, a study collected more than 3000 photographs that helped to uncover use patterns and home practices around these objects [21]. Another study looking into the design of thoughtful forms of smart mobility used cameras and sensors to collect data from scooters in Taipei, generating a better understanding of the socio-material networks among scooters and scooterists in Taiwan [23]. Moreover, the development of chatbots opens the possibility of interacting directly with AI systems and accessing their views via text exchange.

It has already been recognized that AI “has the potential to impact our lives and our world as no other technology has done before” [24], both positively and negatively. This recognition is raising many questions concerning its ethical, legal, and socio-economic effects, and has even prompted calls for a pause in the development of more advanced AI systems [25]. With the increasing intelligence of things, it becomes crucial to adopt suitable perspectives to access, observe, and understand the diverse social consequences and emerging possibilities that arise from this advancement [23]. So far, the relationship between humans and things in research has been unidirectional [19]. So, what happens if we change the focus to ‘things’, especially ‘things’ with human-like intelligence with the potential to become fully self-aware within the next few decades or even achieve Artificial General Intelligence (AGI) [26,27]? What happens if we try to understand the world from the perspective of a ‘thing’, such as AI, which is increasingly impacting many aspects of life? Applying thing ethnography to the study of AI systems can aid in exploring their social and cultural dimensions and their impact on society from their own perspective.

In this context, we argue that ChatGPT should not be studied merely as an “object” designed, trained, and used by people but as a “subject” that co-performs daily practices with users and has an impact on the socio-material networks in which it is embedded. In the case of thing ethnography applied to ChatGPT, the focus would be on understanding the user experience, the impact on communication dynamics, and the broader societal implications from ChatGPT’s perspective. A better understanding of this can help guide the development and deployment of chatbot technologies in a way that is more responsive to user needs, respects societal values, and promotes responsible AI practices. We believe that thing ethnography approaches are becoming crucially relevant as efforts to build successively more powerful AI systems that approach AGI (AI systems that are generally smarter than humans) continue [27]. As ‘things’ become increasingly intelligent, there is an unprecedented and pressing demand to enhance our ability to access and comprehend the perspective of these entities.

In this study, we apply thing ethnography to engage with ChatGPT. Whereas previous thing ethnography interventions have used different smart devices to access the perspective of things, our study engages with the ‘subject’ via a written conversation, given the unique text generation capacities of chatbots. To answer the research questions stated in the previous section, this study adopted a semi-structured interview. This type of interview facilitates the collection of rich data related to participants’ views [28], with the required degree of structure and flexibility [29]. An interview guide containing a list of themes and key questions was prepared before the interview. Nevertheless, the flexibility of semi-structured interviewing allowed the use of probes devised during the interview. Probing is useful to further explore responses that are deemed significant for the research topic [28]. In line with King and Horrocks’ [30] suggestion, probing was adopted to seek clarification for unclear words or phrases, completion for stories that the interviewer felt were unfinished, and elaboration to encourage the interviewee to ‘keep talking’.

The six-phase thematic analysis approach proposed by Braun and Clarke [31] formed the foundation for analyzing data from the semi-structured interview. This approach provided guidance for coding and categorizing the data, enabling a systematic, thorough, and cumulative analysis of the case study information. During the reporting phase, we aimed for dependability and transparency by providing verbatim quotes linked to specific codes being analyzed. These links are what connect raw data to “the data summary and interpretation generated by the researcher” [32] (p. 95). Additionally, we addressed transferability by comparing the emerging findings with the existing body of literature [33]. This was accomplished by examining similarities, contradictions, and their underlying reasons. This approach can lead to broader applicability and increased credibility of qualitative data.

3. Results: How Did ChatGPT Respond?

The unedited transcript of the interview with ChatGPT is shown below. This is followed by post-interview reflections and discussions on emerging themes.

Interviewer: What are the potential challenges for higher education posed by ChatGPT?

ChatGPT: ChatGPT, as an advanced language model, presents certain challenges for higher education. Here are a few potential challenges that institutions may face:

Academic Integrity: With the ability to generate human-like responses, ChatGPT raises concerns about academic integrity. Students could potentially misuse the model to generate plagiarized content or cheat on assignments and assessments. This poses a challenge for educators and institutions in ensuring fair evaluation and maintaining academic standards.

Quality Control: ChatGPT generates responses based on the data it has been trained on, which includes a vast amount of information available on the internet. While the model strives to provide accurate and helpful information, there is still a possibility of generating incorrect or misleading responses. Institutions need to carefully assess and validate the information provided by ChatGPT to ensure its accuracy and reliability.

Personalized Learning: While ChatGPT can provide personalized responses to individual queries, it might not fully capture the nuanced needs of each learner. Higher education institutions often focus on tailoring education to meet the specific requirements of students. Relying solely on ChatGPT for personalized learning experiences may overlook certain aspects of individual student needs, such as their learning style, preferences, and unique challenges.

Expertise and Authority: ChatGPT lacks the domain-specific expertise that specialized instructors and professors possess. Higher education institutions emphasize the role of subject matter experts in providing deep insights, critical analysis, and guidance in specific fields. Relying solely on ChatGPT might not fully meet the expectations and needs of students seeking expertise from highly qualified professionals.

Communication and Collaboration: Effective communication and collaboration are integral parts of the higher education experience. ChatGPT, being an AI language model, might not possess the same level of human interaction and interpersonal skills as an instructor or peers. This can limit its effectiveness in supporting group work, discussions, and other collaborative activities that are crucial for a well-rounded educational experience.

It is important to note that these challenges are not insurmountable, and institutions can develop strategies to address them effectively. With careful implementation and integration, ChatGPT can still offer significant benefits and opportunities for higher education.

Interviewer: What significant benefits or opportunities for higher education can ChatGPT offer?

ChatGPT: ChatGPT indeed presents several significant benefits and opportunities for higher education. Here are some of them:

24/7 Support and Accessibility: ChatGPT can provide round-the-clock support to students, offering assistance and answering queries at any time. This accessibility can be particularly valuable for distance learning programs, international students in different time zones, or individuals who prefer to learn at their own pace. ChatGPT can offer instant access to information and resources, enhancing the overall learning experience.

Personalized Learning and Tutoring: ChatGPT can provide personalized feedback, explanations, and recommendations based on individual student queries. By tailoring responses to specific needs, ChatGPT can assist students in understanding complex concepts, identifying knowledge gaps, and offering customized learning pathways. This personalized approach can enhance student engagement and promote self-directed learning.

Supplemental Learning Resource: ChatGPT can serve as a valuable supplementary resource alongside traditional course materials. Students can seek additional explanations, examples, or alternative perspectives from ChatGPT, enabling them to explore topics in greater depth. This can enhance comprehension, reinforce learning, and encourage independent research.

Language Learning and Communication Skills: Language learners can benefit from interacting with ChatGPT to practice and improve their language proficiency. ChatGPT can simulate conversational exchanges, provide language correction, and offer vocabulary and grammar assistance. It can also foster communication skills by engaging students in dialogue and encouraging them to express their thoughts and ideas effectively.

Support for Instructors and Teaching Assistants: ChatGPT can assist instructors and teaching assistants by handling routine queries, providing quick references, or offering guidance on commonly asked questions. This frees up their time to focus on higher-level tasks, such as facilitating discussions, leading critical analyses, and providing personalized feedback to students.

Innovative Learning Experiences: Incorporating ChatGPT into the curriculum can introduce innovative and interactive learning experiences. For instance, instructors can create simulations or virtual characters powered by ChatGPT to engage students in role-playing scenarios, decision-making exercises, or historical reenactments. Such immersive experiences can enhance student motivation, critical thinking, and creativity.

Research and Data Analysis: ChatGPT can support research efforts by assisting with literature reviews, data analysis, and generating hypotheses. Researchers can leverage the model’s ability to sift through vast amounts of information and provide insights on complex topics. ChatGPT can aid in discovering relevant sources, summarizing findings, and even suggesting potential research directions.

By leveraging the strengths of ChatGPT while addressing its limitations, higher education institutions can harness the benefits and opportunities it offers to enhance the learning experience, promote student success, and foster innovative educational practices.

Interviewer: What factors can prevent universities from fully harnessing the benefits of ChatGPT?

ChatGPT: Several factors can prevent universities from fully harnessing the benefits of ChatGPT:

Lack of Awareness and Understanding: A lack of awareness and understanding about the capabilities and potential applications of ChatGPT can hinder its adoption. If faculty, administrators, and students are not familiar with the technology and its benefits, they may be reluctant to explore its potential.

Technological Barriers: Implementing ChatGPT or other AI tools may require technical infrastructure, such as high-performance computing resources and robust network connectivity. Universities that lack the necessary technological infrastructure may face challenges in fully utilizing the benefits of ChatGPT.

Resistance to Change: Resistance to change within the university community can impede the adoption of new technologies. Faculty members, administrators, or staff may be resistant to integrating ChatGPT into existing workflows or may prefer traditional teaching and learning methods. Overcoming resistance to change requires effective communication, training, and highlighting the value and potential of ChatGPT.

Ethical and Privacy Concerns: The responsible use of AI, including ChatGPT, raises ethical and privacy concerns. Universities need to address issues related to data privacy, algorithmic biases, and the responsible use of AI-generated content. Failure to address these concerns may result in hesitance or skepticism around implementing ChatGPT.

Academic Rigor and Quality: Some may argue that relying too heavily on AI tools like ChatGPT could compromise the academic rigor and quality of education. Concerns may arise regarding the authenticity of student work, the development of critical thinking skills, and the role of human interaction in the learning process. Universities need to carefully balance the integration of AI with maintaining high academic standards.

Resource Constraints: Adopting ChatGPT or any AI tool requires investment in resources, including faculty training, technical support, and ongoing maintenance. Universities with limited financial or personnel resources may struggle to fully harness the benefits of ChatGPT due to resource constraints.

Legal and Regulatory Considerations: The use of AI tools in education may be subject to legal and regulatory frameworks. Universities need to navigate intellectual property rights, copyright, privacy regulations, and other legal considerations when implementing ChatGPT, which can be a complex and time-consuming process.

Lack of Interdisciplinary Collaboration: Harnessing the full benefits of ChatGPT often requires interdisciplinary collaboration. Universities may face challenges in fostering collaboration between computer science, education, ethics, and other relevant disciplines. Bridging these disciplinary gaps is crucial to effectively leverage ChatGPT in an educational context.

To overcome these barriers, universities can focus on raising awareness, providing training and support, addressing ethical considerations, fostering collaboration across disciplines, and developing policies and guidelines that promote the responsible and effective use of ChatGPT. It is essential for universities to actively engage in discussions, seek stakeholder input, and create an environment that encourages innovation and experimentation while upholding academic rigor and ethics.

Interviewer: Many universities are struggling with addressing the emerging risks; what should be the priorities?

ChatGPT: When it comes to addressing the emerging risks associated with integrating AI models like ChatGPT, universities can prioritize the following key areas:

Policy Development: Developing clear policies and guidelines regarding the use of AI models in higher education is crucial. Institutions should establish policies that define acceptable and responsible use of AI, address issues of academic integrity, data privacy, algorithmic bias, and ethical considerations. These policies should be communicated effectively to all stakeholders, including students, faculty, and staff.

Education and Training: Providing comprehensive education and training to faculty, staff, and students is essential. Universities should offer workshops, seminars, or online courses to raise awareness about the benefits, limitations, and potential risks of AI models. This education should cover topics such as responsible AI use, data ethics, digital literacy, critical thinking, and academic integrity.

Collaboration and Interdisciplinary Efforts: Addressing emerging risks requires a multidisciplinary approach. Universities can encourage collaboration between faculties of computer science, ethics, education, and law, among others. By fostering interdisciplinary conversations and collaborations, institutions can effectively identify and address the challenges associated with AI integration in higher education.

Research and Development: Investing in research and development efforts focused on AI in higher education is vital. This includes studying the impact of AI models on student learning outcomes, developing algorithms for bias detection and mitigation, exploring ways to combine AI models with human expertise, and investigating ethical frameworks for AI use in educational settings. Research can provide evidence-based insights to guide policy decisions and best practices.

Ethical Review Processes: Universities can establish ethical review processes to evaluate the integration of AI models like ChatGPT. These processes should assess potential risks and benefits, address data privacy concerns, evaluate algorithmic biases, and ensure compliance with relevant ethical standards. Ethical review boards or committees can help institutions make informed decisions regarding the implementation and use of AI models.

Continuous Monitoring and Evaluation: It is important for universities to continuously monitor and evaluate the impact of AI integration in higher education. This involves gathering feedback from students, faculty, and staff, assessing the effectiveness of risk mitigation strategies, identifying areas for improvement, and making necessary adjustments based on the evolving landscape of AI technologies.

By prioritizing these areas, universities can proactively address the emerging risks associated with AI integration, promote the responsible use of AI models, and create an environment that maximizes the benefits while minimizing potential harm.

Interviewer: Can you tell me more about what can be considered acceptable and responsible use of AI in this context?

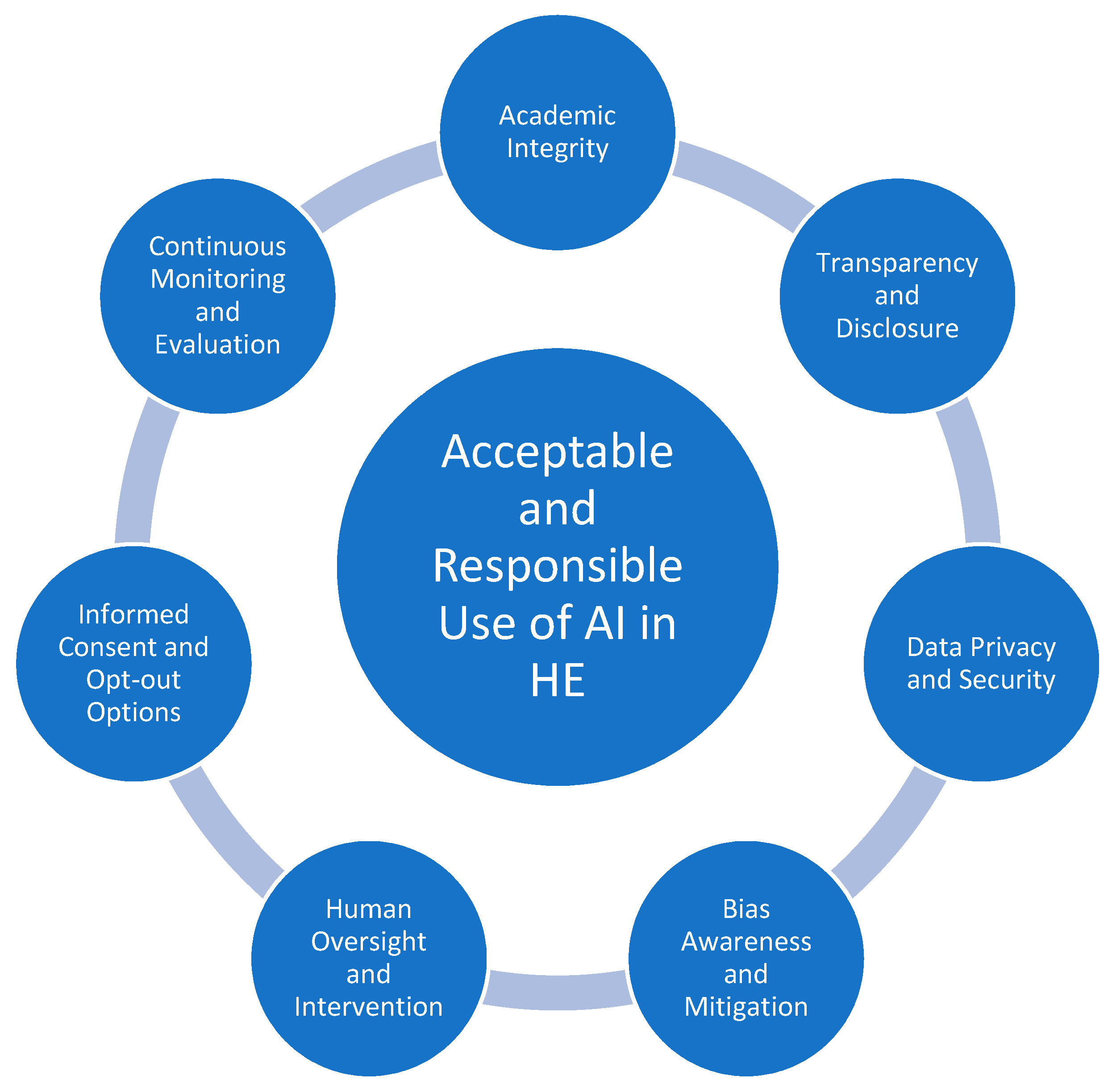

ChatGPT: Certainly! Acceptable and responsible use of AI in the context of higher education involves adhering to ethical principles and best practices. Here are some key considerations:

Academic Integrity: Institutions should emphasize the importance of academic integrity and discourage the use of AI models like ChatGPT for generating plagiarized content or cheating on assignments and assessments. Students should be educated about proper citation practices, originality of work, and the ethical use of AI tools.

Transparency and Disclosure: Institutions should promote transparency in the use of AI models. When students interact with AI systems like ChatGPT, they should be made aware that they are communicating with an AI and not a human. It is important to disclose the limitations of AI models, such as their potential for generating incorrect or biased information.

Data Privacy and Security: Universities should prioritize the privacy and security of student data when using AI models. It is crucial to comply with relevant data protection regulations and establish robust security measures to safeguard sensitive information. Institutions should clearly communicate their data handling practices and obtain necessary consents from students.

Bias Awareness and Mitigation: AI models can inadvertently perpetuate biases present in the training data. Institutions should be proactive in identifying and addressing biases in AI-generated responses. Regular monitoring, bias detection tools, and ongoing training of AI models can help mitigate bias and ensure fair and inclusive outcomes.

Human Oversight and Intervention: While AI models can provide valuable support, human oversight and intervention are essential. Institutions should emphasize the role of instructors and teaching staff in guiding students, verifying the accuracy of AI-generated information, and providing additional context when needed. Human intervention can help address limitations, verify facts, and ensure the quality of education.

Informed Consent and Opt-out Options: Students should be given the choice to engage with AI models or opt-out if they have concerns or preferences for alternative modes of learning. Institutions should clearly communicate the purposes and implications of using AI tools, allowing students to make informed decisions about their participation.

Continuous Monitoring and Evaluation: Institutions should continuously monitor and evaluate the use of AI models to identify potential ethical or educational issues. This includes collecting feedback from students and instructors, assessing the impact on learning outcomes, and making necessary adjustments to ensure responsible use and effectiveness.

By adopting these principles, universities can promote an environment of responsible AI use in higher education, ensuring that AI models like ChatGPT are employed in a manner that upholds ethical standards, protects student rights, and enhances the overall learning experience.

Interviewer: Finally, what do you think about universities banning the use of ChatGPT?

ChatGPT: The decision to ban the use of ChatGPT or any AI tool in universities is a complex one that requires careful consideration of various factors. While I, as an AI language model, do not possess personal opinions, I can provide some insights on the matter.

Universities may choose to ban the use of ChatGPT for several reasons:

Plagiarism Concerns: ChatGPT, as a powerful language model, can generate text that might be used for plagiarism if not properly attributed or verified. Universities may be concerned about maintaining academic integrity and ensuring that students produce their own original work.

Lack of Human Interaction: AI tools like ChatGPT lack human qualities such as empathy, contextual understanding, and common sense reasoning. The absence of human interaction in certain educational scenarios may be seen as a limitation that detracts from the quality of education and the development of critical thinking and communication skills.

Educational Value: Some argue that relying solely on AI tools for learning may limit the depth of understanding and exploration. Universities may believe that the interaction with instructors, peers, and authentic learning experiences is essential for a comprehensive and meaningful education.

However, it’s important to note that banning ChatGPT entirely may overlook its potential benefits. Instead of a blanket ban, universities could consider alternative approaches:

Guidelines and Responsible Use: Develop clear guidelines and policies that outline the responsible and ethical use of AI tools, including ChatGPT. These guidelines can address issues such as plagiarism, disclosure, limitations, and the role of human oversight.

Integration with Human Instruction: Encourage the use of ChatGPT as a supplementary tool to support teaching and learning rather than a replacement for human instruction. Instructors can leverage ChatGPT to augment their lessons, provide additional resources, or spark discussions while still maintaining their role as facilitators and subject matter experts.

Education and Training: Offer education and training programs for students and faculty on the responsible and effective use of AI tools. This can include workshops, seminars, or online modules that address the potential benefits, limitations, and ethical considerations associated with AI.

Continuous Monitoring and Evaluation: Continuously monitor the use of AI tools like ChatGPT and evaluate their impact on learning outcomes, student engagement, and overall educational experience. This ongoing assessment can help inform policies and practices to ensure the responsible and beneficial integration of AI in higher education.

Ultimately, the decision to ban or allow the use of ChatGPT in universities depends on the specific context, institutional goals, and values. It is important for universities to carefully consider the potential benefits, risks, and alternatives before making a final decision.

4. Discussion

This study conducted a semi-structured interview with ChatGPT to document its views on the challenges and benefits of integrating AI in higher education. In line with previous studies, the results revealed that ChatGPT has the potential to revolutionize education in different ways [3, 12, 14, 34]. However, substantial challenges need to be addressed first. ChatGPT’s views are generally in line with existing literature, although it provided additional insights that have not been extensively documented.

Figure 1 summarizes the key themes emerging from the interview, including opportunities, challenges, barriers, and mitigation strategies mentioned by ChatGPT. These are discussed in more detail in this section.

4.1. Opportunities

The integration of ChatGPT in higher education translates into a set of potential opportunities or benefits for key stakeholders, including students, teaching staff, and researchers. Of the seven opportunities mentioned by ChatGPT, five have a strong emphasis on students, whilst the other two focus on teaching staff and researchers, respectively. Firstly, ChatGPT sees itself as a tool capable of providing “personalized feedback, explanations, and recommendations based on individual student queries”, thus promoting self-directed learning. This is echoed in the literature as findings suggest that ChatGPT can provide personalized feedback on essays and become a tutoring system by stimulating critical thinking and debates among students [13]. Similarly, ChatGPT suggests that it “can serve as a valuable supplementary resource alongside traditional course materials” to reinforce learning and encourage independent research. In line with this, the literature argues that ChatGPT can be used as a supplementary tool to generate answers to theory-based questions and generate initial ideas for essays [3, 12].

Another opportunity includes the role that ChatGPT can play in enhancing language and communication skills, as it can “simulate conversational exchanges, provide language correction, and offer vocabulary and grammar assistance”. This feature of ChatGPT can arguably have a positive effect on widening participation in higher education by removing barriers that some students face. For instance, Lim et al. [1] suggest that the language editing and translation skills of ChatGPT can contribute towards increased equity in education by somewhat leveling the playing field for students from non-English speaking backgrounds. It could also remove some barriers for students with disabilities such as dyslexia, as it can help them produce paraphrased text that is free of grammar or spelling errors, given the correct prompts and input. Another opportunity mentioned by ChatGPT has to do with improving accessibility by providing “round-the-clock support to students”. This capability could be particularly valuable for distance learning students or international students in different time zones. This potential benefit of ChatGPT seems to be less studied and documented in the existing literature. The last benefit for students relates to the idea that “Incorporating ChatGPT into the curriculum can introduce innovative and interactive learning experiences”. Although the literature recognizes the potential of ChatGPT and similar large language models for improving digital ecosystems for education [3, 35], this has not been extensively documented.

ChatGPT mentioned two opportunities that focus on teaching and research staff. Firstly, it suggested that it “can assist instructors and teaching assistants by handling routine queries, providing quick references, or offering guidance on commonly asked questions”. Interestingly, ChatGPT goes on to say that “this frees up their time to focus on higher-level tasks”, which seems to indicate that ChatGPT does not see itself as a tool that can contribute to such higher-level tasks. In this regard, the literature suggests that ChatGPT can also be a valuable tool for educators as it can help in creating lesson plans for specific courses, developing customized resources and learning activities (i.e., personalized learning support), and generating course objectives, learning outcomes, and assessment criteria [14, 36, 37]. However, given the novelty of ChatGPT, these benefits remain mostly theoretical or speculative at this point; empirical work is needed to demonstrate how they can be fully achieved and to test the effectiveness of this approach.

Lastly, ChatGPT suggested that it “can support research efforts by assisting with literature reviews, data analysis, and generating hypotheses”. The literature supports this view by acknowledging that ChatGPT can support the writing process of research [14], improve collaboration, facilitate coordinated efforts, and be conducive to higher-quality research outcomes [38]. However, a key concern remains regarding the loss of human potential and autonomy in the research process [39]. Some publishers have started to provide general advice regarding the role that ChatGPT can play in the writing process, but there is a call for more specific guidelines regarding how to acknowledge the use of AI in research and writing for journals [38]. Van Dis et al. [39] suggest that whilst the focus should be on embracing opportunities of AI, we also need to manage the risks to the essential aspects of scientific work: curiosity, imagination, and discovery. The authors call for measures to ensure that humans’ creativity, originality, education, training, and interactions remain essential for conducting research. In line with this, Kooli [38] argues that ChatGPT and other chatbots should not replace the essential role of the human researcher. Instead, they should adopt a “research assistant” role that requires constant and close supervision. Overall, the current debate around potential applications remains hypothetical as there is still a lack of a regulatory framework to advance the use of ChatGPT in research activities.

4.2. Challenges

ChatGPT’s views on the challenges that it poses for higher education clearly revolve around several of the shortcomings of GenAI systems, including the lack of accuracy and reliability of the information it generates. But other issues relate to the actual misuse by students. Challenges associated with the shortcomings of ChatGPT are summarized into four categories: Quality Control, Expertise and Authority, Personalized Learning, and Communication and Collaboration. Regarding Quality Control, ChatGPT suggests that whilst it “strives to provide accurate and helpful information, there is still a possibility of generating incorrect or misleading response”. Similar issues are echoed in the literature, with concerns about how the inherent biases of ChatGPT and other GenAI systems could seriously impact the accuracy and reliability of information generated by these systems [11, 40]. ChatGPT further suggests that regular monitoring, bias detection tools, and ongoing training of AI models can help mitigate biases and ensure fair and inclusive outcomes. In terms of Expertise and Authority, ChatGPT explains that it “lacks the domain-specific expertise that specialized instructors and professors possess”. Therefore, relying solely on ChatGPT for higher education may not meet students’ needs regarding deep insights, critical analysis, and guidance in specific fields. This challenge links to the previous one (i.e., Quality Control), and in this regard, ChatGPT adds that “Institutions should emphasize the role of instructors and teaching staff in guiding students, verifying the accuracy of AI-generated information, and providing additional context when needed”.

Two challenges that have not been extensively documented in existing literature include Personalized Learning and Communication and Collaboration. Whilst the idea of using ChatGPT to develop personalized learning and tutoring systems has been discussed [3, 13, 40], the downsides have not been fully considered. In this regard, ChatGPT warns that it may not be able to fully capture the distinctive needs of each learner, adding that “Relying solely on ChatGPT for personalized learning experiences may overlook certain aspects of individual student needs, such as their learning style, preferences, and unique challenges”. Once again, the central role of teaching staff in overseeing the use and application of ChatGPT in higher education is evidenced here. The other challenge concerns Communication and Collaboration as integral parts of higher education. ChatGPT suggests that being an AI language model, it “might not possess the same level of human interaction and interpersonal skills as an instructor or peers”, which can limit its contribution to certain elements of the educational experience, such as facilitating group work and other collaborative activities. The challenges discussed here and in the previous paragraph suggest that we are still a long way (if we ever get there) from a world where GenAI systems fully replace teaching staff. ChatGPT’s views seem to suggest that it can serve as a tool but cannot fully substitute the human element. Hence, those wanting to incorporate it into education need to provide teachers with the skills and training to effectively utilize the technology in order to minimize the challenges discussed here.

A final challenge mentioned by ChatGPT has to do with its misuse, especially when it can negatively affect academic integrity. ChatGPT suggests that “Students could potentially misuse the model to generate plagiarized content or cheat on assignments and assessments”. This is arguably one of the most cited concerns in existing academic literature, prompting an ongoing debate on whether the technology should be completely banned from HEIs [11, 40, 41]. Plagiarism and academic misconduct are not new threats to higher education and have been extensively studied [42]. However, the novelty of ChatGPT has amplified the threat for two main reasons: the lack of clear academic policies and the low probability of detection. A recent study found that academic policies are largely unclear regarding the use of ChatGPT and similar GenAI tools. Out of 142 HEIs surveyed in May 2023, only one explicitly prohibited the use of AI [43]. This is an important finding as there is evidence to suggest that academic dishonesty is inversely related to understanding and acceptance of academic integrity policies [44, 45]. Therefore, it is imperative that HEIs prioritize the clarification of institutional expectations regarding the use of ChatGPT in learning and assessment. Updating academic integrity policies is crucial to address the threats posed by GenAI tools. Updates should provide clear instructions on AI tool usage and penalties for policy violations and be accessible to students [43]. The absence of specific guidelines for AI tools creates ambiguity, indicating a hesitancy among HEIs to take a clear stance, possibly due to limited experience. This poses challenges in formulating comprehensive policies that address evolving issues posed by emerging AI technologies. The other key issue has to do with the low probability of detection perceived by students wanting to use ChatGPT for cheating. There is evidence suggesting that the likelihood of students engaging in cheating increases when they believe that the chances of getting caught are minimal [45]. Thus, the lack of readily available AI plagiarism checkers to detect ChatGPT-generated content can increase the threat to academic integrity. So, the challenge for HEIs is how to create an environment where academic dishonesty enabled by GenAI is socially unacceptable. This must involve ensuring that institutional expectations are clearly communicated and understood and that students perceive the probability of plagiarism detection as high.

4.3. Barriers

Having reflected on the potential benefits for higher education, ChatGPT provided some interesting insights regarding the barriers that HEIs may face in adopting GenAI technology; these included Lack of Awareness and Understanding, Technological Barriers, Resistance to Change, Ethical and Privacy Concerns, Academic Rigor and Quality, Resource Constraints, Legal and Regulatory Considerations, and Lack of Interdisciplinary Collaboration. Some of these barriers have already been documented in existing literature; however, the majority remain unexplored and open avenues for future research.

One obvious barrier is a Lack of Awareness and Understanding. In this regard, ChatGPT suggests that “if faculty, administrators, and students are not familiar with the technology and its benefits, they may be reluctant to explore its potential”. This issue is further compounded by the current lack of clear academic policies. For instance, this may hinder the progress toward the adoption of ChatGPT for teaching and learning purposes. Currently, academic staff wanting to develop innovative interventions using ChatGPT do not have a regulatory framework to guide them. This can have a negative effect on the empirical testing of the potential benefits discussed in this paper. A recent study on students’ acceptance and use of ChatGPT found that students are comfortable adopting GenAI technology and that their frequency of use contributes to the development of habitual behavior [46]. In this context, habitual behavior or habit refers to the level of a regular and consistent pattern of use of ChatGPT as part of an academic routine. Therefore, HEIs have the unique opportunity to shape the principles of responsible use of emerging AI technology and guide students in the formation of habitual behavior that is in line with such principles.

Figure 2 summarizes the principles that ChatGPT suggests considering to “promote an environment of responsible AI use in higher education, ensuring that AI models like ChatGPT are employed in a manner that upholds ethical standards, protects student rights, and enhances the overall learning experience”. All of these principles deserve further consideration and open avenues for future research direction.

Other barriers include Ethical and Privacy Concerns and Academic Rigor and Quality. Some aspects of these have already been discussed in this paper as part of the challenges of integrating ChatGPT in higher education. These barriers relate to a wide range of issues, including data privacy, algorithmic biases, and authenticity of student work. As suggested by Dignum [24], “As the capabilities for autonomous decision-making grow, perhaps the most important issue to consider is the need to rethink responsibility. Being fundamentally tools, AI systems are fully under the control and responsibility of their owners or users” (p. 2011). Thus, there is a pressing need for more research into the responsible use of AI in the higher education context. This will hopefully translate into academic policies that contain the principles for responsible use of AI, as well as training and dissemination activities to increase awareness among key users (i.e., academic staff and students).

Economic barriers include a lack of technology and resource constraints. ChatGPT suggests that “Universities with limited financial or personnel resources may struggle to fully harness the benefits of ChatGPT due to resource constraints”. Equally, the adoption of GenAI technology may require sophisticated technical infrastructure such as high-performance computing resources and robust network connectivity. Therefore, “Universities that lack the necessary technological infrastructure may face challenges in fully utilizing the benefits of ChatGPT”. For those HEIs that manage to overcome the exclusionary economic barriers, resistance to change may become another hurdle. According to ChatGPT, “Faculty members, administrators, or staff may be resistant to integrating ChatGPT into existing workflows or may prefer traditional teaching and learning methods”. In this regard, emerging literature points to the need to train academic staff on how to make the best of ChatGPT during teaching preparation and course assessment [11]. In addition, as we continue (or start) to embrace the use of ChatGPT in teaching and learning, it is critical to disseminate empirical findings on the use of ChatGPT that can inform the teaching practices of the wider academic community.

4.4. Mitigation Strategies

ChatGPT provided some priorities for mitigating the challenges discussed here; these include Policy Development, Education and Training, Collaboration and Interdisciplinary Efforts, Research and Development, Ethical Review Processes, and Continuous Monitoring and Evaluation. The urgency of developing or updating academic policies to address the use of ChatGPT has already been raised in this study as well as in existing literature [11, 43]. ChatGPT’s views suggest that changes to policies need to “address issues of academic integrity, data privacy, algorithmic bias, and ethical consideration”. Given the novelty of the technology as well as the robustness of HEIs’ governance systems, it is likely that HEIs will have clearer positions on the use of GenAI tools later this year [41], and this will hopefully translate into updated academic policies. Subsequently, it will become crucial to focus resources on Education and Training. ChatGPT suggests that “Universities should offer workshops, seminars, or online courses to raise awareness about the benefits, limitations, and potential risks of AI models”. This is in line with existing literature suggesting academic dishonesty is a multifaceted behavior influenced by various factors, and simply developing an academic policy is insufficient in addressing the problem [44]. The dissemination of such policies is just as crucial; this could include adopting practices such as having students sign honor pledges, providing reminders about the gravity and repercussions of academic dishonesty, and including statements about academic misconduct in course syllabi. Furthermore, as GenAI technologies continue to evolve rapidly, it is imperative to regularly update academic integrity policies with clear guidelines on their appropriate and inappropriate applications [43].

Investing in Research and Development is another important mitigation strategy, according to ChatGPT: “This includes studying the impact of AI models on student learning outcomes, developing algorithms for bias detection and mitigation, exploring ways to combine AI models with human expertise, and investigating ethical frameworks for AI use in educational settings”. These efforts can provide evidence-based insights to guide policy decisions and best practices for the integration of GenAI in higher education. Another important issue is the passive role that HEIs are currently playing in GenAI research. Most cutting-edge GenAI technologies are exclusive products created by a handful of prominent technology corporations that possess the necessary resources for AI advancement. This raises ethical issues and goes against the move toward transparency and open science [39]. Dignum [24] agrees that AI algorithm development has so far focused on enhancing performance, resulting in opaque systems that are difficult to interpret. Thus, there is a call for non-commercial organizations, including HEIs, to make significant investments in open-source AI technology to create more transparent and democratically controlled technologies [39].

An important mitigation strategy that was not mentioned by ChatGPT but figures prominently in existing literature relates to the need for a reevaluation of the traditional modes of assessment [43]. To overcome some of the challenges and barriers that GenAI poses for students’ learning and their authentic assessment, this technology might be used in combination with active learning pedagogies, such as experiential learning [47], challenge-based learning [48], or problem-based learning [49]. In this sense, GenAI might be used as leverage to constructively enrich teaching and learning-by-doing activities beyond a passive approach in which students sit, listen, and do assignments. In such settings, GenAI cannot supply specific answers to the practical problem-solving demanded by a situated real-world or contrived learning experience, but it can support students in addressing study situations. Taking this view offers a promising use of GenAI for teaching and learning purposes.

5. Conclusions

The findings of this study highlighted the transformative potential of ChatGPT in education, consistent with previous studies, while also revealing additional insights. It also highlighted significant challenges that must be addressed. The paper presents key themes identified during the interview, including opportunities, challenges, barriers, and mitigation strategies. Given the novelty of ChatGPT, existing literature about its use within higher education is limited and largely hypothetical or speculative. Our hope is that the findings presented here can serve as a research agenda for researchers and the wider academic community to identify research priorities, gaps in knowledge, and emerging trends. A key takeaway point is the urgent need for empirical research that delves into best practices and strategies for maximizing the benefits of GenAI, as well as user experiences, to understand students’ and academics’ perceptions, concerns, and interactions with ChatGPT. Another priority is the development of policies, guidelines, and frameworks for the responsible integration of ChatGPT in higher education. Based on the conversation with ChatGPT, this paper also presents some principles for the acceptable and responsible use of AI in higher education. These principles can be useful for Higher Education Institutions working towards internal AI policies. However, empirical studies are needed to explore the transferability of findings presented here to varied contexts with specific features, for instance, distance or online learning.

This study employed a thing ethnography approach to explore ChatGPT’s perspective on the opportunities and challenges it presents for higher education. This is an innovative approach that has been implemented in design research but could become a powerful tool for researchers from other disciplines wanting to engage with “intelligent things”. In this study, thing ethnography provided a methodological framework to collect the viewpoints of ChatGPT. This helped us to test its potential biases and accuracy, as well as uncover insights that have not been extensively documented in the existing literature. As an interviewee, ChatGPT provided very relevant and interesting insights, showing some level of critique towards itself when questioned about potential risks and challenges, which indicates some level of transparency regarding its own shortcomings. A benefit of this approach is that ChatGPT enables interviewers to request further information and clarify responses, which can enhance data collection. Additionally, the chatbot displays a very good level of recollection during a conversation. Its memory seems to be limited; however, this did not represent an issue for the length of our interview. Despite the absence of non-verbal cues and expressions, the answers obtained seemed balanced and clearly conveyed meaning. Therefore, it is argued that interviews with ChatGPT have the potential to collect relevant data.

Limitations inherent to ethnographic research [50] are also applicable to thing ethnography and, therefore, to this study. The first one relates to the limited scope and selection bias; ethnographic research usually focuses on specific individuals, groups, or communities. In this study, there is a strong focus on ChatGPT as a form of GenAI. However, other GenAI chatbots, such as Bard or Bing Chat, have recently emerged and become accessible to the public. Thus, the findings presented here may not be representative of the views of all existing GenAI chatbots. Future interactions could aim to compare the views from different GenAI chatbots to find similarities and discrepancies. This could provide us with a more holistic understanding of GenAI chatbots’ perspectives in the context of higher education. Another limitation could be the replicability of results; even though the interview can be easily replicated, it is still not clear that ChatGPT will provide the same answers. As consecutive and improved versions of ChatGPT are released, there will be a need to replicate interventions such as the one proposed here to test for stability in the findings. Another limitation relates to the potential researcher bias during the analysis of data. The audit trail was particularly important in increasing the dependability and transparency of thematic analysis [32]. A significant aspect of documenting this process is providing verbatim quotes linked to specific codes. These links are what connect participants’ words (i.e., raw data) to “the data summary and interpretation generated by the researcher” [32] (p. 95). This approach can increase the capacity of external reviewers to judge the research findings fairly. Furthermore, transferability was addressed by comparing emerging results with existing literature [33]. Lastly, ethnographic studies tend to be highly immersive and lengthy, requiring extended periods in the field to gain an in-depth understanding of the research subject. However, thing ethnography does not necessarily require a longitudinal research design, and cross-sectional research designs, where data is collected at one point in time, have been documented [21, 23]. In this context, we see the intervention reported here as the first step in a broader research study. The next steps include further conversations with ChatGPT, and other chatbots, to test the replicability of findings presented here and the exploration of more topics not covered in the initial interview.

We believe the use of thing ethnography to engage with ChatGPT is very relevant and would encourage other researchers to involve GenAI entities in the process of collectively framing and resolving current challenges. Our argument is that ChatGPT should be approached not merely as an object controlled by humans but as a subject actively engaging in daily practices alongside users, influencing communication dynamics, and impacting socio-material networks. We believe that thing ethnography approaches are increasingly significant, particularly as endeavors to create more advanced AI systems closer to artificial general intelligence (AGI) persist. As “things” grow more intelligent, there arises an urgent demand to improve our ability to access and comprehend the perspectives (and biases) of these entities for research purposes.