Effect of Model-Based Problem Solving on Error Patterns of At-Risk Students in Solving Additive Word Problems

Abstract

1. Introduction

1.1. Theoretical Framework in Mathematical Word Problem Solving

1.2. Difficulties Experienced by Students with LDM in Solving Word Problems

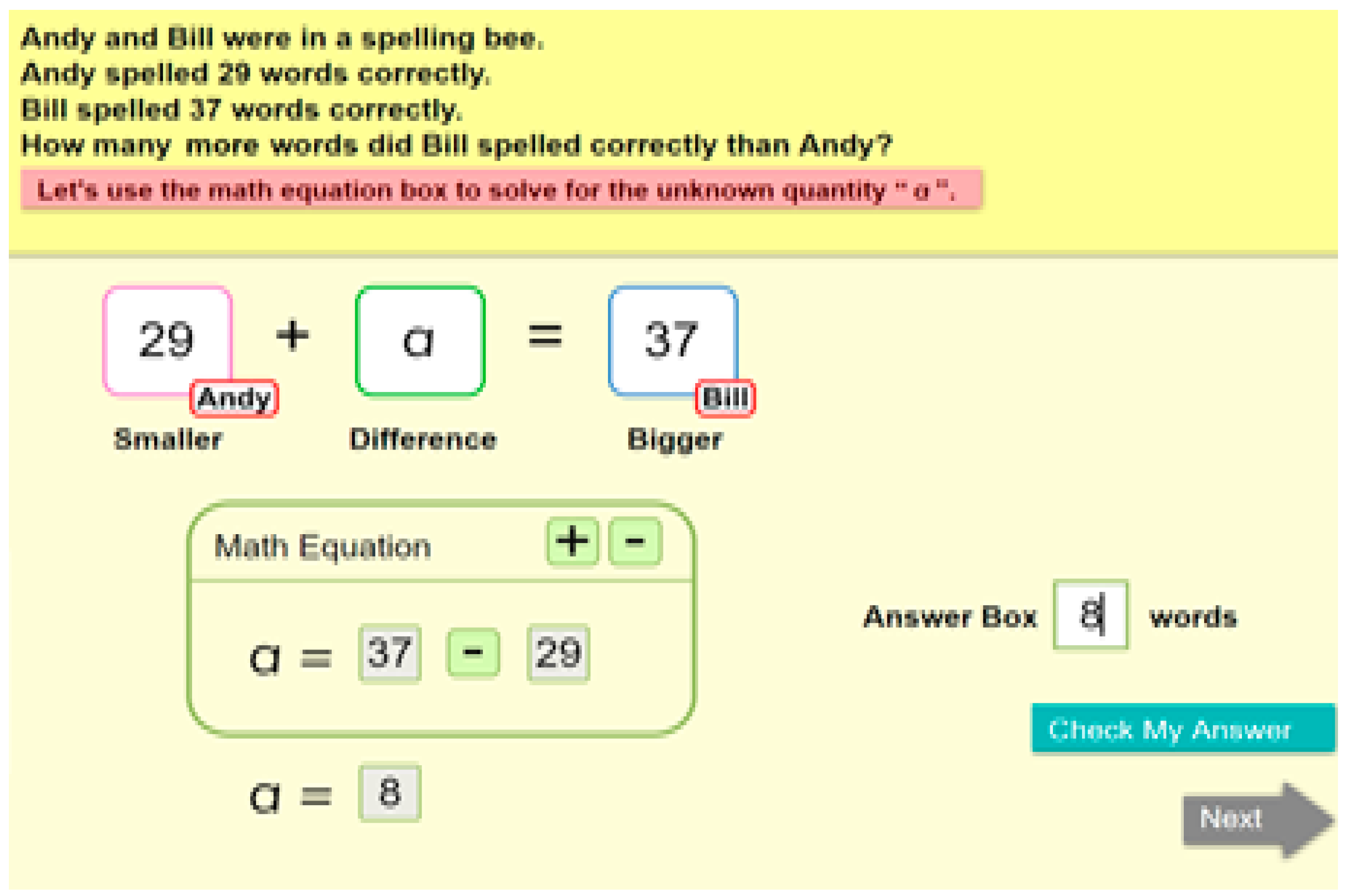

1.3. Model-Based Problem Solving That Emphasizes Mathematical Relations

- Are there any changes in the success rate and error patterns of third graders with LDM when solving a range of addition and subtraction word problems before and after the MBPS intervention program?

- Is there a differential pattern in students’ ability to solve consistent and inconsistent language problems before and after the MBPS intervention program?

2. Method

2.1. Participants and Setting

2.2. Measures

2.3. MBPS Intervention

3. Results

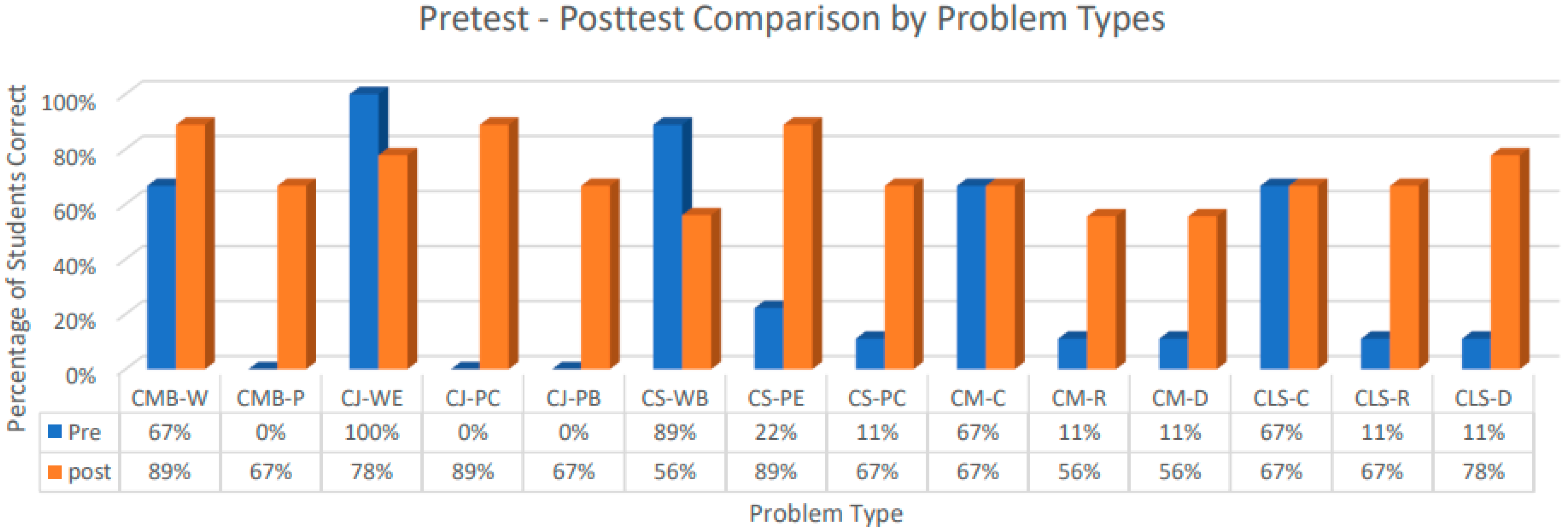

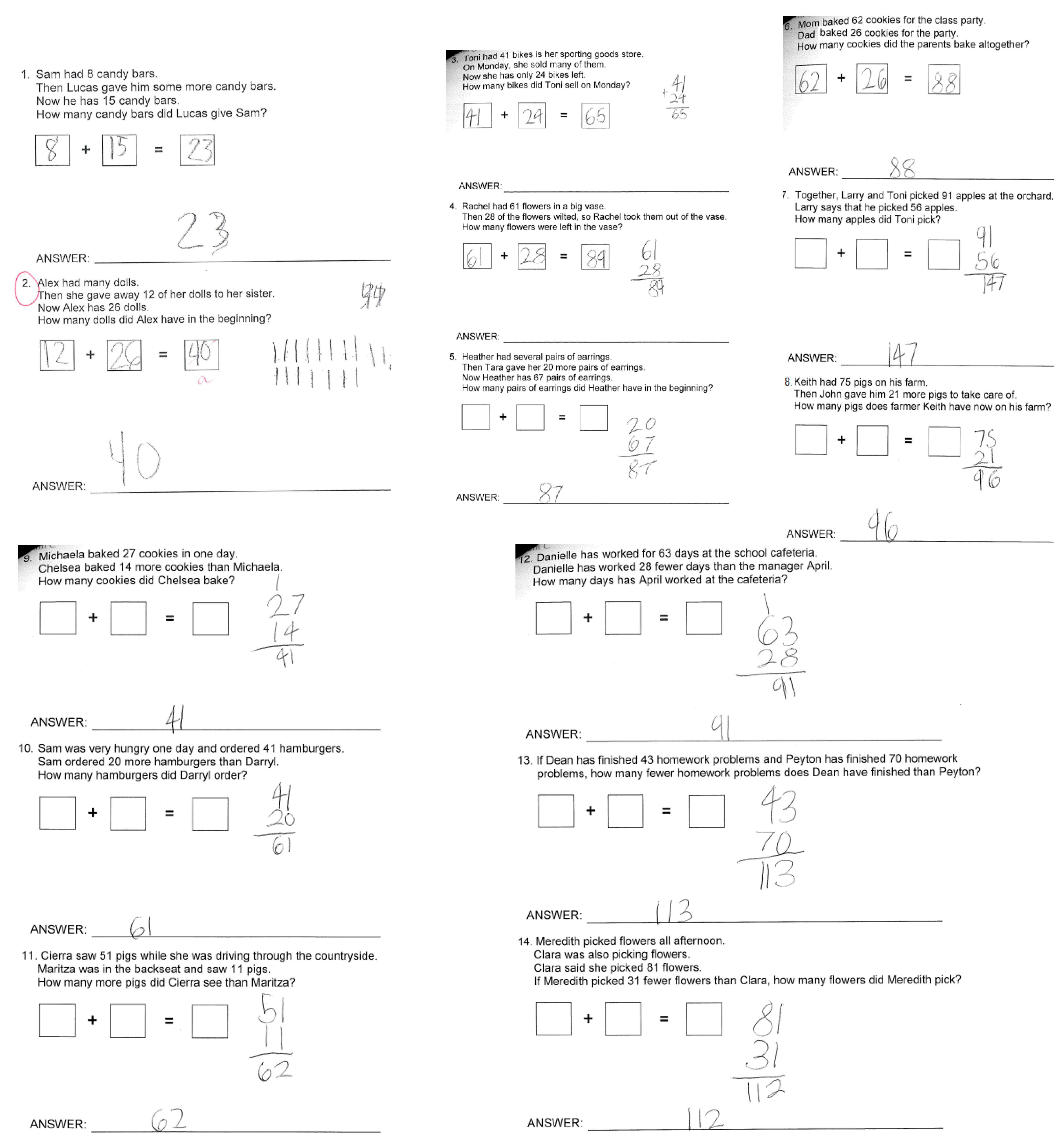

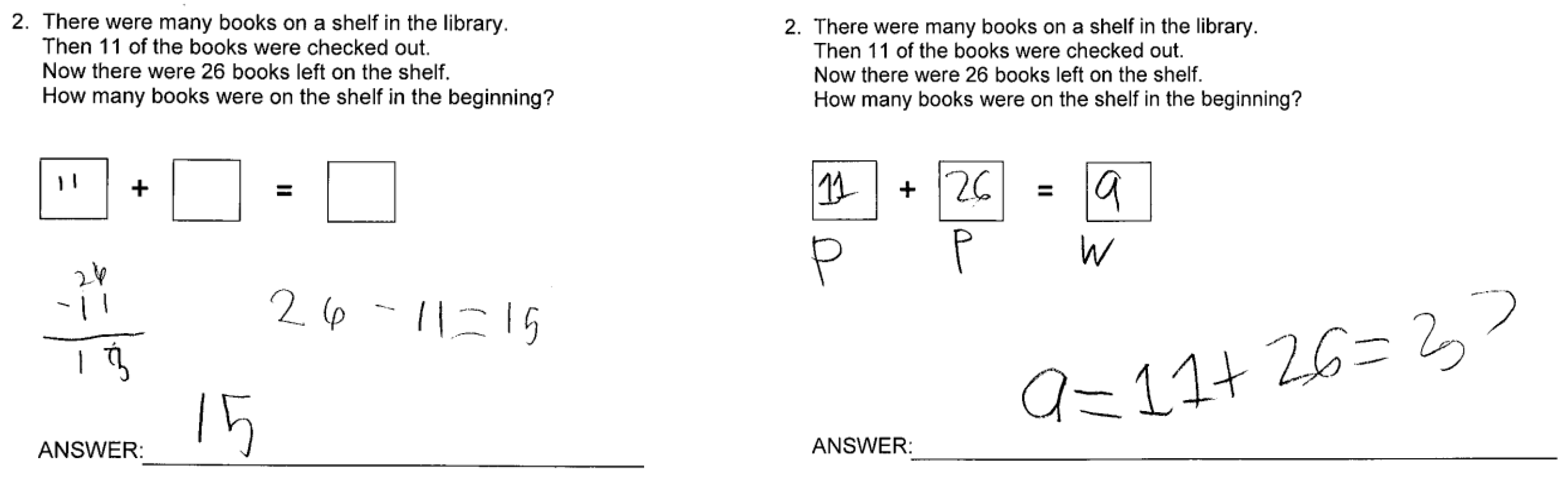

3.1. Success Rate and Error Pattern on Solving a Range of Additive Word Problems

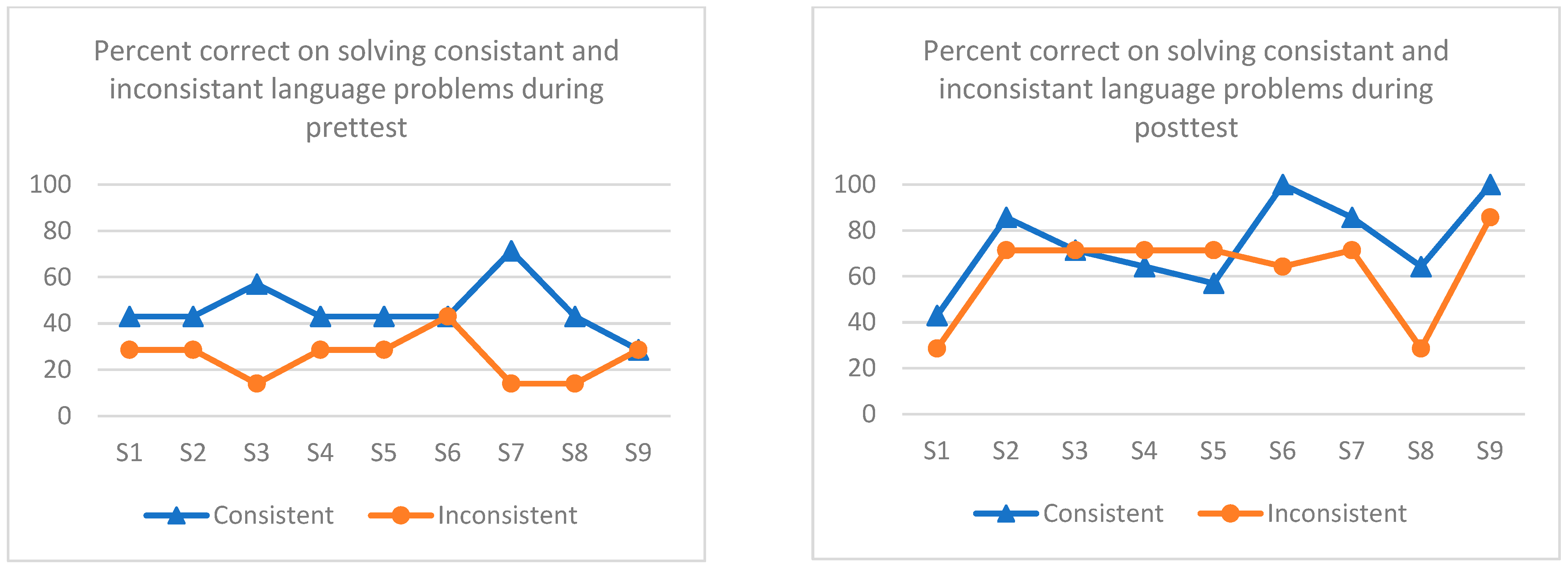

3.2. Performance in Solving Consistent and Inconsistent Language Problems

3.2.1. MB Design Study Data Analysis

3.2.2. Group Design Study Data Analysis

4. Discussion

4.1. Impact of MBPS on Students’ Success in Solving a Range of Additive Word Problems

4.2. Impact of MBPS on Students’ Ability to Solve Consistent/Inconsistent Language Problems

4.3. Limitations and Future Research

4.4. Implications for Practice

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

(a)

| Variable/Name | Gender | Ethnicity | Age (Year-Month) | Socioeconomic Status | Years in SPED | RtI Support | % in Gen Ed Class | Otis-Lennon (Full) | Verbal | Performance |
|---|---|---|---|---|---|---|---|---|---|---|
| Gaby | Female | Hispanic | 8-11 | Low | 0 | Tier 2 | 100% | 89 | 95 | 83 |
| Carmen | Male | Black | 8-7 | Low | 0 | Tier 2 | 100% | 72 | 78 | 68 |
| Sara | Female | Hispanic | 8-7 | Low | 5 (LI/OHI) | Tier 2 | 90% | 74 | 78 | 74 |
| Mary | Female | Multiracial | 9-0 | Low | 0 | Tier 2 | 100% | 80 | 80 | 82 |

(b)

| Variable/Name | Gender | Ethnicity | Age (Year-Month) | Socioeconomic Status | Years in SPED | RtI Support | % in Gen Ed Class | Otis-Lennon (Full) | Verbal | Performance |
|---|---|---|---|---|---|---|---|---|---|---|
| P1 | Female | Hispanic | 8-11 | Low | 0 | Tier 2 | 100% | No test | | |
| P2 | Female | Black | 9-5 | Low | 0 | Tier 2 | 100% | 76 | 74 | 79 |
| P3 | Male | Hispanic | 8-8 | Low | 1 (LD) | Tier 3 | >80% | 77 | 79 | 77 |
| P4 | Male | Hispanic | 8-8 | Low | 0 | Tier 2 | 100% | 61 | 73 | 50 |
| P5 | Male | White | 8-6 | Low | 3 (LD) | Tier 3 | >80% | 90 | 92 | 89 |
| P6 | Female | White | 8-3 | Low | 0 | Tier 2 | 100% | 79 | 81 | 79 |
| P7 | Male | White | 8-9 | Low | 0 | Tier 2 | 100% | 72 | 73 | 75 |
| P8 | Male | Black | 9-1 | Low | 3 (LD) | Tier 3 | >80% | 82 | 77 | 87 |
| P9 | Female | Black | 8-1 | Low | 0 | Tier 2 | 100% | No test | | |

| Combine | |

|---|---|

| “Whole” unknown CMB-W | Mr. Samir had 61 flashcards for his students. Mrs. Jones had 27 flashcards. How many flashcards do they have altogether? |

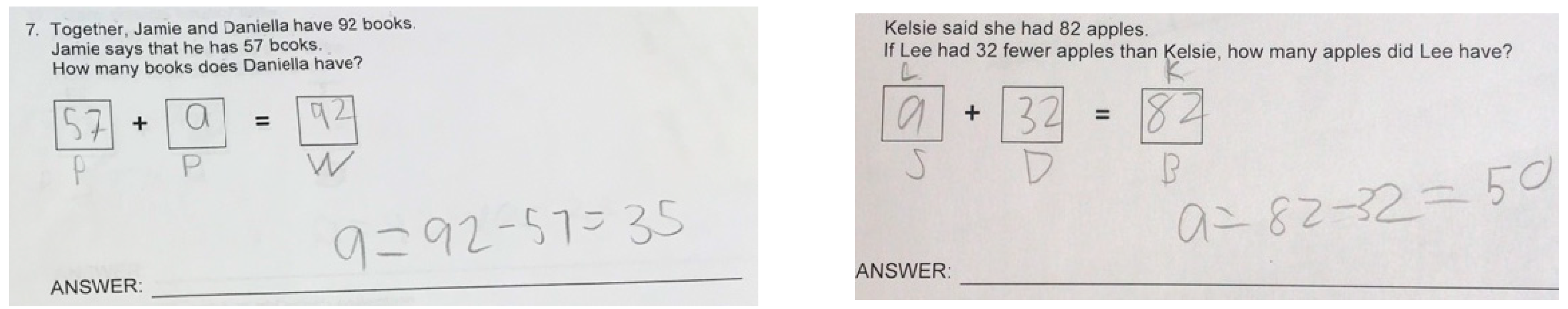

| “Part” unknown CMB-P (Combine2) | Together, Jamie and Daniella have 92 books. Jamie says that he has 57 books. How many books does Daniella have? |

| Change–join in | |

| “Whole” unknown ending amount CJ-WE | Leo has 76 math problems for homework. His Dad gives him 22 more problems to solve. How many math problems in total does Leo need to solve? |

| “Part” unknown change amount CJ-PC (Change3) | Sam had 8 candy bars. Then, Lucas gave him some more candy bars. Now he has 15 candy bars. How many candy bars did Lucas give Sam? |

| “Part” unknown beginning amount CJ-PB (Change5) | Selina had several comic books. Then, Andy gave her 40 more comic books. Now, Selina has 67 comic books. How many comic books did Selina have in the beginning? |

| Change–separate | |

| “Whole” unknown beginning amount CS-WB (Change6) | Alex had many dolls. Then, she gave away 12 of her dolls to her sister. Now Alex has 26 dolls. How many dolls did Alex have in the beginning? |

| “Part” unknown ending amount CS-PE | Davis had 62 toy army men. Then, one day he lost 29 of them. How many toy army men does Davis have now? |

| “Part” unknown change amount CS-PC | Ariel had 41 worms in a bucket for her fishing trip. She used many of them on the first day of her trip. The second day she had only 24 worms left. How many worms did Ariel use on the first day? |

| Compare-more | |

| Compared quantity unknown CM-C | Denzel has 28 toy cars. Gabrielle has 15 more toy cars than Denzel. How many toy cars does Gabrielle have? |

| Referent quantity unknown CM-R (Compare5) | Tiffany collects bouncy balls. As of today, she has 42 of them. Tiffany has 20 more balls than Elise. How many balls does Elise have? |

| Difference unknown CM-D (Compare1) | Logan has 52 rocks in his rock collection. Emanuel has 12 rocks in his collection. How many more rocks does Logan have than Emanuel? |

| Compare-less | |

| Referent quantity unknown CLS-R (Compare6) | Ellen ran 62 miles in one month. Ellen ran 29 fewer miles than her friend named Cooper. How many miles did Cooper run? |

| Compared quantity unknown CLS-C | Kelsie said she had 82 apples. If Lee had 32 fewer apples than Kelsie, how many apples did Lee have? |

| Difference unknown CLS-D | If Laura has 41 candy bars and another student named Paula has 70 candy bars, how many fewer candy bars does Laura have than Paula? |
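All fourteen problem types above share one additive structure. The following is a minimal sketch, assuming (for illustration only) that every type is encoded as part + part = whole. In this encoding the ending amount is the whole in change–join problems, the beginning amount is the whole in change–separate problems, and the larger quantity is the whole in compare problems, with the smaller quantity and the difference as the two parts. The names `PartWholeModel` and `EXAMPLES` are hypothetical. This is not the authors' MBPS program or its software; it only shows how one model equation covers every example item in the table.

```python
from dataclasses import dataclass
from typing import Dict, Optional


# Hypothetical illustration, not the authors' MBPS software: each additive
# problem type is encoded in one model equation, part1 + part2 = whole.
#   Combine:          part + part = whole
#   Change-join:      beginning + change = ending (ending is the whole)
#   Change-separate:  ending + change = beginning (beginning is the whole)
#   Compare:          smaller + difference = larger (larger is the whole)
@dataclass
class PartWholeModel:
    part1: Optional[int]
    part2: Optional[int]
    whole: Optional[int]

    def solve(self) -> int:
        """Return the one unknown (None) quantity in part1 + part2 = whole."""
        values = (self.part1, self.part2, self.whole)
        if sum(v is None for v in values) != 1:
            raise ValueError("exactly one quantity must be unknown")
        if self.whole is None:
            # Whole unknown: add the two parts.
            return self.part1 + self.part2
        # Part unknown: subtract the known part from the whole.
        known_part = self.part1 if self.part1 is not None else self.part2
        return self.whole - known_part


# Example items from the table as (part1, part2, whole); None marks the unknown.
EXAMPLES: Dict[str, PartWholeModel] = {
    "CMB-W": PartWholeModel(61, 27, None),    # Samir + Jones = total
    "CMB-P": PartWholeModel(57, None, 92),    # Jamie + Daniella = 92
    "CJ-WE": PartWholeModel(76, 22, None),    # beginning + change = ending
    "CJ-PC": PartWholeModel(8, None, 15),
    "CJ-PB": PartWholeModel(None, 40, 67),
    "CS-WB": PartWholeModel(26, 12, None),    # ending + change = beginning
    "CS-PE": PartWholeModel(None, 29, 62),
    "CS-PC": PartWholeModel(24, None, 41),
    "CM-C": PartWholeModel(28, 15, None),     # smaller + difference = larger
    "CM-R": PartWholeModel(None, 20, 42),
    "CM-D": PartWholeModel(12, None, 52),
    "CLS-R": PartWholeModel(62, 29, None),
    "CLS-C": PartWholeModel(None, 32, 82),
    "CLS-D": PartWholeModel(41, None, 70),
}

if __name__ == "__main__":
    for code, model in EXAMPLES.items():
        print(f"{code}: {model.solve()}")
```

Running the script prints one line per item, for example `CMB-W: 88` and `CM-R: 22`. The sketch also makes the consistent/inconsistent contrast concrete, assuming the usual definition that an item uses inconsistent language when its relational keyword suggests the opposite of the operation actually needed. In CM-R the word "more" appears, but the model calls for subtraction (42 - 20). In CLS-R the word "fewer" appears, but the model calls for addition (62 + 29).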

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xin, Y.P.; Kim, S.J.; Zhang, J.; Lei, Q.; Yılmaz Yenioğlu, B.; Yenioğlu, S.; Ma, X. Effect of Model-Based Problem Solving on Error Patterns of At-Risk Students in Solving Additive Word Problems. Educ. Sci. 2023, 13, 714. https://doi.org/10.3390/educsci13070714

Xin YP, Kim SJ, Zhang J, Lei Q, Yılmaz Yenioğlu B, Yenioğlu S, Ma X. Effect of Model-Based Problem Solving on Error Patterns of At-Risk Students in Solving Additive Word Problems. Education Sciences. 2023; 13(7):714. https://doi.org/10.3390/educsci13070714

Chicago/Turabian Style: Xin, Yan Ping, Soo Jung Kim, Jingyuan Zhang, Qingli Lei, Büşra Yılmaz Yenioğlu, Samed Yenioğlu, and Xiaojun Ma. 2023. "Effect of Model-Based Problem Solving on Error Patterns of At-Risk Students in Solving Additive Word Problems" Education Sciences 13, no. 7: 714. https://doi.org/10.3390/educsci13070714

APA Style: Xin, Y. P., Kim, S. J., Zhang, J., Lei, Q., Yılmaz Yenioğlu, B., Yenioğlu, S., & Ma, X. (2023). Effect of Model-Based Problem Solving on Error Patterns of At-Risk Students in Solving Additive Word Problems. Education Sciences, 13(7), 714. https://doi.org/10.3390/educsci13070714