Personalized Learning in Virtual Learning Environments Using Students’ Behavior Analysis

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset

2.2. Methodology

- (1)

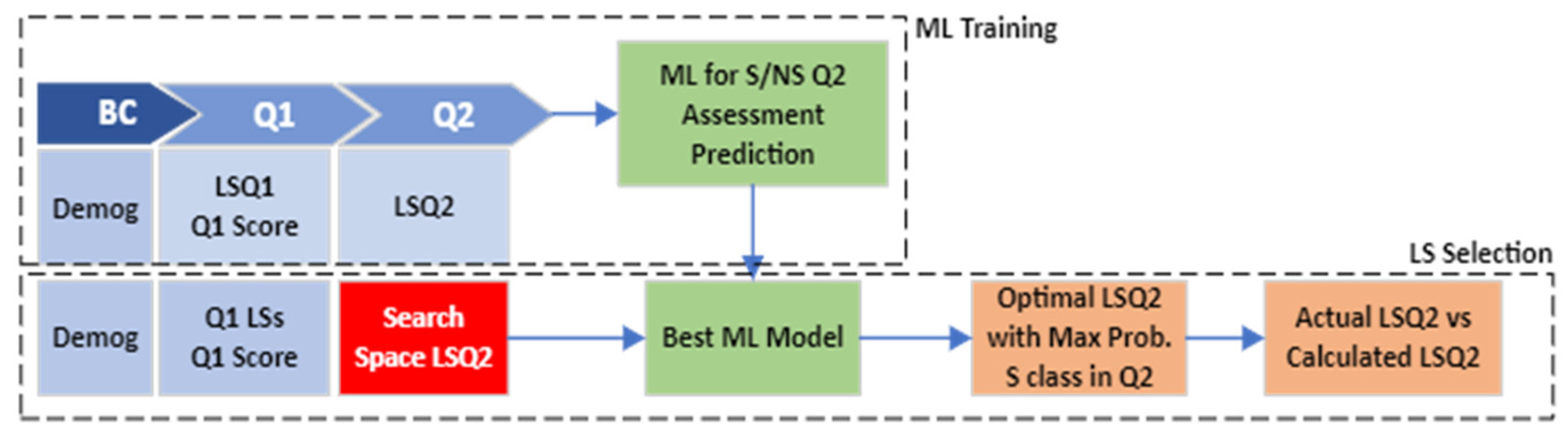

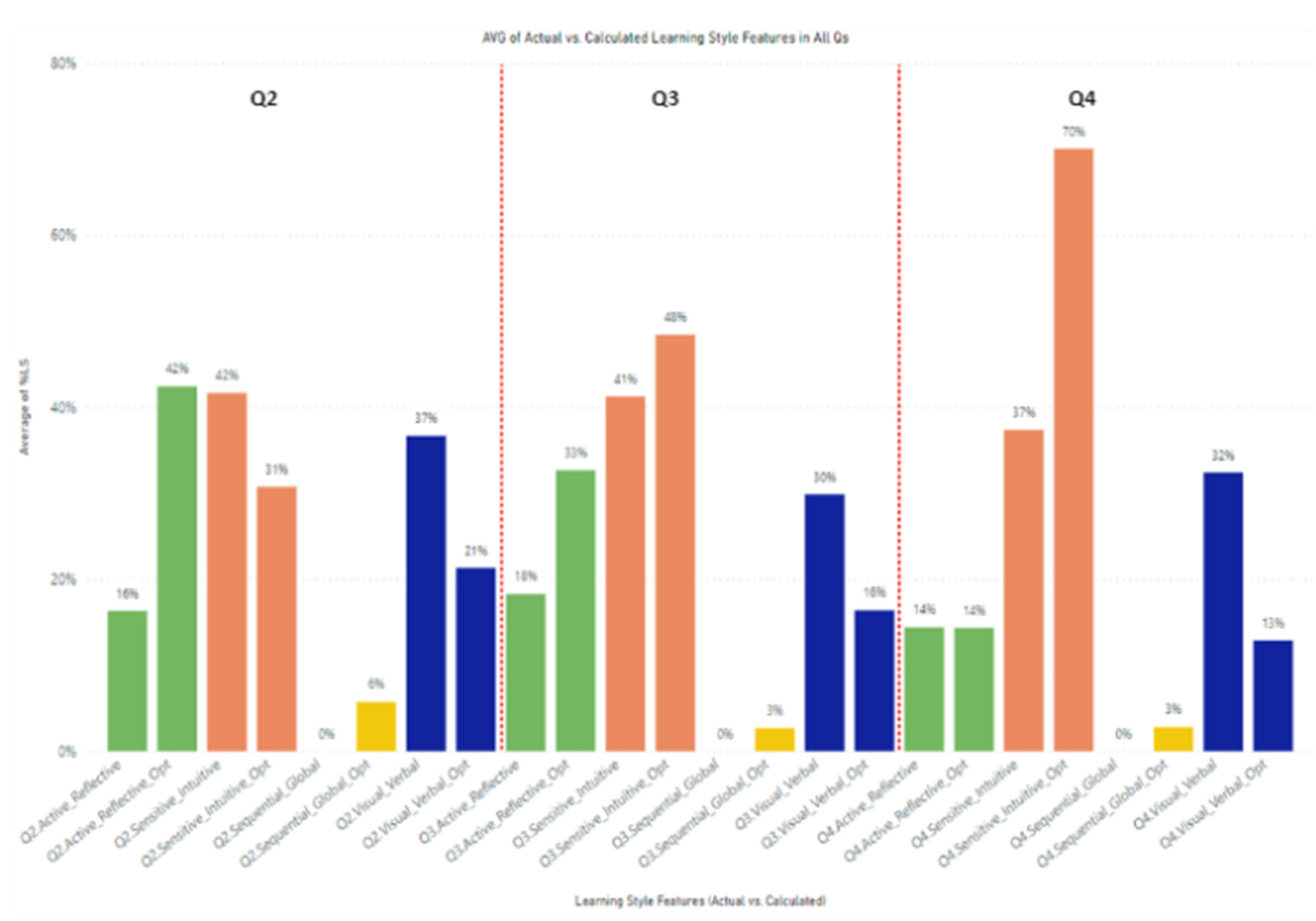

- State I—Beginning of Q2: Find LSs in Q2 that maximize the probability of satisfactory grades in Q2. Figure 3 explains the approach used in State I. It consists of two main processes including ML Training and LS Selection.

- (2)

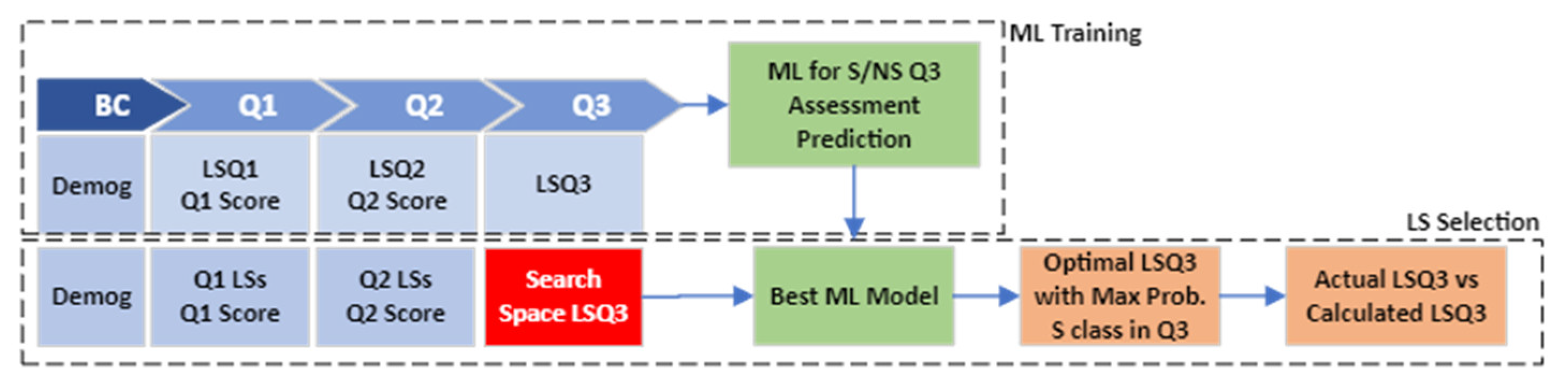

- State II—Beginning of Q3: Find LSs in Q3 to maximize the probability of satisfactory grades in Q3. The modeling process in state II is shown in Figure 4. It also consists of the same steps in state I including ML Training and LS Selection.

- (3)

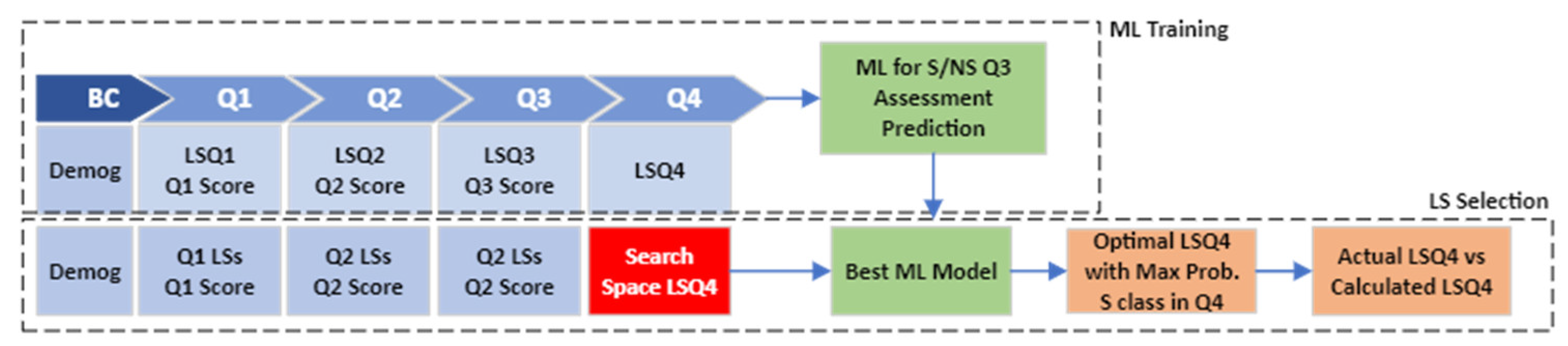

- State III—Beginning of Q4: Find LSs in Q4 to maximize the probability of satisfactory grades in Q4. Figure 5 depicts the modeling process in state III, which includes ML Training and LS Selection steps.
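
The LS Selection step named in the three states can be pictured as a search over candidate learning-style settings for the upcoming quarter. The sketch below is a minimal illustration under stated assumptions, not the authors' implementation: `toy_pass_probability` is a hypothetical stand-in for the trained model with made-up weights, the LS features are assumed to be binary, and exhaustive enumeration is an assumed search strategy.

```python
import math
from itertools import product

# LS feature suffixes, spelled as in the feature table of this paper.
LS_DIMENSIONS = ["Visual_Verbal", "Active_Reflective",
                 "Sesitive_Intuitive", "Sequential_Global"]


def toy_pass_probability(features):
    """Hypothetical stand-in for a trained classifier's predicted probability.

    The weights are made up for illustration; in practice this would be the
    probability output of the model produced by the ML Training step.
    """
    weights = {
        "Q1.Assess_score": 0.03,
        "Q2.Visual_Verbal": 0.8,
        "Q2.Active_Reflective": -0.4,
        "Q2.Sesitive_Intuitive": 0.5,
        "Q2.Sequential_Global": 0.1,
    }
    z = -2.0 + sum(weights.get(name, 0.0) * value
                   for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))


def select_learning_styles(known_features, quarter, predict_proba, levels=(0, 1)):
    """Try every LS combination for `quarter` and keep the most promising one."""
    names = [f"{quarter}.{dim}" for dim in LS_DIMENSIONS]
    best_ls, best_p = None, -1.0
    for values in product(levels, repeat=len(names)):
        candidate = dict(zip(names, values))
        # Combine what is already known about the student with the candidate LSs.
        p = predict_proba({**known_features, **candidate})
        if p > best_p:
            best_ls, best_p = candidate, p
    return best_ls, best_p


# State I example: Q1 information is known, Q2 LSs are the decision variables.
student = {"Q1.Assess_score": 60}
best_ls, best_p = select_learning_styles(student, "Q2", toy_pass_probability)
print(best_ls, round(best_p, 3))
```

With four binary dimensions there are only 16 candidates per student, so enumeration is cheap; a finer-grained LS encoding would need a different search.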

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Module Presentation | Domain | Length (days) | Students after Preprocessing (n) | Assessments (n) |
|---|---|---|---|---|
| AAA-2013J | Social Sciences | 268 | 319 | 6 |
| AAA-2014J | Social Sciences | 269 | 292 | 6 |
| BBB-2013B | Social Sciences | 240 | 1154 | 12 |
| BBB-2013J | Social Sciences | 268 | 1499 | 12 |
| BBB-2014B | Social Sciences | 234 | 1052 | 12 |
| BBB-2014J | Social Sciences | 262 | 1490 | 6 |
| CCC-2014B | STEM | 241 | 960 | 10 |
| CCC-2014J | STEM | 269 | 1380 | 10 |
| DDD-2013B | STEM | 240 | 814 | 14 |
| DDD-2013J | STEM | 261 | 1179 | 7 |
| DDD-2014B | STEM | 241 | 692 | 7 |
| DDD-2014J | STEM | 262 | 1120 | 7 |
| EEE-2013J | STEM | 268 | 751 | 5 |
| EEE-2014B | STEM | 241 | 476 | 5 |
| EEE-2014J | STEM | 269 | 830 | 5 |
| FFF-2013B | STEM | 240 | 1148 | 13 |
| FFF-2013J | STEM | 268 | 1538 | 13 |
| FFF-2014B | STEM | 241 | 970 | 13 |
| FFF-2014J | STEM | 269 | 1466 | 13 |
| GGG-2013J | Social Sciences | 261 | 787 | 10 |
| GGG-2014B | Social Sciences | 241 | 647 | 10 |
| GGG-2014J | Social Sciences | 269 | 567 | 10 |

| Dimension | FSLSM Classifications | VLE Activity Type |
|---|---|---|
| Processing | Active/Reflective | forumng, oucollaborate, ouwiki, glossary, htmlactivity |
| Perception | Sensitive/Intuitive | oucontent, questionnaire, quiz, externalquiz |
| Input | Visual/Verbal | dataplus, dualpane, folder, page, homepage, resource, url, ouelluminate, subpage |
| Understanding | Sequential/Global | repeatactivity, sharedsubpage |
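
The mapping in the table above lends itself to a simple lookup from VLE activity type to FSLSM dimension. The following is a minimal sketch of aggregating clicks per dimension; the activity names use the lowercase spellings of the OULAD `activity_type` column as best understood here (worth checking against the data), and the `(activity_type, clicks)` log format and the function name `clicks_per_dimension` are hypothetical.

```python
from collections import Counter

# FSLSM dimension -> VLE activity types, following the mapping table.
DIMENSION_ACTIVITIES = {
    "Processing": ["forumng", "oucollaborate", "ouwiki", "glossary", "htmlactivity"],
    "Perception": ["oucontent", "questionnaire", "quiz", "externalquiz"],
    "Input": ["dataplus", "dualpane", "folder", "page", "homepage",
              "resource", "url", "ouelluminate", "subpage"],
    "Understanding": ["repeatactivity", "sharedsubpage"],
}

# Inverted index: activity type -> dimension.
ACTIVITY_TO_DIMENSION = {
    activity: dimension
    for dimension, activities in DIMENSION_ACTIVITIES.items()
    for activity in activities
}


def clicks_per_dimension(click_log):
    """Sum clicks per FSLSM dimension; unmapped activity types are ignored."""
    totals = Counter()
    for activity_type, n_clicks in click_log:
        dimension = ACTIVITY_TO_DIMENSION.get(activity_type.lower())
        if dimension is not None:
            totals[dimension] += n_clicks
    return dict(totals)


# Hypothetical click log for one student in one quarter.
log = [("forumng", 12), ("quiz", 5), ("resource", 7), ("oucontent", 20), ("url", 3)]
totals = clicks_per_dimension(log)
print(totals)
```

How these per-dimension totals are turned into a learning-style label (for example, by thresholding) is not shown here and would follow the paper's methodology.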

| Quarter | Feature Category | Feature Name |
|---|---|---|
| Before Class (BC) | Demographics | gender, age_band, highest_education, disability, num_of_prev_attempts, studied_credits, date_registration |
| Q1 | Learning style | Q1.Visual_Verbal, Q1.Active_Reflective, Q1.Sesitive_Intuitive, Q1.Sequential_Global |
| Q1 | Assessment grades | Q1.Assess_score |
| Q2 | Learning style | Q2.Visual_Verbal, Q2.Active_Reflective, Q2.Sesitive_Intuitive, Q2.Sequential_Global |
| Q2 | Assessment grades | Q2.Assess_score |
| Q3 | Learning style | Q3.Visual_Verbal, Q3.Active_Reflective, Q3.Sesitive_Intuitive, Q3.Sequential_Global |
| Q3 | Assessment grades | Q3.Assess_score |
| Q4 | Learning style | Q4.Visual_Verbal, Q4.Active_Reflective, Q4.Sesitive_Intuitive, Q4.Sequential_Global |
| Q4 | Assessment grades | Q4.Assess_score |
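
One way to read the feature table alongside the three states is that, at the start of a quarter, everything from earlier quarters is known, the upcoming quarter's learning styles are what can still be chosen, and that quarter's assessment grade is the prediction target. The sketch below encodes that reading; the split into known, decision, and target columns is an assumption made for illustration, and `state_columns` is a hypothetical helper name.

```python
DEMOGRAPHICS = ["gender", "age_band", "highest_education", "disability",
                "num_of_prev_attempts", "studied_credits", "date_registration"]

# LS feature suffixes, spelled as in the feature table.
LS_DIMENSIONS = ["Visual_Verbal", "Active_Reflective",
                 "Sesitive_Intuitive", "Sequential_Global"]


def state_columns(target_quarter):
    """Return (known, decision, target) column names for a target quarter.

    target_quarter: 2, 3, or 4, matching States I, II, and III.
    """
    if target_quarter not in (2, 3, 4):
        raise ValueError("target_quarter must be 2, 3, or 4")
    known = list(DEMOGRAPHICS)
    # Everything observed in earlier quarters is treated as known.
    for q in range(1, target_quarter):
        known += [f"Q{q}.{dim}" for dim in LS_DIMENSIONS]
        known.append(f"Q{q}.Assess_score")
    # The upcoming quarter's learning styles are the decision variables.
    decision = [f"Q{target_quarter}.{dim}" for dim in LS_DIMENSIONS]
    target = f"Q{target_quarter}.Assess_score"
    return known, decision, target


known, decision, target = state_columns(2)  # State I
print(len(known), decision, target)
```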

| Model | Accuracy | AUC | Recall | Precision | F1 |
|---|---|---|---|---|---|
| Gradient Boosting Classifier | 0.7756 | 0.8439 | 0.8618 | 0.7908 | 0.8247 |
| Light Gradient Boosting Machine | 0.7744 | 0.8439 | 0.8576 | 0.7916 | 0.8232 |
| Random Forest Classifier | 0.7718 | 0.8346 | 0.8453 | 0.7952 | 0.8194 |
| Ada Boost Classifier | 0.7661 | 0.8334 | 0.8461 | 0.7878 | 0.8158 |
| Extra Trees Classifier | 0.7609 | 0.8267 | 0.8544 | 0.7775 | 0.8141 |
| Logistic Regression | 0.7609 | 0.8235 | 0.8937 | 0.7590 | 0.8208 |
| Linear Discriminant Analysis | 0.7538 | 0.8220 | 0.9129 | 0.7437 | 0.8196 |
| K Neighbors Classifier | 0.7289 | 0.7669 | 0.8403 | 0.7482 | 0.7916 |
| Naive Bayes | 0.7004 | 0.7425 | 0.8313 | 0.7218 | 0.7727 |
| Quadratic Discriminant Analysis | 0.6382 | 0.7095 | 0.7519 | 0.6246 | 0.6664 |
| Decision Tree Classifier | 0.6978 | 0.6830 | 0.7486 | 0.7559 | 0.7522 |
| SVM (Linear Kernel) | 0.7505 | 0.0000 | 0.8723 | 0.7579 | 0.8106 |
| Ridge Classifier | 0.7528 | 0.0000 | 0.9154 | 0.7418 | 0.8194 |
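
As a reading aid for the metric columns, F1 is the harmonic mean of precision and recall. The short sketch below recomputes F1 from the reported precision and recall of two rows in the table above; the helper name `f1_from_pr` is hypothetical. Small gaps are expected if the reported values are averages over cross-validation folds, which is an assumption here, since F1 computed from averaged precision and recall need not equal the average of per-fold F1 scores.

```python
def f1_from_pr(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from the table above.
rows = {
    "Gradient Boosting Classifier": (0.7908, 0.8618, 0.8247),
    "Logistic Regression": (0.7590, 0.8937, 0.8208),
}

for name, (precision, recall, reported) in rows.items():
    recomputed = f1_from_pr(precision, recall)
    print(f"{name}: recomputed={recomputed:.4f}, reported={reported:.4f}")
```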

| Model | Accuracy | AUC | Recall | Precision | F1 |
|---|---|---|---|---|---|
| Gradient Boosting Classifier | 0.7685 | 0.8421 | 0.8629 | 0.7770 | 0.8177 |

| Model | Accuracy | AUC | Recall | Precision | F1 |
|---|---|---|---|---|---|
| Gradient Boosting Classifier | 0.8106 | 0.8883 | 0.8643 | 0.8009 | 0.8314 |
| Light Gradient Boosting Machine | 0.8116 | 0.8871 | 0.8645 | 0.8024 | 0.8322 |
| Random Forest Classifier | 0.8099 | 0.8850 | 0.8541 | 0.8058 | 0.8292 |
| Extra Trees Classifier | 0.8083 | 0.8831 | 0.8600 | 0.8003 | 0.8290 |
| Ada Boost Classifier | 0.8062 | 0.8829 | 0.8551 | 0.8002 | 0.8267 |
| Logistic Regression | 0.7928 | 0.8684 | 0.8756 | 0.7717 | 0.8203 |
| Linear Discriminant Analysis | 0.7757 | 0.8610 | 0.9010 | 0.7403 | 0.8127 |
| K Neighbors Classifier | 0.7566 | 0.8163 | 0.8460 | 0.7405 | 0.7897 |
| Naive Bayes | 0.7246 | 0.8003 | 0.8507 | 0.7024 | 0.7695 |
| Quadratic Discriminant Analysis | 0.6679 | 0.7674 | 0.8494 | 0.5826 | 0.6909 |
| Decision Tree Classifier | 0.7306 | 0.7289 | 0.7502 | 0.7511 | 0.7505 |
| SVM (Linear Kernel) | 0.7851 | 0.0000 | 0.8687 | 0.7655 | 0.8136 |
| Ridge Classifier | 0.7755 | 0.0000 | 0.9011 | 0.7400 | 0.8126 |

| Model | Accuracy | AUC | Recall | Precision | F1 |
|---|---|---|---|---|---|
| Gradient Boosting Classifier | 0.8071 | 0.8899 | 0.8635 | 0.7928 | 0.8266 |

| Model | Accuracy | AUC | Recall | Precision | F1 |
|---|---|---|---|---|---|
| Light Gradient Boosting Machine | 0.8325 | 0.9117 | 0.8419 | 0.8250 | 0.8333 |
| Random Forest Classifier | 0.8317 | 0.9081 | 0.8364 | 0.8271 | 0.8317 |
| Extra Trees Classifier | 0.8262 | 0.9050 | 0.8408 | 0.8154 | 0.8279 |
| Gradient Boosting Classifier | 0.8249 | 0.9027 | 0.8432 | 0.8120 | 0.8273 |
| Ada Boost Classifier | 0.7953 | 0.8716 | 0.8131 | 0.7837 | 0.7980 |
| K Neighbors Classifier | 0.7853 | 0.8545 | 0.8409 | 0.7554 | 0.7958 |
| Logistic Regression | 0.7691 | 0.8468 | 0.8125 | 0.7459 | 0.7777 |
| Linear Discriminant Analysis | 0.7651 | 0.8446 | 0.8303 | 0.7329 | 0.7785 |
| Quadratic Discriminant Analysis | 0.6844 | 0.7974 | 0.9007 | 0.6277 | 0.7396 |
| Naive Bayes | 0.6893 | 0.7894 | 0.8790 | 0.6357 | 0.7378 |
| Decision Tree Classifier | 0.7540 | 0.7540 | 0.7491 | 0.7547 | 0.7518 |
| SVM (Linear Kernel) | 0.7637 | 0.0000 | 0.8031 | 0.7430 | 0.7715 |
| Ridge Classifier | 0.7651 | 0.0000 | 0.8303 | 0.7329 | 0.7785 |

| Model | Accuracy | AUC | Recall | Precision | F1 |
|---|---|---|---|---|---|
| Light Gradient Boosting Machine | 0.8273 | 0.9120 | 0.8457 | 0.8071 | 0.8259 |

| Quarter | Category | VV_Diff vs. Threshold: Num | VV_Diff vs. Threshold: p-Value (Two-Sided) | VV_Diff vs. Threshold: p-Value (Greater) | AR_Diff vs. Threshold: Num | AR_Diff vs. Threshold: p-Value (Two-Sided) | AR_Diff vs. Threshold: p-Value (Greater) | SI_Diff vs. Threshold: Num | SI_Diff vs. Threshold: p-Value (Two-Sided) | SI_Diff vs. Threshold: p-Value (Greater) |
|---|---|---|---|---|---|---|---|---|---|---|
| Q2 | Supported | 7169 | $8.95 \times 10^{-191}$ | $4.47 \times 10^{-191}$ | 2638 | $3.971 \times 10^{-5}$ | $1.98 \times 10^{-5}$ | 4420 | $4.43 \times 10^{-140}$ | $2.21 \times 10^{-140}$ |
| Q2 | Not Supported | 13,962 | | | 18,493 | | | 16,711 | | |
| Q3 | Supported | 6601 | 0.0 | 0.0 | 5078 | $4.63 \times 10^{-138}$ | $2.31 \times 10^{-138}$ | 5317 | $1.61 \times 10^{-200}$ | $8.08 \times 10^{-201}$ |
| Q3 | Not Supported | 14,530 | | | 16,053 | | | 15,814 | | |
| Q4 * | Supported | 5298 | $5.63 \times 10^{-7}$ | $2.81 \times 10^{-7}$ | 7048 | $2.82 \times 10^{-23}$ | $1.41 \times 10^{-23}$ | 3912 | 0.0 | 0.0 |
| Q4 * | Not Supported | 13,776 | | | 12,026 | | | 15,162 | | |
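
In the table above, each "greater" p-value is about half of the corresponding two-sided p-value, which is what one expects from a symmetric test statistic when the observed effect lies in the hypothesized direction. The sketch below illustrates that relationship with a one-sample z-test against a threshold. This is an assumption for illustration only: the test actually used in the paper is not named in this section, and the sample values and the function name `z_test_against_threshold` are hypothetical.

```python
import math
from statistics import NormalDist, mean, stdev


def z_test_against_threshold(diffs, threshold=0.0):
    """One-sample z-test of mean(diffs) against `threshold`.

    Returns (z, two-sided p-value, one-sided "greater" p-value).
    """
    n = len(diffs)
    standard_error = stdev(diffs) / math.sqrt(n)
    z = (mean(diffs) - threshold) / standard_error
    std_normal = NormalDist()
    p_greater = 1.0 - std_normal.cdf(z)               # H1: mean > threshold
    p_two_sided = 2.0 * (1.0 - std_normal.cdf(abs(z)))  # H1: mean != threshold
    return z, p_two_sided, p_greater


# Hypothetical differences for a handful of students.
diffs = [0.12, 0.05, 0.20, -0.03, 0.15, 0.08, 0.11, 0.02, 0.17, 0.09]
z, p_two, p_greater = z_test_against_threshold(diffs, threshold=0.0)
print(f"z={z:.3f}, two-sided p={p_two:.3g}, greater p={p_greater:.3g}")
```

With samples as large as those in the table, p-values can become smaller than the smallest representable floating-point number, which is one plausible reason some entries are reported as 0.0.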


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Nazempour, R.; Darabi, H. Personalized Learning in Virtual Learning Environments Using Students’ Behavior Analysis. Educ. Sci. 2023, 13, 457. https://doi.org/10.3390/educsci13050457
