Toward a Framework of Integrating Ability: Conceptualization and Design of an Integrated Physics and Mathematics Test

Abstract

1. Introduction

1.1. Integrated STEM Education

1.2. Evaluating Integrated STEM Education

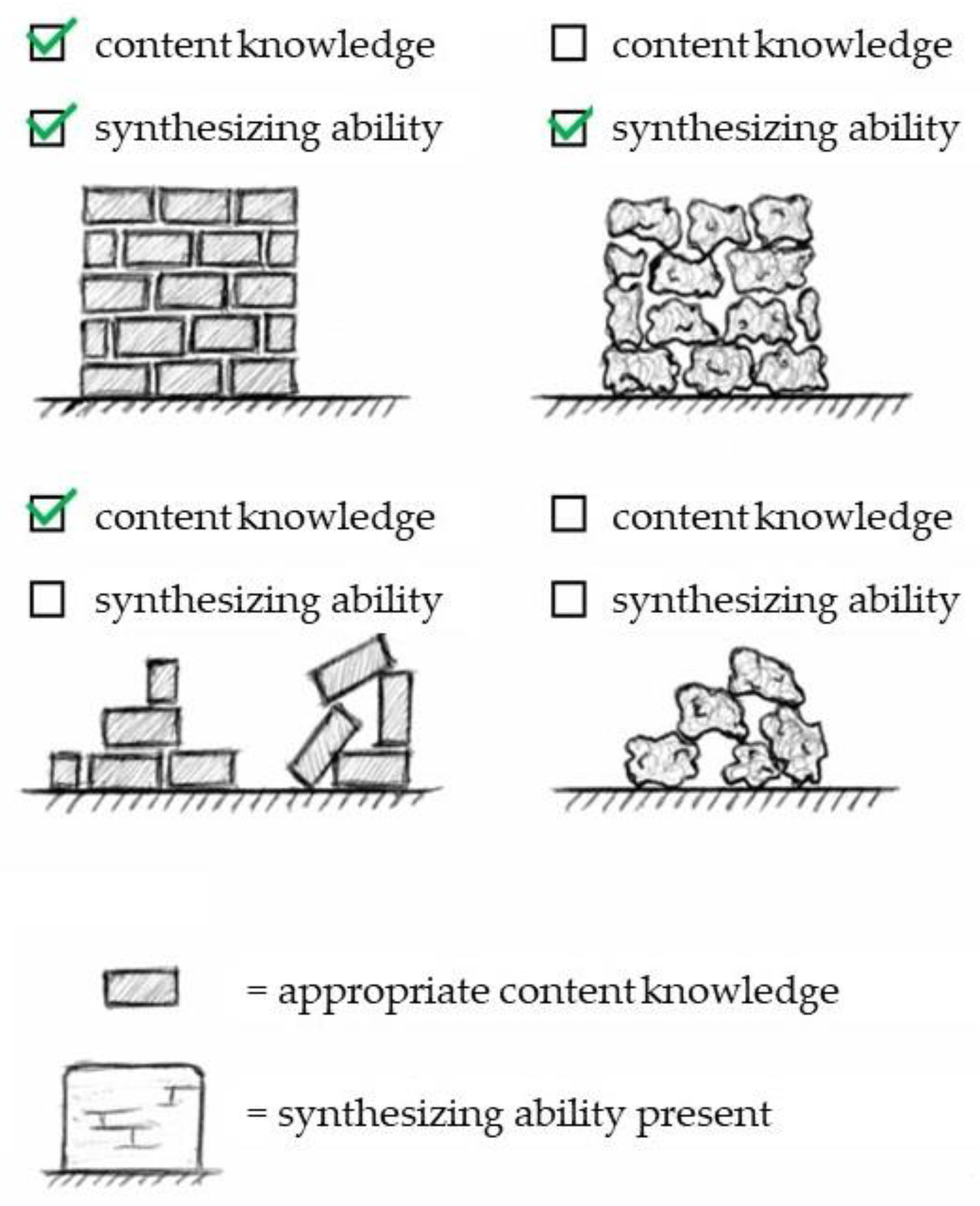

1.3. “Integrating Ability”: Definition and Framework

2. Method

2.1. Developing the Instrument

- (1) Establishing the test format;
- (2) Listing the physics and mathematics concepts that have been introduced in the ongoing school year;
- (3) Identifying cross-disciplinary links between these concepts;
- (4) Developing draft items that cover these links;
- (5) Having experts review these draft items;
- (6) Implementing the experts’ feedback.

2.2. Validation of the Instrument

2.2.1. Participants

2.2.2. Procedure

2.2.3. Analysis of Instrument Validity

3. Results

4. Discussion

4.1. Applications

4.2. Limitations and Directions for Future Research

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | Appropriate content knowledge | Inappropriate content knowledge |
|---|---|---|
| Synthesizing ability present | Situation 1 | Situation 2 |
| Synthesizing ability absent | Situation 3 | Situation 4 |

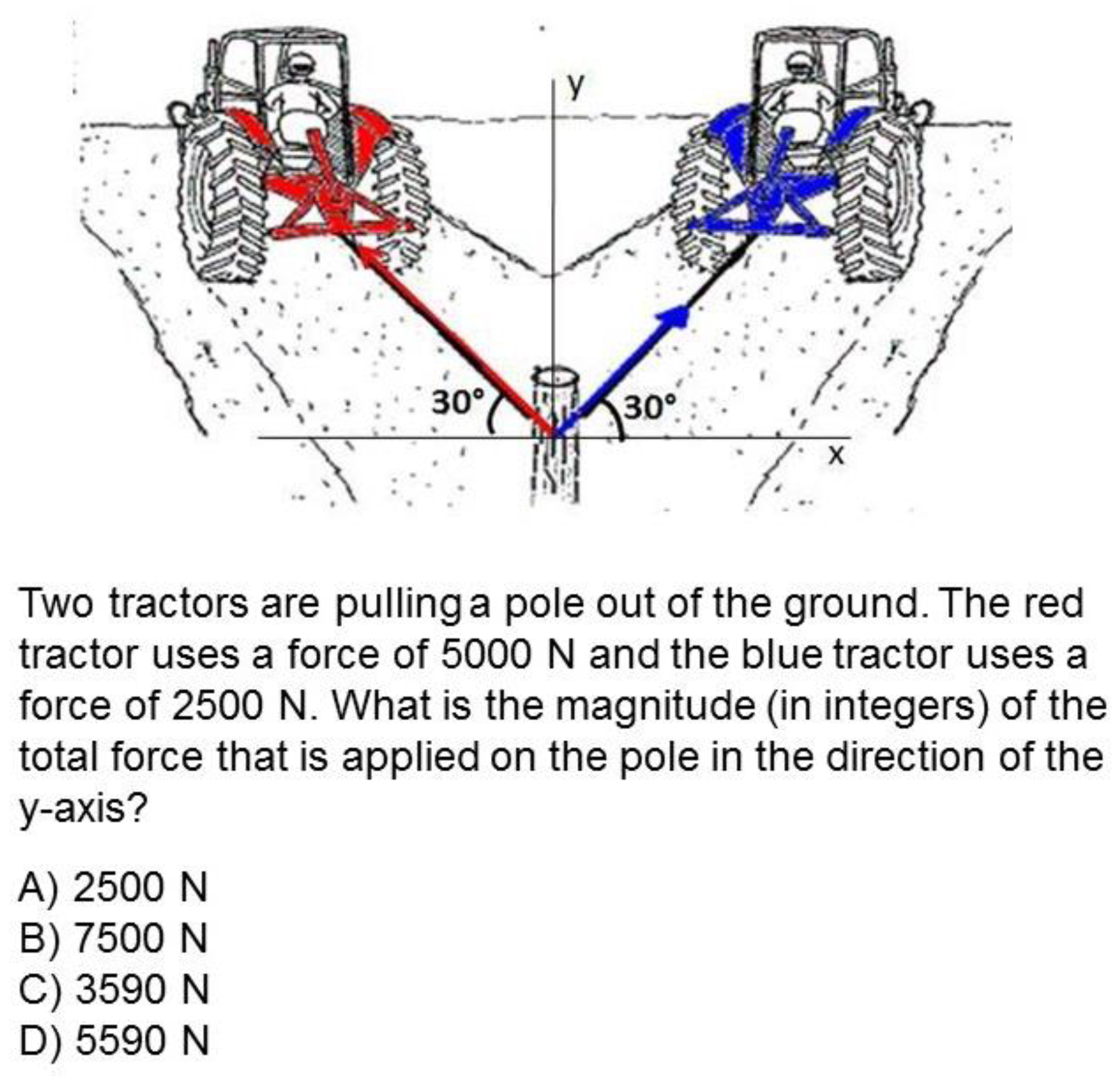

Question: Driver A drives on a straight road from north to south with a constant speed of 15 m/s. Driver B is driving on the same road from south to north with a constant speed of 20 m/s. At time $t = 0$ s, the two drivers are 1 km apart and driving towards each other. Determine the position and the time at which the two drivers cross each other.

Steps towards the ideal answer:

| | Synthesizing ability present | Synthesizing ability absent |
|---|---|---|
| Appropriate content knowledge | Steps (1) through (8) of the ideal answer are present in some form. The respondent understands the concepts of speed and velocity and understands that both cars perform a uniform linear motion described by a linear equation. The respondent can set up the equations for the drivers and understands that to find the crossing point, the system of equations must be solved for $x$ and $t$. He/she is then able to solve the system of equations. | No steps of the ideal answer are present, except possibly Step (1). The respondent writes down some correct equations relating to velocity (such as $v = \Delta x/\Delta t$) and position (such as $x(t) = x_0 + v \cdot t$), but does not know what to do with them. No mathematics is present because the respondent does not know which mathematics to use, though this does not mean the respondent lacks the appropriate mathematical content knowledge; he/she just does not know how it can help solve the question. |
| Inappropriate content knowledge | The respondent understands that Step (4) of the ideal answer must be performed, but cannot perform Steps (1)–(3); even if the correct equations were provided, he/she would not be able to perform Steps (5)–(8). For example, the respondent might write the equation for Driver B without accounting for the opposite direction of the motion (i.e., the minus sign for the velocity): $x_B(t) = 1000\ \mathrm{m} - 20\ \mathrm{m/s} \cdot t$. Even if the correct system of equations were provided, the respondent would not be able to solve it correctly (e.g., he/she could only solve it for $x$ and would not understand how to find the related time $t$). | None of the steps of the ideal answer are present. The respondent does not know what to do at all. The answer probably remains blank since there is no, or incorrect, content knowledge about velocity, or the respondent employs some incorrect formulae for velocity. Likely no mathematics will be observable in the solution at all since the respondent does not know which mathematics to use. |
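The integration asked for by this sample question (two uniform linear motions combined into a system of linear equations) can be illustrated with a minimal sketch. The coordinate system is an assumption, not taken from the source: the axis points north with its origin at Driver B's position at $t = 0$, so that $x_A(t) = 1000 - 15t$ and $x_B(t) = 20t$ (metres and seconds). The function name `crossing` is hypothetical.

```python
from fractions import Fraction


def crossing(x_a0, v_a, x_b0, v_b):
    """Solve x_a0 + v_a * t = x_b0 + v_b * t and return (t, x) exactly."""
    if v_a == v_b:
        raise ValueError("equal velocities: no unique crossing point")
    # Rearranged: (v_a - v_b) * t = x_b0 - x_a0
    t = Fraction(x_b0 - x_a0, v_a - v_b)
    x = x_a0 + v_a * t
    return t, x


# Assumed axis: positive towards north, origin at Driver B's start (south end).
# Driver A starts 1000 m to the north and moves south (-15 m/s);
# Driver B starts at the origin and moves north (+20 m/s).
t_cross, x_cross = crossing(1000, -15, 0, 20)
print(f"t = {float(t_cross):.2f} s, x = {float(x_cross):.2f} m north of B's start")
```

Under this assumed axis the drivers meet after 200/7 s (about 28.6 s), 4000/7 m (about 571 m) north of Driver B's starting point, which is the same location as about 429 m south of Driver A's starting point.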

| Physics | Mathematics |
|---|---|
| I. Position (uniformly accelerated linear motion); II. Velocity (uniformly accelerated linear motion); III. Average velocity; IV. Acceleration; V. Average acceleration; VI. Force; VII. Torque; VIII. Reflection of light; IX. Refraction of light | I. First-order function/equation; II. Slope; III. Surface trapezoid; IV. System of equations; V. Vector; VI. Sine, cosine, tangent; VII. Pythagoras |

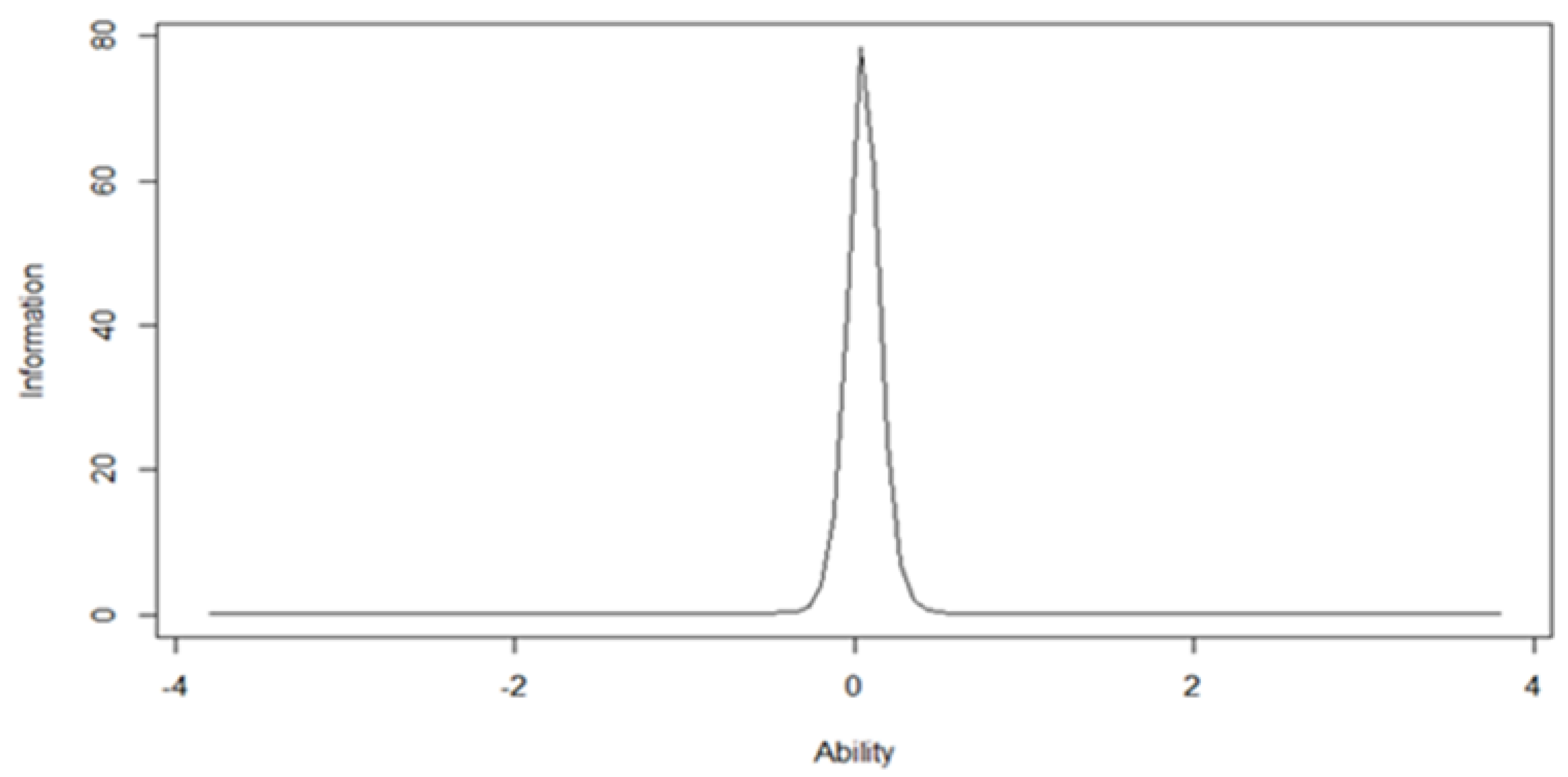

| Model | AIC | BIC | Log-likelihood |
|---|---|---|---|
| Rasch model | 5269 | 5315 | −2625 |
| 1-PL model | 5202 | 5253 | −2591 |
| 2-PL model | 5193 | 5285 | −2579 |
| 3-PL model | 5203 | 5342 | −2576 |
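As a reading aid for the fit statistics above, the following sketch picks the model with the lowest AIC and the lowest BIC and computes likelihood-ratio statistics between successive models. The values are transcribed from the table; treating the four models as nested in the order Rasch, 1-PL, 2-PL, 3-PL is an assumption, and the sketch does not claim to reproduce the authors' own selection procedure.

```python
# Fit statistics transcribed from the model-comparison table
# (lower AIC/BIC indicates a better trade-off between fit and complexity).
fit = {
    "Rasch": {"aic": 5269, "bic": 5315, "loglik": -2625},
    "1-PL": {"aic": 5202, "bic": 5253, "loglik": -2591},
    "2-PL": {"aic": 5193, "bic": 5285, "loglik": -2579},
    "3-PL": {"aic": 5203, "bic": 5342, "loglik": -2576},
}

best_by_aic = min(fit, key=lambda name: fit[name]["aic"])
best_by_bic = min(fit, key=lambda name: fit[name]["bic"])

# Likelihood-ratio statistic 2 * (logLik_richer - logLik_simpler),
# assuming each model is nested in the next one in this order.
order = ["Rasch", "1-PL", "2-PL", "3-PL"]
lr_stats = {
    f"{simple} vs {richer}": 2 * (fit[richer]["loglik"] - fit[simple]["loglik"])
    for simple, richer in zip(order, order[1:])
}

print("lowest AIC:", best_by_aic)
print("lowest BIC:", best_by_bic)
print(lr_stats)
```

With these values the two criteria disagree: AIC is lowest for the 2-PL model, whereas BIC, which penalizes additional parameters more heavily, is lowest for the 1-PL model.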

| Items | α | β |
|---|---|---|
| I1 | 0.42 | −0.92 |
| I3 | 17.91 | 0.06 |
| I4 | 0.38 | 0.69 |
| I5 | 0.57 | 0.96 |
| I7 | 0.18 | 2.09 |
| I9 | 0.33 | 2.20 |
| I10 | 0.24 | 3.47 |
| I11 | 0.39 | 4.08 |
| I13 | 0.31 | 5.60 |
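To make the item parameters easier to interpret, the sketch below evaluates a two-parameter logistic item response function, $P(\theta) = 1 / (1 + e^{-\alpha(\theta - \beta)})$, for a respondent of average ability ($\theta = 0$). It assumes that α is the discrimination and β the difficulty under this parameterization, which the table itself does not state; the function name `p_correct` is hypothetical.

```python
import math


def p_correct(theta, alpha, beta):
    """2-PL probability of a correct response (assumed parameterization)."""
    return 1.0 / (1.0 + math.exp(-alpha * (theta - beta)))


# (alpha, beta) pairs transcribed from the item-parameter table.
items = {
    "I1": (0.42, -0.92),
    "I3": (17.91, 0.06),
    "I4": (0.38, 0.69),
    "I5": (0.57, 0.96),
    "I7": (0.18, 2.09),
    "I9": (0.33, 2.20),
    "I10": (0.24, 3.47),
    "I11": (0.39, 4.08),
    "I13": (0.31, 5.60),
}

# Probability of success on each item for a respondent with theta = 0.
probs = {name: p_correct(0.0, a, b) for name, (a, b) in items.items()}
for name, p in probs.items():
    print(f"{name}: {p:.2f}")
```

Under this assumption, a respondent whose ability equals an item's β has a 50% chance of answering it correctly, so only I1 (negative β) is more likely than not to be solved at $\theta = 0$.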

| Variables | 1. | 2. | 3. | 4. |
|---|---|---|---|---|
| 1. IPM | | | | |
| 2. Physics application | 0.12 ** | | | |
| 3. Mathematics application | 0.19 *** | 0.27 *** | | |
| 4. Technological concepts | −0.05 | −0.07 ** | −0.10 *** | |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


De Loof, H.; Ceuppens, S.; De Meester, J.; Goovaerts, L.; Thibaut, L.; De Cock, M.; Dehaene, W.; Depaepe, F.; Knipprath, H.; Boeve-de Pauw, J.; Van Petegem, P. Toward a Framework of Integrating Ability: Conceptualization and Design of an Integrated Physics and Mathematics Test. Educ. Sci. 2023, 13, 249. https://doi.org/10.3390/educsci13030249
