Abstract

Purpose: This systematic review assesses the effectiveness of face-to-face and online delivery modes of continuing professional development (CPD) for science teachers. It focuses on three aspects: evaluating the effectiveness of these modes, summarizing the literature on the factors influencing them, and conducting a comparative analysis of their advantages. Methods: The research team employed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) method and the Mixed Methods Appraisal Tool (MMAT) for article quality assessment. A total of 12 articles, selected from a potential 82, were included in the study. Results: This research suggests that the face-to-face and online CPD modes are equally effective and that external factors, such as psychological variables and establishing communication communities, influence their effectiveness. Face-to-face CPD fosters communication communities, while online CPD offers geographical flexibility and cost-saving benefits. Implications: The effectiveness of face-to-face and online CPD relies on external psychosocial factors. Future research should focus on strategies to enhance participants’ communication engagement in online communities. Additionally, it is worth conducting further investigations of the potential relationships between psychosocial variables and the effectiveness of online CPD, along with the impact of digital skills on online CPD.

1. Introduction

Continuing professional development (CPD) refers to various learning activities intended to enhance professional capabilities [1,2]. Globally, governments and schools use CPD to improve teachers’ capabilities and the quality of teaching, thereby promoting educational quality, and there is broad consensus that CPD is an effective means of elevating teachers’ pedagogical skills and instructional competence. For educators, professional development is widely recognized as a planned, continuous, and lifelong learning process through which educational quality is enhanced [3]. During this process, teachers’ personal qualities and real-world practice are improved [4]. Teachers should be lifelong learners who adapt to and proficiently embrace new teaching methodologies and emerging tools over time. This adaptation is essential to ensuring their ability to integrate new technology into the classroom seamlessly and to a high standard [5,6].

The field of CPD research has yielded notable academic achievements [7,8]. Through CPD training, educators not only enrich their knowledge in their respective fields but also improve their teaching practices [9]. The significance and value of CPD for guaranteeing high-quality education has garnered substantial attention from researchers [10], with many studies on CPD being conducted in the educational field. The research designs of such studies vary widely; for example, some evaluate the impact of CPD projects on teaching practice, while others analyze the factors that hinder effective participation. Additionally, research on CPD explores enhancements and policy frameworks in an attempt to address the shortcomings of CPD initiatives [11,12,13].

The development of computer and networking technology has triggered highly significant social changes, including in the field of education. The combination of education and technology has always been a focus of research; researchers have also proposed changes to the delivery modes of education involving the use of computer and networking technology, such as E-learning [14]. By harnessing the power of computers and network connections, learners can easily access learning resources from almost any location [15]. E-learning has also led to new insights into the evolution of CPD-related fields [16,17]. The COVID-19 pandemic forced governments and educational institutions to adapt quickly, and Internet-based modes of E-learning assumed the role of maintaining the stable operation of the education system. The effective implementation of online education during the pandemic has generated new ideas for delivering CPD over the Internet, and research results have confirmed the success of the online CPD delivery mode [18,19,20,21]. In the post-COVID-19 era, there has been a notable surge in CPD projects launched through online platforms across diverse fields. This approach adeptly mitigates the constraints inherent in face-to-face CPD, such as scheduling and geographical limitations [22,23,24,25]. This raises a fundamental question: are there indispensable aspects of face-to-face CPD delivery modes? It has also prompted a series of related questions. For instance, can online CPD enhance teachers’ professional knowledge and practical skills as effectively as traditional modes? What factors influence these two delivery methods, and are they consistent? Can online teaching models be leveraged for the professional development of educators in the future? These questions constitute the foundation of the present study. To address them, the research team conducted a systematic literature review that comprehensively assessed and analyzed the existing research findings regarding CPD across different delivery modes.

There are many research papers on CPD in the field of education. To keep this article’s focus unambiguous, the research team concentrated on studies relevant to science teachers; this encompassed various disciplines, including science, technology, engineering, mathematics, biology, and chemistry. This decision was based on two considerations. First, science education is the cornerstone of a country’s competitiveness, and it is of paramount importance during the K-12 education stage [26]. Second, many countries, such as the United States, China, and India, have a shortage of high-quality teachers in science fields owing to differences in geography and levels of economic development. This shortage is more serious in high-poverty and rural areas; teachers seeking employment in these areas generally find it harder to meet the qualification requirements for all the subjects they teach and are also unable to access content-specific professional development [27].

Effective CPD has the potential to change teachers’ practices and enhance students’ learning outcomes [28], but determining its effectiveness can be challenging because it is subjective; what works for one participant may not work for another. However, evaluating the efficacy of CPD is crucial not only for personal and professional development but also for determining whether CPD activities should be revisited. To this end, researchers have attempted to create various evaluation frameworks for assessing the effectiveness of CPD.

In 1975, Donald Kirkpatrick introduced an evaluation model in his book Evaluating Training Programs, comprising four core levels: reaction, learning, behavior, and results [29]. Kirkpatrick’s model has since garnered significant recognition as a tool for gauging the effectiveness of training programs, including teachers’ CPD; however, it does have certain limitations. First, the model places significant emphasis on quantitative measurements, yet the sections on behavior and results can be somewhat abstract and challenging to quantify. Second, it lacks an assessment of social variables and the educational organizational climate. The model is general and not specific to the educational field.

In 2000, Thomas Guskey provided a method primarily focused on specifically evaluating CPD’s effectiveness in the educational field. This method comprises five levels, providing a strong basis for ensuring that a CPD offering is effective. These include (1) participants’ reactions, (2) participants’ learning, (3) organization and support, (4) participants’ use of new knowledge or skills, and (5) student learning outcomes [30]. Additionally, Guskey summarized thirteen characteristics of effective CPD, as follows: (1) providing sufficient time and resources; (2) promoting collegiality and collaboration; (3) including procedures for evaluation; (4) modeling high-quality instruction; (5) being based in schools or on site; (6) building leadership capacity; (7) being built on the identified needs of the teachers; (8) being driven by analyses of student learning data; (9) focusing on individual and organizational improvement; (10) including follow-up and support; (11) being ongoing and embedded in the job; (12) taking a variety of forms; and (13) promoting continuous inquiry and reflection [31]. Guskey’s approach exhibits limitations, particularly in its neglect of contextual factors such as student characteristics, teacher attributes, and school features. Desimone’s suggested evaluative framework mitigates this constraint.

Desimone [32] provided a comprehensive framework for evaluating the effects of professional development. The framework identifies three aspects of professional development relevant to the measurement of its effectiveness: (1) the core features of effective professional development, namely content focus, active learning, coherence, duration, and collective participation; (2) the way this effective professional development affects teachers’ knowledge, their practice, and students’ learning; and (3) contextual factors, such as student characteristics, teacher characteristics, and school characteristics, that are related to the effectiveness of professional development [32,33]. This conceptual framework encompasses the core elements of effective professional development while also considering the factors that mediate and moderate its impact. These factors include environmental variables and the characteristics of the teachers themselves.

Darling-Hammond and McLaughlin [34] provided a set of essential characteristics necessary for optimal professional development. These attributes comprise (1) engaging teachers in the concrete tasks of teaching, assessment, observation, and reflection that illuminate the processes of learning and development; (2) being grounded in inquiry, reflection, and participant-driven experimentation; (3) being collaborative, involving a sharing of knowledge among educators and a focus on teachers’ communities of practice rather than on individual teachers; (4) being connected to and derived from teachers’ work with their students; (5) being sustained, ongoing, intensive, and supported by modeling, coaching, and the collective solving of specific problems in practice; and (6) being connected to other aspects of school change [34].

In 2017, Ravitz and his co-authors outlined seven prevailing characteristics that delineate successful teacher professional development programs: (1) a primary focus on the content; (2) the application of active learning strategies grounded in adult learning theory; (3) the encouragement of collaboration, often embedded within teachers’ work settings; (4) the utilization of models and demonstrations to illustrate effective teaching practices; (5) the provision of coaching and expert guidance; (6) the establishment of opportunities for feedback and self-reflection; and (7) a sustained duration [35].

From the work outlined above, it can be concluded that there are two main avenues for evaluating the effectiveness of CPD. One approach focuses on assessing the impact of CPD based on participants’ performance by measuring student learning outcomes. The second approach centers around evaluating the effectiveness of CPD through collecting teachers’ personal feedback and reflection reports. The distinct pathways of gauging CPD effectiveness, ranging from objective measurements of student learning outcomes to educators’ subjective reflections, underscore this educational phenomenon’s complexity.

1.1. Definition of Terms

In the context of this discussion, certain key terms are defined to provide clarity and understanding. CPD can be delivered face-to-face, via the Internet, or through a ‘hybrid’ combination of the two [36].

1.1.1. Face-to-Face Delivery of CPD

Face-to-face mode is a traditional way of delivering CPD. This mode can take various forms, such as workshops, seminars, and in-service training [37]. In this form of CPD, the project needs to be carried out at the same location at a specified time [38].

1.1.2. Online Delivery of CPD

Unlike the face-to-face delivery of CPD, participants can engage in online CPD activities based on their schedule and location as long as they can access the Internet using a computer [39,40]. The delivery modes of online CPD are more diverse, such as online meetings, Massive Open Online Courses (MOOCs), blended learning, etc. [17,41].

2. Research Questions

This study aims to provide a comprehensive analysis of the effectiveness of both online CPD and face-to-face CPD, as well as their impact on science educators, by conducting a systematic review. It aims to further explore issues in the field of online CPD and provide valuable insights, with a particular focus on the following questions:

- RQ1. How does the effectiveness of face-to-face CPD compare to that of online CPD for science educators?

- RQ2. What factors could potentially impact the efficacy of diverse forms of CPD programs?

- RQ3. What are the advantages of different CPD delivery modes?

3. Methodology

This study employed the systematic literature review (SLR) method to ensure that future scholars can replicate this research and conduct further studies. Throughout our systematic literature review, we adhered to the relevant guidelines provided by the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) [42,43]. The research team conducted a systematic research process to address the questions posed in this study, including literature retrieval, organization, and analysis.

3.1. Inclusion and Exclusion Criteria

Inclusion and exclusion criteria were established to clearly compare the effectiveness of both traditional and online CPD models for science teachers and investigate the impact of various CPD delivery modes on the effectiveness of science teachers. The specific criteria for inclusion and exclusion are detailed in Table 1.

Table 1.

Inclusion and exclusion criteria.

3.2. Data Source

Electronic databases, specifically Web of Science (WoS), Scopus, ERIC, and ScienceDirect, were searched for the relevant literature published up to 12 October 2023 (these articles are included and updated in the database).

The research team chose these databases because they are internationally renowned academic literature databases that feature numerous peer-reviewed journals, indicating that the selected articles have undergone expert review, are of high quality, and their research findings can be relied upon. Additionally, these databases are strongly aligned with the research field, particularly the field of education, offering extensive coverage and rich resources; researchers can thus easily access documents related to their research topics. They also have global coverage and encompass research outcomes from various fields and regions, facilitating the acquisition of diverse and authoritative information on a global scale. Hence, selecting these four databases ensures the reliability and depth of the research.

3.3. Search Strategy

The primary objective of this study was to investigate and compare the impact of face-to-face and online CPD on science teachers’ literacy and practical capabilities. Recognizing the varied terminology used in fields closely related to CPD, the research team employed a comprehensive search strategy that integrated all pertinent search terms into the search algorithm. In addition, we diligently identified synonyms and commonly used alternatives to the term “CPD”. This meticulous approach resulted in the refined search terms presented in Table 2.

Table 2.

Refined search terms.

These keywords were combined using the Boolean operators AND and OR. The search strings used for database retrieval are summarized and presented below:

(“Continuing Professional Development” OR “Continuous Professional Development” OR “CPD”)

AND

Science Teachers

AND

(“Face-to-face” OR “Online”)

AND

(“Effectiveness” OR “Efficacy”)
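The way the four term groups above combine can be sketched in a few lines of Python. This is only an illustration of the Boolean logic, not the exact query submitted to any database: the function name `build_query` is hypothetical, quoting "Science Teachers" as an exact phrase is an assumption (the term appears unquoted above), and database-specific field tags are omitted.

```python
# Term groups as listed in the search strings above. Terms within a group
# are joined with OR; the groups themselves are joined with AND.
TERM_GROUPS = [
    ["Continuing Professional Development",
     "Continuous Professional Development",
     "CPD"],
    ["Science Teachers"],  # assumption: treated as a quoted phrase
    ["Face-to-face", "Online"],
    ["Effectiveness", "Efficacy"],
]


def build_query(groups):
    """Return one Boolean query string built from groups of search terms."""
    clauses = []
    for terms in groups:
        # Quote each term so multi-word terms are matched as phrases.
        quoted = " OR ".join(f'"{term}"' for term in terms)
        clauses.append(f"({quoted})")
    return " AND ".join(clauses)


query = build_query(TERM_GROUPS)
print(query)
```

Keeping the term groups in a list makes it easy to record exactly which synonyms were searched and to rerun the same string in each database.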

This comprehensive approach allowed us to retrieve the relevant literature, forming the foundation for our systematic review. Each research team member applied the search strings for literature retrieval in the databases, with summary judgments made against each of the above criteria. The team then met to review all the decisions depicted in Table 3.

Table 3.

Search strategy and number of studies (results returned).

3.4. Data Extraction

During the data extraction stage, the research team used EndNote20 software to edit and organize the documents and Excel software (version 2022) to summarize and manage the results.

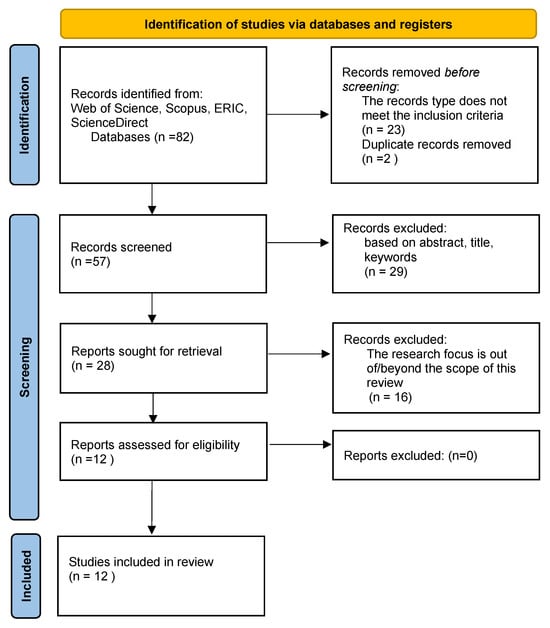

After the database search, the retrieved articles were imported into EndNote20, yielding 82 manuscripts. Of these, 23 articles did not meet the first inclusion criterion, which concerns article type, and 2 were duplicates; these 25 documents were excluded. The research team then reviewed the titles, abstracts, and keywords of the remaining articles and removed 29 that did not align with the research focus specified in the second inclusion criterion. Next, the team members reviewed the full text of the remaining articles, 16 of which did not meet the second inclusion criterion and so were excluded. A quality assessment was performed on the remaining 12 documents. Figure 1 shows a PRISMA flowchart reporting the number of articles included and excluded at each step.

Figure 1.

Flowchart of the systematic review selection process based on the PRISMA flow diagram.
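As a quick consistency check on the counts reported for the selection process, the arithmetic can be written out as a short Python sketch. The counts are taken from the text above; the step labels and the helper name `remaining_after_each_step` are illustrative only.

```python
# Counts reported in the selection narrative above.
identified = 82
exclusions = [
    ("article type (first inclusion criterion)", 23),
    ("duplicates", 2),
    ("title/abstract/keyword screening", 29),
    ("full-text review", 16),
]


def remaining_after_each_step(start, steps):
    """Subtract each exclusion in turn and record how many records remain."""
    remaining = start
    trail = []
    for label, removed in steps:
        remaining -= removed
        trail.append((label, remaining))
    return trail


trail = remaining_after_each_step(identified, exclusions)
for label, remaining in trail:
    print(f"after {label}: {remaining} remaining")
```

The final value in the trail matches the 12 articles carried forward to quality assessment.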

3.5. Quality Assessment

The Mixed Methods Appraisal Tool (MMAT) was used to judge the quality of the studies. The MMAT assesses study quality against five evaluation criteria and is intended for the appraisal stage of systematic reviews that include qualitative, quantitative, and mixed-methods studies [44,45].

Quality assessment involves two steps. First, the research team screens the articles and assigns each to one of the MMAT’s five categories of study design (e.g., qualitative, mixed methods). At this stage, the team also determines whether each document should proceed to the final appraisal. Per the MMAT User Guide, a paper must satisfy both of the MMAT’s screening questions before undergoing the conclusive assessment; responding with “No” or “Can’t tell” to either or both questions could signify that the paper is unsuitable for appraisal using the MMAT. Please refer to Table 4 for further details. Second, each paper is evaluated against the five quality criteria associated with its category. Each criterion can be rated in one of three ways: Y for yes, N for no, and CT for cannot tell. During the second-stage screening, when the answer is “no” or “cannot tell” to one or both screening questions, the steps detailed in Table 5 are followed.

Table 4.

Based on the first MMAT screening stage.

Table 5.

Based on MMAT’s second screening stage.
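The two-step logic described above can be sketched as a small Python helper. This is a minimal sketch under stated assumptions: the function names are hypothetical, screening answers are encoded with the same Y/N/CT codes as the criteria, and the tally of ratings is shown purely for bookkeeping; it is not a score prescribed by the MMAT or used by the research team.

```python
from collections import Counter

VALID_RATINGS = {"Y", "N", "CT"}  # yes / no / cannot tell


def passes_screening(answers):
    """Both screening questions must be answered 'Y' for appraisal to proceed."""
    return len(answers) == 2 and all(answer == "Y" for answer in answers)


def summarize_criteria(ratings):
    """Tally the ratings given to the five criteria of one study category."""
    if len(ratings) != 5 or any(r not in VALID_RATINGS for r in ratings):
        raise ValueError("expected five ratings drawn from Y, N, CT")
    counts = Counter(ratings)
    return {code: counts.get(code, 0) for code in ("Y", "N", "CT")}


def appraise(screening_answers, criteria_ratings):
    """Return a rating tally, or None if the paper fails the screening stage."""
    if not passes_screening(screening_answers):
        return None
    return summarize_criteria(criteria_ratings)


# Hypothetical paper: passes both screening questions, mixed criterion ratings.
print(appraise(["Y", "Y"], ["Y", "Y", "N", "CT", "Y"]))
```

Returning `None` for a paper that fails screening keeps the two stages separate, mirroring the distinction between Table 4 and Table 5.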

4. Results

According to this review, 12 studies referred to the effectiveness of different delivery modes of CPD for science teachers. The research design categories in Table 6 have been adjusted to correspond with those presented in the MMAT. Hong, Pluye, et al. [44] classified qualitative data collection and analysis methods that include case studies, such as focus groups, in-depth interviews, and hybrid thematic analyses (deductive and inductive), into Category 1 (qualitative studies). Studying the association between health-related outcomes and other factors at a specific point in time using cross-sectional analytical methods falls under Category 3 (quantitative non-randomized research). A “Survey Research method by which information is gathered by asking people questions on a specific topic and the data collection procedure is standardized and well defined” [44] belongs to Category 4 (quantitative descriptive studies). Research that involves combining qualitative (QUAL) and quantitative (QUAN) methods belongs to Category 5 (mixed-methods studies). The effectiveness of delivery modes of CPD is usually investigated using qualitative and mixed research approaches.

Table 6.

Research design of the included studies.

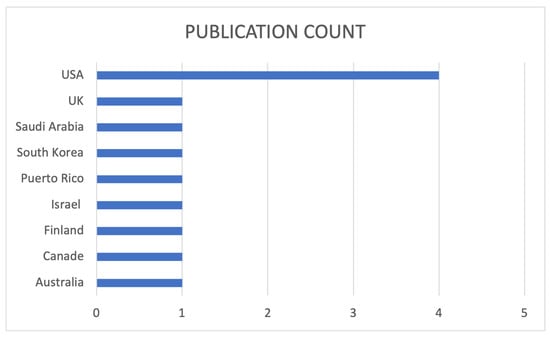

As shown in Figure 2, the largest share of the studies included in this review was conducted in the United States of America, which accounted for four research articles. The United Kingdom, Saudi Arabia, South Korea, Puerto Rico, Israel, Finland, Canada, and Australia were each represented by one research article. Within this sample, scholarly attention in the United States towards the effectiveness of various CPD modes for science teachers exceeds that of the other nations.

Figure 2.

Studies categorized by country of origin.

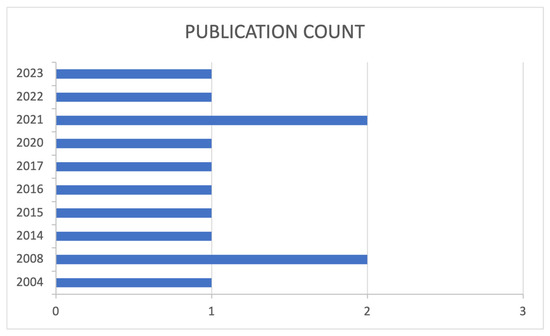

With just 12 publications included in the review analysis, Figure 3 illustrates how few articles have been published on this topic overall. The number of publications fluctuates from year to year and is higher in 2008 and 2021 than in other years. This fluctuation may reflect developments and changes in the research field, with the topic receiving more attention at some times than at others as the field of study evolves.

Figure 3.

Publication trends over time: quantity and years.

Three factors may have contributed to this review’s limited selection of articles. First, this study only considers the analysis of the effectiveness of different delivery modes of CPD, which is limited to specific research fields. Second, this study strictly limits the research objects, and the research subjects of the articles included in the review were all science teachers. Third, the research team restricted the included documents to those written and published in English.

4.1. Comparative Effectiveness of Face-to-Face and Online CPD

Empirical studies have shown that the online delivery mode for CPD training for science teachers is just as effective as the face-to-face training mode. In some specific situations, online CPD is more effective than traditional CPD [19]. A representative example is the mixed-methods empirical study that Binmohsen and Abrahams [21] conducted in Saudi Arabia to compare the effectiveness of the two delivery modes. To keep the training content roughly the same, the study set up two comparison groups: Group A received the traditional delivery mode, while Group B received the online mode. After completing their training, the participants filled out a questionnaire and took part in interviews. The findings indicate that online CPD is as effective as learning in a face-to-face environment and that the online mode effectively resolves participants’ conflicts with their school work schedules. It is particularly advantageous in the cultural context of Saudi Arabia, especially for female teachers. Many other studies have confirmed this finding, showing that face-to-face and online CPD are comparably effective in cultivating teachers’ quality and ability [46].

Ravitz et al. [35] identified seven common characteristics of effective professional learning for teachers in professional development programs: “(1) Is content focused; (2) Incorporates active learning utilizing adult learning theory; (3) Supports collaboration, typically in job-embedded contexts; (4) Uses models and modeling of effective practice; (5) Provides coaching and expert support; (6) Offers opportunities for feedback and reflection; (7) Is of sustained duration” [34]. Hammond and his team applied these conventional criteria for assessing CPD effectiveness to online CPD, using a mixed-methods approach, and found that six of the seven characteristics were fully substantiated. However, the sixth characteristic, offering opportunities for feedback and reflection, was not adequately confirmed: the reflection reported in the findings concerned teaching design and did not extend to implementation.

4.2. Factors Influencing Different CPD Delivery Modes

4.2.1. Factors Affecting Face-to-Face CPD Effectiveness

The first factor affecting the efficacy of the face-to-face CPD mode is the delivery cost, followed by the substance of the delivery.

In terms of delivery costs, traditional CPD entails comparatively high expenses. Teachers often need to coordinate with the leaders of their institutions and educational organizations and modify their original teaching schedules before they can participate in CPD training [38]. Moreover, traditional types of CPD often require participants to gather in designated areas and attend training sessions at specified times, which directly generates travel expenses [52]. Participants therefore have to invest both time and money to participate in face-to-face CPD.

In terms of content delivery, evaluations of traditional CPD include many negative comments: the training content is reported to be mostly theoretical, to revolve around standardized material, and to lack pertinence, being only weakly related to the profession of the trained teachers [38].

In summary, the factors affecting the effectiveness of face-to-face CPD are time costs, financial expenditure, the standardization and professional relevance of the training content, and the need for support from institutions and leadership.

4.2.2. Factors Affecting the Effectiveness of Online CPD

It is worth noting that the jury is still out regarding the practical impact of online CPD on teachers and students. Previous research showed that the factors that may affect the effectiveness of online CPD are not inherent to the delivery mode but are external to it.

There are many influencing factors; the first factor that cannot be ignored is a stable internet connection. Participation in an online CPD requires a computer with stable access to the Internet, but not all users can afford this; in developing countries in particular, this is still a major factor affecting the effectiveness of online CPD [21,52].

Ravitz et al. [35] revealed factors that may affect the effectiveness of online CPD, including the personal background of the teachers participating in the training, their different competencies and abilities, and the interaction between their attitudes and their working environment. However, the study conducted by Ravitz et al. has certain shortcomings. For example, it examined some socio-psychological variables but did not consider whether socio-demographic variables could affect the effectiveness of online CPD. The research conducted by Mary and Cha [50] during the COVID-19 pandemic made up for this shortcoming. Using quantitative research methods, they pointed out that self-efficacy is a factor that can affect the effectiveness of online CPD and compared the effects of gender and teaching experience on self-efficacy. The study also found that online CPD containing Universal Design for Learning (UDL) elements, especially in the webinar mode, positively impacts science teachers’ self-efficacy, thereby promoting positive teaching practices. Another factor that affects the effectiveness of online CPD is the establishment of a communication community. Because of the technical nature of online continuing education, instructors must communicate with participants via the Internet, which makes it challenging to create a community of real-time communication between instructors and participants. It also hinders communication among the participants themselves, which, in turn, affects their ability to exchange ideas and learn from one another. As a result, they have difficulty gaining, through interaction, the insights that enhance learning [46]. This matters because communication can effectively promote positive values and attitudes in teaching and learning.

Another factor, mentioned in only one of the 12 articles reviewed, is the role of trainers in online CPD. In an online CPD program, the trainers’ role differs from that in the face-to-face mode: trainers adjust the training method according to the type of participants involved, and these fine-grained adjustments are more likely to take the form of personal guidance.

4.3. Advantages of Different CPD Delivery Modes

Regarding the advantages of the two delivery modes, the main advantage of face-to-face CPD over online CPD is that it provides a communication community for participants. They can communicate face-to-face with trainers, so problems can be solved in real time. Participants can also discuss the training content with one another in real time and receive feedback through peer interaction, thereby enhancing their learning experience [49].

The various advantages of online CPD make it a common form of learning and career development. First, online CPD has no geographical restrictions, which means participants can easily access online CPD resources worldwide, thereby gaining a wider range of professional knowledge and experience. Second, it is cost-effective, reducing the cost of travel and printing materials. Third, flexible learning allows participants to arrange their professional learning time flexibly according to their working schedule. Based on these three points, participants can flexibly arrange the time and location of professional learning according to their schedules without commuting costs. In addition, online CPD participants can access specialized professional development training materials aligned with their respective research fields for personalized learning. This addresses the limitations associated with the broad, generalized content often found in traditional CPD training programs [53,54].

Although online CPD has also established an online communication community, the research indicates that community interactions in the online mode cannot be compared with the face-to-face communication community established in the traditional delivery mode. The traditional mode has more significant features in terms of community interaction.

5. Discussion

The main goal of this literature review was to provide a comprehensive analysis of the comparative effectiveness of different modes of delivering CPD for science teachers. Using the PRISMA method, the research team searched four databases and selected a total of 12 articles for analysis after conducting a quality assessment. This article employed two methods to analyze the effectiveness of face-to-face CPD and online CPD. The first is a quantitative descriptive analysis of the included articles by country and year of publication. The second is a qualitative analysis with a primary focus on three dimensions: assessing the effectiveness of diverse CPD delivery modes, examining the factors influencing the efficacy of different CPD delivery modes, and comparing the merits associated with different delivery approaches.

This article offers a relatively comprehensive analysis of the effectiveness of different CPD delivery modes for science teachers. The reviewed studies suggest that the face-to-face and online modes are equally effective for science teachers. However, the factors influencing effectiveness are not inherent to the delivery mode but depend on external variables. Factors influencing the effectiveness of face-to-face CPD include working schedules, financial expenditures, institutional and leadership support, and the standardization and limited professional relevance of the training content. Factors that affect the effectiveness of online CPD include a stable network infrastructure; participants’ psychological variables, such as attitudes, beliefs, and self-efficacy; the influence of the work environment; and the trainers involved in online CPD programs.

Subsequently, the research team compared the advantages and differences between the two delivery modes. The primary benefit of in-person CPD is its capacity to foster an interactive learning community among participants. This mode enables direct, face-to-face communication between participants and trainers, facilitating prompt problem-solving and engagement. Moreover, participants can engage in real-time communication and discussions to enhance their learning experience. This is difficult to achieve to the same degree via online modes. Online CPD can also build a real-time virtual network community in which participants communicate, but this kind of community often requires participants to have digital competence, and that requirement becomes a factor potentially affecting the effectiveness of online CPD. In other respects, the advantages of online CPD over the traditional mode are much clearer. For instance, participants can overcome geographical restrictions and flexibly choose training times according to their working schedules; they can also choose more specific courses based on their relevance to their subjects and research fields. Online CPD also offers cost-saving advantages over traditional delivery modes by eliminating expenses such as commuting costs.

This study integrates the research findings on two delivery modes, face-to-face CPD and online CPD, and systematically compares the impact of these two modes on science teachers. Additionally, the study synthesizes findings from the current research on factors influencing the effectiveness of different delivery modes. This research contributes to the general literature on teachers’ continuing professional development and has implications for future research in CPD-related areas.

Although this study makes some contributions to the field of CPD, we must acknowledge that it has some limitations. First, the articles included in this study were retrieved from only four databases: Web of Science (WoS), Scopus, ERIC, and ScienceDirect. Additionally, the scope of the study was relatively narrow, and the choice of keywords was not inclusive enough, as it only focused on empirical research on the effectiveness of different delivery modes for science teachers. The selection criteria were restricted to research articles and conference papers, resulting in only 12 articles meeting the review criteria after a systematic screening process. This may have led to the exclusion of potentially valuable information. Furthermore, our review was confined to papers written and published in English, potentially overlooking valuable articles published in other languages.

Despite its limitations, this study suggests two main directions for future research. The first is to examine the impact of social–psychological variables on the effectiveness of different CPD delivery modes. The second, arising from the comparative analysis of online CPD and face-to-face CPD, is to investigate the profound impact that building a communication community has on the effectiveness of CPD. This constitutes a promising area for further investigation.

6. Conclusions

This article systematically reviews research findings regarding the effectiveness of diverse delivery modes for CPD for science teachers. It offers a comprehensive analysis and discussion encompassing three pivotal aspects: an evaluation of the effectiveness of various CPD delivery modes, an examination of the factors influencing the effectiveness of different CPD delivery modes, and a comparative assessment of the advantages associated with various delivery modes. The review revealed that, when considering CPD delivery modes in isolation, the online and face-to-face modes have an equally effective impact on the professional development of science teachers. However, practical differences in their effectiveness are often attributable to external factors, including personal psychological variables and sociological factors. The sense of community created by the traditional CPD mode plays a crucial role, and it is challenging to replicate in online CPD. Additionally, digital competence may influence the effectiveness of online CPD.

7. Implications

External factors, such as personal psychological variables and sociological factors, largely influence the variations in the effectiveness of CPD delivery modes. Recognizing the significance of these factors in practice is essential to enhancing the effectiveness of various CPD modes. In addition, future research should focus on strategies to effectively enhance participants’ communication and engagement in online CPD communities. Exploring the potential relationships between psychosocial variables and the effectiveness of CPD modes, along with the impact of digital skills on online CPD effectiveness, is another avenue for further investigation. While this article primarily focuses on science teachers, future studies can expand the scope of this research to include a broader range of subjects, facilitating more comprehensive discussions.

Author Contributions

Conceptualization, Z.L.; methodology, Z.L., N.C.H. and H.A.J.; software, Z.L.; validation, N.C.H. and H.A.J.; formal analysis, N.C.H. and H.A.J.; investigation, N.C.H. and H.A.J.; data curation, Z.L.; writing—original draft preparation, Z.L.; writing—review and editing, Z.L.; supervision, N.C.H. and H.A.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Acknowledgments

I would like to express my sincere gratitude to Seyedali Ahrari for his valuable insights during the writing of this paper. His expertise and thoughtful suggestions have significantly contributed to the improvement of the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).