Impact of a Low-Stakes Assessments Model with Retake in General Chemistry: Connecting to Student Attitudes and Self-Concept

Abstract

1. Introduction

2. Theoretical Frameworks

3. Research Questions

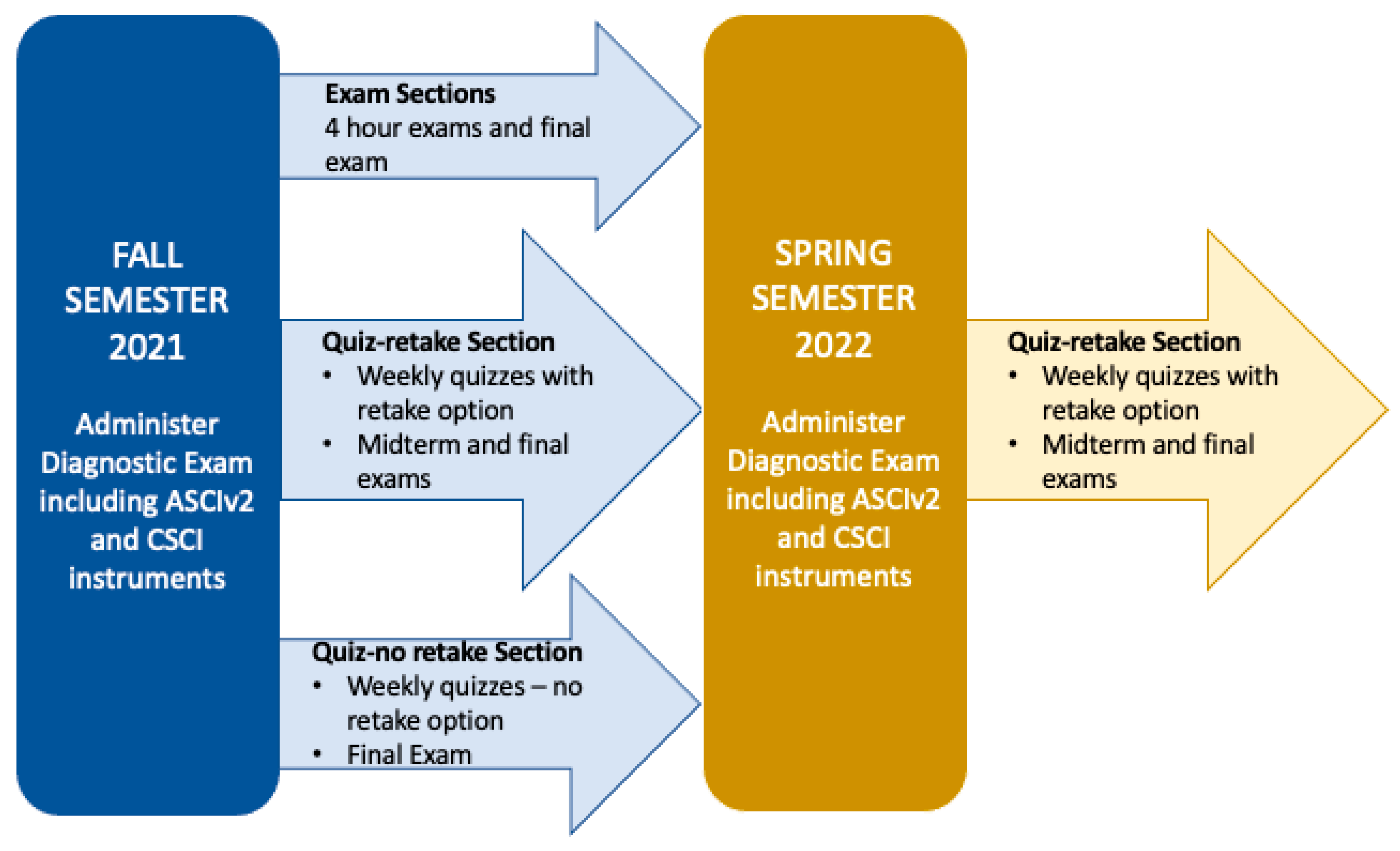

4. Student Demographics and Section Detail

5. Methodology

- Chemistry diagnostic score (M0);

- Initial ALEKS knowledge check (M00);

- ASCIv2 factor 1: Intellectual Accessibility (M1);

- ASCIv2 factor 2: Emotional Satisfaction (M2);

- CSCI factor 1: Mathematical Self-Concept (M3);

- CSCI factor 2: Chemistry Self-Concept (M4);

- CSCI factor 3: Academic Self-Concept (M5);

- CSCI factor 4: Academic Enjoyment Self-Concept (M6);

- CSCI factor 5: Creativity Self-Concept (M7).

6. Results and Discussion

6.1. Item Descriptive Statistics and Correlations

6.2. Group Comparisons

6.3. Comparisons across General Chemistry I/II

7. Limitations

8. Implications for Research and Practice

9. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Cantley, I.; McAllister, J. The Gender Similarities Hypothesis: Insights From A Multilevel Analysis of High-Stakes Examination Results in Mathematics. Sex Roles 2021, 85, 481–496. [Google Scholar] [CrossRef]

- Cotner, S.; Ballen, C.J. Can mixed assessment methods make biology classes more equitable? PLoS ONE 2017, 12, e0189610. [Google Scholar] [CrossRef]

- Montolio, D.; Taberner, P.A. Gender differences under test pressure and their impact on academic performance: A quasi-experimental design. J. Econ. Behav. Organ. 2021, 191, 1065–1090. [Google Scholar] [CrossRef]

- O’Reilly, T.; McNamara, D.S. The impact of science knowledge, reading skill, and reading strategy knowledge on more traditional “high-stakes” measures of high school students’ science achievement. Am. Educ. Res. J. 2007, 44, 161–196. [Google Scholar] [CrossRef]

- Salehi, S.; Cotner, S.; Azarin, S.M.; Carlson, E.E.; Driessen, M.; Ferry, V.E.; Harcombe, W.; McGaugh, S.; Wassenberg, D.; Yonas, A.; et al. Gender Performance Gaps Across Different Assessment Methods and the Underlying Mechanisms: The Case of Incoming Preparation and Test Anxiety. Front. Educ. 2019, 4, 107. [Google Scholar] [CrossRef]

- Leeming, F.C. The exam-a-day procedure improves performance in psychology classes. Teach. Psychol. 2002, 29, 210–212. [Google Scholar] [CrossRef]

- Pennebaker, J.W.; Gosling, S.D.; Ferrell, J.D. Daily Online Testing in Large Classes: Boosting College Performance while Reducing Achievement Gaps. PLoS ONE 2013, 8, e79774. [Google Scholar] [CrossRef]

- Orr, R.; Foster, S. Increasing student success using online quizzing in introductory (majors) biology. CBE Life Sci. Educ. 2013, 12, 509–514. [Google Scholar] [CrossRef]

- Walck-Shannon, E.M.; Cahill, M.J.; McDaniel, M.A.; Frey, R.F. Participation in Voluntary Re-quizzing Is Predictive of Increased Performance on Cumulative Assessments in Introductory Biology. CBE Life Sci. Educ. 2019, 18, ar15. [Google Scholar] [CrossRef]

- Sullivan, S.G.B.; Hoiriis, K.T.; Paolucci, L. Description of a change in teaching methods and comparison of quizzes versus midterms scores in a research methods course. J. Chiropr. Educ. 2018, 32, 84–89. [Google Scholar] [CrossRef]

- Casselman, B.L.; Atwood, C.H. Improving General Chemistry Course Performance through Online Homework-Based Metacognitive Training. J. Chem. Educ. 2017, 94, 1811–1821. [Google Scholar] [CrossRef]

- Crucho, C.I.C.; Avo, J.; Diniz, A.M.; Gomes, M.J.S. Challenges in Teaching Organic Chemistry Remotely. J. Chem. Educ. 2020, 97, 3211–3216. [Google Scholar] [CrossRef]

- Dempster, F.N. Distributing and managing the conditions of encoding and practice. In Human Memory; Bjork, E.L., Bjork, R.A., Eds.; Academic Press: Cambridge, MA, USA, 1996; pp. 197–236. [Google Scholar]

- Dempster, F.N. Using tests to promote classroom learning. In Handbook on Testing; Dillon, R.F., Ed.; Greenwood Press: Westport, CT, USA, 1997; pp. 332–346. [Google Scholar]

- Roediger, H.L.; Karpicke, J.D. The Power of Testing Memory Basic Research and Implications for Educational Practice. Perspect. Psychol. Sci. 2006, 1, 181–210. [Google Scholar] [CrossRef] [PubMed]

- Bjork, R.A.; Bjork, E.L. A new theory of disuse and an old theory of stimulus fluctuation. In From Learning Processes to Cognitive Processes: Essays in Honor of William K. Estes; Healy, A., Kosslyn, S., Shiffrin, R., Eds.; Erlbaum: Mahwah, NJ, USA, 1992; Volume 2, pp. 35–67. [Google Scholar]

- Bjork, R.A. Retrieval practice and the maintenance of knowledge. In Practical Aspects of Memory: Current Research and Issues; Gruneberg, M.M., Morris, P.E., Sykes, R.N., Eds.; Wiley: Hoboken, NJ, USA, 1988; Volume 1, pp. 396–401. [Google Scholar]

- Dweck, C.S. Mindset: The New Psychology of Success; Random House: New York, NY, USA, 2016. [Google Scholar]

- Murphy, S.; MacDonald, A.; Wang, C.A.; Danaia, L. Towards an Understanding of STEM Engagement: A Review of the Literature on Motivation and Academic Emotions. Can. J. Sci. Math. Technol. Educ. 2019, 19, 304–320. [Google Scholar] [CrossRef]

- Richmond, J.M.; Baumgart, N. A Hierarchical Analysis of Environmental Attitudes. J. Environ. Educ. 1981, 13, 31–37. [Google Scholar] [CrossRef]

- Haladyna, T.; Olsen, R.; Shaughnessy, J. Correlates of Class Attitude toward Science. J. Res. Sci. Teach. 1983, 20, 311–324. [Google Scholar] [CrossRef]

- Shrigley, R.L. Test of Science-Related Attitudes; Wiley: Hoboken, NJ, USA, 1983; Volume 20, pp. 87–89. [Google Scholar] [CrossRef]

- Schibeci, R.A. Students, Teachers, and the Assessment of Attitudes to School. Aust. J. Educ. 1984, 28, 17–24. [Google Scholar] [CrossRef]

- Shrigley, R.L.; Koballa, T.R. Attitude Measurement—Judging the Emotional Intensity of Likert-Type Science Attitude Statements. J. Res. Sci. Teach. 1984, 21, 111–118. [Google Scholar] [CrossRef]

- Schibeci, R.A.; Riley, J.P. Influence of Students Background and Perceptions on Science Attitudes and Achievement. J. Res. Sci. Teach. 1986, 23, 177–187. [Google Scholar] [CrossRef]

- Shrigley, R.L.; Koballa, T.R.; Simpson, R.D. Defining Attitude for Science Educators. J. Res. Sci. Teach. 1988, 25, 659–678. [Google Scholar] [CrossRef]

- Schibeci, R.A. Home, School, and Peer Group Influences on Student-Attitudes and Achievement in Science. Sci. Educ. 1989, 73, 13–24. [Google Scholar] [CrossRef]

- Shrigley, R.L.; Koballa, T.R. A Decade of Attitude Research Based on Hovland Learning-Theory Model. Sci. Educ. 1992, 76, 17–42. [Google Scholar] [CrossRef]

- Osborne, J.; Simon, S.; Collins, S. Attitudes towards science: A review of the literature and its implications. Int. J. Sci. Educ. 2003, 25, 1049–1079. [Google Scholar] [CrossRef]

- Flaherty, A.A. A review of affective chemistry education research and its implications for future research. Chem. Educ. Res. Pract. 2020, 21, 698–713. [Google Scholar] [CrossRef]

- Mager, R.F. Developing Attitude toward Learning; Fearon Publishers: Belmont, CA, USA, 1968. [Google Scholar]

- Ajzen, I.; Madden, T.J. Prediction of Goal-Directed Behavior—Attitudes, Intentions, and Perceived Behavioral-Control. J. Exp. Soc. Psychol. 1986, 22, 453–474. [Google Scholar] [CrossRef]

- Schunk, D.H. Self-Efficacy and Academic Motivation. Educ. Psychol. 1991, 26, 207–231. [Google Scholar] [CrossRef]

- Schunk, D.H. Self-Efficacy, Motivation, and Performance. J. Appl. Sport Psychol. 1995, 7, 112–137. [Google Scholar] [CrossRef]

- Gable, R.K. The Measurement of Attitudes toward People with Disabilities—Methods, Psychometrics and Scales. Contemp. Psychol. 1990, 35, 81. [Google Scholar] [CrossRef]

- Krumrei-Mancuso, E.J.; Newton, F.B.; Kim, E.; Wilcox, D. Psychosocial Factors Predicting First-Year College Student Success. J. Coll. Stud. Dev. 2013, 54, 247–266. [Google Scholar] [CrossRef]

- Beane, J.A.; Lipka, R.P.; Ludewig, J.W. Synthesis of Research on Self-Concept. Educ. Leadersh. 1980, 38, 84–89. [Google Scholar]

- Beane, J.A.; Lipka, R.P. Self-Concept and Self-Esteem—A Construct Differentiation. Child Study J. 1980, 10, 1–6. [Google Scholar]

- Marsh, H.W.; Walker, R.; Debus, R. Subject-Specific Components of Academic Self-Concept and Self-Efficacy. Contemp. Educ. Psychol. 1991, 16, 331–345. [Google Scholar] [CrossRef]

- Hassan, A.M.A.; Shrigley, R.L. Designing a Likert scale to measure chemistry attitudes. Sch. Sci. Math. 1984, 84, 659–669. [Google Scholar] [CrossRef]

- Bauer, C.F. Beyond “student attitudes”: Chemistry self-concept inventory for assessment of the affective component of student learning. J. Chem. Educ. 2005, 82, 1864–1870. [Google Scholar] [CrossRef]

- Nielsen, S.E.; Yezierski, E. Exploring the Structure and Function of the Chemistry Self-Concept Inventory with High School Chemistry Students. J. Chem. Educ. 2015, 92, 1782–1789. [Google Scholar] [CrossRef]

- Nielsen, S.E.; Yezierski, E.J. Beyond academic tracking: Using cluster analysis and self-organizing maps to investigate secondary students’ chemistry self-concept. Chem. Educ. Res. Pract. 2016, 17, 711–722. [Google Scholar] [CrossRef]

- Temel, S.; Sen, S.; Yilmaz, A. Validity and Reliability Analyses for Chemistry Self-Concept Inventory. J. Balt. Sci. Educ. 2015, 14, 599–606. [Google Scholar] [CrossRef]

- Werner, S.M.; Chen, Y.; Stieff, M. Examining the Psychometric Properties of the Chemistry Self-Concept Inventory Using Rasch Modeling. J. Chem. Educ. 2021, 98, 3412–3420. [Google Scholar] [CrossRef]

- Grove, N.; Bretz, S.L. CHEMX: An instrument to assess students’ cognitive expectations for learning chemistry. J. Chem. Educ. 2007, 84, 1524–1529. [Google Scholar] [CrossRef]

- Dalgety, J.; Coll, R.K.; Jones, A. Development of Chemistry Attitudes and Experiences Questionnaire (CAEQ). J. Res. Sci. Teach. 2003, 40, 649–668. [Google Scholar] [CrossRef]

- Lewis, S.E.; Shaw, J.L.; Heitz, J.O.; Webster, G.H. Attitude Counts: Self-Concept and Success in General Chemistry. J. Chem. Educ. 2009, 86, 744–749. [Google Scholar] [CrossRef]

- Bauer, C.F. Attitude towards chemistry: A semantic differential instrument for assessing curriculum impacts. J. Chem. Educ. 2008, 85, 1440–1445. [Google Scholar] [CrossRef]

- Xu, X.Y.; Lewis, J.E. Refinement of a Chemistry Attitude Measure for College Students. J. Chem. Educ. 2011, 88, 561–568. [Google Scholar] [CrossRef]

- Brandriet, A.R.; Xu, X.Y.; Bretz, S.L.; Lewis, J.E. Diagnosing changes in attitude in first-year college chemistry students with a shortened version of Bauer’s semantic differential. Chem. Educ. Res. Pract. 2011, 12, 271–278. [Google Scholar] [CrossRef]

- Brandriet, A.R.; Ward, R.M.; Bretz, S.L. Modeling meaningful learning in chemistry using structural equation modeling. Chem. Educ. Res. Pract. 2013, 14, 421–430. [Google Scholar] [CrossRef]

- Montes, L.H.; Ferreira, R.A.; Rodriguez, C. Explaining secondary school students’ attitudes towards chemistry in Chile. Chem. Educ. Res. Pract. 2018, 19, 533–542. [Google Scholar] [CrossRef]

- Kahveci, A. Assessing high school students’ attitudes toward chemistry with a shortened semantic differential. Chem. Educ. Res. Pract. 2015, 16, 283–292. [Google Scholar] [CrossRef]

- Chadwick, S.; Baker, A.; Alexander, J. Implementing ASCIv2 to measure students’ attitudes towards chemistry in large enrolment chemistry subjects at an Australian university. Abstr. Pap. Am. Chem. Soc. 2015, 249, 1155. [Google Scholar]

- Cha, J.; Kan, S.Y.; Wahab, N.H.A.; Aziz, A.N.; Chia, P.W. Incorporation of Brainteaser Game in Basic Organic Chemistry Course to Enhance Students’ Attitude and Academic Achievement. J. Korean Chem. Soc. 2017, 61, 218–222. [Google Scholar] [CrossRef]

- An, J.; Loppnow, G.R.; Holme, T.A. Measuring the impact of incorporating systems thinking into general chemistry on affective components of student learning. Can. J. Chem. 2021, 99, 698–705. [Google Scholar] [CrossRef]

- Morshead, R.W.; Krathwohl, D.R.; Bloom, B.S.; Masia, B.B. Taxonomy of Educational-Objectives Handbook Ii—Affective Domain. Stud. Philos. Educ. 1965, 4, 164–170. [Google Scholar] [CrossRef]

- Haertel, G.D.; Walberg, H.J.; Weinstein, T. Psychological Models of Educational Performance—A Theoretical Synthesis of Constructs. Rev. Educ. Res. 1983, 53, 75–91. [Google Scholar] [CrossRef]

- Gable, R.K.; Ludlow, L.H.; Wolf, M.B. The Use of Classical and Rasch Latent Trait Models to Enhance the Validity of Affective Measures. Educ. Psychol. Meas. 1990, 50, 869–878. [Google Scholar] [CrossRef]

- Anderson, M.C.; Bjork, R.A.; Bjork, E.L. Remembering Can Cause Forgetting—Retrieval Dynamics in Long-Term-Memory. J. Exp. Psychol. Learn. 1994, 20, 1063–1087. [Google Scholar] [CrossRef] [PubMed]

- Bjork, E.L.; Little, J.L.; Storm, B.C. Multiple-choice testing as a desirable difficulty in the classroom. J. Appl. Res. Mem. Cogn. 2014, 3, 165–170. [Google Scholar] [CrossRef]

- IBM. IBM SPSS Statistics; IBM: Armonk, NY, USA, 2021. [Google Scholar]

- McGraw Hill. ALEKS Corporation; McGraw Hill: New York, NY, USA, 2021; Available online: https://www.aleks.com/ (accessed on 1 January 2023).

- Amos Development Corporation. IBM SPSS Amos; Amos Development Corporation: McLean, VA, USA, 2022. [Google Scholar]

- Cronbach, L.J. My current thoughts on coefficient alpha and successor procedures. Educ. Psychol. Meas. 2004, 64, 391–418. [Google Scholar] [CrossRef]

- Williams, S. Pearson’s correlation coefficient. N. Z. Med. J. 1996, 109, 38. [Google Scholar]

- Kaltenbach, H.-M. A Concise Guide to Statistics; Springer: Berlin/Heidelberg, Germany, 2012. [Google Scholar]

- Chan, J.Y.K.; Bauer, C.F. Identifying At-Risk Students in General Chemistry via Cluster Analysis of Affective Characteristics. J. Chem. Educ. 2014, 91, 1417–1425. [Google Scholar] [CrossRef]

- Pazicni, S. Mitigating Students’ Illusions of Competence with Self-Assessment. In Proceedings of the 247th ACS National Meeting, Dallas, TX, USA, 16–20 March 2014. Paper 1529. [Google Scholar]

- Smith, A.L.; Paddock, J.R.; Vaughan, J.M.; Parkin, D.W. Promoting Nursing Students’ Chemistry Success in a Collegiate Active Learning Environment: “If I Have Hope, I Will Try Harder”. J. Chem. Educ. 2018, 95, 1929–1938. [Google Scholar] [CrossRef]

- Rocabado, G.A.; Komperda, R.; Lewis, J.E.; Barbera, J. Addressing diversity and inclusion through group comparisons: A primer on measurement invariance testing. Chem. Educ. Res. Pract. 2020, 21, 969–988. [Google Scholar] [CrossRef]

- Canning, E.A.; Ozier, E.; Williams, H.E.; AlRasheed, R.; Murphy, M.C. Professors Who Signal a Fixed Mindset About Ability Undermine Women’s Performance in STEM. Soc. Psychol. Personal. Sci. 2022, 13, 927–937. [Google Scholar] [CrossRef]

| Subscale | Bauer [41] | This Study, All Sections | This Study, Quiz-retake Section |
|---|---|---|---|
| Mathematical Self-Concept | 0.90 | 0.91 | 0.92 |
| Chemistry Self-Concept | 0.91 | 0.88 | 0.88 |
| Academic Self-Concept | 0.77 | 0.67 | 0.67 |
| Academic Enjoyment Self-Concept | 0.77 | 0.81 | 0.81 |
| Creativity Self-Concept | 0.62 | 0.65 | 0.71 |
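
The table above has no surviving caption. If its entries are Cronbach's alpha reliability coefficients for the CSCI subscales (an assumption, suggested by the Cronbach citation in the reference list), the statistic could be computed along the following lines. This is a minimal sketch: the function name `cronbach_alpha` and the example scores are hypothetical and are not study data.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of respondents, each a list of k item scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total score))
    """
    k = len(item_scores[0])
    # Transpose so each tuple holds one item's scores across all respondents.
    items = list(zip(*item_scores))
    sum_item_variances = sum(variance(column) for column in items)
    total_variance = variance([sum(row) for row in item_scores])
    return k / (k - 1) * (1 - sum_item_variances / total_variance)


# Made-up Likert-style responses (4 respondents, 2 items), for illustration only.
example = [[1, 2], [2, 1], [3, 3], [4, 5]]
print(round(cronbach_alpha(example), 2))
```

The sketch uses sample variances (n − 1 denominator) throughout; alpha is unaffected by that choice as long as the same denominator is used for the item and total-score variances.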

| Item | Values | N | Mean (SD) | Median | Skewness (SE) | Kurtosis (SE) |
|---|---|---|---|---|---|---|
| M0 | 0–10 | 522 | 6.60 (1.92) | 7.00 | −0.27 (0.11) | −0.28 (0.21) |
| M00 * | 0–10 | 167 | 2.90 (0.95) | 2.77 | 0.78 (0.19) | 1.45 (0.37) |
| M1 | 1–5 | 518 | 2.48 (0.62) | 2.50 | −0.07 (0.11) | −0.03 (0.21) |
| M2 | 1–5 | 518 | 3.33 (0.65) | 3.25 | −0.18 (0.11) | −0.08 (0.21) |
| M3 | 1–5 | 509 | 3.73 (0.78) | 3.82 | −0.64 (0.11) | 0.11 (0.22) |
| M4 | 1–5 | 509 | 3.20 (0.71) | 3.20 | −0.28 (0.11) | 0.03 (0.22) |
| M5 | 1–5 | 509 | 3.83 (0.44) | 3.83 | −0.21 (0.11) | −0.02 (0.22) |
| M6 | 1–5 | 509 | 4.14 (0.59) | 4.14 | −0.96 (0.11) | 1.89 (0.22) |
| M7 | 1–5 | 509 | 3.35 (0.78) | 3.25 | −0.05 (0.11) | −0.55 (0.22) |
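
The standard errors of skewness and kurtosis in the table above depend only on the sample size N. The sketch below assumes the conventional large-sample formulas used by common statistics packages; the source does not state how its SEs were obtained, and the function names are illustrative.

```python
import math


def se_skewness(n):
    """Standard error of sample skewness for a sample of size n."""
    return math.sqrt(6.0 * n * (n - 1) / ((n - 2) * (n + 1) * (n + 3)))


def se_kurtosis(n):
    """Standard error of sample excess kurtosis for a sample of size n."""
    return 2.0 * se_skewness(n) * math.sqrt((n * n - 1) / ((n - 3) * (n + 5)))


# Sample sizes taken from the N column of the table above.
for n in (522, 167, 518, 509):
    print(n, round(se_skewness(n), 2), round(se_kurtosis(n), 2))
```

Under these formulas, the rounded values agree with the tabulated SEs for each N (for example, 0.19 and 0.37 for N = 167).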

| Item | Group | n | Mean (SD) | t Statistic | p Value (Two-Tailed) | Cohen’s d |
|---|---|---|---|---|---|---|
| M0 | Q1nr | 27 | 6.04 (1.56) | 1.83 | 0.07 (n.s.) | … |
| | Q1r | 36 | 5.17 (2.08) | | | |
| M00 | Q1nr | 27 | 2.76 (0.78) | 1.49 | 0.14 (n.s.) | … |
| | Q1r | 36 | 2.46 (0.78) | | | |
| M1 | Q1nr | 26 | 2.31 (0.54) | −0.30 | 0.77 (n.s.) | … |
| | Q1r | 36 | 2.36 (0.67) | | | |
| M2 | Q1nr | 26 | 3.31 (0.62) | 0.07 | 0.94 (n.s.) | … |
| | Q1r | 36 | 3.30 (0.73) | | | |
| M3 | Q1nr | 25 | 3.73 (0.73) | 1.52 | 0.13 (n.s.) | … |
| | Q1r | 36 | 3.41 (0.84) | | | |
| M4 | Q1nr | 25 | 2.95 (0.78) | −0.52 | 0.60 (n.s.) | … |
| | Q1r | 36 | 3.05 (0.68) | | | |
| M5 | Q1nr | 25 | 3.50 (0.55) | −0.95 | 0.34 (n.s.) | … |
| | Q1r | 36 | 3.65 (0.61) | | | |
| M6 | Q1nr | 25 | 3.88 (0.59) | −1.12 | 0.27 (n.s.) | … |
| | Q1r | 36 | 4.08 (0.78) | | | |
| M7 | Q1nr | 25 | 3.21 (1.00) | −2.59 | 0.02 (n.s.) | … |
| | Q1r | 36 | 3.79 (0.74) | | | |

| Item | Group | n | Mean (SD) | t Statistic | p Value (Two-Tailed) | Cohen’s d |
|---|---|---|---|---|---|---|
| M0 | Q1nr | 42 | 4.57 (1.75) | −1.07 | 0.29 (n.s.) | … |
| | Q1r | 39 | 5.00 (1.85) | | | |
| M00 | Q1nr | 42 | 0.46 (0.18) | −0.54 | 0.59 (n.s.) | … |
| | Q1r | 39 | 0.48 (0.19) | | | |
| M1 | Q1nr | 42 | 2.43 (0.59) | 0.95 | 0.34 (n.s.) | … |
| | Q1r | 36 | 2.29 (0.67) | | | |
| M2 | Q1nr | 42 | 3.19 (0.74) | −0.68 | 0.50 (n.s.) | … |
| | Q1r | 36 | 3.30 (0.59) | | | |
| M3 | Q1nr | 41 | 3.45 (0.72) | 0.61 | 0.55 (n.s.) | … |
| | Q1r | 36 | 3.35 (0.63) | | | |
| M4 | Q1nr | 41 | 3.04 (0.55) | −1.43 | 0.16 (n.s.) | … |
| | Q1r | 36 | 3.24 (0.67) | | | |
| M5 | Q1nr | 41 | 3.52 (0.59) | −2.01 | 0.048 (n.s.) | … |
| | Q1r | 36 | 3.76 (0.44) | | | |
| M6 | Q1nr | 41 | 3.48 (0.54) | −3.63 | <0.001 | 0.83 |
| | Q1r | 36 | 3.88 (0.41) | | | |
| M7 | Q1nr | 41 | 3.27 (0.65) | −0.80 | 0.42 (n.s.) | … |
| | Q1r | 36 | 3.40 (0.75) | | | |
| Final | Q1nr | 42 | 59.4 (18.4) | −2.01 | 0.048 (n.s.) | … |
| | Q1r | 39 | 66.7 (14.1) | | | |
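
The t statistics and Cohen's d values in the group-comparison tables can be approximated from the reported group sizes, means and standard deviations alone. The sketch below assumes an equal-variance (pooled) independent-samples t-test and a pooled-SD Cohen's d; the source does not say which variant was used, and the function names are illustrative.

```python
import math


def pooled_sd(n1, sd1, n2, sd2):
    """Pooled standard deviation of two independent groups."""
    return math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))


def independent_t_and_d(n1, mean1, sd1, n2, mean2, sd2):
    """Return (t, d): the pooled-variance t statistic and |Cohen's d|."""
    sp = pooled_sd(n1, sd1, n2, sd2)
    diff = mean1 - mean2
    t = diff / (sp * math.sqrt(1.0 / n1 + 1.0 / n2))
    d = abs(diff) / sp
    return t, d


# M6 row of the table above: Q1nr (n = 41) versus Q1r (n = 36).
t, d = independent_t_and_d(41, 3.48, 0.54, 36, 3.88, 0.41)
print(round(t, 2), round(d, 2))
```

Because the inputs are the rounded table entries, the recomputed t differs slightly from the reported −3.63, while d matches the reported 0.83 to two decimals.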

| GC I Group * | n | GC II Group | n | GC II Group % |
|---|---|---|---|---|
| Q1r | 17 | Q1r | 6 | 35% |
| | | Q1nr | 4 | 24% |
| | | Q0 | 7 | 41% |
| Q1nr | 15 | Q1r | 5 | 33% |
| | | Q1nr | 8 | 53% |
| | | Q0 | 2 | 13% |
| Q0 | 69 | Q1r | 9 | 13% |
| | | Q1nr | 12 | 17% |
| | | Q0 | 48 | 70% |
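
The percentage column in the table above is each GC II count divided by the size of the corresponding GC I group. A short sketch of that arithmetic, using the counts from the table (the dictionary layout and function name are illustrative):

```python
# Counts of students moving from each GC I group to each GC II group.
transitions = {
    "Q1r": {"Q1r": 6, "Q1nr": 4, "Q0": 7},
    "Q1nr": {"Q1r": 5, "Q1nr": 8, "Q0": 2},
    "Q0": {"Q1r": 9, "Q1nr": 12, "Q0": 48},
}


def row_percentages(counts):
    """Return the row total and each count as a whole-number percentage of it."""
    total = sum(counts.values())
    return total, {group: round(100 * c / total) for group, c in counts.items()}


for gc1_group, counts in transitions.items():
    total, percentages = row_percentages(counts)
    print(gc1_group, total, percentages)
```

The row totals (17, 15 and 69) and the rounded percentages agree with the tabulated values.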

| Item | Comparison Group | n | Mean Difference (SD) | t Statistic | p Value (Two-Tailed) | Cohen’s d |
|---|---|---|---|---|---|---|
| M1 | GC II − GC I | 94 | 0.22 (0.59) | 3.54 | <0.001 | 0.37 |
| M2 | GC II − GC I | 94 | 0.12 (0.65) | 1.81 | 0.07 (n.s.) | … |
| M3 | GC II − GC I | 93 | −0.12 (0.47) | −2.47 | 0.015 (n.s.) | … |
| M4 | GC II − GC I | 93 | 0.20 (0.58) | 3.36 | 0.001 | 0.35 |
| M5 | GC II − GC I | 93 | −0.05 (0.44) | −1.18 | 0.24 (n.s.) | … |
| M6 | GC II − GC I | 93 | −0.49 (0.49) | −9.67 | <0.001 | 1.00 |
| M7 | GC II − GC I | 93 | −0.09 (0.64) | −1.32 | 0.19 (n.s.) | … |
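
For the paired GC II versus GC I comparison above, the reported effect sizes are consistent with Cohen's d computed as the mean difference divided by the standard deviation of the differences. The sketch below assumes that definition and a standard paired t statistic; the source does not spell out either formula, and the function name is illustrative.

```python
import math


def paired_t_and_d(n, mean_diff, sd_diff):
    """Return (t, d) for a paired comparison from summary statistics.

    t = mean_diff / (sd_diff / sqrt(n));  d = |mean_diff| / sd_diff
    """
    t = mean_diff / (sd_diff / math.sqrt(n))
    d = abs(mean_diff) / sd_diff
    return t, d


# Rows M1 and M6 of the table above.
for item, n, mean_diff, sd_diff in (("M1", 94, 0.22, 0.59), ("M6", 93, -0.49, 0.49)):
    t, d = paired_t_and_d(n, mean_diff, sd_diff)
    print(item, round(t, 2), round(d, 2))
```

With the rounded table entries as inputs, d reproduces the reported 0.37 (M1) and 1.00 (M6); the recomputed t values are close to, but not identical with, the reported ones, as expected when working from rounded means and SDs.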

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Vyas, V.S.; Nobile, L.; Gardinier, J.R.; Reid, S.A. Impact of a Low-Stakes Assessments Model with Retake in General Chemistry: Connecting to Student Attitudes and Self-Concept. Educ. Sci. 2023, 13, 1235. https://doi.org/10.3390/educsci13121235
