2. Materials, Methods, and Results

The experimental method consisted of two phases. First, teachers and researchers collaborated to create a suitable and potent nudge intervention the teachers could use in their teaching practice (the design phase). This resulted in three nudges. Second, the nudges were tested in a quasi-experimental setting among students from the teachers’ classroom(s) (e.g., the nudge developed by Teacher A was tested in their classroom) (the experimental phase). For all three nudges, a separate experiment was planned. We describe the general method of all three studies, and then describe the specifics of the studies, as well as the results.

2.1. First Step: Design

Nudges have proven effective in changing different kinds of behavior in various situations, but the specific context has a large impact on a nudge’s effectiveness [55]. Therefore, to create an effective nudge, it is important to tailor it to the specific context and target group. To achieve this, the concept of design patterns [56] and stakeholder involvement in intervention design (e.g., [57]) were applied to the nudge design procedure. This was combined with the framework for nudge design by Hansen [55] and the theoretical framework EAST [58] to design nudges that were potentially the most effective in changing the target behavior. All nudges were designed to be applicable in an online or hybrid classroom due to possible COVID-19 regulations, but the research was ultimately carried out in physical classrooms.

Design procedure. We recruited 30 teachers in vocational education and training (VET) and in higher vocational education (HVE) who expressed interest in using nudges to promote autonomous learning behavior. They were split equally, resulting in three parallel design groups of ten teachers, one for each target behavior (planning, preparing for class, and asking questions). Each design group held three design sessions, each set up by two researchers: the first author and a researcher familiar with the specific school. In the first session, teachers were introduced to nudging and received examples of nudges being used in practice. Afterwards, they participated in a brainstorming session. First, all teachers collectively listed factors they felt prevented students from performing the target behavior. The teachers were then distributed among four tables, corresponding with the four themes of EAST: Easy, Attractive, Social, and Timely [58], where they used brainstorm playing cards [59] (for an example, see Figure 2) to generate ideas. Teachers grouped the ideas on their table and presented the best ideas, creating a group discussion.

After the first session, the involved researchers collaborated to make a selection based on the teachers’ input and scientific insights, and worked out specific nudge proposals for each design group. These proposals and the theoretical justification for the final nudge design can be found in the additional materials. In the second design group session, the two researchers of the design group then presented these options. The teachers decided which option they preferred and proposed changes that made them fit better in their environment. These nudges were piloted for practical applicability by individual teachers by implementing them in their classes for a short period. In the third design group session, these teachers reported back to the design group, and the nudges were refined based on their feedback. This resulted in the final nudge that was subsequently experimentally tested in the teachers’ next semester. A visual overview of the process can be found in Figure 3.

2.2. Second Step: Experimental Procedure

Participating teachers submitted a list of their available and suitable classes to the researcher. These were randomly assigned to either control or intervention nudge classes, aiming to keep the group sizes similar, and to balance education and education level as well as possible between conditions. Due to the nature of co-creation, it was not possible to blind teachers to the experiment, nor randomize the nudge they would be implementing. We took several precautions to avoid contamination. Teachers were specifically instructed during design and execution of the experiment that they should not change the way they taught their classes other than implementing the nudge. This, as well as whether the teachers were able to implement the nudge successfully in the nudge classes, was verified after the experiment by a researcher interviewing all participating teachers. Specific student demographics were not collected.
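
The assignment step described above can be illustrated with a minimal sketch. The text does not specify the exact randomization procedure, so the within-teacher shuffling below is an assumption, and the function name and class labels are hypothetical. Randomizing within each teacher is one simple way to keep group sizes similar and to balance course and education level across conditions.

```python
import random

def assign_classes(classes_by_teacher, seed=None):
    """Randomly split each teacher's classes between 'control' and 'nudge'.

    Assumption: randomizing within teacher keeps the conditions balanced on
    teacher, and thereby roughly on course and education level.
    """
    rng = random.Random(seed)
    assignment = {}
    for teacher, classes in classes_by_teacher.items():
        shuffled = list(classes)
        rng.shuffle(shuffled)
        # Randomly pick which condition receives the extra class when a
        # teacher has an odd number of classes.
        first, second = rng.sample(["control", "nudge"], 2)
        for i, class_id in enumerate(shuffled):
            assignment[class_id] = first if i % 2 == 0 else second
    return assignment

# Hypothetical class lists, not the study's data.
example = {"teacher_A": ["A1", "A2"], "teacher_B": ["B1", "B2", "B3"]}
result = assign_classes(example, seed=1)
```

With this scheme, a teacher with two parallel classes always contributes one class to each condition, and the condition sizes per teacher never differ by more than one class.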

Students were informed about the experiment by their teacher. As opting out of the intervention was difficult for the class-wide interventions, all students were reminded of the possibility to opt out of data collection for the experiment. Informed consent was obtained during the first lesson of the semester in which the experiment took place. The semester lasted between 6 and 10 weeks, depending on the school. For all participants, final course grades were collected and specific behavioral data were gathered for each experiment (for details, see the Methods of each experiment). Grades in the Netherlands range from 1 (very bad) to 10 (excellent), with 5.5 being a barely passing grade. To contextualize the findings, complementary interviews were held with all teachers to gain insight into their perceptions and the effects of the nudges. The interview structure can be found in the additional materials.

2.2.1. Analysis

Due to the extraordinary circumstances of the COVID-19 pandemic, which impacted the participating teachers’ and students’ personal and professional lives, participation in the experiment and data collection across the project proved difficult and at times impossible (see Appendix A). This means that, for all studies, the final sample size was smaller than expected.

The main hypotheses were investigated using a mixed-effects model approach with condition (control vs. nudge) as the independent variable. Depending on the hypothesis, the behavioral outcome or the final grades were taken as the dependent variable. The variable teacher was taken as a fixed intercept, and the variable students, nested in the variable class, as a random intercept. To optimize the random structure, the suggestions of Barr et al. [60] were used as a guideline. The complete models are described in the preregistration on the Open Science Framework (see below). The reported models were run as preregistered.

2.2.2. Transparency and Openness

All participants in all experiments were asked for informed consent ahead of the experiment. This study obtained ethical approval from the Ethical Review Committee of the Department of Psychology, Education, and Child Studies; Erasmus University Rotterdam (application 19-052).

Data were analyzed using the statistical program R, version 3.6.3 [61], using the lme4 package [62]. Using a mixed-effects model approach, the cell means were compared to the grand mean to determine estimates of the effect. To determine p-values, the parametric bootstrap function of the package afex [63] was used. This study’s design and its analysis were preregistered. All data, analysis code, research materials, and preregistered analyses are available at the Open Science Framework (https://osf.io/ye9av/?view_only=03fef7e33df04058983ad331b214b5a7, accessed on 23 December 2022).
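
To make concrete what comparing cell means to the grand mean implies for reading the estimates, the sketch below assumes that this corresponds to sum-to-zero (deviation) coding of the condition variable. It deliberately ignores the random effects and the teacher intercept, so it is a simplified illustration and not the preregistered model. The function name and the condition means are hypothetical.

```python
def deviation_estimates(cell_means):
    """Deviation of each condition mean from the unweighted grand mean."""
    grand_mean = sum(cell_means.values()) / len(cell_means)
    return {cond: m - grand_mean for cond, m in cell_means.items()}

# Hypothetical condition means, not taken from the study.
estimates = deviation_estimates({"control": 4.0, "nudge": 5.0})
# With two conditions, each estimate is half the difference between the means,
# with opposite signs for the two conditions.
```

Under this assumption, an estimate for a two-level factor reflects half the distance between the two condition means, and its sign depends on which condition is coded first.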

2.2.3. Specific Setup

After the design phase, the three design groups carried out an experiment with their nudge for their target behavior. During the second design session for the group nudging planning behavior, the group split over two nudge options: a video booth and reminders (described below). The teachers divided themselves into two groups of equal size based on their interest. These groups each designed a nudge separately from each other, resulting in two nudges and two experiments aimed at planning: a video booth nudge and a reminder nudge. A strong ceiling effect (i.e., nearly all participating students met the deadline that was targeted by the reminder nudge) prevented us from drawing any meaningful conclusions about the effects of the reminder nudge. For brevity, the setup and results of this experiment are therefore reported in Appendix B. The setup and results of the remaining three nudges are discussed below.

2.3. Experiment 1: Planning-Video Booth

In this experiment, we aimed to improve individual students’ meeting of deadlines by creating a video booth intervention. The hypotheses for this experiment were:

Hypothesis 1a. Students who received the video booth nudge would report more planning behavior than students who did not receive the video booth nudge.

Hypothesis 1b. Students who received the video booth nudge would miss fewer deadlines than students who did not receive the video booth nudge.

Hypothesis 1c. Students who received the video booth nudge would obtain higher course grades than students who did not receive the video booth nudge.

Participants. In total, 98 first- and 32 third-year VET-students (total 130 students) participated in this experiment. Due to the nature of the intervention, randomization was possible on an individual level instead of per class. Sixty-five students participated in the nudge condition, and sixty-five students participated in the control condition. All participants were VET-students in the sector marketing and events. Individual demographics of the students were not collected, but the students’ age range in this educational track is 18 to 21 years.

Design. Students were individually asked to take part in the experiment, in which they answered questions on cue cards while filming themselves in an empty classroom, the “video booth”. In the nudge condition, the cue cards were designed to self-persuade students of the importance of planning, identify obstacles for planning, and design an implementation intention. First, students were asked why they found planning to be important, thereby engaging in self-persuasion [64], which is more effective in creating behavioral change [65,66]. Next, they were asked to identify their obstacle to demonstrating planning behavior (mental contrasting [67]) and then find a specific solution for how they could circumvent this obstacle (implementation intention [68]). Combining mental contrasting and implementation intentions has been successful in earlier research in education and previously increased attendance, preparation time, and grades for students [69,70], but has not yet been applied to increase planning behavior. Similar interventions, called “wise interventions”, have been successful in creating positive behavior change in several fields (for an overview, see [71]). No explicit instruction on how to plan was provided on the cue cards. In the control condition, students followed the same procedure, but the instructions on the cue cards were about healthy eating.

Materials. In the booth, students sat at a desk in front of a handheld camera on a tripod. The camera was pointed at the chair the students sat in. Cue cards with instructions, including when to switch the camera on and off, were numbered in order and placed on the desk. For the used cue cards, see the additional materials.

Measures. Three outcome measures were used during this experiment: (1) self-reported planning behavior, (2) missed deadlines, and (3) final course grades. To measure successful adherence to planning, a short questionnaire was designed that accompanied the final test or deadline. This questionnaire consisted of four questions about a student’s planning adherence for that test or deadline, with answers on a seven-point scale. A sample question is “I was able to do all preparation I wanted to do for this [deadline/test]” with answers ranging from “completely disagree” (1) to “completely agree” (7). This questionnaire can be found in the additional materials. Construct reliability of the planning questionnaire was good (Cronbach’s alpha = 0.74). As an additional measure of successful planning behavior, we also recorded how many deadlines students missed during the semester. The first-year students had 45 deadlines throughout their period (one semester: September to November), and teachers recorded all missed deadlines. Because the third-year students were in their exam period, which focused on exams rather than assignment deadlines, they had only a few deadlines. Additionally, the final grades of their courses were collected.
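
For readers unfamiliar with the reliability coefficient reported above, the following minimal sketch computes Cronbach’s alpha with the standard formula, alpha = k/(k − 1) × (1 − sum of item variances / variance of total scores), where k is the number of items. The response data are hypothetical and only illustrate the four-item, seven-point format; they are not the study’s data.

```python
from statistics import variance

def cronbach_alpha(responses):
    """responses: list of per-student lists, one score per questionnaire item."""
    k = len(responses[0])                       # number of items
    items = list(zip(*responses))               # transpose to per-item columns
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_var_sum / total_var)

# Hypothetical answers of five students to four items on a 1-7 scale.
answers = [
    [5, 4, 5, 6],
    [3, 3, 4, 3],
    [6, 5, 6, 6],
    [2, 3, 2, 4],
    [4, 4, 5, 5],
]
alpha = cronbach_alpha(answers)
```

Alpha approaches 1 when the items vary together across students and drops when the items are only weakly related.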

Procedure. The procedure took place over three consecutive school days. During a class, all students were asked to participate in the experiment. Two video booths, in separate rooms, were simultaneously available, so students were taken in pairs from their classes by an experimenter. The teacher remained blind to the condition the student was in. Students were informed in pairs about the experiment in a classroom set up as a waiting room, where the experimenter outlined the procedure and told students that the goal of the experiment was to find out how students dealt with everyday problems: planning properly (experimental condition) or eating healthily (control condition). Here, students signed a consent form specific to the video booth, which can be found in the additional materials. This instruction took approximately five minutes. Then, they were taken to the video booth, which was an empty classroom in the school, where a handheld camera on a tripod was set up facing the desk at which the student sat. Participants received cue cards with instructions and were told to follow the instructions on the cards. Students read the cards out loud and answered the questions while thinking out loud, while filming themselves to strengthen their commitment to their answers [72]. They were left alone to ensure their privacy and honesty during the procedure. Students were asked to sit down in front of the camera and were instructed to read the cue cards, follow the instructions on the cards, and answer the questions on the cards. When finished, students informed the experimenter in the waiting room. The video booth took approximately five minutes per student. The experimenter thanked the students for participating and asked them not to talk about the project with their classmates until the end of the day. Afterwards, students went back to their classes. The video made by the student was sent to the student if they had indicated on their consent form that they wanted this, and was then deleted. Deadline adherence was collected throughout the period, which was approximately ten weeks.

Figure 4 contains an overview of the timepoints of intervention and data collection.

Results. For the third-year students, no deadlines were missed. Because of this, all third-year students (n = 32) were excluded from the experiment. Three first-year students were excluded because their number of missed deadlines was more than three standard deviations (9.66) from the mean. Results did not change significantly when testing the hypotheses with these students included. This left 95 students in the final sample, of whom 49 were in the control condition and 46 in the nudge condition.
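
The three-standard-deviation exclusion rule can be sketched as follows. This is an illustration under assumptions: the text does not state whether the sample or population standard deviation was used, so the sketch uses the sample standard deviation, and the missed-deadline counts are hypothetical.

```python
from statistics import mean, stdev

def flag_outliers(values, n_sd=3.0):
    """Return indices of values more than n_sd sample SDs from the mean."""
    m = mean(values)
    sd = stdev(values)
    return [i for i, v in enumerate(values) if abs(v - m) > n_sd * sd]

# Hypothetical missed-deadline counts for 20 students.
missed = [0, 1, 0, 2, 1, 0, 3, 1, 0, 2, 1, 0, 1, 2, 0, 1, 0, 1, 2, 30]
outliers = flag_outliers(missed)
kept = [v for i, v in enumerate(missed) if i not in outliers]
```

Because the mean and standard deviation are computed with the extreme values included, this rule is conservative in small samples. Re-running the analysis with the flagged students included, as reported above, is a useful robustness check.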

For Hypothesis 1a, the planning questionnaire was filled in by 30 students who were also present in the final sample. Of these students, 16 were in the control condition and 14 were in the nudge condition. Bootstrapped multilevel analysis revealed a significant effect (Estimate = −0.44, SE = 0.15, t(30) = 2.94, p < 0.01): nudged students reported more planning behavior (M = 4.70, SD = 0.71) than students in the control condition (M = 3.81, SD = 0.97).

For Hypothesis 1b, the number of missed deadlines per condition was compared using multilevel analysis. No significant effect of the nudge was found on missed deadlines, Estimate = −0.09, SE = 0.19, t(89.90) = 0.51, p = 0.61. Students in the nudge condition did not miss significantly more deadlines (M = 1.15, SD = 2.00) than those in the control condition (M = 0.96, SD = 1.77). The removal of the three outlying students did not significantly change this outcome.

In an exploratory analysis, we correlated the number of missed deadlines with the self-reported planning score for the subgroup of 30 students who had filled in the planning questionnaire. No significant correlation was found between the two measures (ρ = −0.06, p = 0.75), providing no evidence of a relation between the planning behavior a student reports and their realized deadlines.

During the research it became apparent that it was difficult, if not impossible, to combine all grades into a meaningful number, given the complexity of the students following different courses and educational tracks and the availability of the data. This led us to not pursue the effect of the nudge on grades.

Discussion. When using the video booth as a nudge to promote planning behavior, we found a positive effect of the nudge on reported planning behavior. However, no effect was found on meeting the actual deadlines. A possible explanation is that the behavioral measurement (deadlines) and the intended behavioral change (the planning behavior) are not directly comparable, as suggested by the lack of correlation between the two measurements. This corresponds with the findings of Ariely and Wertenbroch [73] and Levy and Ramim [43]. In these studies, students were nudged by being able to set their own deadlines. Although this did not lead to improved [43,73] this was because students tried to improve their planning, but did so suboptimally [73]. Their findings correspond with Levy and Ramim’s findings [43] that these students did report less procrastination behavior. In summary, these students attempted to improve their planning behavior, but this behavior was not (yet) reflected in their missed deadlines. A similar process may have taken place in our experiment: students attempted to engage in planning behavior (accepted Hypothesis 1a), but it did not (yet) show in their realized deadlines (rejected Hypothesis 1b). This interpretation should be made with caution, however, as the small sample size for the questionnaire makes finding small effects difficult. This sub-sample is also likely not a random sample of the participants, but likely consists of students who are more actively engaged with school. Additionally, because this was only measured using self-report, it is also possible that students overestimated their own planning behavior or gave socially desirable answers because they made the connection with the intervention at the start of the semester. Further research is necessary to verify whether the increase in self-reported planning behavior corresponds with an actual increase in planning behavior.

2.4. Experiment 2: Preparation for Class

In this experiment, we aimed to improve students’ preparation for class by creating a checklist intervention. The hypotheses for this experiment were:

Hypothesis 2a. Students who received the checklist-nudge would prepare for class more often than students who did not receive the checklist-nudge.

Hypothesis 2b. Students who received the checklist nudge would obtain higher course grades than students in the control condition.

Participants. Of the teachers participating in the design group that created the nudge for class preparation, 3 were ultimately able to participate in the experiment. Every teacher participated with two parallel classes—classes who were in the same year and who received the same course. Per teacher these classes were divided between control and nudge classes so that type of course and year of study were distributed evenly across conditions. In total, 3 classes with in total 76 students participated in the nudge condition, and 3 classes with in total 72 students participated in the control condition. All students were HVE-students, 98 of which were first-year students and 50 of which were third-year students, following the educational track to become English teachers. Examples of themes of participating courses are didactic skills and English literature. Individual demographics of the students were not collected, but the students’ age range in this educational track is 18 to 23 years.

Design. To improve preparation for class, teachers designed and provided a checklist for their students, where the specific preparation they needed to perform was outlined per class. Students could then tick the box for the work they had completed. Students in the control condition were provided with the regular course material, generally a course syllabus which outlined the preparatory work which was not presented in a checklist format.

Measures. Class preparation was registered per lesson per individual student by self-report (in case of reading/learning) or checking by the teacher (in case of exercises). This was registered as either complete, not complete, or partly complete (in the case of multiple exercises). To account for different courses and schedules, these scores were converted by the teachers to a score: from 1 (student did no preparatory work), 2 (some preparatory work), 3 (most preparatory work) to 4 (all preparatory work). Not all courses had the same number of classes that required preparation, which was taken into account in analysis.

Procedure. The teacher prepared a checklist of the relevant course preparation per lesson based on the regular course materials and preparatory instructions for all classes in the nudge condition, and distributed this at the start of the course. The control classes received the regular materials. This was usually a syllabus with the same information but not in a checklist format. Checklists were not sharable between classes due to specific dates, deadlines, and exercises, which reduced possible contamination effects. An example checklist can be found in the additional materials. Each lesson, the teacher registered each student’s preparation. Individual grades were intended to be collected at the end of the course, but exams were postponed due to intensifying COVID-19 measures.

Figure 5 contains an overview of the timepoints of intervention and data collection.

Results. Data were collected per lesson, resulting in a total of 662 data points. The average class preparation score was 2.08 (SD = 1.21). A frequency table of scores can be found in Table 1.

Hypothesis 2a was rejected: students in the nudge condition (M = 2.15, SD = 1.24) did not score significantly higher on class preparation (Estimate = −0.03, SE = 0.06, t(104.61) = −0.53, p = 0.60) than students in the control condition (M = 2.00, SD = 1.18). Because class preparation was assumed to be a binary variable in the preregistration, these scores were also converted to a binary variable and the same tests were run. The results remained stable when converting scores to either a strict binary (1 = all preparatory work completed, 0 = not all preparatory work completed) or a lenient binary (1 = any preparatory work completed, 0 = no preparatory work completed).
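
The two recodings can be written out explicitly. The sketch below maps the 1 to 4 preparation score described under Measures (1 = no, 2 = some, 3 = most, 4 = all preparatory work) onto the strict and lenient binaries. The function names and example scores are illustrative.

```python
def to_strict_binary(score):
    """1 only if all preparatory work was completed (score 4)."""
    return 1 if score == 4 else 0

def to_lenient_binary(score):
    """1 if any preparatory work was completed (score 2, 3, or 4)."""
    return 1 if score >= 2 else 0

scores = [1, 2, 3, 4]  # hypothetical per-lesson scores
strict = [to_strict_binary(s) for s in scores]
lenient = [to_lenient_binary(s) for s in scores]
```

The two codings differ only for the intermediate scores 2 and 3, so running both shows whether the conclusion depends on how partial preparation is treated.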

Unfortunately, tests for the participating classes were postponed due to COVID-19, meaning that relevant grades for these classes could not be collected.

Discussion. We did not find a significant effect of the nudge on class preparation. A possible explanation for the non-significant finding is that uncertainty about what to prepare is not the main behavioral barrier encountered by students. For example, a present bias, an overconfidence in one’s own ability, or simply other ongoing affairs can cause a student to consciously decide not to prepare for class. Nudging when the nudgee’s attitude is unsupportive of the nudged behavior is ineffective [74]. Nudging behavior in a transparent way (meaning that both the intervention and its purpose are apparent to those subjected to it [75]), as in this experiment, allows students to easily resist the attempted behavioral change [75,76]. Future research should investigate which behavioral barriers prevent students from preparing for class, to design more effective nudges.

2.5. Experiment 3: Asking Questions

In this experiment, we aimed to improve students’ question asking in class by creating a class-wide goal-setting nudge intervention. The hypotheses for this experiment were:

Hypothesis 3a. Students who received the goal-setting nudge would ask more questions during class than students who did not receive the goal setting nudge.

Hypothesis 3b. Students who received the goal-setting nudge would obtain higher course grades than students who did not receive the goal-setting nudge.

Participants. Of the 7 recruited teachers, 3 ultimately participated in the experiment. Four dropped out due to the strain of data collection during their classes. Classes of the participating teachers were divided randomly between control and nudge condition. Five classes (of which 2 in VET and 3 in HVE) with in total 80 students participated in the nudge condition, and four classes (of which 2 in VET and 2 in HVE) with in total 92 students participated in the control condition. The average class contained 19 students. Students were excluded if they missed more than half of the lessons. Of the participants, 88 students were VET-students and 84 were HVE-students. The participating VET-students followed an educational track focused on ICT, and the experiment was held within their Dutch courses. The HVE-students were teachers-in-training and followed courses on professional development and didactic skills. Individual demographics of the students were not collected, but the students’ age range in this educational track is 18 to 21 years.

Design. To promote asking questions, a class-wide nudge was designed. Every lesson, a goal was set by the teacher: all students should aim to ask at least one question. This goal was explicitly mentioned at the start of the class, as well as that there was no negative consequence for not achieving this goal. This type of goal-setting fits the criteria for a nudge [37] as its proposed effectiveness is due to mechanisms of goal commitment, not external rewards or punishment.

Measures. To measure asking questions, the teacher unobtrusively marked the number of questions an individual student asked in each lesson on the attendance sheet. All questions were counted, apart from necessary procedural questions (e.g., “Can I go to the bathroom?” or “Is my microphone unmuted?”).

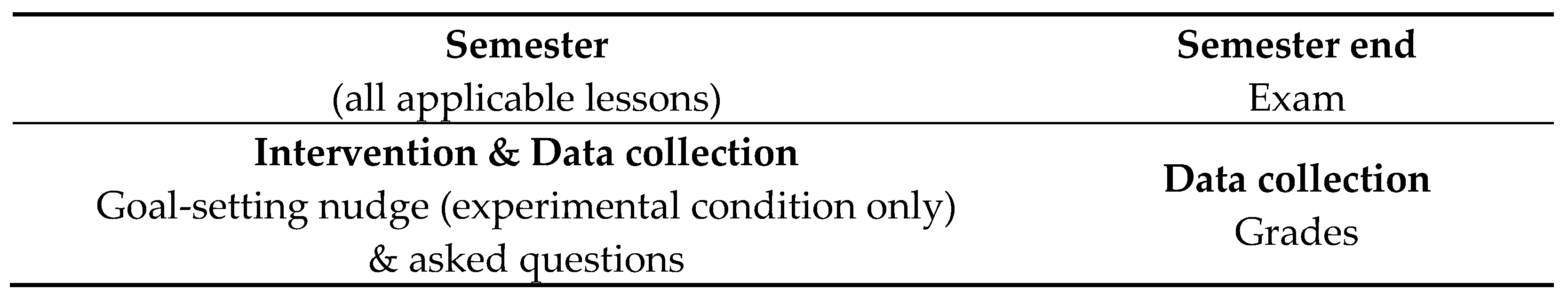

Procedure. At the start of each lesson, the nudge classes were reminded of the goal to ask at least one question per lesson. In both conditions, during the lesson the teacher registered the number of questions asked by each student in the class.

Figure 6 contains an overview of the timepoints of intervention and data collection.

Results. Ten students were dropped for missing more than half of the sessions, leaving 162 students. Of these students, 88 were in the control condition (of which 38 VET-students and 50 HVE-students) and 74 in the nudge condition (of which 43 VET-students and 31 HVE-students).

Data were collected per lesson, resulting in a total of 1296 data points. Students in the nudge condition (M = 1.49, SD = 1.23) asked 0.25 questions per lesson per student more than students in the control condition (M = 0.99, SD = 0.79). In other words, each student asked one more question every four lessons. Statistically, this was a positive trend (Estimate = 0.25, SE = 0.14, t(36.43) = 1.80, p = 0.08).

Additionally, we investigated the effect of the goal-setting nudge on the students’ grades. The final average grades of a subset of five classes were available for this analysis. All students in this analysis were following the same course with the same test. Students in the nudge condition (M = 6.98, SD = 0.98) scored higher (Estimate = 0.69, SE = 0.24, t(57) = 2.79, p < 0.01) than those in the control condition (M = 6.29, SD = 0.92). No direct effect of number of questions on final grade was found (Estimate = −0.03, SE = 0.11, t(55.74) = 0.30, p = 0.76).

In an exploratory fashion, we investigated whether the increase in questions was due to already active students asking more questions (“the usual suspects”) or to the nudge helping students ask questions where they previously would have remained quiet. In the average lesson in the control condition, 60.5% of students (SD = 24.3%) asked a question, compared to 70.7% in the nudge condition (SD = 19.8%). In an exploratory generalized linear mixed-effects model analysis, we reduced the dependent variable to a binary factor (0 = no questions, 1 = one or more questions). We found no significant effect of condition (Estimate = −0.10, SE = 0.15, z(779) = 0.62, p = 0.54), indicating that we find no evidence of the intervention having increased the number of students asking questions.

Discussion. Regarding the number of questions students asked, we found a marginal positive effect of the nudge. In practice, this meant students on average going from 1 to 1.25 questions per lesson in the nudge condition, meaning that teachers received about 25% more questions in their classes. In a course with 20 students consisting of 8 lessons, this means 40 extra questions. This corresponds with the teachers’ evaluation of the nudge in the complementary interviews, and with the higher grades of nudged students compared with those in the control condition. Teachers said the nudge resulted in an atmosphere where asking questions was normal and desirable. Given the size of the effect and its correspondence with the other findings, we are inclined to see this as a promising result of the nudge. It should be noted that the increase in the number of questions asked was not paired with an increase in the number of students asking questions; in an exploratory analysis, we found no increase in the number of students who asked at least one question each lesson. So, although we find evidence that the nudge helped students to ask more questions, our findings suggest that it did not prompt students who would normally not ask a question to start asking questions.
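
The arithmetic behind the figure of 40 extra questions can be checked with a short sketch. It uses only the numbers stated in the paragraph above (20 students, 8 lessons, 0.25 extra questions per student per lesson, and a baseline of about 1 question). The function name is illustrative.

```python
def extra_questions(students, lessons, extra_per_student_per_lesson):
    """Expected additional questions over a whole course."""
    return students * lessons * extra_per_student_per_lesson

total_extra = extra_questions(students=20, lessons=8,
                              extra_per_student_per_lesson=0.25)
# 0.25 extra questions on a baseline of about 1 question per lesson.
relative_increase = 0.25 / 1.0
```

Here `total_extra` evaluates to 40.0 and `relative_increase` to 0.25, matching the 40 extra questions and the increase of about 25% mentioned in the text.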

Although no direct effect of number of questions on final grade was found, the nudge did increase the students’ grades. A possible explanation is that the extra questions asked led to extra answers from the teachers, which could have benefitted all students in class, irrespective of who asked the questions. Alternatively, the nudge contributed to a classroom climate that is productive for learning (and achievement), also for students who do not ask questions. A final explanation is that the teacher was not blind to the condition the students were in, and this, consciously or subconsciously, influenced their attitude positively towards the nudged classes. This possible bias is difficult to avoid for teacher-based interventions where teachers need to be aware of the different conditions. We believe the risk of this bias is small, because we did not find an effect for the other nudges on teacher-measured outcomes.

Due to the nested nature of the data, it is necessary to repeat this research with a larger and more diverse population, to be able to provide a more definitive answer to the effect of the nudge. Additionally, although the nudge increased the number of questions asked, we found no evidence of more students asking questions. Future research could identify whether different nudges could perhaps also help these students in asking questions.

An alternative explanation to consider is that the average class size for the nudge condition was 16 students, while this was an average of 22 for the control condition. Class size is an important factor in class interaction (e.g., [77,78]), with larger groups experiencing lower student participation and interaction. As the nudged classes were on average smaller, this could be an alternative explanation for the effect of the nudge. However, all but one of the participating classes fall within the established “medium” class size of 15 to 34 students [79] (p. 10), for which class interaction has similar patterns. This leaves the possible explanatory power of class size limited.

Lastly, this experiment is limited by the quantitative approach to asking questions. Students’ questions are reduced to a count per student per class, while no two questions are the same. However, assessing how each question fits in a student’s learning process is very subjective and was not feasible in our experiment. Future research could repeat this experimental setup with a more qualitative approach and investigate the type and quality of questions asked, or thoroughly interview teachers about this aspect.

3. Discussion

Autonomous learning behavior is important, but students often do not show this behavior. In this study, we investigated whether nudging is an effective teaching strategy to increase autonomous learning behavior in students. We developed an intervention design method and used this to design four nudges for three autonomous learning behaviors: planning, preparing for class, and asking questions. These behaviors represent the three phases of Zimmerman’s self-regulation model [

40] and are also behaviors that teachers find important and seek support in improving. The nudges were tested in four field experiments. We designed two nudges (reminders and a video booth) to increase planning, a checklist to increase preparation for class, and a goal-setting nudge to increase asking questions. Given the mixed results, it is difficult to provide a clear-cut answer to the research question of whether nudging as a teaching strategy can support students’ autonomous learning behavior. This range of effectiveness of the nudge interventions can also be found in Damgaard and Nielsen’s general overview [

28] of nudging in education. Based on our findings, we can provide more insight into which nudges are effective when promoting autonomous learning behavior, and what kind of autonomous learning behavior is susceptible to being nudged. A limitation should be stressed beforehand, which is that all studies experienced teacher attrition due to the added work pressure caused by the pandemic. Although attrition was not based on educational track or educational level, it is possible that the teachers who remained in our sample were less busy or more motivated than their colleagues. We believe the impact of this attrition to be limited, as the measurements used throughout the experiments are not centered around teachers or teacher characteristics. The experiments using randomization on class level (the checklist and goal-setting nudge) used multiple measurements per individual student. The video booth nudge experiment, which utilized one cumulative measurement of the dependent variable per student, was randomized on an individual level. All dependent variables reported are therefore individual-based, rather than class-based.

Of the three behaviors investigated, nudging students to ask questions seems the most promising one. Despite the small sample size [

80], the results indicated that the nudge helped students ask marginally more questions and resulted in higher grades. A nudge that promotes students generating and asking questions can be a useful supporting tool for teachers and students, as this behavior is essential for successful self-regulated learning [

48,

81], and students often do not show this behavior [

54,

82,

83]. Generating questions benefits students academically, but also helps motivate them [

84] and reduce their test anxiety [

85]. Especially higher-order generated questions are of great importance for students to achieve their learning goals [

85,

86]. For an overview of the importance of student-generated questions, see [

83]. In addition, a question that is asked enriches the learning of not only the help-seeking student, but also potentially that of other students in the (online) classroom. This was reflected by the modest increase in average grade found for all students after the intervention, reaffirming the nudge’s positive effect despite its marginal significance. Moreover, the positive feedback loop and possible individual “spillover behaviors” can strengthen the behavior [

87]. A student receiving a satisfying answer when asking a question is likely to ask more questions, which can “spill over” into asking questions out of class or demonstrating other autonomous learning behaviors. In this way, the initial increase in asking questions could “snowball” into more help-seeking behavior or even more autonomous learning behavior in general. Additionally, as grades also went up for students who did not ask more questions, the nudge appears to help students indirectly as well. Ultimately, while promising, the effect of the nudge and its possible spillover remains relatively small when assessing the practical usefulness of the nudge. Neither the nudge nor its possible spillover is sufficient to improve autonomous learning behavior in students on a large scale, but the nudge can be a helpful tool in achieving small steps towards the desired behavior. This means that the Theory of Change described in Figure 1 should be interpreted as being small in effect size, which means the likelihood of the nudge intervention single-handedly achieving larger long-term effects, like increased graduation, is low.

Unfortunately, not all nudges were equally successful. We found no effect of the checklist nudge for preparing for class and also could not determine an effect of the reminders. Although the video booth nudge did not show an effect on missed deadlines, exploratory analysis revealed that nudged students (unsuccessfully) tried to improve their planning skills, as they reported more planning behavior. This first step is a start for a complex skill like planning and self-regulation in general, and is why Ruggeri et al. [

40] and Weijers et al. [

29] warn against overreliance on just end results in education when discussing the success of behavioral interventions. This nudge could, perhaps combined with explicit support (as in [

12]), be helpful for improving planning behavior. When students are nudged into improving their planning behavior, they also need the explicit support and instruction on how they can do that successfully, because nudging students towards behavior they do not know how to perform will ultimately not work.

An explanation for the difference in effectiveness of the nudges can be sought in the type of targeted behavior. The more successful nudge targeted in-class behavior (asking questions) while the less successful nudge targeted out-of-class behavior (preparing for class). The nudge targeting planning behavior was a partial success, and this behavior takes place both in-class and out-of-class. The extra autonomy that a nudge gives when compared to external regulation could be a step too far for the students in the case of out-of-class behaviors, as they are still developing their skills in autonomous learning behavior and need more support. Indeed, Oreopoulos and Petronijevic [

32] found in a large sample of students that nudges were not successful in changing out-of-class behavior, like spending extra time studying. On the flip side, in-class behavior has the benefit of teachers being able to support it more directly than the out-of-class behavior: the longer the time between the intervention and the targeted behavior, the less effective the nudge [

88]. Future research should aim to improve in-class behaviors and find how nudges, perhaps combined with other techniques, can be used to improve out-of-class behaviors that are important for autonomous learning.

A different explanation for the difference in effectiveness can be sought in characteristics of individual students, in accordance with the concept of “nudgeability” [

89], describing that individual factors can determine susceptibility to a nudge. In our research, the goal-setting nudge seemed only to work for students who were already active during class. A possible driver of this difference between students could be academic motivation or engagement: a different study in the educational field, in which class attendance was nudged, similarly found that the nudge had a different effect on students depending on the degree to which they were already attending class [

90]. Different predictors, like gender (see, e.g., [

91]), age, or educational level, should also be considered, which could be an important line for future research.

Taking the three nudged behaviors as a representation for the three phases of self-regulated learning [

45], we find suggestive evidence for a possible effect of nudging behavior in the forethought phase. We should be careful drawing conclusions here, as we investigated only one representative behavior for each phase. Autonomous learning behavior is a broad concept, and what it looks like can differ between ages, developmental stages, learning needs and even between students [

7]. Of course, the three researched behaviors (asking questions, preparing for class, and planning) are not fully representative of the entire spectrum of possible autonomous learning behaviors, but we believe they cover a large part of the relevant behaviors for students and represent the different aspects of autonomous learning behavior [

45] well. Future studies could, to obtain a fuller picture of nudging on self-regulated learning, investigate different behaviors associated with self-regulated learning. For example, further research could investigate nudging to promote goal setting (as in [

92]), linked to the forethought phase [

45]. Similarly, researchers could design a nudge to help students pay attention or remove distractions, behaviors linked to the performance phase [

45]. Alternatively, future research could look more closely at nudging self-reflective behavior, targeting behaviors like incorporating feedback or performing self-evaluation [

48]. VET and HVE teachers have indicated that they lack the tools to successfully support each of the alternative behaviors mentioned in this paragraph [

34].

Lastly, a possible limitation of the study is spillover between conditions, as all participants are students in the same school and often in the same educational track. Nevertheless, we think that there is little room for spillover to affect the results of the study. The goal-setting nudge was done by the teacher in class, making spillover to other classes very unlikely. The effect of the video booth nudge is achieved by answering the questions on the cue cards, and moreover, the students were asked to keep the content to themselves. Finally, students could potentially share their checklist with other students in the control condition, but as different classes would have different due dates and assignments, the checklist would be of little use to students from different classes.

4. Implications

The current study holds several important implications. In multiple ways, this study can be considered a novel approach. The study outlines a new method to design behavioral interventions based on design patterns [

56] and stakeholder involvement [

57]. Using rapid redesign cycles, researchers collaborated with stakeholders to create nudge interventions that are based in scientific theory and also relevant and applicable in practice. Despite the mixed results, we believe that these design principles remain important when designing an impactful nudge for educational practice. Future research could investigate the effectiveness of this method as a behavioral intervention method, as much theory has been developed as to what aspects are important during nudge design [

55,

93] but little as to what makes an effective intervention design session [

94].

Furthermore, our experiments indicate that some types of autonomous learning behavior are more likely to be influenced by nudging than others, though more research is needed to substantiate this. Specifically, our results suggest that when designing a nudge as a teacher, there might be more potential in targeting in-class behaviors with nudging than targeting behaviors that are out-of-class. This corresponds with earlier findings (e.g., [

32]) that focusing nudges on continuous behavior that takes place out of the classroom is not very successful. Additionally, teachers should target behaviors that students are capable of performing, or also provide the support students need to be able to perform the behavior. First among these behaviors is asking questions, which, on a small scale, has already been shown to be susceptible to a nudging intervention.

Additionally, when investigating nudging in education, our study is one of the first studies to investigate nudging in which actual student behavior in the classroom is measured rather than considering behavioral proxies like grades or enrollment (see [

28]). Although often more difficult to achieve, behavioral measurements combined with learning outcomes should receive more attention in nudging research, especially focused on education [

29,

40]. Likewise, a focus of this study was to further investigate the effect of nudges on students, as it is especially important in education to investigate possible underlying processes that nudges affect [

29]. We included a questionnaire (see Appendix A) covering self-regulatory learning behavior [

95], perceived student autonomy [

5], behavioral and agentic engagement [

96,

97], and academic motivation [

98]. Unfortunately, a lack of questionnaire responses rendered these measures unusable for the research. Future studies should attempt to include these, or other, relevant measures when investigating nudging in education. To increase the response rate, these variables could be investigated separately to avoid survey fatigue, or the surveys could be structurally administered during class time. Additionally, surveys like these could reveal how student characteristics may be determinants for the effectiveness of a nudge.

Our study demonstrates how nudges can be implemented as a teaching strategy even under challenging circumstances. Specifically, this study resulted in behavior changes in students under extraordinary circumstances for both teachers and students. Teachers, facing the extra pressure and difficulties of online teaching during the COVID-19 pandemic, were capable of successfully using nudges to change autonomous learning behavior. This is in sharp contrast with previous successful interventions, which were often not applied due to time constraints [

16], and that was under regular teaching circumstances, without the additional pressure caused by the COVID-19 pandemic.