Abstract

Serious games have been shown to be effective learning tools in various disciplines, including dental education, allowing learners to improve knowledge and skills. GRAPHIC (Games Research Applied to Public Health with Innovative Collaboration), a serious game for dental public health, was designed to simulate a town, enabling students to apply theoretical knowledge to a specific population by selecting health promotion initiatives to improve the oral health of the town’s population. This study employed a literature-based evaluation framework and a sequential explanatory mixed-methods research design to evaluate the use of GRAPHIC among final-year dental undergraduates across two learning contexts: King’s College London in the United Kingdom and Mahidol University in Thailand. Two hundred and sixty-two students completed all designated tasks, and twelve participated in semi-structured interviews. The findings demonstrated knowledge improvement after game completion, based on pre- and post-knowledge assessments, and showed that students perceived the game as an interactive and motivational learning experience. The evaluation identified five serious-game dimensions and clear alignment between these dimensions, demonstrating the impact of serious games in dental public health and, more widely, in healthcare education.

1. Introduction

Serious games are designed for specific purposes other than pure entertainment. One of these purposes is to provide knowledge and skills in an organised fashion. Serious games engage and motivate students through innovative teaching in learning environments [1,2]. Because of these benefits, serious games have been used in areas as varied as government, the military, education, and healthcare [3]. In healthcare education, including dentistry, serious games have proved useful in supporting improvements in knowledge, skills, and attitudes in comparison to traditional methods; in addition, the gaming approach appears to be more engaging and motivating [4].

In dentistry, dental public health (DPH) is the science and art of preventing oral disease, and promoting oral health and quality of life, through the organised efforts and informed choices of society, organisations (public and private), communities, and individuals [5]. Dental graduates from both the United Kingdom (UK) and Thailand are required to have population health-related competencies, according to the UK’s General Dental Council and the Dental Council of Thailand, respectively. Various teaching and learning strategies, such as lectures, seminars, pre-recorded videos, and practice in real settings, are required to support dental students in achieving learning outcomes. For example, a population-health-related decision-making skill may require learners to consider how to apply the skills they develop in an actual community project later in their professional lives [6]. Experiential learning in real communities is an effective learning approach for DPH, as it exposes dental students to influential factors or challenges that may affect the design of oral-health promotion programmes.

There appear to be challenges in designing DPH education in real-world settings as part of a clinically focused curriculum, especially during the COVID-19 pandemic, when students were required to learn at a distance to minimise the risk of infection. In this context, alternative approaches should be carefully considered as replacements or supplements to assure the achievement of expected learning outcomes. There is evidence that simulation-based learning can improve public-health competencies amongst dental students, such as the need for home visits [7], an understanding of poverty [8], and the importance of leadership for change [9]. The key strengths of an online serious game include positive educational impact, engagement and motivation, stealth assessment, interactive asynchronous distance learning, and a safe learning environment [10]. Consequently, simulation-based online serious games can support learners in DPH education.

The use of serious games in dental education is increasing; however, they have not been used as widely as in other healthcare areas [4,10]. In addition, to the best of our knowledge, no study has investigated the use of serious games for learners from different contexts. GRAPHIC (Games Research Applied to Public Health with Innovative Collaboration), an online simulation-based serious game for dental public-health education, was designed to be used in the undergraduate dental curriculum. To complete the game, students are required to choose the best health promotion initiatives, using contemporary evidence, to improve the oral health of a population. In addition, the game’s activity log system is used to observe student behaviours indirectly without interrupting the learning activity [11]. The game was piloted, and the pilot results demonstrated that students could gain knowledge after completing the game [12]. The pilot evidence supported the further application of GRAPHIC in the context of DPH education. This study was conducted to evaluate the use of GRAPHIC across two learning contexts: undergraduate dental students from King’s College London (KCL) in the United Kingdom and from Mahidol University (MU) in Thailand. To achieve this aim, our research objectives were to:

- (1) Evaluate knowledge improvement after interacting with the game;

- (2) Investigate student interaction during the game completion;

- (3) Explore perceptions of students towards the game.

2. Materials and Methods

2.1. Research Design

This research employed a mixed-methods approach using a sequential explanatory design, in which the quantitative phase was followed by the qualitative phase [13]. The quantitative findings were intended to inform what would be explored further in the qualitative phase, so the topic guide used for the semi-structured interviews might need adapting. In addition, it was important to draw the interview sample from the quantitative findings in order to select information-rich participants.

2.2. Research Participants

GRAPHIC was assigned to final-year dental students in the two universities (157 at KCL and 115 at MU) as part of their dental public-health course in the academic year 2015–2016. Participants from the quantitative phase were recruited for semi-structured interviews using purposive sampling until data saturation was reached. Selection considered the test scores obtained from the knowledge assessments and the students’ interaction with the game, including the submission attempts retrieved from the activity log. This approach allowed the selection of information-rich participants [14,15].

2.3. Learning Activities within GRAPHIC

Students were invited to complete the pre-knowledge test integrated in the game interface (‘Pre-test’ tab). Following this, they were required to control a cartoon character walking around a virtual town to explore the learning scenario (Figure 1). They were then automatically navigated to the game tab, where they were asked to complete the game by selecting the best five health promotion programmes to improve the oral health of the population in the town (Figure 2). The game could end in one of three ways. First, the game task was considered complete if users achieved a score of 100% (full score). If the full score could not be achieved, the game ended either when users had used all 20 available submission attempts or when they requested to end the game because they were satisfied with the score they had achieved. Following game completion, students were navigated to the ‘Post-test’ tab, where they were asked to complete the post-knowledge test. These learning activities could take three to five hours to complete. However, students could perform these interactive asynchronous learning tasks in their own time and at their own pace. They were able to save their progress using the activity log system, interrupt the session, and return later to complete the game.
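The three ways in which the game task could end can be summarised in a short sketch. This is an illustration only, not the GRAPHIC game engine: the function name, the status labels, and the assumption that the score is held as a percentage are all hypothetical, while the 100% full score and the limit of 20 submission attempts come from the description above.

```python
from typing import Optional

MAX_ATTEMPTS = 20   # submission limit stated in the text
FULL_SCORE = 100    # full score, as a percentage


def completion_status(score: float, attempts_used: int,
                      requested_end: bool = False) -> Optional[str]:
    """Return the reason the game ends, or None if play continues.

    The labels are hypothetical names for the three completion types.
    """
    if score >= FULL_SCORE:
        return "full_score"
    if attempts_used >= MAX_ATTEMPTS:
        return "attempts_exhausted"
    if requested_end:
        return "ended_on_request"
    return None


# Example: a learner who stops at 95% after nine submissions.
status = completion_status(95, 9, requested_end=True)
```

Checking the full score first means that a learner who reaches 100% on the final attempt is counted as having completed the game with a full score; whether the actual game resolves that case in the same way is an assumption.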

Figure 1.

Interface of the learning scenario of GRAPHIC.

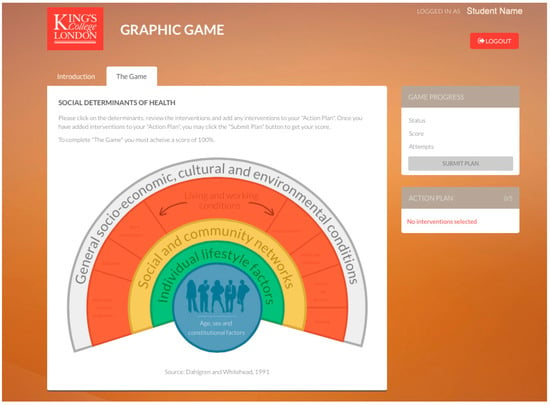

Figure 2.

The main interface of GRAPHIC allowing students to select the health promotion programs.

2.4. Data Collection Process

The pre- and post-knowledge assessments each contained 20 multiple-choice questions addressing the learning outcomes of the game. The assessments were designed to cover topics related to the game, such as oral-health initiatives, common risk-factor approaches, and the rainbow diagram of the social determinants of health. Both tests contained the same questions; however, the order of the questions and of the answer choices differed, to reduce test–retest memory effects [16,17]. To ensure the quality of the tests, their reliability and validity were analysed using the Kuder–Richardson formula (KR-20), item difficulty, and item discrimination [18,19].
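For readers unfamiliar with these test-quality indices, the following sketch shows one common way of computing them for dichotomously scored items (1 = correct, 0 = incorrect). It is not the analysis code used in this study. The choice of population variance for the total scores, the upper–lower 27% grouping for discrimination, and the toy response matrix are all assumptions made for illustration.

```python
from statistics import pvariance


def item_difficulty(responses):
    """Proportion of respondents answering each item correctly."""
    n = len(responses)
    k = len(responses[0])
    return [sum(row[i] for row in responses) / n for i in range(k)]


def kr20(responses):
    """Kuder-Richardson formula 20 for dichotomous items.

    Uses the population variance of total scores (an assumption;
    some texts use the sample variance instead).
    """
    k = len(responses[0])
    p = item_difficulty(responses)
    var_total = pvariance([sum(row) for row in responses])
    if var_total == 0:
        raise ValueError("total scores have zero variance")
    sum_pq = sum(pi * (1 - pi) for pi in p)
    return (k / (k - 1)) * (1 - sum_pq / var_total)


def item_discrimination(responses, fraction=0.27):
    """Upper-lower discrimination index per item.

    Compares the top and bottom `fraction` of respondents ranked
    by total score; 0.27 is a conventional choice, assumed here.
    """
    ranked = sorted(responses, key=sum, reverse=True)
    g = max(1, round(len(ranked) * fraction))
    upper, lower = ranked[:g], ranked[-g:]
    k = len(responses[0])
    return [(sum(r[i] for r in upper) - sum(r[i] for r in lower)) / g
            for i in range(k)]


# Toy data (invented): four respondents, three items.
toy = [[1, 1, 1], [1, 1, 0], [1, 0, 0], [0, 0, 0]]
difficulty = item_difficulty(toy)
reliability = kr20(toy)
discrimination = item_discrimination(toy, fraction=0.5)
```

Item difficulty here is the proportion correct, so higher values indicate easier items; a discrimination index near zero suggests that an item does not separate high and low scorers.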

Students’ interactions with GRAPHIC were automatically recorded by the activity log function of the game. This technique, an indirect observation [11], helped observe how students interacted with the game tasks without distractions. This dataset comprised when and for how long students signed into GRAPHIC, how many times they submitted the set of answers, and what answers they selected. The game engine automatically recorded these data and exported them as a set of activity log data in an Excel spreadsheet.
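As an illustration of how such an activity log might be summarised per student, the sketch below aggregates sign-in sessions and answer submissions. The record layout, field names, and sample events are hypothetical; the actual structure of the GRAPHIC export is not described beyond the items listed above.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple


@dataclass(frozen=True)
class LogEvent:
    """One row of a hypothetical activity log."""
    student_id: str
    kind: str                       # "session" or "submission"
    minutes: float = 0.0            # session length, for "session" rows
    answers: Tuple[str, ...] = ()   # selected options, for "submission" rows


def summarise(events: Iterable[LogEvent]) -> Dict[str, dict]:
    """Count sessions, total time, and submission attempts per student."""
    summary = defaultdict(lambda: {"sessions": 0, "minutes": 0.0,
                                   "submissions": 0, "last_answers": ()})
    for event in events:
        row = summary[event.student_id]
        if event.kind == "session":
            row["sessions"] += 1
            row["minutes"] += event.minutes
        elif event.kind == "submission":
            row["submissions"] += 1
            row["last_answers"] = event.answers
    return dict(summary)


# Invented sample events for two anonymised students.
events = [
    LogEvent("s01", "session", minutes=45.0),
    LogEvent("s01", "submission", answers=("A", "C", "F", "H", "K")),
    LogEvent("s01", "session", minutes=30.0),
    LogEvent("s01", "submission", answers=("A", "C", "G", "H", "K")),
    LogEvent("s02", "session", minutes=60.0),
]
report = summarise(events)
```

A summary of this kind yields the number of submission attempts per student, which is the quantity used later when describing types of game completion.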

Following the quantitative part, semi-structured interviews were performed to collect in-depth information about the use of GRAPHIC. The topic guide for the interviews was constructed based on the findings of previous work and on an evaluation framework for serious games adapted from de Freitas and Oliver [20] and Mitgutsch and Alvarado [21], with the modifications necessary to reflect the quantitative findings (Table 1).

Table 1. Topic guide used for the semi-structured interviews.

2.5. Data Analysis

Descriptive statistics were used to describe the overall features of the data. As the scores of both knowledge assessments were not normally distributed, non-parametric statistics were employed: the Wilcoxon signed-ranks test to compare scores between the pre- and post-knowledge tests, and the Mann–Whitney U test to compare score differences between the two dental schools. Statistical significance was taken at p < 0.05. The qualitative data were analysed using framework analysis, which involved charting and sorting data to provide a rigorous and transparent process of data management, comparison, and interpretation of emerging themes [22]. The analysis was performed by the first author of this paper and validated by the co-authors.
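The two non-parametric tests can be sketched in plain Python as follows. This is a minimal illustration, not the software used in this study: it relies on the large-sample normal approximation with a tie correction and no continuity correction, drops zero differences in the signed-ranks test, and runs on invented toy scores. In practice, a statistics package (for example, `scipy.stats.wilcoxon` and `scipy.stats.mannwhitneyu` in SciPy) would normally be used.

```python
import math
from collections import Counter


def average_ranks(values):
    """1-based ranks; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1          # mean of positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def tie_term(values):
    """Sum of t^3 - t over groups of tied values (0 when no ties)."""
    return sum(t ** 3 - t for t in Counter(values).values())


def two_sided_p(z):
    """Two-sided p-value for a standard normal statistic."""
    return math.erfc(abs(z) / math.sqrt(2))


def wilcoxon_signed_rank(pre, post):
    """Paired test: returns (W+, approximate two-sided p)."""
    diffs = [b - a for a, b in zip(pre, post) if b != a]  # drop zeros
    n = len(diffs)
    abs_d = [abs(d) for d in diffs]
    ranks = average_ranks(abs_d)
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term(abs_d) / 48
    return w_plus, two_sided_p((w_plus - mean) / math.sqrt(var))


def mann_whitney_u(x, y):
    """Independent groups: returns (U for x, approximate two-sided p)."""
    n1, n2 = len(x), len(y)
    combined = list(x) + list(y)
    ranks = average_ranks(combined)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    n = n1 + n2
    var = n1 * n2 / 12 * ((n + 1) - tie_term(combined) / (n * (n - 1)))
    return u1, two_sided_p((u1 - n1 * n2 / 2) / math.sqrt(var))


# Invented toy scores, not study data.
pre_scores = [10, 12, 14, 15, 16, 13]
post_scores = [12, 15, 15, 19, 21, 19]
w, p_paired = wilcoxon_signed_rank(pre_scores, post_scores)
u, p_groups = mann_whitney_u([1, 2, 3], [4, 5, 6])
```

The normal approximation is only reasonable for samples much larger than these toy lists, such as the more than 100 students per school in this study; for small samples an exact test is preferable.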

2.6. Ethical Considerations

This research was approved by the Biomedical Sciences, Dentistry, Medicine and Natural and Mathematical Sciences Research Ethics Subcommittee, College Research Ethics Committees, King’s College London, reference number: BDM/14/15-27 on 15 December 2014 with the modifications approved on 9 November 2015, and by the Faculty of Dentistry and the Faculty of Pharmacy, Mahidol University, Institutional Review Board, reference number MU-DT/PY-IRB 2015/002 on 19 January 2015 with the modifications approved on 20 November 2015.

3. Results

3.1. Participants

One hundred and fifty-seven KCL and 115 MU students interacted with the game. Six KCL and four MU students were excluded because they had not completed both the pre- and post-knowledge tests. Therefore, the data from 151 KCL and 111 MU students (96.2% and 96.5%, respectively) were analysed quantitatively. Following the quantitative data analysis, 12 students participated in semi-structured interviews: 6 from MU (4 female and 2 male) and 6 from KCL (5 female and 1 male).

3.2. Pre- and Post-Knowledge Tests

Each assessment had a total score of 20 marks. As shown in Table 2, the mean knowledge test score of KCL students increased from 16.2 (SD = 2.25) in the pre-test to 16.7 (SD = 2.46) in the post-test, with the post-test score significantly higher (p < 0.05). Likewise, the mean pre- and post-test scores of MU students were 16.3 (SD = 2.36) and 17.1 (SD = 2.15), respectively, with the post-test score significantly higher (p < 0.001). The pre-test score, post-test score, and score difference were not significantly different between the two institutions.

Table 2. Pre- and post-test scores.

3.3. GRAPHIC Completion and Submission Attempts

At KCL, 148 students (98.0%) completed the game with a score of 100%, two used all submission attempts without achieving 100%, and one opted to end the game with a score of 95% after nine submission attempts (Table 3). At MU, 91 students (82.0%) completed the game with a score of 100%, 19 used all submission attempts without achieving 100%, and one opted to end the game with a score of 95% after 16 submission attempts (Table 3).

Table 3. Types of game completion.

3.4. Using GRAPHIC: Evaluating the Learners’ Experience

Following the qualitative data analysis, five main themes were identified: (1) learning settings, (2) learner profile, (3) pedagogical aspect, (4) interactive functions, and (5) alignment. These five dimensions were arranged in order of their importance for the design and evaluation process of serious games. Table 4 summarises the five dimensions.

Table 4. The description of the five main dimensions retrieved from the qualitative data analysis themes.

3.4.1. Dimension 1: Learning Settings

Learning settings appeared to be a key dimension when evaluating serious games. Components included location, schedule, and modes of implementation. As GRAPHIC was an online game, activities could be completed either on-campus or at home. Both locations offered strengths and limitations. On campus, students could obtain support from instructors if they had problems; however, time might be an issue, as they needed to complete the game within the prescribed duration of a class which might not be sufficient for them to engage fully with the underlying concepts of the game. Therefore, an approach could be to conduct game activities on campus to support students becoming familiar with the basics of the game, e.g., navigation. Students could then spend time completing the game at home. The game was also considered suitable in terms of its timeliness within the course curriculum. It was used at the end of the course drawing from knowledge gained from lectures, preparing learners for practice in real communities. Students supported the view that GRAPHIC should be used after attending all relevant lectures rather than replacing traditional learning approaches. Comments illustrating these views are included in Table 5.

Table 5. Student comments on learning settings.

3.4.2. Dimension 2: Learner Profile

The learner profile was identified as important, according to the participants, for the design of serious games. Learner background and preferences, as well as prior experience with games, could impact learner performance. In addition, students might demonstrate different learning preferences (Table 6).

Table 6. Student comments on learner profile.

3.4.3. Dimension 3: Pedagogical Aspect

This dimension determines the pedagogical approach of the game. It is associated with the learning process in a serious game and includes the learning outcomes and the features of the game that support learners in achieving these outcomes. After using GRAPHIC, the students reported that the game supported them in knowledge recall, knowledge improvement, and knowledge application. Therefore, this could be considered a key dimension of the game evaluation. Table 7 presents the categories of this dimension.

Table 7. Categories and subcategories of the pedagogical aspect.

Knowledge Gain

Knowledge gain appears to be an important learning outcome of GRAPHIC. The comments from the participants on knowledge gain are presented in Table 8. Knowledge gain comprises knowledge recall, knowledge improvement and knowledge application.

Table 8. Student comments on knowledge gain.

Knowledge recall: Students thought the game helped them recall what they had learnt in DPH courses. Although students had encountered this topic in lectures before using the game, GRAPHIC enabled the recall of knowledge for fieldwork.

Knowledge improvement: Students reported that they gained new DPH knowledge, especially how to design oral-health promotion interventions in addition to the knowledge they had acquired in lectures.

Knowledge application: As GRAPHIC simulated an authentic learning environment, students believed that they could practice applying knowledge to a real community. This could be considered another strength of the game and would be impossible to achieve in lecture-based learning.

Learning Design Features

Several features of GRAPHIC were identified by participants as important in supporting them to achieve the learning outcomes associated with the game (Table 9).

Table 9. Student comments on learning design features.

Learning content: Students indicated that the diagram of social determinants of health [23] containing a variety of health initiatives in each category (which was used in the game) supported them in identifying available initiatives in DPH. In addition, the research evidence provided in the game was perceived as helpful because students could access related resources.

Feedback: Feedback in the game was another feature that students perceived as supportive when achieving learning outcomes. Students reported that the clues provided in the feedback enabled them to learn from failure. They also commented that timely feedback allowed them to modify their incorrect answers straight away. However, they commented that feedback could be even more specific to their answers rather than providing generic clues or hints.

Pre- and post-knowledge tests: Participants agreed that the tests were relevant to the learning outcomes. They explained that the pre-test helped them identify knowledge gaps so they could pay attention to what they needed to learn from the game.

3.4.4. Dimension 4: Interactive Functions

This dimension was related to the user experience of the game engine and included usability, level of engagement, level of difficulty, and visual elements (Table 10).

Table 10. Student comments on interactive functions.

Usability: Participants reported that the game was user-friendly and that they did not face any problems with the navigation.

Level of engagement: Level of engagement was important, as learners needed to be engaged with the game to achieve learning outcomes. Participants perceived the game as an engaging learning environment offering good opportunities for interaction. In addition, with immediate feedback, students could be guided by clues provided in the feedback rather than making random submissions.

Level of difficulty: Level of difficulty was important, as it could affect the level of engagement. The degree of difficulty had been set to be appropriate to the students’ level of study, and participants believed that GRAPHIC had a suitable level of difficulty. If the game was too simplistic, it could reduce the level of engagement; on the other hand, if it was too challenging, users might feel frustrated and give up before completing the game. They also thought that the clues provided after an unsuccessful submission were helpful.

Visual element (graphics): The use of images or diagrams also engaged users with the game. In addition, the colour and layout of the game interface were thought to be attractive. However, participants advised that this aspect could be further improved. For example, animations or images could be included in a pop-up box, when feedback was provided.

3.4.5. Dimension 5: Alignment

This aspect represents how well the other four dimensions are aligned to support students in achieving learning outcomes. Participants agreed that the order of activities was suitable and logical. The initial part of the game (the pre-knowledge test and the interactive presentation of the learning scenario) prepared students for the game task by motivating them to learn. Participants also believed that the introductory session was helpful, as they were able to ask for support when they faced any problems. They also recommended having a summary session, where the correct answers could be provided, so they could discuss them with their fellow students.

4. Discussion

This innovative serious game, the product of collaboration between two universities, demonstrated the potential for introducing dental students to managing population health activities. The game supported learner knowledge improvement, as evidenced by the pre- and post-knowledge assessments and student feedback. The evaluation of GRAPHIC in terms of knowledge improvement and level of engagement, followed by the enhancement of game usability and its implementation, is discussed below.

4.1. Knowledge Improvement

Knowledge improvement seems to be the most commonly assessed outcome when evaluating serious games [24,25,26,27]. GRAPHIC provided the opportunity to apply knowledge to a specific population through interaction within the game. The findings indicated that students improved their knowledge after game completion in both learning contexts, as evidenced by the data from the knowledge tests, the activity log function, and the semi-structured interviews. The findings are consistent with other studies regarding the use of serious games in healthcare education [28,29,30,31,32,33], where post-test scores were higher than pre-test scores. A serious game can provide an interactive asynchronous learning environment, where learners gain competence from their mistakes [10,34], which is one of the unique pedagogical features of game-based learning.

It could be argued that students performed better in the post-test because they might have recalled how they had answered the pre-test, in line with the test–retest memory effect. This issue was addressed by using a different sequencing of questions and answer options in the post-test, a technique used by Andreatta et al. [16] and Gavin [17]. In addition, neither correct answers nor test scores were provided to students after completing the pre-test, minimising such effects on knowledge assessment.

4.2. Level of Engagement

The level of engagement is fundamental in serious games, enabling them to be superior to traditional approaches [4,10]. According to Garris et al. [34], repetition of a game cycle leads to the achievement of learning outcomes; therefore, serious games need to be engaging enough to sustain users through these repetitions. Our findings demonstrated that GRAPHIC engaged learners because of its interactive format, especially when students had a chance to control a cartoon character walking around the simulated town. This feature provided a degree of freedom, as it allowed students to control the screen elements to find relevant information, instead of watching a slide presentation or reading a document, and thereby enhanced the level of engagement [35,36]. In addition, an animated character can be introduced in storytelling, which can provide engagement [37]. Within GRAPHIC, students could control a character to explore infrastructure and obtain information.

The level of difficulty could also affect the level of engagement of the game. There was also evidence that feedback with clues could enhance the flow of GRAPHIC, i.e., the appropriate balance between the game challenge and learner competence. The flow of serious games is necessary to engage and motivate users to complete the game task [34,38,39,40,41]. The flow in GRAPHIC supported students who could not complete the game challenge, matching learners’ skills and knowledge [42]. If serious games are too difficult, users can feel frustrated when they fail a task too many times. On the other hand, students can become disengaged from a game task if it is too simple [37]. In other words, there should be an appropriate level of challenge for users to complete a game task.

Visual and audio aspects need to be considered when designing a game. Both sounds and graphics have been found to be relevant for the enjoyment of serious games [21,43,44].

4.3. The Enhancement of Game Usability

Perceived ease of use is an important aspect to be considered under the technology-acceptance model [45]. In addition, usability is a crucial element when evaluating serious games [46], as poor usability could hinder students from achieving learning outcomes [47]. Most students from both dental schools thought that the user interface of GRAPHIC was easy to use and that they could navigate through the game without major problems. Gaming experience may affect the perceived usability of games [48]. As most responding students reported that they had video-game experience, they might have been familiar with navigating video-game interfaces. In addition, as the game was first used in a tutorial, students had the chance to obtain support from their tutors or fellow students when they had problems navigating the game. A guidance video on navigation was also available before interacting with the game, which was helpful for students who had less gaming experience. Tutorials or support on usability can help users feel more confident and comfortable when interacting with games [48]. Finally, there was a recommendation that a tutorial should be embedded into the game, so that students can learn how to navigate step by step while interacting with the game, instead of having a separate tutorial video.

4.4. Implementation of the Game

As outlined above, GRAPHIC was used as a formative learning tool with which students could learn how to apply the knowledge they had acquired from their previous DPH course, and it was considered appropriate for this purpose. Whilst other studies in dental education have compared the effectiveness of serious games with traditional or ‘passive’ learning approaches [29,32,33,49], this study evaluated the impact of a DPH serious game as a supplementary formative tool, used after DPH lectures, amongst students from two different learning contexts. Students need background knowledge from lectures or seminars, and a serious game can help them revise this knowledge; at the same time, the game can simulate authentic learning environments, where they can learn how to apply knowledge to real situations.

4.5. Strengths, Limitations and Further Research

This research focused on serious games such as GRAPHIC, a pedagogical approach built on game theories to deliver learning outcomes in DPH education. In addition, the game was evaluated using a novel evaluation framework drawn from the literature, which was considered suitable for serious games, especially when they are used in two learning contexts. Thus, it provided an opportunity to study the design and use of serious games in different contexts, showing that a serious game can be applied in more than one learning environment, with particular emphasis on the availability of resources and learner behaviours. A randomised controlled trial was not used in this study, since interaction with GRAPHIC was a requirement for students in both dental schools, and it was not feasible to separate students to compare different learning approaches. As the data retrieved from the game system were anonymised prior to data analysis, the gender and age of individual learners could not be identified. Further research could investigate whether these factors have an impact on learning from serious games. While the sequences and details of the DPH curriculum for undergraduate students differed between KCL and MU, their expected learning outcomes were comparable, covering oral epidemiology, oral-health promotion, and public-health administration. Since the students in this study were all in their final year, they were expected to have achieved all the learning outcomes in the DPH curriculum and thus to have equivalent learning profiles.

GRAPHIC has been shown to have academic significance in DPH education, and it would be beneficial to design serious games for other subjects in a dental curriculum, such as clinical dentistry, which would allow dental students to improve knowledge and skills in safe environments. However, each dental school may have different factors or conditions that affect the use of serious games. Despite positive attitudes, instructors might have insufficient skills in the implementation of serious games [50]. Access to computers and user readiness should also be considered, as technological performance is not just related to online communication or internet browsing but also to interactive tasks [51]. Consequently, it would be helpful to perform research in other settings to explore whether there are other factors to be considered when implementing a serious game.

5. Conclusions

This research supports the adoption of a serious game, GRAPHIC, to enhance the quality of dental public-health education amongst senior dental students. This research employed an evaluation framework consisting of serious-game dimensions (pedagogical aspect and interactive functions) and non-serious-game dimensions (learning settings and learner profile), as well as a clear alignment between these dimensions. The game contributed to the participants’ dental public-health education at both participating dental schools by improving knowledge and competencies in addition to enhancing engagement. Learning design attributes of serious games, including feedback mechanisms, were found to support the learning process. Overall, by creating a learning environment where learners can apply the knowledge they have gained, this research contributes insights to the continuing debate on the impact of serious games in healthcare education and, in particular, in dental public health.

Author Contributions

Conceptualization, K.S., S.H., P.A.R. and J.E.G.; methodology, K.S., S.H., P.A.R. and J.E.G.; validation, K.S., S.H., P.A.R. and J.E.G.; formal analysis, K.S.; investigation, K.S. and T.V.; resources, K.S., S.H., P.A.R. and J.E.G.; data curation, K.S.; writing–original draft preparation, K.S.; writing–review and editing, S.H., T.V., P.A.R. and J.E.G.; visualization, K.S., S.H., P.A.R. and J.E.G.; supervision, S.H., P.A.R. and J.E.G. All authors have read and agreed to the published version of the manuscript.

Funding

The initial development of GRAPHIC was supported by the King’s College London Teaching Fund, and the APC was funded by MDPI.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Biomedical Sciences, Dentistry, Medicine and Natural and Mathematical Sciences Research Ethics Subcommittee, College Research Ethics Committees, King’s College London, reference number: BDM/14/15-27 on 15 December 2014, and by the Faculty of Dentistry and the Faculty of Pharmacy, Mahidol University, Institutional Review Board, reference number MU-DT/PY-IRB 2015/002 on 19 January 2015.

Informed Consent Statement

Informed consent was obtained from all participants involved in the qualitative phase. However, for the quantitative phase, informed consent was waived, as GRAPHIC was used as a requirement for all participants in both dental schools.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author, upon reasonable request. The data are not publicly available due to information that could compromise the privacy of research participants.

Acknowledgments

The authors are very grateful to Margaret Whitehead for the permission to use the rainbow diagram of the social determinants of health as the main interface of the game. We also would like to thank all students who participated in this research project.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Breuer, J.; Bente, G. Why so serious? On the relation of serious games and learning. Eludamos. J. Comput. Game Cult. 2010, 4, 7–24.

- Davis, J.S. Games and students: Creating innovative professionals. Am. J. Bus. Educ. 2011, 4, 1–11.

- Zyda, M. From visual simulation to virtual reality to games. Computer 2005, 38, 25–32.

- Sipiyaruk, K.; Gallagher, J.E.; Hatzipanagos, S.; Reynolds, P.A. A rapid review of serious games: From healthcare education to dental education. Eur. J. Dent. Educ. 2018, 22, 243–257.

- Gallagher, J.E. Wanless: A public health knight. Securing good health for the whole population. Community Dent. Health 2005, 22, 66–70.

- Alqaderi, H.; Tavares, M.A.; Riedy, C. Residents’ Perspectives on and Application of Dental Public Health Competencies Using Case-Based Methods. J. Dent. Educ. 2019, 83, 1445–1451.

- Richards, E.; Simpson, V.; Aaltonen, P.; Krebs, L.; Davis, L. Public Health Nursing Student Home Visit Preparation: The Role of Simulation in Increasing Confidence. Home Healthc Now 2010, 28, 631–638.

- Lampiris, L.N.; White, A.; Sams, L.D.; White, T.; Weintraub, J.A. Enhancing Dental Students’ Understanding of Poverty Through Simulation. J. Dent. Educ. 2017, 81, 1053–1061.

- McCunney, D.; Davis, C.E.; White, B.A.; Howard, J. “Preparing students for what lies ahead”: Teaching dental public health leadership with simulated community partners. J. Appl. Res. High. Educ. 2019, 11, 559–573.

- Sipiyaruk, K.; Hatzipanagos, S.; Reynolds, P.A.; Gallagher, J.E. Serious Games and the COVID-19 Pandemic in Dental Education: An Integrative Review of the Literature. Computers 2021, 10, 42.

- Sipiyaruk, K.; Gallagher, J.E.; Hatzipanagos, S.; Reynolds, P.A. Acquiring critical thinking and decision-making skills: An evaluation of a serious game used by undergraduate dental students in dental public health. Technol. Knowl. Learn. 2017, 22, 209–218.

- Sipiyaruk, K.; Hatzipanagos, S.; Gallagher, J.E.; Reynolds, P.A. Knowledge Improvement of Dental Students in Thailand and UK through an Online Serious Game in Dental Public Health. In Serious Games, Interaction, and Simulation: 5th International Conference, SGAMES 2015, Novedrate, Italy, September 16–18, 2015, Revised Selected Papers; Vaz de Carvalho, C., Escudeiro, P., Coelho, A., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 80–85.

- Creswell, J.W. Research Design: Qualitative, Quantitative, and Mixed Methods Approaches, 3rd ed.; SAGE Publications: London, UK, 2009.

- Patton, M.Q. Qualitative Evaluation and Research Methods, 2nd ed.; SAGE Publications: Beverly Hills, CA, USA, 1990.

- Creswell, J.W. Qualitative Inquiry & Research Design: Choosing among Five Approaches, 3rd ed.; Sage Publications: London, UK, 2013.

- Andreatta, P.B.; Maslowski, E.; Petty, S.; Shim, W.; Marsh, M.; Hall, T.; Stern, S.; Frankel, J. Virtual reality triage training provides a viable solution for disaster-preparedness. Acad. Emerg. Med. 2010, 17, 870–876.

- Gavin, K.A. Assessing a Food Safety Training Program Incorporating Active Learning in Vegetable Production Using Kirkpatrick’s Four Level Model. Ph.D. Thesis, Montana State University, Bozeman, MT, USA, 2016.

- McGahee, T.W.; Ball, J. How to read and really use an item analysis. Nurse Educ. 2009, 34, 166–171.

- De Champlain, A.F. A primer on classical test theory and item response theory for assessments in medical education. Med. Educ. 2010, 44, 109–117.

- De Freitas, S.; Oliver, M. How can exploratory learning with games and simulations within the curriculum be most effectively evaluated? Comput. Educ. 2006, 46, 249–264.

- Mitgutsch, K.; Alvarado, N. Purposeful by design?: A serious game design assessment framework. In Proceedings of the International Conference on the Foundations of Digital Games, Raleigh, NC, USA, 29 May–1 June 2012; pp. 121–128.

- Ritchie, J.; Lewis, J.; Nicholls, C.M.; Ormston, R. Qualitative Research Practice: A Guide for Social Science Students and Researchers; Sage: London, UK, 2014.

- Dahlgren, G.; Whitehead, M. Tackling Inequalities in Health: What Can We Learn from What Has Been Tried? Working Paper Prepared for the King’s Fund International Seminar on Tackling Inequalities in Health; King’s Fund: Ditchley Park, Oxford, UK, 1993.

- Calderón, A.; Ruiz, M. A systematic literature review on serious games evaluation: An application to software project management. Comput. Educ. 2015, 87, 396–422.

- Petri, G.; Von Wangenheim, C.G. How to evaluate educational games: A systematic literature review. J. Univers. Comput. Sci. 2016, 22, 992–1021.

- Petri, G.; Von Wangenheim, C.G. How games for computing education are evaluated? A systematic literature review. Comput. Educ. 2017, 107, 68–90.

- Vlachopoulos, D.; Makri, A. The effect of games and simulations on higher education: A systematic literature review. Int. J. Educ. Technol. High. Educ. 2017, 14, 22.

- Duque, G.; Fung, S.; Mallet, L.; Posel, N.; Fleiszer, D. Learning while having fun: The use of video gaming to teach geriatric house calls to medical students. J. Am. Geriatr. Soc. 2008, 56, 1328–1332.

- Amer, R.S.; Denehy, G.E.; Cobb, D.S.; Dawson, D.V.; Cunningham-Ford, M.A.; Bergeron, C. Development and evaluation of an interactive dental video game to teach dentin bonding. J. Dent. Educ. 2011, 75, 823–831.

- Thompson, M.E.; Ford, R.; Webster, A. Effectiveness of interactive, online games in learning neuroscience and students’ perception of the games as learning tools: A pre-experimental study. J. Allied Health 2011, 40, 150–155.

- Duque, G.; Demontiero, O.; Whereat, S.; Gunawardene, P.; Leung, O.; Webster, P.; Sardinha, L.; Boersma, D.; Sharma, A. Evaluation of a blended learning model in geriatric medicine: A successful learning experience for medical students. Australas. J. Ageing 2013, 32, 103–109.

- Hannig, A.; Lemos, M.; Spreckelsen, C.; Ohnesorge-Radtke, U.; Rafai, N. Skills-O-Mat: Computer supported interactive motion- and game-based training in mixing alginate in dental education. J. Educ. Comput. Res. 2013, 48, 315–343.

- Dankbaar, M.E.W.; Richters, O.; Kalkman, C.J.; Prins, G.; Ten Cate, O.T.J.; Van Merrienboer, J.J.G.; Schuit, S.C.E. Comparative effectiveness of a serious game and an e-module to support patient safety knowledge and awareness. BioMed Cent. Med. Educ. 2017, 17, 30.

- Garris, R.; Ahlers, R.; Driskell, J.E. Games, motivation, and learning: A research and practice model. Simul. Gaming 2002, 33, 441–467.

- Malone, T.W.; Lepper, M.R. Making learning fun: A taxonomy of intrinsic motivations for learning. In Aptitude, Learning, and Instruction Volume 3: Conative and Affective Process Analyses; Snow, R.E., Farr, M.J., Eds.; Lawrence Erlbaum Associates: Mahwah, NJ, USA, 1987; pp. 223–253.

- Tennyson, R.D.; Jorczak, R.L. A conceptual framework for the empirical study of instructional games. In Computer Games and Team and Individual Learning; O’Neil, H.F., Perez, R.S., Eds.; Elsevier: Amsterdam, The Netherlands, 2008; pp. 3–20.

- Winn, B.M. The design, play, and experience framework. In Handbook of Research on Effective Electronic Gaming in Education; Ferdig, R.E., Ed.; IGI Global: London, UK, 2009; pp. 1010–1024.

- Chou, T.J.; Ting, C.C. The role of flow experience in cyber-game addiction. CyberPsychology Behav. 2003, 6, 663–675.

- Johnson, D.; Wiles, J. Effective affective user interface design in games. Ergonomics 2003, 46, 1332–1345.

- Chen, J. Flow in games (and everything else). Commun. Assoc. Comput. Mach. 2007, 50, 31–34.

- Cowley, B.; Charles, D.; Black, M.; Hickey, R. Toward an understanding of flow in video games. Comput. Entertain. 2008, 6, 1–27.

- Csikszentmihalyi, M. Flow: The Psychology of Optimal Performance; HarperCollins Publishers: New York, NY, USA, 1990.

- Amory, A.; Naicker, K.; Vincent, J.; Adams, C. The use of computer games as an educational tool: Identification of appropriate game types and game elements. Br. J. Educ. Technol. 1999, 30, 311–321.

- Amory, A. Game Object Model Version II: A theoretical framework for educational game development. Educ. Technol. Res. Dev. 2007, 55, 51–77.

- Davis, F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. Manag. Inf. Syst. Q. 1989, 13, 319–340.

- Sanchez, E. Key criteria for game design. A framework. In Business Game-Based Learning in Management Education; Baldissin, N., Bettiol, S., Magrin, S., Nonino, F., Eds.; Lulu.com: Morrisville, NC, USA, 2013; pp. 79–95.

- Law, E.L.C.; Sun, X. Evaluating user experience of adaptive digital educational games with Activity Theory. Int. J. Hum. Comput. Stud. 2012, 70, 478–497.

- Cornett, S. The usability of massively multiplayer online roleplaying games: Designing for new users. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Vienna, Austria, 24–29 April 2004; pp. 703–710.

- Marcondes, F.K.; Gavião, K.C.; Cardozo, L.T.; Moraes, A.B.A. Effect of educational games on knowledge acquisition in physiology. Fed. Am. Soc. Exp. Biol. J. 2016, 30, 776.11.

- Belda-Medina, J.; Calvo-Ferrer, J.R. Preservice Teachers’ Knowledge and Attitudes toward Digital-Game-Based Language Learning. Educ. Sci. 2022, 12, 182.

- Alontaga, J.V.Q. Internet Shop Users: Computer Practices and Its Relationship to E-Learning Readiness. Educ. Sci. 2018, 8, 46.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).