Abstract

In the framework of instrumental research, the adaptation of tests has become increasingly common. The aim of this paper is to explain the procedures followed to adapt a test measuring a construct of a cultural nature to another context, reflecting on the difficulties and limitations related to its equivalence and validity. To this end, we start from the Test on the Construction of Historical Knowledge, originally designed for a Spanish context and targeted at elementary school students, in order to design and validate its Portuguese version. The “emic-etic” adaptation process, which seeks the technical, semantic, conceptual, and metric equivalence of a test, was carried out through group translation followed by post-translation empirical procedures (expert judgement, a pilot study, and an external criterion). The process highlighted several issues closely linked to internal and external cultural factors. On the one hand, analytical-rational processes predominated in the adaptation, requiring deep reflection on the construct and on meanings intimately linked to the context. On the other hand, the empirical procedures faced difficulties due to the qualitative nature of the construct. This led us to reflect on whether the “emic” aspects of eminently cultural constructs can be minimized without renouncing reliable and valid results with respect to the construct.

1. Introduction

In contrast to empirical research, instrumental research focuses on designing instruments and analyzing the psychometric properties of tests [] in order to obtain the best possible measurement in each domain for each target population.

In the framework of this type of research, the adaptation of tests to other linguistic and cultural contexts has a long history that can be traced back to the work of Binet and Simon []. However, recent decades have seen a notable increase in test adaptation [], driven largely by the continuous exchange of people and ideas across the planet, the usefulness of applying results in different contexts, and the proliferation of comparative studies. Thus, test adaptation as a cross-cultural product [] is becoming increasingly popular and useful.

A key aspect of these decades of development is the progressive distinction between the translation and the adaptation of a test [,]. Adapting a test is much more than a linguistic issue; it involves cultural, conceptual, and metric elements [] that affect the equivalence between the original and the resulting test. The adaptation process requires attention to the internal and external validity of the instrument [], conceiving the process as flexible and going beyond literal translation.

The International Test Commission, in response to the proliferation of test adaptations, started a project in 1994 [,] to develop guidelines for test adaptation. The aim of these guidelines is that “the final product of the adaptation process should achieve the highest possible level of linguistic, cultural, conceptual and metric equivalence to the original test” [], p. 152. These guidelines are divided into different categories related to the process of test design and application: before development (analysis of the legal framework, design, and construct evaluation), during development (linguistic and cultural adaptation and pilot studies), confirmation (data collection, equivalence, reliability, and validation), application (administration), scoring and interpretation (interpretation of the results and comparability), and documentation (changes between versions and appropriate use). The adaptation process is, therefore, presented as a complex process, distant from the initial idea of translation.

In a context of growing recognition of cultural influences on test adaptation [,,,,], progress has been made in the methodological and technical procedures for establishing cross-cultural equivalence [,]. Several authors and institutions have recently offered guidelines for the translation and adaptation of tests [,,,,]. Each approach assumes that technical, semantic, and cultural equivalence must be sought when constructing a scale, because the meaning of terms can differ according to context. The search for conceptual equivalence [] implies recognizing that a concept may differ across cultural contexts and that equivalence in the meaning of an item is not enough to ensure equivalence in its conceptualization and, consequently, the equivalence of the scale. In the same vein, it implies accepting that some general features of tests that affect technical equivalence are themselves culturally dependent. One example is acquiescence, the tendency to agree with items regardless of their content, which directly affects item responses [,].

The results of studies on test adaptation ([,,,,,], to mention a few examples) support the same idea, documenting differences between cultures that, in extreme cases, render the scales invalid [,].

In education, cultural adaptations have become widespread, with large-scale assessments such as the Programme for International Student Assessment (PISA) and the Trends in International Mathematics and Science Study (TIMSS) among the most relevant examples. In addition to the influence that cultural factors exert on all tests and the intrinsic difficulties of measurement in education (the singularity of each school environment, the prevalence of a qualitative paradigm for certain constructs, etc.), some constructs are themselves closely bound to the cultural sphere. Epistemological theories on the construction of historical knowledge [,,] are a case in point, as they necessarily involve both first- and second-order content []. If we understand epistemological theories as an organized set of beliefs that assume a specific way of understanding knowledge, reflecting both its nature and its construction process [,], then notions of how historical knowledge is constructed (the second-order content, such as causality, the elaboration of evidence, or historical perspective) cannot be separated from the historical processes to which that construction is applied (the first-order or substantive content). Thus, factual events directly related to the historical culture in which they are embedded [,] shape the way the construction of historical knowledge is understood within that cultural context, and this influence becomes especially visible when the context changes.

Given a construct for which most research follows a qualitative approach [] and very few dedicated instruments are available for generalizing results [,,], we adapted the Test on the Construction of Historical Knowledge (CONCONHIS) [], originally designed for a Spanish context, to a Portuguese context.

The purpose of this paper is to explain the procedures followed to adapt a test measuring a construct of a cultural nature to another context, reflecting on the difficulties and limitations regarding its equivalence and validity. To this end, and in keeping with the singularity of the construct, we pay as much attention to the analytical-rational processes as to the empirical ones.

2. Original Test Design: Description of the Construct and of the Instrument

The CONCONHIS was designed to assess epistemological theories on the construction of historical knowledge, understood as beliefs about the nature and process of constructing historical knowledge. Its aim is to evaluate subjects’ conceptions of each of the operations involved in that process. To this end, its design is based on a construct composed of three dimensions, across which ten variables and twenty-one indicators are distributed.

The first dimension, inference making, includes epistemological theories relating to the choice of what is relevant and to the formation and evaluation of inferences. The second dimension, structuring operations, includes the formulation and evaluation of problems; the search for sources, their suitability, and their reading; the adoption of perspectives; the obtaining of evidence; the resolution and justification of questions; and the elaboration of discourses. The third dimension, alternative operations, involves variables related to theories about the observation of reality and its questioning; the search for alternatives; the retention and identification of perspectives; and the formulation of divergent questions about sources.

Based on these conceptual foundations, the CONCONHIS was designed as a standardized, objective, nonverbal test [,] consisting of 42 items (two for each indicator), distributed across Part I and Part II and validated for Spanish students aged twelve to fifteen. It can be administered individually or collectively and requires about 50 min to complete each part. Its items are multiple-choice questions with three answer choices, each corresponding to one of three levels of development for the indicator measured. Each item presents a situational question in which participants are asked how they perceive a particular historical procedure to be conducted in relation to a specific historical event; it includes a vignette-style image, which may or may not be essential for answering the item.
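The item structure just described (21 indicators, two items per indicator, three answer choices mapped to three levels of development) can be sketched as a small data structure. The following Python sketch is illustrative only: the scoring key, the names `Indicator`, `item_level`, and `indicator_score`, and the convention of summing the two item levels into an indicator score are hypothetical assumptions, not the published CONCONHIS scoring rules.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical convention: each answer choice maps to a development level
# from 1 (lowest) to 3 (highest). The real CONCONHIS key is not reproduced here.
LEVELS = (1, 2, 3)


@dataclass(frozen=True)
class Indicator:
    """One indicator of the construct, measured by exactly two items."""
    name: str
    item_ids: tuple[int, int]


def item_level(choice: str, key: dict[str, int]) -> int:
    """Return the development level associated with the chosen option."""
    level = key[choice]
    if level not in LEVELS:
        raise ValueError(f"unexpected level {level} for choice {choice!r}")
    return level


def indicator_score(
    indicator: Indicator,
    responses: dict[int, str],
    keys: dict[int, dict[str, int]],
) -> int:
    """Sum the levels of the indicator's two items (possible range: 2-6)."""
    return sum(item_level(responses[i], keys[i]) for i in indicator.item_ids)


# Hypothetical usage: the option order differs from item to item.
keys = {1: {"a": 1, "b": 2, "c": 3}, 2: {"a": 3, "b": 1, "c": 2}}
relevance = Indicator("choice of what is relevant", (1, 2))
print(indicator_score(relevance, {1: "c", 2: "a"}, keys))  # 6
```

Keeping the key per item, rather than assuming option "c" is always the highest level, mirrors the fact that each multiple-choice item can order its three levels differently.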

The CONCONHIS has undergone several procedures to establish and improve its validity and reliability, supporting its content validity (with the participation of 17 experts), construct validity (confirmatory factor analysis based on the responses of 222 subjects; CFI = 0.99; TLI = 0.99; RMSEA = 0.01; SRMR = 0.04), and internal consistency (α = 0.63), which is considered moderate given the nature of the construct [].
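For reference, Cronbach’s alpha compares the sum of the item variances with the variance of the total score: alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores), where k is the number of items. The Python sketch below assumes a complete respondents-by-items matrix with no missing responses; it illustrates the standard formula and is not the authors’ SPSS procedure.

```python
from statistics import pvariance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix of scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores)).
    Population variances are used throughout; the n / (n - 1) factor would
    cancel in the ratio, so sample variances give the same result.
    """
    k = len(scores[0])
    if k < 2:
        raise ValueError("alpha requires at least two items")
    columns = list(zip(*scores))  # one tuple of scores per item
    sum_item_var = sum(pvariance(col) for col in columns)
    total_var = pvariance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum_item_var / total_var)


# Toy data (not CONCONHIS data): three respondents, two items.
print(round(cronbach_alpha([[1, 2], [2, 1], [3, 3]]), 3))  # 0.667
```

Because alpha rises with the number of items and with their intercorrelation, a moderate value on a 42-item test suggests fairly heterogeneous items, which is in line with the multidimensional construct described above.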

3. The Adaptation Process of the Instrument: Technical, Semantic, and Conceptual Equivalence

The adaptation process conducted for the CONCONHIS can be defined as an emic-etic adaptation []. Accordingly, attention was paid both to the aspects that were common to the cultures involved (etic) (variables, general structure of the scale, items, etc.) and to the specific elements of the culture to which the test was to be adapted (emic)—in essence, to its social-historical context.

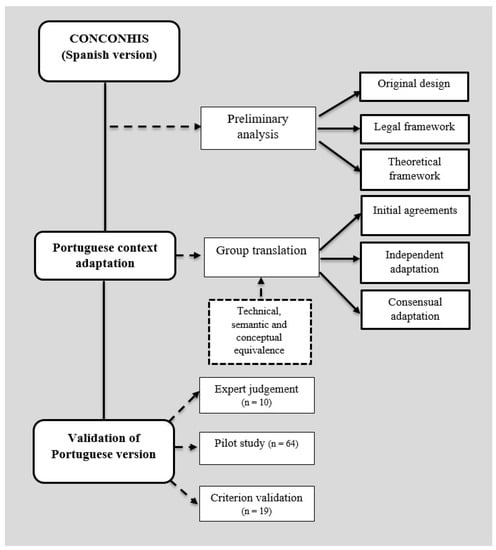

Figure 1 summarizes the analytical-rational processes followed in the search for technical, semantic, and conceptual equivalence [,], based on, among others, Gana et al. [].

Figure 1.

Analytical-rational and empirical processes of test adaptation.

First, following the International Test Commission guidelines for the stage prior to developing the adaptation [,], we analyzed the legal framework and the relevance of assessing the construct in the new culture. We concluded that curricular reforms in Spain and Portugal have been carried out in parallel and in the same direction [,]; that, in both contexts, textbooks remain the predominant didactic resource, which implies a gap between the approach to historical thinking captured by the instrument and school reality []; and that multiple research projects in the Portuguese context have produced results similar to those obtained in the Spanish context [,,,]. In addition, the original design was analyzed to identify linguistic and cultural features that were relevant or irrelevant to the adaptation.

On this basis, we developed an adaptation in which translation played an essential role. A back-translation process was initially considered [], but we finally opted for group translation. The group consisted of two researchers who were knowledgeable about the object of the research and familiar with the scientific approach [], as well as with both languages and cultures [,]. Specifically, each is a native speaker of one of the languages (Spanish or Portuguese) and has completed long-term research stays in the other country.

The group translation process comprised three distinct phases. In the first phase, carried out jointly, the variables, indicators, and items of the test were reviewed on the understanding that all of them should be retained in the new version in order to achieve technical equivalence. It was also agreed that, while maintaining the original structure as far as possible, some changes in terms or examples were acceptable to ensure semantic and conceptual equivalence. In the second phase, carried out individually, each researcher produced an independent adaptation of the test, taking into account the elements identified by Hambleton and Zenisky [] for translation quality control. Finally, in the third phase, a consensus version was reached by discussing the differences between the independent versions item by item.

Table 1 shows the main changes made to the items.

Table 1.

Comparison between the items in the Spanish and Portuguese versions.

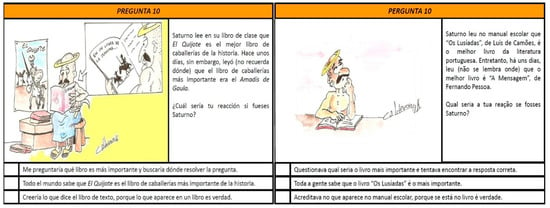

As can be seen, the modifications were mainly due to historical context, specifically to historical events of national significance whose meanings differed for Portuguese participants. Therefore, priority was given in the adaptation to finding a comparable historical event, both in terms of its meaning for the participant and in terms of its usefulness for measuring the operation to which the item referred. This was the case for items 10, 14, 23, 24, 34, and 42. The change made to item 12 can also be explained by context, although in this case it was related to the landscape or even the weather. Another type of modification concerned the linguistic level (items 22 and 38), moving away from literal translation to take into account connotative or historical aspects of the language. Notably, the modifications were not limited to the verbal statements; the illustrations also had to be altered. As can be seen in Figure 2, for items in which the illustrations were not essential for answering, neutral illustrations were chosen to facilitate future adaptations.

Figure 2.

Example of the adaptation of an item (item 10).

As a result, we obtained a 42-item Portuguese version of the test with linguistic and contextual adaptations, having sought technical, semantic, and conceptual equivalence between the versions throughout the translation process.

4. First Steps in Test Validity and the Search for Metric Equivalence

The challenge of adapting a test to another context is not limited to the stage at which the design is adapted and cultural issues are explicitly raised; it also includes a subsequent validation process, which is itself not free of cultural influences. In fact, the International Test Commission places pilot studies within the development stage and treats data collection, equivalence, reliability, and validation as components of test confirmation.

Along these lines, the adapted version of the test underwent three processes with the aim of seeking its validity and equivalence.

First, the CONCONHIS was examined by expert judges (n = 10) with a range of profiles (history, didactics, and methodology) and extensive teaching experience (M = 16.70 years, SD = 12.68) at university (70%) and non-university (30%) levels. The evaluation sheet included 29 items divided into three blocks: assessment of the dimensions, assessment of the items, and global assessment of the test. The judges rated each element on a scale from 1 (not at all adequate) to 4 (totally adequate), assessing the items for adequacy, relevance, and clarity and the dimensions for coherence, appropriateness, and sufficiency. In addition, they were asked to answer yes or no to the statement “I consider item X of the Spanish and Portuguese versions to be technically, conceptually, and semantically equivalent.” IBM SPSS Statistics (version 24.0.0) was used to calculate the mean, standard deviation, and frequency of the responses, and Kendall’s W test was applied to analyze the concordance between the mean ranks assigned by the judges.

In line with the objective of the present study, only some of the results are highlighted here. First, the global evaluation of the test yielded good results regarding the research objectives (M = 3.20, SD = 0.27), the target audience (M = 3.60, SD = 0.32), and applicability (M = 3.50, SD = 0.43), with an overall evaluation of M = 3.63 (SD = 0.36), close to totally adequate. Second, the mean scores for the dimensions were close to the maximum value for appropriateness (M = 3.97, SD = 0.07), coherence (M = 3.87, SD = 0.31), and sufficiency (M = 3.87, SD = 0.27), and they exceeded 3.50 for all the dimensions of the test (Table 2).

Table 2.

Scores given by the expert judges for the dimensions.

Third, in the assessment of the items’ adequacy (“I consider item X adequate”), relevance (“I consider item X relevant”), and clarity (“I consider item X clear”), all three exceeded a mean of 3.50 out of 4, with a standard deviation of less than 0.40 (Table 3).

Table 3.

Scores given by the expert judges for the items.

For adequacy, 77.27% of the items had a mean higher than 3.50, with only one item (item 1) scoring less than 3; for relevance, 88.63% of the items exceeded 3.50; and for clarity, 61.36% of the items reached 3.50.
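The percentages above are simple summaries of the judges’ ratings on the 1 to 4 scale. A minimal Python sketch of how such figures can be obtained, assuming a hypothetical mapping from item number to the list of ratings given by the judges; the `inclusive` flag reflects that the text uses both “higher than 3.50” and “reached 3.50”, and which convention applies to each criterion is an assumption.

```python
from statistics import mean, stdev


def summarize_ratings(
    ratings: dict[int, list[int]],
    threshold: float = 3.5,
    inclusive: bool = False,
) -> tuple[dict[int, tuple[float, float]], float]:
    """Summarize expert ratings (1-4) for one criterion, e.g. clarity.

    ratings maps a (hypothetical) item number to the ratings of all judges.
    Returns per-item (mean, SD) and the percentage of items whose mean is
    above the threshold (or at least equal to it when inclusive=True).
    """
    stats = {item: (mean(r), stdev(r)) for item, r in ratings.items()}
    if inclusive:
        hits = sum(1 for m, _ in stats.values() if m >= threshold)
    else:
        hits = sum(1 for m, _ in stats.values() if m > threshold)
    return stats, 100 * hits / len(stats)


# Toy ratings from four judges (not the study's data).
toy = {1: [4, 4, 4, 3], 2: [3, 3, 3, 3], 3: [4, 3, 4, 4], 4: [4, 3, 4, 3]}
print(summarize_ratings(toy)[1])                  # 50.0
print(summarize_ratings(toy, inclusive=True)[1])  # 75.0
```

The toy item with a mean of exactly 3.50 shows why the convention matters: it counts only under the inclusive reading.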

Six of the judges were considered to have sufficient linguistic and contextual proficiency to assess equivalence; all six judged every item to be technically, semantically, and conceptually equivalent (100% positive responses).

According to Kendall’s W test (W = 0.202, χ² = 239.648, df = 9), there was statistically significant agreement (p < 0.01) between the mean ranks assigned by the judges (J1 = 5.67, J2 = 3.85, J3 = 5.72, J4 = 6.27, J5 = 5.71, J6 = 5.30, J7 = 4.14, J8 = 5.63, J9 = 6.29, and J10 = 6.43), although the strength of the agreement was low.
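Kendall’s W rescales the dispersion of the rank sums to lie between 0 (no agreement) and 1 (perfect agreement), and its significance is usually tested with χ² = m(n − 1)W on n − 1 degrees of freedom, where m is the number of rankings and n the number of ranked objects. With n = 10 judges (df = 9), the reported W = 0.202 and χ² = 239.648 fit this relation for roughly m = 132 rankings. The Python sketch below implements the standard formula with the usual correction for tied ranks; the function names are ours, and it is not the SPSS implementation.

```python
from collections import Counter


def average_ranks(values: list[float]) -> list[float]:
    """Rank values from 1 to n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # positions are 0-based, ranks 1-based
        for pos in range(i, j + 1):
            ranks[order[pos]] = avg
        i = j + 1
    return ranks


def kendalls_w(rows: list[list[float]]) -> tuple[float, float, int]:
    """Kendall's W for m rows, each scoring the same n objects.

    Returns (W, chi_square, df), with chi_square = m * (n - 1) * W and
    df = n - 1. The denominator includes the standard tie correction.
    """
    m, n = len(rows), len(rows[0])
    ranked = [average_ranks(row) for row in rows]
    rank_sums = [sum(r[j] for r in ranked) for j in range(n)]
    mean_sum = m * (n + 1) / 2
    s = sum((rs - mean_sum) ** 2 for rs in rank_sums)
    # Sum of (t^3 - t) over every group of t tied values in every row.
    ties = sum(t**3 - t for row in rows for t in Counter(row).values())
    w = 12 * s / (m**2 * (n**3 - n) - m * ties)
    return w, m * (n - 1) * w, n - 1


print(kendalls_w([[1, 2, 3], [1, 2, 3]]))  # (1.0, 4.0, 2)
print(kendalls_w([[1, 2, 3], [3, 2, 1]]))  # (0.0, 0.0, 2)
```

Because the χ² statistic grows with m, a large number of rankings can make a weak W statistically significant, which is consistent with the significant but low agreement reported above.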

Second, a pilot study was carried out with 64 Portuguese eighth-grade students (13 to 14 years old; 26 boys and 38 girls). Using IBM SPSS Statistics (version 24.0.0) and Mplus, we calculated descriptive statistics, Cronbach’s α, and the difficulty (ease) and discrimination indices of the items, and conducted a confirmatory factor analysis. The small sample size did not allow an in-depth analysis of the psychometric characteristics of the test and the behavior of its items, and a detailed description of these procedures is in any case beyond the scope of this paper. The factor structure of the Spanish version (CFI = 0.99; TLI = 0.99; RMSEA = 0.01; SRMR = 0.04) was preserved, and the model fit indices were very similar (CFI = 0.99; TLI = 0.98; RMSEA = 0.02; SRMR = 0.04). Although the confirmatory factor analysis had to be conducted separately for the Portuguese version, we interpreted the results as suggesting metric equivalence, since the recognition of the same factor structure is one element supporting such equivalence [].
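The text does not define the item indices it used, so the following Python sketch adopts two common conventions as assumptions: for items scored at three levels, the difficulty (ease) index is the mean item score rescaled to the 0 to 1 range (higher values meaning easier items), and the discrimination index is the corrected item-total correlation, that is, the Pearson correlation between an item and the sum of the remaining items. With a pilot sample of 64, such estimates would be imprecise, which is consistent with the caution expressed above.

```python
from statistics import mean


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation; raises ZeroDivisionError if either is constant."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def item_indices(
    scores: list[list[int]], min_level: int = 1, max_level: int = 3
) -> list[tuple[float, float]]:
    """Return (difficulty, discrimination) for each column of scores.

    difficulty: mean item score rescaled to 0-1 (assumed convention).
    discrimination: correlation between the item and the rest score
    (total minus the item itself), i.e. the corrected item-total r.
    """
    results = []
    for j in range(len(scores[0])):
        item = [row[j] for row in scores]
        rest = [sum(row) - row[j] for row in scores]
        difficulty = (mean(item) - min_level) / (max_level - min_level)
        results.append((difficulty, pearson(item, rest)))
    return results


# Toy responses (not the pilot data): four students, three items.
toy = [[1, 1, 2], [2, 2, 1], [3, 3, 3], [3, 2, 3]]
for difficulty, discrimination in item_indices(toy):
    print(f"{difficulty:.2f} {discrimination:.2f}")
```

Excluding the item from the total avoids inflating the discrimination index, an effect that is most noticeable when a scale has few items.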

Third, we sought evidence of the criterion validity of the test, understood as the relationship between the scores obtained on the test and an external criterion measuring the same construct []. Specifically, we focused on concurrent validity [,], which applies when the criterion and test measures are obtained at approximately the same time. We took as a relevant, valid, and uncontaminated criterion [] each student’s assessment by a teacher with in-depth knowledge of the group and of the object of the research. The teacher rated the historical operations corresponding to each variable, using the same scales on which the test was designed and according to the level of development perceived for each student assessed (n = 19). The validity coefficient (Pearson’s correlation coefficient) between the test variables and the external criterion scores was calculated using IBM SPSS Statistics (version 24.0.0). The analysis revealed a high, positive, and statistically significant correlation between the total test score and the total external criterion score, with higher correlations for the variables of a methodical nature than for the more creative variables. Even so, bearing in mind that coefficients of 0.20 or greater are considered useful for predicting a criterion and that values greater than 0.60 are unusual in criterion validity studies [,], we interpreted the results as evidence of this type of validity.
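As a sketch of the computation behind the validity coefficient, the Python code below calculates Pearson’s r between paired test and criterion totals, the usual t statistic for testing r against zero with n − 2 degrees of freedom (17 for the 19 students assessed), and a label based on the 0.20 and 0.60 benchmarks cited above. The function names are hypothetical, the toy data are not the study’s data, and the p-value lookup is omitted to keep the sketch dependency-free.

```python
from math import copysign, inf, sqrt
from statistics import mean


def validity_coefficient(
    test: list[float], criterion: list[float]
) -> tuple[float, float, int]:
    """Pearson r between test and criterion scores, its t statistic
    for H0: rho = 0, and the degrees of freedom (n - 2)."""
    n = len(test)
    if n != len(criterion) or n < 3:
        raise ValueError("need paired scores for at least three students")
    mx, my = mean(test), mean(criterion)
    sxy = sum((x - mx) * (y - my) for x, y in zip(test, criterion))
    sxx = sum((x - mx) ** 2 for x in test)
    syy = sum((y - my) ** 2 for y in criterion)
    r = sxy / sqrt(sxx * syy)
    # t = r * sqrt(n - 2) / sqrt(1 - r^2); infinite when |r| == 1.
    t = r * sqrt(n - 2) / sqrt(1 - r * r) if abs(r) < 1 else copysign(inf, r)
    return r, t, n - 2


def benchmark(r: float, useful: float = 0.20, unusual: float = 0.60) -> str:
    """Classify |r| with the thresholds cited in the text."""
    if abs(r) > unusual:
        return "unusually high for criterion validity"
    if abs(r) >= useful:
        return "useful for predicting the criterion"
    return "below the usefulness threshold"


r, t, df = validity_coefficient([1, 2, 3, 4], [1, 3, 2, 4])
print(round(r, 2), round(t, 3), df, benchmark(r))
```

With only 19 pairs, a single atypical student can shift r noticeably, so the benchmark labels are best read as rough guides rather than cut-offs.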

Therefore, the analytical-rational and empirical processes involved in the Portuguese adaptation of the test allow us to speak of technical, semantic, and conceptual equivalence between the versions and point to preliminary evidence of metric equivalence.

5. Conclusions, Limitations, and Future Perspectives

In this paper, we set out to explain the procedures followed to adapt a test measuring a construct of a cultural nature to another context. The adaptation process, based on the guidelines of the International Test Commission [,] and in line with other adaptation studies (e.g., []), revealed the complexity of both the object of study and the process of adaptation to another culture. In this regard, several concluding reflections can be drawn that are relevant to the adaptation of this type of test.

Firstly, and always with the aim of finding the best measurements for each construct [], the analytical-rational processes were placed in the foreground. The adaptation process was thus understood as a complex, reasoned, and analytical procedure in which deep knowledge of the object of study was essential. In this sense, the analysis of the construct, its operationalization in items, and reflection on how cultural changes influence the conceptualization of the process of constructing historical knowledge proved decisive for the equivalence of the versions. Thus, in a context of test design where the empirical prevails over the analytical, where constructs are difficult to define through objective variables and items, and where contextual and cultural influence is inseparable from the construct itself, the process of adaptation and the search for equivalence between versions must be understood as a continuous, even cyclical, interaction between rational and empirical processes.

Secondly, and along the same lines, the difficulties of the empirical procedures were explained by the qualitative nature of the construct. We started from a test whose original version had moderate internal consistency. This, a priori, explains why any variation in the test made it difficult to maintain the validity and reliability indices of the instrument. It led us, once again, to rethink the construct, with the attendant risk of oversimplification or an excessive opportunity cost [] that could ultimately distort what we really wanted to measure. However, a genuine commitment to developing tests that allow the results of complex constructs to be generalized inevitably means accepting these problems and treating the challenge as a medium- or long-term one. It also means adopting flexible criteria with regard to metric equivalence.

Thirdly, the effect of culture on the test, both externally and internally, prompted a reflection that we consider relevant to the development of this type of test. On the one hand, the cultural element (in this case, more specifically, the historical events) may influence the level of development of the epistemological theories related to each historical operation; on the other hand, including events of special significance for the students in the items requires a specific adaptation for each context. This led us to ask to what extent it is possible to construct neutral tests, removed from national history, that measure epistemological theories related to the process rather than to the fact. Is it possible to minimize the emic aspects of eminently cultural constructs without renouncing reliable and valid results with respect to the construct? In this sense, the adaptation carried out facilitated the elaboration of later versions, especially regarding the illustrations, although it still relied on episodes of Portuguese national history in the search for the greatest equivalence. In any case, we believe that this remains a point for reflection, debate, and research.

Finally, to sum up, the scale proved valid in another culture, in contrast to the results of other studies []. This claim must be qualified by some limitations. Firstly, its validity was established for culturally close contexts. Considering other cultural contexts, within or outside a Western framework, would entail added difficulties and require further studies. Secondly, the adaptation of the test is still partial: while technical, semantic, and conceptual equivalence can be claimed, the evidence for metric equivalence should be understood only as an indication. Larger studies with more participants are therefore needed to allow an exhaustive analysis of the equivalence of the model and its factor structure, as well as of the psychometric characteristics of the test and its items (e.g., discrimination and difficulty indices).

Despite the above, we consider this version to be valid and the process carried out to be useful for reflection and decisions regarding the adaptation of tests to other cultures. In this sense, the greater the complexity and the more qualitative and cultural the nature of the construct, the more necessary it is to approach the adaptation process through reflection and analysis, rather than as a mechanical procedure.

Author Contributions

Conceptualization, A.I.P.G.; methodology, A.I.P.G. and F.J.S.P.; software, A.I.P.G.; formal analysis, A.I.P.G. and F.J.S.P.; investigation, A.I.P.G.; original draft preparation, A.I.P.G.; writing—review and editing, A.I.P.G. and F.J.S.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Fundación Séneca, Agencia de Ciencia y Tecnología de la Región de Murcia, grant number 19811/FPI/15, and Spanish Ministry of Economy and Competitiveness, grant number EDU2015-65621-C3-2-R.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Ethics Committee of University of Murcia.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ato, M.; López, J.; Benavente, A. Un sistema de clasificación de los diseños de investigación en psicología. Ann. Psychol. 2013, 29, 1038–1058. [Google Scholar] [CrossRef]

- Binet, A.; Simon, T. Méthodes nouvelles pour le diagnostic du niveau intellectuel des anormaux. L’Année Psychol. 1904, 11, 191–244. [Google Scholar] [CrossRef]

- Muñiz, J.; Elosua, P.; Hambleton, R.K. Directrices para la traducción y adaptación de los tests: Segunda edición. Psicothema 2013, 25, 151–157. [Google Scholar] [CrossRef] [PubMed]

- Syed, M.S.; Shamsul, B.M.T.; Rahman, R. The efficacy of a quantitative cross cultural product design survey instrument. Adv. Environ. Biol. 2014, 8, 207–212. [Google Scholar]

- Geisinger, K.F. Cross-Cultural Normative Assessment: Translation and Adaptation Issues Influencing the Normative Interpretation of Assessment Instruments. Psychol. Assess. 1994, 6, 304–312. [Google Scholar] [CrossRef]

- Haug, T.; Mann, W. Adapting tests of sign language assessment for other sign languages. A review of linguistic, cultural, and psychometric problems. J. Deaf Stud. Deaf. Educ. 2008, 13, 138–147. [Google Scholar] [CrossRef]

- Hambleton, R.K. Guidelines for adapting educational and psychological tests: A progress report. Eur. J. Psychol. Assess. 1994, 10, 229–244. [Google Scholar] [CrossRef]

- Muñiz, J.; Hambleton, R.K. Directrices para la traducción y adaptación de tests. Papel. Psic. 1996, 66, 63–70. [Google Scholar]

- Beaton, D.E.; Bombardier, C.; Guillemin, F.; Ferraz, M.B. Guidelines for the process of cross-cultural adaptation of self-report measures. Spine 2000, 25, 3186–3191. [Google Scholar] [CrossRef]

- Callegaro, J.; Figueiredo, B.; Ruschel, D. Cross-cultural adaptation and validation of psychological instruments: Some considerations. Paidéia 2012, 22, 423–432. [Google Scholar] [CrossRef]

- Hambleton, R.K. Issues, designs, and technical guidelines for adapting tests into multiple languages and cultures. In Adapting Educational and Psychological Tests for Cross-Cultural Assessment; Hambleton, R.K., Merenda, P.F., Spielberger, C.D., Eds.; Lawrence Erlbaum: Mahwah, NJ, USA, 2005; pp. 3–38. [Google Scholar]

- Sireci, S.G.; Yang, Y.; Harter, J.; Ehrlich, E. Evaluating guidelines for test adaptations: A methodological analysis of translation quality. J. Cross-Cult. Psychol. 2006, 37, 557–567. [Google Scholar] [CrossRef]

- Byrne, B.M. Testing for multigroup equivalence of a measuring instrument: A walk through the process. Psicothema 2008, 20, 872–882. [Google Scholar] [PubMed]

- Elosua, P. Evaluación progresiva de la invarianza factorial entre las versiones original y adaptada de una escala de autoconcepto. Psicothema 2005, 17, 356–362. [Google Scholar]

- International Test Commission (ITC). ITC Guidelines for Translating and Adapting Tests (Second Edition). Int. J. Test. 2018, 18, 101–134. [Google Scholar] [CrossRef]

- Yasir, A. Cross Cultural Adaptation & Psychometric Validation of Instruments: Step-wise Description. Int. J. Psychiatry 2016, 1, 1–4. [Google Scholar] [CrossRef]

- Daouk-Öyry, L.; Zeinoun, P. Testing across cultures: Translation, adaptation and indigenous test development. In Psychometric Testing: Critical Perspectives; Cripps, B., Ed.; John Wiley & Sons: New York, NY, USA, 2017; pp. 221–233. [Google Scholar]

- Gana, K.; Boudouda, N.E.; Ben, S.; Calcagni, N.; Broc, G. Adaptation transculturelle de tests et échelles de mesure psychologiques: Guide pratique basé sur les Recommandations de la Commission Internationale des Tests et les Standards de pratique du testing de l’APA. Prat. Psychol. 2021, 27, 223–240. [Google Scholar] [CrossRef]

- Khan, G.; Mirza, N.; Waheed, W. Developing guidelines for the translation and cultural adaptation of the Montreal Cognitive Assessment: Scoping review and qualitative synthesis. BJPsych Open 2022, 8, 1–17. [Google Scholar] [CrossRef]

- Gaite, L.; Ramírez, N.; Herrera, S.; Vázquez-Barquero, J.L. Traducción y adaptación transcultural de instrumentos de evaluación en psiquiatría: Aspectos metodológicos. Arch. Neurobiol. 1997, 60, 91–111. [Google Scholar]

- Marín, G.; Gamba, R.; Marín, B. Extreme response style and acquiescence among hispanics. The role of acculturation and education. J. Cross-Cult. Psych. 1992, 23, 498–509. [Google Scholar] [CrossRef]

- Smith, P. Acquiescent response bias as an aspect of cultural communication style. J. Cross-Cult. Psychol. 2004, 35, 50–61. [Google Scholar] [CrossRef]

- Cernas, D.A.; Mercado, P.; León, F. Satisfacción laboral y compromiso organizacional: Prueba de equivalencia de medición entre México y Estados Unidos. Contaduría Adm. 2018, 63, 1–23. [Google Scholar] [CrossRef]

- Coo, S.; Aldoney, D.; Mira, A.; López, M. Cultural adaptation of the Spanish version of the perceptions of play scale. J. Child Fam. Stud. 2020, 29, 1212–1219. [Google Scholar] [CrossRef]

- Jordan, A.N.; Anning, C.; Wilkes, L.; Ball, C.; Pamphilon, N.; Clark, C.E.; Bellenger, N.G.; Shore, A.C.; Sharp, A.S.P.; Valderas, J.M. Cross-cultural adaptation of the Spanish MINICHAL instrument into English for use in the United Kingdom. Health Qual. Life Outcomes 2022, 20. [Google Scholar] [CrossRef] [PubMed]

- Pilatti, A.; Godoy, J.C.; Brussino, S.A. Adaptación de instrumentos entre culturas: Ejemplos de procedimientos seguidos para medir las expectativas hacia el alcohol en el ámbito argentino. Trastor. Adict. 2012, 14, 58–64. [Google Scholar] [CrossRef]

- Swami, V.; Barron, D. Translation and validation of body image instruments: Challenges, good practice guidelines, and reporting recommendations for test adaptation. Body Image 2019, 31, 191–197. [Google Scholar] [CrossRef] [PubMed]

- Mathis, C.; Parkes, R. Historical thinking, epistemic cognition and history teacher education. In Palgrave Handbook of History and Social Studies Education; Berg, C., Christou, T., Eds.; Palgrave Macmillan: London, UK, 2020; pp. 189–212. [Google Scholar]

- Miguel-Revilla, D.; Carril-Merino, T.; Sánchez-Agustí, M. An Examination of Epistemic Beliefs about History in Initial Teacher Training: A Comparative Analysis between Primary and Secondary Education Prospective Teachers. J. Exp. Educ. 2021, 89, 54–73. [Google Scholar] [CrossRef]

- Wiley, J.; Griffin, T.D.; Steffens, B.; Britt, M.A. Epistemic beliefs about the value of integrating information across multiple documents in history. Learn. Instr. 2020, 65, 101266. [Google Scholar] [CrossRef]

- Peck, C.; Seixas, P. Benchmarks of Historical Thinking: First Steps. Can. J. Educ. 2008, 31, 1015–1038. [Google Scholar] [CrossRef]

- Hofer, B.K. Epistemological understanding as a metacognitive process: Thinking aloud during online searching. Educ. Psychol. 2004, 39, 43–55. [Google Scholar] [CrossRef]

- Schommer-Aikins, M.; Beuchat-Reichardt, M.; Hernández Pina, F. Creencias epistemológicas y de aprendizaje en la formación inicial de profesores. Ann. Psychol. 2012, 28, 465–474. [Google Scholar] [CrossRef]

- Grever, M.; Adriaansen, R.J. Historical culture: A concept revisited. In Palgrave Handbook of Research in Historical Culture and Education; Carretero, M., Berger, S., Grever, M., Eds.; Palgrave Macmillan: London, UK, 2017; pp. 73–90. [Google Scholar]

- Rüsen, J. ¿Qué es la Cultura Histórica? Reflexiones Sobre una Nueva Manera de Abordar la Historia. 1994. Available online: http://www.culturahistorica.es/ (accessed on 17 April 2022).

- Kropman, M.; Van Drie, J.; Van Boxtel, C. Multiperspectivity in the history classroom: The role of narrative and metaphor. In Narrative and Metaphor in Education: Look Both Ways; Hanne, M., Kaal, A.A., Eds.; Routledge: London, UK, 2019; pp. 63–75. [Google Scholar]

- Maggioni, L.; VanSledright, B.; Alexander, P.A. Walking on the Borders: A Measure of Epistemic Cognition in History. J. Exp. Educ. 2009, 77, 187–214. [Google Scholar] [CrossRef]

- Seixas, P.; Gibson, L.; Ercikan, K. A design process for assessing historical thinking: The case of a one-hour test. In New Directions in Assessing Historical Thinking; Ercikan, K., Seixas, P., Eds.; Routledge: New York, NY, USA, 2015; pp. 102–106. [Google Scholar]

- Smith, M.; Breakstone, J.; Wineburg, S. History Assessments of Thinking: A Validity Study. Cogn. Instr. 2018, 37, 118–144. [Google Scholar] [CrossRef]

- Ponce, A.I. Teorías Epistemológicas y Conocimiento Histórico del Alumnado: Diseño y Validación de una Prueba. Ph.D. Thesis, University of Murcia, Murcia, Spain and University of Porto, Porto, Portugal, 2019. [Google Scholar]

- Aiken, L.R. Test Psicológicos y Evaluación; Pearson Educación: Madrid, Spain, 2003. [Google Scholar]

- Mateo, J.; Martínez, F. Medición y Evaluación; La Muralla: Madrid, Spain, 2008. [Google Scholar]

- Taber, K.S. The use of Cronbach’s Alpha when developing and reporting research instruments in science education. Res. Sci. Educ. 2018, 48, 1273–1296. [Google Scholar] [CrossRef]

- Sartorius, N.; Kuyken, W. Translation of health status instruments. In Quality of Life Assessment: International Perspectives; Orley, J., Kuyken, W., Eds.; Springer: New York, NY, USA, 1994; pp. 1–18. [Google Scholar]

- Hernández, A.; Hidalgo, M.D.; Hambleton, R.K.; Gómez-Benito, J. International Test Commission guidelines for test adaptation: A criterion checklist. Psicothema 2020, 32, 390–398. [Google Scholar] [CrossRef] [PubMed]

- Pinto, H.; Molina, S. La educación patrimonial en los currículos de ciencias sociales en España y Portugal. Educ. Siglo XXI 2015, 33, 103–128. [Google Scholar] [CrossRef]

- Santisteban, A.; Gomes, A.; Sant, E. El currículum de historia en Inglaterra, Portugal y España: Contextos diferentes y problemas comunes. Educ. Rev. 2021, 37. [Google Scholar] [CrossRef]

- Rodríguez-Pérez, R.A.; Solé, G. Los manuales escolares de Historia en España y Portugal. Reflexiones sobre su uso en Educación Primaria y Secundaria. Arbor 2018, 194, 788. [Google Scholar] [CrossRef]

- Alves, L.A. Educação histórica e formação inicial de professores. In Vinte Anos das Jornadas Internacionais de Educação Histórica; Alves, L.A., Gago, M., Lagarto, M., Eds.; CITCEM: Porto, Portugal, 2021; pp. 127–140. [Google Scholar]

- Barca, I. Educação histórica: Desafios epistemológicos para o ensino e a aprendizagem da história. In Diálogo(s), Epistemologia(s) e Educação Histórica: Um Primeiro Olhar; Alves, L.A., Gago, M., Eds.; CITCEM: Porto, Portugal, 2021; pp. 59–69. [Google Scholar]

- Gago, M. Consciência Histórica e Narrativa na Aula de História; CITCEM: Porto, Portugal, 2018. [Google Scholar]

- Pinto, H. Educação Histórica e Patrimonial: Conceções de Alunos e Professores Sobre o Passado em Espaços do Presente; CITCEM: Porto, Portugal, 2016. [Google Scholar]

- Bundgaard, K.; Brøgger, M.N. Who is the back translator? An integrative literature review of back translator descriptions in cross-cultural adaptation of research instruments. Perspect. Stud. Transl. Theory Pract. 2019, 27, 833–845. [Google Scholar] [CrossRef]

- Cassepp-Borges, V.; Balbinotti, M.A.A.; Teodoro, M.L.M. Tradução e validação de conteúdo: Uma proposta para a adaptação de instrumentos. In Instrumentação Psicológica: Fundamentos e Práticas; Pasquali, L., Ed.; Artmed: Porto Alegre, Brazil, 2010; pp. 506–520. [Google Scholar]

- Hambleton, R.K.; Zenisky, A.L. Translating and adapting tests for cross-cultural assessments. In Cross-Cultural Research Methods in Psychology; Matsumoto, D., Van de Vijver, F.J.R., Eds.; Cambridge University Press: Cambridge, UK, 2011; pp. 46–70. [Google Scholar]

- Pedrero, V.; Manzi, J. Un instrumento de medición y diferentes grupos: ¿cuándo podemos hacer comparaciones válidas? Rev. Méd. Chile 2020, 148, 1518–1519. [Google Scholar] [CrossRef]

- Abad, F.J.; Olea, J.; Ponsoda, V.; García, C. Medición en Ciencias Sociales y de la Salud; Síntesis: Madrid, Spain, 2011. [Google Scholar]

- Cohen, R.J.; Swerdlik, M.E. Psychological Testing and Assessment: An Introduction to Tests and Measurement; McGraw-Hill: New York, NY, USA, 2009. [Google Scholar]

- Reich, G.A. Imperfect models, imperfect conclusions: An exploratory study of multiple-choice tests and historical knowledge. J. Soc. Stud. Res. 2013, 37, 3–16. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).