1. Introduction

In modern science- and technology-based societies, competencies that allow citizens to reason scientifically play a key role in science- and technology-based careers as well as in democratic co-determination (e.g., [1]). Most challenges that societies face (e.g., climate change, food and energy supply, or, more recently, the COVID-19 pandemic), as well as many questions that we encounter as individuals in our everyday lives (e.g., vaccination, nutrition, electric mobility), are strongly related to science. Therefore, participating in the public discourse on societal challenges and making informed decisions in one’s own life require not only a basic understanding of science-based concepts but also an understanding of the ways in which scientists think and reason. Developing scientific reasoning competencies is, hence, considered an important goal of science education in many countries around the globe (e.g., [2,3]).

The terms “scientific reasoning” and “scientific reasoning competencies” are not consistently defined in the literature. In this editorial, we will, therefore, first analyze existing definitions of scientific reasoning in science education and outline similarities and differences between them. On the basis of this analysis, we will argue that it is important to adopt a fine-grained perspective that accounts for the specific abilities, as well as the corresponding knowledge, usually included under the umbrella term “scientific reasoning”. Second, we will illustrate the potential benefits of conceptualizing scientific reasoning as a competency rather than merely as abilities and/or knowledge. Third, we will use these theoretical considerations to provide a structured overview of the contributions in this Special Issue.

2. Definitions of Scientific Reasoning

Scientific reasoning is typically conceptualized as a complex construct that encompasses a wide range of abilities as well as corresponding knowledge; clear definitions, however, have seldom been proposed. On the basis of the notion of competency (see below), Mathesius et al. [4] defined scientific reasoning as the “competencies that are needed to understand the processes through which scientific knowledge is acquired” (p. 94). This definition overlaps substantially with related goals of science education, such as scientific inquiry or scientific thinking, as these terms often refer to similar abilities and knowledge. These abilities, which are typically summarized under the term “scientific reasoning”, comprise identifying scientific problems, developing questions and hypotheses, categorizing and classifying entities, engaging in probabilistic reasoning, generating evidence through modeling or experimentation, and communicating, evaluating, and scrutinizing claims (e.g., [5,6,7,8]). The corresponding knowledge, that is, the knowledge necessary to reason scientifically in a given context, comprises content knowledge, procedural knowledge, and epistemic knowledge.

Content knowledge about science concepts (e.g., theories, laws, definitions) refers to knowledge about the objects that science reasons with and about [9]. This kind of knowledge is necessary as a basis for scientific reasoning in a specific context (“knowing what”; [8]). For instance, to develop meaningful scientific hypotheses about the variables that impact the efficiency of a solar panel, students need a sufficient conceptual understanding of the variables that are potentially relevant in that context (e.g., voltage, current, electric energy, inclination, charge separation).

Procedural knowledge refers to knowledge about the rules, practices, and strategies on which the processes of scientific reasoning are based (“knowing how”; [8]). In the example given above, students require not only knowledge about science concepts but also knowledge of the rules for formulating a suitable scientific hypothesis (e.g., hypotheses should be as precise as possible; hypotheses have to be falsifiable; see [10,11,12]). In addition to content and procedural knowledge, students should also have an epistemic understanding of why scientific reasoning (or a specific part of it, e.g., developing a scientific hypothesis) is important and how it contributes to building reliable scientific knowledge (“knowing why”; [8,12]). Such epistemic knowledge is not directly necessary to develop a well-formulated and falsifiable scientific hypothesis, but it is essential for ensuring that formulating hypotheses is more than rote performance [8,13].

Early and seminal descriptions of scientific reasoning (or of similar constructs such as logical thinking or scientific thinking) already appeared in the work of the developmental psychologists Inhelder and Piaget [14] on the stages of human thinking. In their work, evaluating claims based on observations was, for instance, part of the highest cognitive stage (formal operational reasoning). This conception, however, implied a single cognitive ability rather than the multidimensional nature of reasoning stressed by later approaches.

In the late 1980s, Klahr and colleagues proposed the highly influential model of scientific discovery as dual search (SDDS) as a way to capture scientific reasoning (e.g., [15,16]). In the SDDS model, scientific reasoning is conceptualized as a search in two problem spaces: the hypothesis space and the experiment space. On the basis of this “dual search”, Klahr and colleagues distinguished between the scientific reasoning processes of hypothesis generation (“search hypothesis”), experimental design (“test hypothesis”), and hypothesis evaluation (“evaluate evidence”; [16], p. 33). The SDDS model positions scientific reasoning within the context of problem solving, which, in retrospect, can be seen as a link to the notion of competency (see below). Additionally, it extends the idea of a single cognitive ability (see [14]) to a differentiation between multiple distinct abilities. The SDDS model has been adopted in a number of studies with backgrounds in psychology and science education (e.g., [17,18,19]), and its three-phase structure is still a prominent way of modeling scientific reasoning in science education [17].

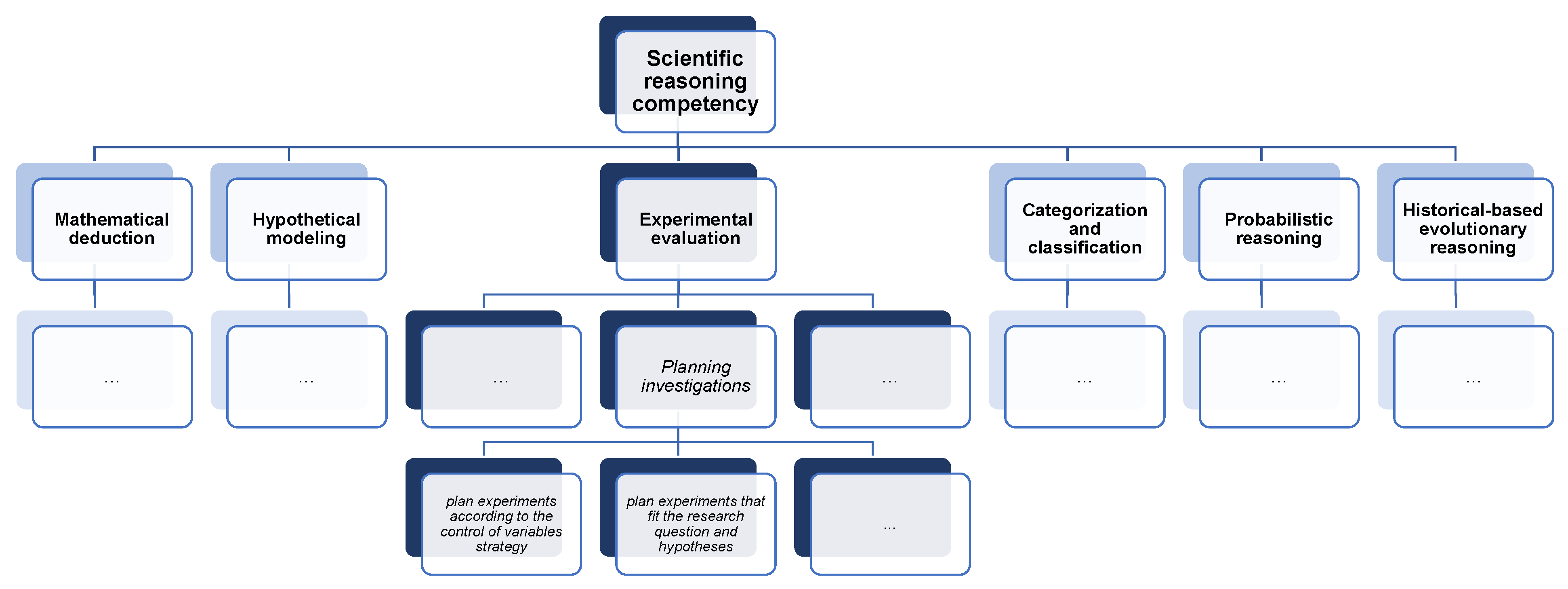

More recent approaches have produced a diversity of conceptualizations, particularly by either broadening what is considered to be scientific reasoning beyond the realm of experimental hypothesis testing (aligned horizontally in Figure 1) or differentiating between more or different sub-processes of scientific reasoning within that realm (aligned vertically in Figure 1). The former can be observed, for instance, in the work of Cullinane, Erduran, and Wooding [20], who investigated not only experimental hypothesis testing (manipulative methods) but also non-manipulative approaches. Another example is the work of Kind and Osborne [9], who proposed six styles of reasoning (mathematical deduction, experimental evaluation, hypothetical modeling, categorization and classification, probabilistic reasoning, and historical-based evolutionary reasoning). In this framework, the SDDS model and related descriptions of scientific reasoning would probably represent only a more detailed description of the experimental evaluation style, while all other styles go beyond what is typically understood as scientific reasoning. An example of the latter type of conceptualization is the work by Krüger et al. [21], who distinguished four process-related dimensions (called “sub-competencies”) of conducting scientific investigations: formulating questions, generating hypotheses, planning investigations, and analyzing data and drawing conclusions. White and Frederiksen [22] went a step further, distinguishing six sub-processes: questioning, hypothesizing, investigating, analyzing, modeling, and evaluating.

In sum, most of the more recent conceptualizations of scientific reasoning competencies share the assumption that there are several sub-processes that can be modeled as separate dimensions of scientific reasoning (Figure 1). However, as outlined above, the ways in which these dimensions are shaped vary considerably. To capture and systematize the (increasing) variance in conceptualizations of (and research on) scientific reasoning (competencies), three aspects have been identified in which conceptualizations of scientific reasoning competencies may differ: “(a) the skills they include, (b) whether there is a general, uniform scientific reasoning ability or, rather, more differentiated dimensions of scientific reasoning, and (c) whether they assume scientific reasoning to be domain-general or domain-specific” [23] (p. 80). While these aspects are certainly very helpful in capturing the variance in conceptualizations of scientific reasoning, we argue that there is even more to be discovered beneath the surface.

3. Grain Sizes: A Source of Relevant but Seldom Addressed Variance

Empirical research that focuses on scientific reasoning typically describes the addressed competency as well as the corresponding outcomes with a rather large “grain size”. Most studies, for instance, offer a clear conceptual description of the addressed competency (the larger grain), while the specific abilities as well as the corresponding procedural and epistemic knowledge (i.e., the smaller grains that “make up” these competencies) often remain unclear (see discussion in [24]). Therefore, different conceptualizations of scientific reasoning may seem similar regarding the competency or sub-competencies they comprise but may still address different abilities and/or different content, procedural, and epistemic knowledge associated with them [24]. For example, the competency of “planning scientific investigations” may include the ability to (a) “plan investigations according to the control of variables strategy”, (b) “plan investigations considering repeated measurements”, (c) “plan investigations that fit the research question and hypotheses”, or a combination of these abilities (Figure 1). All of these abilities clearly belong to the competency of “planning scientific investigations”; however, the choice of abilities addressed in an assessment or an instructional intervention has considerable consequences for the tasks students work on and the knowledge they need to solve them and, hence, may significantly affect the results [24]. For instance, (a) requires procedural knowledge of the control of variables strategy and of terminology such as dependent and independent variables, as well as epistemic knowledge about the function of controlling variables. In contrast, (b) requires procedural knowledge of strategies for determining the appropriate number of repetitions of measurement procedures as well as epistemic knowledge about why repeating measurements is important.

This example illustrates that the results of studies that have adopted one specific conceptualization of a scientific reasoning competency (i.e., the larger grain) may vary considerably depending on which abilities and corresponding knowledge (i.e., smaller grains) are considered to be part of that specific conceptualization. Differences at a fine-grained level between the conceptualizations of scientific reasoning adopted in various studies are not a problem per se. Given that the construct of scientific reasoning is manifold, it is often even necessary to focus on a few specific abilities and the corresponding knowledge. However, without a fine-grained description of the chosen conceptualization, these differences cannot be properly accounted for. This point is also emphasized by Shavelson [25], who argues that a clear construct definition is necessary to develop authentic assessments that are capable of holistically assessing all (or as many as possible) of the components of a given competency (holistic approach to competency assessment). From a more analytical point of view, it has been proposed that all skills of a given competency need to be clearly defined so that tasks (i.e., indicators) can be developed for each of them [26].

Aside from improving the comparability between studies, a more fine-grained perspective may have additional positive effects, for instance, regarding the communication and application of empirical findings. Empirical studies often report aggregated measures (larger grains), for example, in the form of a single (i.e., global) measure of students’ scientific reasoning competency, their procedural understanding of the control of variables strategy, or their epistemic understanding (e.g., naïve vs. sophisticated). This grain size is sufficient when the goal of a study is, for example, to investigate the effectiveness of a specific instructional intervention or to obtain system-monitoring data. However, goals such as designing instruction that matches students’ current understanding and specific learning needs require more detailed insights. From an educational point of view, knowing which procedural and epistemic knowledge students have and which they lack, and which abilities they have already mastered and which they have not, is of vital importance for designing instructional interventions.

We argue that research would profit from examining and reporting scientific reasoning from more fine-grained perspectives in addition to the rather global grain sizes typically reported. Linking these fine-grained and global perspectives would make it possible to compare and even to coordinate research approaches. Additionally, research on the development of students’ scientific reasoning competencies and the corresponding learning processes, a key challenge for science education research on scientific reasoning competencies, would benefit from linking fine-grained and global perspectives rather than adopting only one of them.

4. What the Notion of Competency Implies for Research on Scientific Reasoning in Science Education

Following the growing attention that the notion of describing learning outcomes as competencies has received in recent decades (in particular, in German-speaking countries), an increasing number of studies that (explicitly or implicitly) describe scientific reasoning as a competency have emerged (e.g., [7,27]). Competencies can be defined broadly as “dispositions that are acquired by learning and needed to successfully cope with certain situations or tasks in specific domains” [28] (p. 9). Most conceptualizations of competencies in science education share a common core of characteristic features (see [29,30,31]): First, competencies comprise cognitive as well as motivational, volitional, ethical, social, and other dispositions [32]. Second, these dispositions are domain-specific and learnable [28]. Third, they are transferable in the sense that they enable individuals to perform successfully within the same family of problems [33]. More recent definitions of the term “competency” have also emphasized the role of situation-specific skills such as “perception” or “decision-making”, which mediate the transition from dispositions to actual performance [34]. The notion of competency and its development adds new facets to research on scientific reasoning that, as we detail below, have yet to be explored.

In science education research, scientific reasoning is often conceptualized in terms of skills or abilities (e.g., [35,36]) that require specific knowledge, and corresponding studies typically focus on learners’ cognitive dispositions. A similar focus on cognitive dispositions can also be observed in studies that describe scientific reasoning as a competency (e.g., [37]). There is no doubt that cognitive dispositions are an important element of scientific reasoning competency. However, given that a key goal of promoting scientific reasoning is to enable (future) citizens to reason scientifically in their personal and professional lives (e.g., [1]), we argue that conceptualizations of scientific reasoning competency should consider all elements of the notion of competency outlined above (e.g., cognitive, motivational, and social dispositions). For instance, to use scientific reasoning to make an informed decision on a science- or technology-related topic or problem in an everyday situation, it is not sufficient to be able to reason scientifically (i.e., to have specific abilities and corresponding knowledge). One also has to realize that the particular situation actually requires scientific reasoning, or even a specific style of scientific reasoning (perception), and, at the same time, one needs to be willing to apply the related abilities and knowledge (motivational dispositions). Therefore, we argue that considering scientific reasoning as a competency has multiple fruitful implications for future research. First, it suggests that we require the means to assess and promote not only learners’ cognitive dispositions (e.g., abilities and knowledge related to scientific reasoning) but also their motivational dispositions (e.g., willingness to engage in scientific reasoning, beliefs about the usefulness of scientific reasoning). Second, alongside focusing on students’ cognitive, motivational, and social dispositions, it is also important to identify the situation-specific skills that facilitate or hinder the process by which these dispositions are translated into performance, as well as to find the means to assess and foster them. Lastly, and probably most challengingly, it is often assumed that promoting students’ abilities to plan and scrutinize scientific investigations or to analyze and interpret data (i.e., fostering their dispositions and/or situation-specific skills) helps them to solve scientific problems and to make informed decisions in their everyday lives (i.e., improves their performance); to the best of our knowledge, however, the relationship between dispositions, situation-specific skills, and performance in (close to) real-life situations has only rarely been investigated so far (examples in [38]). In sum, considering scientific reasoning as a competency is more than a rebranding; it emphasizes the role of motivational and social dispositions, situation-specific skills, and actual performance and, thereby, outlines a promising agenda for science education research.

5. Contributions in This Special Issue

The contributions in this Special Issue demonstrate the variety of conceptualizations of scientific reasoning in science education. Several contributions base their research on well-established conceptualizations that comprise, for example, formulating research questions, generating hypotheses, planning experiments, observing and measuring, preparing data for analysis, and drawing conclusions (e.g., [39]). Others broaden their scope and discuss aspects that are somewhat “on the sidelines” of what is typically considered scientific reasoning, such as the relevance of conceptual knowledge for reasoning in a specific context [40] and reasoning on controversial science issues [41]. Most of the contributions address abilities related to experimentation and modeling (e.g., [39,42,43]), which mirrors the focus of science education on these two styles of reasoning. However, this focus has been criticized as “an impoverished account” of scientific reasoning [9] (p. 17).

The articles in this Special Issue also show how scientific reasoning competencies can be addressed at smaller and larger grain sizes. For example, Bicak et al. [39] conceptualize scientific reasoning in a six-step approach (see above) at a larger grain size. In their work, the authors provide insight into how they operationalized scientific reasoning in a coding manual for video recordings and written records from a hands-on task. For the sub-competency of observing and measuring, for instance, students reached the highest proficiency level when their observations or measurements were purposeful, exhaustive, and correct and when their data were recorded correctly using a suitable method of measurement. The coding approach outlined in [39] indicates that scientific correctness is at the center of this conceptualization, rather than measurement repetition or error analysis, as might have been the case in a physics approach. Another example is provided by Khan and Krell [42], who describe a two-dimensional competency-based approach to scientific reasoning (conducting scientific investigations and using scientific models) and also define sub-competencies of scientific reasoning and the associated abilities, including the necessary procedural and epistemic knowledge (based on [11]). They argue that the ability to generate hypotheses requires the knowledge that hypotheses are empirically testable, intersubjectively comprehensible, clear, logically consistent, and compatible with an underlying theory [42] (p. 3). Such an approach offers an opportunity to link global and fine-grained perspectives on scientific reasoning competencies.

Considering the different aspects of competency outlined above, it is evident that the contributions in this Special Issue focus primarily on cognitive dispositions related to scientific reasoning competencies. However, there are interesting exceptions. For instance, Beniermann, Mecklenburg, and Upmeier zu Belzen [41] investigated reasoning processes in the context of controversial science issues. They argue that decisions or attitudes regarding these issues depend “highly on individual norms and values” [41] (p. 2) and are, therefore, not determined solely by learners’ content knowledge or their ability to reason scientifically. In their analyses, the authors identified not only intersubjective but also subjective types of reasoning; this finding can be interpreted as empirical support for the assumed role of non-cognitive dispositions in scientific reasoning processes. Furthermore, their work suggests that controversial science issues might be a promising context in which to further investigate the non-cognitive components of scientific reasoning competency. Another interesting example is presented by Meister and Upmeier zu Belzen [44], who investigated the role of anomalous data in scientific reasoning processes. Their approach highlights that learners’ perceived relevance and subsequent appraisal of data depend on their prior conceptions. As learners’ prior conceptions are presumably highly situation-specific, perception and appraisal processes may serve as an interesting example of the situation-specific skills that mediate the transformation of dispositions into performance [34]. In a similar vein, the authors also showed that the perceived acceptance of an interpretation by others is, in some cases, more relevant than evidential considerations, which again illustrates the role of situation-specific skills and emotional dispositions in scientific reasoning processes. In sum, some contributions in this Special Issue take interesting approaches that consider both cognitive dispositions and motivational dispositions or situation-specific skills. However, the role that aspects of competency other than cognitive dispositions play in scientific reasoning remains an underrepresented field of research that, in our opinion, offers considerable potential for further research.