User-Oriented Policies in European HEIs: Triggering a Participative Process in Today’s Digital Turn—An OpenU Experimentation in the University of Paris 1 Panthéon-Sorbonne

Abstract

1. Introduction

- Distinguish between accomplishment and behavior to define worthy performance: worthy performance is characterized by a person’s behavior and accomplishments;

- Identify methods for determining the potential for improving performance (PIP), defined as the ratio of exemplary performance to typical performance;

- Describe six components of behavior that can be manipulated to change performance, among which are environmental components (data, resources, and incentives), as well as knowledge, capacity, and motives [12].
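As a minimal illustration of the second point, the PIP can be computed as exemplary performance divided by typical performance, following Gilbert's definition [12]. The function name and the figures below are hypothetical and are not taken from the study; they only show how the ratio is read.

```python
def potential_for_improvement(exemplary: float, typical: float) -> float:
    """Return the PIP: exemplary performance divided by typical performance."""
    if typical <= 0:
        raise ValueError("typical performance must be positive")
    return exemplary / typical


# Hypothetical figures for illustration only (not data from the study):
# the best performer completes 90 units of work, the typical performer 60.
pip = potential_for_improvement(exemplary=90.0, typical=60.0)
print(f"PIP = {pip:.2f}")
```

A PIP close to 1 means that typical performers are already near the exemplary level; the larger the ratio, the greater the room for improvement.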

2. Materials and Methods

2.1. Specification of Context, Population, and Field of Study

- Research Question 1 (RQ1): What are the current barriers to the digital turn, as seen by non-strategic members of the community?

- Research Question 2 (RQ2): How can an inclusive, user-oriented participative approach be implemented in the digitalization of the university, i.e., how can participation and adherence be ensured?

- Research Question 3 (RQ3): Which ethical issues are at play when building policies based on such approaches?

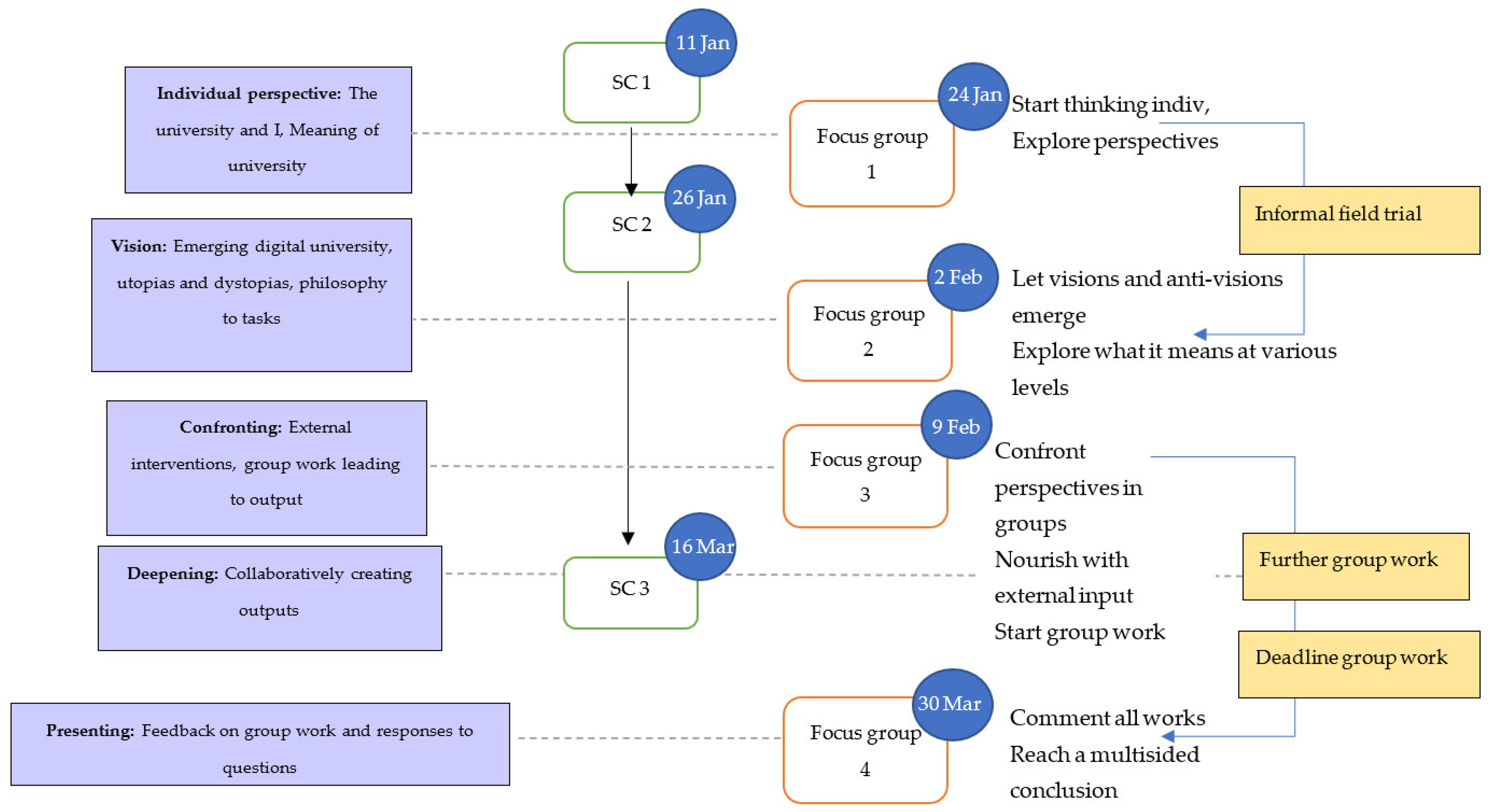

2.2. Data Collection in Two Phases

2.2.1. First Experimentation Phase

- What does the ideal digital university look like?

- How does digital use correspond to or diverge from the missions of the university?

- How does the digital university raise concerns?

- How does digitalization of the university have an impact on your daily life and work?

- What are your expectations with regard to the security and privacy of digital universities (and why)?

- Where to start?

- Does digitalization improve pedagogical content?

- How do the fragmentation and interoperability of digital services affect your work?

- What did you learn from your experiences with the digital university during the pandemic? (positives and negatives, necessary/desirable/unwanted things)

- Is digitalization necessary? Is it inevitable?

- Can links be established between the digital university and society at large?

- What institutional guarantees would you like for the digital university? (diploma, ECTS, transferable credits to your home university)

- How to overcome the language barrier?

- How can digital functionalities support mobility?

- Which changes would be necessary for the coordination and delivery of this new pedagogy?

2.2.2. Second Experimentation Phase

- Identification of the respondent.

- Distance learning conditions.

- Students’ experiences with digital technology at the university.

- Preferences of the student for improvement of the digital system currently in place.

- Students’ preferences with respect to communicative and informative measures in the digital university.

2.3. Data Analysis

- (1) Results of benchmark evaluations as collected at the start of the first phase from participants of focus groups and steering committee meetings.

- (2) Material outputs stemming from working sessions of the first phase: here, written recommendations intended for strategic, institutional, and political levels, as well as a tool kit for facilitating interactive and inclusive pedagogies and decision processes.

- (3) The final survey resulting from exchanges and meetings with the Student Steering Committee during the second phase.

- (4) Data collected through the survey from 304 students of the University Paris 1 Panthéon-Sorbonne.

- (5) Participatory rapid appraisals and observations emerging from working sessions and meetings of both phases, and collected using a collaborative and overarching log book.

3. Results

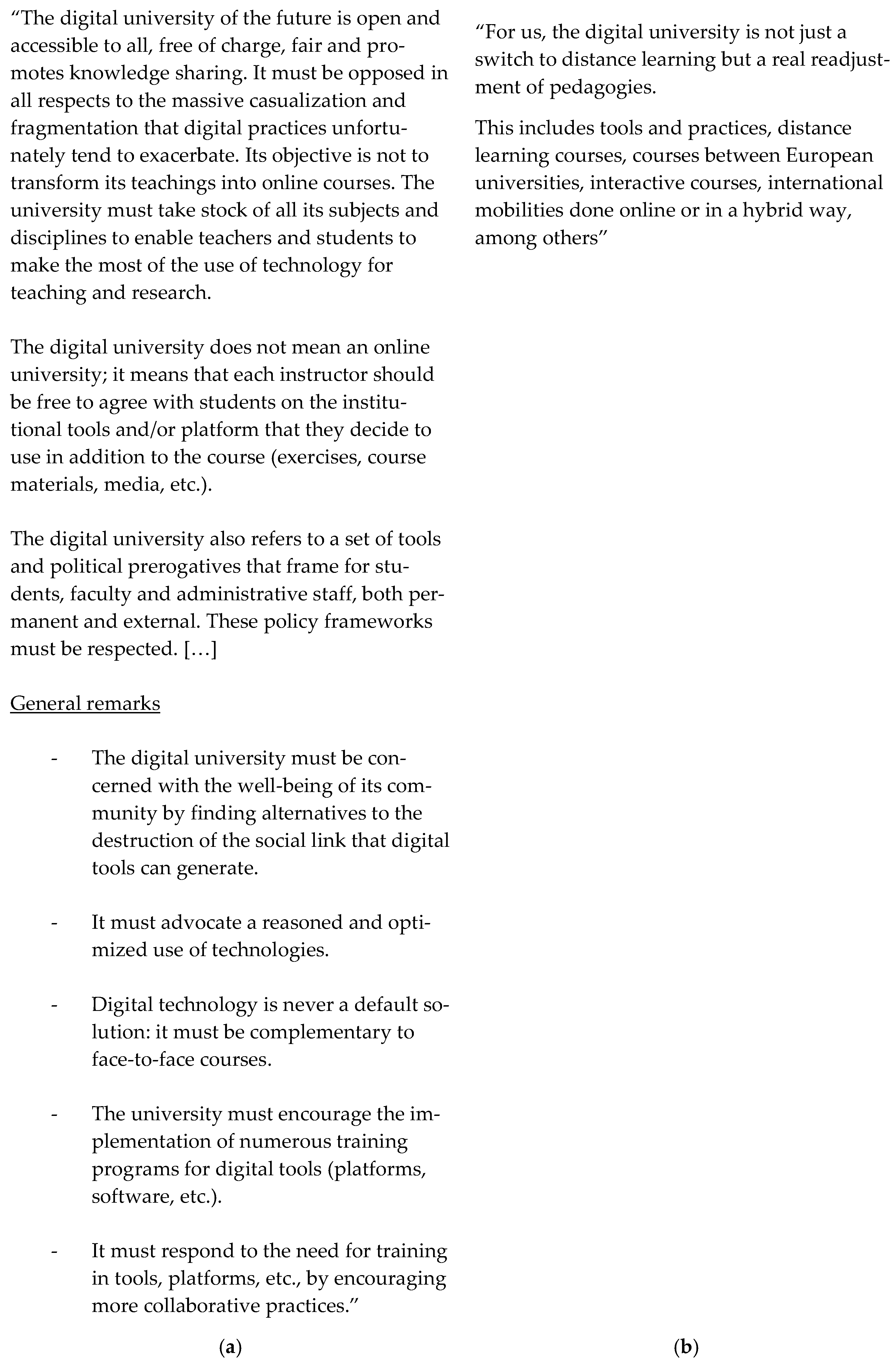

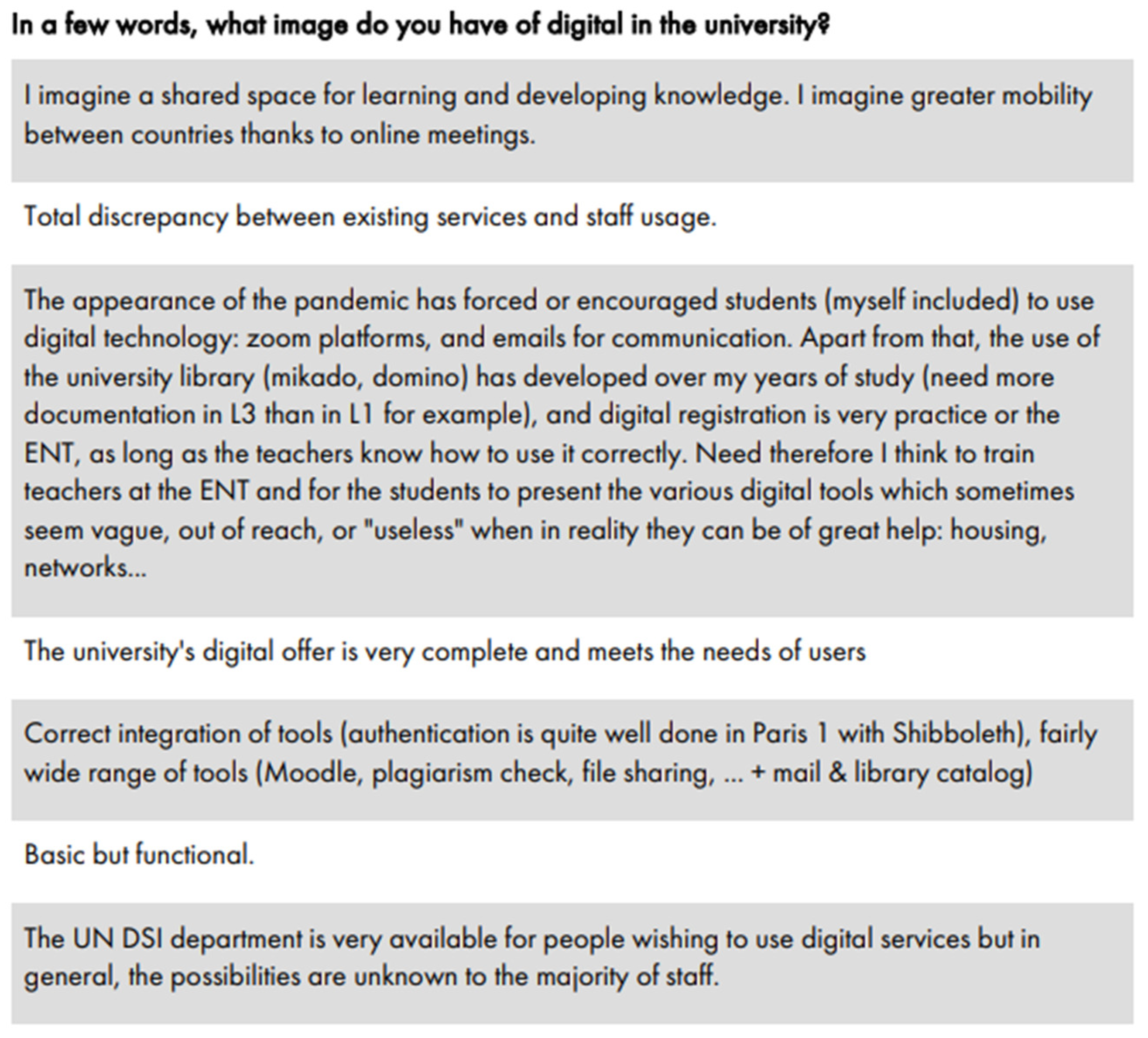

3.1. Remarks on the Perception of Digital(ized) Universities

3.1.1. Digital University: Definition

- Means: equipment, tools, computer, functioning, wheels, slope, skidding.

- Interaction: collaboration, exchange, open, argument, commotion, discord, argument, share, diversity, jump, multitude.

- Environment: calmness, residence, domesticity, privacy, international, fragile, outside, window, fragile, fear, slope, language.

- Timeframe: future, old, slow, antiquated, temporality.

- Participants: students, teacher, together, student, people, professors, users.

- Timeframe: years, after, already, schedule, moment, then, time.

- Variation: change, develop, become, new, can, project, transition, turning point, chance.

- Means: tools, equipment.

- Well-being: confidence, concern, request, division, justice, fear, wish.

- Environment: life, experience, facing, have to, place, institution, opportunity, power, political, politics.

- Interaction: open, share, talk, meet, alone.

- Educational content: content, classes, knowledge, practices, know, work.

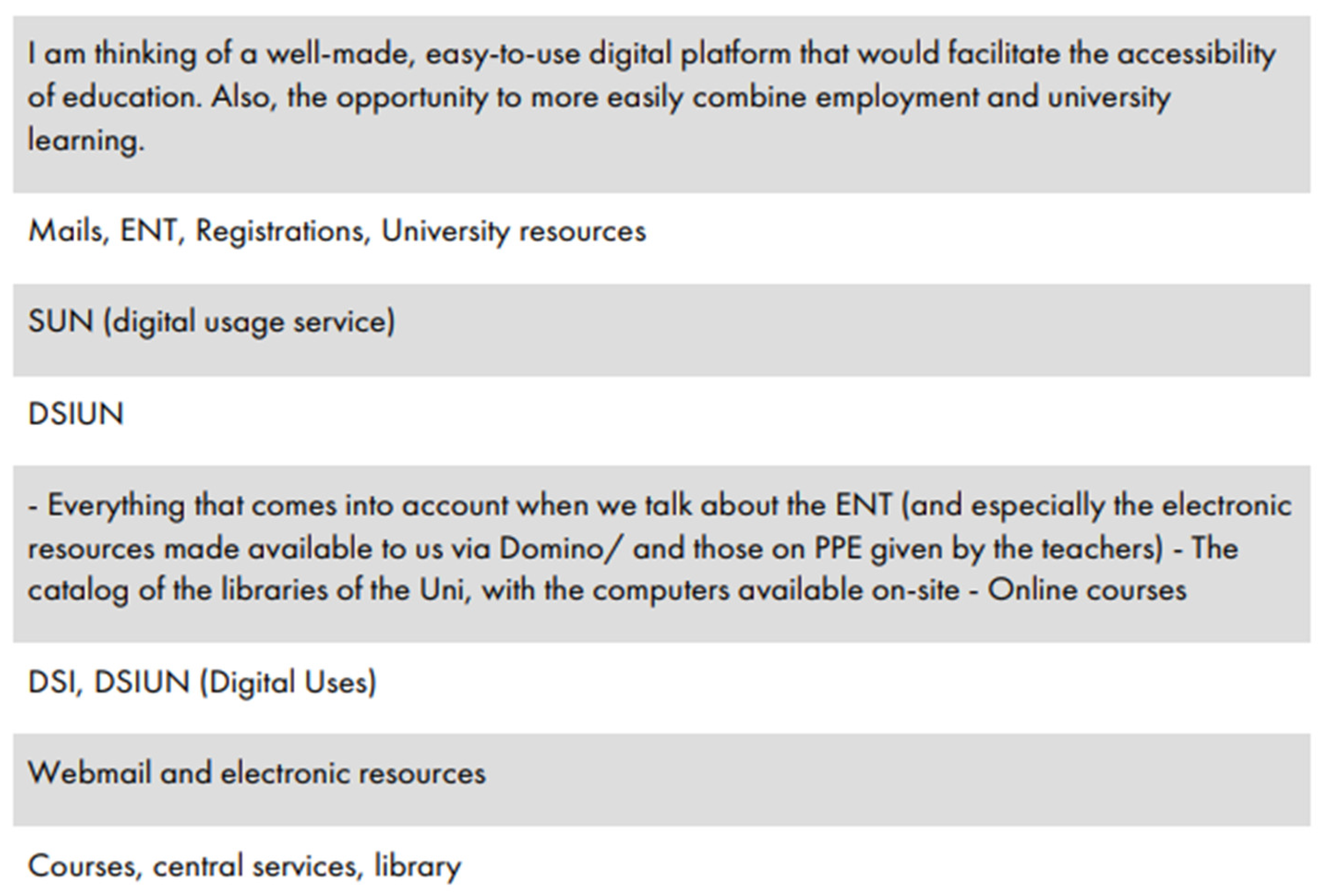

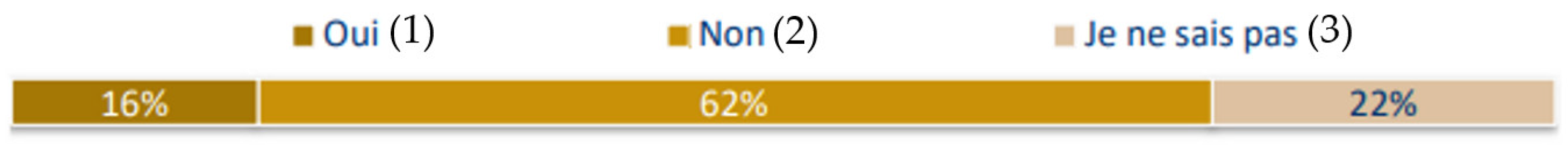

3.1.2. Existing Information on the Digital University

- Interactions

- Means

- Environment and timeframe

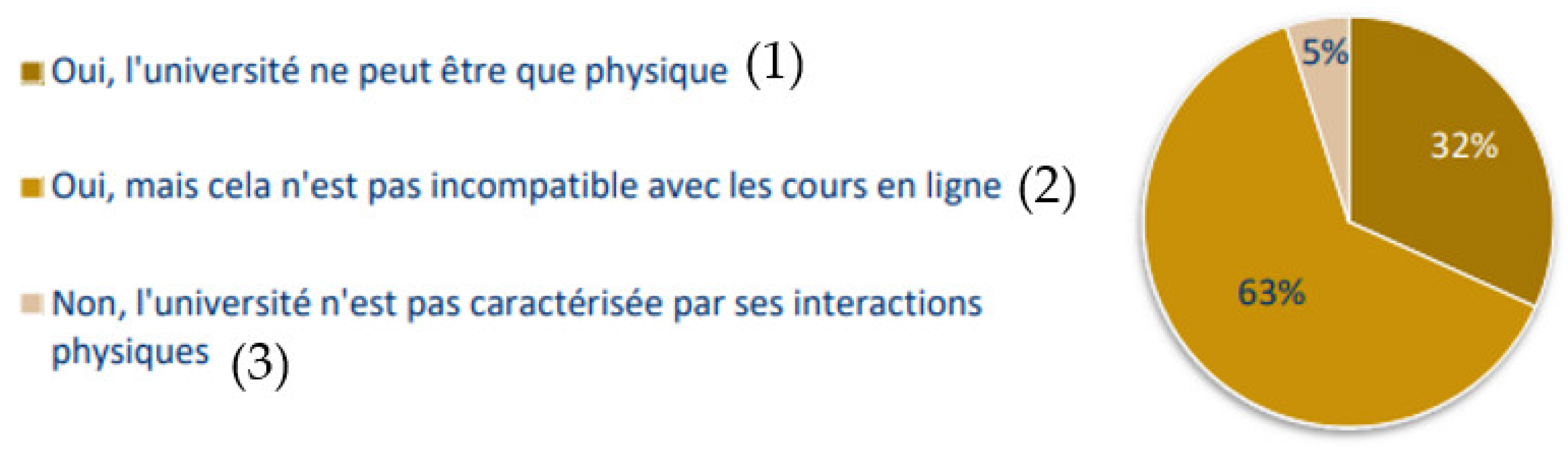

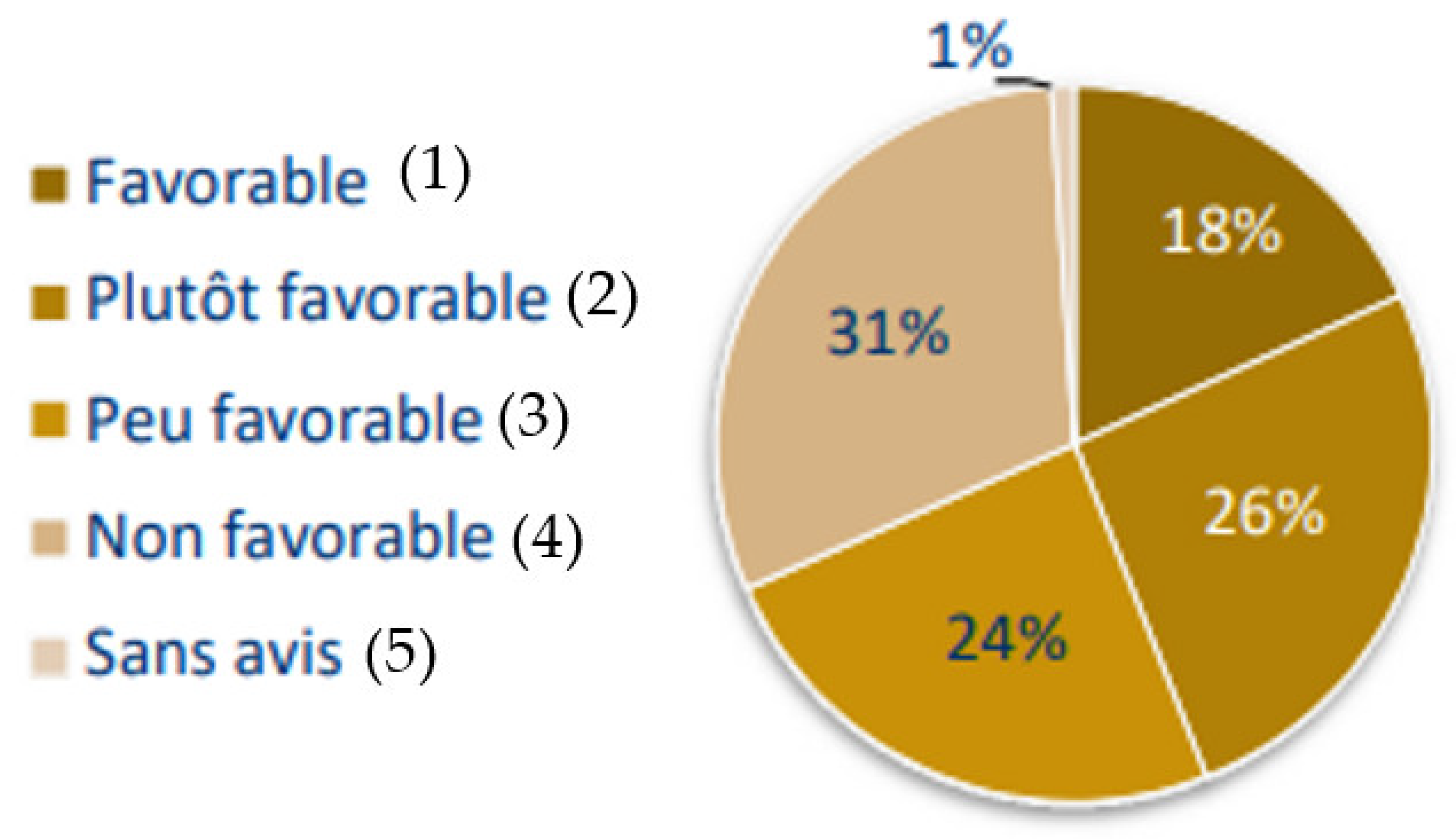

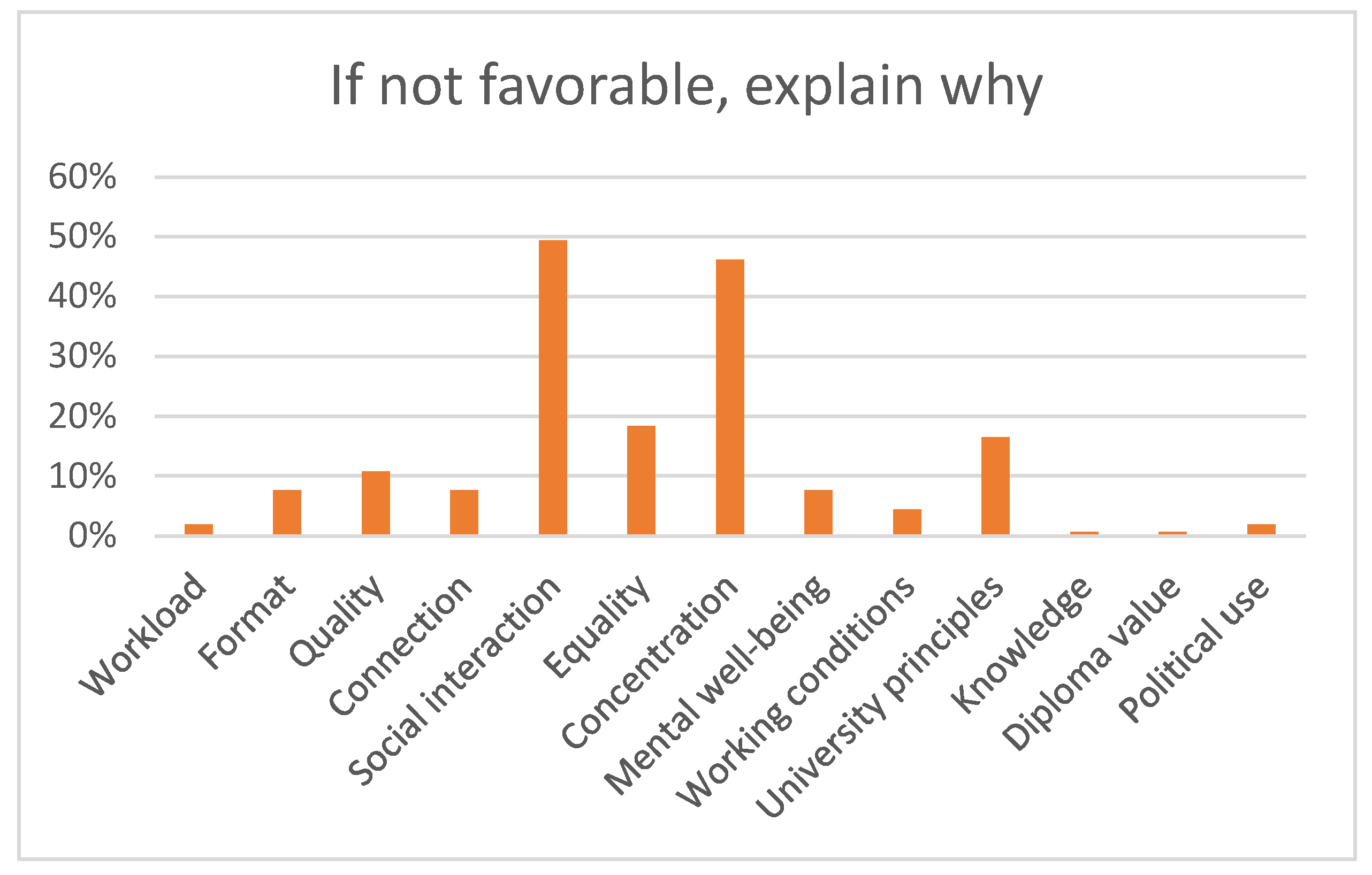

3.1.3. Acceptance of a Digital University

3.2. Individual and Collective Stances towards Digitalization

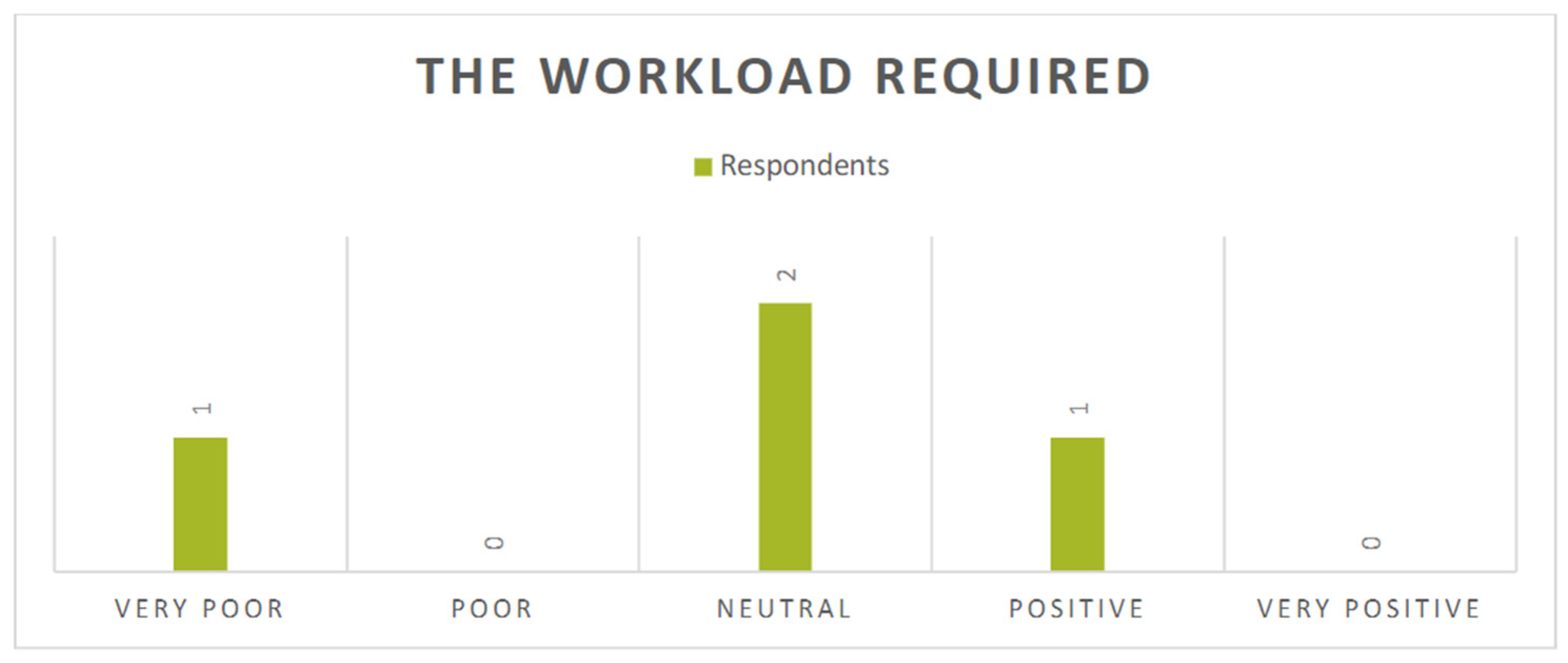

3.2.1. Factors of Favorability

3.2.2. Indifference and Interest towards Digitalization

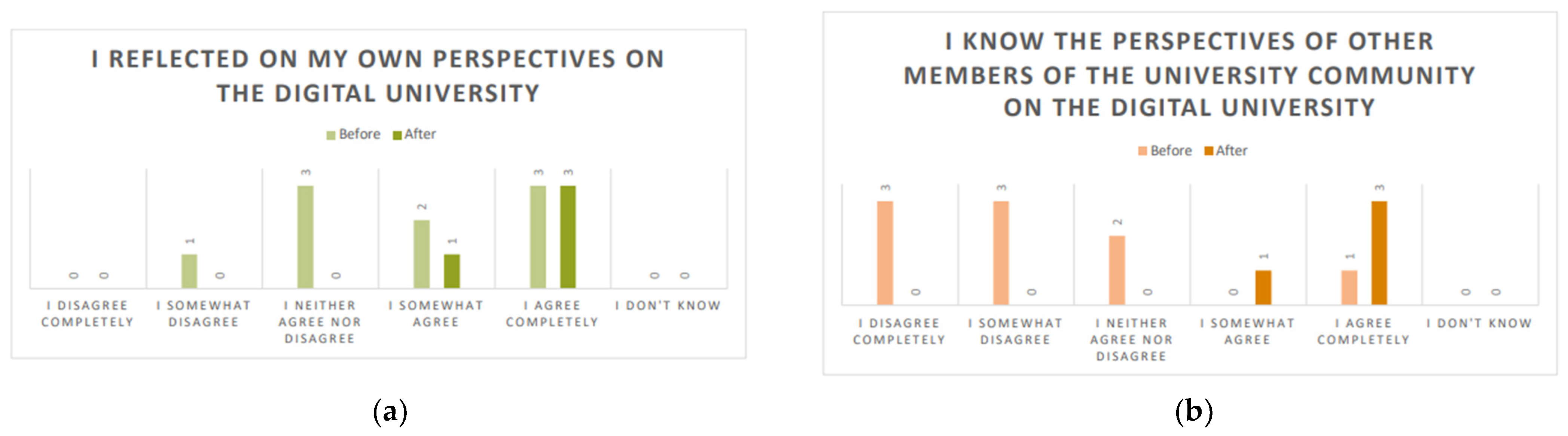

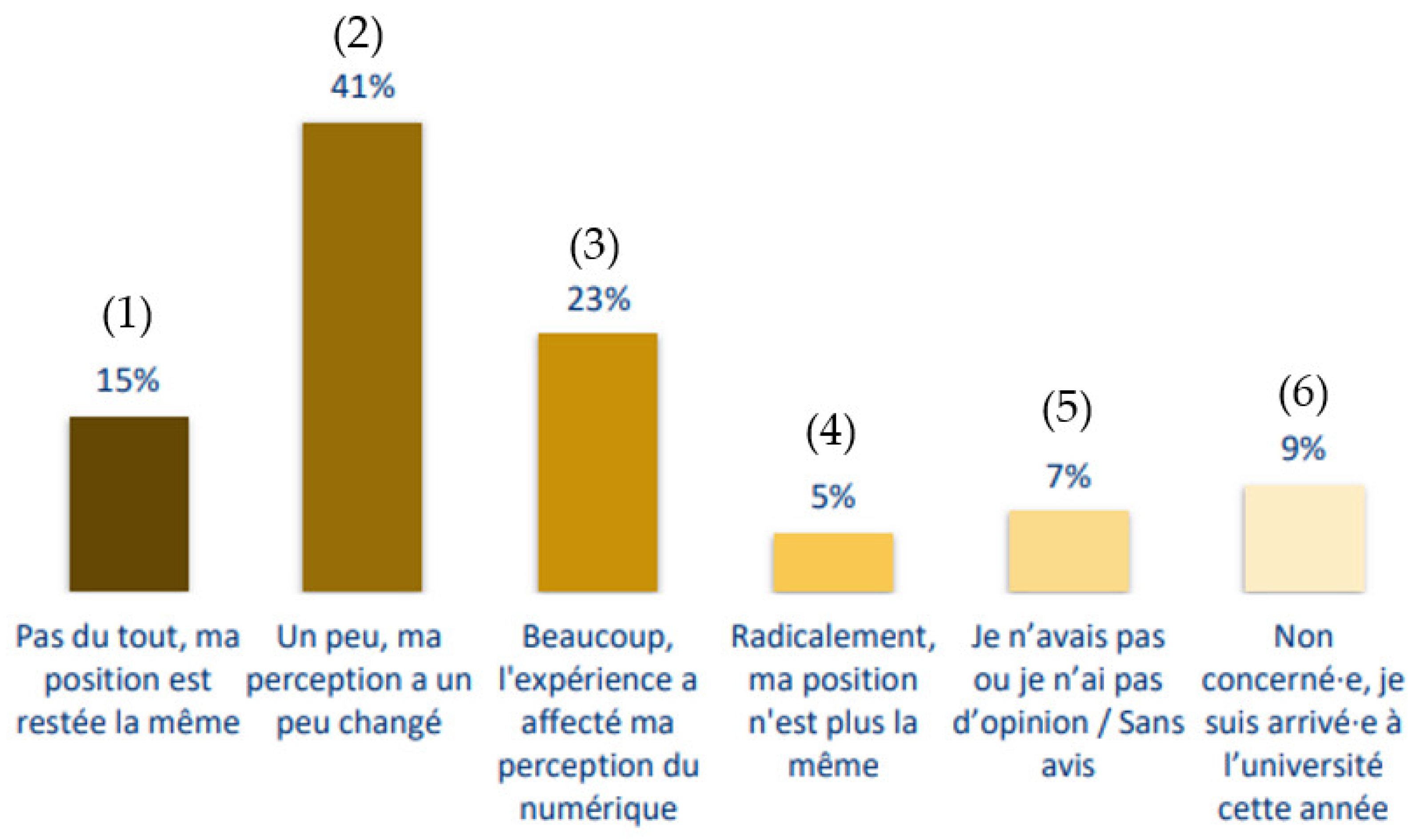

3.3. Participative Measures’ Impact

3.3.1. Rate vs. Content of Involvement

- Support from the strategic and political level of the university was necessary to identify and accompany project leaders and interested networks.

- Although the call explicitly mentioned “teachers-researchers, BIATSS and students”, members did not necessarily identify with the word “users”.

- The call for expression of interest raised some questions with regard to the readability of the project, as well as multilingualism. Terms such as “focus groups” are still perceived as neoliberal and hard to understand.

- Students also expressed the need for a dedicated, readable platform; the mailbox is mainly used by professors and students’ unions.

“Following a question, I would like to point out that the information mailing lists are non-existent in my eyes or very badly managed. I am an MBA student at IAE Paris Panthéon Sorbonne and I am bombarded with emails that make no sense for my training (language catch-up for the Bachelor’s degree, why inform me of that?) but especially my profile. Or I will be informed of initiatives (sport, language, una europa...) in which I can’t even participate when I ask for it (you are not concerned/eligible), because it seems that the pro students of the IAE Paris Panthéon Sorbonne in continuing education are not part of the students of the Sorbonne in fact, which is very sad and deprives us of exchange with other courses. We lack availability during the day of course being in professional activity in parallel with the training, but conferences or other could interest us. Also, it is curious that we receive everything (and anything?) concerning the Sorbonne, but we are not informed of the conferences of the IAE, or at least only by the screens on-site, and we are only present every 2 weeks. In short, I would be delighted to participate much more in the academic life of the Sorbonne and hope that this survey will help to mobilize in the future all the students of the university, whatever the training. Thank you for your thoughts on the subject!” (Phase 2)

3.3.2. Incentives for Deeper Participation

- Direct, personalized interaction

- Ownership of decisions

“Designed by a group of students from different disciplines, this survey is aimed at the entire student community of the University of Paris 1 Panthéon-Sorbonne. Its aim is to find out your needs and expectations (both in times of crisis and outside of them) to feed into the current thinking. Your point of view and your experience will be brought to the attention of the institutions involved in the digital transformation. It is therefore a way to become actors of change, and to actively participate in the evolution of our university, and to potentially be heard. It is in this context that we would like to collect your opinion.”

- Pedagogical gain, scientific benefit

“I have never heard of the project, and would have never had, had I not met [person]. The topic interested me and I wanted to learn more” (Phase 2)

“I hope that we can share the word and arrive at collective answers” (Phase 1)

“I would like to see what this project can give as a result” (Phase 1)

“I would like to make the project more concrete: talking about one’s fears and expectations is a first approach, but in the rest of the project, the ideal for me would be to talk about the articulations of the future digital university because how to express one’s fears vis-à-vis of a project that does not even have a substance or a form? So try to give ideas to “optimize” the platform in its practical and functional aspect.” (Phase 1)

“[I liked] especially: the context allowing the meeting between the different bodies of the universities (students, admin, profs); the meeting with the external speakers and the whole day of work; the political discussions on the digital issues”

4. Discussion and Conclusions

4.1. Lessons Learned

4.2. Ethical Limitations and Recommendations for Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Magro, E.; Wilson, J.R. Policy-mix evaluation: Governance challenges from new place-based innovation policies. Res. Policy 2019, 48, 103612.

- Ansell, C.; Bartenberger, M. Varieties of Experimentalism. Ecol. Econ. 2016, 130, 64–73.

- Campbell, D.T.; Stanley, J.C. Experimental and Quasi-Experimental Designs for Research; Rand McNally & Company: Chicago, IL, USA, 1963.

- Heilmann, S. Policy Experimentation in China’s Economic Rise. Stud. Comp. Int. Dev. 2008, 43, 1–26.

- Morgan, K. Experimental Governance and Territorial Development. In Background Paper for an OECD/EC Workshop on “Broadening Innovation Policy: New Insights for Regions and Cities”, Paris, France, 14 December 2018; OECD/EC: Paris, France, 2018; Available online: https://orca.cardiff.ac.uk/id/eprint/125697/1/Morgan%282018%29ExperimentalGovernanceAndTerritorialDevelopment_OECD_FINAL%20report.pdf (accessed on 29 March 2022).

- European Commission/EACEA/Eurydice. Support Mechanisms for Evidence-Based Policy-Making in Education, Eurydice Report; Publications Office of the European Union: Luxembourg, 2017; Available online: https://eige.europa.eu/resources/206_EN_Evidence_based_policy_making.pdf (accessed on 2 June 2022).

- Parsons, W. Public Policy. An Introduction to the Theory and Practice of Policy Analysis; Edward Elgar: Aldershot, UK, 1995; pp. 618–675.

- Burns, T.; Köster, F. Governing Education in a Complex World, Educational Research and Innovation; OECD Publishing: Paris, France, 2016; pp. 3–238.

- Centre for Educational Research and Innovation, Evidence in Education: Linking Research and Policy, OECD. 2007. Available online: https://www.oecd.org/education/ceri/evidenceineducationlinkingresearchandpolicy.htm#3 (accessed on 2 June 2022).

- Mahoney, J.; Thelen, K. A Theory of Gradual Institutional Change. In Explaining Institutional Change: Ambiguity, Agency, and Power; Cambridge University Press: Cambridge, UK, 2010; pp. 1–37.

- Musselin, C. La Grande Course des Universités; Presses de Sciences Po: Paris, France, 2017; 303p.

- Gilbert, T.F. Human Competence: Engineering Worthy Performance; McGraw-Hill: New York, NY, USA, 1978; 370p.

- Finger, M.; Audouin, M. The Governance of Smart Transportation Systems: Towards New Organizational Structures for the Development of Shared, Automated, Electric and Integrated Mobility; The Urban Book Series; Springer: Berlin/Heidelberg, Germany, 2018.

- Norman, D. Emotional Design: Why We Love (or Hate) Everyday Things; Basic Books: New York, NY, USA, 2004; 287p.

- Hesselgren, M.; Sjöman, M.; Pernestål, A. Understanding user practices in mobility service systems: Results from studying large scale corporate MaaS in practice. Travel Behav. Soc. 2019, 21, 318–327.

- Veryzer, R.W.; Borja de Mozota, B. The Impact of User-Oriented Design on New Product Development: An Examination of Fundamental Relationships. J. Prod. Innov. Manag. 2005, 22, 128–143.

- Vredenburg, K.; Isensee, S.; Righi, C. User-Centered Design: An Integrated Approach; Prentice Hall: Hoboken, NJ, USA, 2002; 288p.

- Clark, K.B.; Fujimoto, T. The Power of Product Integrity. Harv. Bus. Rev. 1990, 68, 107–118.

- Henderikx, P.; Ubachs, G.; Antonaci, A. Models and Guidelines for the Design and Development of Teaching and Learning in Digital Higher Education; Global Academic Press: Maastricht, The Netherlands, 2022.

- Maltz, E.; Souder, W.E.; Kumar, A. Influencing R&D/marketing integration and the use of market information by R&D managers: Intended and unintended effects of managerial actions. J. Bus. Res. 2001, 52, 69–82.

- De Berny, C.; Rousseau, A.; Deschatre, M. Les usages du numérique dans l’enseignement supérieur; L’Institut Paris Région: Paris, France, October 2021. Available online: https://www.institutparisregion.fr/fileadmin/NewEtudes/000pack2/Etude_2690/Rapport_usage_du_numerique_enseig._sup._oct._2021.pdf (accessed on 19 May 2022).

- Brouns, F.; Wopereis, I.; Klemke, R.; Albert, S.; Wahls, N.; Pieters, M.; Riccò, I. Report on Mapping (post-)COVID-19 Needs in Universities. DigiTeL Pro, EADTU. Project Funded by the European Commission. 2022. Available online: https://digitelpro.eadtu.eu/outcomes (accessed on 30 November 2022).

- Fuenfschilling, L.; Frantzeskaki, N.; Coenen, L. Urban experimentation & sustainability transitions. Eur. Plan. Stud. 2018, 27, 219–228.

- Huitema, D.; Jordan, A.; Munaretto, S.; Hildén, M. Policy experimentation: Core concepts, political dynamics, governance and impacts. Policy Sci. 2018, 51, 143–159.

- Kivimaa, P.; Rogge, K.S. Interplay of policy experimentation and institutional change in sustainability transitions: The case of mobility as a service in Finland. Res. Policy 2021, 51, 104412.

- Mascret, A. Enseignement Supérieur et Recherche en France: Une Ambition D’excellence; La documentation Française: Paris, France, 2015.

- Liere-Netheler, K.; Packmohr, S.; Vogelsang, K. Drivers of Digital Transformation in Manufacturing. In Proceedings of the Hawaii International Conference on System Science 2018, Waikoloa Village, HI, USA, 3–6 January 2018.

- International Relations Department. International Annual Report; Université Paris 1 Panthéon-Sorbonne: Paris, France, 2022.

- Berkowitz, A.D. From Reactive to Proactive Prevention: Promoting an Ecology of Health on Campus. In A Handbook on Substance Abuse for College and University Personnel; Rivers, P.C., Shore, E., Eds.; Greenwood Press: Westport, CT, USA, 1997; Chapter 6.

- Morin, E. Introduction à la Pensée Complexe; ESF Éditeur: Paris, France, 1990.

- Cros, F. L’innovation en éducation et en formation. Rev. Française Pédagogie 1997, 118, 127–157.

- Plottu, B.; Plottu, É. Contraintes et vertus de l’évaluation participative. Rev. Française Gest. 2009, 192, 31–58.

- Tripier; Laufer, R.; Burlaud, A. Management public, Gestion et Légitimité. Sociol. Trav. 1981, 3, 364–366.

- Patton, M.Q. Utilization-Focused Evaluation: The New Century Text, 3rd ed.; Sage Publications: Thousand Oaks, CA, USA, 1997.

- Ryan, R.M.; Deci, E.L. An overview of self-determination theory: An organismic dialectical perspective. In Handbook of Self-Determination Research; Deci, E.L., Ryan, R.M., Eds.; University of Rochester Press: Rochester, NY, USA, 2002; pp. 3–33.

- Wigfield, A.; Eccles, J.S. Expectancy–Value Theory of Achievement Motivation. Contemp. Educ. Psychol. 2000, 25, 68–81.

- Schiefele, U. Situational and individual interest. In Handbook of Motivation at School; Routledge/Taylor & Francis Group: New York, NY, USA, 2009; pp. 197–222.

- Cavell, S. Little Did I Know: Excerpts from Memory; Stanford University Press: Stanford, CA, USA, 2010; p. 85.

- Sadoff, S. The role of experimentation in education policy. Oxf. Rev. Econ. Policy 2014, 30, 597–620.

- Smirnova, E.; Balog, K. A User-Oriented Model for Expert Finding. In Advances in Information Retrieval. ECIR 2011; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2011; Volume 6611.

- Hidi, S.; Harackiewicz, J.M. Motivating the academically unmotivated: A critical issue for the 21st century. Rev. Educ. Res. 2000, 70, 151–179.

- Hidi, S.; Renninger, K.A. The Four-Phase Model of Interest Development. Educ. Psychol. 2006, 41, 111–127.

- Schiefele, U. The role of interest in motivation and learning. In Intelligence and Personality. Bridging the Gap in Theory and Measurement; Collis, J.M., Messick, S., Eds.; Erlbaum: Mahwah, NJ, USA, 2001; pp. 163–193.

- Lave, J.; Wenger, E. Situated Learning: Legitimate Peripheral Participation; Cambridge University Press: New York, NY, USA, 1991.

- Knekta, E.; Rowland, A.A.; Corwin, L.A.; Eddy, S. Measuring university students’ interest in biology: Evaluation of an instrument targeting Hidi and Renninger’s individual interest. Int. J. STEM Educ. 2020, 7, 23.

- Wenger, E. Communities of Practice: Learning, Meaning, and Identity; Cambridge University Press: New York, NY, USA, 1998.

- Paquelin, D.; Crosse, M. Responsabilisation, ouverture et confiance: Pistes pour l’enseignement supérieur du futur. Enjeux Société 2021, 8, 190–215.

- Sen, A. Development as Freedom; Alfred Knopf: New York, NY, USA, 1999.

- Draxler-Weber, N.; Packmohr, S.; Brink, H. Barriers to Digital Higher Education Teaching and How to Overcome Them—Lessons Learned during the COVID-19 Pandemic. Educ. Sci. 2022, 12, 870.

- Kvernbekk, T. Evidence-Based Educational Practice. Oxford Research Encyclopedia of Education. 2017. Available online: https://oxfordre.com/education/view/10.1093/acrefore/9780190264093.001.0001/acrefore-9780190264093-e-187 (accessed on 10 March 2022).

- Pellegrini, M.; Vivanet, G. Evidence-Based Policies in Education: Initiatives and Challenges in Europe. ECNU Rev. Educ. 2020, 4, 25–45.

| Participant | Function | Words Associated with Chosen Image |
|---|---|---|
| 1 | Admin. | Perspective, discord, realities, diversity |
| 2 | Admin. | Tools, functioning, collaboration, wheels |
| 3 | Admin. | Outside, window, exchange, open |
| 4 | Student | Web, fragile, solidity, domesticity, fear |
| 5 | Student | Skidding, slope, slippery, jump |
| 6 | Student | Collaboration, human touch, interaction, difficulties |
| 7 | Student | Future, English, language, international |
| 8 | Researcher | Temporality, snail, slow, residence, domesticity, privacy |
| 9 | Researcher | Commotion, argument, calmness |
| 10 | Researcher | Computer, antiquated, old, equipment, multitude |
| 12 | Researcher | Share, exchange, international |

| Category | University | Digital University |
|---|---|---|
| Means | Tools, equipment | Equipment, tools, computer, functioning, wheels, slope, skidding |
| Interaction | Open, share, talk, meet, alone | Collaboration, exchange, open, argument, commotion, discord, argument, share, diversity, jump, multitude |
| Environment | Life, experience, facing, have to, place, institution, opportunity, power, political, politics | Calmness, residence, domesticity, privacy, international, fragile, outside, window, fragile, fear, slope, language |
| Timeframe | Years, after, already, schedule, moment, then, time | Future, old, slow, antiquated, temporality |
| Variation | Change, develop, become, new, can, project, transition, turning point, chance | - |
| Participants | Students, teacher, together, student, people, professors, users | - |
| Well-being | Confidence, concern, request, division, justice, fear, wish | - |
| Educational content | Content, classes, knowledge, practices, know, work | - |

| Tool/Service | I Know and Use | I Know but Do Not Use | I Use if I Have to but Do Not Know | I Don’t Know |
|---|---|---|---|---|
| | 93% | 4% | 1% | 0% |
| Interactive pedagogical interface | 90% | 3% | 2% | 2% |
| Planning | 27% | 47% | 4% | 20% |
| Address list | 24% | 40% | 13% | 19% |
| Library catalogue | 43% | 28% | 12% | 15% |
| Documentary access (Mikado) | 30% | 24% | 9% | 33% |
| Documentary access (Domino) | 60% | 12% | 8% | 18% |
| Office 365 | 49% | 29% | 3% | 17% |
| Document transfer (Filex) | 9% | 8% | 4% | 77% |
| Internship and job opportunities browser | 14% | 42% | 10% | 30% |
| Mobility opportunities browser | 10% | 40% | 6% | 41% |
| Forum and chatbox | 6% | 43% | 5% | 43% |
| Collaborative document (Framapad) | 6% | 14% | 3% | 73% |
| Poll (Evento) | 5% | 14% | 3% | 74% |
| Medical appointments tool | 11% | 28% | 5% | 54% |

| Field | Favorable | Somewhat Favorable | Somewhat Unfavorable | Unfavorable |
|---|---|---|---|---|
| Social and human sciences | 12% | 19% | 29% | 41% |
| Law and political science | 22% | 25% | 25% | 26% |
| Art | 0 | 3 (43%) | 1 (14%) | 3 (43%) |
| Institutes | 21% | 26% | 42% | 11% |

| | | | Total | Social and Human Sciences | Law and Political Sciences | Arts | Institutes |
|---|---|---|---|---|---|---|---|
| Social Profile | Gender | F | 64% | 67% | 70% | 5 (71%) | 69% |
| | | M | 33% | 29% | 29% | 2 (29%) | 25% |
| | Age | 17–24 | 67% | 67% | 70% | 5 (71%) | 47% |
| | | 25–34 | 8% | 10% | 6% | 1 (14.5%) | 21% |
| | | 35+ | 25% | 23% | 25% | 1 (14.5%) | 32% |
| | Finance | Yes | 72% | 71% | 72% | 6 (85.5%) | 69% |
| | | No | 26% | 28% | 25% | 1 (14.5%) | 26% |
| | Level (LMD) | Y 1–2 | 32% | 34% | 36% | 4 (57%) | 27% |
| | | Y3 | 33% | 29% | 28% | 5 (71%) | 26% |
| | | MA | 36% | 35% | 36% | 3 (42%) | 53% |
| | | PhD | 4% | 7% | 4% | 1 (14.5%) | 0 |
| Working Environment | Good working conditions | Yes | 84% | 78% | 86% | 6 (85.5%) | 100% |
| | | No | 15% | 22% | 12% | 1 (14.5%) | 0 |
| | Adequate space | Yes | 63% | 57% | 71% | 1 (14.5%) | 63% |
| | | No | 37% | 43% | 29% | 6 (85.5%) | 0 |
| | Equipment | Personal computer | 97% | 96% | 97% | 6 (85.5%) | 100% |
| | | Internet | 76% | 68% | 82% | 5 (71%) | 84% |
| | | Webcam | 93% | 90% | 97% | 5 (71%) | 100% |
| | | Mic | 97% | 96% | 99% | 5 (71%) | 94% |
| COVID-19 Experience | Overall | Good | 15% | 10% | 17% | 0 | 5% |
| | | Somewhat good | 30% | 31% | 24% | 3 (42%) | 42% |
| | | Somewhat bad | 24% | 23% | 33% | 1 (14.5%) | 11% |
| | | Bad | 18% | 22% | 13% | 2 (29%) | 16% |
| | Educational content | Adapted | 48% | 48% | 50% | 2 (29%) | 63% |
| | | Not adapted | 40% | 40% | 38% | 4 (57%) | 16% |

| | Resources: Sufficient (Num) | Resources: Sufficient (%) | Resources: Insufficient (Num) | Resources: Insufficient (%) | Gender: Female (Num) | Gender: Female (%) | Gender: Male (Num) | Gender: Male (%) |
|---|---|---|---|---|---|---|---|---|
| Favorable | 36 | 17% | 16 | 21% | 28 | 18% | 12 | 15% |
| Somewhat favorable | 55 | 26% | 20 | 27% | 42 | 28% | 19 | 24% |
| Somewhat unfavorable | 54 | 26% | 16 | 21% | 38 | 25% | 18 | 23% |
| Unfavorable | 63 | 30% | 23 | 31% | 43 | 28% | 27 | 35% |
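The percentages in the two resources columns of the table above can be recomputed from the counts. The sketch below assumes that each column's denominator is the sum of its four counts, which the source does not state; the names `counts` and `column_shares` are illustrative. The gender columns are left out because their printed percentages do not all follow from this assumption.

```python
# Counts copied from the resources columns of the table above.
counts = {
    "Sufficient": {
        "Favorable": 36,
        "Somewhat favorable": 55,
        "Somewhat unfavorable": 54,
        "Unfavorable": 63,
    },
    "Insufficient": {
        "Favorable": 16,
        "Somewhat favorable": 20,
        "Somewhat unfavorable": 16,
        "Unfavorable": 23,
    },
}


def column_shares(column: dict[str, int]) -> dict[str, int]:
    """Return each stance as a rounded percentage of the column total.

    Assumption: the denominator is the sum of the four counts in the column.
    """
    total = sum(column.values())
    return {stance: round(100 * n / total) for stance, n in column.items()}


for name, column in counts.items():
    print(name, column_shares(column))
```

Under this assumption, both resources columns reproduce the percentages printed in the table.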

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Dell’Omodarme, M.R.; Cherif, Y. User-Oriented Policies in European HEIs: Triggering a Participative Process in Today’s Digital Turn—An OpenU Experimentation in the University of Paris 1 Panthéon-Sorbonne. Educ. Sci. 2022, 12, 919. https://doi.org/10.3390/educsci12120919
