Digital Competence Assessment Methods in Higher Education: A Systematic Literature Review

Abstract

1. Introduction

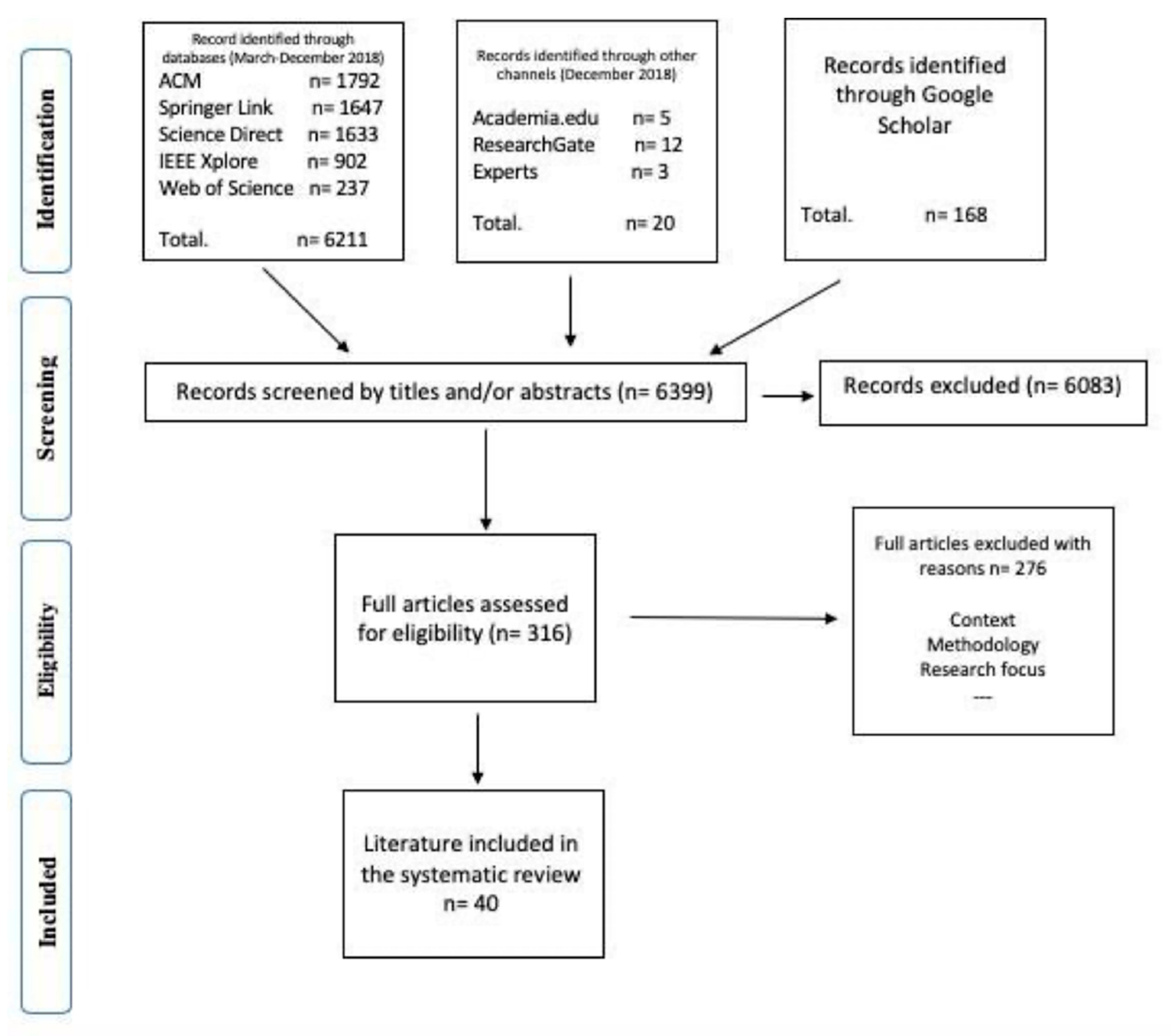

2. Systematic Review Methodology

2.1. Search Procedures

- Digital competence: Digital competency, ICT literacy, digital literacy, ICT skills, digital skills, computer skills, technology literacies, digital competencies, 21st century skills;

- Measurement: Assessment, evaluation, testing, measuring, questionnaire.
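The two keyword groups above lend themselves to a Boolean search string: synonyms within a group joined with OR, and the two groups joined with AND. The sketch below is illustrative only. The section does not state the exact query syntax or the databases searched, so the quoting style, the hypothetical `or_group` and `build_query` helpers, and the decision to treat each group label ("digital competence", "measurement") as a search term are all assumptions.

```python
# Keyword groups taken from the list above; including the group labels
# themselves as search terms is an assumption.
DIGITAL_COMPETENCE_TERMS = [
    "digital competence", "digital competency", "ICT literacy",
    "digital literacy", "ICT skills", "digital skills", "computer skills",
    "technology literacies", "digital competencies", "21st century skills",
]
MEASUREMENT_TERMS = [
    "measurement", "assessment", "evaluation", "testing", "measuring",
    "questionnaire",
]


def or_group(terms):
    """Join synonyms with OR and quote each phrase (hypothetical helper)."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(*groups):
    """Join the OR-groups with AND so a hit must match every group."""
    return " AND ".join(or_group(group) for group in groups)


query = build_query(DIGITAL_COMPETENCE_TERMS, MEASUREMENT_TERMS)
print(query)
```

Individual databases differ in how they handle phrase quoting and field restrictions, so a string like this would normally be adapted per database before use.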

2.2. Eligibility Criteria and Screening Process

- Research focused on digital competence assessment;

- Assessment of ICT literacy or an equivalently defined concept in higher education;

- The article describes the development process of an assessment tool for digital competence (or an equivalent concept) and includes a sample in the validation process;

- The research method includes digital competence self-assessment or self-reporting;

- Research that focuses on a variety of interventions in technology-enhanced learning and includes digital competence assessment as a preliminary stage of the research.

- Insufficient or no reporting on the sample, digital competence assessment instrument or educational setting;

- Characteristics of the tool or instrument used were not given.
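The criteria above can be applied as a simple screening filter once a human reviewer has coded each record. The following is a minimal sketch under stated assumptions: the criterion labels, the `Record` class, and the `is_eligible` function are hypothetical, and the rule "at least one inclusion criterion and no exclusion criterion" is an assumption, since this section does not say how the criteria were combined.

```python
from dataclasses import dataclass, field

# Hypothetical short labels for the criteria listed above.
INCLUSION = {
    "focus_on_dc_assessment",
    "higher_education_setting",
    "tool_development_with_sample",
    "self_assessment_method",
    "assessment_as_preliminary_stage",
}
EXCLUSION = {
    "insufficient_reporting",
    "tool_characteristics_missing",
}


@dataclass
class Record:
    title: str
    # Criterion labels assigned by a human reviewer during screening.
    codes: set = field(default_factory=set)


def is_eligible(record: Record) -> bool:
    """Assumed rule: any inclusion criterion met and no exclusion criterion hit."""
    meets_inclusion = bool(record.codes & INCLUSION)
    hits_exclusion = bool(record.codes & EXCLUSION)
    return meets_inclusion and not hits_exclusion


# Made-up records, used only to exercise the filter.
records = [
    Record("Study A", {"focus_on_dc_assessment", "self_assessment_method"}),
    Record("Study B", {"focus_on_dc_assessment", "insufficient_reporting"}),
    Record("Study C"),
]
eligible = [r.title for r in records if is_eligible(r)]
print(eligible)
```

Keeping the reviewer's codes on each record, rather than only the final decision, makes it possible to report how many records were excluded for each reason.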

3. Results

3.1. RQ1—Characteristics of Digital Competence Assessment Processes and Methods in Higher Education

3.2. RQ2; RQ3—Overview of Current Trends and Challenges in Digital Competence Assessment in Higher Education

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Bawden, D. Information and digital literacies: A review of concepts. J. Doc. 2001, 57, 218–259.

- Carretero, S.; Vuorikari, R.; Punie, Y. DigComp 2.1: The Digital Competence Framework for Citizens. With eight proficiency levels and examples of use. Publ. Off. Eur. Union 2017.

- International Society for Technology in Education. ISTE Standards. 2007. Available online: https://iste.org/iste-standards (accessed on 13 March 2018).

- UNESCO. UNESCO ICT Competency Framework for Teachers; UNESCO: Paris, France, 2011.

- Redecker, C.; Punie, Y. Digital Competence of Educators. DigCompEdu. 2017.

- Van Deursen, A.; van Dijk, J. Measuring Digital Skills: Performance Tests of Operational, Formal, Informal and Strategic Internet Skills among the Dutch Population. ICA Conference. 2008. Available online: https://www.utwente.nl/en/bms/vandijk/news/measuring_digital_skills/MDS.pdf (accessed on 13 March 2018).

- Cukurova, M.; Mavrikis, M.; Luckin, R. Evidence-Centered Design and Its Application to Collaborative Problem Solving in Practice-based Learning Environments About Analytics for Learning (A4L); SRI International: London, UK, 2017.

- Ala-Mutka, K. Institute for Prospective Technological Studies. 2011. Mapping Digital Competence: Towards a Conceptual Understanding. Available online: https://pdfs.semanticscholar.org/6282/f40a4146985cfef2f44f2c8d45fdb59c7e9c.pdf or http://ftp.jrc.es/EURdoc/JRC67075_TN.pdf or ftp://ftp.jrc.es/pub/EURdoc/EURdoc/JRC67075_TN.pdf (accessed on 13 March 2021).

- Van Laar, E.; Van Deursen, A.J.A.M.; Van Dijk, J.A.G.M.; De Haan, J. The relation between 21st-century skills and digital skills: A systematic literature review. Comput. Hum. Behav. 2017, 72, 577–588.

- Voogt, J.; Roblin, N.P. A comparative analysis of international frameworks for 21st century competences: Implications for national curriculum policies. J. Curric. Stud. 2012, 44, 299–321.

- Zhao, Y.; Llorente, A.M.P.; Gómez, M.C.S. Digital competence in higher education research: A systematic literature review. Comput. Educ. 2021, 168, 104212.

- Siddiq, F.; Hatlevik, O.E.; Olsen, R.V.; Throndsen, I.; Scherer, R. Taking a future perspective by learning from the past—A systematic review of assessment instruments that aim to measure primary and secondary school students’ ICT literacy. Educ. Res. Rev. 2016, 19, 58–84.

- Gough, D.; Oliver, S.; Thomas, J. An Introduction to Systematic Reviews; SAGE Publications Ltd.: Newbury Park, CA, USA, 2012.

- Petticrew, M.; Roberts, H. Systematic Reviews in the Social Sciences: A Practical Guide; Wiley-Blackwell: Hoboken, NJ, USA, 2005.

- Chandler, J.; Higgins, J.P.; Deeks, J.J.; Davenport, C.; Clarke, M.J. Cochrane Handbook for Systematic Reviews of Interventions; Cochrane: London, UK, 2017.

- Põldoja, H.; Väljataga, T.; Laanpere, M.; Tammets, K. Web-based self- and peer-assessment of teachers’ digital competencies. World Wide Web 2012, 17, 255–269.

- Vázquez-Cano, E.; Meneses, E.; García-Garzón, E. Differences in basic digital competences between male and female university students of Social Sciences in Spain. Int. J. Educ. Technol. High. Educ. 2017, 14.

- Pinto, M.; Pascual, R.F.; Puertas, S. Undergraduates’ information literacy competency: A pilot study of assessment tools based on a latent trait model. Libr. Inf. Sci. Res. 2016, 38, 180–189.

- Soomro, K.A.; Kale, U.; Curtis, R.; Akcaoglu, M.; Bernstein, M. Development of an instrument to measure Faculty’s information and communication technology access (FICTA). Educ. Inf. Technol. 2018, 23, 253–269.

- Blayone, T.J.B.; Mykhailenko, O.; Kavtaradze, M.; Kokhan, M.; Vanoostveen, R.; Barber, W. Profiling the digital readiness of higher education students for transformative online learning in the post-soviet nations of Georgia and Ukraine. Int. J. Educ. Technol. High. Educ. 2018, 15, 37.

- Kiss, G.; Gastelú, C.A.T. Comparison of the ICT Literacy Level of the Mexican and Hungarian Students in the Higher Education. Procedia Soc. Behav. Sci. 2015, 176, 824–833.

- Pérez-Escoda, A.; Rodríguez-Conde, M.J. Digital literacy and digital competences in the educational evaluation. In Proceedings of the Third International Conference on Technological Ecosystems for Enhancing Multiculturality—TEEM’15, Porto, Portugal, 7–9 October 2015; pp. 355–360.

- Katz, I.R.; Macklin, A.S. Information and communication technology (ICT) literacy: Integration and assessment in higher education. J. Syst. Cybern. Inf. 2007, 5, 50–55.

- Basque, J.; Ruelland, D.; Lavoie, M.-C. A Digital Tool for Self-Assessing Information Literacy Skills. In Proceedings of the World Conference on E-Learning in Corporate, Government, Healthcare, and Higher Education, Quebec City, QC, Canada, 15 October 2007; pp. 6997–7003. Available online: http://biblio.teluq.uquebec.ca/portals/950/docs/pdf/digital.pdf or http://www.editlib.org/p/26893 (accessed on 13 March 2021).

- Guo, R.X.; Dobson, T.; Petrina, S. Digital Natives, Digital Immigrants: An Analysis of Age and ICT Competency in Teacher Education. J. Educ. Comput. Res. 2008, 38, 235–254.

- Peeraer, J.; Van Petegem, P. ICT in teacher education in an emerging developing country: Vietnam’s baseline situation at the start of ‘The Year of ICT’. Comput. Educ. 2011, 56, 974–982.

- Soh, T.M.T.; Osman, K.; Arsad, N.M. M-21CSI: A Validated 21st Century Skills Instrument for Secondary Science Students. Asian Soc. Sci. 2012, 8, 38.

- Wong, S.L.; Aziz, S.A.; Yunus, A.S.M.; Sidek, Z.; Bakar, K.A.; Meseran, H.; Atan, H. Gender Differences in ICT Competencies among Academicians at University Putra Malaysia. Malays. Online J. Instr. Technol. MOJIT 2005, 2, 62–69. Available online: http://myais.fsktm.um.edu.my/1648/ (accessed on 13 March 2021).

- Evangelinos, G.; Holley, D. Developing a Digital Competence Self-Assessment Toolkit for Nursing Students. Eur. Distance E Learn. Netw. EDEN 2014, 1, 206–212. Available online: http://www.eden-online.org/publications/proceedings.html (accessed on 13 March 2018).

- Costa, F.A.; Viana, J.; Cruz, E.; Pereira, C. Digital literacy of adults education needs for the full exercise of citizenship. In Proceedings of the 2015 International Symposium on Computers in Education, SIIE 2015, Setubal, Portugal, 25–27 November 2015; IEEE: Piscataway, NJ, USA, 2016; pp. 92–96.

- Maderick, J.A.; Zhang, S.; Hartley, K.; Marchand, G. Preservice Teachers and Self-Assessing Digital Competence. J. Educ. Comput. Res. 2016, 54, 326–351.

- Tolic, M.; Pejakovic, S. Self-assessment of digital competences of Higher Education professors. In Proceedings of the 5th International Scientific Symposium Economy of Eastern Croatia—Vision and Growth, Osijek, Croatia, 2–4 June 2016; pp. 570–578.

- Heerwegh, D.; De Wit, K.; Verhoeven, J.C. Exploring the Self-Reported ICT Skill Levels of Undergraduate Science Students. J. Inf. Technol. Educ. Res. 2016, 15, 19–47.

- Cazco, G.H.O.; González, M.C.; Abad, F.M.; Mercado-Varela, M.A. Digital competence of the university faculty. In Proceedings of the Fourth International Conference on Technological Ecosystems for Enhancing Multiculturality, Salamanca, Spain, 2–4 November 2016; pp. 147–154.

- Almerich, G.; Orellana, N.; Suárez-Rodríguez, J.; Díaz-García, I. Teachers’ information and communication technology competences: A structural approach. Comput. Educ. 2016, 100, 110–125.

- Turiman, P.; Osman, K.; Wook, T.S.M.T. Digital age literacy proficiency among science preparatory course students. In Proceedings of the 2017 6th International Conference on Electrical Engineering and Informatics (ICEEI), Langkawi, Malaysia, 25–27 November 2017; pp. 1–7.

- Corona, A.G.-F.; Martínez-Abad, F.; Rodríguez-Conde, M.-J. Evaluation of Digital Competence in Teacher Training. TEEM 2017, 2017, 1–5.

- Casillas, S.; Cabezas, M.; Ibarra, M.S.; Rodríguez, G. Evaluation of digital competence from a gender perspective. In Proceedings of the 5th International Conference on Mobile Software Engineering and Systems, ACM, Cádiz, Spain, 18–20 October 2017; p. 25.

- Deshpande, N.; Deshpande, A.; Robin, E. Evaluation of ICT skills of Dental Educators in the Dental Schools of India—A questionnaire study. J. Contemp. Med. Educ. 2016, 4, 149.

- Türel, Y.K.; Özdemir, T.Y.; Varol, F. Teachers’ ICT Skills Scale (TICTS): Reliability and Validity. Cukurova Univ. Fac. Educ. J. 2017, 46, 503–516.

- Instefjord, E.J.; Munthe, E. Educating digitally competent teachers: A study of integration of professional digital competence in teacher education. Teach. Teach. Educ. 2017, 67, 37–45.

- Guzmán-Simón, F.; Jiménez, E.G.; López-Cobo, I. Undergraduate students’ perspectives on digital competence and academic literacy in a Spanish University. Comput. Hum. Behav. 2017, 74, 196–204.

- Alam, K.; Erdiaw-Kwasie, M.O.; Shahiduzzaman; Ryan, B. Assessing regional digital competence: Digital futures and strategic planning implications. J. Rural Stud. 2018, 60, 60–69.

- Belda-Medina, J. ICTs and Project-Based Learning (PBL) in EFL: Pre-service Teachers’ Attitudes and Digital Skills. Int. J. Appl. Linguist. Engl. Lit. 2021, 10, 63–70.

- Moreno-Fernández, O.; Moreno-Crespo, P.; Hunt-Gómez, C. University Students in Southwestern Spain Digital Competences. SHS Web Conf. 2018, 48, 01012.

- Lopes, P.; Costa, P.; Araujo, L.; Ávila, P. Measuring media and information literacy skills: Construction of a test. Communications 2018, 43, 508–534.

- Napal Fraile, M.; Peñalva-Vélez, A.; Mendióroz Lacambra, A. Development of Digital Competence in Secondary Education Teachers’ Training. Educ. Sci. 2018, 8, 104.

- Bartol, T.; Dolničar, D.; Podgornik, B.B.; Rodič, B.; Zoranović, T. A Comparative Study of Information Literacy Skill Performance of Students in Agricultural Sciences. J. Acad. Libr. 2018, 44, 374–382.

- Güneş, E.; Bahçivan, E. A mixed research-based model for pre-service science teachers’ digital literacy: Responses to “which beliefs” and “how and why they interact” questions. Comput. Educ. 2018, 118, 96–106.

- Tondeur, J.; Aesaert, K.; Prestridge, S.; Consuegra, E. A multilevel analysis of what matters in the training of pre-service teacher’s ICT competencies. Comput. Educ. 2018, 122, 32–42.

- Saxena, P.; Gupta, S.K.; Mehrotra, D.; Kamthan, S.; Sabir, H.; Katiyar, P.; Prasad, S.S. Assessment of digital literacy and use of smart phones among Central Indian dental students. J. Oral Biol. Craniofacial Res. 2018, 8, 40–43.

- Techataweewan, W.; Prasertsin, U. Development of digital literacy indicators for Thai undergraduate students using mixed method research. Kasetsart J. Soc. Sci. 2018, 39, 215–221.

- Miranda, P.; Isaias, P.; Pifano, S. Digital Literacy in Higher Education: A Survey on Students’ Self-Assessment; Springer Nature; Springer Science and Business Media LLC: Berlin/Heidelberg, Germany, 2018; pp. 71–87.

- Serafín, Č.; Depešová, J. Understanding Digital Competences of Teachers in Czech Republic. Eur. J. Sci. Theol. 2019, 15, 125–132.

- König, J.; Jäger-Biela, D.J.; Glutsch, N. Adapting to online teaching during COVID-19 school closure: Teacher education and teacher competence effects among early career teachers in Germany. Eur. J. Teach. Educ. 2020, 43, 608–622.

- Mislevy, R.J.; Almond, R.; Lukas, J.F. A brief introduction to evidence-centered design. ETS Res. Rep. Ser. 2003, 2003.

| Author | Year | Country | Year (Data Collection) | Sample Size | Methodology | Competency Model | Assessment Method | Tool | Task/Item Design | Test Time |
|---|---|---|---|---|---|---|---|---|---|---|
| Katz & Macklin [23] | 2007 | USA | 2005 | 4048 | Quantitative | L | TEST | ETS | AUTH | 30 min 2 × 60 min |
| Basque et al. [24] | 2007 | Canada | - | 35 | Quantitative | L | TEST | infoCompétences+ | MC-INT | NR |
| Guo, Dobson & Petrina [25] | 2008 | Canada | 2001–2004 | 2000 | Quantitative | F (ISTE) | Survey | Survey tool | MC | NR |
| Peeraer & Van Petegem [26] | 2011 | Vietnam | 2008–2009 | 783 | Quantitative | L | Questionnaire | - | MC | NR |
| Soh, Osman & Arsad [27] | 2012 | Malaysia | - | 760 | Qualitative | L (M-21CSI) | Interview + validity by factor analysis | M-21CSI | INT | NR |
| Wong & Cheung [28] | 2012 | China | 2011 | 640 | Quantitative | - | Summative assessment | - | INT | NR |
| Evangelinos & Holley [29] | 2014 | UK | - | 102 | Quantitative | F (DigComp) | Test (iterative Delphi-type survey) | SurveyMonkey | MC | NR |
| Van Laar et al. [9] | 2014 | UK, Netherlands | 2013–2014 | 630 | Quantitative | F (Internet Skills) | Questionnaire | - | MC-INT | NR |
| Põldoja et al. [16] | 2014 | Estonia | - | 50 | Mixed | F (ISTE) | TEST | DigiMina | MC-INT | NR |
| Costa et al. [30] | 2015 | Portugal | 2015 | 106 | Mixed | L | Survey/Interview | - | MC-INT | NR |
| Maderick et al. [31] | 2015 | USA | 2013 | 174 | Quantitative | L | Survey | - | MC | NR |
| Pérez-Escoda & Rodríguez-Conde [22] | 2015 | USA, Spain | - | 35 000 | Quantitative | F (The framework of 21st century skills) | TEST | ATCS21S | AUTH | 30 min + 10 min + 10 min |
| Kiss & Torres Gastelú [21] | 2015 | Mexico, Hungary | - | 720 | Quantitative | L | Questionnaire | - | MC | NR |
| Pinto, Fernandez-Pascual & Puertas [18] | 2016 | Spain | 2013 | 195 | Quantitative | L | Questionnaire | IL-HUMASS | MC-INT | |
| Tolic & Pejakovic [32] | 2016 | Croatia | 2016 | 1800 | Quantitative | L | Questionnaire | - | MC | NR |
| Heerwegh et al. [33] | 2016 | Belgium | - | 297 | Quantitative | L | Questionnaire | Survey tool | MC | NR |
| Cazco et al. [34] | 2016 | Ecuador | 2016 | 178 | Quantitative | L | Questionnaire | - | MC-INT | NR |
| Almerich et al. [35] | 2016 | Spain | - | 1095 | Quantitative | L | Questionnaire | - | MC | NR |
| Turiman, Osman & Wook [36] | 2017 | Malaysia | - | 240 | Quantitative | L (M-21CSI) | Survey | M-21CSI | MC-INT | NR |
| Corona et al. [37] | 2017 | Spain | - | 316 | Quantitative | F (DigComp) | TEST | Google Forms | MC-INT | NR |
| Casillas et al. [38] | 2017 | Spain | - | 580 | Quantitative | L | Questionnaire | - | MC-INT | |
| Vázquez-Cano et al. [17] | 2017 | Spain | 2014–2016 | 923 | Quantitative | L | TEST | COBADI | AUTH | NR |
| Deshpande et al. [39] | 2017 | India | 2016 | 320 | Quantitative | L | Questionnaire | - | MC | NR |
| Türel et al. [40] | 2017 | Turkey | 2012–2013 | 304 | Quantitative | L | Questionnaire | - | MC | NR |
| Instefjord & Munthe [41] | 2017 | Norway | 2014 | 1381 | Quantitative | F (DigComp) | Questionnaire | - | MC | NR |
| Guzmán-Simón et al. [42] | 2017 | Spain | 2012–2014 | 786 | Quantitative | L | Survey | SurveyMonkey | MC | NR |
| Alam et al. [43] | 2018 | Australia | 2017 | 95 | Qualitative | L | Focus-group interviews (SWOT analysis) | - | INT | 50 min |
| Belda-Medina [44] | 2021 | Spain | | 188 | Quantitative | L | TEST | - | MC-INT | NR |
| Moreno-Fernández et al. [45] | 2018 | Spain | - | - | Quantitative | L | TEST | COBADI | MC | NR |
| Soomro et al. [19] | 2018 | Pakistan | - | 322 | Quantitative | L | Survey | FICTA scale | MC-INT | NR |
| Lopes et al. [46] | 2018 | Portugal | 2011–2012 | 500 | Quantitative | L | TEST (item response theory) | Media and Information Literacy Test | AUTH | NR |
| Napal Fraile et al. [47] | 2018 | Spain | 2015–2018 | 44 | Qualitative | F (DigComp) | TEST (S-A) | Physical test | INT | 45 min |
| Bartol et al. [48] | 2018 | Serbia | - | 310 | Quantitative | L | ILT (information literacy) test | ILT | AUTH | 45 min |
| Güneş & Bahçivan [49] | 2018 | Turkey | - | 979 | Quantitative | L | Questionnaire | - | MC-INT | NR |
| Tondeur et al. [50] | 2018 | Belgium | - | 931 | Quantitative | L | Questionnaire | - | MC | NR |
| Saxena et al. [51] | 2018 | India | 2016 | 260 | Quantitative | L | Survey | - | MC-INT | NR |
| Techataweewan & Prasertsin [52] | 2018 | Thailand | 2015 | 1183 | Quantitative | L | Questionnaire | - | MC-INT | NR |
| Blayone et al. [20] | 2018 | Georgia, Ukraine | 2017 | 279 | Quantitative | F (GRCU Digital Competency Framework) | TEST | DCP-Digital Competency Profiler | AUTH | NR |
| Miranda, Isaias & Pifano [53] | 2018 | Portugal | - | 177 | Quantitative | L | Questionnaire | - | MC-INT | NR |
| Serafín & Depešová [54] | 2019 | Slovak Republic | 2016 | 351 | Quantitative | L | Questionnaire | - | MC | NR |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sillat, L.H.; Tammets, K.; Laanpere, M. Digital Competence Assessment Methods in Higher Education: A Systematic Literature Review. Educ. Sci. 2021, 11, 402. https://doi.org/10.3390/educsci11080402
