Design and Initial Validation of a Questionnaire on Prospective Teachers’ Perceptions of the Landscape

Abstract

1. Introduction

2. Materials and Methods

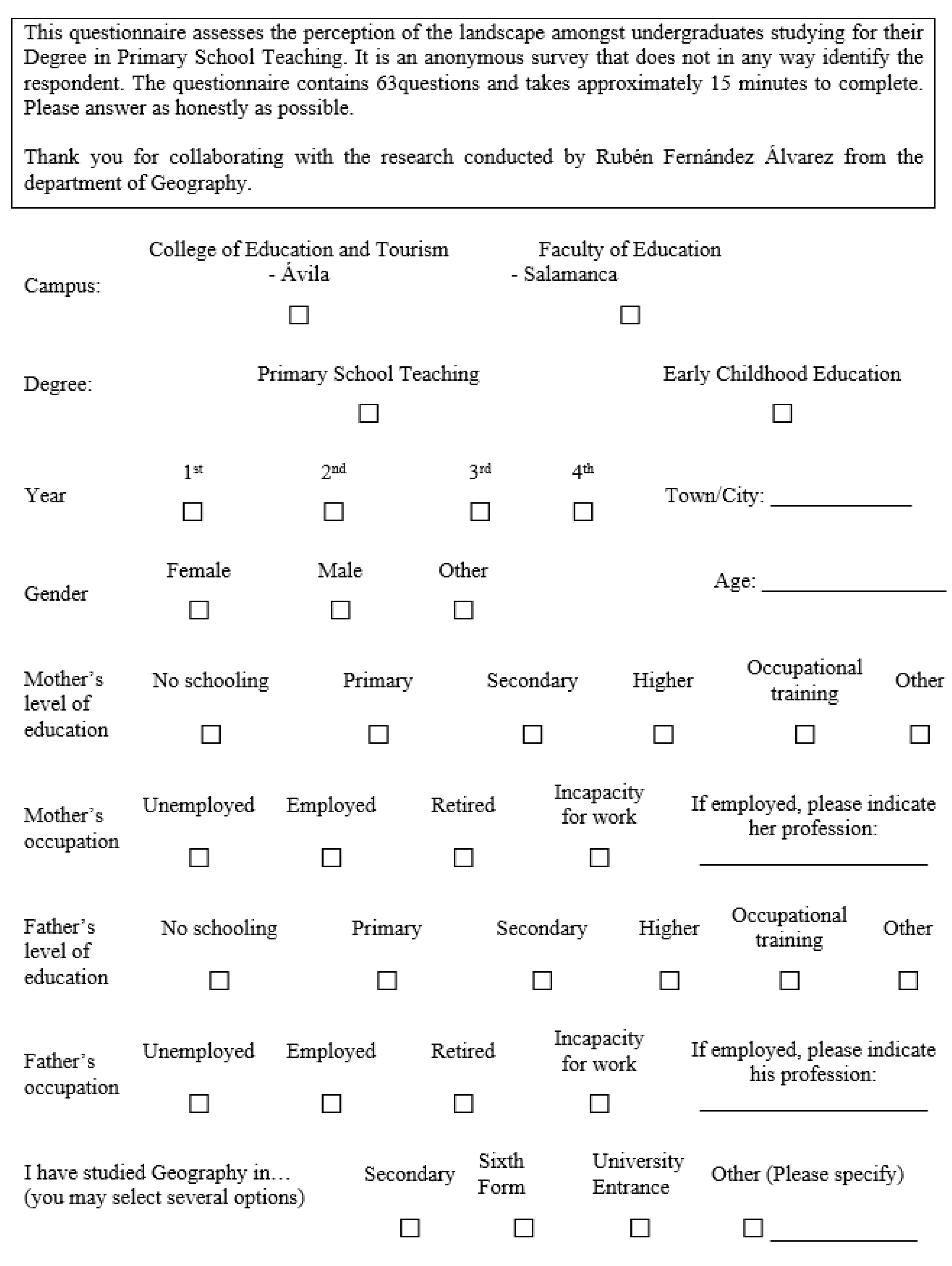

2.1. Participants: Panel of Experts and Sample

2.2. Instruments

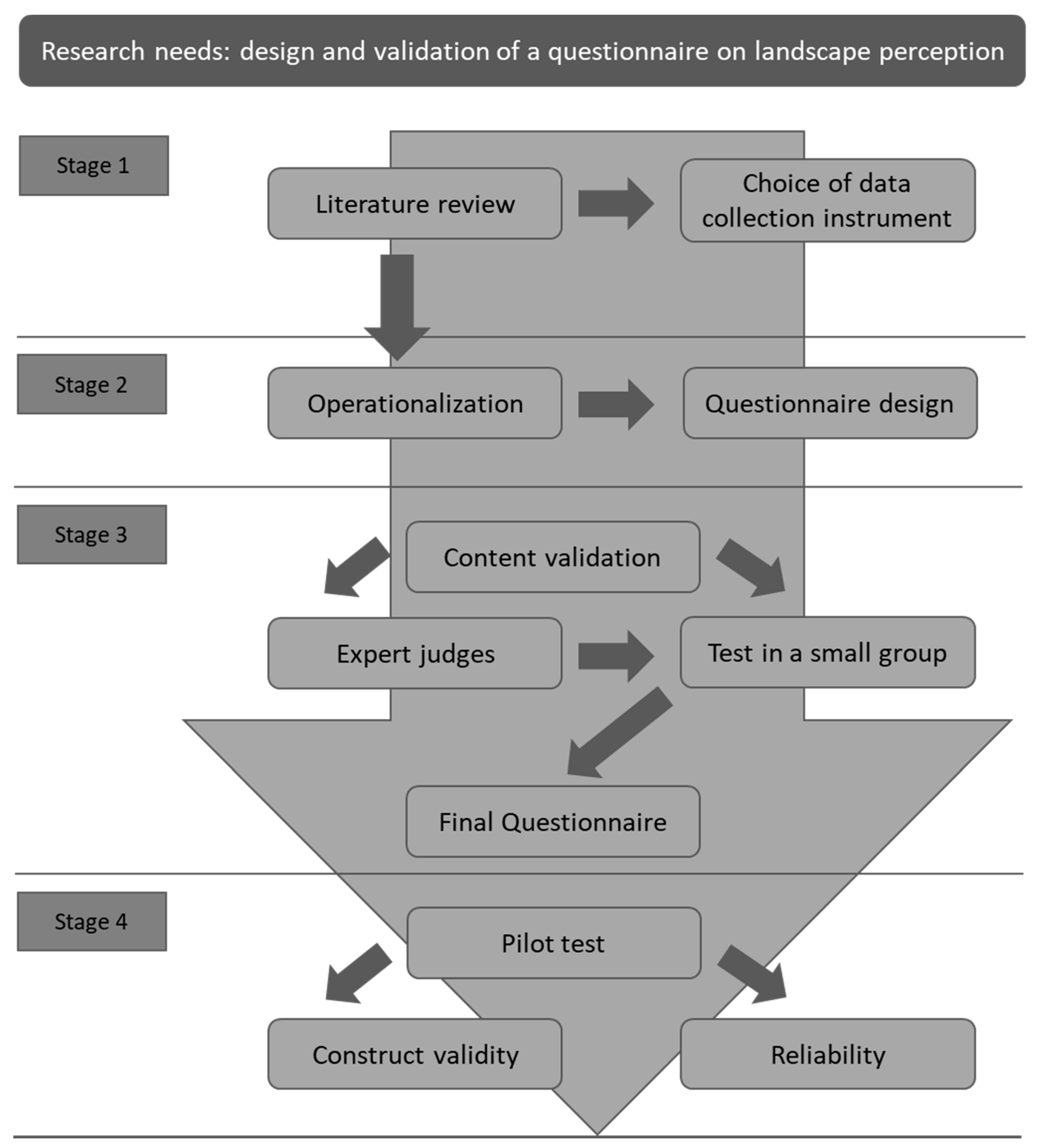

2.3. Procedure

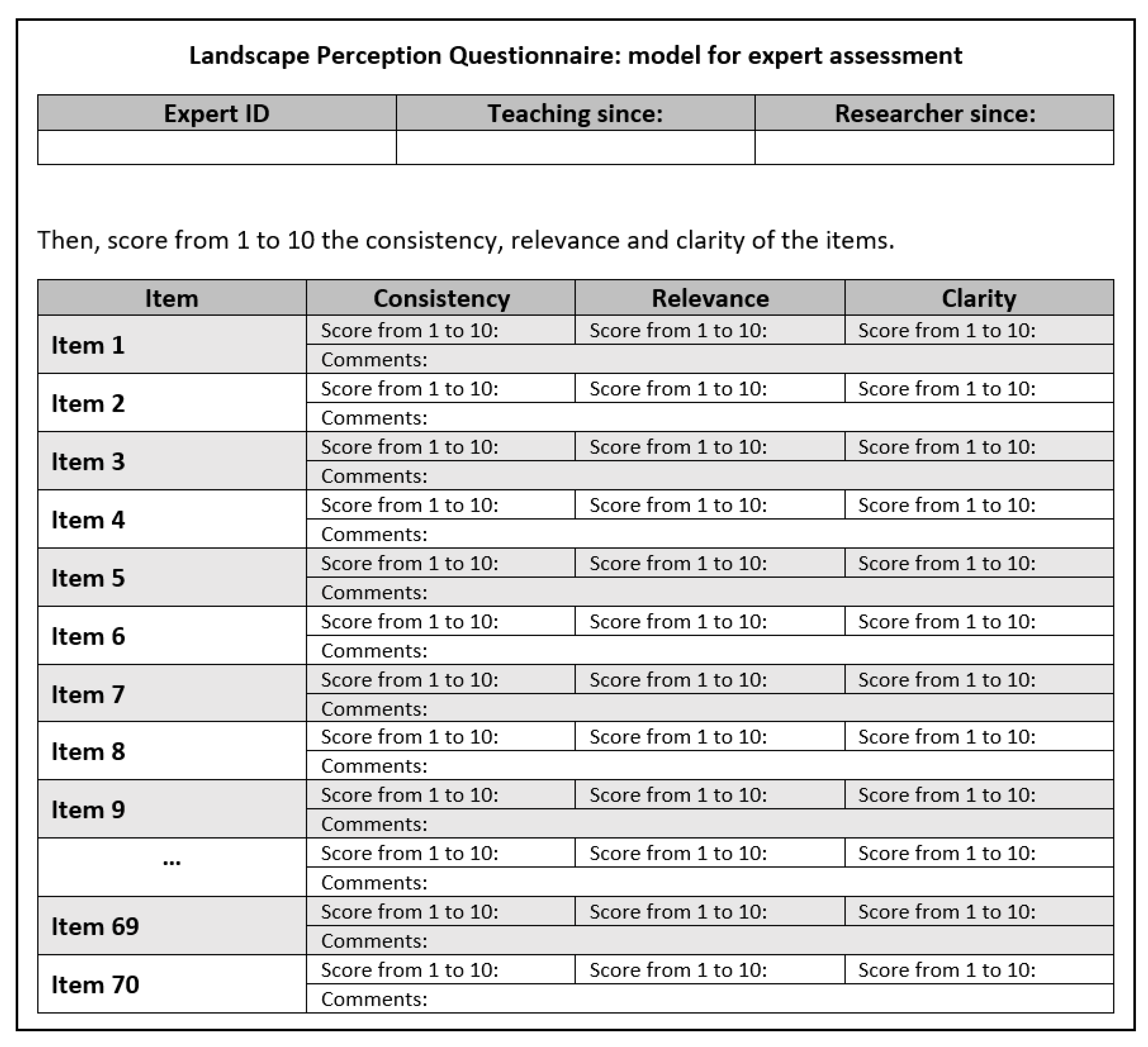

2.3.1. Design and Content Validation

2.3.2. Construct Validity

3. Results

3.1. Content Validity and Reliability

3.2. Construct Validity

3.3. Final Questionnaire on Landscape Perception: Cuestionario Sobre Percepción del Paisaje (CPP)

4. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Items | Mean rating | Aiken’s V | CI (95%) | Items | Mean rating | Aiken’s V | CI (95%) |
|---|---|---|---|---|---|---|---|
| Item 1 | 8.4 | 0.74 | 0.59–0.85 | Item 36 | 8.8 | 0.78 | 0.63–0.88 |
| Item 2 | 8.4 | 0.74 | 0.59–0.85 | Item 37 | 9.0 | 0.80 | 0.65–0.89 |
| Item 3 | 5.0 | 0.40 | 0.25–0.55 | Item 38 | 9.4 | 0.84 | 0.70–0.92 |
| Item 4 | 9.2 | 0.82 | 0.67–0.91 | Item 39 | 9.0 | 0.80 | 0.65–0.89 |
| Item 5 | 9.2 | 0.82 | 0.67–0.91 | Item 40 | 5.6 | 0.46 | 0.32–0.61 |
| Item 6 | 9.8 | 0.88 | 0.74–0.95 | Item 41 | 9.8 | 0.88 | 0.74–0.95 |
| Item 7 | 9.0 | 0.80 | 0.65–0.89 | Item 42 | 9.4 | 0.84 | 0.70–0.92 |
| Item 8 | 8.8 | 0.78 | 0.63–0.88 | Item 43 | 9.2 | 0.82 | 0.67–0.91 |
| Item 9 | 9.4 | 0.84 | 0.70–0.92 | Item 44 | 6.2 | 0.52 | 0.37–0.67 |
| Item 10 | 9.2 | 0.82 | 0.67–0.91 | Item 45 | 9.0 | 0.80 | 0.65–0.89 |
| Item 11 | 9.8 | 0.88 | 0.74–0.95 | Item 46 | 9.4 | 0.84 | 0.70–0.92 |
| Item 12 | 8.8 | 0.78 | 0.63–0.88 | Item 47 | 6.4 | 0.54 | 0.39–0.68 |
| Item 13 | 9.6 | 0.86 | 0.72–0.94 | Item 48 | 9.0 | 0.80 | 0.65–0.89 |
| Item 14 | 10.0 | 0.90 | 0.77–0.96 | Item 49 | 9.8 | 0.88 | 0.74–0.95 |
| Item 15 | 10.0 | 0.90 | 0.77–0.96 | Item 50 | 9.4 | 0.84 | 0.70–0.92 |
| Item 16 | 9.8 | 0.88 | 0.74–0.95 | Item 51 | 9.0 | 0.80 | 0.65–0.89 |
| Item 17 | 10.0 | 0.90 | 0.77–0.96 | Item 52 | 8.6 | 0.76 | 0.61–0.87 |
| Item 18 | 9.2 | 0.82 | 0.67–0.91 | Item 53 | 3.0 | 0.20 | 0.10–0.35 |
| Item 19 | 4.0 | 0.30 | 0.18–0.45 | Item 54 | 8.6 | 0.76 | 0.61–0.87 |
| Item 20 | 8.8 | 0.78 | 0.63–0.88 | Item 55 | 9.4 | 0.84 | 0.70–0.92 |
| Item 21 | 5.8 | 0.48 | 0.33–0.63 | Item 56 | 9.0 | 0.80 | 0.65–0.89 |
| Item 22 | 9.0 | 0.80 | 0.65–0.89 | Item 57 | 9.4 | 0.84 | 0.70–0.92 |
| Item 23 | 8.2 | 0.72 | 0.57–0.84 | Item 58 | 9.2 | 0.82 | 0.67–0.91 |
| Item 24 | 9.0 | 0.80 | 0.65–0.89 | Item 59 | 9.4 | 0.84 | 0.70–0.92 |
| Item 25 | 9.6 | 0.86 | 0.72–0.94 | Item 60 | 9.4 | 0.84 | 0.70–0.92 |
| Item 26 | 8.2 | 0.72 | 0.57–0.84 | Item 61 | 8.8 | 0.78 | 0.63–0.88 |
| Item 27 | 9.4 | 0.84 | 0.70–0.92 | Item 62 | 8.6 | 0.76 | 0.61–0.87 |
| Item 28 | 9.6 | 0.86 | 0.72–0.94 | Item 63 | 10.0 | 0.90 | 0.77–0.96 |
| Item 29 | 9.0 | 0.80 | 0.65–0.89 | Item 64 | 9.2 | 0.82 | 0.67–0.91 |
| Item 30 | 4.8 | 0.38 | 0.25–0.53 | Item 65 | 9.4 | 0.84 | 0.70–0.92 |
| Item 31 | 9.8 | 0.88 | 0.74–0.95 | Item 66 | 9.2 | 0.82 | 0.67–0.91 |
| Item 32 | 8.8 | 0.78 | 0.63–0.88 | Item 67 | 9.4 | 0.84 | 0.70–0.92 |
| Item 33 | 9.8 | 0.88 | 0.74–0.95 | Item 68 | 9.8 | 0.88 | 0.74–0.95 |
| Item 34 | 9.0 | 0.80 | 0.65–0.89 | Item 69 | 9.4 | 0.84 | 0.70–0.92 |
| Item 35 | 5.8 | 0.48 | 0.33–0.63 | Item 70 | 9.4 | 0.84 | 0.70–0.92 |
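The values in the table above can be checked with a short calculation. The sketch below is a minimal illustration, not the authors' procedure. It reads the first numeric column for each item as the judges' mean rating, and it assumes a lowest rating of 1 and a rating span of 10, because `(mean - 1) / 10` reproduces the tabulated Aiken's V. The interval is a Wilson-type score interval of the kind commonly paired with Aiken's V. The product `nk = 40` (number of judges times rating span) is a back-solved assumption that reproduces the tabulated 95% limits; it is not stated in this section. The names `aiken_v` and `score_interval` are hypothetical.

```python
import math


def aiken_v(mean_rating, lowest=1.0, span=10.0):
    """Aiken's V: (mean rating - lowest possible rating) / rating span.

    lowest=1 and span=10 are assumptions inferred from the table.
    """
    return (mean_rating - lowest) / span


def score_interval(v, nk, z=1.96):
    """Wilson-type score interval for Aiken's V.

    nk is the number of judges multiplied by the rating span
    (assumed to be 40 here, which matches the tabulated limits).
    """
    centre = 2 * nk * v + z ** 2
    half_width = z * math.sqrt(4 * nk * v * (1 - v) + z ** 2)
    denominator = 2 * (nk + z ** 2)
    return (centre - half_width) / denominator, (centre + half_width) / denominator


# Item 6 in the table: mean rating 9.8
v = aiken_v(9.8)
lower, upper = score_interval(v, nk=40)
print(round(v, 2), round(lower, 2), round(upper, 2))  # 0.88 0.74 0.95
```

Because the interval depends only on V and `nk`, every item with the same V must have the same limits. That gives a quick consistency check on any row of the table.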

| KMO and Bartlett Tests | | |
|---|---|---|
| Kaiser-Meyer-Olkin (KMO) measure of sampling adequacy | | 0.814 |
| Bartlett’s test of sphericity | Approx. chi-squared | 1719.359 |
| | df | 190 |
| | Significance | < 0.001 |

Total Variance Explained

| Component | Initial eigenvalues: total | Initial eigenvalues: % of variance | Initial eigenvalues: cumulative % | Extraction sums of squared loadings: total | Extraction: % of variance | Extraction: cumulative % | Rotation sums of squared loadings: total | Rotation: % of variance | Rotation: cumulative % |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 3.915 | 19.573 | 19.573 | 3.915 | 19.573 | 19.573 | 2.450 | 12.248 | 12.248 |
| 2 | 1.613 | 8.065 | 27.638 | 1.613 | 8.065 | 27.638 | 1.996 | 9.980 | 22.228 |
| 3 | 1.377 | 6.886 | 34.524 | 1.377 | 6.886 | 34.524 | 1.595 | 7.977 | 30.205 |
| 4 | 1.171 | 5.855 | 40.379 | 1.171 | 5.855 | 40.379 | 1.436 | 7.179 | 37.383 |
| 5 | 1.090 | 5.449 | 45.828 | 1.090 | 5.449 | 45.828 | 1.389 | 6.946 | 44.330 |
| 6 | 1.001 | 5.003 | 50.830 | 1.001 | 5.003 | 50.830 | 1.300 | 6.500 | 50.830 |
| 7 | 0.962 | 4.810 | 55.640 | | | | | | |
| 8 | 0.916 | 4.578 | 60.218 | | | | | | |
| 9 | 0.906 | 4.530 | 64.748 | | | | | | |
| 10 | 0.816 | 4.082 | 68.830 | | | | | | |
| 11 | 0.771 | 3.856 | 72.686 | | | | | | |
| 12 | 0.737 | 3.684 | 76.370 | | | | | | |
| 13 | 0.720 | 3.598 | 79.968 | | | | | | |
| 14 | 0.682 | 3.409 | 83.377 | | | | | | |
| 15 | 0.645 | 3.226 | 86.603 | | | | | | |
| 16 | 0.613 | 3.067 | 89.670 | | | | | | |
| 17 | 0.570 | 2.850 | 92.520 | | | | | | |
| 18 | 0.542 | 2.711 | 95.230 | | | | | | |
| 19 | 0.497 | 2.485 | 97.715 | | | | | | |
| 20 | 0.457 | 2.285 | 100.000 | | | | | | |

Extraction method: principal component analysis.
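The arithmetic behind the variance table can be reproduced in a few lines. The sketch below is only an illustration built from the tabulated eigenvalues. It relies on the standard property that, for a principal component analysis of a correlation matrix, the total variance equals the number of items (20 here). Retaining components with eigenvalues above 1 is consistent with the six components shown, although this section does not state the retention rule, so treat that as an assumption. The last line checks the Bartlett degrees of freedom, p(p - 1)/2, against the 190 reported in the KMO and Bartlett table.

```python
# Initial eigenvalues copied from the "Total Variance Explained" table
eigenvalues = [
    3.915, 1.613, 1.377, 1.171, 1.090, 1.001, 0.962, 0.916, 0.906, 0.816,
    0.771, 0.737, 0.720, 0.682, 0.645, 0.613, 0.570, 0.542, 0.497, 0.457,
]

n_items = len(eigenvalues)  # 20 items entered the analysis

# Share of total variance per component (total variance = number of items)
pct_variance = [100 * ev / n_items for ev in eigenvalues]

# Assumed retention rule: keep components with eigenvalue > 1
retained = [ev for ev in eigenvalues if ev > 1.0]
cumulative_retained = sum(pct_variance[: len(retained)])

# Degrees of freedom for Bartlett's test of sphericity: p(p - 1) / 2
bartlett_df = n_items * (n_items - 1) // 2

print(len(retained), round(cumulative_retained, 1), bartlett_df)  # 6 50.8 190
```

Small differences in the third decimal place against the table (for example 19.575 versus 19.573 for the first component) come from the eigenvalues being rounded to three decimals.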

Rotated Component Matrix (six components)

| Item | Loading |
|---|---|
| Item 57 | 0.767 |
| Item 58 | 0.630 |
| Item 59 | 0.522 |
| Item 51 | 0.480 |
| Item 36 | 0.665 |
| Item 55 | 0.587 |
| Item 48 | 0.517 |
| Item 62 | 0.490 |
| Item 29 | 0.442 |
| Item 38 | 0.713 |
| Item 52 | 0.693 |
| Item 8 | 0.403 |
| Item 17 | 0.846 |
| Item 31 | 0.450 |
| Item 18 | 0.439 |
| Item 1 | 0.804 |
| Item 2 | 0.802 |
| Item 4 | 0.716 |
| Item 41 | 0.463 |
| Item 61 | −0.447 |

| Item Number in the Questionnaire Prior to Validation | Item Number in the Final Questionnaire | Variable | Response Format |
|---|---|---|---|
| Item 0 | Item 1 | Are you familiar with the European Landscape Convention? | Yes/No |
| Item 1 | Item 2 | The landscape is any part of the territory that people perceive | Likert, 1 to 5 |
| Item 2 | Item 3 | The landscape is everything that can be seen from a given place | Likert, 1 to 5 |
| Item 29 | Item 4 | The landscape needs to be taught through fieldwork or day trips | Likert, 1 to 5 |
| Item 36 | Item 5 | The landscape should be taught as a mainstream subject in primary education | Likert, 1 to 5 |
| Item 48 | Item 6 | The landscape is a dynamic environment | Likert, 1 to 5 |
| Item 62 | Item 7 | The landscape is part of the syllabus in primary education | Likert, 1 to 5 |
| Item 38 | Item 8 | The landscape should be taught through a textbook | Likert, 1 to 5 |
| Item 52 | Item 9 | The cultural landscape is made up of human features | Likert, 1 to 5 |
| Item 8 | Item 10 | The cultural landscape is more important than the natural landscape | Likert, 1 to 5 |
| Item 17 | Item 11 | The social context we live in conditions the way we study the landscape | Likert, 1 to 5 |
| Item 31 | Item 12 | Pupils should know how to identify anthropic features in order to interpret the landscape | Likert, 1 to 5 |
| Item 18 | Item 13 | A combination of photographs and fieldwork is useful for studying the landscape’s social features | Likert, 1 to 5 |
| Item 4 | Item 14 | Contemplating any kind of landscape generates positive feelings | Likert, 1 to 5 |
| Item 41 | Item 15 | Simulation techniques enable me to understand how the landscape and the feelings it conveys change | Likert, 1 to 5 |
| Item 61 | Item 16 | There is a need to further our understanding of the landscape because it is a feature that arouses positive feelings | Likert, 1 to 5 |
| Item 57 | Item 17 | The city helps me to understand the urban landscape | Likert, 1 to 5 |
| Item 58 | Item 18 | The landscape helps me to teach about rural life | Likert, 1 to 5 |
| Item 59 | Item 19 | The main reason for studying the landscape is to provide pupils with tools for understanding geography | Likert, 1 to 5 |
| Item 51 | Item 20 | Augmented reality is very useful for teaching about the landscape | Likert, 1 to 5 |
| Item 55 | Item 21 | The landscape is a didactic instrument | Likert, 1 to 5 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Fernández Álvarez, R.; Fernández, J. Design and Initial Validation of a Questionnaire on Prospective Teachers’ Perceptions of the Landscape. Educ. Sci. 2021, 11, 112. https://doi.org/10.3390/educsci11030112
