Assessing and Benchmarking Learning Outcomes of Robotics-Enabled STEM Education

Abstract

1. Introduction

2. Analysis of Related Works

3. Research Questions

4. Materials and Resources

5. Research Methods and Procedures

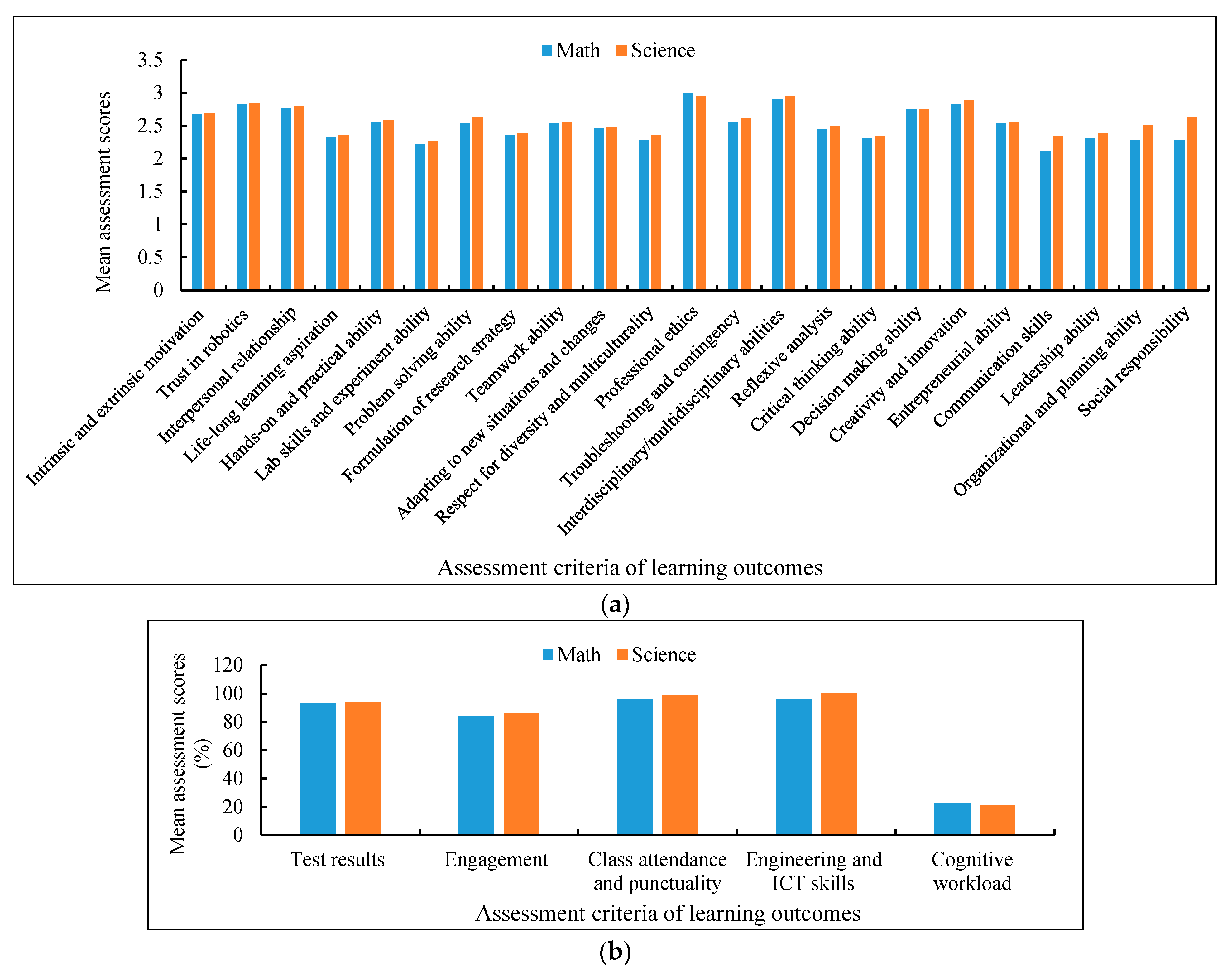

6. Research Results and Analyses

6.1. Determining the Assessment Criteria and Metrics

6.2. Validation of the Learning Outcome Assessment Methods and Metrics

7. Discussion

8. Conclusions and Future Works

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Ethics Statements

Appendix A

Appendix B

| Assessment Criteria (Learning Outcomes) | Assessment/Measurement Metrics/Scales and Methods | Proposed Format to Express the Measurement |

|---|---|---|

| Test results | Test scores on selected math topics can be used to assess this criterion. Quizzes/tests can be arranged by the concerned teachers. In addition, the Dimensions of Success (DoS) observation tool can be used to assess math knowledge and practices [31]. | Percentage (%) of test scores obtained |

| Computational thinking ability [2] | Computational thinking can be assessed using problem-solving scenarios custom-developed and implemented by the teachers. For example, a scenario can be developed in which students need to solve a particular problem that reflects their computational thinking abilities. The teachers can observe the students and assess the computational thinking ability of each student separately or of the team as a whole. The teachers can use a 7-point Likert scale to rate the computational thinking ability subjectively based on observations (see note 1). In addition, computational thinking can be assessed taking inspiration from the methods proposed by Kong [32]. | Subjective rating score (see note 2) |

| Intrinsic and extrinsic motivation | Intrinsic and extrinsic motivation, expressed through students’ interest in math and their awareness of math-related careers, can be assessed directly using a subjective rating scale (e.g., a 7-point Likert scale) based on observations and interviews with the participating students administered by the concerned teachers [4]. In addition, the Intrinsic Motivation Instrument (i.e., Self-Determination [33]) may be used to assess the motivation levels of the students for their career path in math. The STEM Career Awareness tool may be used to assess their math-related career awareness levels [34]. The PEAR Institute’s Common Instrument Suite Student (CIS-S) survey may be used to assess students’ math-related attitudes in terms of math engagement, identity, career interest, and career knowledge and activity participation [35]. The DoS can be used to assess math activity engagement, math practices (inquiry and reflection), and youth development in math [31]. | Subjective rating score |

| Trust in robotics | Students’ trust in robotics as a pedagogical tool, expressed through their willingness to rely on or believe in the math-related solutions provided by the robotic system, can be assessed directly by the concerned teachers using a subjective rating scale (e.g., a 7-point Likert scale) based on observations and interviews with the participating students [5]. See note 3 for more. | Subjective rating score |

| Engagement in class activities | The work sampling method may be used to assess students’ engagement in their robotics-enabled lessons [36]. In this method, the teachers may observe each student separately or the team as a whole at a specified time interval (e.g., every 5 min) during the class, and mark whether they are engaged in their lessons or not. At the end of the observations, the percentage of total class time the students are engaged (or not engaged) can be determined. This is a probabilistic but quantitative assessment method. Student engagement ($E$) may be assessed using work sampling as $E = (n_e / N) \times 100\%$, where $N$ is the total number of observations in a class and $n_e$ is the total number of observations in that class when the student(s) was/were found engaged. | Percentage (%) of total class time students are engaged in the lesson |

| Class attendance and punctuality | Attendance records can be used to assess each student’s attendance and punctuality (e.g., timely or late attendance) in the class. The percentage (%) of attendance in a specific time period can be calculated. In addition, the percentage of timely or late attendance in a specific time period may also be calculated. The objective is to check whether student attendance in regular classes increases after students participate in, or are inspired by, the robotics-enabled lessons. | Percentage (%) of attendance |

| Interpersonal relationship | The teachers can observe the students for their robotics-enabled lessons, identify a few cues related to their interpersonal relationships (e.g., how a student addresses his/her team members, reacts to his/her team members’ opinions, etc.), and assess each student or the team as a whole using a 7-point Likert scale for their interpersonal relationships. Alternatively, the assessment may be recorded as satisfactory or unsatisfactory. In addition, the CIS-S survey can be used to assess the 21st century skills or the socio-emotional learning (SEL) of the students, e.g., relationships with peer students and teachers [35]. | Subjective rating score |

| Engineering and ICT skills | Tests/quizzes administered by the teachers on students’ engineering and ICT skills can be used to assess this criterion. | Percentage (%) of test scores obtained |

| Life-long learning aspiration | The teachers can observe the students for their robotics-enabled lessons, interview each student about their future plans and goals for math learning and applications, and assess each student or the team as a whole using a 7-point Likert scale for their life-long learning aspiration. | Subjective rating score |

| Hands-on and practical ability | Observations administered by the teachers on students’ hands-on practical works during a robotics-enabled lesson can be used to assess this criterion. The teachers can observe the class activities performed by the students and rate the hands-on and practical ability of each student or of the team using a 7-point Likert scale. | Subjective rating score |

| Lab skills and experiment ability | Observations administered by the teachers on students’ lab skills and experiment ability during an experiment conducted by the students as a part of a robotics-enabled lesson can be used to assess this criterion. The teachers can observe the class activities and rate the lab skills and experiment ability of each student or of the team using a 7-point Likert scale. | Subjective rating score |

| Problem solving ability | Observations administered by the teachers on students’ problem solving ability as a part of a robotics-enabled lesson can be used to assess this criterion. Suppose a robotics-enabled lesson includes a problem related to a real-world situation that the students need to solve using math. The students should identify the problem, formulate it, and determine the strategies to solve it using math knowledge and skills. The teachers can observe the ability of each student or of the team in these efforts and rate their abilities using a 7-point Likert scale. The CIS-S survey can also be used to assess the 21st century skills or the socio-emotional learning (SEL) of students, e.g., problem solving/perseverance [35]. | Subjective rating score |

| Formulation of research strategy | Observations administered by the teachers on students’ formulation of research strategy during a robotics-enabled lesson can be used to assess this criterion. Suppose a robotics-enabled lesson includes a problem that the students need to solve using math. The students should identify the problem, formulate the problem, identify the objective, determine hypotheses and research questions, determine the experimental methods and procedures, and analyze the results with future directions. The teachers can observe the ability of each student or of the team in these efforts and rate their abilities using a 7-point Likert scale. | Subjective rating score |

| Teamwork ability | The youth teamwork skills survey can be used to assess the teamwork ability [37]. In addition, the teachers can observe the students for their robotics-enabled lessons, identify a few cues related to their teamwork ability (e.g., how the students split the activities of the lesson and assign them to different team members), and assess each student or the team as a whole using a 7-point Likert scale for their teamwork ability. | Subjective rating score |

| Cognitive workload in learning | The NASA TLX can be administered by the teachers to the participating students at the end of each robotics-enabled lesson [28]. Note that a lower cognitive workload is better [29]. | Percentage (%) of total cognitive workload |

| Adapting to new situations and changes | The teachers can observe the students for their robotics-enabled lessons, identify a few cues relevant to adapting to new situations and changes (e.g., whether a student can adjust if he/she is transferred to a new team or if a sudden change occurs in the lesson activities), and assess each student or the team as a whole using a 7-point Likert scale for their ability to adapt to new situations and changes. | Subjective rating score |

| Respect for diversity and multiculturality | The teachers can observe the students for their robotics-enabled lessons, identify a few cues relevant to respect for diversity and multiculturality (e.g., whether a student can work well with another team member who differs in nationality, color, ethnicity, food habits, etc.), and assess each student or the team as a whole using a 7-point Likert scale for their respect for diversity and multiculturality. | Subjective rating score |

| Professional ethics | The teachers can observe the students for their robotics-enabled lessons, identify a few ethical cues relevant to the class events (e.g., whether a student captures and records true data and does not manipulate the data) and assess each student or the team as a whole using a 7-point Likert scale for their professional ethics. | Subjective rating score |

| Troubleshooting and contingency | The teachers can observe the students for their robotics-enabled lessons, identify a few cues related to troubleshooting and contingency (e.g., how a student or a team troubleshoots in case the robotics-based experimental system does not work temporarily), and assess each student or the team as a whole using a 7-point Likert scale for their ability for troubleshooting and contingency. | Subjective rating score |

| Interdisciplinary/multidisciplinary abilities | The teachers can observe the students for their robotics-enabled lessons and assess each student or the team as a whole using a 7-point Likert scale for their ability to learn and use interdisciplinary and multidisciplinary knowledge and skills (e.g., math content knowledge combined with engineering and computer programming skills to solve a math problem). | Subjective rating score |

| Reflexive analysis | The teachers can observe the students for their robotics-enabled lessons, interview them, and assess each student or the team as a whole using a 7-point Likert scale for their ability to summarize what they learn during the lesson, identify their limitations, and develop action plans for improvements in the next lessons. | Subjective rating score |

| Critical thinking ability | The teachers can observe the students for their robotics-enabled lessons, identify a few cues related to their critical thinking ability (e.g., how the students analyze and compare different alternative possibilities of experimental procedures based on prior findings), and assess each student or the team as a whole using a 7-point Likert scale for their critical thinking ability. In addition, the CIS-S survey can be used to assess the 21st century skills or the socio-emotional learning (SEL) of the students, e.g., critical thinking [35]. | Subjective rating score |

| Decision making ability | The teachers can observe the students for their robotics-enabled lessons, identify a few cues related to their decision-making ability (e.g., how the students make a decision based on the experimental findings, and how they decide the next experiments based on prior findings), and assess each student or the team as a whole using a 7-point Likert scale for their decision-making ability. In addition, the DORA tool can be used to assess reasoning and decision-making abilities of the students [38]. | Subjective rating score |

| Creativity and innovation | The teachers can observe the students for their robotics-enabled lessons, identify a few cues related to their creativity and innovation (e.g., how the students propose a new configuration of the robotic device to solve a particular math problem), and assess each student or the team as a whole using a 7-point Likert scale for their creativity and innovation. In addition, the creativity and innovation can be assessed by the approach proposed by Barbot, Besançon, and Lubart [39]. | Subjective rating score |

| Entrepreneurial ability | The students build a robotic device and verify its suitability to learn math and solve real-world problems using math. Such building practices may inculcate entrepreneurial aspiration in the students, which may direct them towards starting a new business initiative to market their ideas and develop new business ventures in the future. The teachers can observe the students for their robotics-enabled lessons, interview each student about their business plans, if any, and assess each student or the team as a whole using a 7-point Likert scale for their entrepreneurial aspiration or ability. In addition, the entrepreneurial ability of the students can be assessed taking inspiration from the methods proposed by Bejinaru [40], and Coduras, Alvarez and Ruiz [41]. | Subjective rating score |

| Communication skills | The teachers can observe the students for their robotics-enabled lessons, identify a few cues related to their communication skills (e.g., how the students communicate the findings of the experiments during their robotics-enabled lessons to their team leader, teachers and each team member), and assess each student or the team as a whole using a 7-point Likert scale for their communication skills. In addition, the CIS-S survey can be used to assess the 21st century skills or the socio-emotional learning (SEL) of the students, e.g., communication skills [35]. | Subjective rating score |

| Leadership ability | Based on specific tasks and scenarios during students’ engagement with the robotics-enabled lesson, the surveys proposed by Mazzetto [42] and Chapman and Giri [43] can be used to assess leadership skills of the students. Alternatively, the teachers can observe the students for their robotics-enabled lessons, identify a few cues related to their leadership ability (e.g., how the students decide their leader for a lesson, how the leader directs the team members towards the goal of the lesson, and how the student members follow the directions of the leader), and assess each student or the team as a whole using a 7-point Likert scale for their leadership ability. | Subjective rating score |

| Organizational and planning ability | The teachers can observe the students for their robotics-enabled lessons, identify a few issues related to organization and planning of the robotics-enabled lesson (e.g., how the students split the responsibility of each team member and determine and ensure the required resources for each member in each step/phase of the entire lesson), and assess each student or the team as a whole using a 7-point Likert scale for their organizational and planning ability. | Subjective rating score |

| Social responsibility | The teachers can observe the students for their robotics-enabled lessons, identify a few social cues relevant to the class events (e.g., whether a student wishes another student well on his/her birthday if it falls on the day of a robotics-enabled lesson, or how a student feels if another student of the team is known to be sick), and assess each student or the team as a whole using a 7-point Likert scale for their social responsibility. | Subjective rating score |
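The work sampling approach listed for the engagement criterion in the table above reduces to a ratio: the number of observations in which students were found engaged, divided by the total number of observations, expressed as a percentage. The following is a minimal sketch of that calculation, not the authors' own tooling. The function name `engagement_percentage` and the sample observation list are hypothetical and chosen only for illustration.

```python
from typing import Sequence


def engagement_percentage(observations: Sequence[bool]) -> float:
    """Return the percentage of sampled observations marked as engaged.

    Each entry is one periodic observation (e.g., every 5 min):
    True if the student/team was found engaged, False otherwise.
    """
    total = len(observations)
    if total == 0:
        raise ValueError("at least one observation is required")
    engaged = sum(1 for was_engaged in observations if was_engaged)
    return 100.0 * engaged / total


# Hypothetical class with 9 observations, 7 of which were marked as engaged.
sample = [True, True, False, True, True, True, False, True, True]
print(f"Engagement: {engagement_percentage(sample):.1f}%")
```

Because the estimate rests on periodic samples rather than continuous observation, shorter sampling intervals give a finer-grained (though more labor-intensive) estimate of the engaged share of class time.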

| Learning Outcome Criteria | School 1 | School 2 | School 3 | School 4 | School 5 | School 6 | School 7 | School 8 | School 9 | School 10 | School 11 | School 12 | School 13 | School 14 | School 15 | School 16 | School 17 | School 18 | School 19 | School 20 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Test results (see note 4) | 93 | 94 | 98 | 92 | 97 | 90 | 91 | 91 | 94 | 89 | 99 | 95 | 96 | 92 | 90 | 97 | 88 | 94 | 99 | 93 |

| Computational thinking ability | 2.29 | 2.46 | 2.87 | 2.33 | 2.49 | 2.65 | 2.27 | 2.78 | 2.61 | 2.82 | 2.66 | 2.57 | 2.49 | 2.77 | 2.84 | 2.19 | 2.38 | 2.73 | 2.92 | 2.68 |

| Intrinsic and extrinsic motivation | 2.67 | 2.63 | 2.68 | 2.45 | 2.69 | 2.56 | 2.46 | 2.59 | 2.42 | 2.73 | 2.57 | 2.46 | 2.74 | 2.81 | 2.38 | 2.54 | 2.78 | 2.29 | 2.85 | 2.96 |

| Trust in robotics | 2.82 | 2.39 | 2.68 | 2.56 | 2.71 | 2.36 | 2.80 | 2.53 | 2.44 | 2.72 | 2.81 | 2.75 | 2.70 | 2.88 | 2.52 | 2.26 | 2.66 | 2.42 | 2.61 | 2.63 |

| Engagement | 84 | 91 | 97 | 89 | 93 | 96 | 95 | 99 | 88 | 89 | 92 | 90 | 93 | 99 | 99 | 86 | 95 | 92 | 88 | 89 |

| Class attendance and punctuality | 96 | 96 | 96 | 95 | 99 | 92 | 94 | 100 | 98 | 98 | 100 | 100 | 100 | 99 | 99 | 96 | 100 | 90 | 96 | 98 |

| Interpersonal relationship | 2.77 | 2.49 | 2.56 | 2.42 | 2.54 | 2.76 | 2.33 | 2.52 | 2.48 | 2.71 | 2.58 | 2.39 | 2.44 | 2.81 | 2.67 | 2.28 | 2.59 | 2.64 | 2.88 | 2.51 |

| Engineering and ICT skills | 96 | 99 | 96 | 98 | 93 | 95 | 98 | 93 | 96 | 100 | 93 | 100 | 99 | 97 | 89 | 100 | 94 | 97 | 100 | 98 |

| Life-long learning aspiration | 2.33 | 2.40 | 2.73 | 2.74 | 2.38 | 2.49 | 2.56 | 2.62 | 2.58 | 2.77 | 2.54 | 2.36 | 2.67 | 2.69 | 2.55 | 2.82 | 2.64 | 2.51 | 2.74 | 2.45 |

| Hands-on and practical ability | 2.56 | 2.73 | 2.71 | 2.62 | 2.33 | 2.49 | 2.89 | 2.76 | 2.55 | 2.64 | 2.69 | 2.52 | 2.60 | 2.72 | 2.67 | 2.66 | 2.72 | 2.59 | 2.48 | 2.65 |

| Lab skills and experiment ability | 2.22 | 2.46 | 2.82 | 2.39 | 2.68 | 2.29 | 2.48 | 2.63 | 2.54 | 2.78 | 2.61 | 2.53 | 2.44 | 2.46 | 2.67 | 2.35 | 2.48 | 2.72 | 2.62 | 2.66 |

| Problem solving ability | 2.54 | 2.56 | 2.75 | 2.66 | 2.54 | 2.78 | 2.46 | 2.80 | 2.48 | 2.43 | 2.62 | 2.50 | 2.83 | 2.61 | 2.45 | 2.37 | 2.39 | 2.78 | 2.29 | 2.59 |

| Formulation of research strategy | 2.36 | 2.54 | 2.74 | 2.39 | 2.44 | 2.36 | 2.47 | 2.67 | 2.73 | 2.72 | 2.65 | 2.50 | 2.43 | 2.79 | 2.62 | 2.37 | 2.56 | 2.52 | 2.76 | 2.28 |

| Teamwork ability | 2.53 | 2.75 | 2.73 | 2.69 | 2.45 | 2.77 | 2.56 | 2.87 | 2.54 | 2.67 | 2.73 | 2.54 | 2.63 | 2.75 | 2.69 | 2.69 | 2.74 | 2.67 | 2.47 | 2.26 |

| Cognitive workload | 23 | 26 | 19 | 31 | 25 | 23 | 28 | 17 | 33 | 12 | 22 | 25 | 28 | 18 | 19 | 27 | 16 | 30 | 19 | 20 |

| Adapting to new situations and changes | 2.46 | 2.77 | 2.29 | 2.38 | 2.67 | 2.64 | 2.60 | 2.74 | 2.53 | 2.71 | 2.33 | 2.52 | 2.79 | 2.72 | 2.67 | 2.35 | 2.40 | 2.51 | 2.68 | 2.49 |

| Respect for diversity and multiculturality | 2.28 | 2.41 | 2.76 | 2.38 | 2.46 | 2.66 | 2.25 | 2.46 | 2.66 | 2.81 | 2.59 | 2.54 | 2.50 | 2.79 | 2.81 | 2.27 | 2.37 | 2.65 | 2.63 | 2.87 |

| Professional ethics | 3.00 | 2.95 | 2.87 | 2.68 | 2.50 | 2.66 | 2.55 | 2.80 | 2.66 | 2.56 | 3.00 | 2.90 | 2.95 | 3.00 | 3.00 | 2.86 | 2.56 | 2.75 | 2.88 | 2.43 |

| Troubleshooting and contingency | 2.56 | 2.44 | 2.65 | 2.32 | 2.57 | 2.63 | 2.71 | 2.62 | 2.74 | 2.48 | 2.69 | 2.54 | 2.44 | 2.62 | 2.26 | 2.75 | 2.42 | 2.61 | 2.78 | 2.81 |

| Interdisciplinary/ multidisciplinary abilities | 2.91 | 2.52 | 2.28 | 2.79 | 2.42 | 2.33 | 2.48 | 2.30 | 2.59 | 2.77 | 2.63 | 2.51 | 2.82 | 2.38 | 2.61 | 2.75 | 2.39 | 2.54 | 2.83 | 2.47 |

| Reflexive analysis | 2.45 | 2.49 | 2.73 | 2.24 | 2.35 | 2.54 | 2.84 | 2.63 | 2.36 | 2.29 | 2.48 | 2.83 | 2.27 | 2.54 | 2.56 | 2.47 | 2.66 | 2.77 | 2.91 | 2.89 |

| Critical thinking ability | 2.31 | 2.49 | 2.69 | 2.60 | 2.48 | 2.63 | 2.45 | 2.39 | 2.78 | 2.57 | 2.64 | 2.65 | 2.91 | 2.43 | 2.63 | 2.67 | 2.71 | 2.29 | 2.70 | 2.22 |

| Decision making ability | 2.75 | 2.78 | 2.75 | 2.50 | 2.68 | 2.60 | 2.81 | 2.44 | 2.29 | 2.34 | 2.63 | 2.33 | 2.46 | 2.72 | 2.80 | 2.46 | 2.50 | 2.48 | 2.88 | 2.45 |

| Creativity and innovation | 2.82 | 2.67 | 2.91 | 2.30 | 2.43 | 2.66 | 2.78 | 2.73 | 2.41 | 2.85 | 2.63 | 2.47 | 2.69 | 2.37 | 2.64 | 2.62 | 2.43 | 2.79 | 2.90 | 2.86 |

| Entrepreneurial ability | 2.54 | 2.45 | 2.84 | 2.39 | 2.47 | 2.61 | 2.29 | 2.73 | 2.60 | 2.80 | 2.56 | 2.52 | 2.50 | 2.38 | 2.83 | 2.71 | 2.78 | 2.69 | 2.82 | 2.58 |

| Communication skills | 2.12 | 2.87 | 2.28 | 2.39 | 2.90 | 2.85 | 2.22 | 2.62 | 2.60 | 2.67 | 2.60 | 2.49 | 2.58 | 2.29 | 2.81 | 2.33 | 2.45 | 2.74 | 2.35 | 2.42 |

| Leadership ability | 2.31 | 2.49 | 2.72 | 2.36 | 2.62 | 2.69 | 2.28 | 2.66 | 2.63 | 2.56 | 2.37 | 2.58 | 2.57 | 2.72 | 2.80 | 2.59 | 2.33 | 2.44 | 2.08 | 2.66 |

| Organizational and planning ability | 2.28 | 2.56 | 2.67 | 2.42 | 2.42 | 2.68 | 2.33 | 2.58 | 2.89 | 2.83 | 2.63 | 2.55 | 2.46 | 2.70 | 2.23 | 2.17 | 2.57 | 2.42 | 2.65 | 2.75 |

| Social responsibility | 2.28 | 2.72 | 2.29 | 2.34 | 2.48 | 2.60 | 2.22 | 2.19 | 2.56 | 2.65 | 2.62 | 2.53 | 2.62 | 2.75 | 2.81 | 2.56 | 2.39 | 2.72 | 2.91 | 2.62 |
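The cognitive workload row above is reported as a percentage, and the assessment criteria table names NASA TLX as the instrument. As a hedged illustration of how such a score is commonly derived, the sketch below computes both the unweighted ("raw") TLX and the weighted TLX from six subscale ratings on a 0 to 100 scale, with weights taken from the 15 pairwise comparisons of the standard procedure. The document does not state which variant was used, so this is an assumption. The ratings and weights shown are hypothetical.

```python
SUBSCALES = (
    "mental_demand",
    "physical_demand",
    "temporal_demand",
    "performance",
    "effort",
    "frustration",
)


def raw_tlx(ratings: dict) -> float:
    """Unweighted TLX: mean of the six subscale ratings (each 0-100)."""
    return sum(ratings[name] for name in SUBSCALES) / len(SUBSCALES)


def weighted_tlx(ratings: dict, weights: dict) -> float:
    """Weighted TLX: ratings weighted by pairwise-comparison tallies.

    Each weight counts how often a subscale was chosen across the
    15 pairwise comparisons, so the six weights must sum to 15.
    """
    if sum(weights[name] for name in SUBSCALES) != 15:
        raise ValueError("pairwise-comparison weights must sum to 15")
    return sum(ratings[name] * weights[name] for name in SUBSCALES) / 15


# Hypothetical responses from one student after one lesson.
ratings = {
    "mental_demand": 30, "physical_demand": 10, "temporal_demand": 20,
    "performance": 25, "effort": 30, "frustration": 5,
}
weights = {
    "mental_demand": 5, "physical_demand": 0, "temporal_demand": 3,
    "performance": 2, "effort": 4, "frustration": 1,
}
print(f"Raw TLX: {raw_tlx(ratings):.1f}")
print(f"Weighted TLX: {weighted_tlx(ratings, weights):.1f}")
```

Either variant yields a value between 0 and 100, which matches the percentage format proposed for this criterion; lower values indicate lower workload.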

| Learning Outcome Criteria | School 1 | School 2 | School 3 | School 4 | School 5 | School 6 | School 7 | School 8 | School 9 | School 10 | School 11 | School 12 | School 13 | School 14 | School 15 | School 16 | School 17 | School 18 | School 19 | School 20 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Test results | 94 | 96 | 99 | 94 | 99 | 94 | 97 | 96 | 95 | 93 | 99 | 98 | 97 | 95 | 94 | 98 | 93 | 96 | 98 | 99 |

| Imagination ability | 2.33 | 2.47 | 2.88 | 2.45 | 2.54 | 2.69 | 2.43 | 2.93 | 2.69 | 2.88 | 2.75 | 2.78 | 2.64 | 2.79 | 2.86 | 2.65 | 2.61 | 2.78 | 2.83 | 2.71 |

| Intrinsic and extrinsic motivation | 2.69 | 2.67 | 2.72 | 2.48 | 2.73 | 2.59 | 2.48 | 2.64 | 2.47 | 2.76 | 2.59 | 2.48 | 2.76 | 2.86 | 2.39 | 2.56 | 2.82 | 2.43 | 2.76 | 2.97 |

| Trust in robotics | 2.85 | 2.67 | 2.76 | 2.59 | 2.78 | 2.38 | 2.85 | 2.57 | 2.48 | 2.79 | 2.85 | 2.77 | 2.71 | 2.89 | 2.57 | 2.28 | 2.69 | 2.41 | 2.66 | 2.65 |

| Engagement | 86 | 93 | 98 | 90 | 94 | 98 | 97 | 99 | 90 | 91 | 93 | 92 | 94 | 99 | 99 | 88 | 98 | 94 | 89 | 90 |

| Class attendance and punctuality | 99 | 97 | 98 | 98 | 100 | 93 | 95 | 100 | 99 | 99 | 100 | 100 | 98 | 98 | 99 | 97 | 100 | 96 | 97 | 99 |

| Interpersonal relationship | 2.79 | 2.53 | 2.59 | 2.45 | 2.58 | 2.79 | 2.36 | 2.56 | 2.49 | 2.75 | 2.63 | 2.43 | 2.46 | 2.85 | 2.69 | 2.33 | 2.63 | 2.67 | 2.89 | 2.54 |

| Engineering and ICT skills | 100 | 99 | 98 | 99 | 96 | 97 | 99 | 96 | 100 | 99 | 96 | 99 | 100 | 98 | 92 | 99 | 98 | 100 | 99 | 100 |

| Life-long learning aspiration | 2.36 | 2.46 | 2.74 | 2.77 | 2.39 | 2.54 | 2.58 | 2.65 | 2.59 | 2.79 | 2.58 | 2.39 | 2.68 | 2.72 | 2.58 | 2.83 | 2.67 | 2.55 | 2.76 | 2.52 |

| Hands-on and practical ability | 2.58 | 2.77 | 2.75 | 2.66 | 2.36 | 2.50 | 2.93 | 2.78 | 2.59 | 2.66 | 2.72 | 2.54 | 2.68 | 2.79 | 2.68 | 2.67 | 2.83 | 2.65 | 2.49 | 2.68 |

| Lab skills and experiment ability | 2.26 | 2.49 | 2.87 | 2.43 | 2.69 | 2.35 | 2.49 | 2.66 | 2.59 | 2.79 | 2.65 | 2.57 | 2.47 | 2.49 | 2.68 | 2.39 | 2.65 | 2.78 | 2.65 | 2.69 |

| Problem solving ability | 2.63 | 2.58 | 2.78 | 2.67 | 2.57 | 2.79 | 2.47 | 2.84 | 2.49 | 2.47 | 2.69 | 2.62 | 2.74 | 2.65 | 2.54 | 2.42 | 2.53 | 2.79 | 2.35 | 2.64 |

| Formulation of research strategy | 2.39 | 2.55 | 2.78 | 2.43 | 2.46 | 2.39 | 2.49 | 2.68 | 2.68 | 2.83 | 2.74 | 2.68 | 2.47 | 2.82 | 2.68 | 2.39 | 2.59 | 2.56 | 2.78 | 2.34 |

| Teamwork ability | 2.56 | 2.81 | 2.79 | 2.76 | 2.47 | 2.79 | 2.57 | 2.89 | 2.58 | 2.69 | 2.76 | 2.57 | 2.68 | 2.77 | 2.83 | 2.73 | 2.76 | 2.68 | 2.49 | 2.54 |

| Cognitive workload | 21 | 23 | 18 | 22 | 21 | 20 | 24 | 18 | 25 | 18 | 20 | 13 | 22 | 14 | 16 | 23 | 15 | 19 | 16 | 18 |

| Adapting to new situations and changes | 2.48 | 2.78 | 2.34 | 2.39 | 2.72 | 2.66 | 2.66 | 2.77 | 2.59 | 2.82 | 2.42 | 2.61 | 2.82 | 2.78 | 2.68 | 2.46 | 2.48 | 2.64 | 2.69 | 2.53 |

| Respect for diversity and multiculturality | 2.35 | 2.49 | 2.77 | 2.39 | 2.47 | 2.68 | 2.34 | 2.49 | 2.73 | 2.78 | 2.63 | 2.55 | 2.54 | 2.78 | 2.88 | 2.56 | 2.67 | 2.68 | 2.67 | 2.85 |

| Professional ethics | 2.95 | 2.99 | 2.88 | 2.75 | 2.58 | 2.69 | 2.60 | 2.84 | 2.68 | 2.58 | 2.90 | 2.92 | 2.98 | 2.98 | 2.87 | 2.88 | 2.59 | 2.79 | 2.65 | 2.89 |

| Troubleshooting and contingency | 2.62 | 2.46 | 2.68 | 2.62 | 2.78 | 2.64 | 2.74 | 2.66 | 2.79 | 2.53 | 2.76 | 2.68 | 2.46 | 2.67 | 2.73 | 2.76 | 2.56 | 2.66 | 2.79 | 2.84 |

| Interdisciplinary/ multidisciplinary abilities | 2.95 | 2.62 | 2.56 | 2.83 | 2.65 | 2.78 | 2.56 | 2.34 | 2.65 | 2.78 | 2.69 | 2.57 | 2.88 | 2.46 | 2.68 | 2.78 | 2.48 | 2.73 | 2.67 | 2.58 |

| Reflexive analysis | 2.49 | 2.50 | 2.77 | 2.29 | 2.38 | 2.58 | 2.85 | 2.64 | 2.39 | 2.46 | 2.49 | 2.85 | 2.44 | 2.66 | 2.60 | 2.56 | 2.68 | 2.78 | 2.95 | 2.92 |

| Critical thinking ability | 2.34 | 2.54 | 2.72 | 2.65 | 2.49 | 2.66 | 2.48 | 2.46 | 2.79 | 2.63 | 2.68 | 2.81 | 2.87 | 2.49 | 2.68 | 2.68 | 2.73 | 2.45 | 2.76 | 2.49 |

| Decision making ability | 2.76 | 2.79 | 2.78 | 2.57 | 2.69 | 2.66 | 2.88 | 2.49 | 2.56 | 2.39 | 2.67 | 2.39 | 2.49 | 2.78 | 2.89 | 2.61 | 2.58 | 2.60 | 2.91 | 2.48 |

| Creativity and innovation | 2.89 | 2.78 | 2.92 | 2.45 | 2.48 | 2.69 | 2.79 | 2.77 | 2.48 | 2.89 | 2.65 | 2.49 | 2.74 | 2.56 | 2.66 | 2.67 | 2.49 | 2.83 | 2.93 | 2.89 |

| Entrepreneurial ability | 2.56 | 2.49 | 2.85 | 2.43 | 2.48 | 2.65 | 2.33 | 2.75 | 2.65 | 2.85 | 2.58 | 2.55 | 2.56 | 2.39 | 2.86 | 2.76 | 2.79 | 2.78 | 2.85 | 2.62 |

| Communication skills | 2.34 | 2.89 | 2.54 | 2.45 | 2.91 | 2.86 | 2.57 | 2.68 | 2.67 | 2.71 | 2.65 | 2.50 | 2.59 | 2.33 | 2.82 | 2.53 | 2.48 | 2.78 | 2.39 | 2.45 |

| Leadership ability | 2.39 | 2.54 | 2.75 | 2.43 | 2.63 | 2.70 | 2.47 | 2.68 | 2.64 | 2.58 | 2.64 | 2.62 | 2.73 | 2.74 | 2.82 | 2.67 | 2.38 | 2.46 | 2.23 | 2.75 |

| Organizational and planning ability | 2.51 | 2.66 | 2.68 | 2.59 | 2.54 | 2.69 | 2.56 | 2.64 | 2.92 | 2.86 | 2.66 | 2.56 | 2.49 | 2.72 | 2.46 | 2.36 | 2.68 | 2.54 | 2.78 | 2.79 |

| Social responsibility | 2.63 | 2.78 | 2.56 | 2.59 | 2.52 | 2.66 | 2.37 | 2.45 | 2.58 | 2.66 | 2.67 | 2.58 | 2.67 | 2.78 | 2.86 | 2.68 | 2.42 | 2.77 | 2.94 | 2.72 |
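For benchmarking, each row of the two school-level tables above can be condensed into summary statistics and compared school by school. The sketch below does this for the "Test results" rows only, using the values exactly as they appear in the tables. This excerpt does not state what distinguishes the two tables, so the labels `first_table` and `second_table` are neutral placeholders, and the choice of mean, standard deviation, and per-school difference is an illustrative assumption rather than the authors' analysis.

```python
from statistics import mean, stdev

# "Test results" rows (percent), schools 1-20, copied from the two tables.
first_table = [93, 94, 98, 92, 97, 90, 91, 91, 94, 89,
               99, 95, 96, 92, 90, 97, 88, 94, 99, 93]
second_table = [94, 96, 99, 94, 99, 94, 97, 96, 95, 93,
                99, 98, 97, 95, 94, 98, 93, 96, 98, 99]


def summarize(scores):
    """Return basic descriptive statistics for one table row."""
    return {
        "mean": mean(scores),
        "stdev": stdev(scores),  # sample standard deviation
        "min": min(scores),
        "max": max(scores),
    }


first_summary = summarize(first_table)
second_summary = summarize(second_table)

# Per-school difference (second table minus first table).
per_school_change = [b - a for a, b in zip(first_table, second_table)]

print("First table: ", first_summary)
print("Second table:", second_summary)
print("Mean per-school change:", mean(per_school_change))
```

The same pattern applies to the Likert-rated rows; only the input lists change.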

References

- Rahman, S.M.M.; Krishnan, V.J.; Kapila, V. Optimizing a teacher professional development program for teaching STEM with robotics through design-based research. In Proceedings of the 2018 ASEE Annual Conference & Exposition, Salt Lake City, UT, USA, 24–27 June 2018; pp. 1–20.

- Rahman, S.M.M.; Chacko, S.M.; Rajguru, S.B.; Kapila, V. Determining prerequisites for middle school students to participate in robotics-based STEM lessons: A computational thinking approach. In Proceedings of the 2018 ASEE Annual Conference & Exposition, Salt Lake City, UT, USA, 24–27 June 2018; pp. 1–27.

- Mallik, A.; Rahman, S.M.M.; Rajguru, S.B.; Kapila, V. Examining the variations in the TPACK framework for teaching robotics-aided STEM lessons of varying difficulty. In Proceedings of the 2018 ASEE Annual Conference & Exposition, Salt Lake City, UT, USA, 24–27 June 2018; pp. 1–23.

- Rahman, S.M.M.; Krishnan, V.J.; Kapila, V. Exploring the dynamic nature of TPACK framework in teaching STEM using robotics in middle school classrooms. In Proceedings of the 2017 ASEE Annual Conference & Exposition, Columbus, OH, USA, 25–28 June 2017; pp. 1–29.

- Rahman, S.M.M.; Chacko, S.M.; Kapila, V. Building trust in robots in robotics-focused STEM education under TPACK framework in middle schools. In Proceedings of the 2017 ASEE Annual Conference & Exposition, Columbus, OH, USA, 25–28 June 2017; pp. 1–25.

- Rahman, S.M.M.; Kapila, V. A systems approach to analyzing design-based research in robotics-focused middle school STEM lessons through cognitive apprenticeship. In Proceedings of the 2017 ASEE Annual Conference & Exposition, Columbus, OH, USA, 25–28 June 2017; pp. 1–25.

- Chen, N.S.; Quadir, B.; Teng, D.C. Integrating book, digital content and robot for enhancing elementary school students’ learning of English. Australas. J. Educ. Technol. 2011, 27, 546–561.

- Mosley, P.; Kline, R. Engaging students: A framework using LEGO robotics to teach problem solving. Inf. Technol. Learn. Perform. J. 2006, 24, 39–45.

- Whitman, L.; Witherspoon, T. Using LEGOs to interest high school students and improve K12 STEM education. In Proceedings of the ASEE/IEEE Frontiers in Education Conference, Westminster, CO, USA, 5–8 November 2003; p. F3A6-10.

- Belpaeme, T.; Kennedy, J.; Ramachandran, A.; Scassellati, B.; Tanaka, F. Social robots for education: A review. Sci. Robot. 2018, 3, eaat5954.

- Toh, L.P.E.; Causo, A.; Tzuo, P.; Chen, I.; Yeo, S.H. A review on the use of robots in education and young children. J. Educ. Technol. Soc. 2016, 19, 148–163.

- Barreto, F.; Benitti, V. Exploring the educational potential of robotics in schools: A systematic review. Comput. Educ. 2012, 58, 978–988.

- Danahy, E.; Wang, E.; Brockman, J.; Carberry, A.; Shapiro, B.; Rogers, C.B. LEGO-based robotics in higher education: 15 years of student creativity. Int. J. Adv. Robot. Syst. 2014, 11, 27.

- Rahman, S.M.M. Instructing a mechatronics course aligning with TPACK framework. In Proceedings of the 2019 ASEE Annual Conference & Exposition, Tampa, FL, USA, 15–19 June 2019.

- Rahman, S.M.M. Instruction design of a mechatronics course based on closed-loop 7E model refined with DBR method. In Proceedings of the 2019 ASEE Annual Conference & Exposition, Tampa, FL, USA, 15–19 June 2019.

- Rahman, S.M.M. Comparative experiential learning of mechanical engineering concepts through the usage of robot as a kinesthetic learning tool. In Proceedings of the 2019 ASEE Annual Conference & Exposition, Tampa, FL, USA, 15–19 June 2019.

- Erikson, M.G.; Erikson, M. Learning outcomes and critical thinking–good intentions in conflict. Stud. High. Educ. 2018, 44, 2293–2303.

- Brooks, S.; Dobbins, K.; Scott, J.J.A.; Rawlinson, M.; Norman, R.I. Learning about learning outcomes: The student perspective. Teach. High. Educ. 2014, 19, 721–733.

- Melguizo, T.; Coates, H. The value of assessing higher education student learning outcomes. AERA Open 2017, 3.

- Prøitz, T.S. Learning outcomes: “What are they? Who defines them? When and where are they defined?” Educ. Assess. Eval. Account. 2010, 22, 119–137.

- Farquharson, K. Regulating sociology: Threshold learning outcomes and institutional isomorphism. J. Sociol. 2013, 49, 486–500.

- Watson, P. The role and integration of learning outcomes into the educational process. Act. Learn. High. Educ. 2002, 3, 205–219.

- Smith, B.W.; Zhou, Y. Assessment of learning outcomes: The example of spatial analysis at Bowling Green State University. Int. Res. Geogr. Environ. Educ. 2005, 14, 211–216.

- Oliver, B.; Tucker, B.; Gupta, R.; Yeo, S. eVALUate: An evaluation instrument for measuring students’ perceptions of their engagement and learning outcomes. Assess. Eval. High. Educ. 2008, 33, 619–630.

- Svanström, M.; Lozano-García, F.J.; Rowe, D. Learning outcomes for sustainable development in higher education. Int. J. Sustain. High. Educ. 2008, 9, 339–351.

- Shephard, K. Higher education for sustainability: Seeking affective learning outcomes. Int. J. Sustain. High. Educ. 2008, 9, 87–98.

- Rahman, S.M.M.; Ikeura, R. Calibrating intuitive and natural human-robot interaction and performance for power-assisted heavy object manipulation using cognition-based intelligent admittance control schemes. Int. J. Adv. Robot. Syst. 2018, 15.

- Rahman, S.M.M.; Ikeura, R. Cognition-based variable admittance control for active compliance in flexible manipulation of heavy objects with a power assist robotic system. Robot. Biomim. 2018, 5, 1–25.

- de Jong, T. Cognitive load theory, educational research, and instructional design: Some food for thought. Instruct. Sci. 2010, 38, 105–134.

- Leite, A.; Soares, D.; Sousa, H.; Vidal, D.; Dinis, M.; Dias, D. For a healthy (and) higher education: Evidences from learning outcomes in health sciences. Educ. Sci. 2020, 10, 168.

- DoS. Available online: https://www.thepearinstitute.org/dimensions-of-success (accessed on 18 December 2020).

- Kong, S. Components and methods of evaluating computational thinking for fostering creative problem-solvers in senior primary school education. In Computational Thinking Education; Kong, S., Abelson, H., Eds.; Springer: Singapore, 2019.

- Deci, E.L.; Eghrari, H.; Patrick, B.C.; Leone, D. Facilitating internalization: The self-determination theory perspective. J. Personal. 1994, 62, 119–142.

- Kier, M.W.; Blanchard, M.R.; Osborne, J.W.; Albert, J.L. The development of the STEM career interest survey (STEM-CIS). Res. Sci. Educ. 2014, 44, 461–481.

- CIS-S. Available online: http://www.pearweb.org/atis/tools/common-instrument-suite-student-cis-s-survey (accessed on 18 December 2020).

- Robinson, M. Work sampling: Methodological advances and new applications. Hum. Factors Ergon. Manuf. Serv. Ind. 2009, 20, 42–60.

- Available online: https://www.informalscience.org/youth-teamwork-skills-survey-manual-and-survey (accessed on 18 December 2020).

- Algozzine, A.; Newton, J.; Horner, R.; Todd, A.; Algozzine, K. Development and technical characteristics of a team decision-making assessment tool: Decision observation, recording, and analysis (DORA). J. Psychoeduc. Assess. 2012, 30, 237–249.

- Barbot, B.; Besançon, M.; Lubart, T. Assessing creativity in the classroom. Open Educ. J. 2011, 4, 58–66.

- Bejinaru, R. Assessing students’ entrepreneurial skills needed in the knowledge economy. Manag. Mark. Chall. Knowl. Soc. 2018, 13, 1119–1132.

- Coduras, A.; Alvarez, J.; Ruiz, J. Measuring readiness for entrepreneurship: An information tool proposal. J. Innov. Knowl. 2016, 1, 99–108.

- Mazzetto, S. A practical, multidisciplinary approach for assessing leadership in project management education. J. Appl. Res. High. Educ. 2019, 11, 50–65.

- Chapman, A.; Giri, P. Learning to lead: Tools for self-assessment of leadership skills and styles. In Why Hospitals Fail; Godbole, P., Burke, D., Aylott, J., Eds.; Springer: Cham, Switzerland, 2017.

- NGSS. Next Generation Science Standards (NGSS): For States, by States; The National Academies Press: Washington, DC, USA, 2013; Available online: http://www.nextgenscience.org/ (accessed on 20 December 2020).

- Elen, J.; Clarebout, G.; Leonard, R.; Lowyck, J. Student-centred and teacher-centred learning environments: What students think. Teach. High. Educ. 2007, 12, 105–117.

- Chan, C.C.; Tsui, M.S.; Chan, M.Y.C.; Hong, J.H. Applying the structure of the observed learning outcomes (SOLO) taxonomy on student’s learning outcomes: An empirical study. Assess. Eval. High. Educ. 2002, 27, 511–527.

- Koretsky, M.; Keeler, J.; Ivanovitch, J.; Cao, Y. The role of pedagogical tools in active learning: A case for sense-making. Int. J. STEM Educ. 2018, 5, 18.

- Garbett, D.L. Assignments as a pedagogical tool in learning to teach science: A case study. J. Early Child. Teach. Educ. 2007, 28, 381–392.

- Ahmed, A.; Clark-Jeavons, A.; Oldknow, A. How can teaching aids improve the quality of mathematics education. Educ. Stud. Math. 2004, 56, 313–328.

- Geiger, T.; Amrein-Beardsley, A. Student perception surveys for K-12 teacher evaluation in the United States: A survey of surveys. Cogent Educ. 2019, 6, 1602943.

- Sturtevant, H.; Wheeler, L. The STEM Faculty Instructional Barriers and Identity Survey (FIBIS): Development and exploratory results. Int. J. STEM Educ. 2019, 6, 1–22.

- Jones, N.D.; Brownell, M.T. Examining the use of classroom observations in the evaluation of special education teachers. Assess. Eff. Interv. 2014, 39, 112–124.

- Rizi, C.; Najafipour, M.; Haghani, F.; Dehghan, S. The effect of the using the brainstorming method on the academic achievement of students in grade five in Tehran elementary schools. Procedia Soc. Behav. Sci. 2013, 83, 230–233.

- Ritzhaupt, A.D.; Dawson, K.; Cavanaugh, C. An investigation of factors influencing student use of technology in K-12 classrooms using path analysis. J. Educ. Comput. Res. 2012, 46, 229–254.

- Batdi, V.; Talan, T.; Semerci, C. Meta-analytic and meta-thematic analysis of STEM education. Int. J. Educ. Math. Sci. Technol. 2019, 7, 382–399.

- Gao, X.; Li, P.; Shen, J.; Sun, H. Reviewing assessment of student learning in interdisciplinary STEM education. Int. J. STEM Educ. 2020, 7, 1–14.

- Hartikainen, S.; Rintala, H.; Pylväs, L.; Nokelainen, P. The concept of active learning and the measurement of learning outcomes: A review of research in engineering higher education. Educ. Sci. 2019, 9, 276.

- Miskioğlu, E.E.; Asare, P. Critically thinking about engineering through kinesthetic experiential learning. In Proceedings of the 2017 IEEE Frontiers in Education Conference (FIE), Indianapolis, IN, USA, 18–21 October 2017; pp. 1–3.

- Emerson, L.; MacKay, B. A comparison between paper-based and online learning in higher education. Br. J. Educ. Technol. 2011, 42, 727–735.

- Collins, A. Cognitive apprenticeship and instructional technology. In Educational Values and Cognitive Instruction: Implications for Reform; Routledge: New York, NY, USA, 1991; pp. 121–138.

- Capraro, R.; Slough, S. Why PBL? Why STEM? Why now? An Introduction to STEM Project-Based Learning. In STEM Project-Based Learning; Capraro, R., Capraro, M., Morgan, J., Eds.; Sense Publishers: Rotterdam, The Netherlands, 2013.

- Dringenberg, E.; Wertz, R.; Purzer, S.; Strobel, J. Development of the science and engineering classroom learning observation protocol. In Proceedings of the 2012 ASEE Annual Conference & Exposition, San Antonio, TX, USA, 10–13 June 2012.

- Michelsen, C. IBSME—Inquiry-based science and mathematics education. MONA-Matematik-Og Naturfagsdidaktik 2011, 6, 72–77.

- Anderson, J.R.; Reder, L.M.; Simon, H.A. Situated learning and education. Educ. Res. 1996, 25, 5–11.

| Assessment Criteria of Learning Outcomes and Their Frequencies in Parentheses | |
|---|---|
| Traditional Teaching | Robotics-Enabled Teaching |

| Assessment Criteria of Learning Outcomes and Their Frequencies in Parentheses | |
|---|---|
| Traditional Teaching | Robotics-Enabled Teaching |

| Assessment Criteria (Expected Learning Outcomes) | Themes |
|---|---|
| Test results (20) | Educational |
| Life-long learning aspiration (7) | Behavioral |
| Intrinsic and extrinsic motivation (12) | Behavioral |
| Trust in robotics (4) | Behavioral |
| Engagement in class (11) | Behavioral |
| Class attendance and punctuality (8) | Behavioral |
| Adapting to new situations and changes (5) | Behavioral |
| Respect for diversity and multiculturality (4) | Behavioral |
| Professional ethics (3) | Behavioral |
| Teamwork ability (10) | Behavioral |
| Engineering and ICT skills (7) | Scientific/technical |
| Formulation of research strategy (8) | Scientific/technical |
| Hands-on and practical ability (5) | Scientific/technical |
| Lab skills and experiment ability (4) | Scientific/technical |
| Troubleshooting and contingency (6) | Scientific/technical |
| Interdisciplinary/multidisciplinary abilities (11) | Scientific/technical |
| Problem solving ability (11) | Intellectual |
| Reflexive analysis (5) | Intellectual |
| Critical thinking ability (9) | Intellectual |
| Computational thinking ability (9) | Intellectual |
| Decision making ability (13) | Intellectual |
| Creativity and innovation (9) | Intellectual |
| Cognitive workload (14) | Cognitive |
| Entrepreneurial ability (3) | Managerial/leadership |
| Communication skills (14) | Managerial/leadership |
| Leadership ability (9) | Managerial/leadership |
| Organizational and planning ability (7) | Managerial/leadership |
| Social responsibility (2) | Social |
| Interpersonal relationship (12) | Social |

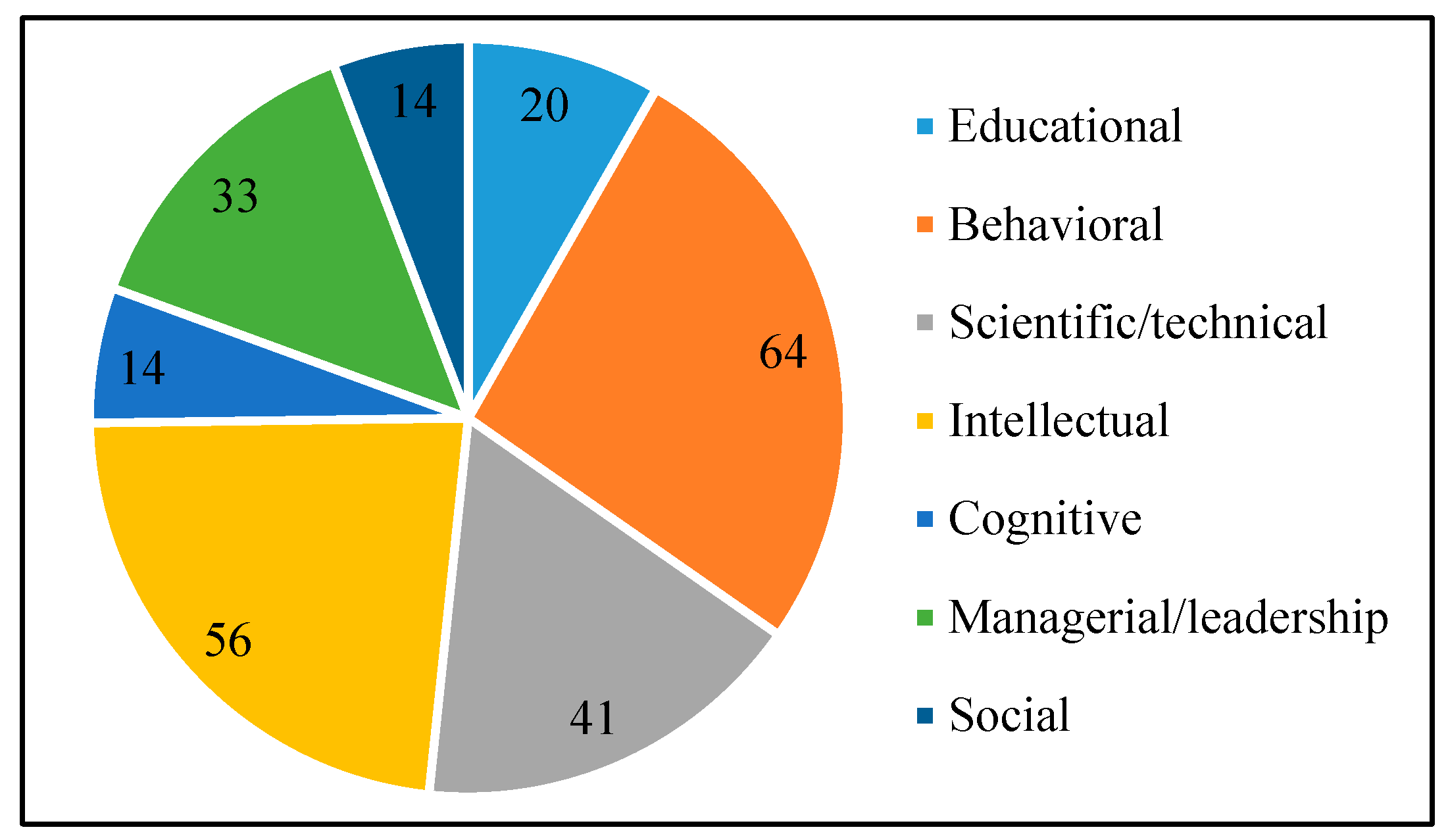

| Themes (of Learning Outcomes) | Total Frequencies |
|---|---|
| Educational | 20 |
| Behavioral | 64 |
| Scientific/technical | 41 |
| Intellectual | 56 |
| Cognitive | 14 |
| Managerial/leadership | 33 |
| Social | 14 |
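The theme totals are the sums of the per-criterion frequencies (the numbers in parentheses in the criteria table) within each theme. As a minimal sketch of that aggregation, which is not part of the original study, the Python snippet below recomputes the totals. The names `CRITERIA` and `theme_totals` are illustrative assumptions; the frequencies and theme labels are copied from the criteria table.

```python
from collections import defaultdict

# (criterion, frequency, theme) triples copied from the criteria table.
# The variable name and tuple layout are illustrative assumptions.
CRITERIA = [
    ("Test results", 20, "Educational"),
    ("Life-long learning aspiration", 7, "Behavioral"),
    ("Intrinsic and extrinsic motivation", 12, "Behavioral"),
    ("Trust in robotics", 4, "Behavioral"),
    ("Engagement in class", 11, "Behavioral"),
    ("Class attendance and punctuality", 8, "Behavioral"),
    ("Adapting to new situations and changes", 5, "Behavioral"),
    ("Respect for diversity and multiculturality", 4, "Behavioral"),
    ("Professional ethics", 3, "Behavioral"),
    ("Teamwork ability", 10, "Behavioral"),
    ("Engineering and ICT skills", 7, "Scientific/technical"),
    ("Formulation of research strategy", 8, "Scientific/technical"),
    ("Hands-on and practical ability", 5, "Scientific/technical"),
    ("Lab skills and experiment ability", 4, "Scientific/technical"),
    ("Troubleshooting and contingency", 6, "Scientific/technical"),
    ("Interdisciplinary/multidisciplinary abilities", 11, "Scientific/technical"),
    ("Problem solving ability", 11, "Intellectual"),
    ("Reflexive analysis", 5, "Intellectual"),
    ("Critical thinking ability", 9, "Intellectual"),
    ("Computational thinking ability", 9, "Intellectual"),
    ("Decision making ability", 13, "Intellectual"),
    ("Creativity and innovation", 9, "Intellectual"),
    ("Cognitive workload", 14, "Cognitive"),
    ("Entrepreneurial ability", 3, "Managerial/leadership"),
    ("Communication skills", 14, "Managerial/leadership"),
    ("Leadership ability", 9, "Managerial/leadership"),
    ("Organizational and planning ability", 7, "Managerial/leadership"),
    ("Social responsibility", 2, "Social"),
    ("Interpersonal relationship", 12, "Social"),
]


def theme_totals(rows):
    """Sum the criterion frequencies within each theme."""
    totals = defaultdict(int)
    for _criterion, frequency, theme in rows:
        totals[theme] += frequency
    return dict(totals)


totals = theme_totals(CRITERIA)
for theme, total in totals.items():
    print(f"{theme}: {total}")
```

Under these inputs the sketch reproduces the tabulated totals, for example 7 + 12 + 4 + 11 + 8 + 5 + 4 + 3 + 10 = 64 for the Behavioral theme.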

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Rahman, S.M.M. Assessing and Benchmarking Learning Outcomes of Robotics-Enabled STEM Education. Educ. Sci. 2021, 11, 84. https://doi.org/10.3390/educsci11020084
