1. Introduction

The lockdown of schools as a measure against the COVID-19 pandemic resulted in an en masse transfer from classroom teaching to on-line education. Attempts to copy teacher- and information-centred classroom teaching on-line resulted mostly in ‘talking heads’ on the students’ screens and the scrolling of information. As a result, students complained about on-line learning because they missed the interaction with teachers and peers. De Jong and Lans [1] found in their research among students and teachers in the first months of the lockdown that students missed variation and interactions. Later during the COVID-19 period in the Netherlands, blended learning, a mix of on-line and face-to-face learning, became possible. The present study is an example of such a blended learning situation. The study is framed in the context of the Erasmus+ Knowledge Alliance video-supported collaborative learning (ViSuAL) project. The main objective of this project is to research pedagogy for using video in supporting collaborative learning [2]. In the present article, we report an experiment on bachelor-level courses of a Vocational Education and Training (VET) teacher education curriculum in the Netherlands. This experiment aimed to support student teachers’ development from ‘novice’ to ‘starting expert’ by using students’ video recordings and peer feedback in a blended curriculum.

The use of video has shown its potential to impact teaching practice, both in teachers’ pre-service education and in-service professional development [3,4,5]. However, the combination of video use with more current pedagogical approaches such as knowledge building or active, collaborative learning is rarely seen in the curriculum design. Teachers do not know how to cross the barrier of direct instruction and a ‘knowledge-telling’ way of teaching [3] and explore video’s abilities to support collaborative learning [6,7,8].

In teacher education, videos are frequently used to improve authenticity in teaching practice. According to Radović et al. [9], more authenticity facilitates experiential learning while strengthening the ties between theory and practical learning experience. How to implement video use in lessons, or how to design a curriculum with it, is a challenge for teacher trainers and their students, especially when a more or less collaborative learning approach is intended.

1.1. Problem

Teaching is a knowledge-rich profession [10] and teachers regularly evaluate knowledge in relation to their practice to update their knowledge base, thus improving their teaching practice. Being an expert is more than knowing a list of facts and formulas relevant to the teacher’s domain [11]. Instead, experts organize their knowledge around core concepts or ‘big ideas’ that guide their thinking about domains and acting in the classroom.

According to Larkin et al. [12], beginners rarely refer to major principles. When watching a videotaped lesson, expert teachers’ perceptions differ from those of novice teachers [13]. As novices, student teachers have different views and a different understanding of how to explain differences and deal with the differing opinions in the literature [14] and practices concerning reflective practices [15].

Expert teachers interpret practices according to very different standards, using more sophisticated pattern recognition and segmentation. Experts are not formed only by instructions on how to teach; ‘it takes experts to make experts’ [16]. To become an expert, a teacher also needs experiences in authentic practices [9]. Thus, in teacher education, students will not intuitively become expert teachers; there is a distinct need to stimulate and broaden their thinking about their acting in and organising their teaching practices [17]. In a collaborative learning setting, teachers may even strengthen their education practice so that students become ‘starting’ experts [18,19,20] (see Section 1.2). Meyer [17] observed that pre-service and first-year teachers who discussed knowledge concerning the facilitation of learning had limited views on the importance of learning. For second- and third-year students, Meyer [17] found that students had more ideas about the importance of prior learning. Thus, students’ ideas about ‘good’ teaching probably grow during their studies as they are exposed to literature and to their teachers’ expert knowledge.

Previous ‘knowledge building’ research confirms that it supports the literacy development of students, including vocabulary growth [21,22] and written composition and reading comprehension [23]. However, do peer feedback and reflection such as ‘discussion and dialogue’ increase students’ use of the relevant knowledge they acquire from literature or lectures? During a course, one would expect students’ use of words related to these ‘big’ ideas in pedagogical theory, as crystallized expert knowledge, to increase if they exchanged feedback with peers or wrote substantive reflections at the end of a course. This increased use of professional jargon, the lexical growth, can be seen as an indication of becoming more expert.

1.2. The Impact of Peer Feedback

Do students learn from a collaborative learning setting where they give feedback on video recordings of their peers’ teaching practice? Teachers must develop professional understanding of motivation, self-regulation skills, teaching ideas, and authentic situations in order to function as ‘expert’ teachers in practice. Training teachers by practice is a regular approach in teacher education [24]. The use of reflection can be seen as a self-critical and inquisitive process that improves teaching quality. This process generates thoughts and new knowledge about pedagogical decisions during the practice. In general, feedback is seen as a paramount requirement for improvement and self-reflection and is one of the core activities in developing one’s professional identity [24].

The quality of the feedback is crucial. Adequate feedback positively influences the learning process and increases performance, but inadequate feedback decreases it [24,25]. With adequate feedback, learners reflect on and improve their performance [24]. For Gaudin and Chaliès [26], video in learning is a tool and not part of a curriculum; it enhances learning. Weber et al. [24] argue that the use of video develops more than a vision; it also fosters knowledge and increases choices during teaching, for instance about classroom management.

1.3. Reflection and Video/Novice Experts

Novice and expert teachers have different knowledge about classroom management and a different professional vision [24]. Novice teachers also possess a more limited understanding of their students’ prior knowledge [17,24]. Thus, novice teachers’ development depends on their understanding and perceptions of education and how they put these into practice [17].

Nielsen [27] revealed that structured collaborative analysis of video recordings of students’ school practice leads them to a more nuanced consideration of concrete incidents and supports them in reconstructing their student experiences with a focus on student learning. Students said they benefited from the peer support and had a positive view of the structured approach. Ingram [28] also discovered a case of shifting from an egocentric focus towards a learner-centred focus when watching video clips with students. Moreover, peer dialogues also have a reflective power. They are comparable to the practical knowledge of students, for example, knowledge derived from experiential and practical experiences in the classroom and the richness of argumentation students have [29]. Dovigo [30] showed that when students analysed videos of their teaching activities together, they developed a sense of collaboration and shared understanding that led to a more reflective stance. This could strengthen their ability to transform educational principles into everyday teaching by bridging the gap between theory and practice. Calandra et al. [31] also found that students who captured their lessons on digital video and reflected on critical incidents produced broader perspectives than a group of pre-service teachers discussing immediately after teaching. It seems that video-aided reflection is an important instrument for facilitating the development of novice teachers into experts.

This brings us to the following question: is students’ transformation from novice to expert reflected in an increase in lexical richness, semantic cohesion and constructive use of key terms from the literature they study? This study tries to answer this question by making use of computational analytic techniques, namely natural language processing and semantic social network analysis. In that sense, the study contributes to one of the provocations for the future of the field of computer-supported learning [32]: vigorously pursuing computational approaches to understanding collaborative learning. Of course, peer feedback is not collaboration in the sense of negotiation and interdependency; however, it is a matter of joint attention and meaning making through a kind of dialogue, that is, the peer feedback and the students’ making sense of their own way of teaching in order to improve their teaching practice. During the dialogues in the face-to-face meetings, students as members of a small group were engaged in a joint task, namely to improve their way of teaching by communicating their practice by video, giving peer feedback and improving their ideas of teaching (knowledge construction). So, this study also provides insight into whether computational approaches are able to determine what happens with the lexical richness, semantic cohesion and constructive use of key terms from the literature during the students’ ‘collaboration’ over the course.

2. Materials and Methods

This study concerns a pre-experimental one-group case study design [33,34], where repeated observations are made. It follows the structure of x-O-x-O-x-O-x-O-Of, where x stands for a video recording of authentic teaching practice of a student teacher, O for peer feedback from several peers, and Of for students’ final reflection assignment. Dependent variables to indicate the growth of expertise were lexical richness, semantic cohesion and betweenness centrality.

2.1. Participants

The student teachers worked together in small groups. The class group consisted of 15 part-time student teachers (ten males and five females) in a Bachelor’s teacher education program in the Netherlands. The student teachers were already teaching in different domains at vocational secondary education schools (VET). The average age of the students was 42.4 years (sd 8.7).

2.2. Variables

Lexical richness has previously been used as one of the linguistic variables to assess Alzheimer’s disease progression, where patients tend to have a low lexical richness rate [35]. In contrast to the loss of words and meaning as in Alzheimer’s patients, our hypothesis is that students will acquire more vocabulary items and professional terms during the learning process, and that their lexical richness will be increased at the end of the course. Formal academic writings also present high values of lexical richness [36,37]. For this study, we used the Type Token Ratio (TTR) to measure the lexical richness of students’ vocabulary. In our case, we evaluated the students’ vocabulary each month to detect when it increased. While the lexical richness reflects the variety of the lexical items, it does not reflect the meaning that they create together. Thus, we included the assessment of the semantic cohesion of the students’ comments as a complement to the lexical analysis. We used two metrics that reflected the semantic cohesion. The first one was based on the semantic similarity between all words in a given text. The second one was based on the centroid distance between all words given in a segment of text [38].
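The two cohesion metrics can be illustrated with a minimal sketch. It assumes that the similarity-based metric is the mean pairwise cosine similarity between word vectors and that the centroid-based metric is the mean Euclidean distance of the word vectors to their centroid; the exact formulas used in [38] may differ. The two-dimensional vectors are hypothetical toy embeddings, not data from the study.

```python
import math
from itertools import combinations

def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def similarity_cohesion(vectors):
    """Mean pairwise cosine similarity; higher means more cohesive (assumed formula)."""
    pairs = list(combinations(vectors, 2))
    if not pairs:
        return 0.0
    return sum(cosine(u, v) for u, v in pairs) / len(pairs)

def centroid_cohesion(vectors):
    """Mean distance of each vector to the centroid; lower means more cohesive (assumed formula)."""
    if not vectors:
        return 0.0
    dim = len(vectors[0])
    centroid = [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]
    return sum(math.dist(v, centroid) for v in vectors) / len(vectors)

# Hypothetical toy embeddings: one tight group of words and one scattered group.
tight = [[1.0, 0.0], [0.9, 0.1], [0.95, 0.05]]
loose = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]

print(similarity_cohesion(tight) > similarity_cohesion(loose))
print(centroid_cohesion(tight) < centroid_cohesion(loose))
```

In this sketch the two metrics point in opposite directions by construction: a cohesive text scores high on similarity and low on centroid distance.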

We used KBDeX, a social network analysis application for knowledge building discourse, to calculate the betweenness centrality, which measures the extent to which a word influences other words in the conceptual network of words [39]. We did so because we wanted to know the mediating function of the words that are representative of topics emerging in the literature. At the word level, a normalised betweenness centrality value of 1 means that a word is highly influential, lying on every shortest path between other words, whereas a value of 0 means that a word lies on no shortest path between other words and thus has no mediating role. For a given node, the betweenness centrality measures the number of node pairs whose shortest path passes through that node. A high value suggests that the selected node works as a key mediator in linking other nodes [39,40,41].
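As an illustration of the measure, the following sketch computes normalised betweenness centrality for a small undirected word network using Brandes' algorithm. It is a standard textbook formulation, not necessarily the computation or normalisation that KBDeX applies internally, and the example network is hypothetical.

```python
from collections import deque

def betweenness_centrality(graph):
    """Normalised betweenness for an undirected, unweighted graph.

    `graph` maps each node to the set of its neighbours (Brandes' algorithm).
    """
    score = {v: 0.0 for v in graph}
    for source in graph:
        # Breadth-first search that counts shortest paths from `source`.
        order = []
        preds = {v: [] for v in graph}
        sigma = dict.fromkeys(graph, 0)
        sigma[source] = 1
        dist = {source: 0}
        queue = deque([source])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in graph[v]:
                if w not in dist:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Accumulate path dependencies from the farthest nodes back to the source.
        delta = dict.fromkeys(graph, 0.0)
        while order:
            w = order.pop()
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != source:
                score[w] += delta[w]
    n = len(graph)
    # Every unordered pair is visited twice, so divide by (n - 1)(n - 2).
    scale = (n - 1) * (n - 2)
    return {v: (s / scale if scale > 0 else 0.0) for v, s in score.items()}

# Hypothetical word network: 'student' links three otherwise unconnected terms.
network = {
    "student": {"role", "answer", "group"},
    "role": {"student"},
    "answer": {"student"},
    "group": {"student"},
}
centrality = betweenness_centrality(network)
print(centrality["student"], centrality["role"])
```

In this star-shaped example the hub word lies on every shortest path between the other words, while the peripheral words lie on none.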

2.3. Procedure

As part of their four-year curriculum, the students took part in a course about pedagogy. The course content comprised the six main roles of a teacher [42] and collaborative learning. During the course, which lasted four months, the students had to follow lectures and read literature, and also video-recorded their own teaching practice in VET schools. They uploaded their recordings into the Iris Connect environment in which they could provide monthly peer feedback on the video recordings of peers. To give their commentary on each other’s recorded videos, students were divided into four small groups. In the last month, the student teachers used the peer feedback and course literature to write reflections on their teaching practice as a final assignment. The data we collected consisted of students’ comments (peer feedback) on video recordings during their teaching at VET schools in the Netherlands and their final reflections.

2.4. Analysis

To determine the ‘expert’ growth of the students, we used two analysis methods and reflections as expertise indicators. Firstly, we used semantic analysis by applying topic modelling to determine the underlying topics in the literature, each represented by related terms. Secondly, we used lexical analysis. For the lexical analysis, we used the complete peer feedback dataset of the entire class group. We explored the students’ peer feedback discourse by using the peer feedback and reflection data from each of the four student sub-groups. Thus, we tackled this part of the analysis as a multiple case study design [43].

2.5. Topic Modelling of the Literature Students Had to Study

We identified topics by applying topic modelling methods, a probabilistic technique used in machine learning (ML) and Natural Language Processing (NLP), to explore a collection of documents. A topic represents a group of words with a high likelihood of occurring together in a document [44]. The rationale behind this method is that meanings are relational [45,46]. The resulting group of words may also be interpreted as lexical fields. The meanings of the words in a lexical field depend on each other; together, they form a conceptual structure that is part of a particular activity or specialist field, such as a lexical field associated with school (e.g., teacher, book, notebook, pencil, student, etc.).

We used a well-known statistical language model, Latent Dirichlet Allocation (LDA), to generate the topics [44]. The data used for this analysis corresponded to the literature used by students during their course. We used the LDAvis [47] library in Python, which allowed us to compute topic models and visualise topics in a Cartesian-like space. This library uses LDA as a technique to identify topics [48]. For the topic modelling analysis, we did not consider the time as a variable to analyse the topics’ evolution during the semester.

We pre-processed the data by conducting the usual tokenisation, lemmatisation, and part-of-speech (POS) tagging. Tokenising involves separating a text into sentences and sentences into words. Lemmatisation reduces a word to its canonical form; for example, nouns are put into their singular form (children-child), and verbs into the infinitive form (was-be). POS tagging identifies the lexical part of speech, that is, whether a word is a noun, a verb, an adverb, and so on. This process allowed us to filter tokens by their POS tags and use only nouns and adjectives, which are some of the linguistic features common in informational writings [36]. The reason for keeping only nouns and adjectives is that we wanted to analyse the attitude towards learning and teaching of the student teachers. Moreover, the frequency of nouns and adjectives has been used to analyse the differences between scientific writings and technical reports. According to Biber and Conrad [36], academic prose has a higher frequency of nouns than conversations.

Thus, using these linguistic features to track students’ attitude towards learning and the student teachers’ teaching was justifiable, since the data originated in a conversational forum. Moreover, students learned an academic writing style, which is more informational, during their studies at the university; it was also close to academic prose. We expected that these linguistic features would be more prominent at the end of the semester. To determine the number of topics in a model, Korenčić et al. [38] proposed evaluating the semantic cohesion of the topics with semantic measures. The authors suggest training models with n topics, where n is initially set at two and grows until a given number. Then, the semantic cohesion is assessed for each topic, and a final score is associated with each model. The model with the best score values is thought to be the one that people will interpret more easily. In our case, we trained 20 different models to find the model with the most coherent topics based on this proposal. The model with the best semantic cohesion value was designated as the model with the ideal number of topics.
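The selection procedure can be sketched as a simple loop over candidate topic counts. This is a schematic outline only: `train` and `cohesion` are hypothetical placeholders for fitting an LDA model and scoring its topics, the candidate range of 2 to 21 is an assumption about how the 20 models were spaced, and the dummy functions exist only to make the sketch runnable.

```python
from typing import Callable, Iterable, Tuple, TypeVar

Model = TypeVar("Model")

def select_num_topics(
    candidates: Iterable[int],
    train: Callable[[int], Model],
    cohesion: Callable[[Model], float],
) -> Tuple[int, float]:
    """Train one model per candidate topic count and keep the most cohesive one.

    `cohesion` must return a score where higher is better; a distance-based
    measure would therefore have to be negated before being passed in.
    """
    best = None
    for n in candidates:
        score = cohesion(train(n))
        if best is None or score > best[1]:
            best = (n, score)
    if best is None:
        raise ValueError("no candidate topic counts given")
    return best

# Dummy stand-ins (NOT the study's models or scores) so the sketch executes.
def dummy_train(num_topics: int) -> dict:
    return {"num_topics": num_topics}

def dummy_cohesion(model: dict) -> float:
    return -abs(model["num_topics"] - 4)

best_n, best_score = select_num_topics(range(2, 22), dummy_train, dummy_cohesion)
print(best_n, best_score)
```

Passing the training and scoring steps as functions keeps the loop independent of any particular topic modelling library.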

2.6. Lexical Analysis of Students’ Peer Feedback Comments

To perform the lexical richness analysis, we used the TTR measure to analyse the students’ interventions on a digital platform:

$$\mathrm{TTR} = \frac{V}{N}$$

where $V$ represents the count of different words (no repetitions) and $N$ is the number of all words in a given text, including repetitions. To compute the semantic cohesion, we used the vector representation of words (word embeddings) that captured part of the semantics of the language [49]. To measure the semantic cohesion, we used two approaches: the first one was based on the word similarity between a group of words, the second on the centroid distance. These analyses were conducted in a longitudinal setting, where we considered each month as time markers during the semester. We then measured the growth of both the lexical richness and the semantic cohesion of the students’ peer feedback comments over time. We did not use any grammar or spelling correction, since we wanted to keep the texts in their natural setting. However, we conducted text normalisation, which involves lemmatisation. After computing the TTR for all students’ interventions, we used a boxplot to visualise their distribution over time.
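A minimal sketch of the type-token ratio is given below. It assumes a simple lowercase, letters-only tokenisation and skips the lemmatisation step described above, so values computed this way would differ somewhat from those in the study; the example comment is invented for illustration.

```python
import re

def tokenize(text):
    """Lowercase the text and keep runs of letters (a simplifying assumption)."""
    return re.findall(r"[^\W\d_]+", text.lower())

def type_token_ratio(text):
    """Number of distinct words divided by the total number of words."""
    tokens = tokenize(text)
    if not tokens:
        return 0.0
    return len(set(tokens)) / len(tokens)

# Hypothetical peer feedback comment: 8 distinct words out of 11 words in total.
comment = "The teacher asks the group a question and the group answers"
print(round(type_token_ratio(comment), 3))  # 0.727
```

Because the ratio tends to fall as texts get longer, comparisons are most meaningful between comments of similar length.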

We expected to see that the semantic similarity and the semantic cohesion in the students’ peer feedback had increased during the course.

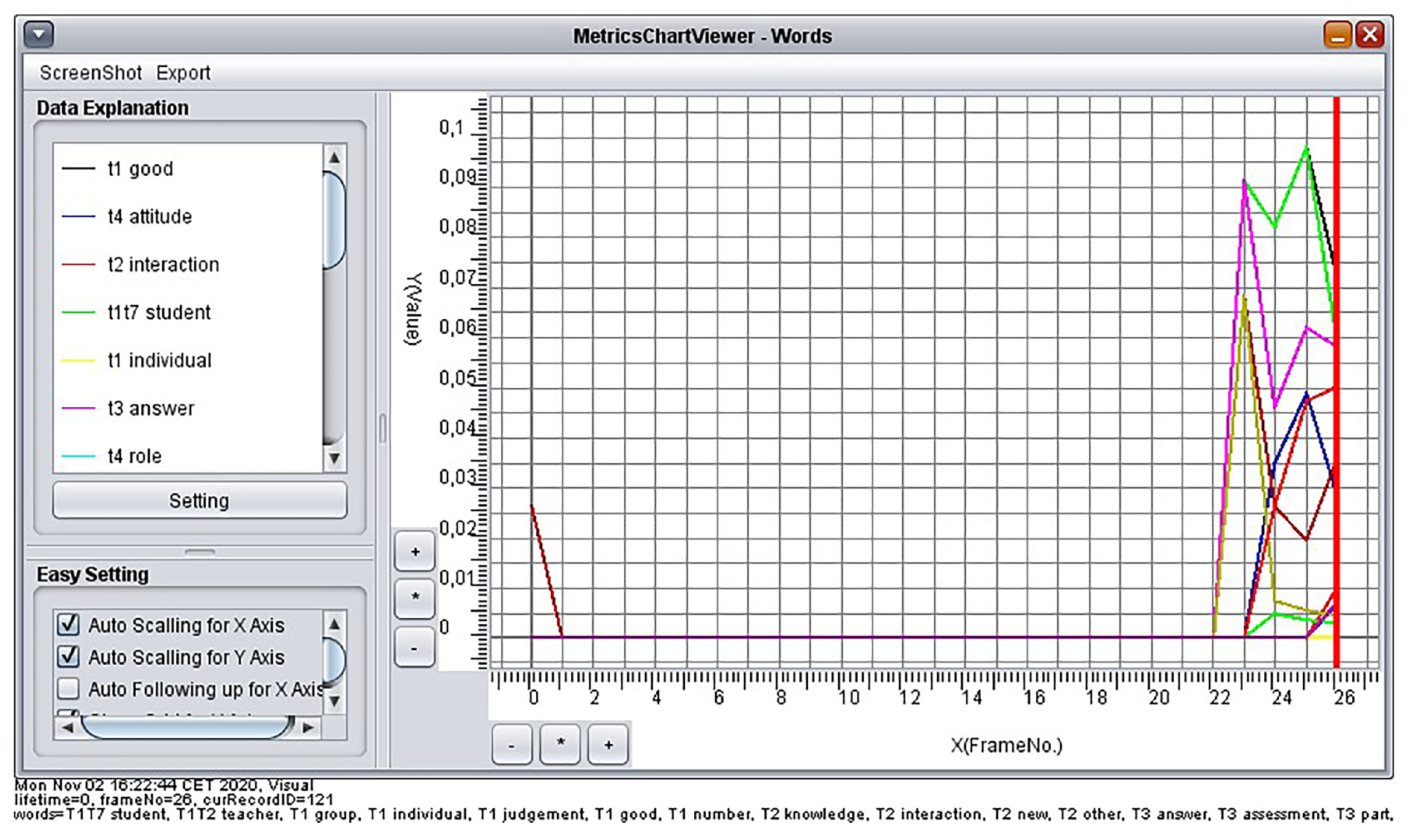

2.7. Social Semantic Network Analysis

Because of the complex and dynamic nature of peer feedback and reflections, we adopted a mixed-methods design to seek clarification of results. More specifically, we adopted a sequential approach of social and temporal network analyses. First, we prepared the raw data from comments, the literature and final assignments; second, we selected the ten most prominent words in each of the topics identified by the topic modelling analysis. We used these words in the Knowledge Building Discourse Explorer (KBDeX), a content-based social and temporal network analysis tool [50]. KBDeX visualizes three network structures (persons, words, and notes). These networks can be analysed by unit-by-unit construction, and the process of the network building can be paused and resumed. With this social semantic network analysis (SNA), we could determine how topic terms function as key linking mediators in the conceptual growth of the students’ peer feedback and reflections over time. KBDeX analysis is based on the co-occurrence of words in a note. Network visualisations show the combined words, notes, and networks of co-creation by students. It is also possible to observe the strengths of connections between words and notes over time in network metrics.
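The co-occurrence principle can be sketched as follows: two selected words are linked whenever they appear in the same note, and the link weight counts the notes in which they co-occur. This is an illustrative assumption about how such a word network can be built, not a description of KBDeX's internal implementation; the notes and keywords are invented.

```python
from collections import Counter
from itertools import combinations

def cooccurrence_edges(notes, keywords):
    """Count, for every pair of keywords, the notes in which both occur."""
    edges = Counter()
    for note in notes:
        tokens = set(note.lower().split())
        present = sorted(k for k in keywords if k in tokens)
        for a, b in combinations(present, 2):
            edges[(a, b)] += 1
    return edges

# Hypothetical notes and topic keywords, for illustration only.
notes = [
    "the student gave a good answer",
    "good interaction with the student",
    "clear instruction",
]
keywords = {"student", "good", "answer", "interaction", "instruction"}

edges = cooccurrence_edges(notes, keywords)
for pair, weight in sorted(edges.items()):
    print(pair, weight)
```

Adding notes one at a time and recomputing the edge weights gives a simple view of how the word network grows over time.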

The first stage of the analysis consisted of quantitative analyses on the total group level. Thus, we used social and temporal network analyses to examine group network patterns. This helped us to determine the number of different words representing the topics with central constructive functionality in the peer feedback and reflections over time. The second stage consisted of qualitative analyses where we analysed the content and context of central word-related notes to identify specific knowledge construction in each of the four student groups.


3. Results

3.1. Topic Modelling

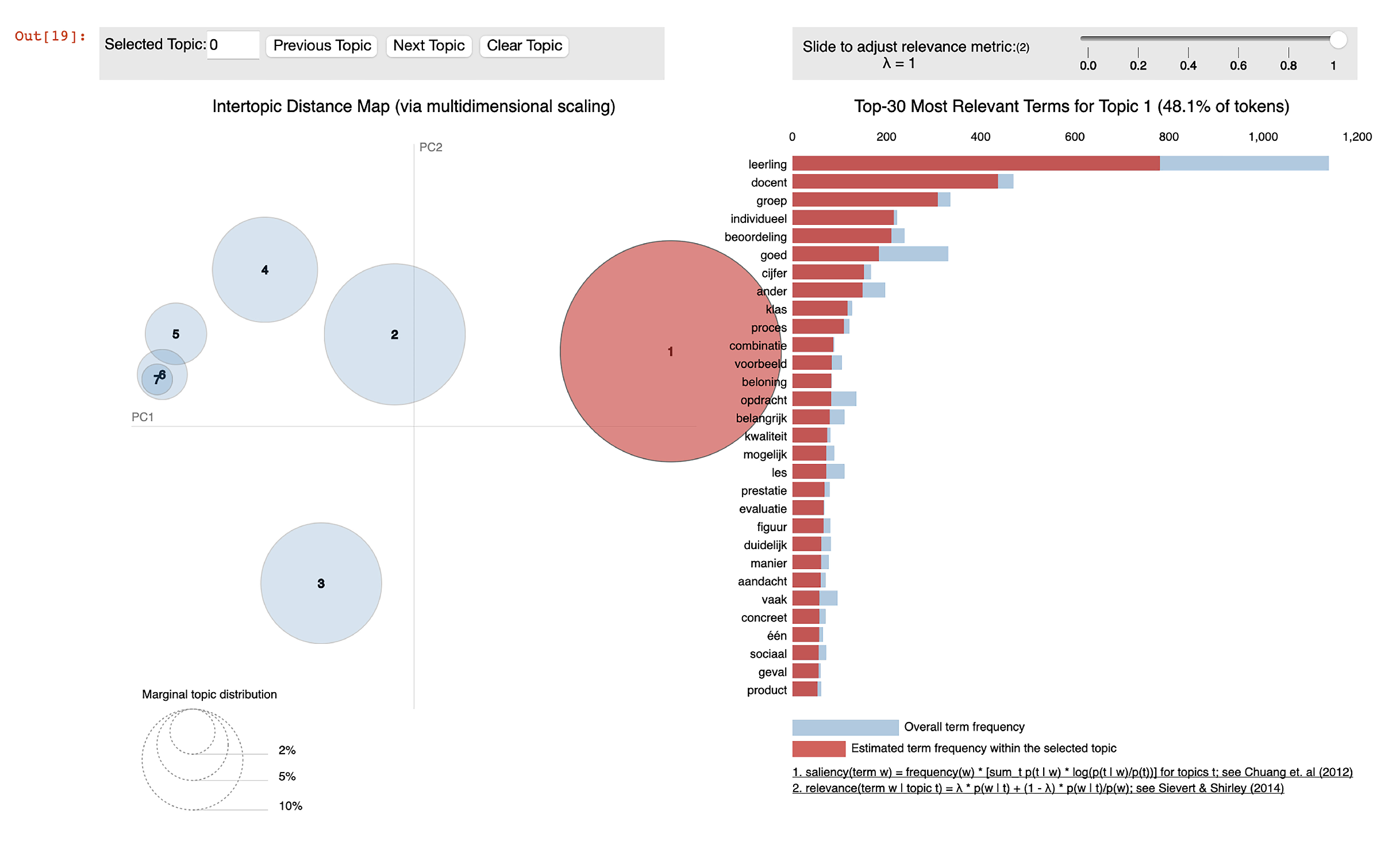

When evaluating the topic modelling, we found that the model that gave the most semantically coherent topics contained seven topics (see Table 1). The first measure that we used to evaluate the cohesion of topics concerned the semantic similarity of the group of words that belonged to a single topic. For the semantic similarity-based measure, the values go from 0.0 to 1.0. The higher the value, the more coherent a topic is. The second measure concerned the distance centroid of all words belonging to a topic. For the distance centroid-based measure, the values start from 0.0; the maximum value is delimited by the vector space representation. In this case, lower values express a high semantic cohesion. When selecting a model, we kept the highest value for the semantic similarity-based measure and one of the lower values for the distance centroid-based measure, leading us to the model with seven topics (see Figure 1 and Table 1).

The topics could be interpreted as: (1) Testing and monitoring (students, teacher, group, individual, judgment, good, mark); (2) Group and interaction (knowledge, interaction, new, other); (3) Working on an assignment (answer, assessment, part, assignment); (4) Teacher’s role (role, education, level, development, attitude); (5) Constructivism and learning theory (subjective, reality, information, constructivism); (6) Learning process (cognitive, learning activity, child, learning process, affective); and (7) Behaviourism and learning theory (active, instruction, environment, behaviourism, perspective).

3.2. The Lexical Richness and Cohesion Analysis

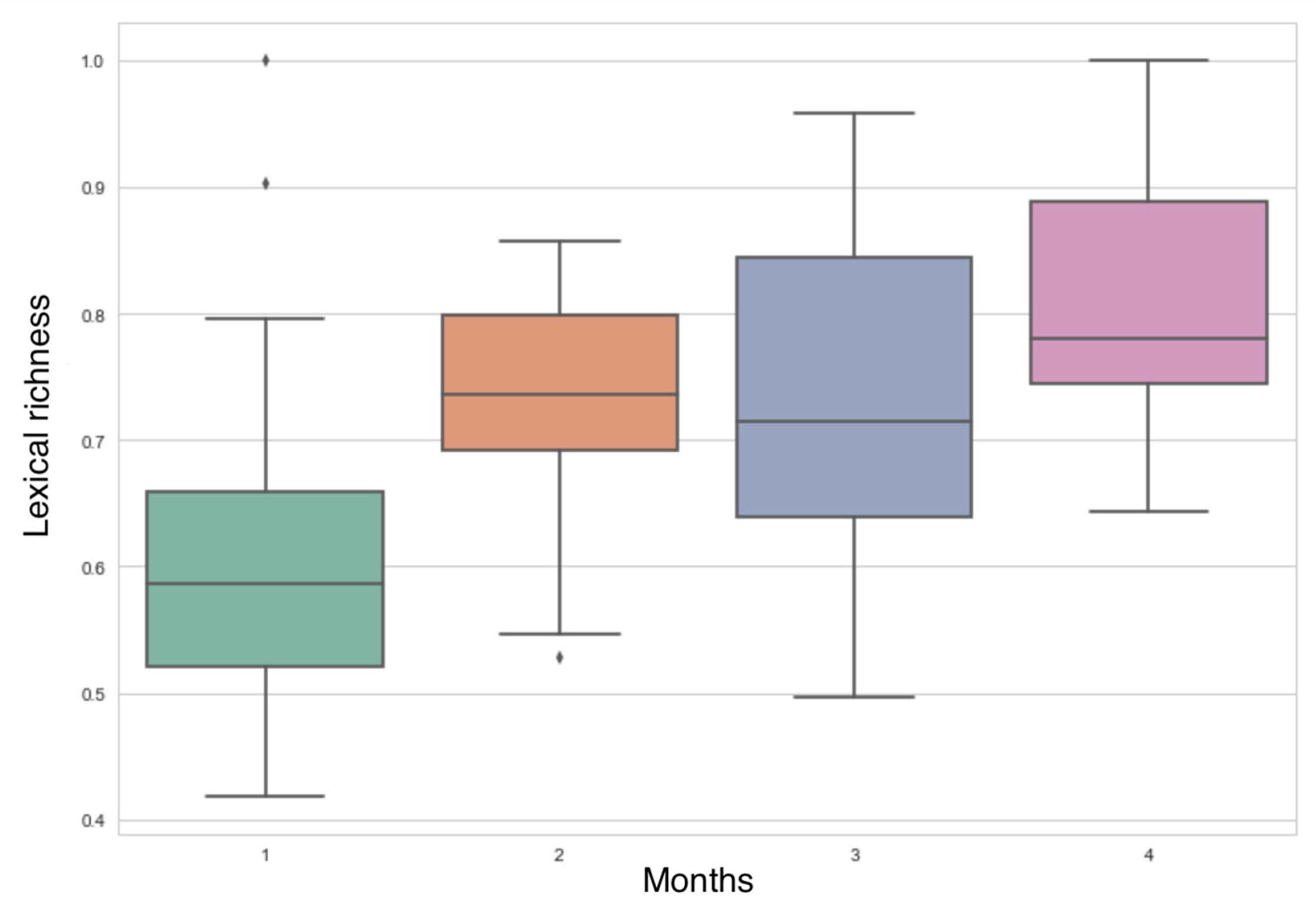

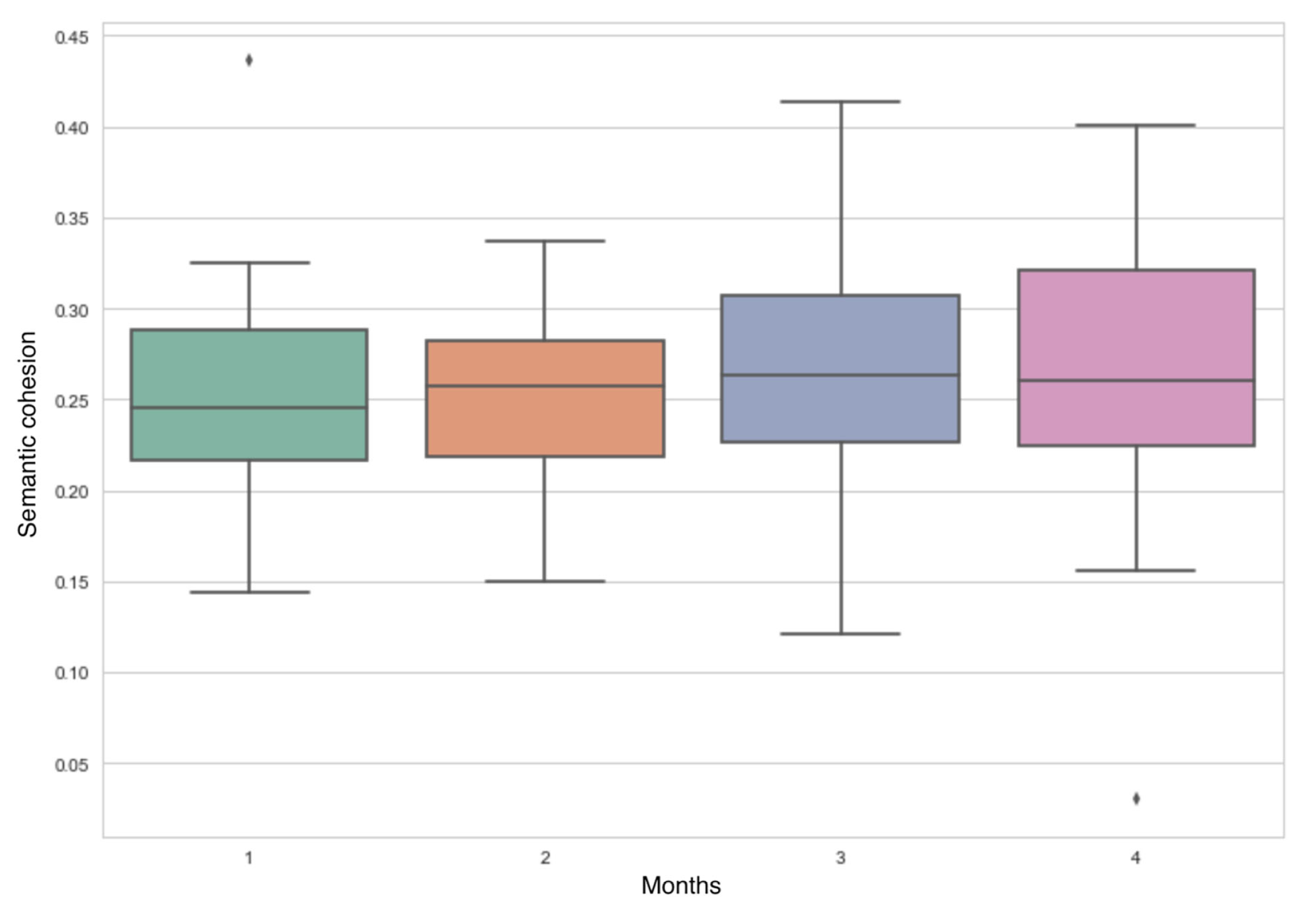

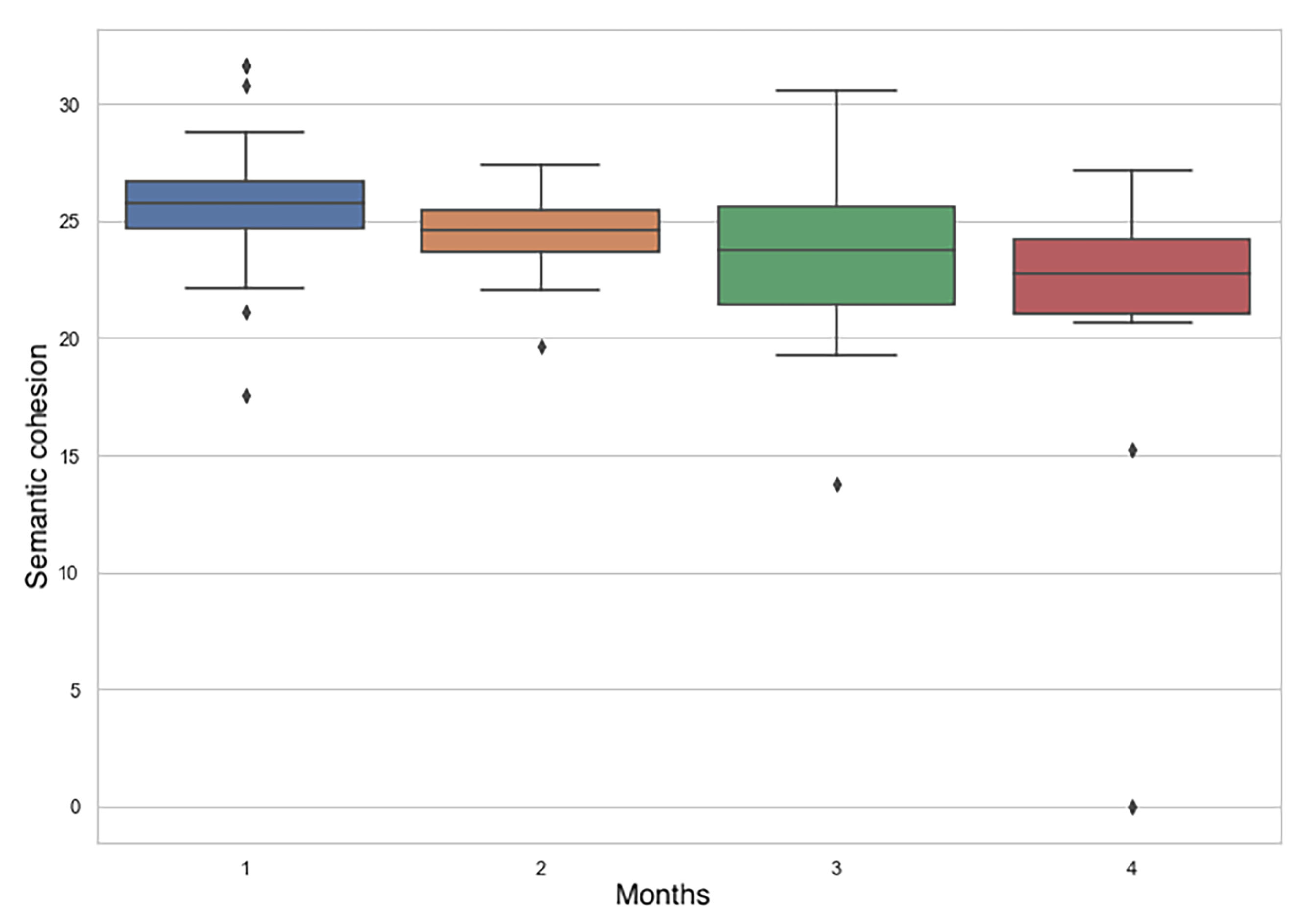

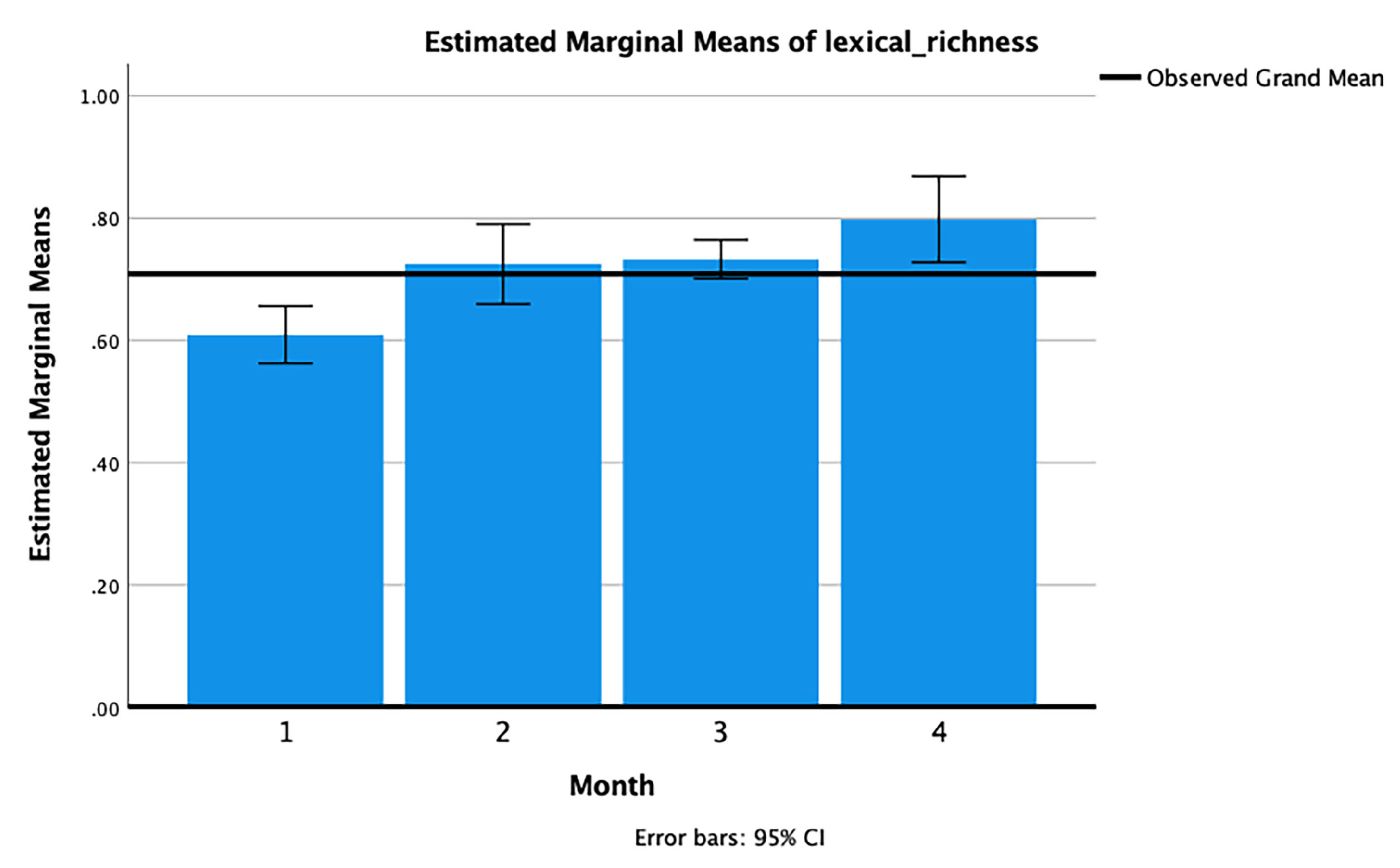

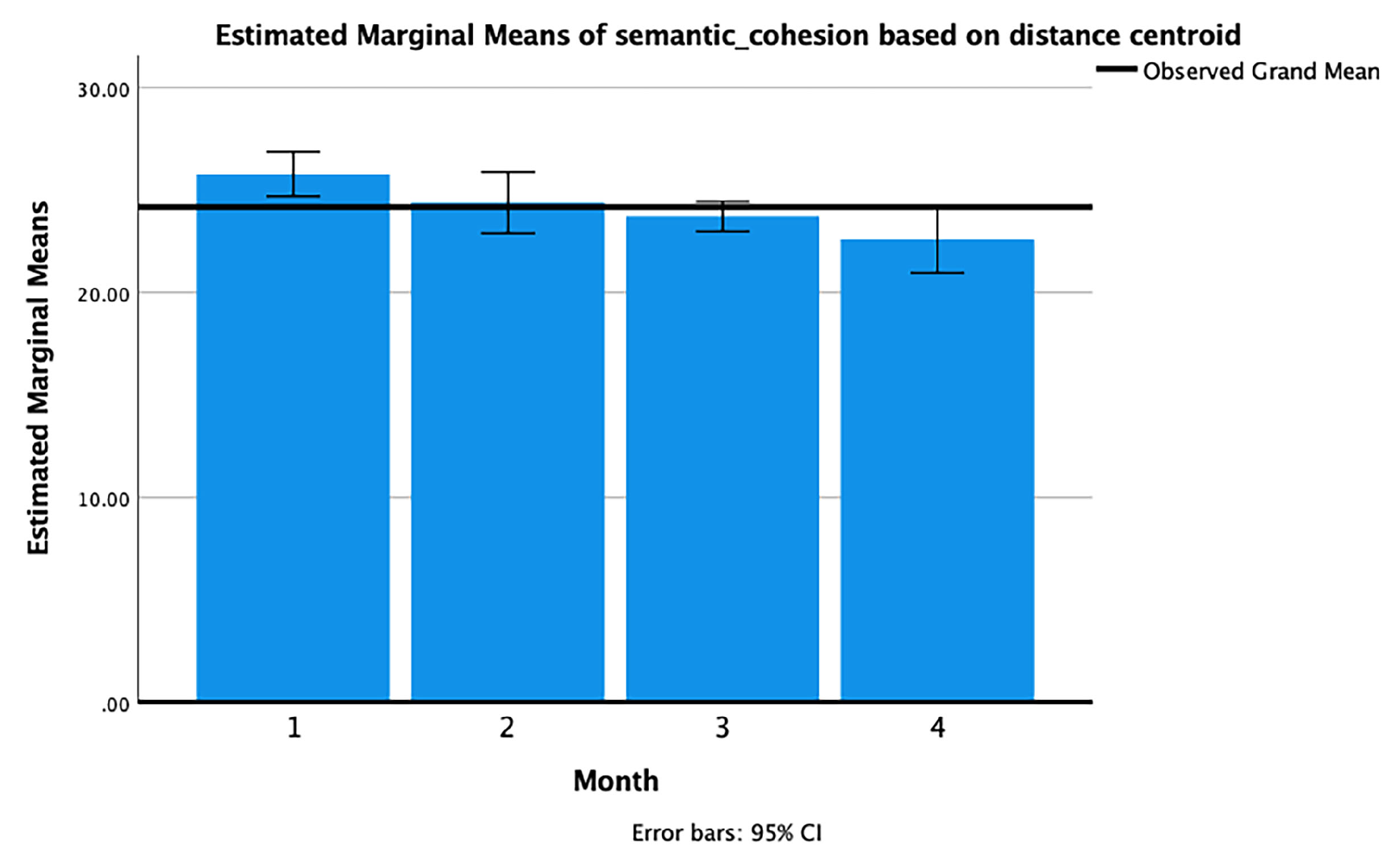

Figure 2 and Figure 3 show the semantic cohesion distribution with both approaches (semantic similarity-based and distance centroid-based). With both approaches, we see that the semantic cohesion of students’ contributions increased in the fourth month. For the semantic cohesion based on the semantic similarity (Figure 3), the values varied from 0 to 1. A higher number signifies a higher semantic cohesion. We can see higher semantic values in the fourth month: the values of the comments were in the range of 0.16 to 0.4. The third month contained the highest semantic cohesion values, but their distribution was more dispersed than in month four. For the approach based on the distance centroid, a smaller value expresses a higher degree of semantic cohesion (Figure 4). In both cases, the semantic cohesion was higher in the last month, which indicates that the students’ semantic cohesion increased over time.

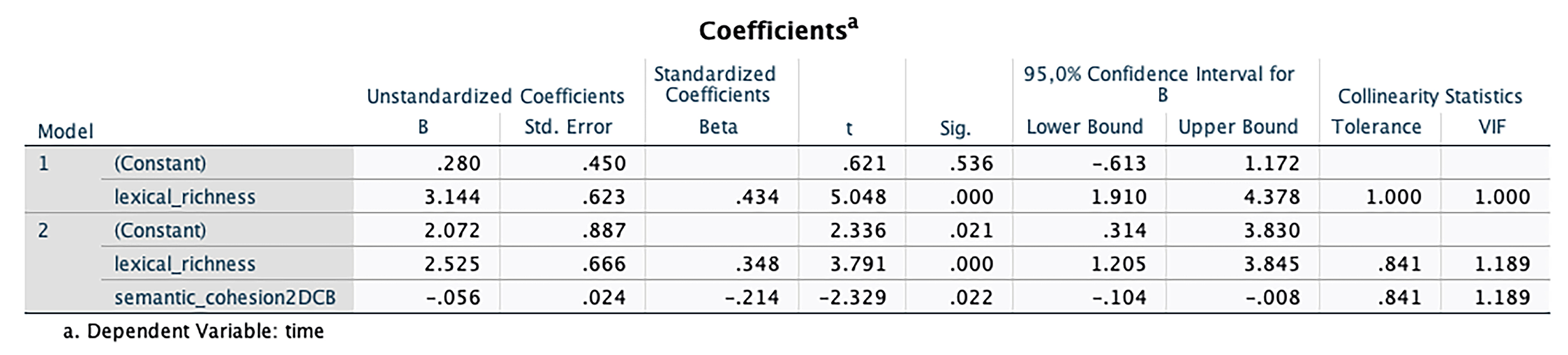

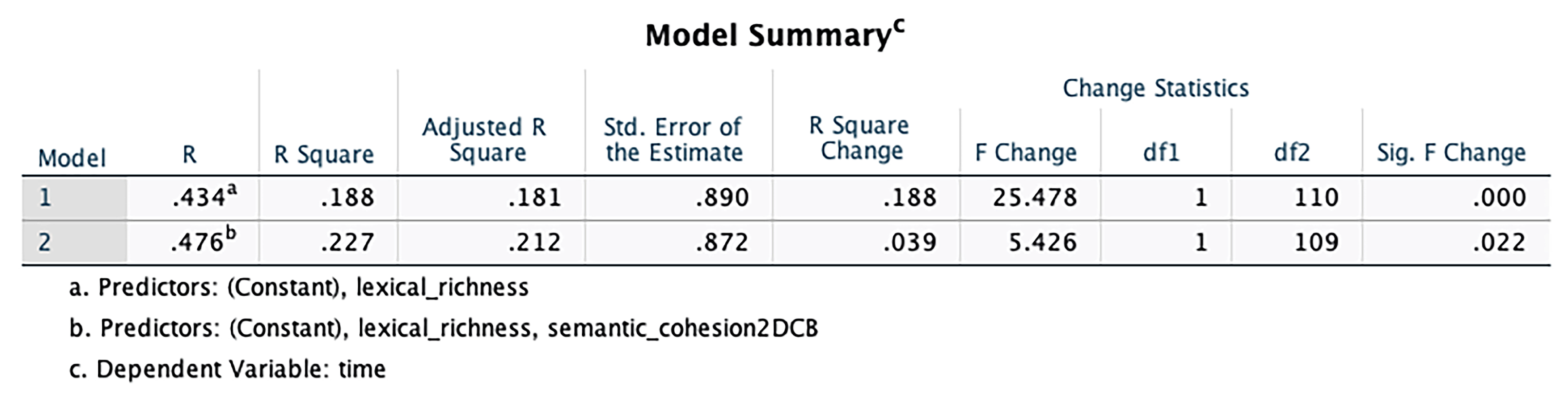

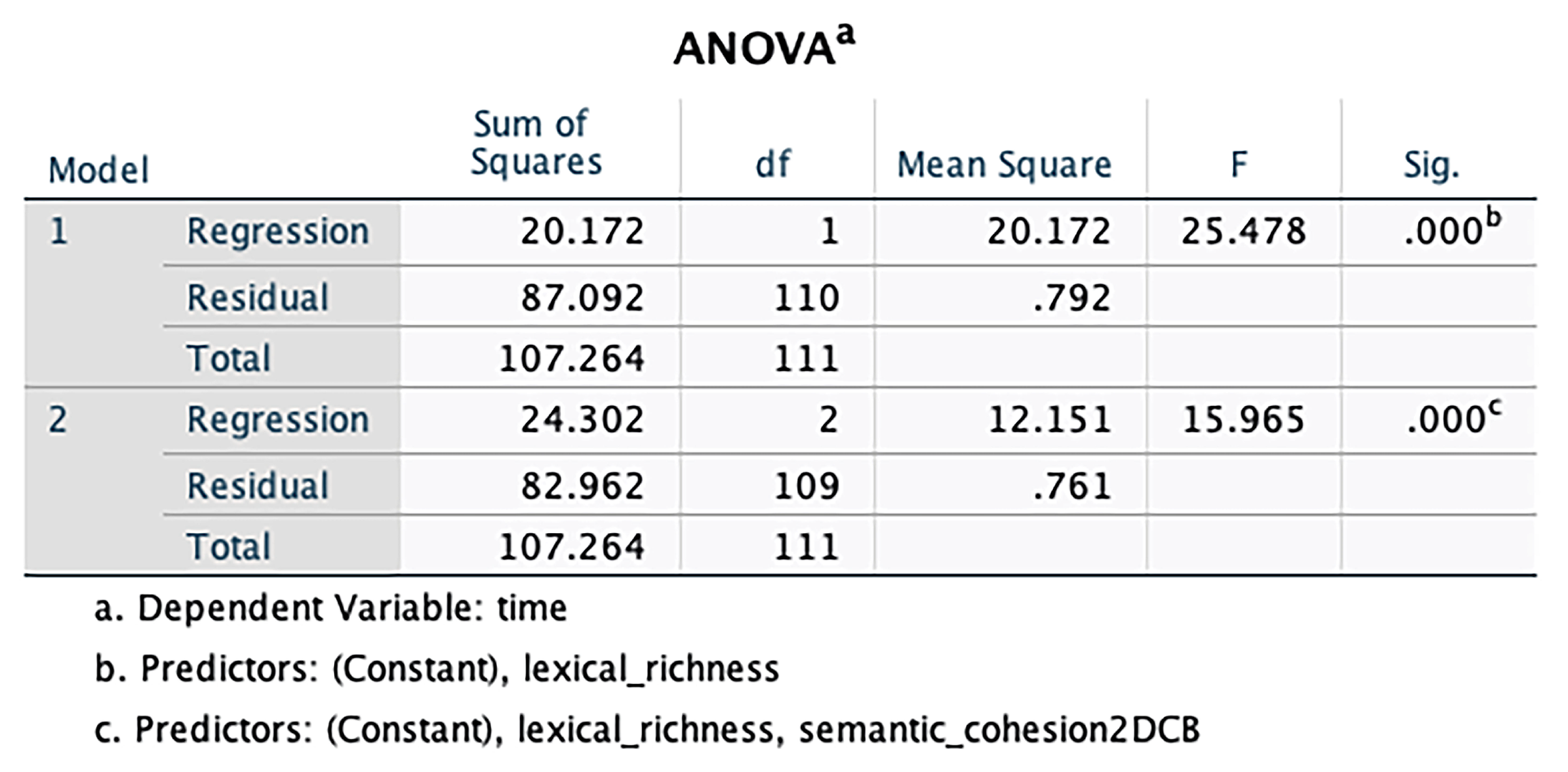

A forward stepwise regression analysis was carried out to determine the predictive value of the predictor variables ‘lexical richness’ and ‘semantic cohesion’ (both semantic similarity-based and centroid distance-based) for the ‘peer feedback given in a particular month’ (dependent variable). Two significant models appeared (Figure 5): the first contained lexical richness, and the second contained lexical richness and distance centroid-based semantic cohesion. The latter predictor explained significant additional variance in model two, indicating that it captures a characteristic of its own (see Figure 6 and Figure 7).

A MANOVA with lexical richness and the two semantic cohesion measures as dependent variables and time (‘the particular month of peer feedback’) as the independent variable showed a significant multivariate effect of time (F = 3.17; df = 9, 324; p = 0.01; Eta 0.197; Power 0.99).

The univariate tests showed a significant effect of time for lexical richness (F = 8.82; df = 3, 108; p < 0.001; Eta 0.197; Power 0.99). Therefore, the lexical richness was not the same for the peer feedback in each month. Semantic cohesion based on similarity did not show a significant time effect. Consequently, it did not differentiate between peer feedback for individual months. Distance centroid-based cohesion also showed a significant effect of time (F = 4.73; df = 3, 108; p < 0.004; Eta 0.116; Power 0.89).

Post-hoc analysis showed a significant difference between the lexical richness for month one compared with months two, three, and four (p < 0.005, p < 0.001 and p < 0.001, respectively). Months two, three, and four did not differ from each other (Figure 8). Post-hoc analysis concerning semantic cohesion based on distance centroid showed that month one differed significantly from months three and four (p < 0.002 and p < 0.001, respectively). Month two did not differ from the other months. Thus, months three and four each differed only from month one (Figure 9).

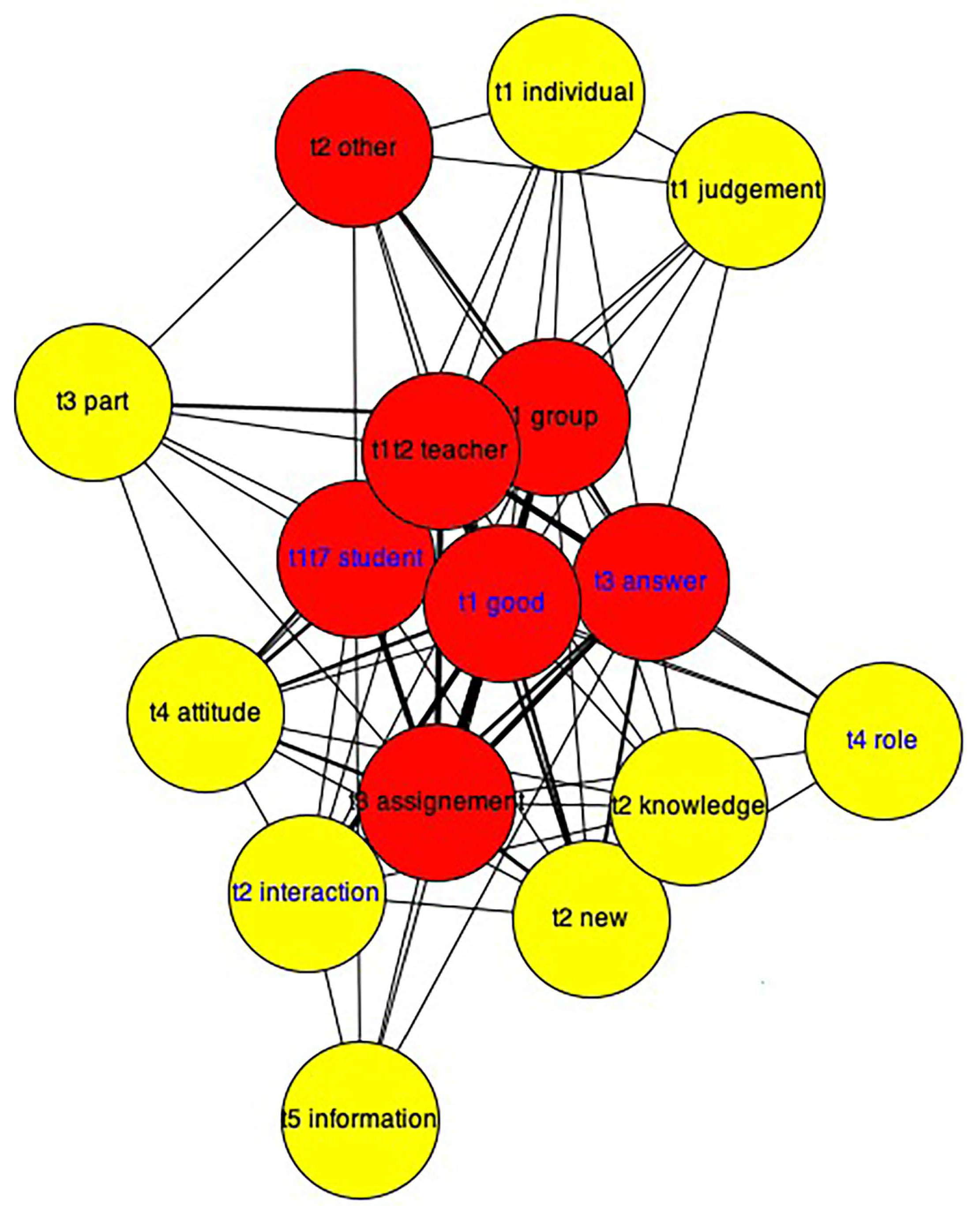

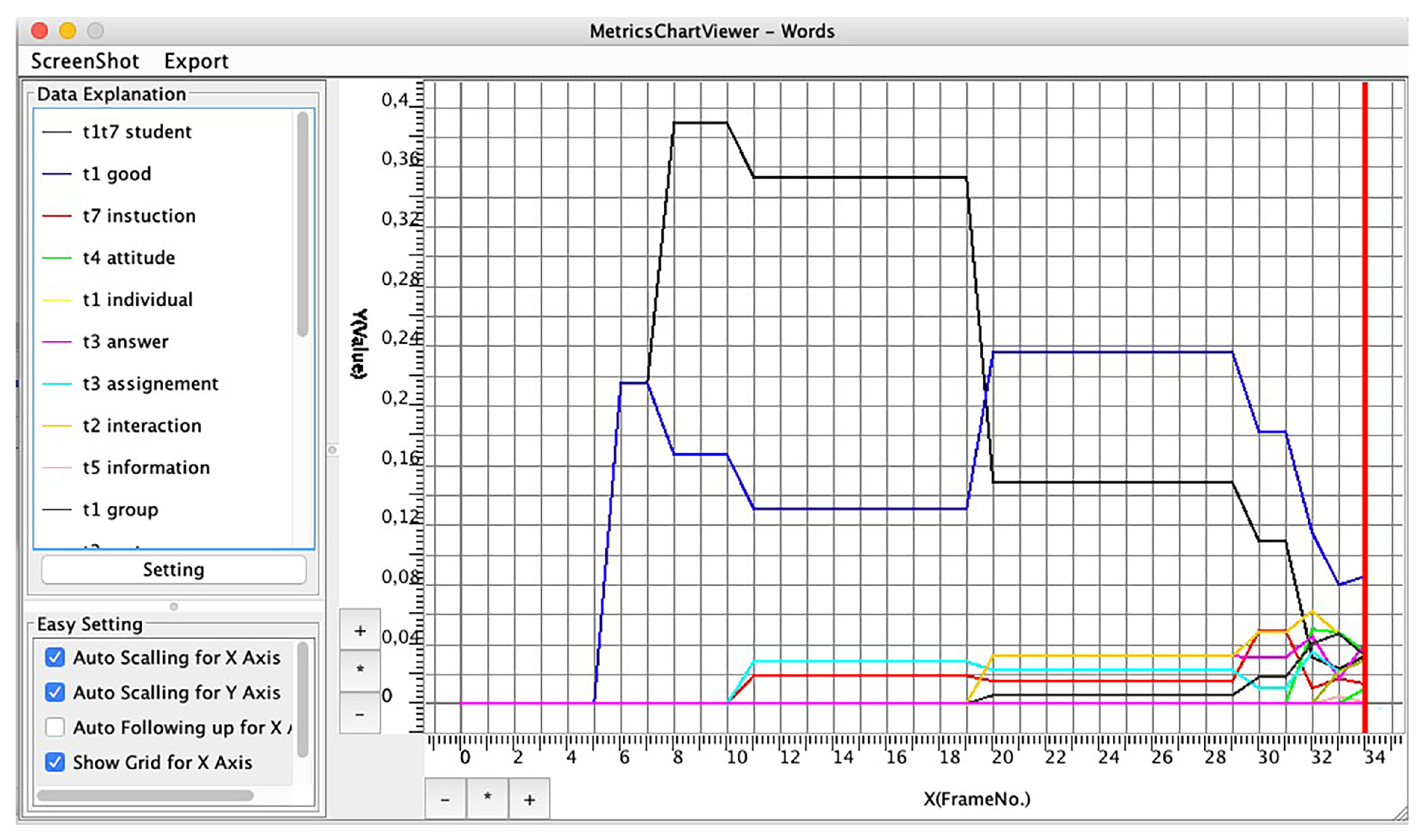

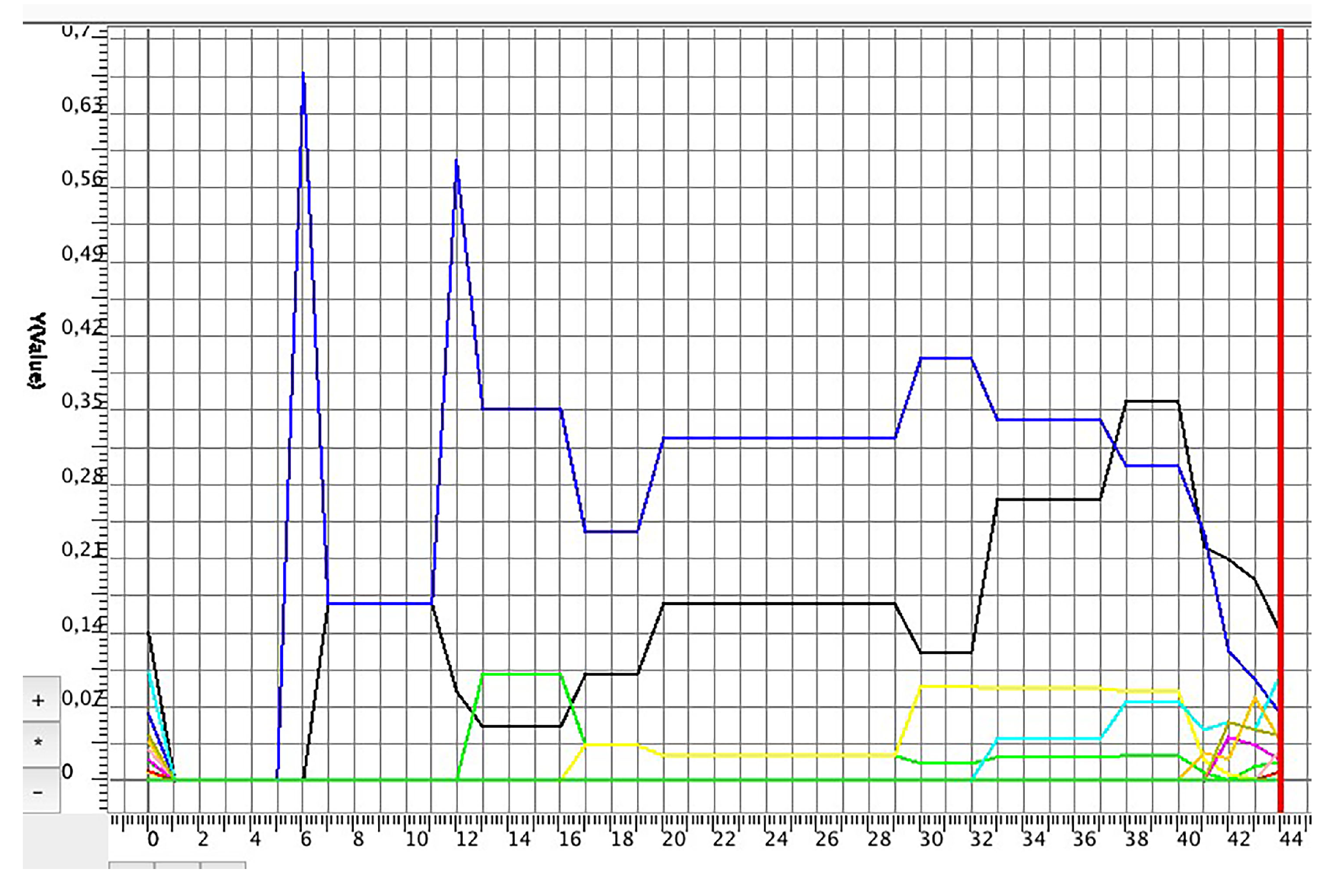

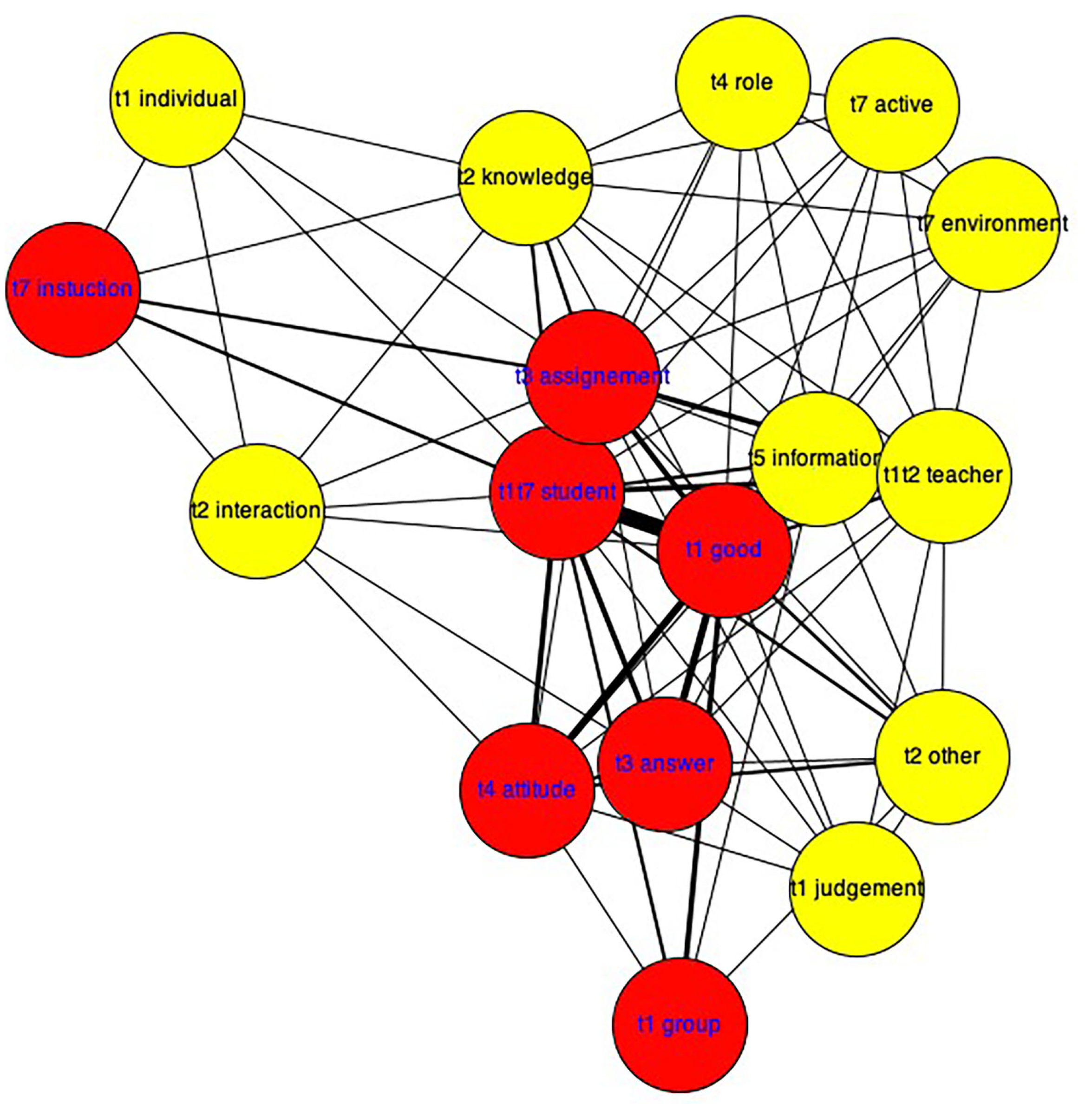

3.3. KBDeX Social Semantic Network Analysis

3.3.1. Quantitative Results:

The quantitative analyses at the total group level revealed that, of the 34 selected words related to the seven topics covering the literature students had studied, 16 words functioned as key mediators (Table 2). These words linked other conceptual expressions by students and, in that way, contributed to the lexical richness and students’ understanding of the role of teachers in classroom interactions.

Of the seven topics, ‘testing and monitoring’ was represented five times in the list; ‘group and interactions’ four times; ‘working on an assignment’ twice; ‘role of the teacher’ three times; ‘constructivism and learning theory’ once; ‘behaviourism and learning theory’ three times; and ‘learning process’ was not represented.

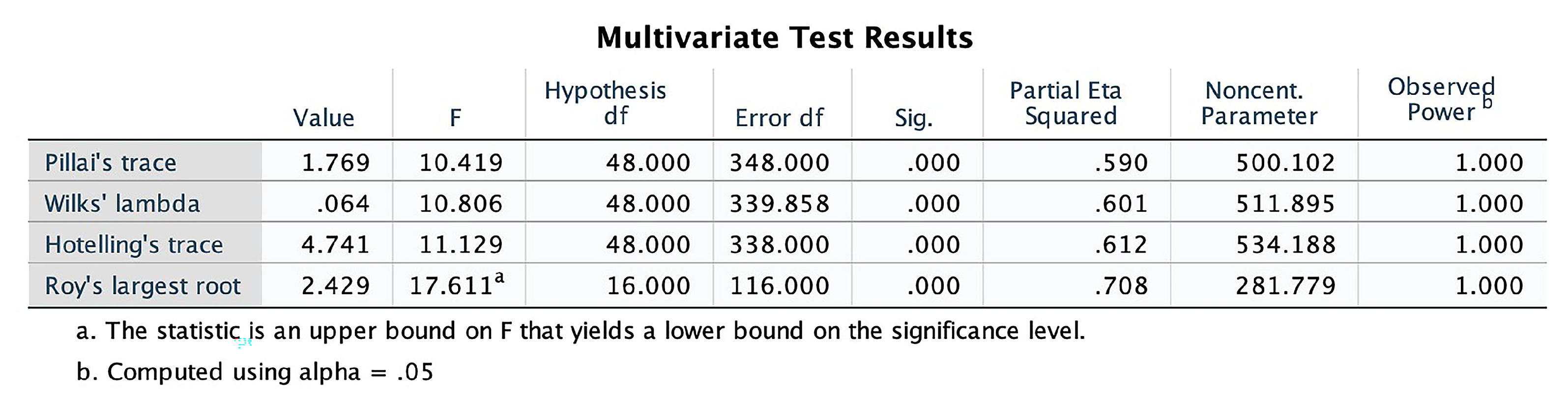

We carried out a MANOVA, where the variates were the selected words and the dependent variable was the total betweenness centrality in each feedback or reflection contribution. Group (four student work groups) was the between-groups factor. A multivariate effect was found for the Group factor (Figure 10). This means that the four student work groups differed in how they used words related to topics.

A univariate test resulted in significant differences (Figure 11) in the key mediation role of the following lexical terms related to the literature topics: Student (t1, t7); Good (t1); Attitude (t4); Interaction (t2); Answer (t3); Role (t4); Instruction (t7); Assignment (t3); Group (t1); and Part (t3), where tx indicates the topic number.

3.3.2. Qualitative Results:

To better understand the knowledge construction process, we carried out a qualitative analysis of the students’ comments. The comments were linked to topic words that had the highest degree of centrality at the end of each of the four months and in the reflections in month five. The focus was on the subgroups, to support the quantitative topic modelling analysis and the KBDeX analysis of lexical richness over time. This provided a deeper understanding of the students’ development. Individual group summaries are presented below.

3.3.3. Group One:

In peer feedback for month one, students used topic terms of equal importance (Figure 12 and Figure 13): ‘student’, ‘interaction’ and ‘answer’. These terms connected most strongly to ideas in the peer feedback. In month two, the students did not give feedback, and in month three, the topic term ‘role’ also connected more strongly to the other three terms. Over time, the conversations and the peer feedback changed from how teachers can present themselves and interact with students, to the different roles of teachers and which role is important in a particular stage of a lesson.

The final assignment reflections in month five showed that the students had integrated more topics than in the peer feedback during months one and four. The peer feedback was more disconnected and over time the relations of the topics increased and showed a stronger connection. In the students’ reflections, the topic terms ‘student’, ‘answer’, ‘interaction’, ‘good’ and ‘role’ showed the strongest connection. In the reflections by the students, ‘answer’ was quite important, which suggests that students were especially interested in answering and responding to the pupils’ learning in a way that stimulates collaborative learning.

3.3.4. Group Two:

In month three, the topics ‘student’, ‘good’, ‘interaction’ and ‘assignment’ were strongly connected. These terms are from topics t1 and t3 and come from the literature used in the lessons. It is remarkable that the betweenness centrality was higher at the end, because this group gave little peer feedback during the entire course. The reflections showed that the topic ‘answer’ also made a strong connection in the final assignments (Figure 14 and Figure 15).

3.3.5. Group Three:

During month one, few topic terms were used. In month two, feedback was more oriented towards how communication supports class management. In the third month, the teachers’ role as communicator and coach emerged in the feedback, with a strong focus on ‘good’ interaction. Month four showed more class management feedback but focussed on the teacher’s instruction, interaction and physical position in the classroom. The last feedback round and the self-reflections showed that students integrated a wider variety of content in the concepts (Figure 16 and Figure 17).

In the final word-relational network, ‘good’ and ‘teacher’ were positioned very centrally in the semantic network, and therefore in the ideas students developed. This centre connected the aspects of group processes, interaction, attitudes (of students and teachers), instruction, answers of students to questions and feedback. Moreover, the network analysis showed connections with information, level, development, active, others, learning process, individual, knowledge, new and role. The topics (1) ‘testing, monitoring, control’, (2) group interaction, (7) behaviourist learning theory/instruction, (3) working on an assignment/task and (4) role/attitude became more and more related to each other during the feedback discourse, converging in the final self-reflections.

3.3.6. Group Four:

During the four months, the terms ‘good’ from the topic words ‘testing and monitoring’, and ‘student’ from t1 and t7, for example, ‘behaviourism and learning theory’ were very connective and central to the structure of the feedback and reflections. This was indicative of thinking centred on pupils and the role of the teacher in supporting learning. The centrality, that is, the connectiveness of these terms, also assumed a more connective position, and more evenly distributed connections were observed. This was especially true of terms related to t7, ‘behaviourism and learning theory’, like instruction, guidance and support by the teacher, and for t5, ‘constructivism and learning theory’, the presence of more student-centred thinking in the reflections indicated that students were thinking more deeply, and often used their knowledge experiences in the practical assignments to build their own knowledge. Achieving this required not only a particular student attitude and interaction related to the role of the teacher and student (t4) and interaction with peers, but also opportunities for questions and answers (t3) during instruction or when working on an assignment (t3) (

Figure 18 and Figure 19).

4. Discussion and Conclusions

Overall, our results lead to the conclusion that the lexical richness in the students’ peer feedback and reflections increased, indicating that these students were developing from novices into experts. This is in line with Bransford [16] and other literature referred to in the introduction. That video recordings of students’ authentic teaching practice stimulate this developmental process is illustrated by one of the students’ reflections: ‘Where I work, we are unfortunately not allowed to film or make audio recordings. I personally regret this, because I know from previous experiences how much I can learn from this. Through video interaction you will look at yourself in a very different way and you remember images better than words.’ This illustrates the importance of practice to becoming an expert, as [9] have concluded, but similar to the ‘learning by doing’ pedagogical approach, it is only effective when students reflect on their practice [51].

It can be concluded that the students’ use of ‘expert vocabulary’ grew during the course, as evidenced by the lexical richness and the semantic cohesion based on centroid distance. In other words, students incorporated new vocabulary and maintained semantic consistency.
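To make these two indicators concrete, the following minimal sketch shows one way such measures can be computed. It is illustrative only and is not the analysis pipeline used in this study: it assumes the type-token ratio as the lexical richness measure and the mean cosine distance of word vectors to their centroid as the cohesion measure. All function names and the toy vectors are hypothetical.

```python
import math


def type_token_ratio(tokens):
    """Share of distinct words among all words (assumed richness measure)."""
    return len(set(tokens)) / len(tokens) if tokens else 0.0


def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def cosine_distance(u, v):
    """1 minus the cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)


def mean_centroid_distance(vectors):
    """Average distance of each vector to the centroid (assumed cohesion measure).

    Lower values mean the words lie closer together in the vector space.
    """
    c = centroid(vectors)
    return sum(cosine_distance(v, c) for v in vectors) / len(vectors)


if __name__ == "__main__":
    # Hypothetical tokens and 2-dimensional word vectors, for illustration only.
    tokens = ["teacher", "feedback", "teacher", "instruction"]
    vectors = [[1.0, 0.0], [0.0, 1.0]]
    print(type_token_ratio(tokens))
    print(mean_centroid_distance(vectors))
```

Under these assumptions, a rising type-token ratio combined with a stable centroid distance across measurement points would correspond to the pattern described above: new vocabulary enters the texts while their semantic consistency is maintained.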

At the beginning of the course, student teachers had little knowledge of the literature concerning interaction and teaching practice. Giving more useful, content-related peer feedback on peers’ teaching practice requires more knowledge and understanding that leads to a cohesive teaching concept. This developed during the course, as two observations show. First, lexical richness increased steadily over time for all four subgroups. Second, relations in the word networks at the end of the course were stronger (indicated by thicker lines between the words) than in the word networks at the start. At the end of the course, stronger relations were established between a larger number of topic keywords. Thus, expanding students’ activities with video recordings, feedback, interactions and reflections did not hinder their conceptual development and growth of expertise.

Our findings show that peer feedback on video recordings of authentic teaching situations stimulates the creation of new ‘personal’ concepts about teaching. The findings may encourage student teachers to update their knowledge base by using pedagogical and methodological insights offered by the teacher trainer and the course literature. The findings can motivate student teachers to improve their teaching skills and practice, and make them realise that recognising relevant patterns in their thinking about teaching will help them become more expert: true professionals.

4.1. Limitations of the Study

Although there are interesting findings, this study has limitations. First, the number of respondents was limited, and there was no intervention or control group. Second, there was only one teacher trainer involved, and the influence of teaching style, experience and other personal characteristics was not investigated. Furthermore, the influence of collaborative learning by peer feedback was not investigated at an intensive level of collaborative learning as was intended.

Although we analysed data from several points in time, as in an equivalent time sample design, no effect of testing, selection, or other internal validity errors is to be expected. This is because the students did not know about the analysis, and the data consisted of their periodic feedback instead of repeated survey answers. The Covid-19 pandemic had an impact on the teaching practice during the experimental period and some student groups were unable to give peer feedback at the agreed-on times. Nonetheless, lexical growth and use of ‘expert’ concept words were still observed.

4.2. Practical Implications

This study shows that students who actively use video, record their own teaching practice and exchange peer feedback can enhance their active knowledge base. This helps students to integrate ‘cold’ knowledge from the literature with personal knowledge derived from practical experience, and to incorporate it into a ‘warm’ teacher’s knowledge base promoting effective teaching and student learning.

We are aware that knowledge is an important factor in becoming an expert teacher. It is true that the competences of an expert professional teacher also involve skills, attitudes and motivational variables [52]. Even so, self-knowledge about these skills, attitudes and motivations is also important, as can be seen from one student’s reflections: “(…) the role as presenter was my strongest point of this lesson. Because I speak enthusiastically about my lesson and adopt a calm attitude, I can reach everyone well. This also makes the student enthusiastic and I try to convey the subject content well. Because I am still fairly young, the students trust me and I can quickly build a bond with them. I think this is one of my strongest points, though this can also be a pitfall, because I can lose dominance in the class sometimes. I am happy, and I am still the teacher and not their friend.”