Declared and Real Level of Digital Skills of Future Teaching Staff

Abstract

1. Introduction

2. Theoretical Framework

3. Research Methodology

3.1. Research Problems

- What is the level of knowledge and skills related to the use of ICT equipment among students of education?

- How do future educators assess their own digital competencies?

- To what extent is actual digital competence in using an office suite related to self-diagnosed digital competence, the perceived relevance of using ICT in education, and previous experience of formal education in digital competence? In short, how well does the objective evaluation of digital competence align with the subjective one?

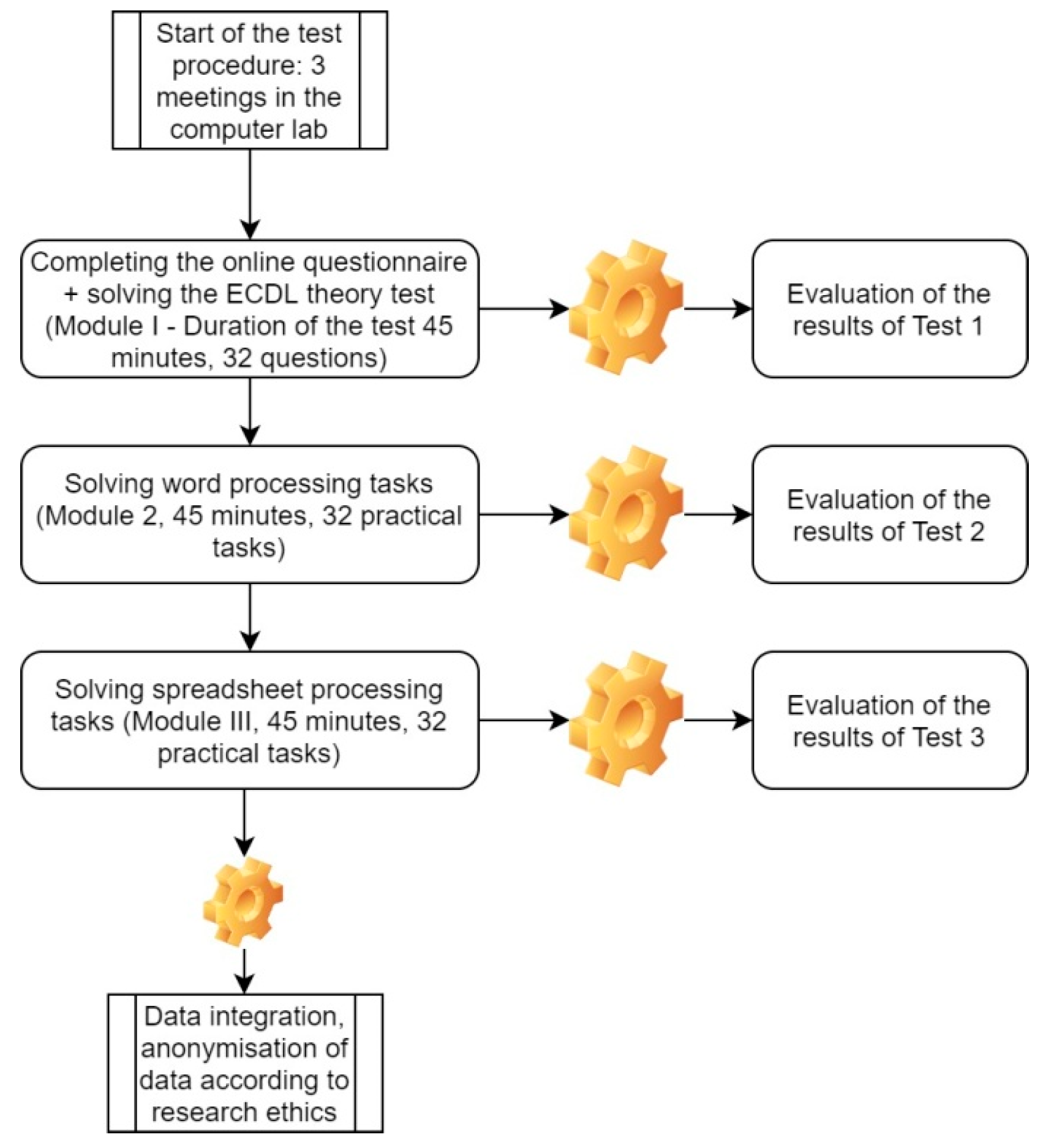

3.2. Test Procedure

3.3. Research Tools

- Operation of digital devices and knowledge of IT equipment (theoretical test): 32 single-choice questions, each worth a maximum of 1 point. The points were then converted into a percentage of correct answers on a scale of 0 to 100%. Each student had 45 min to complete the test.

- Use of word processing software at a basic level. Each student received a set of instructions in a PDF file and a set of work files in which the activities were performed. Each student was given 45 min to complete 32 tasks. Each correctly completed task earned 1 point. The points were then converted into percentages on a scale from 0 to 100%.

- Use of spreadsheet software. As in the previous modules, students were given work files and 32 tasks to complete with a time limit of 45 min. Results were then compiled and stored as percentages.

- A general self-assessment of digital competence level (5 questions) using a Likert scale from 1 (very low) to 5 (very high). The scale was developed from previously used tools [34]. The Cronbach alpha coefficient was 0.778.

- An assessment of the ability to use new hardware and software (3 questions). The scale was built on previous multi-author studies from the Smart Ecosystem for Learning and Inclusion (SELI) project [37], with responses on a 5-point Likert scale from 1 (very rarely) to 5 (very often). The Cronbach alpha coefficient was 0.720.

- An assessment of the validity of using ICT in education (6 questions) on a 5-point response scale from 1 (strongly disagree) to 5 (strongly agree). The scale was the author’s own and was derived from Serbian research on the use of ICT in education [38]. The Cronbach alpha coefficient was 0.630.
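The scoring rules and reliability coefficients listed above can be illustrated with a short sketch. It assumes the standard formula for Cronbach's alpha with sample variances; the function names `to_percent` and `cronbach_alpha` and the response layout are hypothetical and are not taken from the study's own analysis.

```python
from statistics import variance


def to_percent(points, max_points=32):
    """Convert a raw score (1 point per correct item) to a 0-100% scale."""
    if not 0 <= points <= max_points:
        raise ValueError("points must lie between 0 and max_points")
    return 100.0 * points / max_points


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total score))
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("at least two items are required")
    # Transpose respondents x items into one column per item.
    item_columns = list(zip(*responses))
    item_variance_sum = sum(variance(column) for column in item_columns)
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)
```

For example, `to_percent(24)` gives 75.0 for a student who solved 24 of the 32 tasks, and `cronbach_alpha` expects one inner list per respondent with the 1 to 5 Likert answers for a single scale.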

3.4. Sampling Procedure and Characteristics of the Study Sample

3.5. Research Ethics

4. Test Results

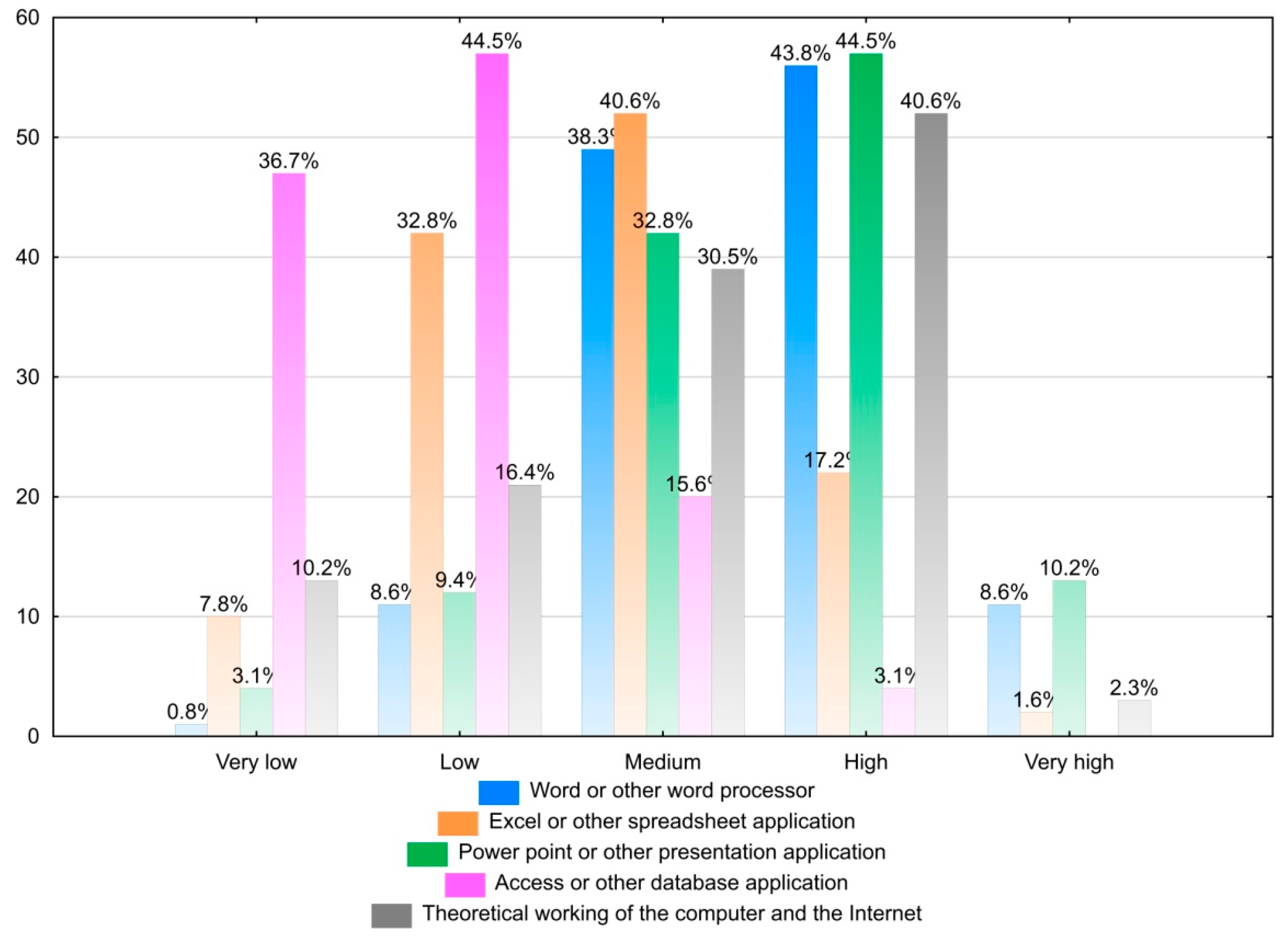

4.1. Declared Level of Digital Literacy—A Diagnostic Survey

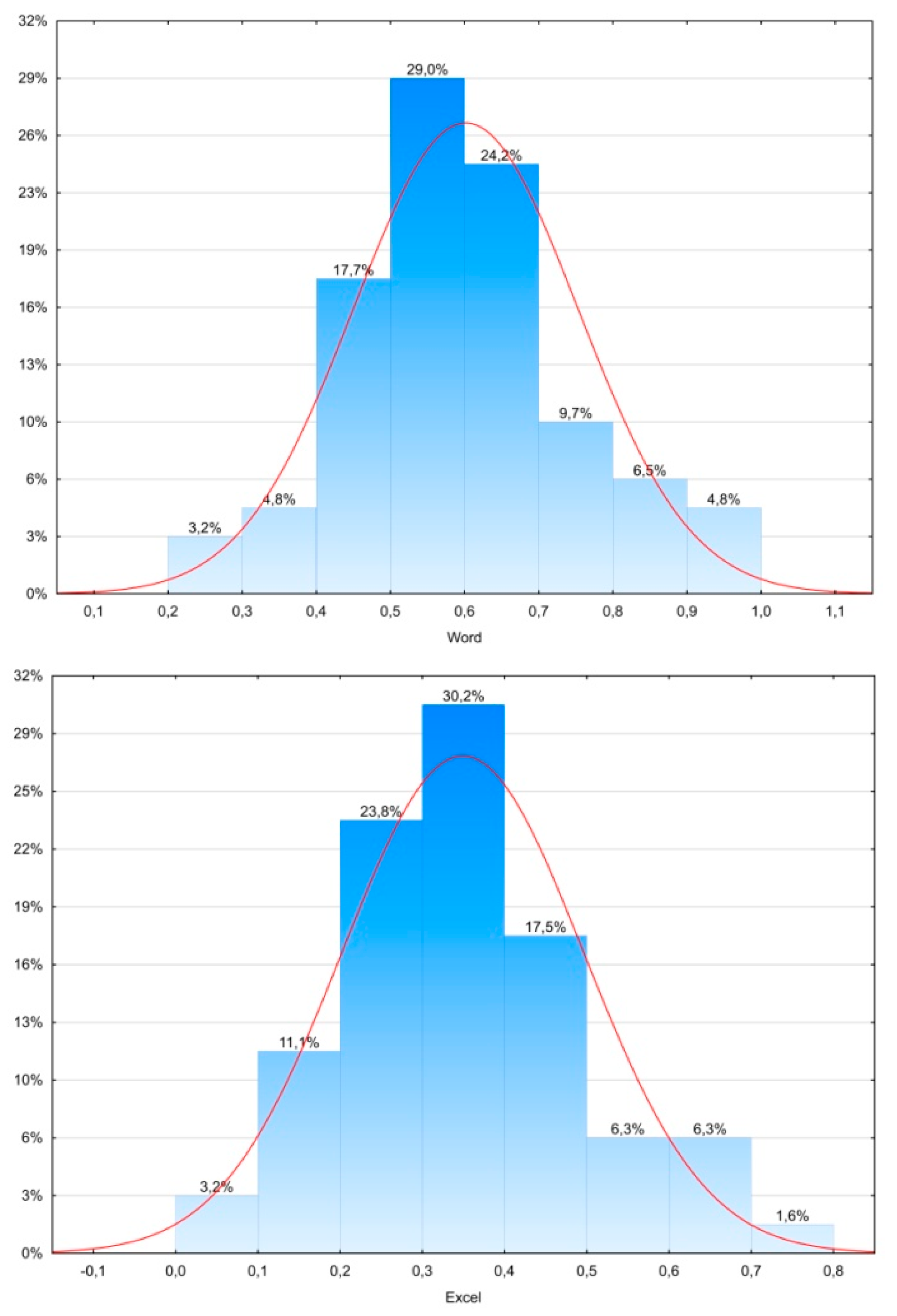

4.2. Objective Level of Digital Skills—Skills Test

4.3. Declared vs. Actual Level of Digital Skills

4.4. Prediction of Real Level of Digital Skills

5. Discussion

6. Research Limitations and New Directions in Digital Literacy Research

7. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Kaiser–Meyer–Olkin test

| | MSA |
|---|---|
| Overall MSA | 0.751 |
| I can create a web page | 0.730 |
| I can work with a computer better than others | 0.830 |
| I can work on the Internet better than others | 0.813 |
| I can use a smartphone better than others | 0.795 |
| I can also use the Internet in a creative way | 0.680 |
| Handling a word processor—self-assessment | 0.776 |
| Self-assessment on using a spreadsheet programme | 0.792 |
| Self-assessment in using a presentation software | 0.776 |
| Self-assessment on using a database editor | 0.770 |
| Knowledge of how computers and the Internet work | 0.837 |
| Experimenting with new software | 0.813 |
| Ease of use of new software | 0.714 |
| Ease of use of new ICT devices | 0.648 |
| Prediction of using ICT in the professional work of an educator | 0.751 |
| Education using ICT is interesting | 0.650 |
| ICT used in teaching improves students’ concentration | 0.543 |
| ICT used in teaching increases student engagement | 0.742 |
| ICT used in teaching increases students’ interest in the subject matter | 0.743 |
| Modern school needs ICT | 0.730 |
| Level of Computer Science teaching in a secondary school | 0.730 |
| Equipment of a computer lab in a secondary school | 0.623 |
| Content-related preparation of computer science teachers in secondary school | 0.638 |

Bartlett’s test

| χ² | df | p |
|---|---|---|
| 1001.309 | 231.000 | <0.001 |
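The degrees of freedom reported for Bartlett's test follow directly from the number of items. The sketch below assumes the textbook form of Bartlett's test of sphericity; the function names are hypothetical, and the sample size and correlation-matrix determinant are not given in this appendix, so they appear only as parameters.

```python
def bartlett_df(n_items):
    """Degrees of freedom: number of distinct off-diagonal correlations."""
    return n_items * (n_items - 1) // 2


def bartlett_chi2(n_respondents, n_items, log_det_corr):
    """Test statistic: chi2 = -(n - 1 - (2p + 5) / 6) * ln|R|.

    log_det_corr is the natural log of the determinant of the item
    correlation matrix R (0.0 when the items are uncorrelated).
    """
    return -(n_respondents - 1 - (2 * n_items + 5) / 6) * log_det_corr
```

With the 22 items listed in the Kaiser–Meyer–Olkin table, `bartlett_df(22)` returns 231, which agrees with the df value in the table.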

Chi-squared Test

| | Value | df | p |
|---|---|---|---|
| Model | 242.486 | 149 | <0.001 |

| Factor Loadings | |||||

| Factor 1 | Factor 2 | Factor 3 | Factor 4 | Uniqueness | |

| I can create a web page. | 0.817 | ||||

| I can work with a computer better than others. | 0.727 | 0.390 | |||

| I can work on the Internet better than others. | 0.903 | 0.243 | |||

| I can use a smartphone better than others. | 0.816 | 0.411 | |||

| I can also use the Internet in a creative way. | 0.898 | ||||

| Handling a word processor—self-assessment. | 0.410 | 0.647 | |||

| Self-assessment on using a spreadsheet programme. | 0.607 | 0.478 | |||

| Self-assessment in using a presentation software | 0.663 | ||||

| Self-assessment on using a database editor. | 0.632 | 0.616 | |||

| Knowledge of how computers and the Internet work. | 0.494 | 0.628 | |||

| Experimenting with new software. | 0.663 | 0.604 | |||

| Ease of use of new software. | 0.672 | 0.585 | |||

| Ease of use of new ICT devices. | 0.617 | 0.657 | |||

| Prediction of using ICT in the professional work of an educator. | 0.458 | 0.738 | |||

| Education using ICT is interesting. | 0.403 | 0.717 | |||

| ICT used in teaching improves students’ concentration. | −0.423 | 0.797 | |||

| ICT used in teaching increases student engagement. | 0.736 | 0.466 | |||

| ICT used in teaching increases students’ interest in the subject matter. | 0.647 | 0.550 | |||

| Modern schools need ICT. | 0.745 | 0.418 | |||

| Level of computer-science teaching in a secondary school. | 0.566 | 0.508 | |||

| Equipment of a computer lab in a secondary school. | 0.824 | 0.315 | |||

| Content-related preparation of computer science teachers in secondary school. | 0.648 | 0.569 | |||

| Note: applied rotation method is promax. | |||||

Factor Characteristics

| | SumSq. Loadings | Proportion var. | Cumulative |
|---|---|---|---|
| Factor 1 | 2.925 | 0.133 | 0.133 |
| Factor 2 | 2.580 | 0.117 | 0.250 |
| Factor 3 | 2.232 | 0.101 | 0.352 |
| Factor 4 | 1.549 | 0.070 | 0.422 |
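The proportion-of-variance column can be reproduced from the sums of squared loadings. The sketch below assumes that each proportion is the sum of squared loadings divided by the number of items (22 in the Kaiser–Meyer–Olkin table); the function name is hypothetical.

```python
def variance_explained(ss_loadings, n_items):
    """Return (proportions, cumulative proportions) for each factor."""
    proportions = [ss / n_items for ss in ss_loadings]
    cumulative = []
    running_total = 0.0
    for proportion in proportions:
        running_total += proportion
        cumulative.append(running_total)
    return proportions, cumulative


# Sums of squared loadings for Factors 1-4, as reported in the table.
ss_loadings = [2.925, 2.580, 2.232, 1.549]
proportions, cumulative = variance_explained(ss_loadings, n_items=22)
for factor, (prop, cum) in enumerate(zip(proportions, cumulative), start=1):
    print(f"Factor {factor}: {prop:.3f} {cum:.3f}")
```

Under that assumption, the four factors together account for about 42% of the variance in the 22 items.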

Factor Correlations

| | Factor 1 | Factor 2 | Factor 3 | Factor 4 |
|---|---|---|---|---|
| Factor 1 | 1.000 | 0.598 | 0.291 | 0.217 |
| Factor 2 | 0.598 | 1.000 | 0.198 | −0.025 |
| Factor 3 | 0.291 | 0.198 | 1.000 | 0.005 |
| Factor 4 | 0.217 | −0.025 | 0.005 | 1.000 |

Additional fit indices

| RMSEA | RMSEA 90% confidence | TLI | BIC |
|---|---|---|---|
| 0.078 | 0.054–0.086 | 0.806 | −480.467 |

References

1. Tomczyk, L.; Eliseo, M.A.; Costas, V.; Sanchez, G.; Silveira, I.F.; Barros, M.-J.; Amado-Salvatierra, H.R.; Oyelere, S.S. Digital Divide in Latin America and Europe: Main Characteristics in Selected Countries. In Proceedings of the 2019 14th Iberian Conference on Information Systems and Technologies, Coimbra, Portugal, 19–22 June 2019.

2. Potyrała, K.; Tomczyk, Ł. Teachers in the Lifelong Learning Process: Examples of Digital Literacy. J. Educ. Teach. 2021, 47, 255–273.

3. Hajduová, Z.; Smoląg, K.; Szajt, M.; Bednárová, L. Digital Competences of Polish and Slovak Students—Comparative Analysis in the Light of Empirical Research. Sustainability 2020, 12, 7739.

4. Záhorec, J.; Hašková, A.; Poliaková, A.; Munk, M. Case Study of the Integration of Digital Competencies into Teacher Preparation. Sustainability 2021, 13, 6402.

5. Tomczyk, L.; Potyrala, K.; Demeshkant, N.; Czerwiec, K. University Teachers and Crisis E-Learning: Results of a Polish Pilot Study on: Attitudes towards e-Learning, Experiences with e-Learning and Anticipation of Using e-Learning Solutions after the Pandemic. In Proceedings of the 2021 16th Iberian Conference on Information Systems and Technologies, Chaves, Portugal, 23–26 June 2021.

6. Guillén-Gámez, F.D.; Ramos, M. Competency Profile on the Use of ICT Resources by Spanish Music Teachers: Descriptive and Inferential Analyses with Logistic Regression to Detect Significant Predictors. Technol. Pedagog. Educ. 2021, 30, 511–523.

7. Cabero-Almenara, J.; Romero-Tena, R.; Palacios-Rodríguez, A. Evaluation of Teacher Digital Competence Frameworks through Expert Judgement: The Use of the Expert Competence Coefficient. J. New Approaches Educ. Res. 2020, 9, 275.

8. Guillén-Gámez, F.D.; Mayorga-Fernández, M.J.; Contreras-Rosado, J.A. Incidence of Gender in the Digital Competence of Higher Education Teachers in Research Work: Analysis with Descriptive and Comparative Methods. Educ. Sci. 2021, 11, 98.

9. Evtyugina, A.; Zhuminova, A.; Grishina, E.; Kondyurina, I.; Sturikova, M. Cognitive-Conceptual Model for Developing Foreign Language Communicative Competence in Non-Linguistic University Students. Int. J. Cogn. Res. Sci. Eng. Educ. 2020, 8, 69–77.

10. Rabiej, A.; Banach, M. Testowanie Biegłości Językowej Uczniów w Wieku Szkolnym Na Przykładzie Języka Polskiego Jako Obcego. Acta Univ. Lodz. 2020, 27, 451–467.

11. Potyrała, K.; Demeshkant, N.; Czerwiec, K.; Jancarz-Łanczkowska, B.; Tomczyk, Ł. Head Teachers’ Opinions on the Future of School Education Conditioned by Emergency Remote Teaching. Educ. Inf. Technol. 2021, 1–25.

12. Lankshear, C.; Knobel, M. Digital Literacy and Digital Literacies: Policy, Pedagogy and Research Considerations for Education. Nord. J. Digit. Lit. 2015, 10, 8–20.

13. Biezā, K.E. Digital Literacy: Concept and Definition. Int. J. Smart Educ. Urban Soc. 2020, 11, 1–15.

14. Koltay, T. The Media and the Literacies: Media Literacy, Information Literacy, Digital Literacy. Media Cult. Soc. 2011, 33, 211–221.

15. Eger, L.; Tomczyk, Ł.; Klement, M.; Pisoňová, M.; Petrová, G. How Do First Year University Students Use ICT in Their Leisure Time and for Learning Purposes? Int. J. Cogn. Res. Sci. Eng. Educ. 2020, 8, 35–52.

16. Ramowy Katalog Kompetencji Cyfrowych. Available online: https://depot.ceon.pl/bitstream/handle/123456789/9068/Ramowy_katalog_kompetencji_cyfrowych_Fra.pdf?sequence=1&isAllowed=y (accessed on 28 September 2021).

17. Liu, Z.-J.; Tretyakova, N.; Fedorov, V.; Kharakhordina, M. Digital Literacy and Digital Didactics as the Basis for New Learning Models Development. Int. J. Emerg. Technol. Learn. 2020, 15, 4.

18. Novković Cvetković, B.; Stošić, L.; Belousova, A. Media and Information Literacy—the Basic for Application of Digital Technologies in Teaching from the Discourses of Educational Needs of Teachers/Medijska i Informacijska Pismenost—Osnova Za Primjenu Digitalnih Tehnologija u Nastavi Iz Diskursa Obra. Croat. J. Educ. 2018, 20.

19. Ziemba, E. Synthetic Indexes for a Sustainable Information Society: Measuring ICT Adoption and Sustainability in Polish Government Units. The Neuropharmacology of Alcohol; Springer International Publishing: Cham, Switzerland, 2019; pp. 214–234.

20. Ziemba, E. The Contribution of ICT Adoption to the Sustainable Information Society. J. Comput. Inf. Syst. 2019, 59, 116–126.

21. Majewska, K. Przygotowanie Studentów Pedagogiki Resocjalizacyjnej Do Stosowania Nowych Technologii w Profilaktyce Problemów Młodzieży. Pol. J. Soc. Rehabil. 2020, 20, 283–298.

22. Kiedrowicz, G. Współczesny Student w Świecie Mobilnych Urządzeń. Lub. Rocz. Pedagog. 2018, 36, 49.

23. Wobalis, M. Kompetencje Informatyczne Studentów Filologii Polskiej w Latach 2010–2016. Pol. Innow. 2016, 4, 109–124.

24. Jędryczkowski, J. New media in the teaching and learning process of students. Dyskursy Młodych/Adult Educ. Discourses 2019, 20.

25. Pulak, I.; Staniek, J. Znaczenie Nowych Mediów Cyfrowych w Przygotowaniu Zawodowym Nauczycieli Edukacji Wczesnoszkolnej w Kontekście Potrzeb Modernizacji Procesu Dydaktycznego. Pedagog. Przedszkolna i Wczesnoszkolna 2017, 5, 77–88.

26. Romaniuk, M.W.; Łukasiewicz-Wieleba, J. Zdalna Edukacja Kryzysowa w APS w Okresie Pandemii COVID-19; Akademia Pedagogiki Specjalnej: Warszawa, Poland, 2020.

27. List, A. Defining Digital Literacy Development: An Examination of Pre-Service Teachers’ Beliefs. Comput. Educ. 2019, 138, 146–158.

28. List, A.; Brante, E.W.; Klee, H.L. A Framework of Pre-Service Teachers’ Conceptions about Digital Literacy: Comparing the United States and Sweden. Comput. Educ. 2020, 148, 103788.

29. García-Martín, J.; García-Sánchez, J.-N. Pre-Service Teachers’ Perceptions of the Competence Dimensions of Digital Literacy and of Psychological and Educational Measures. Comput. Educ. 2017, 107, 54–67.

30. Arteaga, M.; Tomczyk, Ł.; Barros, G.; Sunday Oyelere, S. ICT and Education in the Perspective of Experts from Business, Government, Academia and NGOs: In Europe, Latin America and Caribbean; Universidad del Azuay: Cuenca, Ecuador, 2020.

31. Fedeli, L. School, Curriculum and Technology: The What and How of Their Connections. Educ. Sci. and Soc. 2018, 2.

32. Konan, N. Computer Literacy Levels of Teachers. Procedia Soc. Behav. Sci. 2010, 2, 2567–2571.

33. Leahy, D.; Dolan, D. Digital Literacy: A Vital Competence for 2010? In Key Competencies in the Knowledge Society; Springer: Berlin/Heidelberg, Germany, 2010; pp. 210–221.

34. Tomczyk, Ł.; Szotkowski, R.; Fabiś, A.; Wąsiński, A.; Chudý, Š.; Neumeister, P. Selected Aspects of Conditions in the Use of New Media as an Important Part of the Training of Teachers in the Czech Republic and Poland—Differences, Risks and Threats. Educ. Inf. Technol. 2017, 22, 747–767.

35. Eger, L.; Klement, M.; Tomczyk, Ł.; Pisoňová, M.; Petrová, G. Different User Groups of University Students and Their Ict Competence: Evidence from Three Countries in Central Europe. J. Balt. Sci. Educ. 2018, 17, 851–866.

36. Eger, L.; Egerová, D.; Mičík, M.; Varga, V.; Czeglédi, C.; Tomczyk, L.; Sládkayová, M. Trust Building and Fake News on Social Media from the Perspective of University Students from Four Visegrad Countries. Commun. Today 2020, 1, 73–88.

37. Oyelere, S.S.; Tomczyk, Ł. ICT for Learning and Inclusion in Latin America and Europe Case Study from Countries: Bolivia, Brazil, Cuba, Dominican Republic, Ecuador, Finland, Poland, Turkey, Uruguay; University of Eastern Finland: Joensuu, Finland, 2019.

38. Stošić, L.; Stošić, I. Perceptions of Teachers Regarding the Implementation of the Internet in Education. Comput. Human Behav. 2015, 53, 462–468.

39. Pangrazio, L.; Godhe, A.-L.; Ledesma, A.G.L. What Is Digital Literacy? A Comparative Review of Publications across Three Language Contexts. E-Learn. Digit. Media 2020, 17, 442–459.

40. Falloon, G. From Digital Literacy to Digital Competence: The Teacher Digital Competency (TDC) Framework. Educ. Technol. Res. Dev. 2020, 68, 2449–2472.

41. Reynolds, R. Defining, Designing for, and Measuring “Social Constructivist Digital Literacy” Development in Learners: A Proposed Framework. Educ. Technol. Res. Dev. 2016, 64, 735–762.

42. Tomczyk, Ł. Skills in the Area of Digital Safety as a Key Component of Digital Literacy among Teachers. Educ. Inf. Technol. 2020, 25, 471–486.

43. Trifonas, P.P. (Ed.) Learning the Virtual Life: Public Pedagogy in a Digital World; Routledge: London, UK, 2012.

44. Peled, Y. Pre-Service Teacher’s Self-Perception of Digital Literacy: The Case of Israel. Educ. Inf. Technol. 2020, 26, 2879–2896.

45. Mahmood, K. Do People Overestimate Their Information Literacy Skills? A Systematic Review of Empirical Evidence on the Dunning-Kruger Effect. Commun. Inf. Lit. 2016, 10, 199.

46. Dunning, D. The Dunning–Kruger Effect. In Advances in Experimental Social Psychology; Elsevier: Amsterdam, The Netherlands, 2011; pp. 247–296.

47. Frania, M. Selected Aspects of Media Literacy and New Technologies in Education as a Challenge of Polish Reality. Perspect. Innov. Econ. Bus. 2014, 14, 109–112.

48. Duda, E.; Dziurzyński, K. Digital Competence Learning in Secondary Adult Education in Finland and Poland. Int. J. Pedagogy Innov. New Technol. 2019, 6, 22–32.

49. Góralczyk, N. Identity and Attitudes Towards The Past, Present and Future of Student Teachers in The Digital Teacher of English Programme. Teaching English with Technology 2020, 20, 42–65.

50. Hall, R. Systemu Certyfikacji ECDL–Wpływ Zmian w Procedurze Oceny Egzaminów Na Jakość Systemu Certyfikacji. Nierówności społeczne a wzrost gospodarczy 2015, 1, 192–204.

51. Cabero-Almenara, J.; Guillen-Gamez, F.D.; Ruiz-Palmero, J.; Palacios-Rodríguez, A. Classification Models in the Digital Competence of Higher Education Teachers Based on the DigCompEdu Framework: Logistic Regression and Segment Tree. J. e-Learn. Knowl. Soc. 2021, 17, 49–61.

52. Eger, L. Learning a Jeho Aplikace (E-Learning and Its Applications); Západočeská univerzita v Plzni: Plzen, Czech, 2020.

53. Jakešová, J.; Kalenda, J. Self-Regulated Learning: Critical-Realistic Conceptualization. Procedia Soc. Behav. Sci. 2015, 171, 178–189.

54. Mirke, E.; Kašparová, E.; Cakula, S. Adults’ Readiness for Online Learning in the Czech Republic and Latvia (Digital Competence as a Result of ICT Education Policy and Information Society Development Strategy). Period. Eng. Nat. Sci. (PEN) 2019, 7, 205.

55. Jakešová, J.; Gavora, P.; Kalenda, J.; Vávrová, S. Czech Validation of the Self-Regulation and Self-Efficacy Questionnaires for Learning. Procedia Soc. Behav. Sci. 2016, 217, 313–321.

56. Tomczyk, Ł. Edukacja Osób Starszych. Seniorzy w Przestrzeni Nowych Mediów; Difin: Warszawa, Poland, 2015.

57. Tomczyk, Ł.; Jáuregui, V.C.; de La Higuera Amato, C.A.; Muñoz, D.; Arteaga, M.; Oyelere, S.S.; Akyar, Ö.Y.; Porta, M. Are Teachers Techno-Optimists or Techno-Pessimists? A Pilot Comparative among Teachers in Bolivia, Brazil, the Dominican Republic, Ecuador, Finland, Poland, Turkey, and Uruguay. Educ. Inf. Technol. 2020, 26, 2715–2741.

| Authors | Tool | Conclusions |
|---|---|---|
| Majewska (2020) [21] | Triangulation of tools, including a survey questionnaire | About 20% of respondents use ICT efficiently |
| Kiedrowicz (2018) [22] | Diagnostic tests | The Internet is most often used for entertainment and communication |
| Wobalis (2016) [23] | Triangulation of tools, including knowledge test | Digital literacy levels have been steadily declining for several years |
| Jędryczkowski (2019) [24] | Data from e-learning platform | Students of pedagogical faculties have a low level of information literacy and self-education in relation to operating e-learning platforms |
| Pulak, Staniek (2017) [25] | Diagnostic survey, self-evaluation | Students highly rate their skills in operating entertainment websites (e.g., social networking sites, music and video sites), while their skills in operating office software are much lower |
| Romaniuk, Łukasiewicz-Wieleba (2020) [26] | Diagnostic survey, self-evaluation | Most students rate their own digital skills as good |

| | Word | Excel | Theoretical |
|---|---|---|---|
| Mean | 0.599 | 0.347 | 0.617 |
| Std. error of mean | 0.019 | 0.018 | 0.009 |
| Median | 0.594 | 0.375 | 0.625 |
| Mode | 0.469 | 0.375 | 0.656 |
| Std. deviation | 0.150 | 0.146 | 0.098 |
| Skewness | 0.017 | 0.429 | −1.059 |
| Std. error of skewness | 0.304 | 0.302 | 0.214 |
| Kurtosis | 0.250 | −0.165 | 3.914 |
| Std. error of kurtosis | 0.599 | 0.595 | 0.425 |
| Shapiro–Wilk | 0.985 | 0.959 | 0.937 |
| p-value of Shapiro–Wilk | 0.644 | 0.034 | <0.001 |
| Range | 0.719 | 0.656 | 0.688 |
| Minimum | 0.219 | 0.063 | 0.125 |
| Maximum | 0.938 | 0.719 | 0.813 |
| 25th percentile | 0.508 | 0.219 | 0.563 |
| 50th percentile | 0.594 | 0.375 | 0.625 |
| 75th percentile | 0.688 | 0.406 | 0.688 |
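The standard-error rows in the table above depend only on the standard deviation and the number of students in each test. The sketch below uses the usual formulas for the standard error of the mean and of sample skewness. The group sizes (62, 63 and 128) are an assumption: they are not stated in this section and were back-solved from the reported standard errors of skewness. The function names are hypothetical.

```python
import math


def standard_error_of_mean(sd, n):
    """SEM = SD / sqrt(n)."""
    return sd / math.sqrt(n)


def standard_error_of_skewness(n):
    """SES = sqrt(6n(n - 1) / ((n - 2)(n + 1)(n + 3)))."""
    return math.sqrt(6 * n * (n - 1) / ((n - 2) * (n + 1) * (n + 3)))


# Assumed group sizes (not reported here) and the standard deviations
# from the table.
assumed_groups = {
    "Word": (0.150, 62),
    "Excel": (0.146, 63),
    "Theoretical": (0.098, 128),
}
for test_name, (sd, n) in assumed_groups.items():
    sem = standard_error_of_mean(sd, n)
    ses = standard_error_of_skewness(n)
    print(f"{test_name}: SEM = {sem:.3f}, SE of skewness = {ses:.3f}")
```

If the assumed group sizes are right, the practical tests were completed by roughly half as many students as the theoretical test, which is worth keeping in mind when comparing the columns.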

| Variable | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
| 1. Word–self-evaluation | — | | | | | | |
| 2. Excel–self-evaluation | 0.577 *** | — | | | | | |
| 3. PowerPoint–self-evaluation | 0.570 *** | 0.511 *** | — | | | | |
| 4. Access–self-evaluation | 0.210 * | 0.478 *** | 0.208 * | — | | | |
| 5. Theory–self-evaluation | 0.385 *** | 0.382 *** | 0.340 *** | 0.419 *** | — | | |
| 6. Theoretical test ECDL | 0.089 | 0.077 | 0.018 | −0.033 | 0.161 | — | |
| 7. Word ECDL | 0.354 ** | 0.128 | 0.181 | −0.143 | 0.060 | 0.283 * | — |
| 8. Excel ECDL | 0.273 * | 0.471 *** | 0.312 * | 0.152 | 0.223 | 0.235 | 0.067 |
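Each cell in the matrix above pairs one declared measure with one tested measure. The sketch below shows how such a coefficient could be computed, assuming Pearson's r (the coefficient type is not stated in this section). The function name and the sample values are hypothetical and are not data from the study.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return covariance / (spread_x * spread_y)


# Hypothetical illustration only: self-ratings (1-5) and test scores (0-1).
declared_word_skill = [3, 4, 2, 5, 4]
tested_word_score = [0.59, 0.66, 0.47, 0.72, 0.50]
print(round(pearson_r(declared_word_skill, tested_word_score), 3))
```

A value near 0 would mean that self-ratings carry little information about tested performance, while a value near 1 would mean that declared and actual skill rise together.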

| | Model 1: Theoretical | | | Model 2: Word | | | Model 3: Excel | | |
|---|---|---|---|---|---|---|---|---|---|
| | β | SE | p | β | SE | p | β | SE | p |
| I can create a web page | −0.11 | 0.09 | 0.25 | 0.09 | 0.14 | 0.52 | 0.13 | 0.14 | 0.35 |
| I can work with a computer better than others | −0.11 | 0.14 | 0.44 | 0.35 | 0.24 | 0.15 | 0.16 | 0.25 | 0.52 |
| I can work on the Internet better than others | −0.07 | 0.15 | 0.65 | −0.02 | 0.26 | 0.95 | −0.23 | 0.26 | 0.36 |
| I can use a smartphone better than others | 0.22 | 0.13 | 0.10 | −0.25 | 0.23 | 0.29 | 0.32 | 0.20 | 0.11 |
| I can also use the Internet in a creative way | 0.01 | 0.09 | 0.89 | −0.12 | 0.14 | 0.39 | −0.09 | 0.14 | 0.54 |
| Experiment with new software | −0.14 | 0.10 | 0.18 | −0.07 | 0.15 | 0.63 | 0.05 | 0.17 | 0.79 |
| Ease of use of new software | 0.22 | 0.12 | 0.08 | 0.33 | 0.17 | 0.06 | 0.26 | 0.19 | 0.19 |
| Ease of use of new ICT devices | 0.13 | 0.12 | 0.29 | 0.08 | 0.18 | 0.63 | −0.03 | 0.18 | 0.87 |
| Prediction of using ICT in the professional work of an educator | −0.02 | 0.11 | 0.88 | 0.12 | 0.15 | 0.41 | −0.20 | 0.16 | 0.22 |
| Education using ICT is interesting | 0.05 | 0.11 | 0.63 | −0.24 | 0.17 | 0.17 | 0.31 | 0.16 | 0.06 |
| ICT used in teaching improves students’ concentration | −0.02 | 0.10 | 0.85 | −0.14 | 0.14 | 0.30 | −0.11 | 0.15 | 0.49 |
| ICT used in teaching increases student engagement | −0.06 | 0.12 | 0.60 | 0.14 | 0.20 | 0.49 | 0.06 | 0.16 | 0.71 |
| ICT used in teaching increases students’ interest in the subject matter | 0.05 | 0.12 | 0.66 | 0.01 | 0.19 | 0.94 | −0.21 | 0.17 | 0.24 |
| Modern schools need ICT | 0.11 | 0.12 | 0.37 | 0.06 | 0.20 | 0.77 | 0.18 | 0.19 | 0.34 |
| Level of computer science teaching in a secondary school | 0.00 | 0.12 | 0.98 | −0.28 | 0.20 | 0.17 | −0.25 | 0.16 | 0.13 |
| Equipment of a computer lab in a secondary school | 0.16 | 0.12 | 0.18 | −0.10 | 0.22 | 0.65 | 0.03 | 0.16 | 0.84 |
| Content-related preparation of secondary school computer science teachers | −0.06 | 0.11 | 0.56 | 0.16 | 0.17 | 0.35 | 0.24 | 0.17 | 0.16 |
| Assessment in computer science in secondary school | 0.10 | 0.10 | 0.32 | 0.20 | 0.14 | 0.17 | −0.08 | 0.15 | 0.62 |
| | R = 0.412; R² = 0.170; F = 1.245; p < 0.001 | | | R = 0.610; R² = 0.372; F = 1.418; p < 0.001 | | | R = 0.602; R² = 0.362; F = 1.389; p < 0.001 | | |

| Digital Competence | Self-Declarations | Knowledge and Skills Test |
|---|---|---|
| Advantages | | |
| Disadvantages | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tomczyk, Ł. Declared and Real Level of Digital Skills of Future Teaching Staff. Educ. Sci. 2021, 11, 619. https://doi.org/10.3390/educsci11100619
