Abstract

Metacognition is a construct that is noteworthy for its relationship with the prediction and enhancement of student performance. It is of interest in education, as well as in cognitive psychology, because it contributes to competencies such as learning to learn and the understanding of information. This study was conducted at a state school in the Community of Madrid (Spain) with a sample of 130 students in Grade 3 of primary education (8 years old). The research involved the use of a digital teaching platform called Smile and Learn, as the feedback included in its digital activities may have an effect on students’ metacognition. We analyzed the implementation of the intelligent platform at school and the activities most commonly used. The Junior Metacognitive Awareness Inventory (Jr. MAI) was the instrument chosen for the external evaluation of metacognition. The results show greater use of logic and spatial activities than of activities in other areas. A relationship is observed between metacognitive knowledge and the use of digital exercises that provide specific feedback and work on logic and visuospatial skills. We discuss our findings in terms of their educational implications, metacognition assessment, and recommendations for improving the digital materials.

1. Introduction

The study of knowledge and how it is developed, built, and regulated has been a feature of the literature on different constructs and variables since the research by James, Piaget, and Vygotsky. Many of these variables are now contained in the theories on metacognition and self-regulated learning [1,2,3]. Flavell [4,5] began to use the term metacognition in the 1970s, and its study has continued uninterruptedly since its consolidation in the 1980s and 1990s [1,6,7,8,9,10].

According to Flavell [5], metacognition may be described as the ability to know what one knows and how that knowledge is used. Flavell’s theory [5] considers several variables that could be linked to metacognitive knowledge, such as tasks, strategies, and individual characteristics. Metacognitive knowledge is, therefore, defined as “that part of your accumulated world knowledge that has to do with people as cognitive agents and their cognitive tasks, goals, actions and experiences” (p. 906, [5]). Brown [11] pursues another line of study, focusing on the understanding students have of their cognitive processes and their ability to regulate them. These frameworks have informed studies of students’ metacognitive development [1]. Interest in metacognition has re-emerged in recent years in line with the need to develop students capable of coping with future challenges [10,12,13]. This highlights the importance of metacognition in regulating and controlling learning [14,15,16]. These processes may also be closely related to other variables, such as students’ cognitive processes and their motivation to perform classroom tasks [12,17,18,19,20,21,22]. This research stream includes several studies, such as those by Pintrich [2], Magno [9], and Schmitt and Sha [23]. Nevertheless, because metacognition is a multidimensional construct, the literature contains a wide diversity of definitions, depending on the interpretations or perspectives that scholars adopt for the relationships between the variables [8].

Besides being useful during schooling, the development of metacognitive skills and knowledge may be beneficial in the acquisition of competencies such as learning to learn [13]. Metacognition helps to bolster critical thinking and the assimilation of information, which are becoming crucial for the use of new technologies in our globalized world [12,20,24,25,26,27,28]. The literature in this field contains studies on memory-related metacognition [29], reading [11,23,30,31], mathematics [31,32,33], and sciences [24,34,35], along with others that focus on the different variables related to metacognition for improving the learning process [15,19,36]. Most of these studies stress the importance of making students aware of the different ways of learning through which they can regulate their cognitive processes [10,12].

It is therefore worth understanding how students begin to gain an awareness of their knowledge and how they develop their monitoring and regulating strategies. This metacognitive knowledge advances through the different stages of schooling, from early childhood education [13,20,23,37], with improvements in its use and comprehension from the ages of seven or eight, to adulthood [7,16,25]. Metacognitive regulation can also be worked on through the practice and experience acquired from the skills and strategies promoted in the students’ environment [6,12]. Nevertheless, this regulation develops more slowly than metacognitive knowledge [23]. The teacher’s role is therefore important, because teachers can influence students’ thinking processes as they develop. To ensure that this process unfolds appropriately, students need feedback on their progress, which helps them acknowledge what they have learnt and understand which processes they are using, as well as the knowledge acquired. This enables them to establish a platform from which they can regulate their improvement [7,12].

Digital teaching materials and games often provide feedback that helps students in their decision-making and in improving their performance over the course of an activity [32,38,39,40]. For these materials to develop metacognition by working on cognitive processes through feedback, they need certain pedagogical characteristics and an appropriate design (e.g., [32,41,42]). Digital materials may instill the level of engagement students need to succeed in tasks and to repeat that success, as the feedback they obtain helps them keep improving with practice [24,28,38,41]. This could increase students’ long-term retention and learning abilities [39]. Nevertheless, further research is needed on the variables that could inform a design framework for digital materials that facilitates students’ learning and skill development [41,42]. The importance of how these materials are implemented, and of training teachers in their classroom use, should also be highlighted [42].

Digital activities or games that address cognitive processes may be used to promote reflection. Working on these aspects, as well as on the students’ learning strategies, may aid metacognitive development [43,44]. Students’ cognitive limitations should also be considered when choosing the material to be used in the classroom. These limitations depend on the students’ developmental stage, which may affect their understanding of, and performance on, the tasks the material presents [14,44]. In turn, the use of these digital materials in the classroom may support teachers in the schooling processes best suited to their students and may also guide the individual observation and assessment of student progress [41].

The present research analyzes the use of activities from different areas of knowledge in the lessons of Grade 3 students (8 years old). As a second aim, we explore the relationships between the use of digital materials with several kinds of feedback and metacognitive knowledge or metacognitive regulation among students in Grade 3 of primary education.

The Smile and Learn Platform

Smile and Learn is an intelligent platform in the field of educational technology. As of March 2020, the platform contained more than 5000 digital activities. These activities can be classified as games, quizzes, videos, and tales, all of which have been designed and supervised by a multidisciplinary team of educators. Most of these activities can be played at different levels of difficulty. The activities are organized by category into eight worlds according to the type of knowledge they represent. The worlds are related to various academic subjects, such as Science, Logic, Literacy, Emotions, and Arts, and provide teachers with additional material and activities for use in their lessons [45]. The platform also features a recommendation system based on artificial intelligence that recommends activities according to the children’s performance [46].

One advantage of implementing and analyzing the Smile and Learn platform is that it includes activities for all subjects. This makes it possible to analyze the usability of several types of activity during lessons, which may reveal which areas are preferred at school when using technological resources. A learning analytics system is also included to provide feedback to teachers based on student performance. The learning analytics feedback graphics (Figure 1) show progress as percentages of right or wrong answers and the time needed to complete each activity.

Figure 1.

Graphics of the feedback for each game on the Smile and Learn platform: (a) Feedback graphics of the right and wrong answers each time played; (b) feedback of the progress from several play sessions and levels played.

The feedback included in the digital activities is designed to show students their successes and mistakes, the time they took to perform the tasks or a timer while they work, and their progress or advance through levels. These activities can be repeated as often as needed, through different exercises, to support student learning.

The following are a number of examples that could help develop metacognition: pairing activities, jigsaw puzzles, comparison exercises, activities for finding and selecting information, self-assessments, and activities that link knowledge to its application in everyday life, among others [7,12,13,17]. Within these activities, teachers may provide students with different types of feedback: summative, formative, indirect, direct, simple, complex, etc. [38,40,47,48]. Suitable feedback on performance in each task might be based on the learning process, through monitoring, guidance, and self-assessment, to support metacognitive development [10]. At the same time, feedback can help students self-regulate their learning, thereby reinforcing their engagement and the achievement of their goals [22,47].

According to these guidelines, the activities should include characteristics such as initial self-assessment questions, clear goals set at the beginning of each activity, feedback on progress and areas for improvement, and the possibility of repeating the activity as often as necessary [10]. Feedback is required to record achievements through student actions. It fulfils two basic requirements: providing information and helping students draw up a new strategy for achieving the goal [38]. Feedback can, therefore, be defined as the information students receive about the efficacy of their actions, which also strengthens their interest in persevering in the activity [49].

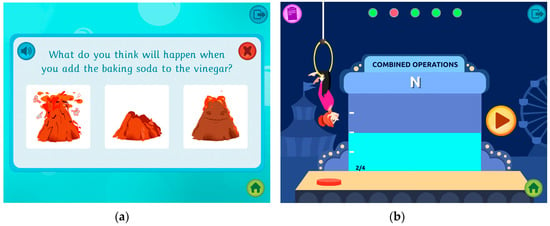

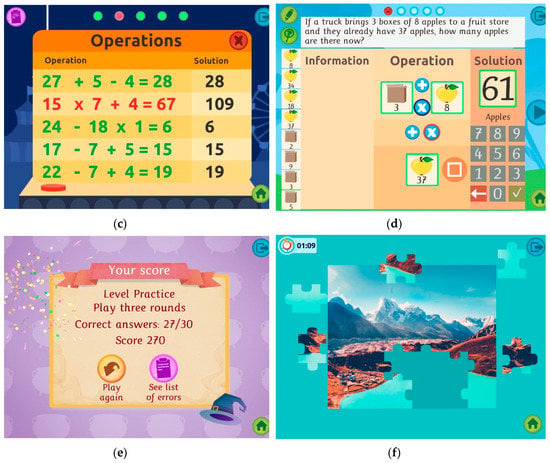

On the Smile and Learn platform, feedback differs across worlds, as described below (Figure 2):

Figure 2.

Screenshots of feedback examples in the activities: (a) initial self-assessment questions for the experiment activity in the Science world; (b) level progress screen in the adaptive calculation activity, with right and wrong answers shown at the top of the screenshot; (c) assessment of right and wrong answers in adaptive calculations; (d) feedback corrections to promote self-assessment; (e) final score screen for multiplication practice; (f) timed feedback for the puzzle activity in the Spatial world.

- Science: This world features activities in the field of science. Feedback in these activities includes initial self-assessment questions and right or wrong answers.

- Spatial: This world trains visual–spatial and cognitive–spatial skills. Feedback on these activities includes right or wrong answers during the activities and the time needed to complete them.

- Logic: This world works on logical–mathematical skills. Feedback in these activities is more varied than in other worlds. It includes right or wrong answers, corrections and specific feedback on mistakes, the time needed to complete the activities, and the level reached in the adaptive calculations.

- Literacy: These activities are based on tales, vocabulary, word games, etc. Feedback for these activities offers right or wrong answers.

- Emotions: This world has activities used to develop emotional skills. Feedback provided during these activities includes right or wrong answers, as well as the time needed to complete the activity (for some).

- Arts: This world contains artistic activities whose feedback includes right or wrong answers.

- Multiplayer: This world includes games against the machine or classmates. Feedback includes right and wrong answers.

- An additional activity, without feedback, in which students manage the resources earned in other activities to build virtual villages.

2. Materials and Methods

2.1. Participants and Cohorts

The sample consisted of 130 students in Grade 3 (8 years old) at a state school in the Community of Madrid (Spain). The cohort was selected through non-probability sampling. The school was chosen from among several schools that joined the pilot groups within the Community of Madrid during the first year of implementation. The selection criteria included being a state school, having enough digital devices for every child, teachers’ engagement with the platform, and collaboration with the research project. At the beginning of the school year, the students’ ages were as follows: Mean = 7.89; SD = 0.311. At the end of the year, the ages were: Mean = 8.49; SD = 0.503. There were 72 boys (55.38%) and 58 girls (44.62%).

2.2. Instruments

The Junior Metacognitive Awareness Inventory (Jr. MAI) [50] was used to collect data on the metacognition variable. Model A, which is adapted for children aged 8–11, was used. This questionnaire consists of 12 questions, answered on a scale from 1 to 3, and has a Cronbach’s α of 0.76 [50]. It is composed of two factors that correspond to the concept of metacognition formulated by Brown [11].

- Metacognition Knowledge (K): This factor assesses students’ knowledge about their learning process. It consists of 6 items that provide the final score for this factor. Metacognitive knowledge corresponds to an understanding of cognition or of cognitive processes, focusing on declarative knowledge, or “knowing about”. This involves knowledge of the strategies and procedures for solving the task (processes of reading, writing, memory, problem-solving, etc.) and also includes understanding the effectiveness of individual capabilities, skills, and experiences for performing a task [51,52].

- Metacognition Regulation (R): This factor assesses whether students recognize their regulation process in learning tasks and whether they apply this process when they study or work in class. R consists of 6 items that give the final score. Metacognitive regulation (the regulation of the activities that control thought and learning) focuses on procedural knowledge, in other words, “knowing how”. Three key processes can be distinguished. First, there is the planning of activities before a task is solved, to anticipate those activities and their outcomes. Second, there is the control, monitoring, and regulation of strategies while the task is being solved, through verification, correction, or review of the strategy used. Third, there is the assessment of the results obtained, according to the goals pursued and their efficacy [51,52].
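As a concrete reading of the two-factor structure, the sketch below scores one completed Jr. MAI form by summing the 1–3 responses of the six items in each factor, so each factor ranges from 6 to 18. The item-to-factor key (`K_ITEMS`, `R_ITEMS`) and the function name are hypothetical placeholders; the inventory's actual key should be substituted.

```python
# Hypothetical item-to-factor key: replace with the actual Jr. MAI (Model A) key.
K_ITEMS = (1, 2, 3, 4, 5, 6)      # metacognitive knowledge items (assumed)
R_ITEMS = (7, 8, 9, 10, 11, 12)   # metacognitive regulation items (assumed)


def score_jr_mai(responses: dict[int, int]) -> dict[str, int]:
    """Sum the 1-3 responses for each factor; each factor ranges from 6 to 18."""
    expected = set(K_ITEMS) | set(R_ITEMS)
    if set(responses) != expected:
        raise ValueError("expected answers for exactly items 1-12")
    if any(v not in (1, 2, 3) for v in responses.values()):
        raise ValueError("responses must be coded 1, 2 or 3")
    return {
        "K": sum(responses[i] for i in K_ITEMS),
        "R": sum(responses[i] for i in R_ITEMS),
    }


# Example: a child answering 3 on every knowledge item and 2 on every regulation item.
example = {i: 3 for i in K_ITEMS} | {i: 2 for i in R_ITEMS}
print(score_jr_mai(example))  # {'K': 18, 'R': 12}
```

Summing items keeps the factor scores on an interval-like scale, which matches how the variables are treated in the data analysis.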

Since the questionnaire was originally written in English, it was translated into Spanish to ensure that all the students could fully understand it. We followed the basic recommendations for adapting questionnaires given by Muñiz, Elosua, and Hambleton [53]. The Cronbach’s α of the Spanish version was 0.56.
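Since two reliability coefficients are reported (0.76 for the original inventory and 0.56 for the Spanish version), a short sketch of how Cronbach's α is computed may help in interpreting them. It applies the standard formula to a respondents-by-items matrix; the data are invented and this is not the authors' SPSS procedure.

```python
from statistics import variance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items matrix of scores.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    Sample variances are used throughout; the ratio is unchanged if population
    variances are used consistently instead.
    """
    k = len(scores[0])
    if k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need a rectangular matrix with at least two items")
    item_vars = [variance([row[j] for row in scores]) for j in range(k)]
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Invented toy data: 4 children answering 3 items on the 1-3 scale.
toy = [[1, 2, 1], [2, 1, 2], [3, 3, 2], [2, 3, 3]]
print(round(cronbach_alpha(toy), 3))  # 0.706
```

Because α grows with both the number of items and their intercorrelation, a 12-item scale split into two 6-item factors can show modest values even when each item is reasonable.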

This questionnaire was selected after a literature review because it could be applied to the age group selected for the study. Although research on metacognitive variables has increased in recent years, it has usually been conducted with older populations, who manage their metacognitive regulation better (e.g., [14,53]). Few questionnaires assess metacognition at age 8. Even so, we decided to carry out a study on metacognition because it is a relevant construct for students’ academic lives. We also began with this analysis because children start using technology at very young ages and we wanted to determine whether there is a relationship between the two variables at these ages.

Moreover, most of the instruments designed for collecting such data are based on interviews, self-assessments, or self-report questionnaires (e.g., [37,50,54,55]), which makes the evaluation of metacognitive variables controversial. For 8-year-olds, self-assessing one’s abilities and processes [5,54] is psychologically difficult and hard to communicate. Despite this, self-reports are the instruments most commonly used to collect data from large samples [56]. This study used the Jr. MAI questionnaire because it is simple to apply to the selected population and is widely used in the literature, together with its counterpart for other ages, the Metacognitive Awareness Inventory (MAI) [8,26,32,54,57,58]. Usage time of the digital material was recorded by the Smile and Learn platform itself, which the class used during the study. Each activity played on the Smile and Learn platform records the time and provides additional feedback on performance, allowing the creators to analyze general patterns of behavior and design more personalized experiences for users. This record of usage time makes it possible to identify the most commonly played areas and the time dedicated to each.

2.3. Procedure

The first step involved explaining the procedure to the school and seeking the families’ consent. The information provided included assuring the school community that the data gathered would be used solely for the research project in this study. To assess the digital material and its relationship with the development of metacognition, a quasi-experimental study was conducted during the school day. Pre-test data were collected at the beginning of the school year in October 2018. Following this initial step, the teachers were trained in the use of the platform, which they used during class time over six months. Post-test data were then collected in April 2019 following the same procedure. The questionnaire was administered by two external evaluators, who answered any queries the students had.

The teacher training sessions, held on both a group and an individual basis, involved explaining how the Smile and Learn platform works, how to use its functions to customize profiles, and how to prepare teaching units, for example by choosing applications. Furthermore, to help teachers choose the material for each year, a teacher’s book was compiled with the activities corresponding to each year according to the school curriculum of the Community of Madrid. Didactic guidelines and information on the activities were also provided (included as Supplementary Materials).

The material developed by Smile and Learn is intended to complement and assist teachers. On this premise, and given the platform’s unrestricted use at the school, we assessed the implementation and real-world use of the different applications (games, quizzes, videos, and tales) in the classroom. Teachers were free to choose the activities they wanted to use in class, which allowed us to analyze their preferences. The time spent on each activity may reveal differences in usage time across the worlds into which the activities are grouped according to the skills they address: science, spatial, logic, literacy, emotional, art, and multiplayer.

2.4. Data Analysis

Students were matched to the user data gathered by the platform. The users registered in the platform’s database were tracked together with all their peers in their Grade 3 classes (8 years old) to obtain a 1:1 correspondence between users and students. The usage time for each world was calculated from the time spent on the different activities that make up that world.
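The per-world usage calculation can be sketched as follows, assuming the platform exports one record per activity session. The tuple layout `(student_id, world, seconds_played)` and both function names are hypothetical; averaging only over students who played a world is one possible reading of how per-world averages such as those in Figure 3 might be computed, not a confirmed description of the authors' procedure.

```python
from collections import defaultdict


def usage_minutes_by_world(logs):
    """Sum each student's activity time per world and convert seconds to minutes.

    `logs` is an iterable of (student_id, world, seconds_played) tuples; this
    record layout is hypothetical, not the platform's documented schema.
    """
    totals = defaultdict(lambda: defaultdict(float))
    for student_id, world, seconds in logs:
        totals[student_id][world] += seconds
    return {s: {w: t / 60 for w, t in worlds.items()} for s, worlds in totals.items()}


def mean_minutes_among_players(per_student, world):
    """Return (number of students who played `world`, their mean minutes)."""
    played = [worlds[world] for worlds in per_student.values() if world in worlds]
    return len(played), (sum(played) / len(played) if played else 0.0)


logs = [
    ("s01", "Logic", 600), ("s01", "Logic", 300), ("s01", "Spatial", 420),
    ("s02", "Spatial", 1200),
]
per_student = usage_minutes_by_world(logs)
print(per_student)  # {'s01': {'Logic': 15.0, 'Spatial': 7.0}, 's02': {'Spatial': 20.0}}
print(mean_minutes_among_players(per_student, "Spatial"))  # (2, 13.5)
```

Keeping the per-student totals as an intermediate result makes it straightforward to use usage time as a covariate later, one value per student and world.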

The data were analyzed with SPSS Statistics 22. First, descriptive statistics were used to analyze usage. These data address the objective of evaluating the implementation and teachers’ preferences.

Second, this study seeks to verify whether there is a relationship between the metacognitive variables (knowledge and regulation) and the use of the Smile and Learn platform’s teaching applications. To test this aim, the following hypotheses were formulated:

- Relationships with the variable Metacognition knowledge:

  - H0: There is no relationship between the use of digital activities with the incorporated feedback and metacognitive knowledge.

  - H1: There is a relationship between the use of digital activities with the incorporated feedback and metacognitive knowledge.

- Relationships with the variable Metacognition regulation:

  - H0: There is no relationship between the use of digital activities with the incorporated feedback and metacognitive regulation.

  - H1: There is a relationship between the use of digital activities with the incorporated feedback and metacognitive regulation.

For the inter-subject analysis, the data were analyzed using a repeated-measures ANOVA with usage time as the covariate. The scores for the metacognitive variables were taken as the dependent variables. H0 is rejected when p ≤ 0.05.
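To show what a repeated-measures test with a covariate computes, the sketch below handles the two-time-point case (pre-test and post-test) by regressing each student's pre-to-post difference on the covariate, an equivalent formulation of the within-subject tests. It is plain Python, not SPSS's implementation; the function name and toy data are hypothetical, and which of the two effects corresponds to the statistics reported in the Results is not stated here.

```python
from statistics import fmean


def rm_anova_with_covariate(pre, post, covariate):
    """Two-time-point repeated-measures ANOVA with one covariate (sketch).

    With two measurements, the within-subject contrast d = post - pre carries
    all the within-subject information. Regressing d on [1, covariate]:
    - the intercept test is the time effect (evaluated at covariate = 0);
    - the slope test is the time x covariate interaction.
    Each test has 1 and n - 2 degrees of freedom, with F = t**2.
    """
    pre, post, x = list(pre), list(post), list(covariate)
    n = len(x)
    if not (len(pre) == len(post) == n) or n < 3:
        raise ValueError("need at least 3 complete (pre, post, covariate) triples")
    d = [b - a for a, b in zip(pre, post)]
    x_bar, d_bar = fmean(x), fmean(d)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxd = sum((xi - x_bar) * (di - d_bar) for xi, di in zip(x, d))
    slope = sxd / sxx
    intercept = d_bar - slope * x_bar
    df_error = n - 2
    mse = sum((di - intercept - slope * xi) ** 2 for xi, di in zip(x, d)) / df_error
    f_time = intercept ** 2 / (mse * (1 / n + x_bar ** 2 / sxx))
    f_interaction = slope ** 2 / (mse / sxx)

    def partial_eta_sq(f):
        # With 1 effect df, SS_effect / (SS_effect + SS_error) = F / (F + df_error).
        return f / (f + df_error)

    return {
        "df": (1, df_error),
        "time": (f_time, partial_eta_sq(f_time)),
        "time_x_covariate": (f_interaction, partial_eta_sq(f_interaction)),
    }


# Invented toy data: four students, pre/post scores and minutes of use.
result = rm_anova_with_covariate(
    pre=[10, 11, 12, 13], post=[11, 14, 14, 17], covariate=[10, 20, 30, 40]
)
print(result["df"], round(result["time_x_covariate"][0], 3))  # (1, 2) 3.556
```

Centring the covariate before fitting would change only the time effect (it would then be evaluated at the mean usage time), leaving the interaction test unchanged.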

Our variables are quantitative. For the Metacognition knowledge and Metacognition regulation variables, we relied on the Central Limit Theorem [59,60]. Moreover, these variables are sums of several items and can be treated as interval variables. The platform usage time variable is a ratio variable.

3. Results

3.1. Analysis of Usage

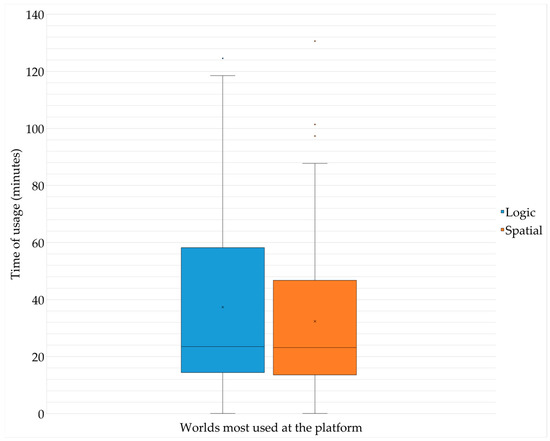

The percentage of students who used each world during class time was as follows: Logic (86.92%), Spatial (83.08%), Arts (14.62%), Science (10%), Emotions (7.69%), Multiplayer (5.38%), and Literacy (3.85%).

With unrestricted use at school, the applications related to logic and visuospatial skills were used most. This study selected the worlds whose activities exceeded 50% usage, so we focus on the Logic and Spatial worlds. The descriptive statistics of usage for these two worlds are presented in Figure 3.
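The usage percentages are consistent with the share of the 130 students who used each world (for example, 113/130 ≈ 86.92% for Logic, matching the 113 Logic players reported in Figure 3). The sketch below reproduces the percentages and applies the 50% selection rule. Only the Logic and Spatial counts appear in the text; the other counts are back-calculated from the published percentages, assuming a denominator of 130.

```python
SAMPLE_SIZE = 130

# Students who used each world. Logic (113) and Spatial (108) are reported in
# Figure 3; the other counts are back-calculated from the reported percentages
# under the assumption that the denominator is the full sample of 130.
students_per_world = {
    "Logic": 113, "Spatial": 108, "Arts": 19, "Science": 13,
    "Emotions": 10, "Multiplayer": 7, "Literacy": 5,
}

usage_pct = {w: 100 * n / SAMPLE_SIZE for w, n in students_per_world.items()}
selected = [w for w, pct in usage_pct.items() if pct > 50]  # the 50% usage rule

for world, pct in usage_pct.items():
    print(f"{world}: {pct:.2f}%")
print("Selected for analysis:", selected)  # Selected for analysis: ['Logic', 'Spatial']
```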

Figure 3.

Graphic of the descriptive statistical use for the Logic and Spatial worlds in the 3rd grade of primary education. Number of students who played Logic activities = 113. Average time engaging in Logic activities = 37.34 min. Number of students who played Spatial activities = 108. Average time spent with Spatial activities = 32.39 min.

These results show teachers’ preference for digital activity-based lessons that work on Logic and Spatial skills. This may indicate that teachers have a greater interest in working on these skills with technological resources. Alternatively, these activities may be the best designed or best adapted to teaching needs.

3.2. Analysis of the Effects of Using Digital Material on Metacognition

To assess the metacognition variables, we used the students’ Jr. MAI scores, which are shown in Table 1. A greater improvement in scores was found for Metacognition Regulation. Descriptive statistics are included for all students who completed the pre-test and post-test. The differences in the number of students reflect the experimental mortality (attrition) typical of this type of research at school.

Table 1.

Descriptive statistics of the pre-test and post-test scores. Column headings: N (number of students), AVG (average), SD (standard deviation), Min. (minimum value), and Max. (maximum value).

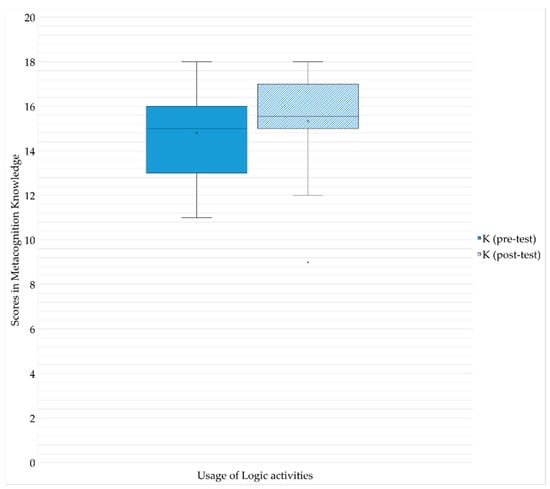

To examine the effects of using digital material on the Jr. MAI scores, we analyzed the results with a repeated-measures ANOVA. This statistical test and the structure of the database allow SPSS Statistics to recognize paired data and select the students who meet the defined conditions. For the first analysis, the condition was that students had completed the pre-test and post-test and had played in the Logic world. For the second analysis, the condition was that students had played Spatial activities and completed both questionnaires. The results show a significant relationship between Metacognition Knowledge (K) and the use of teaching applications from the Logic and Spatial worlds. For Logic activities and Metacognition Knowledge, the results are F(1) = 4.250, p = 0.043 (p < 0.05), ηp² = 0.058, observed power = 0.529 (Figure 4). With the use of logic applications, H0 is therefore rejected (p ≤ 0.05) for the first hypothesis, on the relationship with metacognitive knowledge, and a relationship between the variables studied cannot be ruled out.
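The case selection described above (students with both questionnaires who also played a given world) can be sketched as a simple filter. The record fields (`pre_K`, `post_K`, `logic_min`, `spatial_min`), the function name, and the rule that "played" means more than zero minutes are all hypothetical; in the study, this selection was done within SPSS.

```python
# Hypothetical per-student records; field names are illustrative only.
students = [
    {"id": "s01", "pre_K": 12, "post_K": 14, "logic_min": 40.0, "spatial_min": 0.0},
    {"id": "s02", "pre_K": 11, "post_K": None, "logic_min": 25.0, "spatial_min": 30.0},
    {"id": "s03", "pre_K": 13, "post_K": 15, "logic_min": 0.0, "spatial_min": 35.5},
]


def analysis_sample(records, usage_field, score="K"):
    """Keep students with both pre- and post-test scores who played the given world."""
    return [
        r for r in records
        if r[f"pre_{score}"] is not None
        and r[f"post_{score}"] is not None
        and r[usage_field] > 0  # assumption: any recorded time counts as playing
    ]


print([r["id"] for r in analysis_sample(students, "logic_min")])    # ['s01']
print([r["id"] for r in analysis_sample(students, "spatial_min")])  # ['s03']
```

Students missing either questionnaire drop out of both subsamples, which is why the analyzed N can be smaller than the number of students who used each world.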

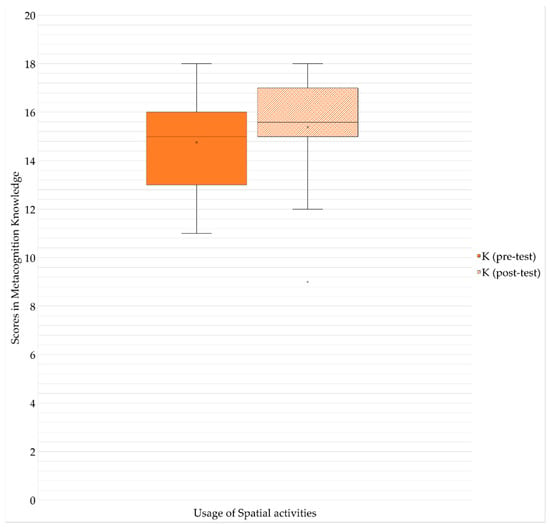

Figure 4.

Graphic representation of the differences between the descriptive statistical scores for Metacognition Knowledge (K) with the use of teaching applications that address logical–mathematical skills. The statistical representations correspond with students who completed the pre-test and post-test and played Logic activities. Number of students = 71.

The use of applications from the Spatial world is also significant, with higher scores in Metacognition Knowledge (K): F(1) = 5.417, p = 0.023 (p < 0.05), ηp² = 0.077, observed power = 0.630. Both the effect size and the observed power are higher for Spatial applications than for Logic applications in their relationship to Metacognition Knowledge (K). These results may reflect the effect of time feedback, which is more characteristic of the Spatial world (Figure 5). Since p ≤ 0.05, H0 is rejected for the first hypothesis. Thus, there may be a relationship between the use of Spatial world applications with built-in feedback and metacognitive knowledge in the sample of this study.
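As a consistency check on the reported effect sizes, partial eta squared for an effect with one numerator degree of freedom can be recovered from F. Assuming an error term of N − 2 degrees of freedom (as in a repeated-measures design with a single covariate) and the sample sizes given in the figure captions (N = 71 for Logic, N = 67 for Spatial):

$$
\eta_p^2 = \frac{F \cdot df_{\text{effect}}}{F \cdot df_{\text{effect}} + df_{\text{error}}}
$$

$$
\text{Logic: } \eta_p^2 = \frac{4.250}{4.250 + 69} \approx 0.058, \qquad
\text{Spatial: } \eta_p^2 = \frac{5.417}{5.417 + 65} \approx 0.077
$$

Both values match those reported, which supports reading the tests as F(1, 69) and F(1, 65); this reading of the error degrees of freedom is an inference rather than a value stated in the original output.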

Figure 5.

Graphic representation of the differences between the descriptive statistical scores for Metacognition Knowledge (K) with the use of teaching applications that address visuospatial skills. The statistical representations correspond to students who completed the pre-test and post-test and played Spatial activities. Number of students = 67.

Nevertheless, no significant result was found for metacognitive regulation, so H0 cannot be rejected (p > 0.05) for the second hypothesis. There may be no relationship between digital activities with built-in feedback and metacognitive regulation among our students. This result might be explained by the absence of a relationship between feedback and metacognitive regulation. Other factors that could affect these results are the age of the students or the limitations of this type of questionnaire. The scarce use of the other worlds limits comparisons and further analyses of the platform’s usability.

4. Discussion

School use shows teachers’ preference for activities related to Logic and Spatial skills. The greater use of these activities may be due to their ease of implementation in the classroom. One of the limitations of this study is the lack of class time to cover the syllabus, which reduces the possibility of incorporating new methods that take time to implement [61,62]. Likewise, the scarce use of the Science and Literacy worlds could be explained by the fact that their activities require more classroom time to complete. In turn, the greater use of the Logic and Spatial worlds compared with others, such as Multiplayer or Emotions, may be due to differences in the number of activities in each world: the Logic and Spatial worlds contain more activities than the Multiplayer and Emotions worlds (cf. Supplementary Materials). Thus, the usage analysis suggests that teachers chose Logic activities because of their short duration in the classroom, using them as complementary materials to reinforce the school curriculum.

However, Spatial world activities are also widely used. These activities are also short, although they focus more on developing student skills or personal competences. Their use may reflect teachers’ interest in developing other student competences, or it may be related to students’ prior academic performance. Thus, teachers’ interest in reinforcing student learning is highlighted by their use of brief, easy-to-implement activities as support for, or a supplement to, their classes [62,63].

The implementation of this material is considered satisfactory, as the teachers’ collaboration and interest were always helpful. However, teachers’ motivation to use digital activities did not ensure good use of all the materials [62]. The uneven implementation across areas is open to question. It may be explained by teachers’ need for more specific training, or by the training they received in the use of these materials itself. Training teachers in the use of technological material in the classroom should help them to accompany students in using that technology [63]. Greater benefits for student learning might therefore have been obtained with more intensive training in the use of these materials. Such training, focused more on how to make the applications work with students, could help increase the use of worlds such as Science and Literacy.

Further research is needed on teaching needs and areas of interest for the use of technology in the classroom. Activity design could be improved by making materials more attuned to teaching and classroom needs. Quick activities, such as puzzles or math operations, may need to be planned in as many areas as possible to fit the pace of classes.

The results suggest a relationship between metacognitive knowledge and the use of digital materials with built-in feedback, specifically the activities in the Logic and Spatial worlds. The feedback in these activities mainly involves goal setting (right or wrong answers) and time spent on the activity. This feedback could help students understand what they have to do and encourage them to achieve their goals. Further, it may have helped the Grade 3 students (8 years old) recognize what they understood about the activities that address logical–mathematical and visuospatial skills. Moreover, the characteristic feedback of activities such as adaptive calculations may help students understand their progress, as well as their metacognitive knowledge. In turn, students with learning difficulties in mathematics may benefit from acquiring metacognitive knowledge, as these difficulties are often caused by not knowing what they have to do in the tasks [64]. Accordingly, the relationship between the use of mathematics applications and metacognitive knowledge was significant. Metacognition is related to mathematical skills, and this relationship may be two-way, whereby mathematical exercises help to develop metacognitive knowledge [22,64]. Mathematical skills are in turn related to spatial skills, which might explain the significant results in both areas [64,65]. The larger effect found for spatial skills raises the possibility that feedback on time and progress is relevant for metacognitive knowledge. This means that addressing logical–mathematical and spatial skills through digital material might influence the cognitive work that facilitates metacognitive knowledge. Metacognitive instruction through feedback seems to help students recognize their metacognitive knowledge. This could be most significant for students who lack favorable conditions and opportunities to learn metacognitive knowledge and skills spontaneously from referents such as parents, peers, or teachers [4,36,37].

Nevertheless, no relationships were found with metacognitive regulation, although the test scores showed greater differences between students for that variable. The literature generally reports that most metacognitive activities emerge between the ages of 8 and 10 and are developed and refined in later years [13,14]. This could explain why the results point to the acquisition of metacognitive knowledge at age 8 but not to the management of that knowledge to put it into practice. However, some studies raise doubts about this, such as that by Whitebread et al. [37], who contend that the tools for measuring metacognition at early ages are not suited to children's language skills. Flavell [5] contends that young children have limitations when applying metacognitive strategies, as they make scant use of their cognitive abilities. It is during the first years of primary education that children explore the development and management of their cognitive and metacognitive skills in step with the increasing demands of their school activities. As they progress through the various stages of schooling, children begin to understand more clearly what they know and how they learn, becoming able to determine their parameters of action [33,37]. Nevertheless, some processes unfold before others: metacognitive skills such as monitoring or assessment seem to mature later than skills like planning. Even so, very young children can show a basic understanding of orientation, planning, and reflection if the task captures their interest and suits their level of understanding. This may be addressed in classrooms through different approaches that enable children to develop metacognitive strategies for problem-solving [14,19,33]. Metacognitive skills develop, for example, when students face the need to learn something new in stimulating situations such as learning challenges.
Depending on these challenges, the student must choose the right strategy for achieving their goal. The metacognitive aspects of learning are related to the individual's awareness of what the task requires and of the repertoire of strategies that can be used to complete it successfully [9,12,15]. This implies that there are numerous possible strategies and practices for developing metacognitive skills. Moreover, for digital materials to have an effect on metacognitive regulation, more complex feedback is likely needed. To help students apply their metacognitive knowledge during tasks, more personalized feedback and activities for practicing metacognition (such as discussions) need to be designed.

These results are consistent with the findings of Kurtz and Borkowski [29], who reported a noticeable improvement in performance but not in the metacognitive processes of knowledge transfer. The same applies to the study by Ke [32], which found no significant effect on the regulation of learning when using digital activities, although achievement did improve. This suggests that technology may provide a basis for supporting students, but digital materials do not yet appear able to support their regulation, a task that still falls to the tutors or teachers who work with the students during their learning process. In the same vein, the study by Sáiz and Román [33], an intervention on the acquisition of metacognitive skills, highlighted the importance of the student's cognitive development in coping with the different processes that metacognition encompasses and obtained significant results regarding the students' self-management of the tasks.

Learning metacognitive strategies calls for systematic modelling involving constant supervision of how the task is performed and positive or ongoing feedback [17,25]. A further option for working on metacognitive strategies is to include them in the teaching material so that students understand when, why, and how to apply them [9,13,34]. This will help reinforce metacognitive knowledge and regulation, both of which can improve with instruction and strategy training that facilitates attention, the use of the students' capacities, and greater awareness of comprehension issues [66]. This may enable students to learn more than memory-based knowledge by showing them how to use strategies, coordinate them, and extrapolate them to similar tasks [35,67]. As noted, metacognition interacts with numerous student characteristics, such as skills, personality, and learning style. At the same time, metacognition converges with other attributes linked to the skills required for success at school [67]. Nevertheless, it is not easy to develop the thought processes and self-assessments that this learning process requires to improve cognitive and metacognitive skills [17].

Metacognitive regulation and knowledge are complementary, so any metacognitive study must specify the type of process or knowledge it addresses. The study of metacognition concerns knowledge of diverse mental operations and of how, when, and why we should use them [52]. An analysis of the relationship between metacognition and experience reveals that metacognitive experiences are interrelated and develop as experience is gained. In other words, experiences linked to metacognitive errors affect the student's metacognitive system. In turn, metacognitive skills may be fostered by a student's experiences [5]. As an individual gains experience in a field, and as strategies become more available and are produced more readily, the use of the associated strategies increases [2]. Incorporating strategies for developing metacognition into teaching may be an alternative way to improve the learning process and to support children with special educational needs. However, this debate should focus on finding the right methods and activities for implementing such strategies and improving students' metacognitive processes.

The globalization of modern society and advances in new technologies are prompting constant changes that force education to deal with new challenges. Academic instruction, therefore, has to adapt to these changing times by developing the competencies that hone the skills and attitudes that permit lifelong learning [26,27]. Children are no longer the only ones who have to learn: adults also need skills to recognize, assess, and reconstruct existing knowledge to tackle everyday challenges in the workplace. Herein lies the importance of metacognition in education for fostering a society that can evolve, which reveals the need to adopt strategies for learning, thinking, and managing information, and to give students the instruments they need to be self-reliant in their personal development [19,21,57].

By including feedback on the students' progress and performance in the tasks, digital materials could help students personalize their learning and foster the assessment and support processes that students require to develop their metacognitive skills [24,41,42]. This would have a more effective impact on students' performance in diverse knowledge areas by making both student and teacher aware of the student's learning needs. Progress in this area requires more detailed study of the instructional processes through which such digital materials use feedback to support knowledge acquisition and the development of metacognitive regulation [17,24,39]. It has also been observed that, as a teaching support, the classroom use of digital materials and games is more effective when students are involved in peer learning or teamwork in which they take part in group tutorials [7,13,32,39]. Digital materials should therefore also be designed to facilitate these ways of working in the classroom.

4.1. Study Limitations

Metacognition refers to the higher-order mental processes involved in learning [8,14]. Assessing or measuring metacognitive skills (such as knowledge or regulation) requires learners to be aware of and understand what they are doing (and how) so that they can report it to the evaluator [8]. Studies of metacognition involve numerous related terms, such as metacognitive beliefs, metacognitive knowledge, metamemory, metacognitive experiences, metacognitive skills, learning strategies, self-regulation, self-control, and self-assessment [14,53]. The literature acknowledges a clear limitation in the metrics used to assess metacognitive regulation and knowledge [25]. As noted by Ke [32] and Schmitt and Sha [23], a quantitative study of metacognition does not provide results as representative as those obtained qualitatively, because it relies on reports in which children assess themselves. In a qualitative study, observation during these processes could reveal whether students are using metacognitive strategies to regulate their learning even when they cannot express those strategies in words or in a self-report. Conversely, students may be familiar with their metacognitive strategies or have metacognitive knowledge without putting that knowledge into practice appropriately [23]. This aspect could be addressed through classroom practice or activities that help students acknowledge what they know and how to transfer that knowledge to different tasks. The literature agrees, however, that training metacognitive skills benefits the learning process [19,33,43]. It would nonetheless be advisable to structure these practices or training processes so that they also support knowledge transfer [23,29,33].
Furthermore, there is a need to work on self-knowledge, how one learns, what tools can be used, and how to set learning targets to help students acknowledge and regulate their knowledge [2,5,14,15].

4.2. Importance of Activities Designed for Learning and Training Metacognitive Skills

These methods for analyzing metacognitive processes could be improved with the assistance of technology, through computer activities, as posited by Veenman and Elshout [68], or through online courses, as suggested by Pellas [36]. This requires incorporating parameters suitable for gathering data on the variables involved in metacognitive processes through smart platforms [21] or following pre-established classification models, such as the one by Zhang, Franklin, and Dasgupta [69]. Activities could then be regulated in line with each student's progress, giving the teacher a guide on how to support each student's metacognitive regulation and providing more personalized feedback aimed at supporting metacognitive regulation, not only metacognitive knowledge. Longitudinal studies on this question would also help establish these assessment methods. Now that the importance of metacognitive regulation and knowledge for student development and academic progress has been established, as reported by Perry et al. [10], the next step would ideally be to develop instruments for assessing metacognition in action and the long-term impacts that metacognitive development might have.

Further work is still needed on platform feedback so that it is not merely summative or informative but more personal and detailed, helping each pupil reflect on his or her progress and allowing digital material to facilitate metacognitive regulation [47]. Explaining the efficacy of tasks and providing more detailed feedback on it could also help students improve (as reported by Cameron and Dwyer [38]) and increase their activity [40]. Erhel and Jamet [48] found that the use of instructions in educational games or activities, along with continuous feedback on the student's progress, leads to more meaningful learning. Instruction is still needed when choosing games and activities to teach a specific strategy or to take a step forward in developing the relevant skills, which involves starting with simple activities [13]. This makes the thought process related to the purpose of a particular game or activity more explicit.

A further way to enhance these studies is to explore different implementation and collaboration options with teachers for the use of such games in class, and to continue investigating ways to improve these educational activities. It should not be forgotten that metacognition is a multidimensional construct [15]. The more variables related to metacognitive processes that are gathered, the closer we come to a suitable model for metacognitive instruction and measurement.

5. Conclusions

This research analyzed the use of the Smile and Learn platform in Grade 3 (8 years old) at a state school, as well as the relationship between the platform's feedback and students' metacognitive knowledge and regulation. First, the platform's usage was satisfactory for the activities in the topic-related Logic and Spatial worlds, and teachers appear to prefer digital activities in these areas. More research is needed to improve teacher training and the implementation of activities from other areas. For activity design, further analytics that enhance usability need to be developed.

Secondly, this study examined the relationships between feedback and metacognitive variables (knowledge and regulation). Some types of feedback from the activities, such as time spent or correct results, were related to metacognitive knowledge. It is important to continue analyzing more specific relationships in order to improve the digital materials implemented in class. This focus may improve the digital design of such activities.

Thus, digital materials should be designed with more personalized feedback, and specific tools for assessing metacognition via artificial intelligence should be developed to analyze their effects. To this end, the variables that characterize metacognitive processes could be defined as metrics, collected through digital activities and analyzed with artificial intelligence. This could support teachers in their evaluative work and aid student progress. Moreover, this data collection may help researchers find further relationships among the variables involved in metacognitive skills.
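As a minimal sketch of what "metrics collected through digital activities" could look like, the Python below aggregates the two feedback signals discussed in this study (right or wrong answers and time spent) into per-student, per-world metrics. The event log, its field names, and the world labels are hypothetical illustrations, not the Smile and Learn data model; such metrics are only candidate inputs for an AI model and would still need to be validated against an instrument such as the Jr. MAI before being interpreted as measures of metacognition.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class ActivityEvent:
    # Hypothetical log record for one completed activity (not a real platform schema).
    student_id: str
    world: str       # illustrative world label, e.g. "Logic" or "Spatial"
    correct: bool    # right/wrong feedback shown to the student
    seconds: float   # time spent on the activity


def feedback_metrics(events):
    """Group events by (student, world) and compute simple feedback-based metrics."""
    groups = defaultdict(list)
    for event in events:
        groups[(event.student_id, event.world)].append(event)

    metrics = {}
    for key, group in groups.items():
        metrics[key] = {
            "attempts": len(group),
            # Proportion of activities answered correctly.
            "accuracy": sum(e.correct for e in group) / len(group),
            # Mean time per activity, in seconds.
            "mean_seconds": mean(e.seconds for e in group),
        }
    return metrics


# Usage with made-up events.
example = feedback_metrics([
    ActivityEvent("s1", "Logic", True, 30.0),
    ActivityEvent("s1", "Logic", False, 50.0),
    ActivityEvent("s2", "Spatial", True, 20.0),
])
```

Here `example[("s1", "Logic")]` holds 2 attempts, an accuracy of 0.5, and a mean time of 40.0 seconds. Keeping the metrics per world mirrors the paper's distinction between Logic and Spatial activities.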

However, understanding the role that age plays is key to assessing these metacognitive processes. These complex processes begin to emerge around the age of 8, which limits how well they can be measured through very young students' self-assessments. As a final remark, metacognitive skills are relevant for student learning, and teacher support is needed for successful training. In this sense, it will be crucial for the field to keep studies on metacognition linked with new technologies and future advances.

Ethical Statements

As stated in the cover letter, this study was carried out in accordance with Spanish Organic Law 3/2018 of 5 December and Article 13 of Regulation (EU) 2016/679, the European General Data Protection Regulation (GDPR). For more information, see the Smile and Learn privacy policy: https://smileandlearn.com/privacy-policy/?lang=en. This project was reviewed and approved by the Research Ethics Committee of the University Camilo José Cela (CEI-UCJC).

Supplementary Materials

Didactic guides describing the content, methodology, and tutorials used in this study are available online at: https://smileandlearn.com/schools/?lang=en. The Smile and Learn platform can be downloaded from several app stores: Android: https://play.google.com/store/apps/details?id=net.smileandlearn.library&hl=en; iOS: https://apps.apple.com/es/app/smile-and-learn/id1062523369?l=en; Windows: https://www.microsoft.com/en-us/p/smile-and-learn-educational-games-for-kids/9mvxkgmmbknt?activetab=pivot:overviewtab. Part of this content is free to use. Access to the whole platform is available by subscription at: https://payments.smileandlearn.com/payments?l0kl3=en. Teachers and educational centers can request more information at info@smileandlearn.com. Data can be accessed by contacting Smile and Learn or by request to the corresponding author.

Author Contributions

Methodology, N.L.N.-M. and M.Á.P.-N.; software, A.B.; investigation, N.L.N.-M. and A.B.; data curation, N.L.N.-M.; formal analysis, N.L.N.-M. and M.Á.P.-N.; resources, N.L.N.-M.; writing—original draft preparation, N.L.N.-M.; writing—review and editing, N.L.N.-M., A.B. and M.Á.P.-N.; visualization, N.L.N.-M. and A.B.; supervision, M.Á.P.-N.; project administration, M.Á.P.-N.; funding acquisition, N.L.N.-M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Community of Madrid ‘Industrial PhD grants’, under project number IND2017/SOC-7874.

Acknowledgments

Special thanks to Alejandro Cardeña Martínez for his support in the field of assessment and UX research, and for his feedback and contributions.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Boekaerts, M. Metacognitive experiences and motivational state as aspects of self-awareness: Review and discussion. Eur. J. Psychol. Educ. 1999, 14, 571–584.

- Pintrich, P.R. The role of metacognitive knowledge in learning, teaching, and assessing. Theory Into Pract. 2002, 41, 219–225.

- Fox, E.; Riconscente, M. Metacognition and self-regulation in James, Piaget, and Vygotsky. Educ. Psychol. Rev. 2008, 20, 373–389.

- Flavell, J.H. First discussant’s comments: What is memory development the development of? Hum. Dev. 1971, 14, 272–278.

- Flavell, J.H. Metacognition and cognitive monitoring: A new area of cognitive developmental inquiry. Am. Psychol. 1979, 34, 906–911.

- Garner, R.; Alexander, P.A. Metacognition: Answered and unanswered questions. Educ. Psychol. 1989, 24, 143–158.

- Fisher, R. Thinking about thinking: Developing metacognition in children. Early Child Dev. Care 1998, 141, 1–15.

- Baker, L.; Cerro, L. Assessing metacognition in children and adults. In Issues in the Measurement of Metacognition; Schraw, G., Impara, J., Eds.; Buros Institute of Mental Measurements: Lincoln, NE, USA, 2000; pp. 99–145. Available online: https://digitalcommons.unl.edu/cgi/viewcontent.cgi?article=1003&context=burosmetacognition (accessed on 9 December 2019).

- Magno, C. Investigating the effect of school ability on self-efficacy, learning approaches, and metacognition. Online Submiss. 2009, 18, 233–244. Available online: https://files.eric.ed.gov/fulltext/ED509128.pdf (accessed on 9 December 2019).

- Perry, J.; Lundie, D.; Golder, G. Metacognition in schools: What does the literature suggest about the effectiveness of teaching metacognition in schools? Educ. Rev. 2019, 71, 483–500.

- Brown, A.L. Metacognitive development and reading. In Theoretical Issues in Reading Comprehension; Spiro, R.J., Bruce, B.C., Brewer, W.F., Eds.; Erlbaum: Hillsdale, NJ, USA, 1980; pp. 453–481.

- Vermunt, J.D. Metacognitive, cognitive and affective aspects of learning styles and strategies: A phenomenographic analysis. High. Educ. 1996, 31, 25–50.

- Chatzipanteli, A.; Grammatikopoulos, V.; Gregoriadis, A. Development and evaluation of metacognition in early childhood education. Early Child Dev. Care 2014, 184, 1223–1232.

- Veenman, M.V.; Van Hout-Wolters, B.H.; Afflerbach, P. Metacognition and learning: Conceptual and methodological considerations. Metacogn. Learn. 2006, 1, 3–14.

- Efklides, A. The role of metacognitive experiences in the learning process. Psicothema 2009, 21, 76–82. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.1031.6590&rep=rep1&type=pdf (accessed on 26 April 2018).

- Veenman, M.V.; Bavelaar, L.; De Wolf, L.; Van Haaren, M.G. The on-line assessment of metacognitive skills in a computerized learning environment. Learn. Individ. Differ. 2014, 29, 123–130.

- Casado Goti, M. Metacognición y motivación en el aula. Rev. Psicodidáct. 1998, 6, 99–107. Available online: https://www.redalyc.org/pdf/175/17514484009.pdf (accessed on 26 April 2018).

- Mayer, R.E. Cognitive, metacognitive, and motivational aspects of problem solving. Instr. Sci. 1998, 26, 49–63.

- Azevedo, R. Theoretical, conceptual, methodological, and instructional issues in research on metacognition and self-regulated learning: A discussion. Metacogn. Learn. 2009, 4, 87–95.

- Lai, E.R. Metacognition: A literature review. Always Learn. Pearson Res. Rep. 2011, 24, 1–40. Available online: http://images.pearsonassessments.com/images/tmrs/metacognition_literature_review_final.pdf (accessed on 25 June 2018).

- Winne, P.H.; Baker, R.S. The potentials of educational data mining for researching metacognition, motivation and self-regulated learning. JEDM 2013, 5, 1–8.

- Karaali, G. Metacognition in the classroom: Motivation and self-awareness of mathematics learners. PRIMUS 2015, 25, 439–452.

- Schmitt, M.C.; Sha, S. The developmental nature of metacognition and the relationship between knowledge and control over time. J. Res. Read. 2009, 32, 254–271.

- Huffaker, D.A.; Calvert, S.L. The new science of learning: Active learning, metacognition, and transfer of knowledge in e-learning applications. J. Educ. Comput. Res. 2003, 29, 325–334.

- Georghiades, P. From the general to the situated: Three decades of metacognition. Int. J. Sci. Educ. 2004, 26, 365–383.

- Magno, C. The role of metacognitive skills in developing critical thinking. Metacogn. Learn. 2010, 5, 137–156.

- Allen, J.P.; Van der Velden, R.K.W. Skills for the 21st Century: Implications for Education; ROA Research Memoranda; Researchcentrum voor Onderwijs en Arbeidsmarkt, Faculteit der Economische Wetenschappen: Maastricht, The Netherlands, 2012; Volume 11, Available online: http://staff.uks.ac.id/%23LAIN%20LAIN/KKNI%20DAN%20AIPT/Undangan%20Muswil%2023-24%20Jan%202016/references/kkni%20dan%20skkni/referensi%20int%20dan%20kominfo/job%20descriptions/mobile%20computing/file25776.pdf (accessed on 9 December 2019).

- De Freitas, S.I. Using games and simulations for supporting learning. Learn. Media Technol. 2006, 31, 343–358.

- Kurtz, B.E.; Borkowski, J.G. Children’s metacognition: Exploring relations among knowledge, process, and motivational variables. J. Exp. Child Psychol. 1984, 37, 335–354.

- Paris, S.G.; Oka, E.R. Children’s reading strategies, metacognition, and motivation. Dev. Rev. 1986, 6, 25–56.

- Borkowski, J.G. Metacognitive theory: A framework for teaching literacy, writing, and math skills. J. Learn. Disabil. 1992, 25, 253–257.

- Ke, F. Computer games application within alternative classroom goal structures: Cognitive, metacognitive, and affective evaluation. Educ. Technol. Res. Dev. 2008, 56, 539–556.

- Sáiz, M.C.; Román, J.M. Entrenamiento metacognitivo y estrategias de resolución de problemas en niños de 5 a 7 años. Int. J. Psychol. Res. 2011, 4, 9–19. Available online: https://dialnet.unirioja.es/servlet/articulo?codigo=3904244 (accessed on 10 September 2019).

- Maturano, C.I.; Soliveres, M.A.; Macías, A. Estrategias cognitivas y metacognitivas en la comprensión de un texto de Ciencias. Enseñ. Cienc. Rev. Investig. Exp. Didáct. 2002, 20, 415–426. Available online: https://www.raco.cat/index.php/Ensenanza/article/download/21831/21665/0 (accessed on 10 September 2019).

- Schraw, G.; Crippen, K.J.; Hartley, K. Promoting self-regulation in science education: Metacognition as part of a broader perspective on learning. Res. Sci. Educ. 2006, 36, 111–139.

- Pellas, N. The influence of computer self-efficacy, metacognitive self-regulation and self-esteem on student engagement in online learning programs: Evidence from the virtual world of Second Life. Comput. Hum. Behav. 2014, 35, 157–170.

- Whitebread, D.; Coltman, P.; Pasternak, D.P.; Sangster, C.; Grau, V.; Bingham, S.; Almeqdad, Q.; Demetriou, D. The development of two observational tools for assessing metacognition and self-regulated learning in young children. Metacogn. Learn. 2009, 4, 63–85.

- Cameron, B.; Dwyer, F. The effect of online gaming, cognition and feedback type in facilitating delayed achievement of different learning objectives. J. Interact. Learn. Res. 2005, 16, 243–258. Available online: https://www.learntechlib.org/p/5896/ (accessed on 10 September 2019).

- Wouters, P.; Van Nimwegen, C.; Van Oostendorp, H.; Van Der Spek, E.D. A meta-analysis of the cognitive and motivational effects of serious games. J. Educ. Psychol. 2013, 105, 249–265.

- Lyons, E.J. Cultivating engagement and enjoyment in exergames using feedback, challenge, and rewards. Games Health J. 2015, 4, 12–18.

- Bellotti, F.; Ott, M.; Arnab, S.; Berta, R.; De Freitas, S.; Kiili, K.; De Gloria, A. Designing serious games for education: From pedagogical principles to game mechanisms. In Proceedings of the 5th European Conference on Games Based Learning, Athens, Greece, 20–21 October 2011; pp. 26–34. Available online: https://hal.archives-ouvertes.fr/docs/00/98/58/00/PDF/BELLOTTI_ET_AL.pdf (accessed on 18 November 2019).

- Arnab, S.; Clarke, S. Towards a trans-disciplinary methodology for a game-based intervention development process. Br. J. Educ. Technol. 2017, 48, 279–312.

- Hessels-Schlatter, C. Development of a theoretical framework and practical application of games in fostering cognitive and metacognitive skills. J. Cogn. Educ. Psychol. 2010, 9, 116–138.

- Blumberg, F.C.; Fisch, S.M. Introduction: Digital games as a context for cognitive development, learning, and developmental research. New Dir. Child Adolesc. Dev. 2013, 139, 1–9.

- Lara Nieto-Márquez, N.; Baldominos, A.; Cardeña Martínez, A.; Pérez Nieto, M.Á. An exploratory analysis on the implementation and use of an intelligent platform for learning in primary education. Appl. Sci. 2020, 10, 983.

- Baldominos, A.; Quintana, D. Data-driven interaction review of an ed-tech application. Sensors 2019, 19, 1910.

- Butler, D.; Winne, P. Feedback and self-regulated learning: A theoretical synthesis. Rev. Educ. Res. 1995, 65, 245–281.

- Erhel, S.; Jamet, E. Digital game-based learning: Impact of instructions and feedback on motivation and learning effectiveness. Comput. Educ. 2013, 67, 156–167.

- Lieberman, D.A.; Bates, C.H.; So, J. Young children’s learning with digital media. Comput. Sch. 2009, 26, 271–283.

- Sperling, R.A.; Howard, B.C.; Miller, L.A.; Murphy, C. Measures of children’s knowledge and regulation of cognition. Contemp. Educ. Psychol. 2002, 27, 51–79.

- Baker, L.; Brown, A. Metacognitive skills and reading. In Handbook of Reading Research; Pearson, P.D., Ed.; Lawrence Erlbaum Associates: Mahwah, NJ, USA, 1984; Volume 1, pp. 1–91. Available online: https://files.eric.ed.gov/fulltext/ED195932.pdf (accessed on 6 April 2020).

- Burón, J. Enseñar a Aprender: Introducción a la Metacognición; Mensajero: Bilbao, Spain, 1993.

- Muñiz, J.; Elosua, P.; Hambleton, R.K. Directrices para la traducción y adaptación de los tests: Segunda edición. Psicothema 2013, 25, 151–157.

- Schraw, G.; Dennison, R.S. Assessing metacognitive awareness. Contemp. Educ. Psychol. 1994, 19, 460–475. Available online: http://wiki.biologyscholars.org/@api/deki/files/99/=Schraw1994.pdf (accessed on 26 April 2018).

- Sperling, R.A.; Howard, B.C.; Staley, R.; DuBois, N. Metacognition and self-regulated learning constructs. Educ. Res. Eval. 2004, 10, 117–139.

- Karlen, Y. Differences in students’ metacognitive strategy knowledge, motivation, and strategy use: A typology of self-regulated learners. J. Educ. Res. 2016, 109, 253–265.

- Zyromski, B.; Mariani, M.; Kim, B.; Lee, S.; Carey, J. The impact of student success skills on students’ metacognitive functioning in a naturalistic school setting. Prof. Couns. 2017, 7, 33–44. Available online: https://eric.ed.gov/?id=EJ1159653 (accessed on 21 May 2018).

- Ning, H.K. The bifactor model of the junior Metacognitive Awareness Inventory (Jr. MAI). Curr. Psychol. 2019, 38, 367–375.

- Fontanelli, O.; Miramontes, P.; Mansilla, R. Distribuciones de probabilidad en las ciencias de la complejidad: Una perspectiva contemporánea. arXiv Prepr. 2019, arXiv:2002.09263. Available online: https://arxiv.org/ftp/arxiv/papers/2002/2002.09263.pdf (accessed on 7 April 2020).

- Dagnino, J. La distribución normal. Rev. Chil. Anest. 2014, 43, 116–121. Available online: http://revistachilenadeanestesia.cl/PII/revchilanestv43n02.08.pdf (accessed on 7 April 2020).

- Baek, Y.K. What hinders teachers in using computer and video games in the classroom? Exploring factors inhibiting the uptake of computer and video games. Cyberpsychol. Behav. 2008, 11, 665–671.

- Uluyol, Ç.; Şahin, S. Elementary school teachers’ ICT use in the classroom and their motivators for using ICT. Br. J. Educ. Technol. 2016, 47, 65–75.

- Kreijns, K.; Van Acker, F.; Vermeulen, M.; Van Buuren, H. What stimulates teachers to integrate ICT in their pedagogical practices? The use of digital learning materials in education. Comput. Hum. Behav. 2013, 29, 217–225.

- Lucangeli, D.; Cornoldi, C. Mathematics and metacognition: What is the nature of the relationship? Math. Cogn. 1997, 3, 121–139.

- Bavelier, D.; Green, C.S.; Pouget, A.; Schrater, P. Brain plasticity through the life span: Learning to learn and action video games. Annu. Rev. Neurosci. 2012, 35, 391–416.

- Corno, L. The metacognitive control components of self-regulated learning. Contemp. Educ. Psychol. 1986, 11, 333–346.

- Sternberg, R. Metacognition, abilities, and developing expertise: What makes an expert student? Instr. Sci. 1998, 26, 127–140.

- Veenman, M.V.; Elshout, J.J. Differential effects of instructional support on learning in simulation environments. Instr. Sci. 1994, 22, 363–383.

- Zhang, Z.; Franklin, S.; Dasgupta, D. Metacognition in software agents using classifier systems. In Proceedings of the AAAI/IAAI, Madison, WI, USA, 27–29 July 1998; pp. 83–88. Available online: http://www.aaai.org/Papers/AAAI/1998/AAAI98-012.pdf (accessed on 21 May 2018).

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).