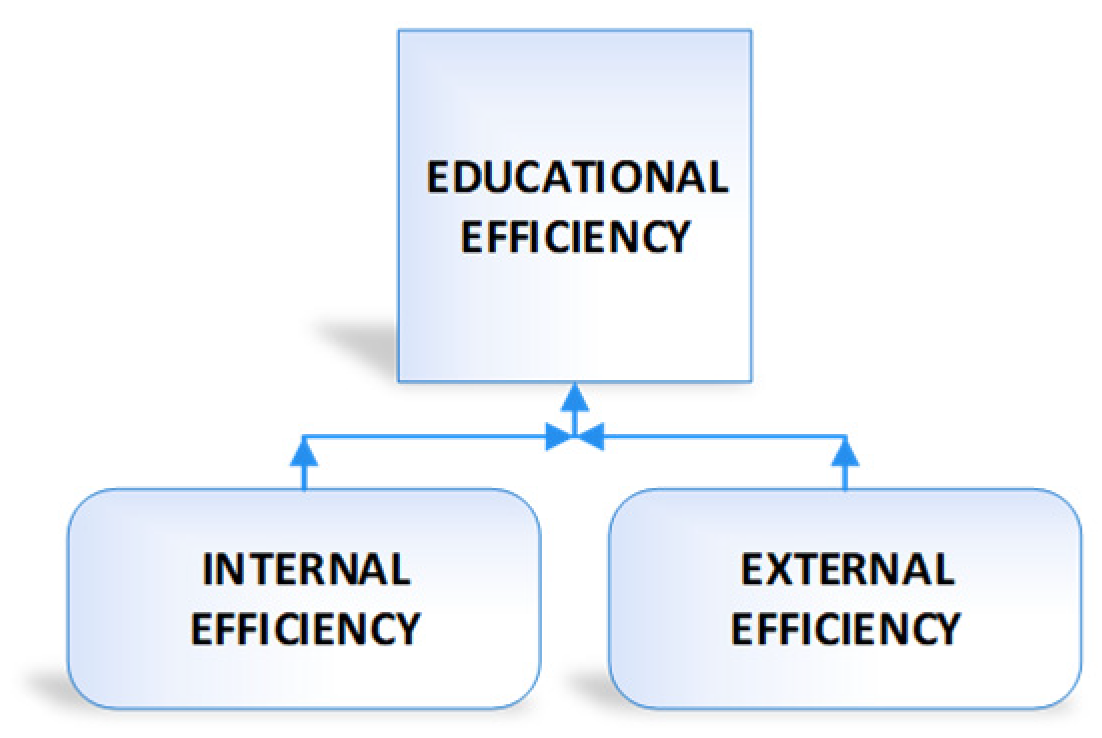

Conceptual Model of Measuring MHEI Efficiency

Abstract

1. Introduction

2. Literature Overview

- Are all students ready for the labour market upon completing their education?

- To what extent is the system available to students with different previous education?

- Do the differences in previous education affect students’ success?

- What is the percentage of scientific productivity?

- How does the institution utilise the resources it has?

- What is the average duration of studies per student?

- Are contemporary methods used in teaching and what is their efficiency?

3. Conceptual Model of Measuring MHEI Efficiency

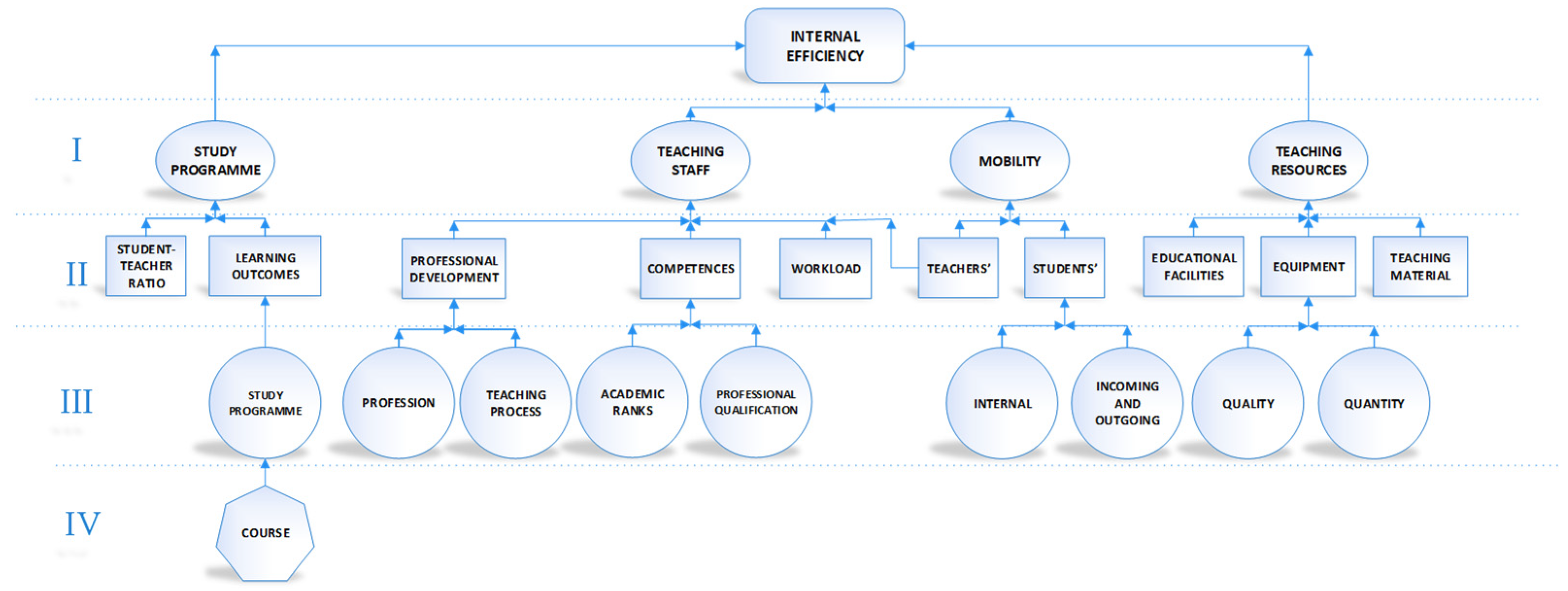

3.1. Internal Efficiency

3.1.1. Study Programme

3.1.2. Teaching Staff

3.1.3. Mobility

3.1.4. Teaching Resources

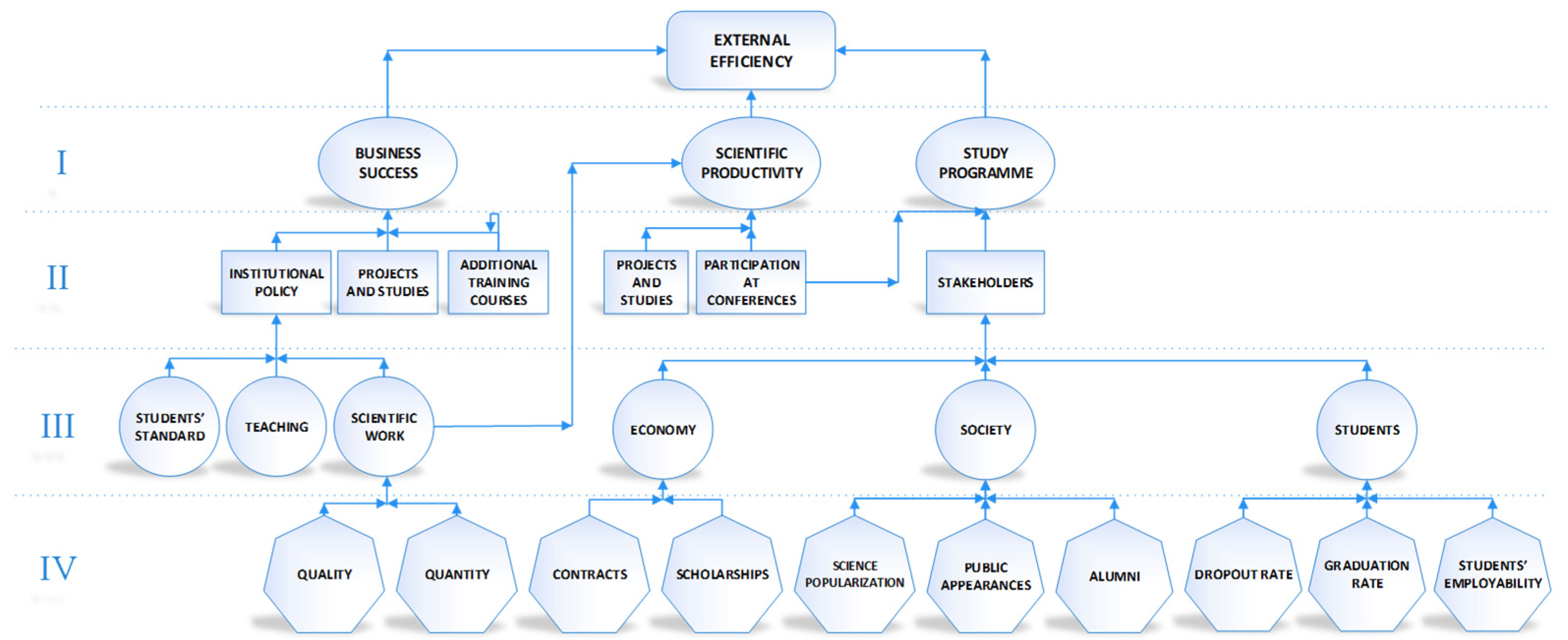

3.2. External Efficiency

3.2.1. Scientific Productivity

3.2.2. Business Success

3.2.3. Study Programme

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- IMHE Info, Programme on Institutional Management in Higher Education. 2006. Available online: https://files.eric.ed.gov/fulltext/ED504117.pdf (accessed on 27 September 2020).

- Cornali, F. Effectiveness and Efficiency of Educational Measures: Evaluation Practices, Indicators and Rhetoric. Sociol. Mind 2012, 2, 255. [Google Scholar] [CrossRef] [Green Version]

- Lockheed, M.E.; Hanushek, E.A. Concepts of Educational Efficiency and Effectiveness; World Bank: Washington, DC, USA, 1994. [Google Scholar]

- Kenny, J. Efficiency and Effectiveness in Higher Education: Who is Accountable for what? Aust. Univ. Rev. 2008, 50, 11. [Google Scholar]

- Cowan, J. Effectiveness and Efficiency in Higher Education. High. Educ. 1985, 14, 235–239. [Google Scholar] [CrossRef]

- Guangli, Z. The Effectiveness of the Higher Education Quality Assessment System: Problems and Countermeasures in China. Chin. Educ. Soc. 2016, 49, 39–48. [Google Scholar] [CrossRef]

- Remmers, H.H. Rating Methods in Research of Teaching. In Handbook of Research on Teaching; Gage, N.L., Ed.; Rand McNally: Chicago, IL, USA, 1971. [Google Scholar]

- Cameron, K.S. Domains of Organizational Effectiveness in Colleges and Universities. Acad. Manag. J. 1981, 24, 25–47. [Google Scholar]

- Leguey Galán, S.; Leguey Galán, S.; Matosas López, L. ¿De qué depende la satisfacción del alumnado con la actividad docente? Espacios 2018, 39, 13–29. [Google Scholar]

- Nazarko, J.; Šaparauskas, J. Application of DEA Method in Efficiency Evaluation of Public Higher Education Institutions. Technol. Econ. Dev. Econ. 2014, 20, 25–44. [Google Scholar] [CrossRef] [Green Version]

- Seiler, M.F.; Ewalt, D.J.A.G.; Jones, J.T.; Landy, B.; Olds, S.; Young, P. Indicators of Efficiency and Effectiveness in Elementary and Secondary Education Spending. In Legislative Research Commission; Office of Education Accountability: Frankfort, KY, USA, 2006. [Google Scholar]

- Massy, W. Metrics for Efficiency and Effectiveness in Higher Education: Completing the Completion Agenda. In Proceedings of the State Higher Education Executive Officers’ (SHEEO) Annual Meeting, Boulder, CO, USA, 4–6 November 2011. [Google Scholar]

- Robinson-Bryant, F. Defining a Stakeholder-Relative Model to Measure Academic Department Efficiency at Achieving Quality in Higher Education. Ph.D. Thesis, University of Central Florida, Orlando, FL, USA, 2013. [Google Scholar]

- Gates, S.M.; Stone, A. Understanding Productivity in Higher Education; RAND Corporation: Santa Monica, CA, USA, 1997. [Google Scholar]

- Palekčić, M. Uspješnost i/ili učinkovitost obrazovanja nastavnika. Odgoj. Znan. 2008, 10, 403–423. [Google Scholar]

- Andersson, C.; Antelius, J.; Månsson, J.; Sund, K. Technical Efficiency and Productivity for Higher Education Institutions in Sweden. Scand. J. Educ. Res. 2017, 61, 205–223. [Google Scholar] [CrossRef]

- Duguleana, C.; Duguleana, L. Efficiency in Higher Education. Bull. Transilv. Univ. Bras. Econ. Sci. 2011, 4, 115. [Google Scholar]

- Kraipetch, C.; Kanjanawasee, S.; Prachyapruit, A. Organizational Effectiveness Evaluation for Higher Education Institutions, Ministry of Tourism and Sports. Res. High. Educ. J. 2013, 19, 1. [Google Scholar]

- Kaur, H.; Bhalla, G.S. Determinants of Effectiveness in Public Higher Education—Students’ Viewpoint. Int. J. Educ. Manag. 2018, 32, 1135–1155. [Google Scholar] [CrossRef]

- Gil Edo, M.T.; Roca Puig, V.; Camisón Zornoza, C. Hacia modelos de calidad de servicio orientados al cliente en las universidades públicas: El caso de la Universitat Jaume I. Investigaciones Europeas de Dirección y Economía de la Empresa 1999, 5, 69–92. [Google Scholar]

- Srairi, S.A. The Efficiency of Tunisian Universities: An Application of a Two-Stage DEA Approach. J. Knowl. Glob. 2014, 7, 31–58. [Google Scholar]

- Ramírez-Correa, P.; Peña-Vinces, J.C.; Alfaro-Pérez, J. Evaluating the Efficiency of the Higher Education System in Emerging Economies: Empirical Evidences from Chilean Universities. Afr. J. Bus. Manag. 2012, 6, 1441. [Google Scholar] [CrossRef]

- Agasisti, T.; Pohl, C. Comparing German and Italian Public Universities: Convergence or Divergence in the Higher Education Landscape? Manag. Decis. Econ. 2011, 33, 71–85. [Google Scholar] [CrossRef]

- Daraio, C.; Bonaccorsi, A.; Simar, L. Efficiency and Economies of Scale and Scope in European Universities: A Directional Distance Approach. J. Informetr. 2015, 9, 430–448. [Google Scholar] [CrossRef] [Green Version]

- Parteka, A.; Wolszczak-Derlacz, J. The Impact of Trade Integration with the European Union on Productivity in a Post Transition Economy: The Case of Polish Manufacturing Sectors. Emerg. Mark. Financ. Trade 2013, 49, 84–104. [Google Scholar] [CrossRef]

- Bursalioglu, S.A.; Selim, S. Factors Determining the Efficiency of Higher Education in the European Union and Turkey. BILIG 2015, 74, 45–69. [Google Scholar]

- Johnes, J.; Li, Y.U. Measuring the Research Performance of Chinese Higher Education Institutions Using Data Envelopment Analysis. China Econ. Rev. 2008, 19, 679–696. [Google Scholar] [CrossRef] [Green Version]

- Scheerens, J.; Luyten, H.; van Ravens, J. Measuring Educational Quality by Means of Indicators. In Perspectives on Educational Quality; Springer: Dordrecht, The Netherlands, 2011; pp. 35–50. [Google Scholar]

- Cunha, M.; Rocha, V. On the Efficiency of Public Higher Education Institutions in Portugal: An Exploratory Study. Univ. Porto FEP Work. Pap. 2012, 468, 1–30. [Google Scholar]

- Ramzi, S.; Ayadi, M. Assessment of Universities Efficiency Using Data Envelopment Analysis: Weights Restrictions and Super-Efficiency Measure. J. Appl. Manag. Investig. 2016, 5, 40–58. [Google Scholar]

- Agasisti, T.; Pérez-Esparrells, C. Comparing Efficiency in a Cross-country Perspective: The Case of Italian and Spanish State Universities. High. Educ. 2010, 59, 85–103. [Google Scholar] [CrossRef] [Green Version]

- Matosas-López, L.; Leguey-Galán, S.; Doncel-Pedrera, L.M. Converting Likert Scales into Behavioral Anchored Rating Scales (BARS) for the Evaluation of Teaching Effectiveness for Formative Purposes. J. Univ. Teach. Learn. Pract. 2019, 16, 1–24. [Google Scholar]

- Panaretos, J.; Malesios, C. Assessing a Researcher’s Scientific Productivity and Scholarly Impact. In A Guide to the Scientific Career; Wiley: Hoboken, NJ, USA, 2019; pp. 69–79. [Google Scholar] [CrossRef]

- Paura, L.; Arhipova, I. Cause Analysis of Students’ Dropout Rate in Higher Education Study Program. Procedia Soc. Behav. Sci. 2014, 109, 1282–1286. [Google Scholar] [CrossRef] [Green Version]

- Korent, D.; Detelj, K.; Vuković, K. Evaluating the Efficiency of Croatian Counties in Entrepreneurship Using Data Envelopment Analysis. In Proceedings of the 5th South-East European (SEE) Meeting & Scientific Conference of Management Departments, Entrepreneurial Society: Current Trends and Future Prospects in Entrepreneurship, Organization and Management, Varaždin, Croatia, 25–26 September 2015. [Google Scholar]

- Bogović, T. Assessment of the Efficiency of Croatian Cities Using Data Envelopment Analysis (DEA). Ph.D. Thesis, University of Zagreb, Varaždin, Croatia, 2014. [Google Scholar]

- Brint, S.; Clotfelter, C.T. US Higher Education Effectiveness. RSF Russell Sage Found. J. Soc. Sci. 2016, 2, 2–37. [Google Scholar]

- Vlada Republike Hrvatske. Hrvatski Kvalifikacijski Okvir: Uvod u Kvalifikacije; Ministarstvo Znanosti, Obrazovanja i Športa: Zagreb, Croatia, 2009.

- Lončar-Vicković, S.; Dolaček-Alduk, Z. Learning Outcomes—Ishodi Učenja-Priručnik za Sveučilišne Nastavnike; Josip Juraj Strossmayer University of Osijek: Osijek, Croatia, 2009. [Google Scholar]

- What is the Student-Teacher Ratio and How Big are Classes? Indicator D2. Education at a Glance, OECD. 2019. Available online: https://www.oecd-ilibrary.org/docserver/a1ef3bfe-en.pdf?expires=1602074454&id=id&accname=guest&checksum=DDD99CF2C4AB721730F8333436C7A59F (accessed on 25 September 2020).

- Povećanje Mobilnosti Hrvatske Ekonomske Zajednice: Ulazak Hrvatske u Program Erasmus, Institut Za Razvoj Obrazovanja. 2008. Available online: https://iro.hr/wp-content/uploads/2018/02/4.IRO_MOBIL_prirucnik_HR.pdf (accessed on 25 August 2020).

- Mobility for Better Learning. Mobility Strategy 2020 for the European Higher Education Area. 2012. Available online: https://www.cmepius.si/wp-content/uploads/2014/02/2012-EHEA-Mobility-Strategy.pdf (accessed on 25 September 2020).

- Sánchez-Barrioluengo, M.; Flisi, S. Student Mobility in Tertiary Education: Institutional Factors and Regional Attractiveness; No. JRC108895; Joint Research Centre (Seville Site): Seville, Spain, 2017. [Google Scholar]

- EuroDesk, Kako Mobilnost Mladih u Svrhu Učenja Može Povećati Njihovu Zapošljivost? European Youth Week. 2015. Available online: https://www.mobilnost.hr/cms_files/2017/01/1483369017_hr-learning-mobility.pdf (accessed on 27 September 2020).

- Government of the Republic of Croatia. Act on the Agency for Vocational Education and Training and Adult Education (Official Gazette, no. 24/10); Government of the Republic of Croatia: Zagreb, Croatia, 2010.

- Agencija za Znanost i Visoko Obrazovanje (AZVO). Produktivnost Znanstvenog Istraživanja. Available online: https://www.azvo.hr/hr/component/content/category/55-vrednovanja (accessed on 27 September 2020).

- González-Sala, F.; Osca-Lluch, J.; Haba-Osca, J. Information Resources: Differential Characteristics between Ibero-American and Dutch JCR Psychology Journals from 1998 to 2017. Resources 2019, 8, 111. [Google Scholar] [CrossRef] [Green Version]

- Eurostat. Tertiary Education Statistics. 2020. Available online: https://ec.europa.eu/eurostat/statistics-explained/pdfscache/63642.pdf (accessed on 27 September 2020).

- European Council, Council of the European Union, Europa 2020. Available online: https://www.consilium.europa.eu/en/policies/education-economic-growth/ (accessed on 21 August 2020).

- Živčić-Bećirević, I.; Smojver-Ažić, S.; Kukić, M.; Jasprica, S. Akademska, socijalna i emocionalna prilagodba na studij s obzirom na spol, godinu studija i promjenu mjesta boravka. Psihol. Teme 2007, 16, 121–140. [Google Scholar]

- Obadić, A. Nezaposlenost mladih i usklađenost obrazovnog sustava s potrebama tržišta rada. Ekonomska Misao i Praksa 2017, 1, 129–150. [Google Scholar]

- Eurostat. Employment Rates of Recent Graduates. 2017. Available online: http://ec.europa.eu/eurostat/statistics-explained/index.php/Employment_rates_of_recent_graduates (accessed on 21 August 2020).

- Zlatović, S. Čemu suradnja između visokog obrazovanja i gospodarstva? Polytech. Des. 2013, 1, 70–74. [Google Scholar]

- Roth, D.M.; Scherer, A.S. Science Popularization: Interdiscursivity among Science, Pedagogy, and Journalism. Bakhtiniana 2016, 11, 171–194. [Google Scholar]

- Sveučilište u Zadru. Available online: www.unizd.hr (accessed on 21 August 2020).

| Input Criteria | Output Criteria |
|---|---|
| Number of teaching staff [21,22,23,24,25,26,27] | Number of graduates [21,22,23,25,27,28,29,30,31] |
| Number of non-teaching staff [21,23,24,27] | Total amount of external grants and number of research contracts [21,23,31] |
| Teachers’ competences [28,32] | Number of PhD degrees awarded [29] |
| Non-operating expenditures [21,23,24] | Number of courses [29] |
| Number of students [21,25,27] | Number of publications [22,24,25,26,27] |
| Teaching staff per student [29] | Ratio of total number of students to the number of graduates [26,28] |
| Operating expenditures [22] | Students’ employability [26] |
| Government funding [23] | Number of research units and laboratories [30] |
| Scientific productivity [27,33] | |
| Dropout rate [28,34] | |
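Several criteria in the table are simple ratios of counts. As a minimal sketch, and not the authors' method, the following Python snippet computes three of them from hypothetical yearly figures. The `InstitutionYear` class, its field names, the sample numbers, and the definition of dropout rate as dropouts divided by enrolled students are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class InstitutionYear:
    """Hypothetical yearly figures for one institution (illustrative only)."""
    teaching_staff: int
    students: int
    graduates: int
    dropouts: int


def ratio_indicators(data: InstitutionYear) -> dict:
    """Compute three ratio-type criteria listed in the table."""
    if data.students <= 0 or data.graduates <= 0:
        raise ValueError("students and graduates must be positive")
    return {
        # Teaching staff per student (input criterion).
        "teaching_staff_per_student": data.teaching_staff / data.students,
        # Ratio of total number of students to number of graduates (output criterion).
        "students_per_graduate": data.students / data.graduates,
        # Assumed definition: dropouts as a share of enrolled students.
        "dropout_rate": data.dropouts / data.students,
    }


# Made-up sample values, not data from the article.
example = InstitutionYear(teaching_staff=40, students=800, graduates=160, dropouts=64)
indicators = ratio_indicators(example)
```

Other definitions are possible, for instance measuring dropout against an entry cohort rather than total enrolment, so the denominators here would need to be aligned with whatever definition a given study adopts.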

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gundić, A.; Županović, D.; Grbić, L.; Baric, M. Conceptual Model of Measuring MHEI Efficiency. Educ. Sci. 2020, 10, 385. https://doi.org/10.3390/educsci10120385
