1. Introduction

The COVID-19 pandemic, also known as the coronavirus pandemic, is caused by severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2), which was first identified in December 2019 in Wuhan, China. The outbreak was declared a Public Health Emergency of International Concern in January 2020, and a pandemic in March 2020. Countries around the world closed educational institutions in an attempt to contain the spread of the virus, and approximately 70% of the world’s student population from primary to tertiary education (over 1.5 billion learners) was affected by the temporary closure of schools, colleges, and universities. The United Nations Educational, Scientific and Cultural Organization (UNESCO) is supporting countries in their efforts to mitigate the immediate impact of school closures, especially for more vulnerable and disadvantaged communities, by facilitating the continuity of education for all through remote learning. This pandemic has exposed many inequities and inadequacies in the diverse education systems across the globe. Some education systems are still struggling to convert to a completely online platform due to issues of internet connectivity and a lack of devices and resources for online education. The most critical issue is the misalignment between resources and the needs of teaching and learning. During the pandemic, learners from marginalised groups are affected by digital poverty and lack basic access to learning resources and facilities. A lack of impetus or interest for self-directed learning could leave this group lagging behind their peers. The conversion of traditional classes to a fully online digital platform should ensure that no students are left behind in the process [1, 2, 3].

The emergence and spread of COVID-19 has disrupted education at a critical time. In the Northern Hemisphere, the disruptions impacted the second half of the academic year, including the final assessments. The long summer break in the school/post-school education setting provided an opportunity for teachers to prepare for the ongoing changes forced by the COVID-19 pandemic. In the Southern Hemisphere, the academic year had just begun when the pandemic was announced. This situation impacted learning, teaching, and assessment throughout the Southern Hemisphere, and educators did not have the advantage of time in the middle of the calendar year to reassess their systems. In the COVID era, the world’s universities shifted curriculum delivery to online platforms such as Moodle, Google Classroom, and Blackboard Collaborate to cater to the students’ needs whilst ensuring that academic progress was not hindered. Institutions that offer online classes face many challenges in determining how to assess the students’ knowledge, skill, and competency via an internet-based approach.

Medical education involves the application of educational theories, principles, and concepts, while delivering the knowledge of theory, practical, and clinical components to prepare future physicians and health professionals. The terms medical education and allied health professional education have been used interchangeably [4]. This review is concerned with the impacts of the COVID-19 pandemic on systems associated with the delivery of education and training of medical and allied health care professionals. Assessment is an integral aspect of any teaching and learning system. Learning outcomes drive assessment measures and are critical for the design and structure of a learning environment. The final assessment is a cumulative activity that gives an accurate reflection of whether the learner was able to attain the course learning outcomes. The implementation of high-quality assessment acts as a catalyst and motivates students to perform at a higher standard in the future, by providing an idea of their current strengths and weaknesses. To establish how effectively the learning has taken place, a valid, reliable, cost-effective, acceptable, and impactful assessment is indispensable. Some challenges encountered in asynchronous assessment include miscommunication/misunderstanding and limited feedback due to a lack of real-time/live interaction with the students. For laboratory and clinical examinations, it is difficult to assess the student’s skill and attitude through asynchronous assessment [5, 6, 7]. This paper focuses on asynchronous environment assessment methods in medical and allied health professional education and the limitations associated with their use.

2. The Framework of a Good Assessment

An assessment framework provides a robust roadmap of areas to assess. This framework clarifies the learning outcomes to be accomplished by medical graduates and reflects the areas to be assessed. The framework provides basic grounds for subsequent development, along with technical and practical considerations of appropriateness and feasibility of the assessment. No single method of assessment measures all the learning outcomes equally. The most appropriate assessment methods, aligned with the learning outcomes being tested, must be selected. An assessment framework enables educators to determine appropriate methods of evaluation that aid in measuring students’ learning outcomes. Medical and allied health professional education demands high standards of practice and comprises consistent scientific content. The use of a framework as the foundation for developing assessment is necessary for educators [6, 7].

The cognitive domain of Bloom’s taxonomy forms the basis of assessment; however, the psychomotor and affective domains also play a critical role in the training and education of medical and allied health professionals. According to Miller’s pyramid, a learner’s competence can be evaluated at different levels of proficiency: knowledge (knows), followed by competence (knows how), performance (shows how), and action (does) [8, 9].

The assessment framework also takes into account the elements and characteristics of a good evaluation. The value of an assessment is defined as a function of its reliability, validity, feasibility, educational impact, and cost-effectiveness. While adhering to the asynchronous assessment framework, it is essential to preserve the principles of integrity, equity, inclusiveness, fairness, ethics, and safety [10, 11, 12, 13].
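The statement that the value of an assessment is a function of these five attributes can be written compactly. The notation below is only a sketch: the symbols $U$, $R$, $V$, $F$, $E$, and $C$ are introduced here for illustration and do not appear in the original text, and the multiplicative form on the right is an assumption borrowed from the way utility models of assessment are commonly presented, not a formula stated in this review.

```latex
% U: value (utility) of the assessment
% R: reliability, V: validity, F: feasibility,
% E: educational impact, C: cost-effectiveness
U = f(R, V, F, E, C)
\qquad \text{for example} \qquad
U = R \times V \times F \times E \times C
```

Under the multiplicative reading, a value near zero for any single attribute drives the overall value towards zero, which captures the intuition that an assessment cannot make up for being unreliable simply by being inexpensive.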

3. Assessment in a Synchronous and Asynchronous Environment

Assessment can be conducted in a synchronous or an asynchronous environment. Assessment in a synchronous environment is conducted in real time and can be face-to-face or online, whereas in an asynchronous environment interaction does not take place in real time and can be virtual or via any other mode. The differences between synchronous and asynchronous environment assessment are given in Table 1.

During COVID-19, social distancing has become the “new normal” and it is difficult to conduct synchronous examinations face-to-face because they are complex and require significant infrastructural development. Although this is challenging in the immediate pandemic period, many modifications are being tested. This has led to innovations in teaching, learning, and assessment such that asynchronous learning, interactive visuals, or graphics can play a significant role in stimulating the learning process to reach higher-order thinking.

The asynchronous method allows more flexibility regarding time and space. Several online assessment methods are flexible, where the interaction of participants may not occur at the same time. The asynchronous online examination can offer a practicable solution for a fair assessment of the students by providing complex problems where the application of theoretical knowledge is required. The structure allows adequate time for research and response and is a suitable method of assessment in the present situation. In light of COVID-19, the conventional assessment appears far from feasible and we are left with little choice but to implement the online asynchronous assessment methods [10, 12, 14].

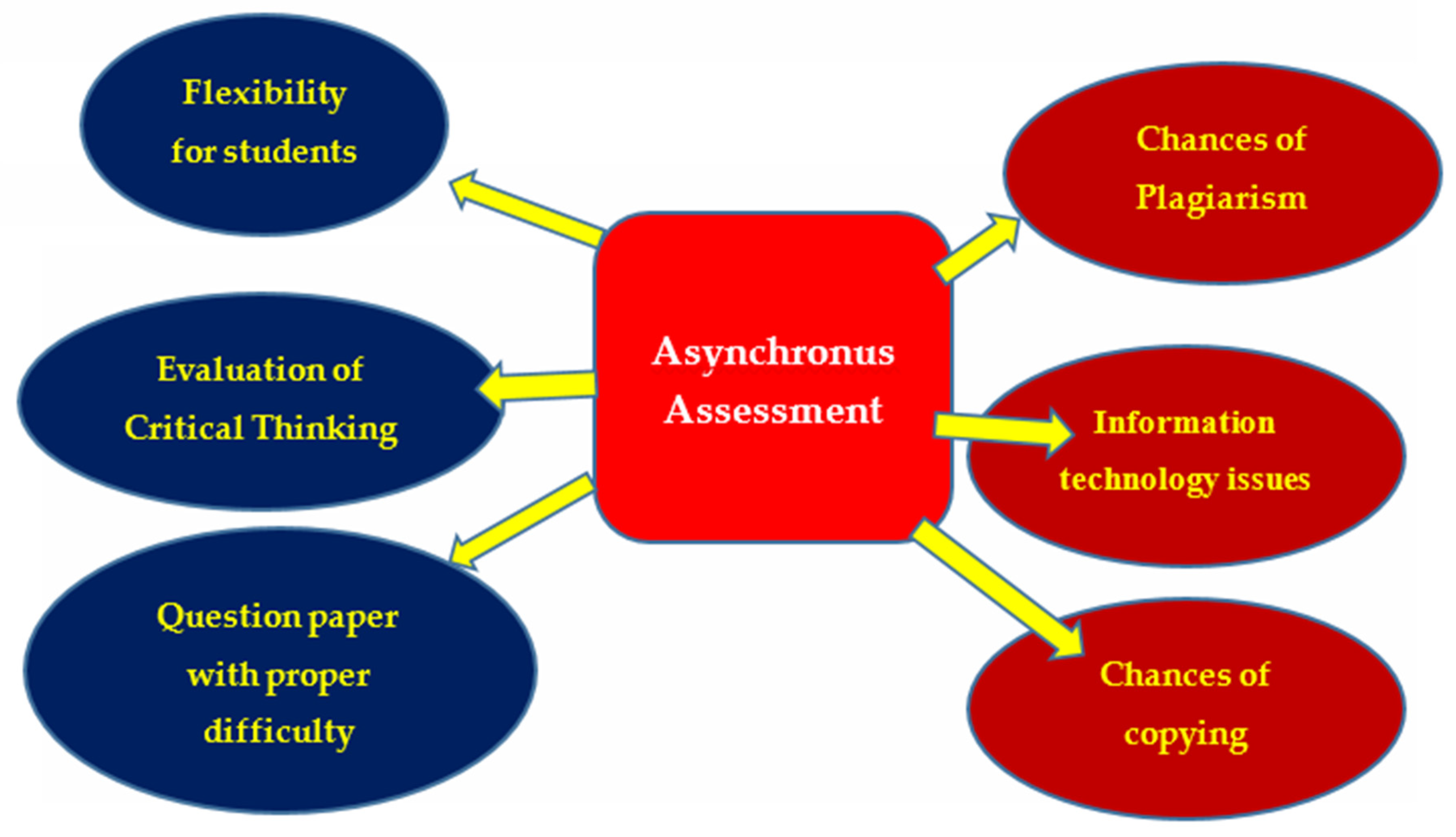

4. Development of Assessment for an Asynchronous Environment

Assessment in an asynchronous environment can be given to the students by posting the material online and allowing students the freedom to research and complete the assignment within the allotted period. It represents the open-book format of an examination, which broadens the assessment possibilities and offers the teacher an opportunity to explore innovative tools, but it suffers from the drawbacks of plagiarism and copying, especially in mathematical subjects. There are also information technology issues, such as software availability and internet connectivity (Figure 1) [12, 15, 16].

In medical education, asynchronous assessment modalities should require the application of theoretical knowledge as well as critical thinking while interpreting clinical data. These could be realised by case studies and problem-based questions.

A valid, fair, and reliable asynchronous assessment method can only be designed and developed by considering the level of target students, curricular difficulty, and the pattern of knowledge, skill, and competence levels to be assessed. The level of target students refers to the target group, i.e., whether the examination paper is intended for undergraduate or postgraduate students. For undergraduate students, the knowledge tested in an examination paper will depend on their year of study. Students in the first year of study will be assessed on theoretical knowledge, while final year students will be faced with questions that require more clinical-based knowledge.

The validation of the assessment method should be done on a trial basis before it is approved for implementation. Evaluation should be conducted based on item analysis of student performance and the difficulty index of questions to differentiate excellent, good, and poor students [

13,

14,

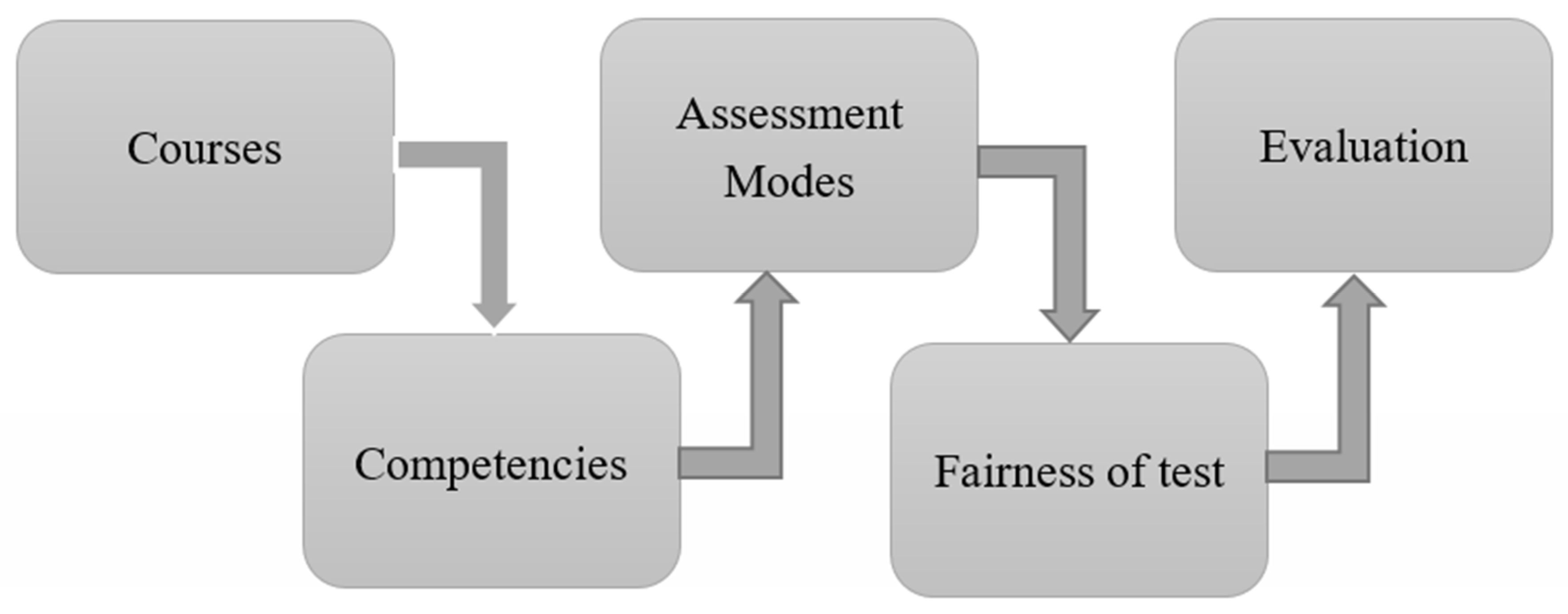

16]. For asynchronous assessment, the teacher utilises various tools to diagnose the knowledge, skill, and competence of students; some of these modalities include open-ended questions, problem-based questions, virtual OSCE (Objective structural Clinical Examination), and an oral examination. A well-designed course with the competencies and measurable learning outcomes helps determine the modes of assessment. Upon choosing a mode of assessment, the questions are developed considering the criteria of reliability, validity, and accuracy. After the questions are prepared and administered to the students, the final step is to evaluate their performance (

Figure 2) [

15,

16,

17].
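The item analysis mentioned above can be illustrated with a short sketch. For each question it computes a difficulty index (the proportion of students who answered correctly) and a discrimination index (the difference in that proportion between the highest- and lowest-scoring groups). The function name `item_analysis`, the 0/1 scoring of responses, and the 27% upper/lower group split are assumptions made for illustration; the review does not specify how the analysis should be carried out.

```python
def item_analysis(responses, group_fraction=0.27):
    """Compute per-item difficulty and discrimination indices.

    responses: one list per student, holding 1 (correct) or 0 (incorrect)
               for each item. This 0/1 layout is an assumption of the sketch.
    group_fraction: share of students placed in the upper and lower groups
                    (0.27 is a conventional choice, assumed here).
    """
    if not responses:
        raise ValueError("at least one student response is required")

    n_students = len(responses)
    n_items = len(responses[0])

    # Rank students by total score, highest first (ties keep input order).
    totals = [sum(student) for student in responses]
    order = sorted(range(n_students), key=lambda i: totals[i], reverse=True)

    # Size of the upper and lower comparison groups (at least one student).
    k = max(1, round(n_students * group_fraction))
    upper, lower = order[:k], order[-k:]

    results = []
    for j in range(n_items):
        # Difficulty index: proportion of all students answering item j correctly.
        difficulty = sum(student[j] for student in responses) / n_students
        # Discrimination index: upper-group proportion minus lower-group proportion.
        p_upper = sum(responses[i][j] for i in upper) / k
        p_lower = sum(responses[i][j] for i in lower) / k
        results.append(
            {"item": j + 1, "difficulty": difficulty, "discrimination": p_upper - p_lower}
        )
    return results


if __name__ == "__main__":
    # Hypothetical trial data: four students, two items.
    trial = [[1, 1], [1, 0], [1, 0], [0, 0]]
    for row in item_analysis(trial, group_fraction=0.5):
        print(row)
```

Items with a difficulty index close to 0 or 1, or with a discrimination index near zero or below, would be candidates for review during the trial phase; the thresholds applied are a local policy choice rather than something fixed by the method.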

4.1. Open-Ended Questions

Open-ended questions are useful when a teacher wants insight into the learner’s view and to gather a more elaborate response about the problem (instead of a “yes” or “no” answer). The response to open-ended questions is dynamic and allows the student to express their answer with more information or new solutions to the problem. An open-ended question allows the teacher to check the critical thinking of the students by applying why, where, how, and when type questions. The teacher is open to and expects different possible solutions to a single problem with justifiable reasoning. The advantage of open-ended questions over multiple-choice questions (MCQs) is that they are suitable for testing deep learning. The MCQ format is limited to assessing facts only, whereas open-ended questions evaluate the students’ understanding of a concept. Open-ended questions help students to build confidence by naturally solving the problem. They allow teachers to evaluate students’ abilities to apply information to clinical and scientific problems, and also reveal their misunderstandings about essential content. If open-ended questions are properly structured as per the rubrics, they can allow students to include their feelings, attitudes, and understanding about a problem statement which requires ample research and justification. This may not be applicable in all cases: with a question such as ‘What are the excipients required to prepare a pharmaceutical tablet?’, only a student’s knowledge can be evaluated. Marking open-ended questions is also a strenuous and time-consuming job that increases the teachers’ workload, and open-ended questions have lower reliability than MCQs. The teacher has to consider all these points carefully and develop well-structured open-ended questions to assess the higher-order thinking of the students. A teacher can assess student knowledge and the ability to critically evaluate a given situation through such questions, e.g., ‘What is the solution to convert a poorly flowable drug powder into a good flowable crystal for pharmaceutical tablet formulation?’ [17, 18, 19, 20, 21, 22, 23].

4.2. Modified Essay Questions

A widely used format in medical education is the modified essay question (MEQ), where a clinical scenario is followed by a series of sequential questions requiring short answers. This is a compromise approach between multiple-choice and short answer questions (SAQ) because it tests higher-order cognitive skills when compared to MCQs, while allowing for more standardised marking than the conventional open-ended question [20, 21, 22].

Example 1: A 66-year-old Indian male presents to the emergency department with a complaint of worsening shortness of breath and cough for one week. He smokes two packs of cigarettes per month. His past medical history includes hypertension, diabetes, chronic obstructive pulmonary disease (COPD), and obstructive sleep apnoea. What laboratory tests are needed to confirm the diagnosis, and what is the best initial treatment plan? After four days the patient’s condition is stable; what is the best discharge treatment plan for this patient?

Example 2: You are working as a research scientist in a pharmaceutical company and your team is involved in the formulation development of controlled-release tablets for hypertension. After an initial trial, you found that almost 90% of the drug is released within 6 h. How can this problem be overcome, and what type of formulation change would you suggest so that drug release will occur over 24 h instead of 6 h?

4.3. “Key Feature” Questions

In such a question, a description of a realistic case is followed by a small number of questions that require only essential decisions. These questions may be either multiple-choice or open-ended depending on the content of the question. Key feature questions (KFQs) measure problem-solving and clinical decision-making ability validly and reliably. The questions in KFQs mainly focus on critical areas such as the diagnosis and management of clinical problems. The construction of the questions is time-consuming, with inexperienced teachers needing up to three hours to produce a single key feature case with questions, while experienced ones may produce up to four an hour. Key feature questions are best used for testing the application of knowledge and problem solving in “high stake” examinations [23, 24, 25].

4.4. Script Concordance Test

The script concordance test (SCT) is a case-based assessment format of clinical reasoning in which questions are nested into several cases and intended to reflect the students’ competence in interpreting clinical data under circumstances of uncertainty [25, 26]. A case with its related questions constitutes an item. Scenarios are followed by a series of questions, presented in three parts. The first part (“if you were thinking of”) contains a relevant diagnostic or management option. The second part (“and then you were to find”) presents a new clinical finding, such as a physical sign, a pre-existing condition, an imaging study, or a laboratory test result. The third part (“this option would become”) is a five-point Likert scale that captures examinees’ decisions. The task for examinees is to decide what effect the new finding will have on the status of the option in direction (positive, negative, or neutral) and intensity. This effect is captured with a Likert scale because script theory assumes that clinical reasoning is composed of a series of qualitative judgments. This is an appropriate approach for asynchronous environment assessment because it demands both critical and clinical thinking [27, 28, 29].
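The section above describes how SCT responses are captured but not how they are scored. One approach commonly described in the SCT literature is aggregate scoring against a reference panel: each Likert option earns credit in proportion to the number of panel members who chose it, with the most popular (modal) option earning full credit. The sketch below illustrates that idea only. The function names `scoring_key` and `score_examinee`, the coding of the five-point scale as -2 to +2, and the panel data in the usage example are assumptions for illustration and are not taken from this review.

```python
from collections import Counter

# Assumed coding of the five-point Likert scale, from strongly negative to
# strongly positive effect of the new finding on the option.
LIKERT = (-2, -1, 0, 1, 2)


def scoring_key(panel_answers):
    """Build the credit awarded to each Likert option for one question.

    panel_answers: non-empty list of Likert values chosen by the reference
    panel. Credit is the number of panellists choosing an option divided by
    the number choosing the modal option.
    """
    counts = Counter(panel_answers)
    modal_count = max(counts.values())
    return {option: counts.get(option, 0) / modal_count for option in LIKERT}


def score_examinee(examinee_answers, panel_by_question):
    """Return the examinee's mean credit across all questions (0.0 to 1.0)."""
    if len(examinee_answers) != len(panel_by_question):
        raise ValueError("one examinee answer is required per question")
    total = 0.0
    for answer, panel in zip(examinee_answers, panel_by_question):
        total += scoring_key(panel)[answer]
    return total / len(panel_by_question)


if __name__ == "__main__":
    # Hypothetical panel of ten: six chose +1, three chose +2, one chose 0.
    panel = [1, 1, 1, 1, 1, 1, 2, 2, 2, 0]
    print(scoring_key(panel))
    print(score_examinee([1, 2], [panel, panel]))
```

A design consequence of this scheme is that there is no single correct answer: an examinee who agrees with a minority of the panel still earns partial credit, which fits the premise that the test probes reasoning under uncertainty.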

4.5. Problem-Based Questions

Problem-based learning is an increasingly integral part of higher education across the world, especially in healthcare training programs. It is a widely popular and effective small group learning approach that enhances the application of knowledge, higher-order thinking, and self-directed learning skills. It is a student-centred teaching approach that exposes students to real-world scenarios that need to be solved using reasoning skills and existing theoretical knowledge. Students are encouraged to utilise their higher-order thinking skills, as per Bloom’s taxonomy, to prove their understanding and appreciation of a given subject area. Where the physical presence of students in the laboratory is not feasible, assessment can be conducted by providing challenging problem-based case studies (as per the level of the student). The problem-based questions can be an individual/group assignment, and the teacher can use a discussion forum on the learning management system (LMS) online platform, allowing students to post their views and possible solutions to the problem. The asynchronous communication environment is suitable for problems based on case studies because it provides sufficient time for the learner to gather resources in the search for solutions [24, 25, 28].

Teachers can engage online learners for their weekly assessments on discussion boards using their LMS. A subject-related issue based on lessons of the previous week can be created to allow student interaction and enhance problem-solving skills, e.g., pharmaceutical formulation problems with pre-formulation study data, or clinical cases with disease symptoms, diagnostic and therapeutic data, and patient medication history. Another method is to divide the problem into various facets and assign each part to a separate group of students. At the end of the individual session, all groups are asked to interact to solve the main issue by putting their pieces together in an amicable way. Students must be given clear timelines for responses and a well-structured question which is substantial, concise, provocative, timely, logical, grammatically sound, and clear [26, 27, 30]. The structure should afford a stimulus to initiate the thinking process and offer possible options or methods that can be justified. It should allow students to achieve the goal depending on their interpretation of the data provided and the imagination of each responder to predict different possible solutions. The participants need to complement and challenge each other to think deeper by asking for explanations and examples, checking facts, considering extreme conditions, and extrapolating conclusions. The moderator should post the questions promptly and allow sufficient time for responders to post their responses. The moderator then facilitates the conversations, and intervenes only if required to obtain greater insight, stimulate, or guide further responses. The subject teacher moderates the interactions between the groups, and the students’ competence can be judged based on individual contributions [25, 26, 28]. A format of assessment rubrics is given in Table 2.

The discussion board is to be managed and monitored so that only valid users can access it, in a closed forum, from a registered device and through an official IP address. Even so, assuring integrity remains difficult, and the examiner ultimately has to rely on the ethical commitment of the examinee.

4.6. Virtual OSCE

Over recent years, we have seen an increasing use of Objective Structured Clinical Examinations (OSCEs) in health professional training to ensure that students achieve minimum clinical standards. In an OSCE, simulated patients are a useful tool for evaluating student–patient interactions related to clinical and medical issues. In the current COVID-19 crisis, students will not be able to appear for the traditional physical OSCE, and a more practicable approach is based on their interaction with a virtual patient. The use of a high-fidelity virtual patient-based learning tool in OSCE is useful for medical and healthcare students for clinical training assessment [30, 31, 32].

High-fidelity patient simulators have programmable physiologic responses to disease states, interventions, and medications. Some examples of situations where faculty members can provide a standardised experience with simulation include cardiac arrest, respiratory arrest, surgeries, allergic reactions, cardiopulmonary resuscitation, basic first aid, myocardial infarction, stroke procedures, renal failure, bleeding, and trauma. Although simulation should not replace students spending time with real patients, it provides an opportunity to prepare students, complements classroom learning, fulfils curricular goals, standardises experiences, and enhances assessment opportunities in times when physical face-to-face interaction is not possible. Virtual simulation tools are also available for various pharmaceutical, analytical, synthetic, and clinical experimental environments, and for industry operations [31, 32, 33].

4.7. Oral Examination

The oral exam is a commonly used mode of evaluation to assess competencies, including knowledge, communication skills, and critical thinking ability. It is a significant evaluation tool for a comprehensive assessment of the clinical competence of a student in the health profession. The oral assessment involves the student’s verbal response to the questions asked, and its dimensions include primary content type (object of assessment), interaction (between the examiner and student), authenticity (validity), structure (organised questions), examiners (evaluators), and orality (oral format). All six dimensions are equally important in an oral examination where the mode of communication between examiners and students is purely online instead of physical face-to-face interaction. Clear instructions regarding the purpose and time limit are important to make the online oral examination relevant and effective [32, 33, 34, 35].

Oral examination can be conducted with the use of Blackboard Collaborate, Zoom, Cisco Webex, and other online platforms.

As indicated in Table 3, not all the asynchronous assessment modalities discussed are practicable in the COVID-19 pandemic. Short questions, open-ended questions, and problem-based questions are relevant and effective asynchronous means to assess the knowledge, skill, and attitude of the students because these types of questions require critical thinking, and can act as a catalyst for the students to provide new ideas in problem-solving. Although MCQs, extended matching questions (EMQs), and true/false questions possess all the psychometric properties of a good assessment, these modalities may not be recommended for online asynchronous assessment because they are more susceptible to cheating, which can have serious implications for the validity of examinations. However, they can be appropriately adapted for time-bound assessments for continuous evaluation. Mini-CEX, DOPS, and OSCE assessments are not feasible during a pandemic because face-to-face interaction is required at the site, which is not permissible due to gathering restrictions and social distancing. When the physical presence of the student is not feasible at the hospital or laboratory site, the virtual oral examination and virtual OSCE become more relevant because the examiner can interact with the students via a suitable platform and ask questions relevant to the experiment/topic. In virtual OSCE, students will be evaluated based on their interactions with virtual patients [32, 33, 36, 37, 38, 39, 40, 41, 42, 43].

Both synchronous and asynchronous assessment methods have benefits as well as challenges (Table 4).

5. Assessment Modalities in Online Assessment

MCQs, EMQs, and true/false questions, though not relevant for the online final examination, can be appropriately adapted for time-bound assessments for continuous evaluation. Quizzes can be utilised after a regular lecture or practical demonstration with a short time limit that precludes the chances of integrity infringement. When an online discussion is used as a grading tool, there is usually a timeline for posting comments for grading. Often there is the possibility that students may post comments close to the deadline date, which makes it difficult to incorporate those comments into the discussion. There is also the possibility of robust discussions, which means that students will have to keep up with voluminous responses, which can be time-consuming [34, 35, 36, 44]. This also adds to the burden of the academic staff who must read all contributions and guide the discussion. In these sessions where a timeline for responses is indicated, technology failure at the tutor or student side will negatively impact the quality of the discussion. This can create further anxiety in the student because these activities count towards their final grade. On the other hand, when online discussions are created to assist the development of competence in the subject area without contributing to a grade, there is a possibility that students may ignore the exercise because it would not affect their final mark [36, 37, 45].

Self-check quizzes can be used as an informal method to gauge student knowledge and understanding whilst providing appropriate feedback to help them correct misconceptions about the topic. Another method employs a flipped blended classroom where students are provided with a reading assignment and they take an ungraded online exam which can comprise true/false or multiple-choice questions. When the session is complete, feedback is provided to them along with the correct answers. This allows for valuable self-assessment and even though the quiz does not contribute to their final grade, the score is entered into the grade book within the LMS for both the student and instructor to review.

6. Challenges Faced in Asynchronous Environment Assessment

Due to the current COVID-19 crisis, all courses are being conducted virtually, which has led to the dissemination of medical information to health sciences students through various LMS and online platforms. This encourages students to familiarise themselves and engage with online learning tools whilst understanding the concepts from their homes. Improvising a learning environment at home is multifaceted, with challenges ranging from technical issues and distractions from family members to the availability of online devices and broadband connectivity. Many students lack personal devices and often utilise on-campus devices to aid in their study. Unpredictable fluctuations in broadband connectivity can lead to abrupt disruption of classes, which would require additional time to re-join the session. This can lead to frustration with dysfunctional electronic devices, making it more difficult to engage and actively participate in online sessions. Coupled with the challenges of technological integration is the social aspect of home-based learning, which can be easily overlooked. Students at home may be faced with added distractions from family members and younger siblings. With the challenges faced by parents/caregivers to provide financially during the pandemic, students may have additional household responsibilities and chores which would reduce the time for school interaction and review of course material. The lack of face-to-face interaction can precipitate uncertainty and the inability to fully understand course expectations. Although faced with many challenges, online classes from home remain the most feasible option during a pandemic when face-to-face interaction is restricted. In the new normal with restricted interaction, their homes represent a haven for students to pursue their academic goals. Though not ideal, it presents the most viable option in the present pandemic scenario [39, 42, 45, 46, 47, 48, 49, 50]. Moreover, students can watch recorded videos and classroom lectures, and review them again to understand core concepts. Asynchronous learning skills offer advantages for pursuing coursework and prepare students for licensing exams in the future. The attendance for virtual classes has significantly increased, which demonstrates a preference for the asynchronous mode of learning [30, 40, 41].

There are numerous challenges during asynchronous assessment, which include the impact of physical distance between instructors and students, adaptations due to the use of technology for communicating with students, and managing workload and time efficiently. It is important to realise that assessment is not simply a matter of assessing the final performance of the student; there is a need to monitor the students’ progress throughout the semester, culminating in the assessment examination. With asynchronous examinations, it is often difficult to gauge the progress of the student because the instructor cannot be certain that the work submitted represents the true student effort. Some asynchronous assignments involve complex problem-solving tasks which are often conducted via a stepwise approach. In face-to-face sessions, understanding and mastery of the topic can be established, and feedback provided accordingly. In asynchronous modes, it would become necessary to divide the task into smaller sections, which enables the teacher to assess the students at different time points. The instructor would have to make an effort to utilise Vygotsky’s concept of scaffolding by developing mini-tutorials or holding regular sessions to meet with students, ensuring student progress in the specified field. Although feedback is of paramount importance, preparing such feedback for many questions across multiple programs/courses would require a significant amount of time [36, 42, 43].

Both students and instructors need to develop the technical proficiency to access the course materials and fully utilise the available LMS. Issues related to adaptability can arise while switching from face-to-face to online teaching. It takes time to adapt to computer-based eLearning and LMS. Asynchronous assessment offers various options such as recorded video presentations, online feedback, video tutorials, presentation assessment, online quizzes, multiple-choice questions, and short answer questions. Students need to adapt quickly and accept a new learning environment to prepare themselves for online classes and assessments. The use of these methods may require more planning and time, because lecturers would need to include formative assessments to test student understanding [42, 43, 44]. Formative assessment is an essential component of medical and allied health profession education. It provides feedback to the students about their strengths and weaknesses in learning. The main advantage of this assessment is that students gain the opportunity to know about their performance compared to the standard at regular intervals. It not only helps to guide students in their continuous progress during the training, but also helps educators to know the improvement and shortcomings of their students. However, the students may not see the value of formative assessment if it does not contribute to the grade. The absence of a culture of continuous feedback among lecturers is another setback to formative assessment. When a deadline for submission is provided, high usage of the LMS for submission across the entire institution may result in higher internet usage, with bandwidth issues for some students who may experience lag times and difficulty in uploading their assignments. The examination sections must institute proper protocols that allow students to upload assignments after the due time once an acceptable reason has been provided for their failure to do so. Bandwidth is a major issue for students living on-campus, where high-speed internet is in demand and shared by multiple individuals, with increased usage during the COVID-19 crisis. Apart from that, many students seek technical assistance to navigate their way through the LMS. To ease learning through asynchronous assessment, students should be equipped with guided tutorials and manuals for successful course completion [38, 46, 47].

There are a variety of online tools available for formative assessment that can be easily utilised to check student understanding of a topic. These tools are highly interactive, and students often enjoy the experience due to their game-based approaches.

Poll Everywhere is an online polling platform that is free for classes of 30 students or fewer; students vote on polls generated by the teacher through text messaging (SMS), a smartphone, or a computer by visiting the website. Several useful tools are also available for administering quizzes, including Socrative, Kahoot, Quiz revolution, Quibblo, Quizpedia, and Moodle, to name but a few. These applications are free for a maximum of 30–50 users, and premium members have access to additional features such as performance tracking and the ability to generate progress reports. The tools can be used for formative assessments in the classroom, where users participate in the game using their mobile phones. This creates a fun learning environment in which students can reflect on the material taught during the lecture session. When used for summative synchronous assessments, some tools offer options for randomising questions to minimise the sharing and discussion of answers amongst students. Time and resource management is of critical importance to both students and academic staff when using asynchronous assessments. The use of online technologies means that higher output is required from both parties within strict time limits as universities attempt to maintain their students’ academic progress. Academic staff will be required to provide examinations and grades within a stipulated time, and multiple examinations should not be administered simultaneously, so that students can focus on the assessment at hand without being distracted by another submission due within the same period. Careful planning is required by examination sections to ensure that student examinations are scheduled appropriately and allow sufficient time for preparation and submission of the final response [35,46].
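The scheduling concern raised above, that examinations should not be administered simultaneously, could be checked automatically before a timetable is released. The following is a minimal sketch only, assuming each assessment is described by an opening and closing time; the `Assessment` record, the `find_clashes` helper, and the course names and dates are hypothetical and do not refer to any particular LMS or institution.

```python
from datetime import datetime
from itertools import combinations
from typing import NamedTuple


class Assessment(NamedTuple):
    """Hypothetical record of one assessment's submission window."""
    course: str
    opens: datetime
    closes: datetime


def find_clashes(assessments):
    """Return pairs of course names whose submission windows overlap."""
    ordered = sorted(assessments, key=lambda a: a.opens)
    clashes = []
    for first, second in combinations(ordered, 2):
        # Two windows overlap when each one opens before the other closes.
        if first.opens < second.closes and second.opens < first.closes:
            clashes.append((first.course, second.course))
    return clashes


# Illustrative timetable (invented courses and dates).
timetable = [
    Assessment("Anatomy", datetime(2020, 6, 1, 9), datetime(2020, 6, 1, 12)),
    Assessment("Physiology", datetime(2020, 6, 1, 11), datetime(2020, 6, 1, 14)),
    Assessment("Biochemistry", datetime(2020, 6, 2, 9), datetime(2020, 6, 2, 12)),
]

print(find_clashes(timetable))
```

In this illustrative timetable, only the Anatomy and Physiology windows overlap, so an examination section could move one of them before publishing the schedule. A pairwise comparison is adequate for the small number of assessments a cohort typically sits in one period.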

7. Conclusions

In the present COVID-19 pandemic, face-to-face interaction with the student in a physical space (classroom, laboratory, hospital setup) is not possible. In the interim, the knowledge, skill, and attitude of the learner can be conveniently assessed by asynchronous assessment methods using online platforms. These platforms offer a variety of question types, such as open-ended, short answer, and problem-based questions, that can be used for asynchronous assessment. They can also be used to assess student competence in the practical components of a subject through asynchronous oral examination and virtual OSCE. Some challenges of fairness and integrity can be suitably addressed by implementing technological tools such as surveillance software with video and audio recording. Alternative online strategies to assess the knowledge, skill, and attitude of the learner need to be developed and validated; these will not only provide a backup in an emergency but also involve more students in the education system owing to their convenience in teaching, learning, and assessment.