A Meta-Analysis of Graduate School Enrollment from Students in the Ronald E. McNair Post-Baccalaureate Program

Abstract

1. Introduction

2. Overview of McNair Scholars Program

2.1. Previous Research on Effectiveness of McNair Scholars Program

2.2. Current Study

3. Methods

3.1. Study Selection

3.2. Extracted Information

3.3. Data Analysis

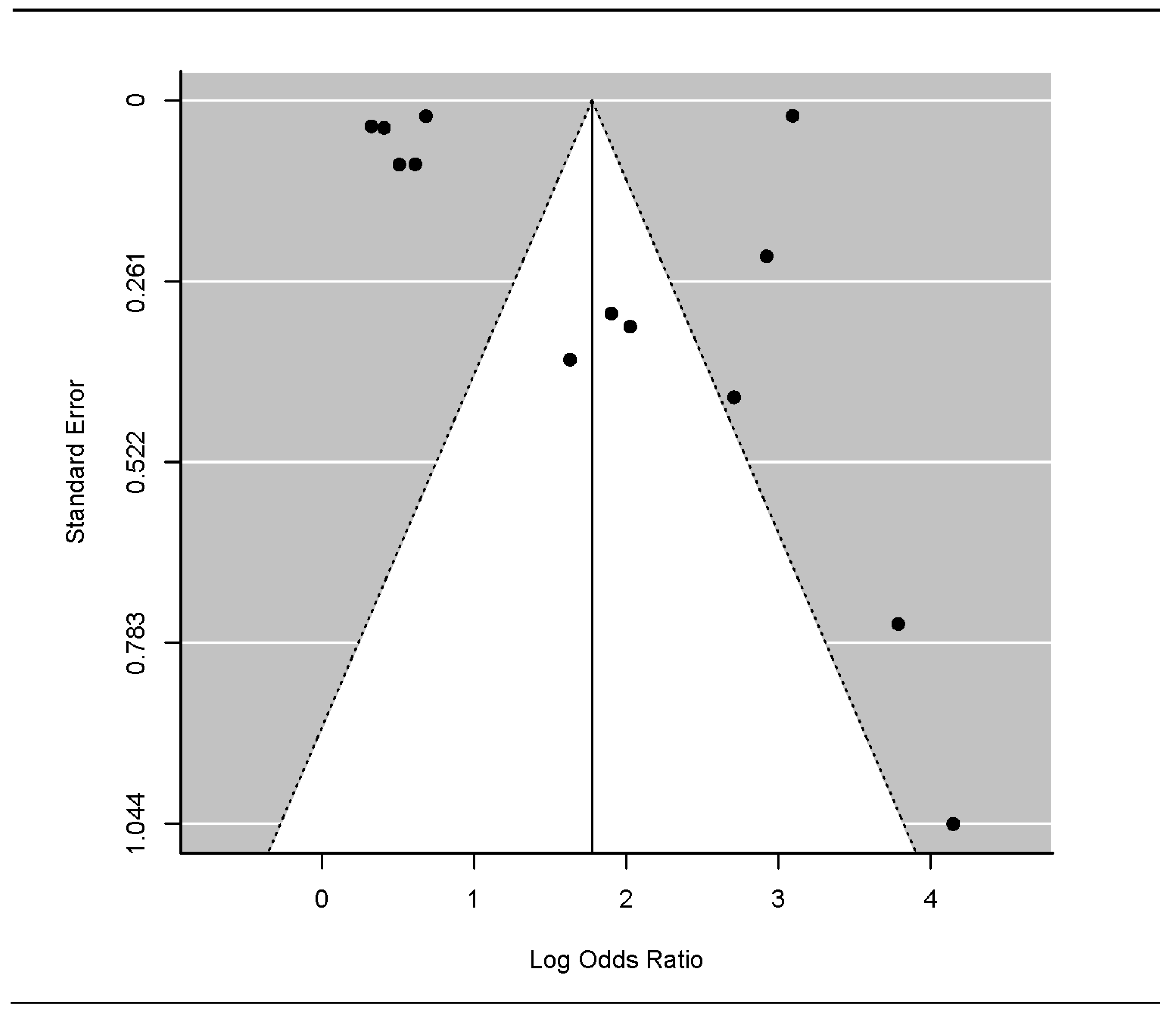

3.4. Publication Bias

4. Results

4.1. Search Results

4.2. Model Results

4.3. Publication Bias

5. Discussion

5.1. Implications

5.2. Limitations

5.3. Future Research

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Baum, S.; Steele, P. Who Goes to Graduate School and Who Succeeds? Access Group: Washington, DC, USA, 2017. [Google Scholar]

- Mullen, A.; Goyette, K.; Soares, J. Who goes to graduate school? Social and academic correlates of educational continuation after college. Sociol. Educ. 2003, 76, 143–169. [Google Scholar] [CrossRef]

- National Science Foundation. Doctorate Recipients from U.S. Universities: 2015; NSF Publication No. 17-306; National Science Foundation: Washington, DC, USA, 2017.

- Okahana, H.; Feaster, K.; Allum, J. Graduate Enrollment and Degrees: 2005 to 2015; Council of Graduate Schools: Washington, DC, USA, 2016. [Google Scholar]

- Sowell, R.; Allum, J.; Okahana, H. Doctoral Initiative on Minority Attrition and Completion; Council of Graduate Schools: Washington, DC, USA, 2015. [Google Scholar]

- U.S. Department of Education. The Condition of Education 2017; NCES 2017-144; National Center for Education Statistics: Washington, DC, USA, 2017.

- U.S. Department of Education. The Condition of Education 2015; NCES 2015-144; National Center for Education Statistics: Washington, DC, USA, 2015.

- Lord, C.G. A guide to PhD graduate school: How they keep score in the big leagues. In The Complete Academic: A Career Guide; Darley, J.M., Zanna, M.P., Roediger, H.L., III, Eds.; American Psychological Association: Washington, DC, USA, 2004; pp. 3–15. [Google Scholar]

- Ramirez, E. “No one taught me the steps”: Latinos’ experiences applying to graduate school. J. Lat. Educ. 2011, 10, 204–222. [Google Scholar] [CrossRef]

- Gardner, S.K.; Holley, K.A. “Those invisible barriers are real”: The progression of first-generation students through doctoral education. Equity Excell. Educ. 2011, 44, 77–92. [Google Scholar] [CrossRef]

- Holley, K.A.; Gardner, S. Navigating the pipeline: How socio-cultural influences impact first-generation doctoral students. J. Divers. High. Educ. 2012, 5, 112. [Google Scholar] [CrossRef]

- Jury, M.; Smeding, A.; Stephens, N.M.; Nelson, J.E.; Aelenei, C.; Darnon, C. The experience of low-SES students in higher education: Psychological barriers to success and interventions to reduce social-class inequality. J. Soc. Issues 2017, 73, 23–41. [Google Scholar] [CrossRef]

- Ramirez, E. Examining Latinos/as’ graduate school choice process: An intersectionality perspective. J. Hisp. High. Educ. 2013, 12, 23–36. [Google Scholar] [CrossRef]

- Dervarics, C. McNair/Harris: Two programs that live up to their namesakes. Black Issues High. Educ. 1994, 11, 26. [Google Scholar]

- U.S. Department of Education. Ronald E. McNair Postbaccalaureate Achievement Program. Available online: https://www2.ed.gov/programs/triomcnair/index.html (accessed on 20 September 2018).

- Rossi, P.H.; Lipsey, M.W.; Freeman, H.E. Evaluation: A Systematic Approach, 7th ed.; Sage: Thousand Oaks, CA, USA, 2004. [Google Scholar]

- National Academy of Sciences, National Academy of Engineering, Institute of Medicine. Expanding Underrepresented Minority Participation: America’s Science and Technology Talent at the Crossroads; The National Academies Press: Washington, DC, USA, 2011. [Google Scholar]

- Council for Opportunity in Education. COE statement on White House Proposal to Eliminate Ronald E. McNair Postbaccalaureate Achievement and Education Opportunity Centers TRIO Programs [Press Release]. 2017. Available online: http://www.coenet.org/press_releases_052317.shtml (accessed on 16 March 2017).

- U.S. Office of the Press Secretary. Off-Camera Briefing of the FY18 Budget by Office of Management and Budget Director Mick Mulvaney. Available online: https://www.whitehouse.gov/briefings-statements/off-camera-briefing-fy18-budget-office-management-budget-director-mick-mulvaney-052217/ (accessed on 22 May 2017).

- Abdul-Alim, J. Obama administration official touts ‘critical role’ of TRIO amid funding cuts. Divers. Issues High. Educ. 2012, 29, 7. [Google Scholar]

- Jean, R. Bootstraps: Federal TRIO programs, if funded, could help close income gap. N. Engl. J. High. Educ. 2011. Available online: http://www.nebhe.org/thejournal/bootstraps-federal-trio-programs-if-funded-could-help-close-income-gap/ (accessed on 19 April 2017).

- Basken, P. What Trump’s budget outline would mean for higher Ed. Chron. High. Educ. Available online: https://www.chronicle.com/article/What-Trump-s-Budget-Outline/239511 (accessed on 16 March 2017).

- Seburn, M.; Chan, T.; Kirshstein, R. A Profile of the Ronald E. McNair Postbaccalaureate Achievement Program: 1997–1998 through 2001–2002; U.S. Department of Education: Washington, DC, USA, 2005. Available online: https://www2.ed.gov/programs/triomcnair/mcnairprofile1997-2002.pdf (accessed on 19 April 2017).

- Mansfield, W.; Sargent, K.D.; Cahalan, M.W.; Belle, R.L., Jr.; Bergeron, F. A Profile of the Ronald E. McNair Post-Baccalaureate Achievement Program: 1998–99 with Selected Data from 1997–98 and 1996–97; Mathematica Policy Research: Washington, DC, USA, 2002; Available online: https://ideas.repec.org/p/mpr/mprres/fd1c5b2f63fd437cae85f7382136959b.html (accessed on 19 April 2017).

- Kniffin, K.M. Accessibility to the PhD and professoriate for first-generation college graduates: Review and implications for students, faculty, and campus policies. Am. Acad. 2007, 3, 49–79. [Google Scholar]

- Parker, K.D. Achieving diversity in graduate education: Impact of the Ronald E. McNair Postbaccalaureate Achievement Program. Negro Educ. Rev. 2003, 54, 47–50. [Google Scholar]

- Ethington, C.A.; Smart, J.C. Persistence to graduate education. Res. High. Educ. 1986, 24, 287–303. [Google Scholar] [CrossRef]

- King, S.E.; Chepyator-Thomson, J.R. Factors affecting the enrollment and persistence of African-American doctoral students. Phys. Educ. 1996, 53, 170–180. [Google Scholar]

- Ong, M.; Wright, C.; Espinosa, L.; Orfield, G. Inside the double bind: A synthesis of empirical research on undergraduate and graduate women of color in science, technology, engineering, and mathematics. Harv. Educ. Rev. 2011, 81, 172–209. [Google Scholar] [CrossRef]

- Gittens, C.B. The McNair program as a socializing influence on doctoral degree attainment. Peabody J. Educ. 2014, 89, 368–379. [Google Scholar] [CrossRef]

- Huerta, A.L. First-Generation College Students and Undergraduate Research: Narrative Inquiry into the University of Arizona’s Ronald E. McNair Achievement Program and the Phenomenon of Student Transformation. Ph.D. Thesis, University of Arizona, Tucson, AZ, USA, 2013. [Google Scholar]

- Posselt, J.R.; Black, K.R. Developing the research identities and aspirations of first-generation college students. Int. J. Res. Dev. Leeds 2012, 3, 26–48. [Google Scholar] [CrossRef]

- Fifolt, M.; Engler, J.; Abbott, G. Bridging STEM professions for McNair Scholars through faculty mentoring and academic preparation. Coll. Univ. 2014, 89, 24–33. [Google Scholar]

- Thomas, E.P. Taking the First Steps toward Graduate Education: A Report on the Ronald E. McNair Postbaccalaureate Achievement Program; OCLC: New Brunswick, NJ, USA, 1994. [Google Scholar]

- Baness King, D. Journey to the doctorate: Motivating factors for persistence and completion of doctoral programs among McNair scholars. Ph.D. Thesis, University of New Mexico, Albuquerque, NM, USA, 2011. [Google Scholar]

- Exstrom, B.K. A Case Study of McNair Program Participant Experiences. Ph.D. Thesis, University of Nebraska, Lincoln, NE, USA, 2003. [Google Scholar]

- Gallagher Trayhan, E.K. Sources of Resilience of Female Mexican American College Students Enrolled in the McNair Scholars Program. Ph.D. Thesis, Our Lady of the Lake University, San Antonio, TX, USA, 2010. Available from ProQuest Dissertations and Theses database. (UMI No. 3421741). [Google Scholar]

- Carrera, S.R. An Evaluation of the Mentoring Component in the Ronald E. McNair Post-Baccalaureate Achievement Program: A National Sample. Ph.D. Thesis, Texas Tech University, Lubbock, TX, USA, 2002. [Google Scholar]

- Wyre, D. Set Up for Success: An Examination of the Ronald E. McNair Postbaccalaureate Achievement Program’s Mentoring Component. Ph.D. Thesis, University of Southern Mississippi, Hattiesburg, MS, USA, 2011. [Google Scholar]

- Lewis, N. Developing and Testing a Model to Predict Underrepresented Students’ Plans for Graduate Study: Analysis of the 1988–2006 Cohorts of a Summer Research Program. Ph.D. Thesis, The University of North Carolina at Chapel Hill, Chapel Hill, NC, USA, 2007. Available from ProQuest Dissertations and Theses database. (UMI No. 3257547). [Google Scholar]

- Willison, S.; Gibson, E. Graduate school learning curves: McNair Scholars’ postbaccalaureate transitions. Equity Excell. Educ. 2011, 44, 153–168. [Google Scholar] [CrossRef]

- Derk, A. Highlighting Hope: An Exploration of the Experiences of West Virginia University McNair Scholars. Ph.D. Thesis, West Virginia University, Morgantown, WV, USA, 2007. Available from ProQuest Dissertations and Theses database. (UMI No. 3298546). [Google Scholar]

- Schunk, D.H. Social cognitive theory. In APA Educational Psychology Handbook, Vol 1: Theories, Constructs, and Critical Issues; Harris, K.R., Graham, S., Urdan, T., McCormick, C.B., Sinatra, G.M., Sweller, J., Eds.; American Psychological Association: Washington, DC, USA, 2012; pp. 101–123. [Google Scholar]

- Restad, C. Beyond the McNair Program: A Comparative Study of McNair Scholars’ Understandings of the Impacts of Program Participation on Their Graduate School Experiences. Master’s Thesis, Portland State University, Portland, OR, USA, 2014. [Google Scholar]

- Beal, R.Y. “You mean they’ll pay me to think?” How low income, first generation and underrepresented minority McNair students construct an academic identity as scholar. Ph.D. Thesis, University of Colorado at Boulder, Boulder, CO, USA, 2007. [Google Scholar]

- Simpson, M.T. Exploring the Academic and Social Transition Experiences of Ethnic Minority Graduate Students. Ph.D. Thesis, Virginia Tech, Blacksburg, VA, USA, 2003. [Google Scholar]

- Farro, S.A. Achievements and Challenges of Undergraduates in Science, Technology, Engineering, and Mathematics Fields in the Ronald E. McNair Program. Ph.D. Thesis, Colorado State University, Fort Collins, CO, USA, 2009. Available from ProQuest Dissertations and Theses database. (UMI No. 3385152). [Google Scholar]

- Keopuhiwa, T. Under the Surface: An Examination of Voice, Space, and Identity in West Virginia University McNair Scholars. Ph.D. Thesis, West Virginia University, Morgantown, WV, USA, 2012. Available from ProQuest Dissertations and Theses database. (UMI No. 3530314). [Google Scholar]

- Olivas, B. Supporting First-Generation Writers in the Composition Classroom: Exploring the Practices of the Boise State University McNair Scholars Program. Ph.D. Thesis, University of Nebraska, Lincoln, NE, USA, 2016. [Google Scholar]

- Williams, E.G. Academic, Research, and Social Self-Efficacy among African American Pre-McNair Scholar Participants and African American Post-McNair Scholar Participants. Ph.D. Thesis, Virginia Tech, Blacksburg, VA, USA, 2004. [Google Scholar]

- Brown, S.D.; Tramayne, S.; Hoxha, D.; Telander, K.; Fan, X.; Lent, R.W. Social cognitive predictors of college students’ academic performance and persistence: A meta-analytic path analysis. J. Vocat. Behav. 2008, 72, 298–308. [Google Scholar] [CrossRef]

- Ford, L. A Phenomenological Study Exploring the Undergraduate McNair Program Experience of Program Alumni Currently Serving as College Faculty. Ph.D. Thesis, Loyola University, Chicago, IL, USA, 2011. [Google Scholar]

- Ishiyama, J.T.; Hopkins, V.M. Assessing the impact of the McNair Program on students at a public liberal arts university. Oppor. Outlook 2001, 20, 20–24. [Google Scholar]

- Graham, L. Learning a new world: Reflections on being a first-generation college student and the influence of TRIO programs. New Dir. Teach. Learn. 2011, 2011, 33–38. [Google Scholar] [CrossRef]

- Olive, T. Desire for higher education in first-generation Hispanic college students. Int. J. Interdiscip. Soc. Sci. 2010, 5, 377–389. [Google Scholar] [CrossRef]

- Love, E. A simple step: Integrating library reference and instruction into previously established academic programs for minority students. Ref. Libr. 2009, 50, 4–13. [Google Scholar] [CrossRef]

- Nnadozie, E.; Ishiyama, J.; Chon, J. Undergraduate research internships and graduate school success. J. Coll. Stud. Dev. 2001, 42, 145. [Google Scholar]

- Scripa, A.J.; Lener, E.F.; Gittens, C.B.; Stovall, C. The McNair Scholars Program at Virginia Tech: A unique model of librarian mentoring. Va. Libr. 2012, 58. Available online: https://ejournals.lib.vt.edu/valib/article/view/1224/1624 (accessed on 19 April 2017). [CrossRef]

- Cruz, I. Reimagining the Ronald E. McNair Scholars Program through the lens of intellectual entrepreneurship. Plan. High. Educ. 2015, 43, 33–39. [Google Scholar]

- Greene, K. Alumni Perceptions of the McNair Scholars Program at Kansas Universities. Ph.D. Thesis, Kansas State University, Manhattan, KS, USA, 2007. Available from ProQuest Dissertations and Theses database. (UMI No. 3274485). [Google Scholar]

- Smell, A. McNair Scholars: Overcoming the obstacles of underrepresented students. Ursidae Undergrad. Res. J. Univ. North. Colo. 2015, 5, 19. Available online: https://digscholarship.unco.edu/urj/vol5/iss1/19/ (accessed on 1 March 2017).

- Grimmett, M.A.S.; Bliss, J.R. Assessing federal TRIO McNair program participants’ expectations and satisfaction with projects. J. Negro Educ. 1998, 67, 404–415. [Google Scholar] [CrossRef]

- National Center for Educational Statistics. Integrated Postsecondary Education Data System. [Data File]. Available online: https://nces.ed.gov/ipeds/ (accessed on 1 May 2017).

- U.S. Census Bureau. Statistical Abstract of the United States, 119th ed.; U.S. Census Bureau: Suitland, MD, USA, 1999. Available online: https://www.census.gov/library/publications/1999/compendia/statab/119ed.html (accessed on 20 April 2017).

- Borenstein, M.; Hedges, L.V.; Higgins, J.P.T.; Rothstein, H.R. Introduction to Meta-Analysis; Wiley: Hoboken, NJ, USA, 2009. [Google Scholar]

- Paule, R.C.; Mandel, J. Consensus values and weighting factors. J. Res. Natl. Bur. Stand. 1982, 87, 377–385. [Google Scholar] [CrossRef]

- Veroniki, A.A.; Jackson, D.; Viechtbauer, W.; Bender, R.; Bowden, J.; Knapp, G.; Salanti, G. Methods to estimate the between-study variance and its uncertainty in meta-analysis. Res. Synth. Methods 2016, 7, 55–79. [Google Scholar] [CrossRef] [PubMed]

- Burnham, K.P.; Anderson, D.R. Multimodel inference: Understanding AIC and BIC in model selection. Sociol. Methods Res. 2004, 33, 261–304. [Google Scholar] [CrossRef]

- Stijnen, T.; Hamza, T.H.; Özdemir, P. Random effects meta-analysis of event outcome in the framework of the generalized linear mixed model with applications in sparse data. Stat. Med. 2010, 29, 3046–3067. [Google Scholar] [CrossRef] [PubMed]

- Viechtbauer, W. Conducting meta-analyses in R with the metafor package. J. Stat. Softw. 2010, 36, 1–48. Available online: https://www.jstatsoft.org/article/view/v036i03 (accessed on 20 April 2017). [CrossRef]

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2018; Available online: https://www.R-project.org/ (accessed on 1 May 2017).

- Hedges, L.V. Meta-analysis. J. Educ. Stat. 1992, 17, 279–296. [Google Scholar] [CrossRef]

- Higgins, J.P.T.; Thompson, S.G. Quantifying heterogeneity in a meta-analysis. Stat. Med. 2002, 21, 1539–1558. [Google Scholar] [CrossRef] [PubMed]

- Orwin, R.G. A fail-safe N for effect size in meta-analysis. J. Educ. Stat. 1983, 8, 157–159. [Google Scholar] [CrossRef]

- Rosenberg, M.S. The file-drawer problem revisited: A general weighted method for calculating fail-safe numbers in meta-analysis. Evolution 2005, 59, 464–468. [Google Scholar] [CrossRef]

- Borenstein, M.; Hedges, L.V.; Higgins, J.P.T.; Rothstein, H.R. A basic introduction to fixed-effect and random-effects models for meta-analysis. Res. Synth. Methods 2010, 1, 97–111. [Google Scholar] [CrossRef]

| Study | Odds Ratio | Sampling Variance | McNair Type | Created Comparison | n (McNair) | n (comparison) |
|---|---|---|---|---|---|---|
| Chatman (1995) | 15.01 | 1.20 | Single | Yes | 25 | 1,668,700 |
| Fifolt, Engler, and Abbott (2014) | 6.71 | 1.10 | Single | Yes | 47 | 2,607,801 |
| Ishiyama & Hopkins (2001) | | | | | | |
| First-generation, low-income, ambitious, and high academic ability | 5.08 | 1.15 | Single | Yes | 47 | 118 |
| First-generation and low-income * | 7.60 | 1.11 | Single | Yes | 47 | 399 |
| Mansfield et al. (2002) | | | | | | |
| Finished McNair in 1999 | 1.98 | 1.00 | Multiple | Yes | 9090 | 1,743,411 |
| Finished McNair in 1998 | 1.39 | 1.00 | Multiple | Yes | 4140 | 1,720,320 |
| Finished McNair in 1997 | 1.51 | 1.00 | Multiple | Yes | 3618 | 1,706,661 |
| McCoy, Wilkinson, and Jackson (2008) | | | | | | |
| McNair 89–93 cohorts | 18.58 | 1.05 | Multiple | No | 100 | 1,679,162 |
| McNair 89–00 cohorts * | 22.07 | 1.00 | Multiple | No | 12,530 | 1,742,196 |
| Seburn, Chan, and Kirshstein (2005) | | | | | | |
| Nationally representative sample | 1.66 | 1.01 | Multiple | No | 1282 | 118,000 |
| Demographically similar sample * | 1.84 | 1.01 | Multiple | No | 1282 | 700,000 |
| Thomas (1994) | | | | | | |
| McNair at Rutgers, 1993 | 46.20 | 1.81 | Single | Yes | 88 | 1,688,400 |
| McNair at Rutgers, 1994 | 58.21 | 2.77 | Single | Yes | 91 | 1,721,500 |
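
The odds ratios and sampling variances in the table above are the usual inputs to a meta-analysis of a binary outcome such as graduate school enrollment. As a minimal sketch, assuming a standard 2×2 layout (enrolled vs. not enrolled, McNair vs. comparison), the following Python computes an odds ratio and the large-sample variance of its logarithm. The function name and the counts are hypothetical and are not taken from any study in the table. This excerpt also does not state the scale on which the tabled variances are reported, so the sketch should not be read as a reproduction of those values.

```python
import math


def odds_ratio_summary(enrolled_a, n_a, enrolled_b, n_b):
    """Odds ratio for group A vs. group B from enrollment counts.

    Returns (odds_ratio, log_odds_ratio, variance_of_log_odds_ratio).
    Hypothetical helper for illustration only.
    """
    a = enrolled_a            # group A, enrolled
    b = n_a - enrolled_a      # group A, not enrolled
    c = enrolled_b            # group B, enrolled
    d = n_b - enrolled_b      # group B, not enrolled
    if min(a, b, c, d) <= 0:
        raise ValueError("all four cells must be positive for this formula")
    odds_ratio = (a * d) / (b * c)
    log_or = math.log(odds_ratio)
    # Large-sample variance of the log odds ratio: sum of reciprocal cell counts.
    var_log_or = 1 / a + 1 / b + 1 / c + 1 / d
    return odds_ratio, log_or, var_log_or


# Hypothetical counts: 30 of 50 program students enroll,
# 200 of 1000 comparison students enroll.
or_, log_or, var_log_or = odds_ratio_summary(30, 50, 200, 1000)

# 95% confidence interval, built on the log scale and back-transformed.
half_width = 1.96 * math.sqrt(var_log_or)
ci_low, ci_high = math.exp(log_or - half_width), math.exp(log_or + half_width)
print(f"OR = {or_:.2f}, 95% CI = ({ci_low:.2f}, {ci_high:.2f})")
```

Working on the log scale keeps the sampling distribution closer to symmetric, which is why pooled estimates are typically computed from log odds ratios and exponentiated only for reporting.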

| Model | k | a (SE) | b (SE) | AICc | BIC | τ² (SE) | Q_B (df) | Q_W (df) | I² |
|---|---|---|---|---|---|---|---|---|---|
| All Studies | | | | | | | | | |
| Baseline | 13 | 5.91 (1.43) | – | 47.73 | 47.66 | 1.51 (0.67) | – | – | 99.80 |
| Single MSP | 13 | 3.37 (1.50) | 3.71 (1.89) | 46.92 | 45.95 | 1.15 (0.54) | 4.24 (1) | 8288.12 (11) | 99.76 |
| Created Comp. | 13 | 5.93 (1.92) | 1.01 (2.21) | 51.39 | 50.42 | 1.67 (0.77) | <0.01 (1) | 1521.86 (11) | 99.72 |
| Studies with Non-Overlapping Samples | | | | | | | | | |
| Baseline | 10 | 6.18 (1.55) | – | – | – | 1.73 (0.89) | – | – | 99.86 |
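
The τ², Q, and I² columns in the table above summarize between-study heterogeneity in a random-effects model. The sketch below shows one common way such quantities can be obtained, using the DerSimonian-Laird moment estimator for τ² and the Higgins and Thompson definition of I². It is an illustrative stand-in and not necessarily the estimator behind the tabled results: the reference list cites the Paule and Mandel estimator and the metafor package, which may compute these quantities differently. The function name and the input values are hypothetical.

```python
import math


def random_effects_dl(effects, variances):
    """Random-effects pooling with the DerSimonian-Laird tau^2 estimator.

    effects   -- per-study effect sizes (e.g., log odds ratios)
    variances -- per-study sampling variances on the same scale
    Hypothetical helper for illustration only.
    """
    k = len(effects)
    if k < 2 or k != len(variances):
        raise ValueError("need at least two studies with matching variances")

    # Fixed-effect (inverse-variance) estimate, used to compute Cochran's Q.
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = k - 1

    # DerSimonian-Laird moment estimator, truncated at zero.
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0

    # Random-effects weights add tau^2 to each sampling variance.
    w_star = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1.0 / sum(w_star))

    # I^2: share of total variability attributed to heterogeneity (percent).
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0
    return {"pooled": pooled, "se": se, "tau2": tau2, "q": q, "i2": i2}


# Hypothetical log odds ratios and sampling variances for three studies.
result = random_effects_dl([0.5, 1.5, 2.5], [0.1, 0.1, 0.1])
print(result)
```

When the pooled estimate is on the log odds scale, exponentiating it gives the pooled odds ratio.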

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Renbarger, R.; Beaujean, A. A Meta-Analysis of Graduate School Enrollment from Students in the Ronald E. McNair Post-Baccalaureate Program. Educ. Sci. 2020, 10, 16. https://doi.org/10.3390/educsci10010016
