1. Introduction

Predictive models are essential in business practice, as they enable early risk identification and support effective decision-making. Their use is particularly critical in financial distress prediction, where they can help minimise financial losses for all stakeholders (Hammond et al., 2023). Assessing the financial sustainability of business partners, customers, or financial institutions is particularly important given that corporate failures result in substantial economic losses not only for shareholders and creditors but also for society, imposing broader social and economic costs (Zelenkov & Volodarskiy, 2021; Laitinen et al., 2023). However, financial distress or bankruptcy can often be anticipated through various warning signals. Early recognition and accurate interpretation of these signals enable managers to take timely and effective actions to mitigate the consequences of a potential crisis (Balina et al., 2021). Consequently, the ability to reliably predict corporate failure has become a vital element of strategic decision-making in both financial and non-financial firms (Citterio, 2024).

Currently, the business environment faces challenges such as global health crises, supply chain disruptions, geopolitical tensions, and rising inflation and interest rates, all of which fundamentally affect firms’ financial stability (Svabova et al., 2022). The COVID-19 pandemic has made even large companies vulnerable, emphasising the need for reliable tools to identify risks and predict potential insolvency (Archanskaia et al., 2023). Therefore, the reliability and robustness of prediction models are particularly important not only for the affected companies but also for investors, creditors, and regulators, who must make informed decisions under conditions of uncertainty (Chakrabarti et al., 2024).

Conventional bankruptcy prediction models, including Altman’s Z-score and logit models, have typically been developed and tested under economically stable conditions (Shi & Li, 2024; Bobitan & Dumitrescu, 2024). However, their application during times of crisis may yield misleading results due to the dynamic evolution of the relationship between financial ratios and the probability of bankruptcy over time (Dasilas & Rigani, 2024). Therefore, it is crucial to assess their effectiveness at different stages of the economic cycle (Papik & Papikova, 2024). Despite the growing importance of this issue, the literature contains relatively limited research that methodologically compares the performance of predictive models across different economic conditions. This research area offers the potential for deeper analysis that can contribute to more effective financial risk management and the development of more robust predictive tools applicable in practice.

Although this study focuses primarily on classification-based predictive techniques, it is important to recognise that time-series forecasting methods have also played a major role in economic and financial prediction. The Box-Jenkins ARIMA framework has historically been one of the earliest and most influential approaches for modelling in economics (Box & Jenkins, 1970; Box et al., 2016). Its relevance extends across the economic and social sciences, where ARIMA models have been widely applied to predict macroeconomic aggregates, market movements, and firm-level financial ratios (Chatfield, 2003; Hyndman & Athanasopoulos, 2018). In financial distress research, time-series approaches can complement ratio-based classification models by modelling the temporal evolution of financial indicators or macroeconomic conditions that shape insolvency risk (Kaczmarek, 2012). The usefulness of ARIMA has been reinforced during periods of heightened volatility, including the COVID-19 pandemic, when it was widely used to generate reliable forecasts. For instance, Das (2020) demonstrated the strong predictive performance of the ARIMA model in forecasting COVID-19 incidences across highly affected countries, highlighting the method’s robustness and accuracy. While our study does not employ ARIMA directly, acknowledging this methodological stream extends the broader forecasting context within which bankruptcy prediction research is situated.

Therefore, the impact of different economic periods on the accuracy of financial distress prediction is analysed in this paper. Moreover, two approaches to model creation are compared. First, separate models for three different economic periods (pre-pandemic, pandemic, and post-pandemic) are developed. The period 2022–2023 is referred to as the post-pandemic period, representing the phase after pandemic-related restrictions ended. We acknowledge that this period includes additional shocks, most notably the war in Ukraine and subsequent inflationary pressures. Therefore, this period is not treated as fully homogeneous but rather as a distinct phase characterised by macroeconomic conditions different from those of the acute pandemic years. Second, a single comprehensive model is created that incorporates period-indicating variables. The research examines whether models customised for specific business cycle phases exhibit greater predictive capability than a universal model with the period indicator.

The motivation for this study arises from the growing need to understand how economic disruptions, particularly extreme events such as the COVID-19 pandemic, affect the stability and reliability of financial distress prediction models. Although bankruptcy prediction has been extensively studied for decades, the existing literature rarely examines whether these models’ performance remains consistent when economic conditions change significantly. Most prior studies focus either on crisis or on stable periods alone, without systematically comparing predictive accuracy across multiple phases of the economic cycle or assessing whether a unified modelling framework can adapt to shifting financial dynamics. This research gap is particularly important because, during the pandemic, companies faced abrupt financial pressures, structural changes in cash flows, and increased liquidity risk, raising concerns about whether prediction models developed under normal conditions remain stable in periods of economic instability. For risk managers, investors, regulators, and policymakers, the ability to rely on prediction models during turbulent periods is essential for timely intervention, credit allocation, and systemic risk monitoring. By addressing this gap, this study responds to these needs by providing a systematic comparison of model performance across three distinct economic periods and evaluating whether a single comprehensive model can maintain predictive accuracy even when the economic environment changes significantly.

Reflecting the aim of the research, the following research question and hypotheses were formulated:

RQ: How does changing the modelling approach affect the ability to predict financial distress over different economic periods?

H1. The relative predictive performance ranking of modelling techniques differs significantly across economic periods (pre-pandemic, pandemic, and post-pandemic periods).

H2. Period-specific models achieve higher accuracy than a universal model with a period indicator.

The next parts of the paper are organised as follows. The Literature review section highlights the current state of financial distress prediction. Section 2 explains the study methodology, briefly characterises the modelling techniques, and describes the data used in the study. Section 3 presents the results for both modelling approaches. The final section concludes the study.

Literature Review

The prediction of corporate failure has been extensively studied since the work of Beaver (1966) and Altman (1968). Among the best-known models is Altman’s Z-score, based on multivariate discriminant analysis (Khashei et al., 2024), which is widely used due to its ease of application and relative reliability (Alam et al., 2021). However, its limitations have also been highlighted in the literature, including its origins in US industrial firms, the use of static indicators, and its sensitivity to accounting manipulations (Dieperink et al., 2024).

The performance of predictive models is strongly influenced by economic conditions. Using a random forest algorithm, Bozkurt and Kaya (2023) identified the key factors contributing to the increased bankruptcy risk during the COVID-19 pandemic, highlighting that the country’s macroeconomic conditions had an even greater impact on bankruptcy risk. These results were confirmed by Durana et al. (2025a) for the V4 countries, emphasising the importance of national economic specificities, and by Narvekar and Guha (2021), who highlighted the significance of business cycle phases for model performance.

The importance of considering the broader context of corporate bankruptcies is also emphasised by Kitowski et al. (2022) and Amankwah-Amoah et al. (2021). It is argued that the focus should be on globalisation, accelerating change, and new forms of risk that reduce the effectiveness of existing prediction models. It is also pointed out that relying on experience can become a disadvantage precisely because of the strategic rigidity of firms.

Similarly, Papik and Papikova (2022) found that models trained on pre-pandemic data are significantly less reliable during the pandemic. During the crisis, debt indicators were important, while liquidity dominated the stable period. Similar findings are reported by Spiler et al. (2022), in whose study traditional models failed to account for structural changes during the 2020 pandemic crisis, resulting in a decline in prediction accuracy. Therefore, the development of more flexible models that can respond to market dynamics in times of crisis is recommended.

Modifications to the model for the crisis period are also discussed by Halteh (2025), whose research found that the COVID-19 crisis primarily affected larger firms. A divergence in factors affecting financial distress between small and large firms was also revealed, underscoring the need for firm-specific models. While small firms were mainly affected by liquidity and debt, large firms were primarily impacted by profitability and capital structure.

The methodology for model creation was discussed by Shetty et al. (2022), and the reliability of simple financial data models was demonstrated. However, the benefits of machine learning techniques are highlighted by Mate et al. (2023) and Durica et al. (2023). In the study of Elsayed et al. (2024), the random forest algorithm achieved the best results. Moreover, it was emphasised that the correct selection of input variables significantly enhances prediction accuracy.

2. Methodology

In this study, several models for predicting companies’ financial distress have been developed using two modelling approaches. Firstly, separate period-specific models for the pre-pandemic, pandemic, and post-pandemic periods were developed. Secondly, a comprehensive model covering all periods that contains the period-indicating variables among the potential predictors was created. Several techniques for model development were selected, including CART, CHAID, and C5 decision trees, as well as logistic regression, discriminant analysis, and neural networks. All these methods are suitable for the classification task of predicting the company’s financial state. Therefore, in the following section, all methods of model creation and the measures for their evaluation are briefly characterised.

2.1. Modelling Techniques Used in the Study

Decision tree algorithms are widely used in predictive modelling, mainly due to their interpretability and flexibility. These algorithms recursively partition the dataset into increasingly homogeneous subsets based on the decision rules derived from the explanatory variables (Rudd Mph & Priestley, 2017). In decision-tree terminology, the root node represents the initial node containing the entire dataset before any partitioning occurs. From this point, the algorithm applies splitting rules based on selected predictor variables to divide the data into more homogeneous groups. The splitting variables and the splitting criteria are selected automatically according to the criterion of best separation between or among the groups of the outcome variable. This criterion varies across decision tree techniques. The nodes resulting from these intermediate splits are called internal nodes; each contains a subset of observations and may be further partitioned if doing so improves node purity. The splitting process continues until no additional meaningful splits can be made or a stopping criterion is met, such as a maximum tree depth, a minimum number of cases in a parent or child node, or a minimal change in impurity. At this stage, the algorithm produces terminal nodes, also known as leaves, which represent the final, indivisible subsets of the data. The entire process yields a tree-structured model, where each internal node represents a division based on a predictor variable, and each leaf node represents a specific prediction. In classification tasks, the predicted class is determined by the modal class; in regression tasks, by the average value. The tree can be rewritten as a set of if-then rules or depicted graphically as a tree. This hierarchical representation enables decision trees to capture non-linear relationships and interaction effects without requiring distributional assumptions or prior specification of functional forms. The tree prediction model can be used for both predictive and descriptive purposes, as it can not only classify new cases but also provide easily interpretable relationships between the predictors and the outcome.

The CART (Classification and Regression Trees) algorithm, originally introduced by Breiman et al. (1984), represents one of the most influential methods in the family of decision tree techniques. It is a non-parametric predictive modelling method that constructs a decision tree by recursively partitioning the predictor space into increasingly homogeneous subsets according to values of the explanatory variables. The tree is binary, meaning each parent node has two child nodes. At each step, the method evaluates potential splits and automatically selects the input variable and split that yield the greatest improvement in node purity. For classification tasks, the most frequently used criterion for measuring the homogeneity is the Gini impurity index, while regression trees rely on minimising the variance within nodes (Loh, 2011). In our study, the technique is employed to solve the classification task, with a minimum impurity change of 0.0001. The stopping rules are as follows: a maximum tree depth of 5, a minimum of 2% of records in the parent branch, and 1% of records in the child branch. To address the problem of overfitting, in which the resulting CART model becomes overly complex, the tree can be pruned by removing branches that do not significantly improve the model’s prediction accuracy (Gabrikova et al., 2023).
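As an illustration of the Gini-based split selection described above, the following minimal Python sketch computes the Gini impurity of a node and searches one predictor for the binary split with the largest impurity decrease. The function names, the toy debt-ratio data, and the use of the 0.0001 minimum impurity change as a simple cut-off are assumptions made for this example; it is not the software implementation used in the study.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a node: 1 minus the sum of squared class shares."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_binary_split(values, labels, min_gain=0.0001):
    """Return (threshold, impurity decrease) of the best split 'value <= threshold'."""
    n = len(labels)
    parent = gini(labels)
    best = (None, 0.0)
    # The largest value is skipped because it would leave the right child empty.
    for t in sorted(set(values))[:-1]:
        left = [y for x, y in zip(values, labels) if x <= t]
        right = [y for x, y in zip(values, labels) if x > t]
        weighted = len(left) / n * gini(left) + len(right) / n * gini(right)
        gain = parent - weighted
        if gain > best[1] and gain >= min_gain:
            best = (t, gain)
    return best


# Hypothetical toy data: a debt ratio and a distress flag (1 = distressed).
debt_ratio = [0.2, 0.3, 0.4, 0.7, 0.8, 0.9]
distressed = [0, 0, 0, 1, 1, 1]
threshold, gain = best_binary_split(debt_ratio, distressed)
print(threshold, gain)
```

A full CART implementation would repeat this search over all predictors and recurse into the child nodes until a stopping rule (depth, node size, or impurity change) applies.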

CHAID (Chi-Square Automatic Interaction Detection) is a decision tree algorithm that utilises chi-square statistical tests to identify the optimal splits at each step of the recursive partitioning process. For continuous predictors, CHAID groups values into categories prior to testing, whereas for categorical predictors, it merges categories that do not differ significantly from each other with respect to the outcome variable. This merging ensures that only the categories with distinct relationships with the outcome remain (Kass, 1980; Song & Lu, 2015). CHAID trees can have multiple branches at each node, making them more universal and capable of capturing more complex relationships and interactions among the predictors and the outcome (Magidson, 1993). However, as CHAID employs statistical significance testing, its computational cost can become high for large datasets (Durica et al., 2019; Kicova et al., 2025). The stopping criteria used in this study are as follows: a maximum tree depth of 5, a minimum of 2% of records in the parent branch, and 1% of records in the child branch, a significance level for splitting and merging the continuous predictors of 0.05, and a minimum change in expected cell frequencies for categorical predictors (if any) of 0.001.
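The chi-square test at the core of CHAID can be sketched as follows. The example computes the Pearson chi-square statistic for a contingency table of predictor categories against the outcome and compares it with the critical value for one degree of freedom at the 0.05 level. The counts are hypothetical, and the sketch omits the category merging and the adjusted p-values used by full CHAID implementations.

```python
def chi_square(table):
    """Pearson chi-square statistic for a contingency table given as a list of rows."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / total
            stat += (observed - expected) ** 2 / expected
    return stat


# Hypothetical counts: rows = two categories of a predictor,
# columns = (healthy, distressed) companies.
table = [[40, 10], [20, 30]]
stat = chi_square(table)

# Chi-square critical value for 1 degree of freedom at the 0.05 significance level.
CRITICAL_DF1_ALPHA_05 = 3.841
categories_differ = stat > CRITICAL_DF1_ALPHA_05
print(round(stat, 3), categories_differ)
```

When the statistic does not exceed the critical value, the two categories would be candidates for merging rather than for separate branches.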

The C5.0 algorithm constructs decision trees by selecting predictors and their splitting criteria based on the concept of information entropy. Each parent node can be divided into multiple child nodes, allowing for the capture of the complexity of the relationships among the variables. This technique is particularly known for its speed, accuracy, and ability to handle noisy data (M.-Y. Chen, 2011). The stopping criteria used in this study are defined by a pruning severity of 75% and a minimum of 2 records in the child branch. This technique enables pruning after tree growth, where branches without a significant contribution to the model’s predictive performance are omitted to improve readability and simplify the tree structure (Massahi Khoraskani et al., 2018; Vlaović Begović & Bonić, 2020).
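The entropy-based reasoning can be illustrated with a short sketch that computes the entropy of a node and the information gain of a multi-way split. The grouping below is hypothetical, and the sketch shows only the basic entropy reduction; the exact splitting criterion and pruning of C5.0 are not reproduced here.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of the class labels in a node."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(groups):
    """Entropy reduction obtained by splitting the pooled labels into the given groups."""
    pooled = [y for g in groups for y in g]
    n = len(pooled)
    remainder = sum(len(g) / n * entropy(g) for g in groups)
    return entropy(pooled) - remainder


# Hypothetical three-way split of eight companies (1 = distressed).
groups = [[0, 0, 0, 0], [0, 1], [1, 1]]
gain = information_gain(groups)
print(round(gain, 4))
```

A split with a larger gain leaves child nodes that are, on average, purer than the parent node.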

Logistic regression (LR) is a widely used statistical technique for modelling the probability of a binary outcome and has long been applied to financial distress prediction, primarily due to its simplicity and interpretability. It estimates the probability of a data case belonging to a particular class of the outcome variable, which is most commonly binomial, by modelling the log-odds as a linear combination of the explanatory variables (Hosmer et al., 2013). In our study, the LR models the probability of a company’s financial distress, given the values of the financial ratios and other variables included in the model. Because the outcome of interest in LR is a probability, it must, by definition, lie between 0 and 1. Therefore, a probability model must ensure that no combination of predictor variables produces values outside this range. A simple linear regression model, expressed as a linear combination of predictors, cannot guarantee this restriction, because the linear predictor can take any real value. Consequently, the estimated probabilities could fall below 0 or above 1, which is mathematically invalid and meaningless for classification. To avoid this problem, logistic regression applies a link function that transforms the real values into the $(0, 1)$ interval. Such a transformation is achieved by modelling the log-odds (or logit) of the outcome rather than the probability itself. Formally, if $p$ denotes the probability of financial distress, the logistic model specifies that

$$\operatorname{logit}(p) = \ln\left(\frac{p}{1 - p}\right) = \beta_0 + \beta_1 x_1 + \dots + \beta_k x_k,$$

where $\operatorname{logit}(p)$ represents the logit transformation of the probability $p$, and $x_1, \dots, x_k$ denote the values of predictor variables. The log-odds transformation ensures that the predicted values remain bounded between 0 and 1 (Menard, 2002). The regression coefficients $\beta_0, \beta_1, \dots, \beta_k$ are typically estimated using the method of maximum likelihood, which identifies the parameter values that maximise the probability of obtaining the observed data (Long, 1997; Hosmer et al., 2013). After estimating the regression coefficients of predictors, the logistic function for the probability of financial distress is

$$\hat{p} = \frac{1}{1 + e^{-(b_0 + b_1 x_1 + \dots + b_k x_k)}},$$

where $b_0$ and $b_1, \dots, b_k$ are the estimated values of coefficients $\beta_0, \beta_1, \dots, \beta_k$.

The estimated coefficients can be interpreted in terms of odds ratios and describe the direction and magnitude of the relationship between each predictor and the probability of the outcome, thereby providing an intuitive understanding of the relative impact of financial indicators on the likelihood of distress (Peng et al., 2002). The reverse transformation, exponentiating the coefficient $\beta_j$ into $e^{\beta_j}$, yields an odds ratio, which quantifies the multiplicative change in the odds of financial distress associated with a one-unit increase in the corresponding predictor, holding other variables constant (Menard, 2002). In this study, the logistic regression coefficients estimate how a one-unit change in a particular financial ratio affects the odds that a company enters financial distress within the one-year horizon.

LR has several advantages, including ease of estimation, well-understood statistical properties and straightforward interpretability of the coefficients. Its parametric form provides a clear quantification of relationships and allows for hypothesis testing (Peng et al., 2002). However, LR relies on linearity in the logit and several other assumptions that may limit its effectiveness in capturing complex, non-linear, or interactive patterns that often characterise financial data (Zizi et al., 2021).

Discriminant analysis (DA) is one of the earliest statistical techniques applied to corporate failure prediction; its most prominent application in this field is Altman’s Z-score model, which was created using linear DA (Altman, 1968). The purpose of DA is to classify observations into predefined groups of the outcome variable based on a set of explanatory variables. Discrimination is achieved by deriving a discriminant function that best separates the groups based on their multivariate profiles. In the case of linear DA, which is the most common form, the discriminant function is constructed as a linear combination of predictors in the form

$$D = w_1 x_1 + w_2 x_2 + \dots + w_k x_k,$$

where the coefficients $w_1, \dots, w_k$ are selected to maximise the separation between distressed and non-distressed companies. Mathematically, the optimisation criterion is based on maximising the ratio of the between-group variance to the within-group variance, which ensures the greatest discriminatory power (Johnson & Wichern, 2008; Rencher & Christensen, 2012).
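For two groups, the weights that maximise the ratio of between-group to within-group variance are proportional to the inverse of the pooled within-group scatter matrix multiplied by the difference of the group means (Fisher's linear discriminant). The following sketch implements this for two predictors in plain Python. The toy liquidity and debt values are hypothetical, and the cut-off at the midpoint of the projected group means is an assumption that corresponds to equal group priors.

```python
def mean_vector(rows):
    """Column means of a list of (x1, x2) observations."""
    n = len(rows)
    return [sum(r[j] for r in rows) / n for j in range(2)]


def scatter(rows, mean):
    """2x2 within-group scatter matrix (sum of outer products of centred rows)."""
    s = [[0.0, 0.0], [0.0, 0.0]]
    for r in rows:
        d = [r[0] - mean[0], r[1] - mean[1]]
        for i in range(2):
            for j in range(2):
                s[i][j] += d[i] * d[j]
    return s


def fisher_discriminant(group0, group1):
    """Return (weights, cutoff) of Fisher's linear discriminant for two groups."""
    m0, m1 = mean_vector(group0), mean_vector(group1)
    s0, s1 = scatter(group0, m0), scatter(group1, m1)
    sw = [[s0[i][j] + s1[i][j] for j in range(2)] for i in range(2)]
    det = sw[0][0] * sw[1][1] - sw[0][1] * sw[1][0]
    inv = [[sw[1][1] / det, -sw[0][1] / det],
           [-sw[1][0] / det, sw[0][0] / det]]
    diff = [m1[0] - m0[0], m1[1] - m0[1]]
    w = [inv[0][0] * diff[0] + inv[0][1] * diff[1],
         inv[1][0] * diff[0] + inv[1][1] * diff[1]]
    # Assumed cut-off: discriminant score of the midpoint between the group means.
    cutoff = sum(w[j] * (m0[j] + m1[j]) / 2 for j in range(2))
    return w, cutoff


def classify(w, cutoff, x):
    """Assign 1 (distressed) when the discriminant score exceeds the cut-off."""
    return 1 if w[0] * x[0] + w[1] * x[1] > cutoff else 0


# Hypothetical (liquidity ratio, debt ratio) pairs.
healthy = [(2.0, 0.3), (1.8, 0.4), (2.2, 0.35), (1.9, 0.25)]
distressed = [(0.8, 0.8), (1.0, 0.9), (0.9, 0.7), (1.1, 0.85)]

w, cutoff = fisher_discriminant(healthy, distressed)
print([round(v, 2) for v in w], round(cutoff, 2))
```

In this toy example the weight on liquidity is negative and the weight on debt is positive, so lower liquidity and higher indebtedness push a company towards the distressed group.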

Once the discriminant function is estimated, its values are compared against a classification threshold, which may be fixed or estimated from the data, to classify each company into one of the predefined outcome groups. In binary classification tasks, such as financial distress prediction, companies are classified into one of two groups based on the outcome variable (distressed or healthy). The discriminant coefficients can be interpreted similarly to regression coefficients, reflecting the relative contribution of each financial indicator to the value of the discriminant function. Additionally, structure coefficients or canonical correlations may be examined to understand the relationships between the predictors and the discriminant function. Discriminant analysis has been extensively used for predicting corporate financial distress, as it classifies companies into categories based on the outcome variable using a discriminant rule and an associated threshold value that separates financially distressed from non-distressed firms.

The advantage of DA is its effectiveness and the interpretability of its results. However, it relies on several statistical assumptions, such as that the independent variables follow a multivariate normal distribution within each group and that the covariance matrices are equal across groups, which ensures linear separation of the classes (Huberty & Olejnik, 2006; Lachenbruch, 2006). These assumptions may limit its applicability to real-world financial data, where they are often not met (Soni, 2019; Yakymova & Kuz, 2019). Nevertheless, despite its limitations, such as sensitivity to violations of normality and homogeneity assumptions, discriminant analysis remains a benchmark technique in financial distress prediction studies due to its interpretability and historical significance (Huberty & Olejnik, 2006).

Artificial Neural Networks (ANNs) represent a class of computational models inspired by biological neural systems. ANNs have gained prominence in financial distress prediction due to their capacity to model complex, non-linear relationships between financial indicators and corporate outcomes (Dubea et al., 2021). ANNs consist of interconnected processing nodes (neurons) organised in an input layer, one or more hidden layers, and an output layer. Each neuron combines incoming signals through weighted connections and transforms the result with an activation function, such as the logistic sigmoid, hyperbolic tangent or rectified linear unit (Haykin, 1999). The learning process involves optimising the weights of the connections between neurons so that the network reproduces the mapping between input variables and the observed output as accurately as possible. This is typically achieved using backpropagation to compute the gradients of a predefined loss function, combined with optimisation algorithms such as gradient descent or its modern variants, which iteratively update the weights to minimise that loss (Jang et al., 2019; Goodfellow et al., 2016). Through repeated exposure to training data, the network gradually improves its internal representation of the underlying structure of the financial ratios and other covariates relevant for distress prediction.
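A minimal sketch of these mechanics is given below: a forward pass through one hidden layer with hyperbolic tangent activations and a sigmoid output, followed by a single gradient-descent update. All weights, inputs, and the learning rate are hypothetical, and only the output-layer weights are updated; a complete backpropagation step would also propagate the error to the hidden-layer weights. The network architecture used in the study is not specified here.

```python
import math


def sigmoid(z):
    """Logistic sigmoid activation."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, hidden_weights, hidden_biases, output_weights, output_bias):
    """Return (predicted probability, hidden activations) for one observation."""
    hidden = [
        math.tanh(sum(w * xi for w, xi in zip(ws, x)) + b)
        for ws, b in zip(hidden_weights, hidden_biases)
    ]
    z = sum(w * h for w, h in zip(output_weights, hidden)) + output_bias
    return sigmoid(z), hidden


def log_loss(y, p):
    """Binary cross-entropy for a single observation."""
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


# Hypothetical standardised inputs, label, and initial weights.
x, y = [0.5, -1.0], 1
hidden_weights = [[0.4, -0.6], [0.3, 0.8]]
hidden_biases = [0.0, 0.1]
output_weights = [0.7, -0.2]
output_bias = 0.0

p_before, hidden = forward(x, hidden_weights, hidden_biases, output_weights, output_bias)

# For a sigmoid output with log-loss, the gradient with respect to an
# output weight is (p - y) * h, and (p - y) for the output bias.
learning_rate = 0.5
error = p_before - y
output_weights = [w - learning_rate * error * h for w, h in zip(output_weights, hidden)]
output_bias -= learning_rate * error

p_after, _ = forward(x, hidden_weights, hidden_biases, output_weights, output_bias)
print(round(p_before, 4), round(p_after, 4))
```

After the update, the predicted probability for this distressed example moves closer to 1 and the loss decreases, which is the behaviour that repeated updates over the training data exploit.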

Unlike traditional statistical methods, ANNs do not require stringent assumptions of multivariate normality, linearity, or homogeneity of variances, making them particularly suitable when financial data are characterised by skewness, heavy tails, outliers, or multicollinearity (Wu et al., 2022).

Nevertheless, despite these strengths, ANNs also present several methodological challenges. They are prone to overfitting, especially when trained on relatively small samples or noisy financial data (Goodfellow et al., 2016). Their performance depends heavily on the selection of hyperparameters, such as the number of layers, the number of neurons, the learning rate, and the activation functions. Furthermore, they are often criticised for their limited interpretability, as the internal structure of a trained neural network does not readily reveal how individual financial indicators contribute to the predicted outcome, a characteristic often described as the “black box” nature (Aydin et al., 2022).

2.2. Outcome Variable and Models’ Evaluation

The binary outcome variable $Y$ in all prediction models is a company’s financial state. In line with standard practice in classification modelling, the company’s state of financial distress was coded as the positive category, $Y = 1$, while the healthy state was coded as the negative category, $Y = 0$. Although this notation may appear counterintuitive from a semantic perspective, the positive class in predictive modelling corresponds to the event of primary analytical interest (a so-called hit category); in this case, identifying companies at risk of financial distress. This coding facilitates the interpretation of commonly used evaluation metrics, mentioned below, such as sensitivity (true positive rate), and it is widely applied in bankruptcy prediction research (e.g., Barboza et al., 2017; Durana et al., 2025b; Valaskova et al., 2023; Zelenkov & Volodarskiy, 2021; Zizi et al., 2021). Because these two categories of the outcome variable are strongly unbalanced in the data used in this study (see Table 1 below), an oversampling technique was applied, which artificially increases the number of companies in the minority class by duplicating existing ones. This approach helps balance the dataset, allowing predictive models to learn the characteristics of both groups more effectively and thereby improve their ability to detect financially distressed firms.
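Oversampling by duplication can be sketched as follows. The record layout, the `distressed` key, and the fixed random seed are assumptions made for this illustration; the sketch duplicates randomly chosen minority-class records until both classes are equally frequent and would be applied to the training sample only.

```python
import random


def oversample_minority(rows, label_key="distressed", seed=42):
    """Duplicate random minority-class rows until both classes have equal size."""
    rng = random.Random(seed)
    positives = [r for r in rows if r[label_key] == 1]
    negatives = [r for r in rows if r[label_key] == 0]
    minority, majority = sorted([positives, negatives], key=len)
    if not minority:
        return list(rows)  # nothing to duplicate
    extra = [rng.choice(minority) for _ in range(len(majority) - len(minority))]
    balanced = majority + minority + extra
    rng.shuffle(balanced)
    return balanced


# Hypothetical training sample: 8 healthy and 2 distressed companies.
companies = [{"id": i, "distressed": 0} for i in range(8)]
companies += [{"id": 8 + i, "distressed": 1} for i in range(2)]

balanced = oversample_minority(companies)
print(len(balanced), sum(r["distressed"] for r in balanced))
```

Because the added records are exact copies, no new information enters the data; the procedure only changes the weight that the minority class receives during model fitting.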

Finally, after creating the models, their prediction performance was assessed using a confusion matrix, which shows the distribution of companies into the following possible situations (Kicova et al., 2025):

True positives (TP—actually distressed companies predicted as distressed);

False positives (FP—non-distressed companies predicted as distressed);

True negatives (TN—correctly predicted non-distressed companies);

False negatives (FN—truly distressed companies predicted as non-distressed).

Here, we consider a financially distressed company a positive case; conversely, a healthy company is considered a negative one. As the most important evaluation metrics calculated from the confusion matrix, the following were considered:

Total accuracy, which is the proportion of all correctly predicted companies among all companies (i.e., the ratio of TP + TN to TP + FP + TN + FN);

Sensitivity, which measures the model’s ability to identify distressed companies correctly (i.e., the ratio of TP to TP + FN).

Lastly, the Area Under the Curve (AUC) of the Receiver Operating Characteristic (ROC) curve was also used as a measure of evaluation. The ROC curve is constructed by plotting the true positive rate (sensitivity) against the false positive rate at various classification thresholds. The values of AUC close to 1 indicate strong discrimination, while an AUC below 0.7 generally suggests weak predictive performance.
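The three evaluation measures can be computed directly from predicted scores, as the following sketch shows. The labels, scores, and the 0.5 classification threshold are hypothetical. The AUC is computed here through its rank interpretation, namely the share of (distressed, healthy) pairs in which the distressed company receives the higher score, with ties counted as one half; this equals the area under the ROC curve.

```python
def confusion_counts(actual, predicted):
    """Return (TP, FP, TN, FN) with 1 = distressed as the positive class."""
    tp = sum(1 for a, p in zip(actual, predicted) if a == 1 and p == 1)
    fp = sum(1 for a, p in zip(actual, predicted) if a == 0 and p == 1)
    tn = sum(1 for a, p in zip(actual, predicted) if a == 0 and p == 0)
    fn = sum(1 for a, p in zip(actual, predicted) if a == 1 and p == 0)
    return tp, fp, tn, fn


def accuracy(tp, fp, tn, fn):
    """Share of all companies that were classified correctly."""
    return (tp + tn) / (tp + fp + tn + fn)


def sensitivity(tp, fn):
    """Share of distressed companies that were detected (true positive rate)."""
    return tp / (tp + fn)


def auc(actual, scores):
    """Share of (positive, negative) pairs ranked correctly; ties count one half."""
    pos = [s for a, s in zip(actual, scores) if a == 1]
    neg = [s for a, s in zip(actual, scores) if a == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical labels (1 = distressed) and predicted distress probabilities.
actual = [1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.6, 0.4, 0.7, 0.3, 0.2, 0.2, 0.1]
predicted = [1 if s >= 0.5 else 0 for s in scores]

tp, fp, tn, fn = confusion_counts(actual, predicted)
print(accuracy(tp, fp, tn, fn), sensitivity(tp, fn), auc(actual, scores))
```

Accuracy and sensitivity depend on the chosen threshold, whereas the AUC summarises the ranking quality over all thresholds.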

To ensure reliable performance evaluation, the dataset was divided into a training set used to build the model and a testing set used to evaluate the model’s predictive performance on independent data, with a 50:50 ratio. Therefore, in Section 3, only the results of the models’ evaluation on the testing part of the data are presented, whereas the best models themselves are presented as they were trained on the training part of the data. To assess the robustness of the results, additional random train–test splits (namely 60:40, 70:30, and 80:20) were evaluated alongside the main 50:50 partition. These checks produced results consistent with the reported findings, confirming that the relative performance of the modelling approaches does not depend materially on the specific split ratio.

Several methodological choices should be acknowledged and justified. Firstly, because the sample is highly imbalanced, oversampling of distressed firms was applied to balance the training data. While this technique can increase sensitivity to duplicated patterns, it is widely used in financial distress prediction and enables the models to learn minority-class characteristics more effectively. Secondly, because the dataset contains missing values for some financial ratios, industry-mean imputation was applied to maintain sample completeness. Although this widely used approach may slightly reduce variability within industries, it ensures consistency across the dataset and prevents further data loss. Given the size and structure of the sample, this imputation method remains appropriate and does not undermine the models’ internal comparability. Finally, the predictors include only firm-level financial ratios, thereby excluding potential macroeconomic, qualitative, or structural determinants of distress, although this is a standard practice in bankruptcy modelling. Despite these limitations, the dataset remains sufficiently large, diverse, and representative to support stable model estimation, and the comparative design applied across periods ensures that all modelling approaches are evaluated under consistent and transparent conditions.

2.3. Data Used in the Study

In this study, comprehensive data on companies operating in Slovakia from 2018 to 2023 were utilised. The company-level data used in this study were obtained from the Finstat database, a commercial provider that aggregates publicly available annual financial statements of companies registered in the Slovak Republic. Based on this source, information on small and medium-sized enterprises (SMEs) operating in Slovakia during 2018–2023 was extracted, and a panel of 320,490 firm-year observations was compiled. The size of companies was classified according to the SME criteria used in the underlying data, using thresholds for the number of employees, annual turnover, and total assets: small enterprises have fewer than 50 employees and an annual turnover and total assets below 10 million €, while medium-sized enterprises have fewer than 250 employees and an annual turnover below 50 million € and total assets below 43 million €. Finstat covers the vast majority of active Slovak companies that are required by law to submit financial statements. However, the final analytical sample does not represent the entire set of companies. Entities that did not submit financial statements in the years analysed are not included. In addition, only companies with balance sheets and profit and loss items sufficient to derive basic indicators of liquidity, indebtedness, and profitability were retained. Observations with missing or economically inconsistent mandatory items were removed during pre-processing, while missing values in the remaining financial indicators were imputed using industry-specific methods, as described below. The analysed dataset should, therefore, be interpreted as a high-coverage sample of economically relevant Slovak SMEs rather than a complete inventory of all enterprises. 
For the purposes of this study, the period was divided into three parts: the data on companies from 2018 to 2019 represent the pre-pandemic period; the data covering 2020 to 2021 represent the pandemic period; and the data covering 2022 to 2023 represent the post-pandemic period. Additionally, the entire period from 2018 to 2023 is used for the comprehensive common model.

As all the models are one-year-ahead models, the financial ratio values come from the first year of each of the three periods, while the second year is used to determine the companies’ financial state. Financial distress was identified using criteria based on Act 513/1991 Coll. (Commercial Code), which defines a company in crisis as one that is in financial distress or threatened by it. Following this definition, a company is in crisis if it is considered insolvent, i.e., if its current liabilities exceed 1.3 times the value of its cash assets. A company is considered threatened by financial distress if the coverage of its liabilities by equity (equity-to-debt ratio) is below 0.8. These two criteria are grounded in practices commonly used in Slovakia for determining a company’s financial state (

Valaskova et al., 2023).
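The two criteria can be expressed as a short labelling rule. The sketch below uses the thresholds quoted in the text (1.3 and 0.8) as default parameters; the argument names are hypothetical and do not correspond to Finstat field names, and combining the two flags with a logical "or" is an assumption, since the text does not state how they are merged into the binary distress label.

```python
def distress_flags(current_liabilities, cash_assets, equity, total_liabilities,
                   insolvency_multiple=1.3, min_equity_to_debt=0.8):
    """Return (insolvent, threatened) flags for one firm-year."""
    # Insolvency: current liabilities exceed 1.3 times cash assets.
    insolvent = current_liabilities > insolvency_multiple * cash_assets
    # Assumption: a firm without liabilities cannot be under-covered by equity.
    threatened = (total_liabilities > 0
                  and equity / total_liabilities < min_equity_to_debt)
    return insolvent, threatened


def is_distressed(*args, **kwargs):
    # Assumption: either criterion is sufficient for the distressed label.
    insolvent, threatened = distress_flags(*args, **kwargs)
    return insolvent or threatened


# Example firm: liabilities well covered by equity, but short of cash.
flags = distress_flags(current_liabilities=200, cash_assets=100,
                       equity=90, total_liabilities=100)
```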

Using these conditions, the following frequencies of companies were obtained.

Table 1 presents the numbers for each of the two groups, as well as the number of companies in the training and testing samples where the oversampling technique was applied to the training sample.

In this study, only small and medium enterprises were considered. All selected financial ratios underwent a correctness check, and all companies with economically invalid values (i.e., negative values in ratios that must be positive) were removed from further analysis. Additionally, missing values were substituted with the industry-specific mean values.
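A minimal sketch of these two pre-processing steps is given below. It assumes that each observation is a dictionary with an `industry` key and ratio keys whose missing values are stored as `None`; these names and the data layout are illustrative only.

```python
from collections import defaultdict
from statistics import mean


def drop_invalid(rows, must_be_positive):
    """Remove observations with negative values in ratios that must be positive."""
    return [
        r for r in rows
        if all(r[k] is None or r[k] >= 0 for k in must_be_positive)
    ]


def impute_industry_mean(rows, ratio, industry_key="industry"):
    """Replace missing values of one ratio with the mean of the same industry."""
    observed = defaultdict(list)
    for r in rows:
        if r[ratio] is not None:
            observed[r[industry_key]].append(r[ratio])
    means = {industry: mean(values) for industry, values in observed.items()}
    # An industry without any observed value keeps None (nothing to impute from).
    return [
        {**r, ratio: means.get(r[industry_key])} if r[ratio] is None else dict(r)
        for r in rows
    ]


rows = [
    {"industry": "A", "liquidity_1": 1.0},
    {"industry": "A", "liquidity_1": 3.0},
    {"industry": "A", "liquidity_1": None},
    {"industry": "B", "liquidity_1": -2.0},   # economically invalid, removed
    {"industry": "B", "liquidity_1": 4.0},
]
clean = drop_invalid(rows, ["liquidity_1"])
imputed = impute_industry_mean(clean, "liquidity_1")
```

Invalid observations are removed before imputation so that they do not distort the industry means.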

Table 2 presents the financial ratios used in the study as input variables, along with their means and standard deviations (in parentheses) for each of the three periods and the whole period under review.

3. Results

In this study, the comparison of the models’ performances is prioritised over the interpretation of the relationships between the company’s financial state and the input financial ratios. Therefore, in this section, mainly the performance of the models is presented. The predictive performance of the models was evaluated on the testing data using a confusion matrix and key evaluation metrics, including sensitivity, accuracy, and AUC. Nevertheless, the models with the highest prediction performance are presented in more detail.
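For reference, the three evaluation measures can be computed as in the following sketch, where 1 denotes a distressed company and 0 a stable one. The AUC is obtained from its pairwise interpretation (the probability that a randomly chosen distressed company receives a higher score than a randomly chosen stable one); the function names and toy data are illustrative.

```python
def sensitivity_and_accuracy(y_true, y_pred):
    """Sensitivity = TP / (TP + FN); accuracy = (TP + TN) / N."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp / (tp + fn), (tp + tn) / len(pairs)


def auc(y_true, scores):
    """Share of (distressed, stable) pairs ranked correctly; ties count as 0.5."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


y_true = [1, 1, 0, 0, 0]
y_pred = [1, 0, 0, 0, 1]
scores = [0.9, 0.4, 0.3, 0.2, 0.6]   # predicted probability of distress
sens, acc = sensitivity_and_accuracy(y_true, y_pred)
area = auc(y_true, scores)
```

The pairwise AUC loop is quadratic in the sample size and serves only to make the definition explicit; a rank-based implementation would be preferable for a sample of this size.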

A comparative overview of the performance of the created bankruptcy prediction models across three distinct economic periods: pre-pandemic, pandemic, and post-pandemic, reveals the following results. Additionally, a comprehensive model covering all periods is presented.

Table 3 presents the results for the pre-pandemic period. Among the six methods applied to create the prediction model, several achieved high predictive performances. The CHAID and CART models have the best overall balance of sensitivity and accuracy, and the CHAID tree also achieved the highest AUC value. The C5.0 model and the neural network performed strongly as well. Logistic regression achieved solid results, while discriminant analysis was clearly the weakest across all metrics.

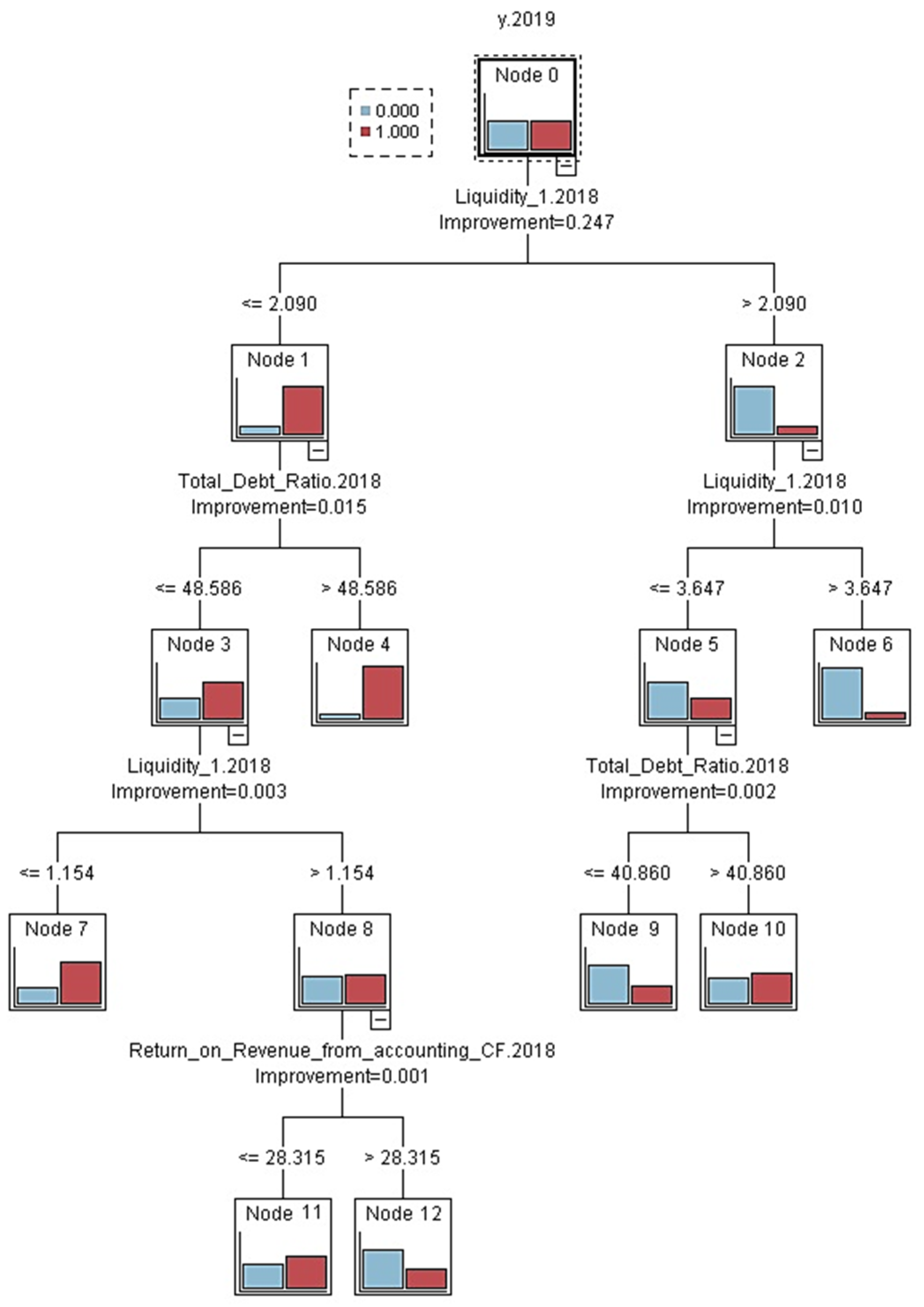

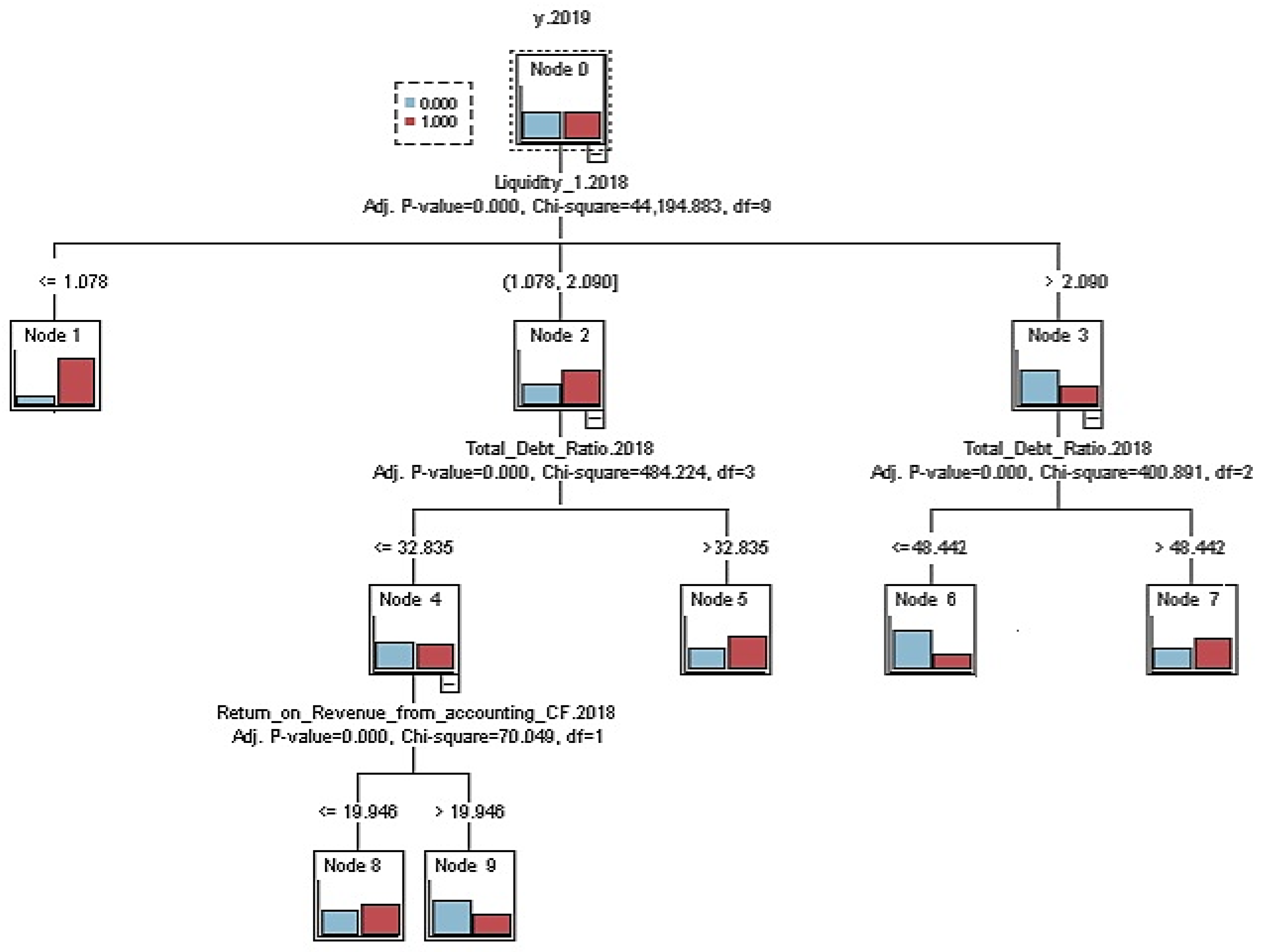

If the models are ordered by their sensitivity, accuracy, and AUC in the pre-pandemic period, first place belongs to CART, closely followed by the CHAID model. Therefore, these two models are presented in detail in

Figure 1 and

Figure 2. In all models, blue colour indicates stable companies, while red indicates companies in financial distress. Both models are very similar, with the same ratios and the same values selected by the method as the most important in distinguishing between financially healthy and distressed companies.

In the CART model, the first dividing criterion is Liquidity 1. Companies with Liquidity 1 at or below 2.090 form the left branch, while those with Liquidity 1 above 2.090 form the right branch. On the left branch, the next split is the Total Debt Ratio. If the Total Debt Ratio exceeds 48.586, the segment shifts toward higher risk without further splits. If the Total Debt Ratio is at or below 48.586, the model splits again by Liquidity 1 at 1.154. Values at or below 1.154 indicate a high-risk segment characterised by a predominance of distressed firms. For Liquidity 1 above 1.154, the last split is Return on Revenue from accounting Cash Flow, where values at or below 28.315 indicate a higher likelihood of distress, and higher values are linked to a higher share of stable companies. On the right side of the tree, node 2 is split again according to Liquidity 1, with a threshold value of 3.647. Companies with Liquidity 1 higher than 3.647 are immediately classified into the group of stable companies, whereas for those with Liquidity 1 between 2.090 and 3.647, the Total Debt Ratio becomes crucial. Among these companies, those with a Total Debt Ratio below 40.860 are classified as stable, while those with higher debt are predominantly distressed.

In the CHAID model, the first dividing criterion is again the Liquidity 1 indicator. Companies with a Liquidity 1 value of 1.078 or lower are classified as high-risk, primarily comprising distressed companies. The remaining companies with Liquidity 1 between 1.078 and 2.090 are further split by the Total Debt Ratio. If it is at most 32.835, the next division considers Return on Revenue from accounting Cash Flow. A value of up to 19.946 indicates a higher likelihood of distress, while a value above this threshold is associated with a higher proportion of stable companies. If the Total Debt Ratio exceeds 32.835 in the moderate liquidity group, the proportion of distressed companies rises even if other liquidity measures are acceptable. For companies with high liquidity (Liquidity 1 above 2.090), the Total Debt Ratio becomes a key factor again. A value of up to 48.442 typically corresponds to a segment mainly composed of stable companies. However, if the Total Debt Ratio exceeds 48.442, the risk of distress increases despite high liquidity. Based on the ranking, short-term liquidity is identified as the most important factor, followed by total debt, with Return on Revenue from accounting Cash Flow serving as a supporting indicator.

Table 4 presents the results for the pandemic period. The prediction performance of most models decreased slightly, which may be attributed to the increased economic uncertainty caused by the COVID-19 pandemic. However, it is notable that the DA model improved compared to its pre-pandemic performance. The CHAID model maintained its high performance, achieving the highest AUC, which highlights its robustness across various economic environments. The neural network achieved the highest sensitivity and the highest accuracy among the compared methods. CART delivered solid accuracy with a stable AUC, and C5.0 performed competitively as well. Logistic regression showed moderate and relatively stable results, but it still lagged behind the tree-based and neural network approaches.

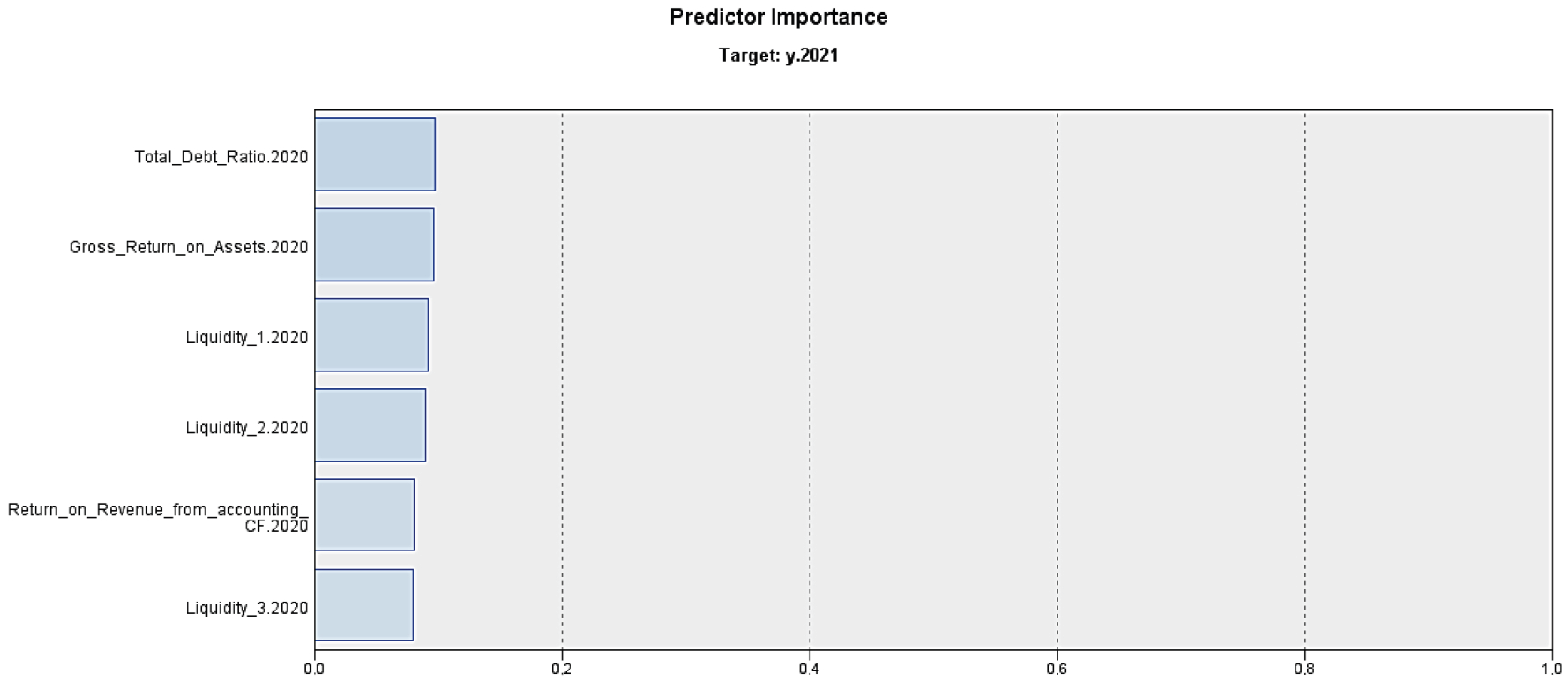

When the models are compared in terms of their evaluation measures, it can be concluded that ANN achieves the highest prediction performance, followed by the CHAID model. Nevertheless, as the ANN model is of a black-box nature, a detailed description of it cannot be provided. However, the importance of the predictors used in this model can be presented.

Figure 3 illustrates these details for the six most important variables included in the model, ordered by their predictor importance. The total importance of all predictors in the model is one.

In this case, the Total Debt Ratio and Gross Return on Assets share first place, each with an importance of 0.10, followed by Liquidity 1 and Liquidity 2, each with an importance of 0.09. Third place belongs to the Return on Revenue from accounting Cash Flow and Liquidity 3, each with an importance of 0.08.

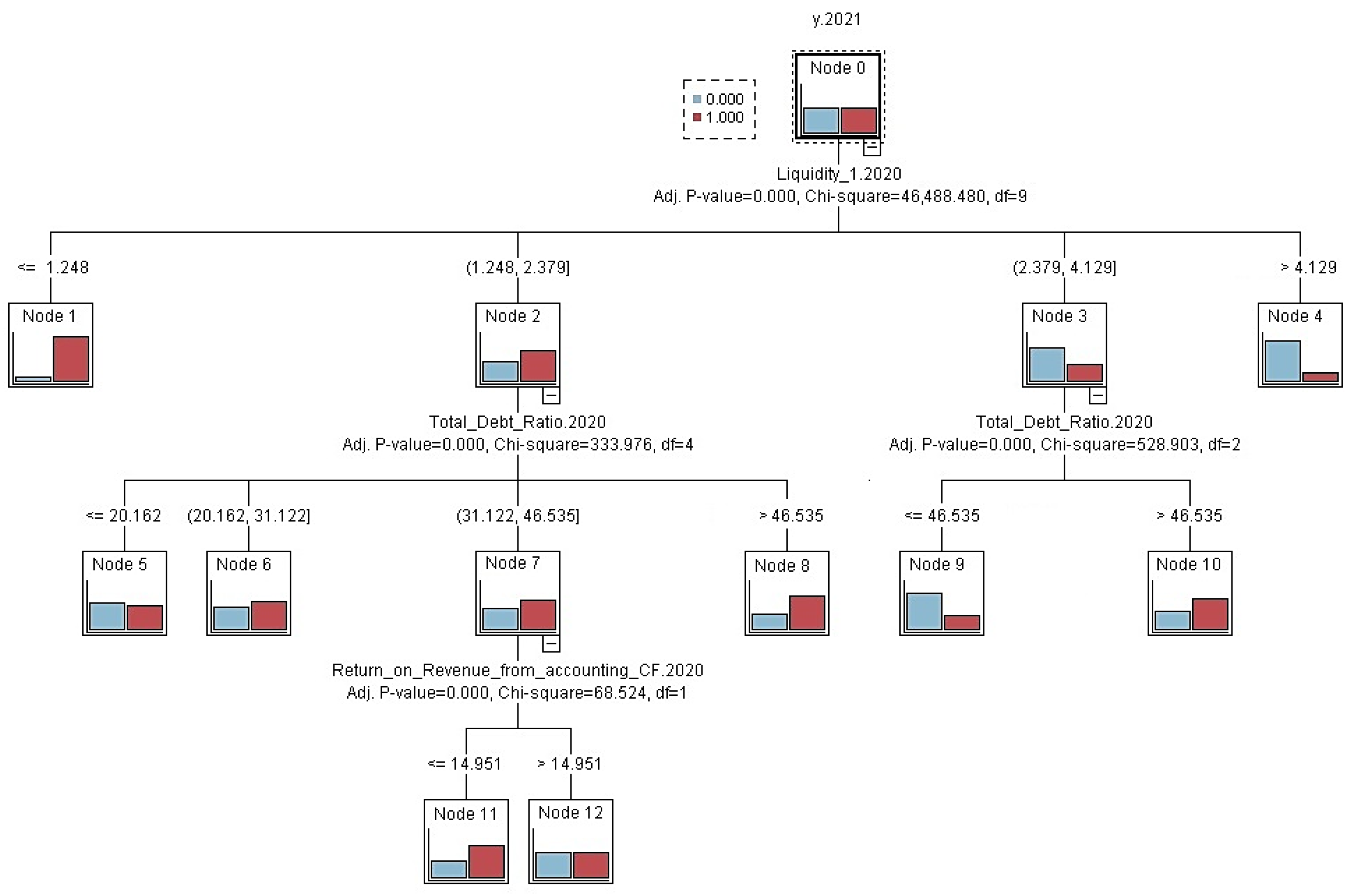

Figure 4 presents the CHAID model for the pandemic period.

As in the pre-pandemic period, the Liquidity 1 indicator maintained its position as the first dividing criterion in the CHAID tree. Companies with a Liquidity 1 value of 1.248 or less are classified as high risk and are mostly in distress. The remaining companies with Liquidity 1 between 1.248 and 2.379 are further split by the Total Debt Ratio. If the Total Debt Ratio is at most 20.162, the segment is mostly stable, with only a small share of distressed companies. By contrast, values between 20.162 and 31.122 show a slight predominance of problematic companies, indicating a slight move towards higher risk. If the Total Debt Ratio is between 31.122 and 46.535, the next split considers the Return on Revenue from accounting Cash Flow, where a value below 14.951 indicates a higher probability of distress, and a value above 14.951 indicates a higher proportion of stable companies. When the Total Debt Ratio exceeds 46.535 in this group, the proportion of companies in difficulty increases. For companies with higher liquidity, meaning Liquidity 1 between 2.379 and 4.129, the Total Debt Ratio again becomes crucial. A value of up to 46.535 typically corresponds to a segment comprising mostly stable companies, while values above this level increase the risk of distress. At very high liquidity, with Liquidity 1 above 4.129, the resulting segment is generally favourable.

In

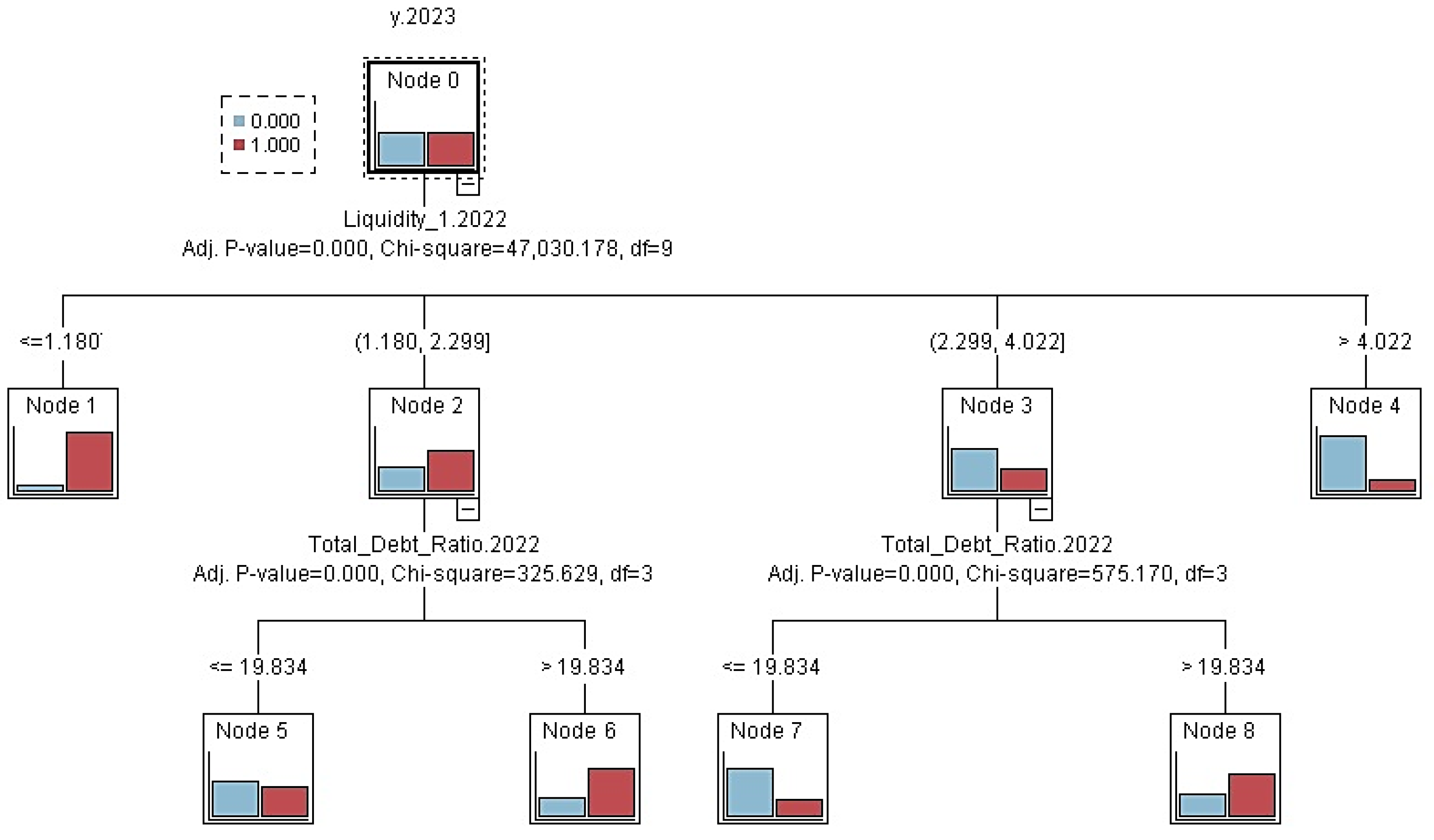

Table 5, which reflects the post-pandemic period, results indicate a partial recovery in performance for most methods. The CHAID model achieved the highest accuracy, confirming its strong overall balance. The CART model achieved the highest sensitivity and maintained a close accuracy. The neural network performed competitively across all metrics, maintaining stable accuracy. Logistic regression achieved the lowest sensitivity yet still yielded a relatively high AUC. The C5.0 tree delivered solid results, and discriminant analysis improved compared with the pandemic period but remained the least effective, particularly in identifying distressed firms. When ranking the models based on all their evaluation measures, the CHAID model should be considered the one with the highest predictive performance. Therefore,

Figure 5 presents the CHAID model for the post-pandemic period.

Comparing the model rankings based on their evaluation measures, we can conclude that CHAID achieves the highest predictive performance.

Figure 5 shows the resulting classification tree, including the nodes and the criteria used to divide them.

In the CHAID model, the first dividing criterion is again the Liquidity 1 indicator. Companies with a liquidity value of 1.180 or less are classified as high risk and are mostly in distress. The remaining companies with liquidity between 1.180 and 2.299 are further split by the Total Debt Ratio. If the Total Debt Ratio is at most 19.834, the segment is generally stable; however, values above 19.834 are associated with a higher proportion of distressed firms. For companies with liquidity between 2.299 and 4.022, the total debt ratio becomes crucial again. A debt level of 19.834 or lower indicates a segment dominated by stable companies, while a level above 19.834 increases the risk of distress. With very high liquidity above 4.022, the resulting segment is generally favourable, with a dominance of stable companies.

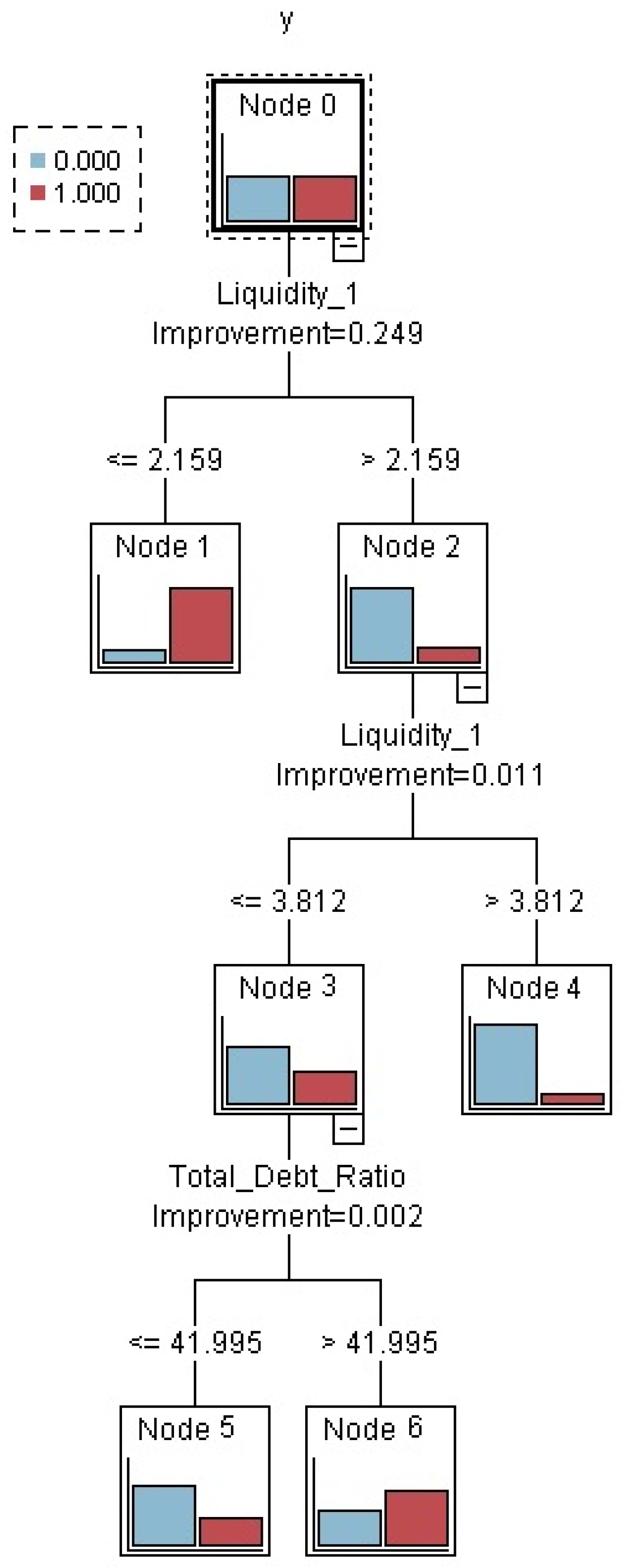

Table 6 summarises the results of the comprehensive model trained on data covering all periods. Discriminant analysis performed worst across all measures. The CHAID model again achieved the highest AUC value together with high accuracy and sensitivity, confirming its strong performance. The CART model and the neural network also achieved very good results, combining high sensitivity with solid accuracy. The C5.0 tree was the weakest among the tree models, but its results remained competitive. The performance of this comprehensive model was not significantly different from that of the separate models. This suggests that one comprehensive model can be as effective as individual, period-specific models. In this case, across all evaluation metrics, CART should be considered the most effective method, while DA is the least effective.

Figure 6 presents the comprehensive CART model for the entire period under review, which has the best predictive properties.

In the comprehensive CART model, the first dividing criterion is also the Liquidity 1 indicator. Companies with a Liquidity 1 value at or below 2.159 are classified as high risk, with a dominance of companies in distress. For the remaining companies with Liquidity 1 above 2.159, the tree splits again by Liquidity 1 at 3.812. When Liquidity 1 is at or below 3.812, the next criterion is the Total Debt Ratio. If the Total Debt Ratio is 41.995 or lower, the segment consists mainly of stable companies, whereas values above 41.995 exhibit a higher proportion of companies in difficulty. For companies with high liquidity, with Liquidity 1 above 3.812, the resulting segment is generally favourable, with a dominance of stable companies.
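The splitting rules of the comprehensive CART model can be written as a simple decision function. This is only a sketch of the verbal description: each leaf returns the class that the text reports as dominant in the segment, the function and argument names are hypothetical, and the fitted tree in Figure 6 may contain details not captured here.

```python
def cart_comprehensive_segment(liquidity_1, total_debt_ratio):
    """Return the dominant class of the segment a company falls into."""
    if liquidity_1 <= 2.159:
        # Low liquidity: high-risk segment dominated by distressed companies.
        return "distressed"
    if liquidity_1 <= 3.812:
        # Medium liquidity: the Total Debt Ratio decides.
        return "stable" if total_debt_ratio <= 41.995 else "distressed"
    # High liquidity: segment dominated by stable companies.
    return "stable"


segments = [
    cart_comprehensive_segment(1.0, 10.0),
    cart_comprehensive_segment(3.0, 40.0),
    cart_comprehensive_segment(3.0, 50.0),
    cart_comprehensive_segment(5.0, 90.0),
]
```

Written this way, the tree uses only two ratios and three thresholds, which illustrates why the tree-based models are easy to interpret compared with the neural network.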

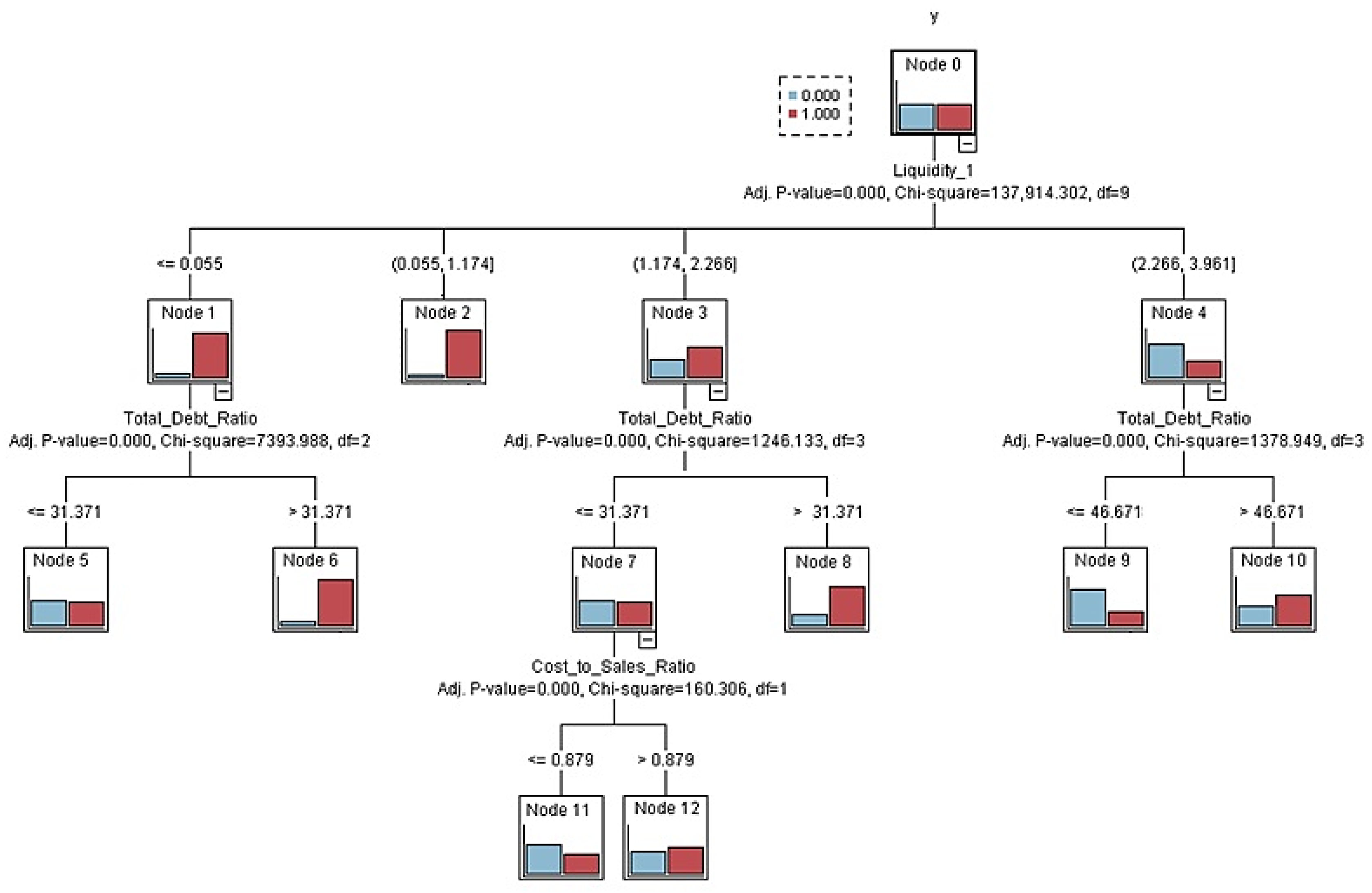

Figure 7 presents the CHAID comprehensive model for the entire period under review, which has the second-highest prediction performance.

In the CHAID model, the first dividing criterion is the Liquidity 1 indicator. Companies with Liquidity 1 equal to or below 0.055 form a separate group, characterised by a higher proportion of companies in difficulty. This group is further divided according to the Total Debt Ratio. When the Total Debt Ratio exceeds 31.371, the segment is clearly dominated by companies in difficulty. When it is at or below 31.371, stable companies dominate. Companies with Liquidity 1 in the range of 0.055 to 1.174 form a separate group with an increased proportion of distressed companies. For companies with Liquidity 1 between 1.174 and 2.266, the next split is based on the Total Debt Ratio. If the Total Debt Ratio is 31.371 or lower, the segment is mostly stable and branches further according to the Cost-to-Sales Ratio indicator. At values above 0.879, the proportion of companies in difficulty increases, while at values equal to or lower than 0.879, companies without problems dominate. By contrast, Total Debt Ratio values above 31.371 indicate a segment with a significant number of companies in difficulty. For companies with higher liquidity, with Liquidity 1 between 2.266 and 3.961, the Total Debt Ratio becomes decisive again. A Total Debt Ratio at or below 46.671 corresponds to a segment dominated by stable firms, whereas a value above 46.671 increases the probability of distress despite higher liquidity.

To supplement the detailed overview of the splitting rules, the key predictors identified by the CART, CHAID, and ANN models in each period are summarised in

Table 7. In all decision trees, Liquidity 1 and the Total Debt Ratio consistently appear as the primary splitting variables, while profitability measures such as Return on Revenue from accounting Cash Flow are selected as secondary predictors. These recurring patterns suggest that the liquidity position of firms and total debt remain key determinants of financial distress regardless of the economic phase.

Comparison of Individual Models and the Comprehensive All-Periods Model

In

Table 8, the evaluation of two modelling approaches is summarised: (1) separate models estimated for each period, and (2) a unified comprehensive model estimated on the full sample with a period indicator. The first approach aims to capture phase-specific financial patterns, whereas the second applies a consistent predictive framework across all periods. Across periods, CHAID, CART, and ANN dominate, while DA is clearly the weakest, and LR is consistently average. It is essential to note that the period-indicating variables were not selected as classification criteria in the tree models, indicating their limited added value for classification purposes. They may be present among the inputs in the ANN, but their high significance has not been proven, and their role cannot be directly interpreted due to the model’s black-box nature. The table also shows that the comprehensive model provides the best overall performance. Within this approach, CART achieves the best balance between sensitivity and accuracy, while CHAID is a close competitor, achieving the highest AUC.

Since the comprehensive CART model was identified as the best overall, its performance was cross-evaluated separately in the three periods: pre-pandemic, pandemic, and post-pandemic. This evaluation was performed by comparing the actual state of the companies with the predictions of the comprehensive model, followed by calculating the sensitivity, accuracy, and AUC separately for each period.

Table 9 presents these values. Overall, the model’s performance can be considered remarkably stable across periods. Sensitivity varies only slightly, so the small decline during the pandemic is insignificant. Accuracy is virtually unchanged, indicating consistent overall classification quality. AUC remains stable across all periods, and the Gini index is likewise steady, further confirming stable discriminatory power.
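The per-period cross-evaluation can be sketched as follows. The example groups the predictions of a single model by period and computes sensitivity and accuracy within each group; it also assumes the conventional relationship Gini = 2 × AUC − 1, as the definition of the Gini index is not given in the text. The record layout and function names are illustrative.

```python
from collections import defaultdict


def metrics_by_period(records):
    """records: iterable of (period, actual, predicted), with 1 = distressed.

    Each period must contain at least one distressed company,
    otherwise sensitivity is undefined.
    """
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "correct": 0, "n": 0})
    for period, actual, predicted in records:
        c = counts[period]
        c["n"] += 1
        c["correct"] += int(actual == predicted)
        if actual == 1:
            c["tp" if predicted == 1 else "fn"] += 1
    return {
        period: {
            "sensitivity": c["tp"] / (c["tp"] + c["fn"]),
            "accuracy": c["correct"] / c["n"],
        }
        for period, c in counts.items()
    }


def gini_from_auc(auc):
    # Assumed definition: Gini index as a linear rescaling of AUC.
    return 2 * auc - 1


records = [
    ("pre-pandemic", 1, 1), ("pre-pandemic", 1, 0),
    ("pre-pandemic", 0, 0), ("pre-pandemic", 0, 0),
    ("pandemic", 1, 1), ("pandemic", 0, 1),
]
per_period = metrics_by_period(records)
```

Because the Gini index under this definition is a fixed transformation of AUC, stability in one measure implies stability in the other.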

5. Conclusions

This study examined how a change in modelling approach impacts the ability to predict a company’s financial state across different economic periods. Our findings indicate no significant differences in prediction accuracy between the period-specific models and the comprehensive model covering all periods. Even though the economic disruptions caused by the COVID-19 pandemic significantly affected companies’ financial performance, the use of a period-specific model, or at least a period indicator variable, helps maintain the models’ predictive accuracy.

Hypothesis H1, which assumed that the relative ranking of the predictive performance of modelling techniques would vary significantly across economic periods, was therefore only partially supported. During the pandemic, a significant improvement in discriminant analysis was observed compared to the periods before and after, while the performance of other methods changed only slightly, leaving the overall ranking largely stable across periods. Hypothesis H2 was not supported, as the period-specific models did not achieve higher accuracy than a comprehensive model that included a period indicator. Across all techniques evaluated, CHAID slightly outperformed other models in terms of sensitivity, accuracy, and AUC, while discriminant analysis consistently showed the lowest predictive performance. These results suggest that a robustly constructed comprehensive model can offer equally reliable predictive accuracy and provide a simpler yet practically beneficial approach. As a result, practitioners, investors, and regulators can confidently apply such comprehensive models, even during periods of economic uncertainty.

This study also has several limitations that suggest directions for further research. First, the models were evaluated using a 50:50 train–test split. In the future, the performance of the models created in this study should be evaluated either with a cross-validation technique or by verifying the accuracy of their predictions on new data from subsequent years. Second, missing values in the financial ratios were imputed with industry averages, which may weaken variability and relationships between variables. In further research, more advanced imputation methods, such as model-based imputation, could help mitigate the bias caused by the imputation. Third, the modelling framework does not specify the causal relationships between a company’s financial state and financial indicators or their structural interactions with pandemic-related effects. Instead, these interdependencies are absorbed implicitly through the financial ratios and the COVID-19 dummy variable. While this approach is appropriate for predictive modelling, it does not capture the theoretical directionality of financial relationships, which represents a methodological limitation. Fourth, although we compared six popular techniques (CART, CHAID, C5.0, ANN, LR, DA), we did not explore more complex techniques, such as ensemble modelling, gradient boosting, or hybrid models, which often improve performance but are hindered by their low interpretability. Fifth, the models predict only one year ahead, and predictive performance was deliberately prioritised over interpretability. Sixth, the set of potential financial distress predictors consists exclusively of firm-level ratios. Adding macroeconomic and sectoral covariates, such as demand shocks and policy measures, and their interaction with firm characteristics, could shed light on why performance remains stable across periods and whether there is heterogeneity by size or industry.
Furthermore, classifying 2022–2023 as a post-pandemic period is an oversimplification. These years were still affected by major macroeconomic shocks, notably the war in Ukraine, rising energy prices, and high inflation. Therefore, the post-pandemic period in this study should be interpreted as a phase with overlapping shocks rather than a completely stable regime, and future research could refine the periodisation or explicitly model multiple concurrent shocks to verify the robustness of the results. Another limitation is the very high variability and extreme values observed in some financial indicators. These wide ranges are caused by companies with extreme but economically acceptable indicator values, which are typical for populations of small and medium-sized enterprises with small denominators, large losses, or one-off gains. In the environment of SMEs, even slight changes in income, assets, or liabilities can translate into very large movements in financial ratios, so such variability does not usually indicate errors in the data but rather reflects the underlying financial reality. In addition, extreme values may signal emerging financial distress and therefore provide essential information for predictive modelling. Removing these observations would eliminate precisely the type of signals that financial distress models seek to detect and would bias the sample toward financially stable firms. In this study, only observations with economically inconsistent or technically incorrect values in mandatory items were removed, while extreme but acceptable indicator values were retained, and no winsorisation or robust transformation of indicators was used. As a result, the reported standard deviations remain high, and future research could use alternative scaling, trimming, or winsorisation procedures to assess the sensitivity of the models to such extremes. Finally, our findings relate most directly to Slovak SMEs. Replication in other countries, legal environments, and with alternative definitions of distress would test the transferability and boundary conditions.