Evaluating the Performance of Eigenface, Fisherface, and Local Binary Pattern Histogram-Based Facial Recognition Methods under Various Weather Conditions

Abstract

1. Introduction

- Easy to implement and most widely used

- Demonstrate more stable performance on small datasets

- Run smoothly on an average CPU-based computer, Chromebook, tablet, or mobile device, and require less computation time

2. Literature Review

2.1. Indoor vs. Unconstrained Environment

2.2. Effect of Fog on Real Time Images

2.3. Effect of Rain on Real-Time Image

3. Dataset Creation

Dataset Description

Database Comparison

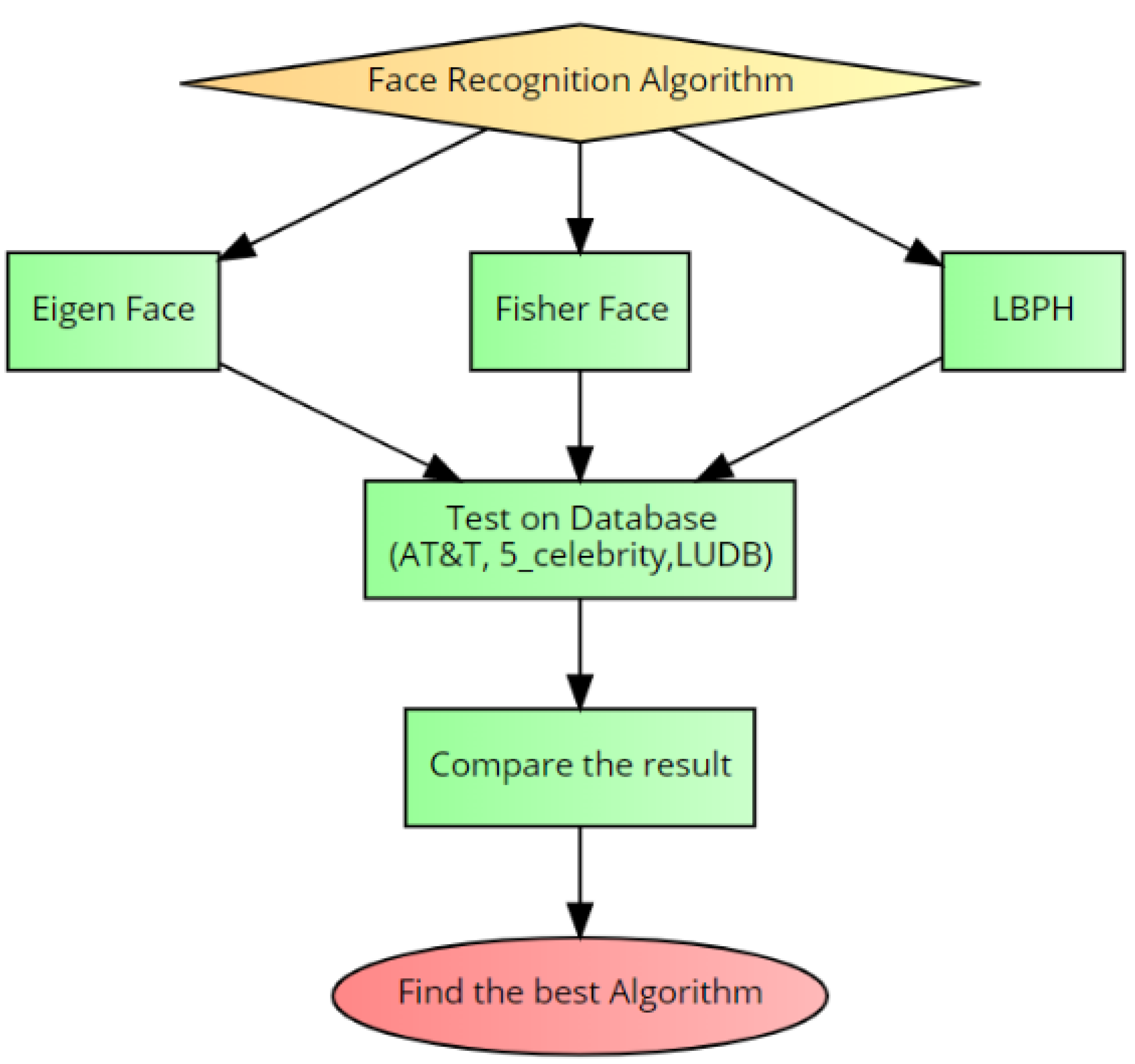

4. Methodology

4.1. Eigenfaces

4.2. Fisherfaces

4.3. Local Binary Pattern Histogram

5. Results

6. Discussion

- Because the experiment was conducted during the summer, it was not possible to capture images under real foggy weather. Artificial fog was therefore added to the images, and the fog experiments were run on these synthetic images.

- All three algorithms performed very poorly on the 5_Celebrity dataset because of its many pose variations and illumination conditions. Other face recognition (FR) techniques, such as CNN- and RNN-based models, could be applied to this experiment and might achieve higher accuracy than the results presented in this paper.

- The dataset was relatively small, with only 15 male and 2 female participants. Although the number of images was sufficient for a pilot test, the effect of gender on face recognition was not examined because there were too few female participants.

- The dataset also contains images with different emotions, but the results are reported over all images rather than broken down by individual emotion or face angle.
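The artificial-fog adjustment mentioned in the first limitation can be illustrated with a minimal sketch. It assumes the standard atmospheric scattering model, in which each foggy pixel is a blend of the original intensity and a uniform airlight value. This is not necessarily the procedure used to build the dataset, and the function name `add_synthetic_fog` and its parameters `transmission` and `airlight` are hypothetical.

```python
def add_synthetic_fog(image, transmission=0.6, airlight=255):
    """Return a fogged copy of a grayscale image.

    image        : list of rows, each a list of ints in 0..255
    transmission : assumed fraction of scene light reaching the camera
                   (1.0 = clear, 0.0 = fully fogged); hypothetical parameter
    airlight     : assumed brightness of the fog itself; hypothetical parameter
    """
    if not 0.0 <= transmission <= 1.0:
        raise ValueError("transmission must be in [0, 1]")

    fogged = []
    for row in image:
        # Scattering model: I_fog = I * t + A * (1 - t)
        fogged.append([
            int(round(pixel * transmission + airlight * (1.0 - transmission)))
            for pixel in row
        ])
    return fogged


# Toy 2 x 2 grayscale image, for illustration only.
sample = [[0, 100], [200, 255]]
foggy = add_synthetic_fog(sample, transmission=0.5)
print(foggy)
```

Lower `transmission` values pull every pixel toward the airlight value, which reduces contrast in the way dense fog does. A uniform transmission is the simplest choice; real fog varies with scene depth.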

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Database | Subjects | Images | Size (pixels) | Unconstrained |
|---|---|---|---|---|
| AT&T | 40 | 400 | 92 × 112 | No |
| 5_Celebrity | 5 | 118 | Not equal | Yes |
| LUDB | 17 | 250 | 500 × 500 | Yes |

| Algorithm | Accuracy | Precision | Recall | F1 Score | Execution Time |
|---|---|---|---|---|---|
| EigenFace | 99% ± 0.87% | 0.985 ± 0.01 | 0.99 ± 0.009 | 0.987 ± 0.010 | 2.74 s |
| FisherFace | 100% | 1 | 1 | 1 | 1.37 s |
| LBPH | 98% ± 1.24% | 0.97 ± 0.015 | 0.98 ± 0.012 | 0.97 ± 0.015 | 1.84 s |

| Algorithm | Accuracy | Precision | Recall | F1 Score | Execution Time |
|---|---|---|---|---|---|
| EigenFace | 33% ± 7.18% | 0.318 ± 0.072 | 0.33 ± 0.072 | 0.305 ± 0.073 | 2.73 s |
| FisherFace | 37% ± 6.96% | 0.398 ± 0.07 | 0.37 ± 0.07 | 0.352 ± 0.071 | 1.37 s |
| LBPH | 40% ± 6.8% | 0.36 ± 0.07 | 0.4 ± 0.068 | 0.358 ± 0.07 | 1.95 s |

| Algorithm | Accuracy | Precision | Recall | F1 Score | Execution Time |
|---|---|---|---|---|---|
| EigenFace | 86% ± 3.3% | 0.803 ± 0.04 | 0.86 ± 0.033 | 0.82 ± 0.037 | 3.0167 s |
| FisherFace | 84% ± 3.51% | 0.781 ± 0.041 | 0.84 ± 0.035 | 0.801 ± 0.04 | 1.44 s |
| LBPH | 95% ± 1.96% | 0.925 ± 0.024 | 0.95 ± 0.02 | 0.934 ± 0.023 | 2.09 s |
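For reference, the Precision, Recall, and F1 Score columns can be computed from per-image predictions as sketched here. The sketch assumes support-weighted averaging over subjects. That assumption is suggested, but not confirmed, by the fact that the reported recall matches the reported accuracy in each results table, which is a property of weighted-average recall. The function name `weighted_metrics` and the toy labels are illustrative only.

```python
from collections import Counter


def weighted_metrics(y_true, y_pred):
    """Accuracy plus support-weighted precision, recall, and F1.

    Weighted averaging is an assumption; the averaging scheme is not
    stated alongside the tables.
    """
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equal length")

    total = len(y_true)
    support = Counter(y_true)      # images per true subject
    predicted = Counter(y_pred)    # images assigned to each subject
    true_pos = Counter(t for t, p in zip(y_true, y_pred) if t == p)

    accuracy = sum(true_pos.values()) / total
    precision = recall = f1 = 0.0
    for label, n in support.items():
        tp = true_pos[label]
        p = tp / predicted[label] if predicted[label] else 0.0
        r = tp / n
        f = 2 * p * r / (p + r) if (p + r) else 0.0
        weight = n / total         # weight each subject by its support
        precision += weight * p
        recall += weight * r
        f1 += weight * f

    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Toy labels for two subjects, for illustration only.
y_true = ["a", "a", "b", "b"]
y_pred = ["a", "b", "b", "b"]
scores = weighted_metrics(y_true, y_pred)
print(scores)
```

With weighted averaging, recall always equals accuracy, so the two columns carry the same information under this assumption; precision and F1 can still differ from it.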

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ahsan, M.M.; Li, Y.; Zhang, J.; Ahad, M.T.; Gupta, K.D. Evaluating the Performance of Eigenface, Fisherface, and Local Binary Pattern Histogram-Based Facial Recognition Methods under Various Weather Conditions. Technologies 2021, 9, 31. https://doi.org/10.3390/technologies9020031
