SQUbot: Enhancing Student Support Through a Personalized Chatbot System

Abstract

1. Introduction

1. Newly admitted students are not aware of the academic regulations;

2. Some students feel shy about visiting advisors;

3. Some students have language difficulties and cannot express their requirements directly to advisors;

4. Sometimes students need an immediate answer that advisors cannot provide due to their schedules and commitments.

- How can a bilingual AI chatbot be designed to support Arabic and English language queries effectively in a university setting?

- What are the technical and linguistic challenges of implementing Arabic NLP using IBM Watson, and how can they be addressed?

- How effective is the chatbot in responding to real-world student queries, and what are the limitations of its current implementation?

- Development of a robust chatbot using IBM Watson Assistant supporting both English and Arabic languages;

- Capability of understanding a wide range of simple and complex queries;

- Ability to customize responses based on the individual needs of the students;

- Development of an API to connect the IBM Watson cloud to the university’s data center to maintain users’ data security and privacy.

2. Literature Review

3. Technical Implementation Details

3.1. Training Data Configuration

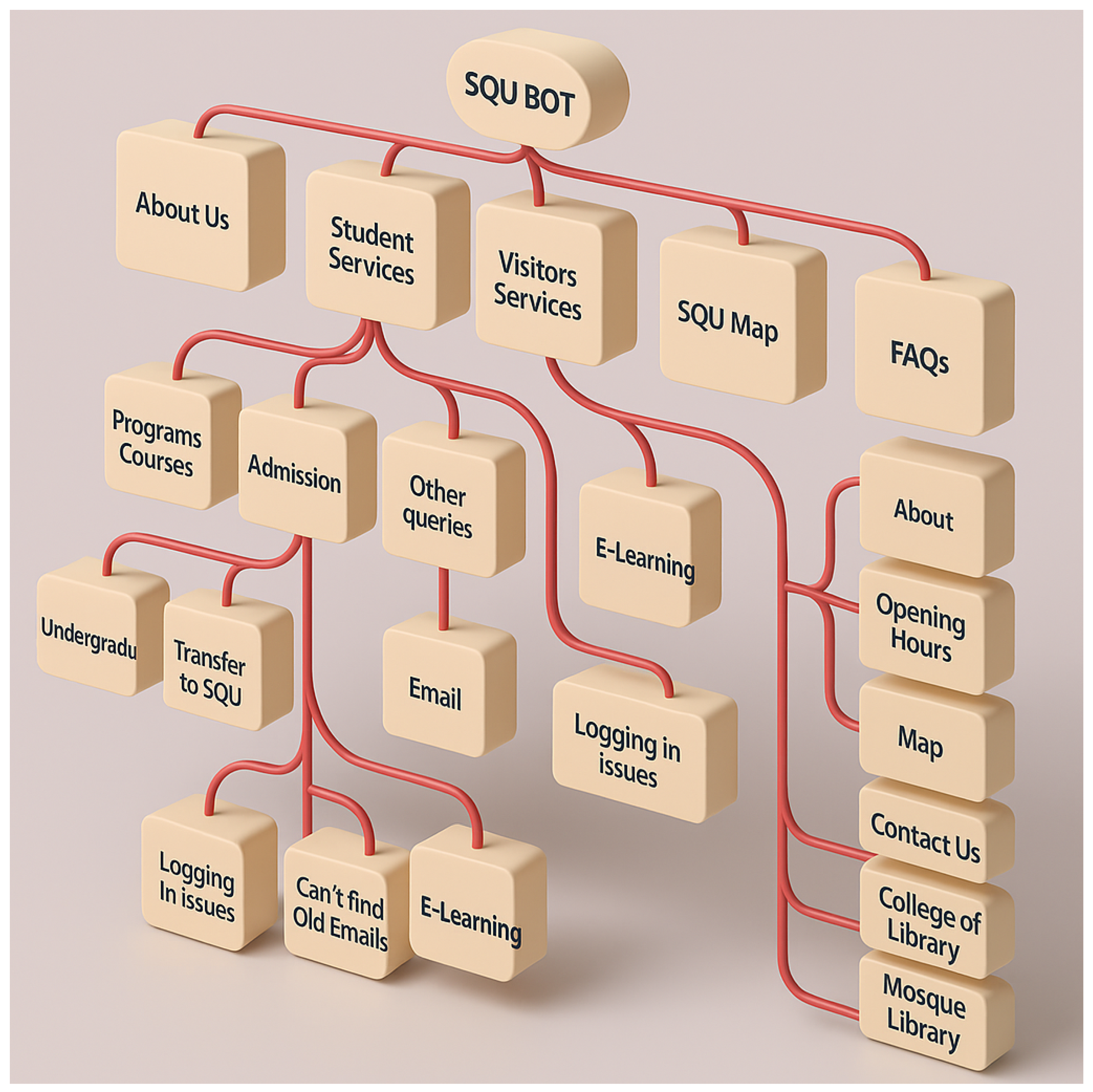

- Our chatbot was configured with over 90 distinct intents, designed to cover both general inquiries and personalized services relevant to university life.

- Each intent was trained using 15–25 sample sentences, created carefully in both English and Arabic, to reflect a wide range of natural student phrasing, including the usual local jargon used within the university.

- The overall training data consisted of the following:

  - Around 2500 manually extracted entries from the SQU website for general services.

  - 45 intents tailored specifically for Arabic, incorporating cultural and linguistic nuances relevant to the region.

  - For personalized services, in addition to intents and entities, the chatbot relied on real-time integration with the university’s internal systems through a secure API. The API payload was built from the entity values extracted from the conversation.
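The per-intent example counts described above lend themselves to a quick sanity check before training. The following is a minimal sketch, not the authors' tooling: it assumes the training utterances are available as (utterance, intent) pairs, a layout chosen only for illustration, and it flags intents that fall outside the 15–25 range reported above. The utterances are placeholders.

```python
from collections import Counter

# Range of sample sentences per intent reported in the text.
MIN_EXAMPLES, MAX_EXAMPLES = 15, 25


def count_examples(rows):
    """Count non-empty training utterances per intent.

    `rows` is an iterable of (utterance, intent) pairs; this layout is an
    assumption made for the sketch, not the authors' storage format.
    """
    return Counter(intent for utterance, intent in rows if utterance.strip())


def out_of_range(counts, low=MIN_EXAMPLES, high=MAX_EXAMPLES):
    """Return the intents whose example count falls outside [low, high]."""
    return sorted(intent for intent, n in counts.items() if not low <= n <= high)


# Placeholder data: 18 utterances for one intent, 9 for another.
rows = [(f"timetable phrasing {i}", "#ShowTimetable") for i in range(18)]
rows += [(f"course phrasing {i}", "#CourseDetails") for i in range(9)]
print(out_of_range(count_examples(rows)))  # ['#CourseDetails']
```

A check of this kind could be run separately on the English and Arabic sets, since the text notes that Arabic intents needed more example variations.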

3.2. Watson Assistant Pipeline Configuration

- We used IBM Watson’s Natural Language Understanding (NLU) engine as the core of the assistant.

- Intent recognition was based on a confidence-scoring model, with a custom threshold of 0.65 (higher than the default of 0.2) to strike a balance between precision and fallback handling.

- Over 15 custom entities were defined (e.g., @empname, @coursecode, @degreetype), each populated with relevant synonyms and variants to enhance recognition accuracy.

- The dialog flow was structured using over 200 nodes, combining condition-based branching, fallback prompts, and multilingual support, including button-based disambiguation where appropriate.
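The effect of the 0.65 confidence threshold can be illustrated with a small routing function. This is a sketch under stated assumptions rather than the deployed dialog logic: the recognizer output is assumed to be a list of intent/confidence dictionaries, and `FALLBACK` is a hypothetical label for the fallback branch.

```python
CONFIDENCE_THRESHOLD = 0.65  # custom threshold reported in the text
FALLBACK = "fallback"        # hypothetical name for the fallback branch


def route(intents, threshold=CONFIDENCE_THRESHOLD):
    """Pick the top-scoring intent, or the fallback if it is not confident enough.

    `intents` is a list of {"intent": str, "confidence": float} dicts,
    an assumed shape for the recognizer's output.
    """
    if not intents:
        return FALLBACK
    top = max(intents, key=lambda item: item["confidence"])
    return top["intent"] if top["confidence"] >= threshold else FALLBACK


print(route([{"intent": "ShowTimetable", "confidence": 0.91}]))  # ShowTimetable
print(route([{"intent": "CourseDetails", "confidence": 0.40}]))  # fallback
```

Raising the threshold sends more borderline inputs to the fallback prompt, which trades some coverage for fewer wrong answers.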

3.3. Challenges in Arabic NLP

- Morphological richness: Arabic’s structure required careful synonym expansion and multiple phrasing examples to ensure proper intent recognition.

- Dialectal variation: Although we focused on Modern Standard Arabic (MSA) for consistency, we also included standard Gulf dialect expressions to make the chatbot feel more natural and relatable to local users.

- Code-switching behavior: Many student queries included a mix of Arabic and English. Handling such input required manual tuning and diverse training samples, as out-of-the-box support was limited.

- Training effort: We found that Arabic intents required more example variations than their English counterparts to achieve comparable performance, which was an important finding for future multilingual bot development.

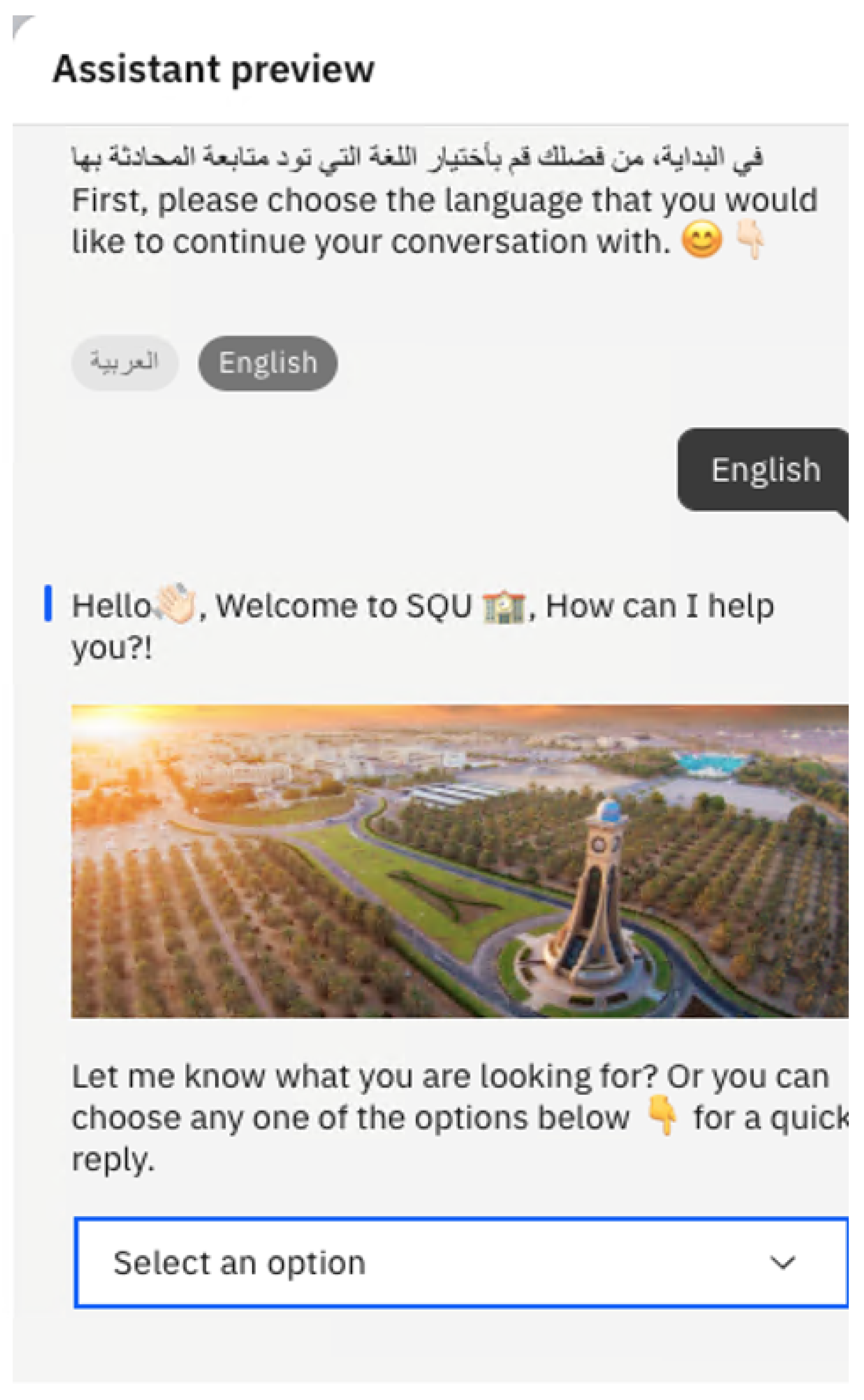

- Mixed Arabic–English queries: The user selects either Arabic or English at the beginning of the conversation, and each language has its own intent and entity flows. Mixed Arabic–English input within a single query is not explicitly supported; it typically triggers a clarification prompt or the fallback. During pilot testing, most mixed input consisted of Arabic sentences that borrowed domain-specific English terms (such as course codes or GPA). In future work, a lightweight language-identification step and code-switched training utterances for these standard terms could improve mixed-input handling.
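One possible form of the lightweight language-identification step mentioned above is a script-based check that counts Arabic and Latin letters. The sketch below is an assumption about how such a step might look, not part of the implemented system; the function names and the 0.2 `mixed_ratio` cut-off are illustrative.

```python
def script_counts(text):
    """Count Arabic-script and Latin-script letters in `text`."""
    arabic = latin = 0
    for ch in text:
        if not ch.isalpha():
            continue  # skip digits, spaces, and punctuation
        if "\u0600" <= ch <= "\u06FF":  # main Unicode Arabic block
            arabic += 1
        elif ch.isascii():
            latin += 1
    return arabic, latin


def identify_language(text, mixed_ratio=0.2):
    """Return "ar", "en", "mixed", or "unknown" for a single user input.

    `mixed_ratio` is an illustrative cut-off: the input counts as mixed when
    the minority script supplies at least this share of the letters.
    """
    arabic, latin = script_counts(text)
    total = arabic + latin
    if total == 0:
        return "unknown"
    if min(arabic, latin) / total >= mixed_ratio:
        return "mixed"
    return "ar" if arabic > latin else "en"


print(identify_language("Show my timetable"))  # en
print(identify_language("ما هو جدولي"))         # ar
print(identify_language("ما هو GPA"))           # mixed
```

An input labelled "mixed" could then be routed to the Arabic flow with the English terms treated as entity values, which matches the pilot observation that the English fragments were mostly jargon.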

4. Conversational AI: Selecting a Platform

4.1. Key Factors

- Features

- API Integration

- Localization

- Cost and Effort

- Data Privacy

4.2. Selection Process

- One-to-One Demo Sessions

- Trial Implementation

- Documentation Study and Community Survey

4.3. Our Understanding

- All of the evaluated platforms can be used to build chatbots ranging from basic to advanced and to automate an organization’s customer service.

- Each platform has its own implementation approach, including its concepts and design, which makes some of them somewhat hard to follow.

- Each has its pros and cons, and a strength in one platform is often a weakness in another. For example, a platform that is easier to use tends to offer less customization, and vice versa.

- API integration is available in all of them, but the level of flexibility differs.

- Some of them even have templates for educational institutions, which can be customized according to requirements.

4.4. Final Selection

- Google DialogFlow

- IBM Watson

- More flexible API integration with university systems.

- Cloud-based platforms with a variety of products and services for future growth.

- Follow industry standards and best practices.

- Have a subscription-based pricing model.

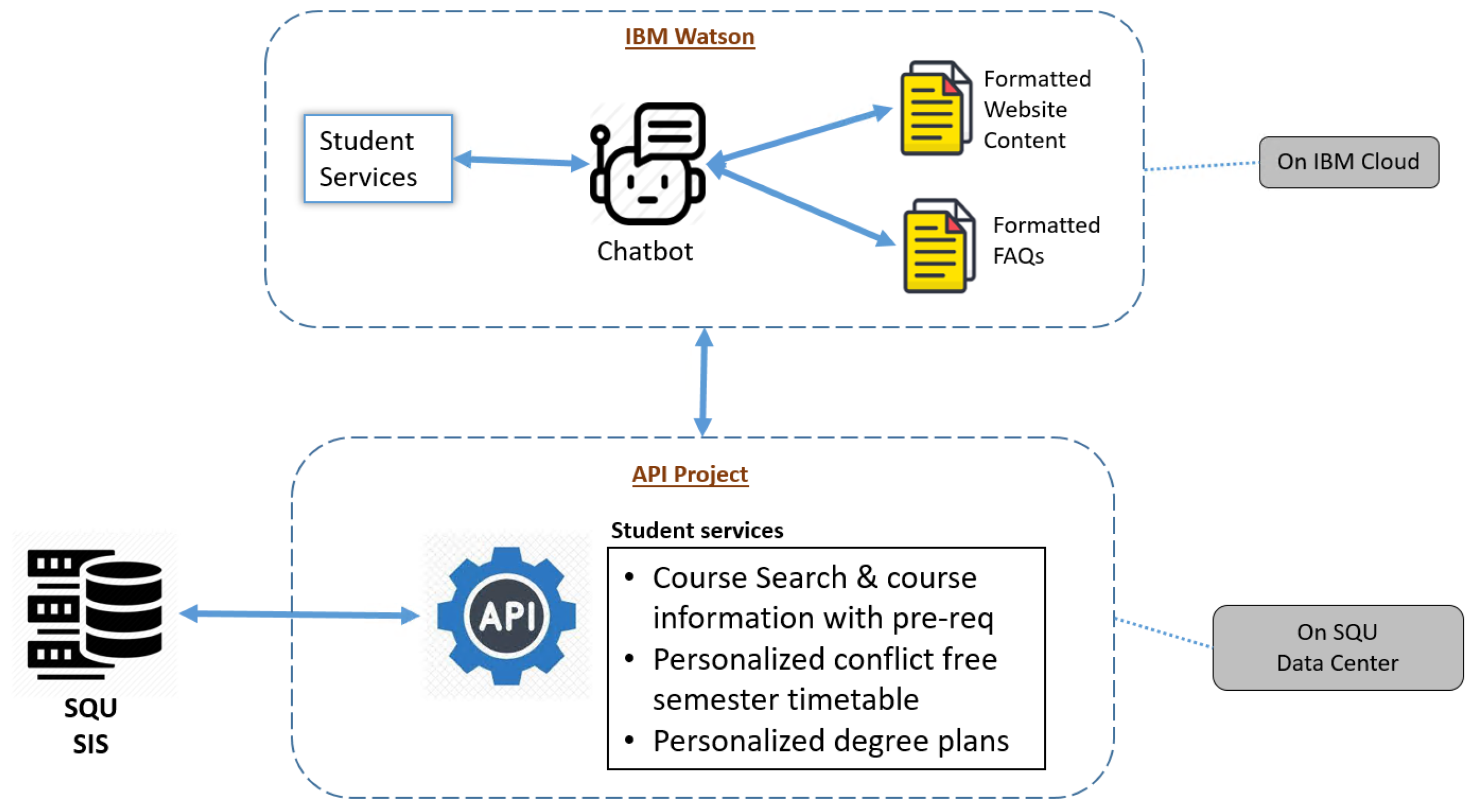

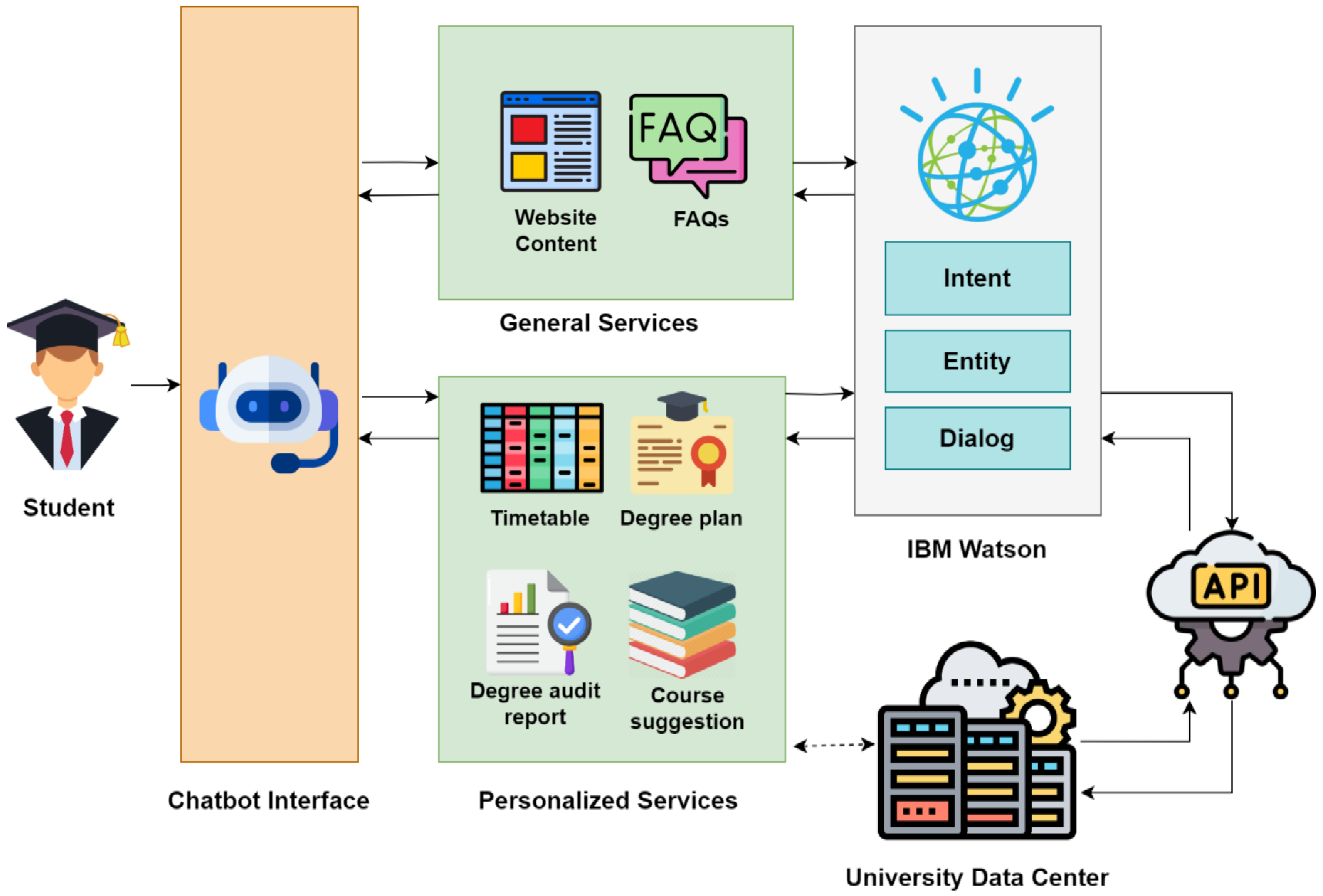

5. Design, Implementation, and Proposed System Architecture

- AI Chatbot (IBM Watson).

- API Project.

5.1. System Overview

5.2. Data Sources

- General Services: Information regarding university policies, calendars, contact directories, and course offerings was manually extracted from the SQU website and structured into training data for IBM Watson.

- Personalized Services: For personalized queries (e.g., retrieving student timetables, degree plans, or audit reports), data is accessed via a custom API layer that connects IBM Watson with secure university databases. No sensitive data is stored in the cloud, ensuring compliance with privacy standards.

5.3. Intents

5.4. Entities

5.5. Dialog Management

5.5.1. General Services

- Search a Course: The SQUbot allows users to search for relevant courses by typing a general phrase with the search keyword within it. The bot will connect to the API to run the search process and provide the user with a list of courses matching the keyword in the user’s query. For example, a user may request the programming-related courses offered by the University, and the bot will provide a list of all the courses related to programming along with their course codes. This feature saves students valuable time and effort by eliminating the need to browse or search through course catalogs manually.

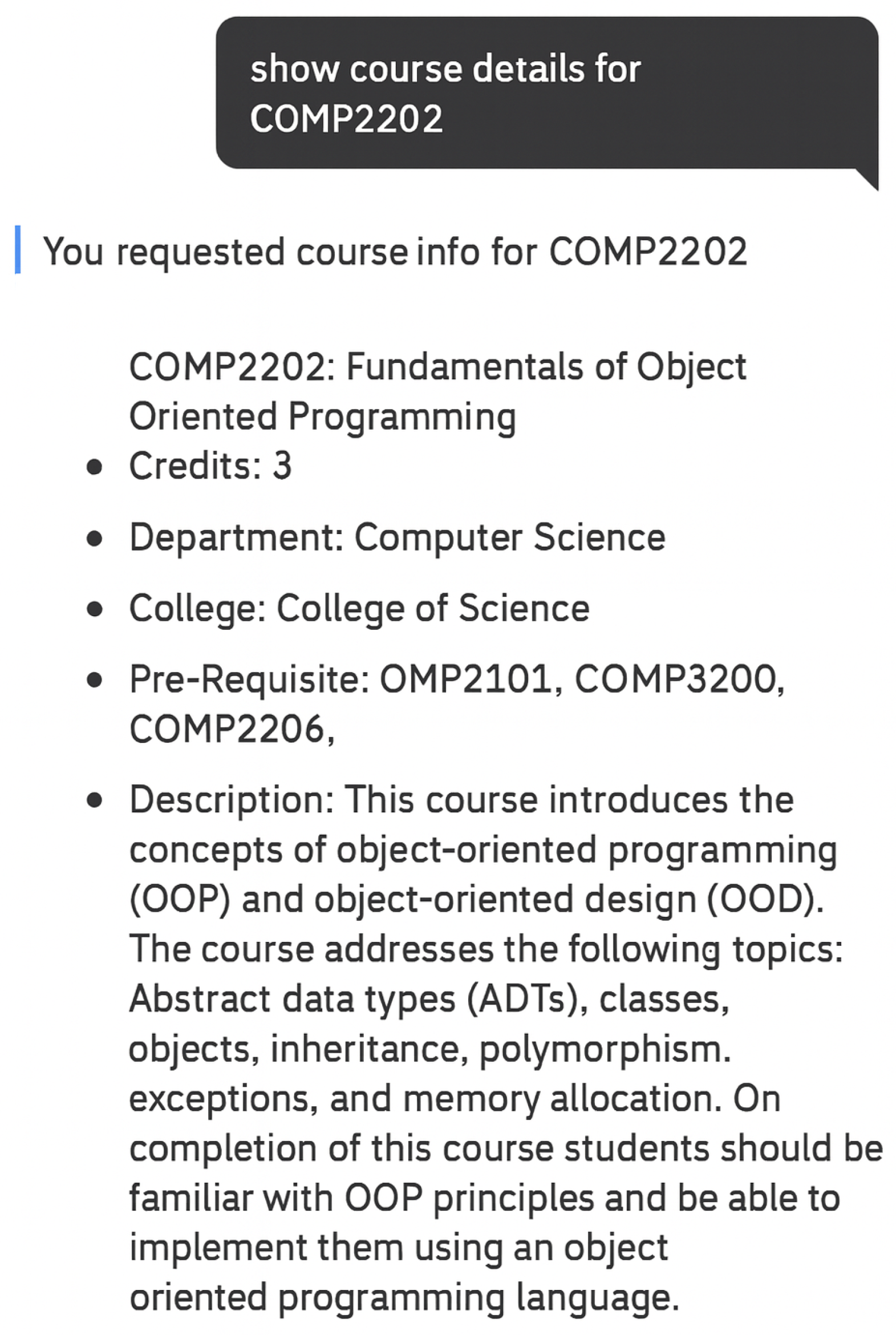

- Get the course details: Users can access course information at any time through the SQUbot instead of searching course catalogs or contacting administrators and waiting for a response. Round-the-clock availability also reduces the administrators’ workload, allowing them to focus on more strategic tasks. A student asks for the details of a particular course by mentioning its course code, and the chatbot returns the title, prerequisites, a brief description, the credit hours, and the college offering the course.

- Get the academic calendar: Users can use the SQUbot to get the academic calendar as well by typing a simple query, such as “show the university’s academic calendar,” and the chatbot will return the calendar of the entire year.
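The course search described above can be pictured as a keyword match over the catalog that returns course codes and titles. The following is a minimal sketch only: the `Course` record, the `search_courses` function, and the sample catalog entries (including the course codes) are hypothetical, and the real search runs behind the university's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Course:
    code: str
    title: str
    description: str


def search_courses(catalog, keyword):
    """Return (code, title) pairs whose title or description contains `keyword`."""
    needle = keyword.strip().lower()
    if not needle:
        return []
    return [
        (course.code, course.title)
        for course in catalog
        if needle in course.title.lower() or needle in course.description.lower()
    ]


# Made-up catalog entries, used only to exercise the function.
catalog = [
    Course("DEMO101", "Introduction to Programming", "Basics of writing programs."),
    Course("DEMO205", "Circuit Analysis", "Resistive networks and transients."),
    Course("DEMO310", "Data Structures", "Programming with lists, trees, and graphs."),
]
print(search_courses(catalog, "programming"))
# [('DEMO101', 'Introduction to Programming'), ('DEMO310', 'Data Structures')]
```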

5.5.2. Personalized Services

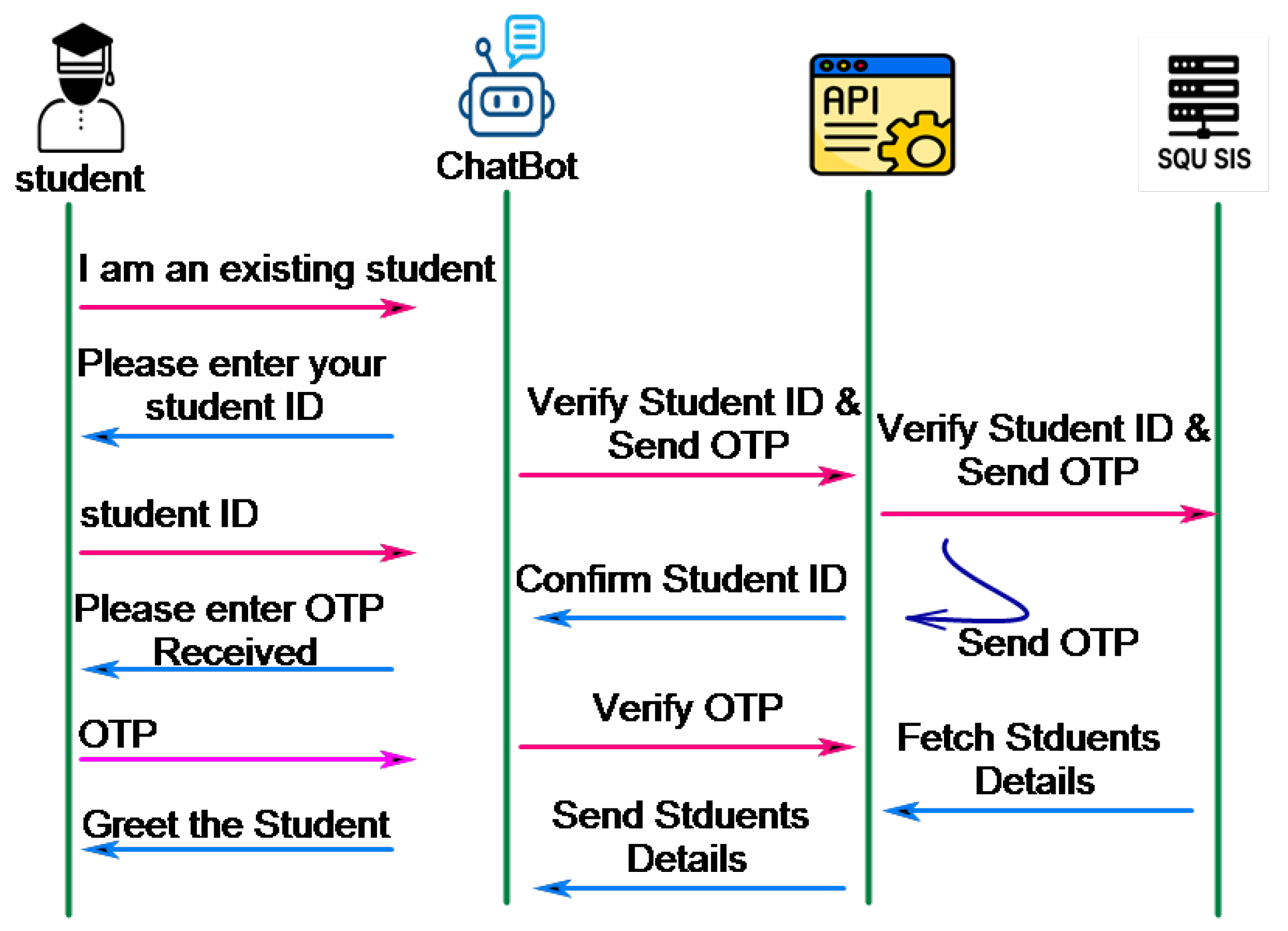

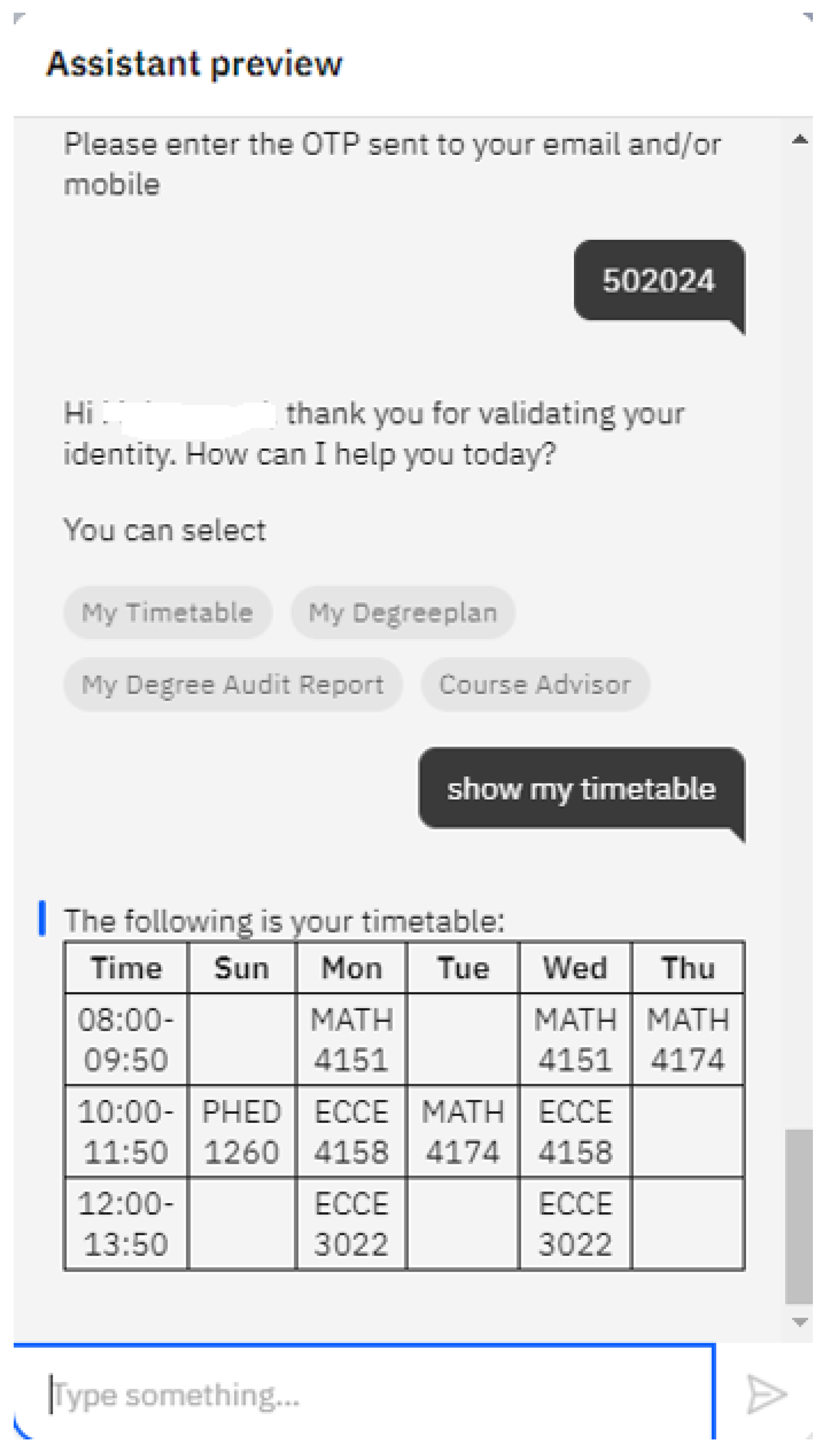

- Student verification: The SQUbot can tailor its responses to the needs of individual students. Before providing any personalized service, the bot verifies the student; this protects students’ privacy by ensuring that only authorized students can access their information. Verification uses a One-Time Password (OTP). If the user is an existing student, the chatbot asks for the student ID and then calls an API endpoint that sends an OTP by SMS to the student’s mobile number. The student must enter the correct OTP to proceed. Once verified, the student can use personalized services without verifying again. Figure 6 and the accompanying figures show how the chatbot verifies the student ID before providing personalized services. After verification, the student can quickly access the following services:

- Show student timetable: Students can retrieve their timetable at any time through the chatbot, which saves them the time of looking it up manually. The student types a simple phrase such as ‘show me my timetable’. The chatbot connects to the university’s data center through the API, looks up the student ID, and fetches the timetable for the matching ID.

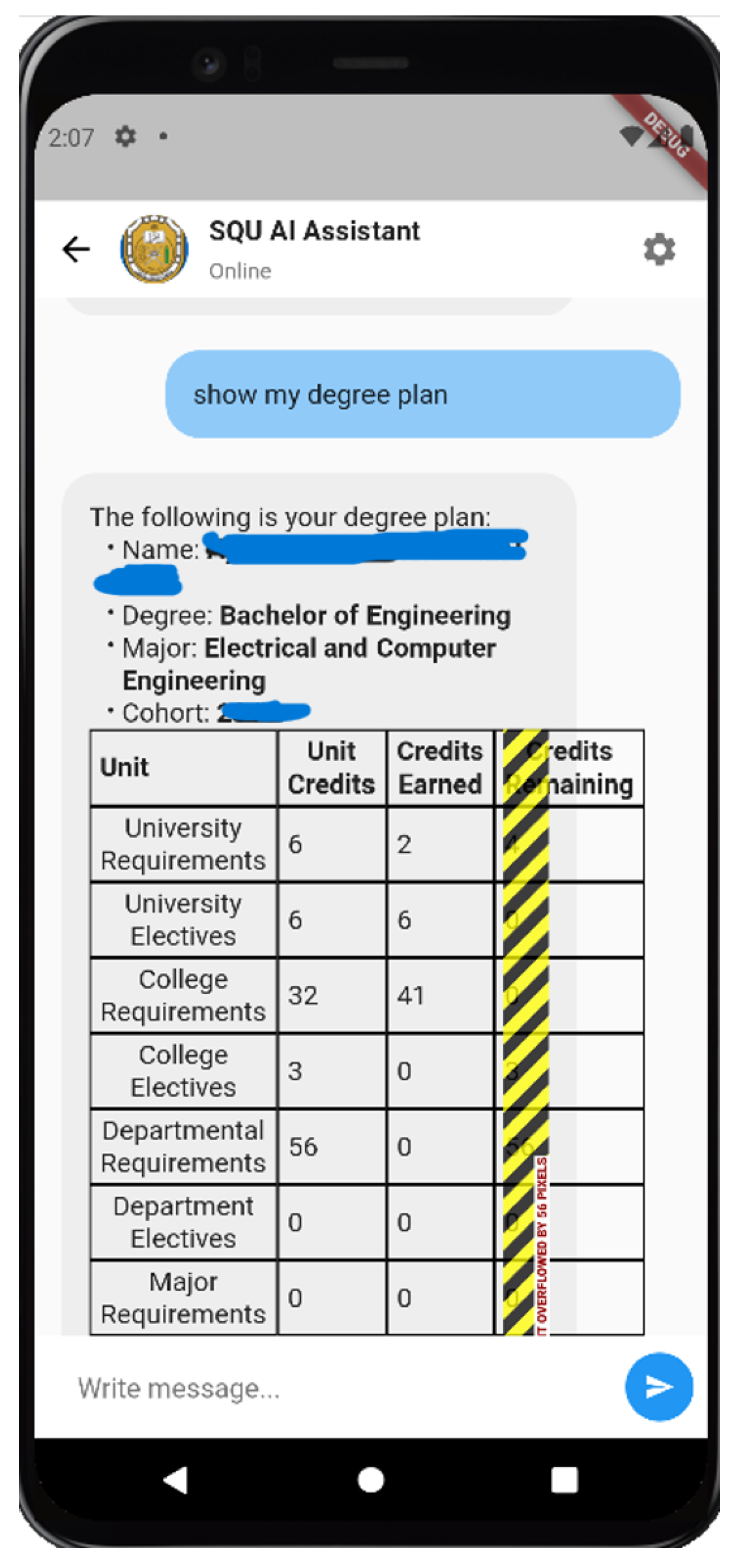

- Show student’s degree plan: The student can also view their degree plan. A degree plan consists of course categories and the course credits in each category that a student must earn to complete the degree. It is essential information that is sometimes hard to obtain. Through the chatbot, a student can ask with a simple phrase such as ‘show my degree plan’. The chatbot then identifies the student and makes the appropriate API call to retrieve the information, which it presents to the student. Figure 7 shows a snapshot of the basic degree plan. A more detailed plan is also available if the student requests it.

- Show student degree audit report: A degree audit report is a critical academic advising tool that systematically evaluates a student’s educational progress toward completing their degree requirements. It functions by mapping the student’s completed coursework against the prescribed curriculum for their major or program of study. The report typically breaks down credits earned by category—such as general education, core major courses, electives, and capstone requirements—and indicates which requirements have been fulfilled, which are in progress, and which remain outstanding. This structured breakdown enables students to make informed decisions when planning their course schedules for upcoming semesters. Additionally, by identifying unmet prerequisites or credit deficiencies early, the degree audit helps prevent delays in graduation and facilitates more productive advising sessions.

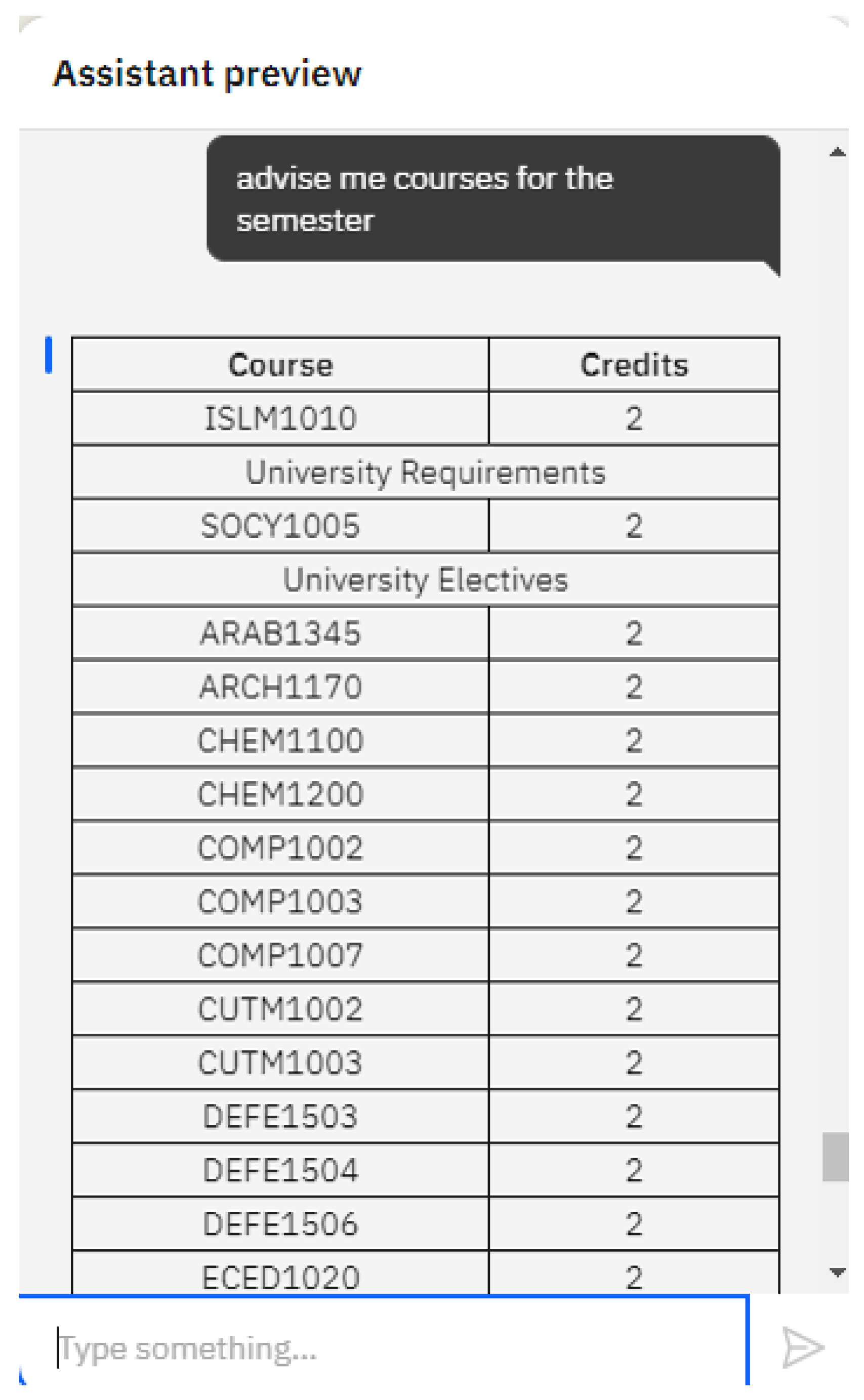

- Suggest courses for the next semester: The course recommendation feature helps students quickly discover relevant courses without manually searching through extensive catalogs, saving time and effort. The SQUbot suggests courses a student can register for in the coming semester based on several factors, including the student’s degree plan, completed courses, and the courses to be offered in the coming semester.
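The OTP verification step that gates these personalized services can be sketched as follows. This is an illustrative in-memory model, not the university's implementation: the class name, the six-digit length, the five-minute expiry, and the single-use rule are all assumptions, and a real deployment would send the code by SMS through the API instead of returning it.

```python
import hmac
import secrets
import time


class OtpVerifier:
    """In-memory OTP store; length, expiry, and single use are assumptions."""

    def __init__(self, ttl_seconds=300, digits=6, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._digits = digits
        self._clock = clock
        self._pending = {}      # student_id -> (otp, expiry time)
        self._verified = set()  # student IDs verified in this session

    def issue(self, student_id):
        """Create a one-time password; a real system would send it by SMS."""
        otp = "".join(secrets.choice("0123456789") for _ in range(self._digits))
        self._pending[student_id] = (otp, self._clock() + self._ttl)
        return otp

    def verify(self, student_id, submitted):
        """Mark the student verified if the submitted OTP matches and is fresh."""
        record = self._pending.get(student_id)
        if record is None:
            return False
        otp, expires_at = record
        if self._clock() > expires_at:
            del self._pending[student_id]
            return False
        # Constant-time comparison on bytes avoids leaking timing information.
        if hmac.compare_digest(otp.encode(), submitted.encode()):
            del self._pending[student_id]
            self._verified.add(student_id)
            return True
        return False

    def is_verified(self, student_id):
        """Verified students can use personalized services without a new OTP."""
        return student_id in self._verified


verifier = OtpVerifier()
code = verifier.issue("student-001")         # hypothetical student ID
print(verifier.verify("student-001", code))  # True
print(verifier.is_verified("student-001"))   # True
```

Keeping the verified set on the university side, rather than in the cloud dialog state, would be in line with the paper's statement that sensitive data stays in the university's data center.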

5.6. API Integration for Personalization

5.7. Platform Evaluation and Selection

5.8. User Testing Protocol

6. Results and Discussion

6.1. Evaluation Setup and Data Collection

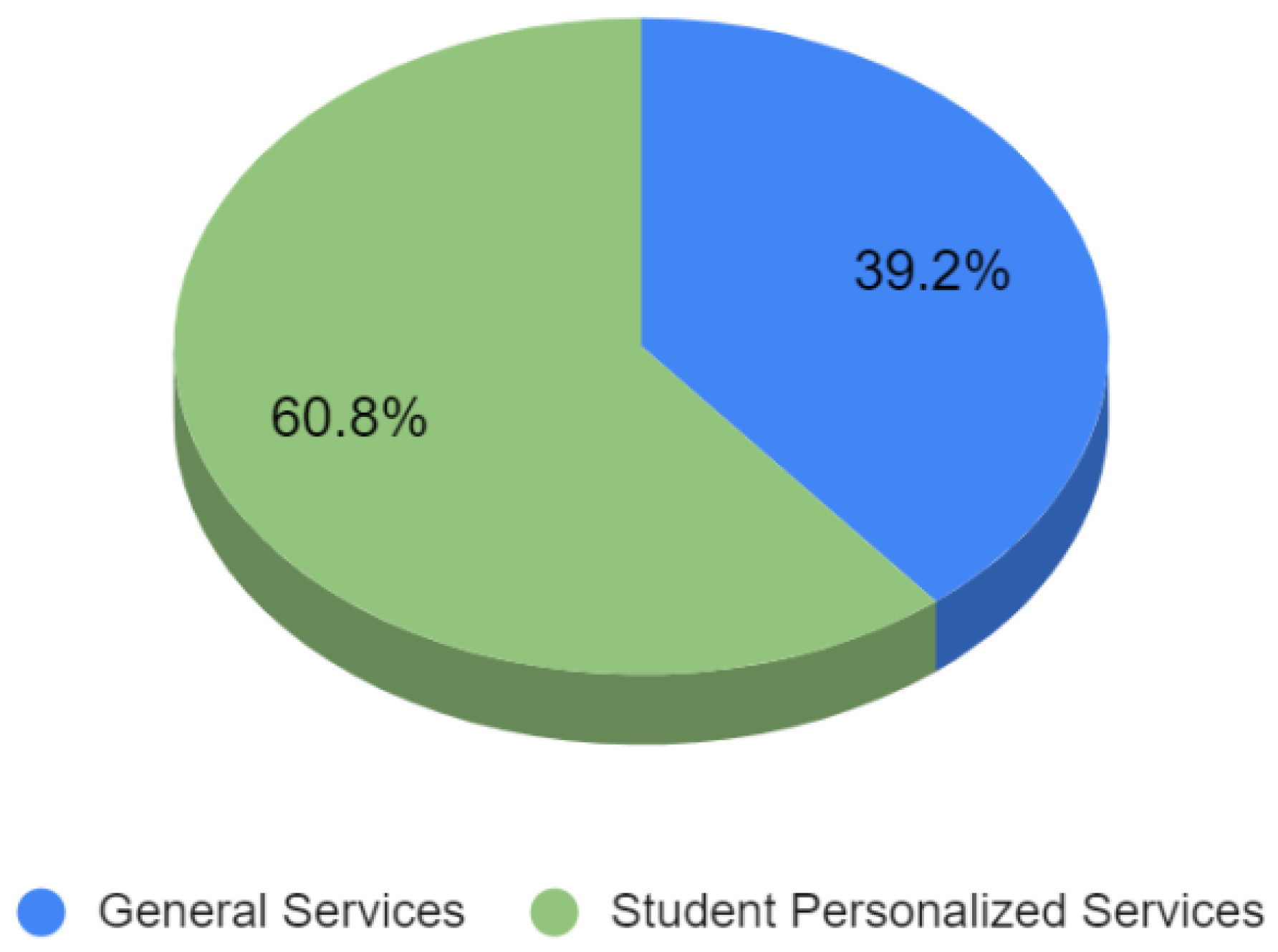

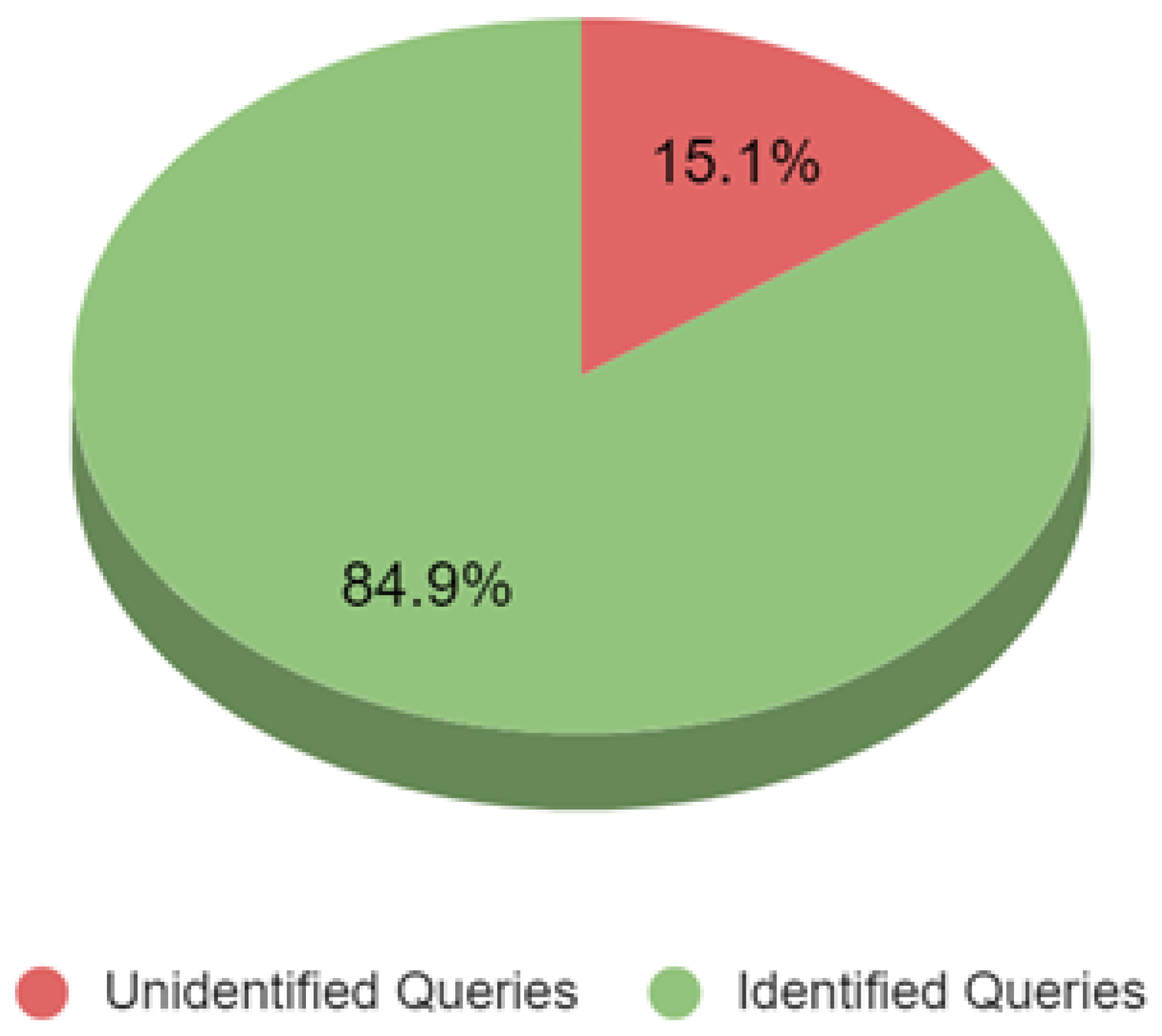

6.2. Quantitative Results

6.3. Error Analysis

- Dialectal variation (22 queries): Users used informal or colloquial Arabic phrases not included in the training set.

- Ambiguity (15 queries): Vague or incomplete questions led to low-confidence predictions.

- Out-of-scope (11 queries): The query topic was outside the current chatbot capabilities (e.g., visa status, cafeteria menu).
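As a quick arithmetic check, the three error categories above can be summed and expressed as shares of all unrecognized queries. The counts come from the list above; the variable names are illustrative.

```python
error_counts = {"dialectal variation": 22, "ambiguity": 15, "out-of-scope": 11}
total_errors = sum(error_counts.values())
print(total_errors)  # 48

# Share of each category among the unrecognized queries.
shares = {name: 100 * n / total_errors for name, n in error_counts.items()}
for name, share in shares.items():
    print(f"{name}: {share:.2f}%")
# dialectal variation: 45.83%
# ambiguity: 31.25%
# out-of-scope: 22.92%
```

The total of 48 agrees with the number of unrecognized queries reported in the results table, and dialectal variation accounts for nearly half of the failures.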

6.4. User Feedback and Engagement Patterns

6.5. Discussion and Implications

- The test was performed on a relatively small user base.

- Proper guidelines were provided about how to use the chatbot.

- Options were provided in the chatbot to guide users.

- My major;

- Patients;

- Circuit;

- My specialization;

- Registration of spring 22.

6.6. Novel Features and Design Contributions

- First bilingual (English–Arabic) educational chatbot implementation at Sultan Qaboos University (SQU) using IBM Watson Assistant with cloud-based NLP capabilities and support for a dual-language dialog.

- OTP-based student verification system integrated into the chatbot to securely enable personalized academic services, such as timetable access and course advising, while preserving data privacy by processing sensitive information locally within the university’s data center.

- Hybrid system architecture that connects cloud-based conversational AI with on-premise REST APIs, ensuring regulatory compliance and real-time data access without exposing personal student data to the cloud.

- Comprehensive personalized services:

  - Student timetable retrieval;

  - Degree plan consultation;

  - Degree audit reports;

  - Automated course recommendations based on academic history and course availability.
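The automated course recommendation can be thought of as a filter over the degree plan. The sketch below is one plausible reading, not the authors' algorithm: it keeps courses that are in the degree plan, not yet completed, and offered next semester. The optional prerequisite check is an added assumption, and all course codes are made up.

```python
def suggest_courses(degree_plan, completed, offered, prerequisites=None):
    """Return degree-plan courses the student could register for next semester.

    `prerequisites` maps a course code to the codes that must be completed
    first; treating prerequisites this way is an assumption of the sketch.
    """
    prerequisites = prerequisites or {}
    done = set(completed)
    available = set(offered)
    suggestions = []
    for code in degree_plan:  # keep the degree-plan order
        if code in done or code not in available:
            continue
        if set(prerequisites.get(code, ())) <= done:
            suggestions.append(code)
    return suggestions


# Hypothetical data for one student.
plan = ["DEMO101", "DEMO102", "DEMO201", "DEMO202"]
done = ["DEMO101"]
next_semester = ["DEMO102", "DEMO201", "DEMO202", "DEMO999"]
prereqs = {"DEMO201": ["DEMO102"], "DEMO202": ["DEMO101"]}
print(suggest_courses(plan, done, next_semester, prereqs))  # ['DEMO102', 'DEMO202']
```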

6.7. Error Analysis of Unidentified Queries

- Out-of-scope academic terms: e.g., “Patients”, “Circuit”—queries related to disciplines or domains not covered in the training data.

- Ambiguous personal references: e.g., “My major”, “What is my specialization”—these queries imply contextual personalization, but lack sufficient entity resolution or training coverage.

- Temporal queries: e.g., “When is the registration for Spring 22 semester?”—such queries require access to frequently updated academic calendars not included in the initial chatbot version.

6.8. Contributing Factors to High Success Rate

- Controlled user base (students from a specific college and department).

- Provision of usage guidelines through the pilot web portal and a live demo session.

- Guided conversation flows and topic suggestions within the chatbot interface.

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Caldarini, G.; Jaf, S.; McGarry, K. A Literature Survey of Recent Advances in Chatbots. Information 2022, 13, 41.

2. Adamopoulou, E.; Moussiades, L. Chatbots: History, technology, and applications. Mach. Learn. Appl. 2020, 2, 100006.

3. Anutariya, C.; Chawmungkrung, H.; Jearanaiwongkul, W.; Racharak, T. ChatBlock: A Block-Based Chatbot Framework for Supporting Young Learners and the Classroom Authoring for Teachers. Technologies 2025, 13, 1.

4. Luo, B.; Lau, R.Y.; Li, C.; Si, Y.W. A critical review of state-of-the-art chatbot designs and applications. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2022, 12, e1434.

5. Palasundram, K.; Sharef, N.M.; Nasharuddin, N.; Kasmiran, K.; Azman, A. Sequence to sequence model performance for education chatbot. Int. J. Emerg. Technol. Learn. (iJET) 2019, 14, 56–68.

6. Hussain, S.; Ameri Sianaki, O.; Ababneh, N. A survey on conversational agents/chatbots classification and design techniques. In Web, Artificial Intelligence and Network Applications, Proceedings of the Workshops of the 33rd International Conference on Advanced Information Networking and Applications (WAINA-2019) 33, Matsue, Japan, 27–29 March 2019; Springer: Cham, Switzerland, 2019; pp. 946–956.

7. Vaira, L.; Bochicchio, M.A.; Conte, M.; Casaluci, F.M.; Melpignano, A. MamaBot: A System based on ML and NLP for supporting Women and Families during Pregnancy. In Proceedings of the 22nd International Database Engineering & Applications Symposium, Villa San Giovanni, Italy, 18–20 June 2018; pp. 273–277.

8. Zhang, S.; Dinan, E.; Urbanek, J.; Szlam, A.; Kiela, D.; Weston, J. Personalizing dialogue agents: I have a dog, do you have pets too? arXiv 2018, arXiv:1801.07243.

9. Anumala, R.R.; Chintalapudi, S.L.; Yalamati, S. Execution of College Enquiry Chatbot using IBM virtual Assistant. In Proceedings of the 2022 International Conference on Computing, Communication and Power Technology (IC3P), Visakhapatnam, India, 7–8 January 2022; pp. 242–245.

10. Fleming, M.; Riveros, P.; Reidsema, C.; Achilles, N. Streamlining student course requests using chatbots. In Proceedings of the 29th Australasian Association for Engineering Education Conference, Hamilton, New Zealand, 9–12 December 2018; Engineers Australia: Hamilton, New Zealand, 2018; pp. 207–211.

11. Ralston, K.; Chen, Y.; Isah, H.; Zulkernine, F. A voice interactive multilingual student support system using IBM Watson. In Proceedings of the 2019 18th IEEE International Conference On Machine Learning And Applications (ICMLA), Boca Raton, FL, USA, 16–19 December 2019; pp. 1924–1929.

12. Attigeri, G.; Agrawal, A.; Kolekar, S. Advanced NLP models for Technical University Information Chatbots: Development and Comparative Analysis. IEEE Access 2024, 12, 29633–29647.

13. Hijjawi, M.; Bandar, Z.; Crockett, K.; Mclean, D. ArabChat: An arabic conversational agent. In Proceedings of the 2014 6th International Conference on Computer Science and Information Technology (CSIT), Amman, Jordan, 26–27 March 2014; pp. 227–237.

14. Sweidan, S.Z.; Laban, S.S.A.; Alnaimat, N.A.; Darabkh, K.A. SEG-COVID: A student electronic guide within COVID-19 pandemic. In Proceedings of the 2021 9th International Conference on Information and Education Technology (ICIET), Okayama, Japan, 27–29 March 2021; pp. 139–144.

15. Alabbas, A.; Alomar, K. Tayseer: A Novel AI-Powered Arabic Chatbot Framework for Technical and Vocational Student Helpdesk Services and Enhancing Student Interactions. Appl. Sci. 2024, 14, 2547.

16. Krishnam, N.P.; Bora, A.; Swathi, R.R.; Gehlot, A.; Chandraprakash, V.; Raghu, T. AI-Driven Bilingual Talkbot for Academic Counselling. In Proceedings of the 2023 3rd International Conference on Advance Computing and Innovative Technologies in Engineering (ICACITE), Greater Noida, India, 12–13 May 2023; pp. 1986–1992.

17. Sharma, V.; Goyal, M.; Malik, D. An intelligent behaviour shown by chatbot system. Int. J. New Technol. Res. 2017, 3, 263312.

18. Verma, S.; Sahni, L.; Sharma, M. Comparative analysis of chatbots. In Proceedings of the International Conference on Innovative Computing & Communications (ICICC), Delhi, India, 21–23 February 2020.

19. Qaffas, A.A. Improvement of Chatbots semantics using wit. ai and word sequence kernel: Education Chatbot as a case study. Int. J. Mod. Educ. Comput. Sci. 2019, 11, 16.

20. Shum, H.Y.; He, X.D.; Li, D. From Eliza to XiaoIce: Challenges and opportunities with social chatbots. Front. Inf. Technol. Electron. Eng. 2018, 19, 10–26.

21. Ranavare, S.S.; Kamath, R. Artificial intelligence based chatbot for placement activity at college using dialogflow. Our Herit. 2020, 68, 4806–4814.

22. Al-Madi, N.A.; Maria, K.A.; Al-Madi, M.A.; Alia, M.A.; Maria, E.A. An intelligent Arabic chatbot system proposed framework. In Proceedings of the 2021 International Conference on Information Technology (ICIT), Amman, Jordan, 14–15 July 2021; pp. 592–597.

23. Hamideh Kerdar, S.; Kirchhoff, B.M.; Adolph, L.; Bächler, L. A Study on Chatbot Development Using No-Code Platforms by People with Disabilities for Their Peers at a Sheltered Workshop. Technologies 2025, 13, 146.

| Chatbot Type | Rule-Based | AI and ML | Hybrid Model |

|---|---|---|---|

| Definition | Provide a set of predefined options. The user has to select the appropriate option. Great for smaller numbers and straightforward queries. Guided by a decision tree. Can provide personalized responses based on user identification. | Use natural conversation. Use NLP technology to understand the user query and serve with an appropriate response. Use ML to remember user chats and learn from them to improve and grow over time. Can provide personalized responses based on user identification. | Use the simplicity of a rule-based chatbot. Use the sophistication of an AI and ML-based chatbot. Cater to customers based on the situation and query. Integrate with back-end systems to provide personalized transactions. |

| Types | Menu-based chatbot and linguistic-based chatbot. | Keyword recognition-based chatbots and machine learning chatbots | - |

| Example | Healthcare bot for appointments, booking bot to book rooms and services, and travel bot for flight bookings. | Retail support bot, banking bot, telecom bot and orders, deliveries, and logistics bot | Education-course bot, automotive lead generation bot, social media marketing bot |

| Frameworks | From Scratch | Ready to Use | Hybrid |
|---|---|---|---|
| Features | Difficult; requires programming; more time; SDKs and libraries; free and open source; need hosting; full control | Easy to use; no programming; less time; online interface; paid; hosting-ready; restricted | Scratch/Readymade |
| Examples | NLTK [Python]; Botkit [Node.js-SDK]; Chatterbot [Python]; RASA [Python]; Botpress [TypeScript] | Mobile Monkey; Botsify; Chatfuel; Manychat; Flowxo | OneReach.ai; Microsoft Bot; IBM Watson; Google Dialogflow; Amazon Lex; Wit.ai |

| Papers | Language | Technique | Limitations |

|---|---|---|---|

| [13] | Arabic | Pattern matching | Relies on a limited rule set; does not handle context and semantics; addresses only general services |

| [14] | Arabic | Keyword matching | Relies on predefined keywords; does not handle context and semantics |

| [15] | Arabic | RASA framework | Addresses only general services |

| [16] | English + Arabic | Deep neural network | Resource intensive; less scalable; addresses only general services |

| [22] | Arabic | Keyword matching + string distance comparison algorithm | Partially relies on keywords; addresses only general services |

| Intent ID | Example Utterances | Related Entity | Purpose |

|---|---|---|---|

| #GetAdmissionDetails | “How can I apply?”, “Undergrad admission info” | @program_type | Admission info by type |

| #RetrieveEmployeeContact | “Show contact for Dr. X” | @emp_name | Get employee contact details |

| #ShowTimetable | “Show my timetable”, “What are my classes today?” | @student_id | Retrieve student schedule |

| #CourseDetails | “Give details of CS101” | @course_code | Provide course description |
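The intent-to-entity mapping in the table above suggests how an API payload might be assembled from recognized entities. The sketch below is illustrative only: the intent and entity names follow the table, but the `build_payload` function, the payload layout, and the assumed input shape (a list of entity/value dictionaries) are hypothetical.

```python
# Entities each intent needs, following the intent table above.
REQUIRED_ENTITIES = {
    "GetAdmissionDetails": ["program_type"],
    "RetrieveEmployeeContact": ["emp_name"],
    "ShowTimetable": ["student_id"],
    "CourseDetails": ["course_code"],
}


def build_payload(intent, entities):
    """Build a request payload for `intent` from recognized entities.

    `entities` is a list of {"entity": name, "value": value} dicts (an
    assumed shape). The returned layout is hypothetical.
    """
    required = REQUIRED_ENTITIES[intent]  # KeyError for an unknown intent
    found = {}
    for item in entities:
        found.setdefault(item["entity"], item["value"])  # first mention wins
    missing = [name for name in required if name not in found]
    if missing:
        # The dialog would prompt the user for these values.
        return {"ok": False, "missing": missing}
    return {"ok": True, "action": intent,
            "params": {name: found[name] for name in required}}


print(build_payload("CourseDetails", [{"entity": "course_code", "value": "CS101"}]))
# {'ok': True, 'action': 'CourseDetails', 'params': {'course_code': 'CS101'}}
print(build_payload("ShowTimetable", []))
# {'ok': False, 'missing': ['student_id']}
```

Returning the missing entity names rather than raising lets the dialog ask a follow-up question, which fits the guided conversation flows the paper describes.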

| Platform | Arabic NLP Support | API Flexibility | Ease of Use | Decision |

|---|---|---|---|---|

| Dialogflow | Partial | High | Easy | No |

| Botsify | Limited | Moderate | Very easy | No |

| IBM Watson | Good | High | Moderate | Yes |

| Metric | Value |

|---|---|

| Total messages | 318 |

| Correctly matched intents | 270 (84.9%) |

| General queries | 163 |

| Personalized queries | 107 |

| Unrecognized queries | 48 (15.1%) |
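The figures in the table above are internally consistent, as a short check shows. The numbers are taken directly from the table; the variable names are illustrative.

```python
total_messages = 318
matched = 270
general, personalized = 163, 107
unrecognized = 48

# The two query types should add up to the matched total,
# and matched plus unrecognized should cover every message.
assert general + personalized == matched
assert matched + unrecognized == total_messages

match_rate = 100 * matched / total_messages
miss_rate = 100 * unrecognized / total_messages
print(f"matched: {match_rate:.1f}%")       # matched: 84.9%
print(f"unrecognized: {miss_rate:.1f}%")   # unrecognized: 15.1%
```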

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Nadir, Z.; Al Lawati, H.M.; Mohammed, R.A.; Al Subhi, M.; Hossen, A. SQUbot: Enhancing Student Support Through a Personalized Chatbot System. Technologies 2025, 13, 416. https://doi.org/10.3390/technologies13090416
