Robust Supervised Deep Discrete Hashing for Cross-Modal Retrieval

Abstract

1. Introduction

1.1. Motivation

1.2. Contribution

1.3. Organization

2. Related Work

2.1. Deep Learning

2.2. Shallow Cross-Modal Hashing

2.3. Deep Cross-Modal Hashing

3. Robust Supervised Deep Discrete Hashing

3.1. Symbol Definition and Problem Formulation

3.2. Feature Learning

3.2.1. Image Feature Learning

3.2.2. Text Feature Learning

3.3. Hash Code Learning

3.3.1. Deep Hash Code Learning with Non-Redundant Feature Selection Strategy

3.3.2. Intra-Modal Consistency Preservation

3.3.3. Inter-Modal Consistency Preservation

3.4. Overall Objective Function

4. Optimization

4.1. Update of Image Modality with B, , and Fixed

| Algorithm 1 Algorithm for obtaining DNN parameter . |
|---|
| Input: image feature matrix v, projection matrix , binary hash code matrix B, mini-batch size , and iteration number . |
| Output: DNN parameter . |
| 1: Initialize the DNN parameter; |
| 2: for do |
| 3: Randomly sample feature vectors from v to construct a mini-batch; |
| 4: For each sampled point in the mini-batch, calculate by forward propagation; |
| 5: Calculate the derivative according to Equation (15); |
| 6: Update the DNN parameter by using back propagation; |
| 7: end for |
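
Algorithm 1 is a standard mini-batch training loop. Equation (15) is not reproduced in this text, so the following is only a minimal sketch under stated assumptions. A hypothetical one-layer tanh "network" with weights `W` stands in for the image DNN parameter. It is trained so that its features, multiplied by a fixed projection matrix `P`, regress onto fixed codes `B` under an assumed squared-error loss. The names `train_image_network` and `W` and the loss itself are illustrative and are not the authors' implementation.

```python
import numpy as np


def train_image_network(V, P, B, batch_size=32, n_iters=300, lr=0.05, seed=0):
    """Hypothetical stand-in for Algorithm 1 (not the authors' code).

    V: (n, m) image inputs; P: (d, k) fixed projection; B: (n, k) codes in {-1, +1}.
    The "DNN" is one tanh layer, F = tanh(V @ W); W plays the role of the DNN parameter.
    Assumed loss (in place of Equation (15)): mean over samples of ||f(v_i) P - b_i||^2.
    """
    rng = np.random.default_rng(seed)
    n, m = V.shape
    W = rng.normal(scale=0.1, size=(m, P.shape[0]))  # step 1: initialize

    def full_loss(W):
        return np.sum((np.tanh(V @ W) @ P - B) ** 2) / n

    initial = full_loss(W)
    for _ in range(n_iters):                                  # step 2
        idx = rng.choice(n, size=batch_size, replace=False)   # step 3: mini-batch
        Vb, Bb = V[idx], B[idx]
        F = np.tanh(Vb @ W)                                   # step 4: forward pass
        dF = 2.0 * (F @ P - Bb) @ P.T / batch_size            # step 5: dLoss/dF
        dZ = dF * (1.0 - F ** 2)                              # chain rule through tanh
        W -= lr * (Vb.T @ dZ)                                 # step 6: gradient step
    return W, initial, full_loss(W)


# Usage on synthetic data whose codes come from a similar (random) tanh model.
rng = np.random.default_rng(1)
V = rng.normal(size=(200, 20))
P = rng.normal(scale=0.25, size=(16, 8))
B = np.where(np.tanh(V @ rng.normal(size=(20, 16))) @ P >= 0, 1.0, -1.0)
W, before, after = train_image_network(V, P, B)
print(f"loss before: {before:.3f}, after: {after:.3f}")
```

In the real method the single layer would be replaced by the convolutional network described in the image-network table, and the gradient would come from an autodiff framework rather than the hand-written chain rule used here.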

4.2. Update of Text Modality with B, , and Fixed

4.3. Update with B, , and Fixed

| Algorithm 2 The iterative algorithm for solving projection matrix . |
|---|
| Input: features F, binary hash code matrix B, maximum iteration number , threshold . |
| Output: projection matrix . |
| 1: Initialize ; |
| 2: for do |
| 3: Compute according to Equation (19) and construct diagonal matrix ; |
| 4: Compute ; |
| 5: if then |
| 6: break |
| 7: end if |
| 8: end for |
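
Step 3 of Algorithm 2 builds a diagonal matrix from the current solution. This is the usual pattern of iteratively reweighted solvers for ℓ2,1-norm feature selection [57]. The sketch below assumes the objective min over P of ||FP − B||_F² + λ||P||_{2,1}. Setting its gradient to zero gives (FᵀF + λD)P = FᵀB with D_ii = 1/(2||pⁱ||₂). Equation (19) is not reproduced here and may use a different, for example robust, weighting. The loss, the weight formula, the ridge initialization, and the stopping rule are therefore assumptions, and `l21_projection` is a hypothetical name.

```python
import numpy as np


def l21_projection(F, B, lam=1.0, max_iter=50, tol=1e-6, eps=1e-8):
    """Iteratively reweighted solver for min_P ||F P - B||_F^2 + lam * ||P||_{2,1}.

    F: (n, d) features; B: (n, k) codes. The weights D_ii = 1 / (2 ||p^i||_2) and the
    stopping rule are assumptions standing in for Equation (19) and the threshold test.
    """
    d = F.shape[1]
    FtF, FtB = F.T @ F, F.T @ B
    P = np.linalg.solve(FtF + lam * np.eye(d), FtB)      # step 1: ridge initialization

    def objective(P):
        return np.sum((F @ P - B) ** 2) + lam * np.linalg.norm(P, axis=1).sum()

    prev = objective(P)
    for _ in range(max_iter):                             # step 2
        row_norms = np.linalg.norm(P, axis=1)
        D = np.diag(1.0 / (2.0 * row_norms + eps))        # step 3: diagonal weights
        P = np.linalg.solve(FtF + lam * D, FtB)           # step 4: closed-form update
        cur = objective(P)
        if abs(prev - cur) <= tol * max(1.0, abs(cur)):   # step 5: convergence test
            break
        prev = cur
    return P


# Usage: only the first 3 of 10 feature dimensions carry information about B.
rng = np.random.default_rng(0)
F = rng.normal(size=(300, 10))
B = np.where(F[:, :3] @ rng.normal(size=(3, 4)) >= 0, 1.0, -1.0)
P = l21_projection(F, B, lam=10.0)
row_norms = np.linalg.norm(P, axis=1)
print(np.round(row_norms, 3))
```

Rows of P that belong to uninformative feature dimensions shrink toward zero. This row-sparsity is why an ℓ2,1 penalty is a natural fit for a non-redundant feature selection strategy.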

4.4. Update with B, , and Fixed

4.5. Update B with , , and Fixed

4.5.1. Objective Function Reformulation

4.5.2. Singular Value Decomposition-Based Discrete Hashing

| Algorithm 3 SVDDH (singular value decomposition-based discrete hashing) scheme. |
|---|
| Input: deep learning feature matrices F and G, projection matrices and , Laplacian matrix L. |
| Output: binary hash code matrix B=. |
| 1: Compute ; |
| 2: Obtain , and ∑ by conducting SVD for matrix L; |
| 3: for do |
| 4: Obtain , and from , and Q, respectively; |
| 5: Obtain , and from B, and , respectively; |
| 6: Compute according to Equation (32); |
| 7: end for |
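
Steps 3 to 7 of Algorithm 3 update the hash codes one bit (one row of B) at a time. Equation (32) is not reproduced here. The sketch below therefore assumes the objective has been reduced to the form handled by discrete cyclic coordinate descent in SDH [63]: min over B ∈ {−1,+1}^{L×n} of ||WᵀB||_F² − 2 tr(BᵀQ). It omits the SVD step entirely. With every other row fixed, row z has the closed-form update z = sgn(q − B′ᵀW′v), where q and v are the matching rows of Q and W and B′, W′ are the remaining rows. `dcc_update` and `objective` are hypothetical names.

```python
import numpy as np


def objective(B, W, Q):
    """Assumed SDH-style objective ||W^T B||_F^2 - 2 tr(B^T Q)."""
    return np.sum((W.T @ B) ** 2) - 2.0 * np.sum(B * Q)


def dcc_update(B, W, Q, n_sweeps=3):
    """Bit-wise discrete cyclic coordinate descent.

    B: (L, n) codes in {-1, +1}; W: (L, C); Q: (L, n). Each row update exactly minimizes
    the objective over that row, so the objective never increases.
    """
    B = B.copy()
    L = B.shape[0]
    for _ in range(n_sweeps):
        for l in range(L):
            rest = [r for r in range(L) if r != l]
            q, v = Q[l], W[l]                      # l-th rows of Q and W
            B_rest, W_rest = B[rest], W[rest]      # all other rows
            z = q - B_rest.T @ (W_rest @ v)
            B[l] = np.where(z >= 0, 1.0, -1.0)     # closed-form update of one bit row
    return B


rng = np.random.default_rng(0)
L, n, C = 8, 50, 5
W = rng.normal(size=(L, C))
Q = rng.normal(size=(L, n))
B0 = np.where(rng.normal(size=(L, n)) >= 0, 1.0, -1.0)
B = dcc_update(B0, W, Q)
print(objective(B0, W, Q), objective(B, W, Q))
```

Updating a whole row at once keeps B binary throughout, so no relaxation-then-rounding step is needed. This is the main appeal of discrete schemes of this kind.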

4.6. Complexity Analysis

5. Experiments

5.1. Datasets

5.2. Evaluation Metrics
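
The tables below report MAP (mean average precision). The training-set-size table labels these values as MAP, and they match the main comparison table. As a minimal sketch of MAP under Hamming ranking, the code below makes two conventions explicit, both of which are assumptions. First, a database item is relevant if it shares at least one label with the query. Second, queries with no relevant item are skipped. For codes in {−1,+1}, the Hamming distance is (K − ⟨b_q, b_i⟩)/2 with K bits. `mean_average_precision` is a hypothetical name.

```python
import numpy as np


def mean_average_precision(query_codes, db_codes, query_labels, db_labels, top_k=None):
    """MAP under Hamming ranking.

    Codes are in {-1, +1}; labels are multi-hot. Relevance = at least one shared label
    (assumed convention). Queries without any relevant item are skipped (assumption).
    """
    n_bits = query_codes.shape[1]
    aps = []
    for q_code, q_label in zip(query_codes, query_labels):
        hamming = 0.5 * (n_bits - db_codes @ q_code)          # distance from inner product
        order = np.argsort(hamming, kind="stable")[:top_k]    # ties keep database order
        relevant = (db_labels[order] @ q_label) > 0
        n_rel = relevant.sum()
        if n_rel == 0:
            continue
        precision_at_rank = np.cumsum(relevant) / np.arange(1, len(order) + 1)
        aps.append(np.sum(precision_at_rank * relevant) / n_rel)
    return float(np.mean(aps)) if aps else 0.0


q = np.array([[1, 1, 1, 1]])
db = np.array([[1, 1, 1, 1],    # distance 0, relevant
               [-1, -1, 1, 1],  # distance 2, relevant
               [1, 1, 1, -1]])  # distance 1, not relevant
q_lab = np.array([[1, 0]])
db_lab = np.array([[1, 0], [1, 0], [0, 1]])
print(mean_average_precision(q, db, q_lab, db_lab))  # ranking 0, 2, 1 -> (1/1 + 2/3) / 2
```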

5.3. Baseline Methods and Experimental Settings

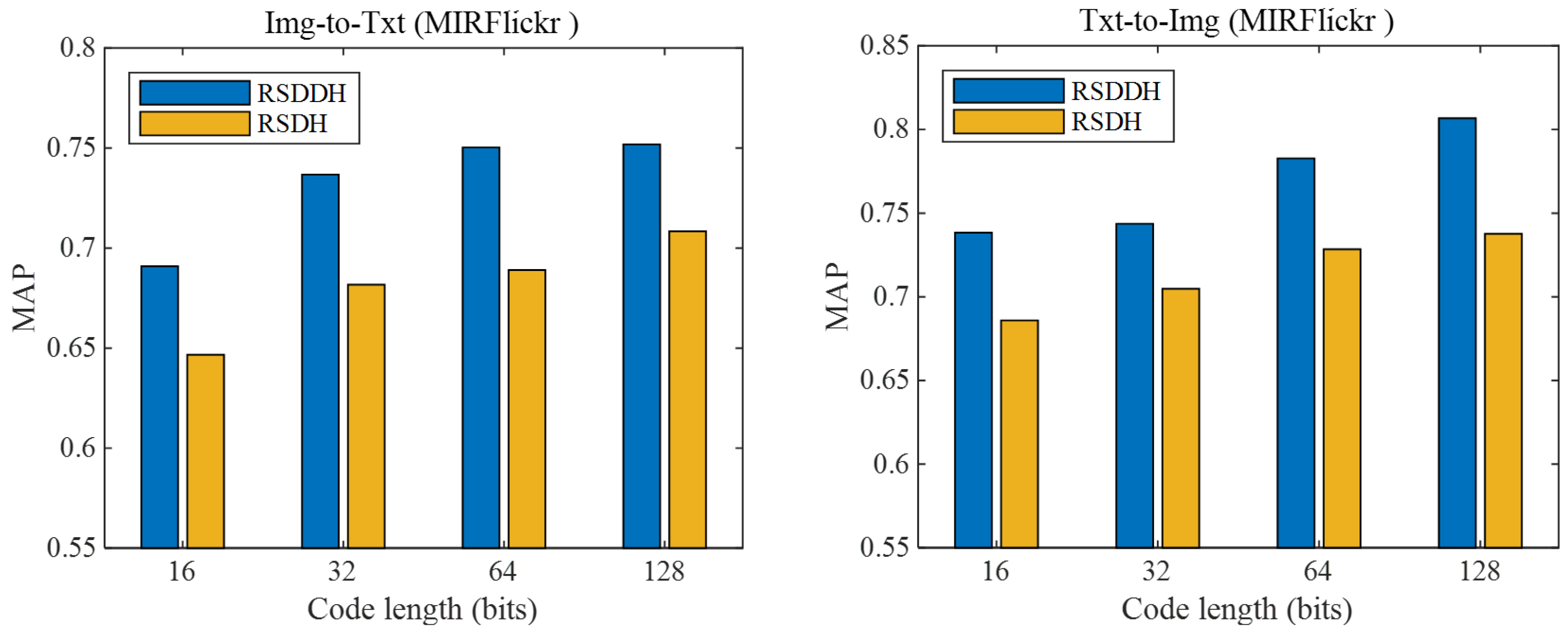

5.4. Overall Comparison with Baseline Methods

5.5. Further Comparison with Shallow Learning-Based Baseline Methods

6. Discussion

6.1. Effect of Non-Redundant Feature Selection Strategy

6.2. Effect of Inter-Modal and Intra-Modal Consistency Preservation Strategies

6.3. Effect of Discrete Hashing Scheme

6.4. Training Time Comparison

6.5. Effect of the Size of Training Set

6.6. Parameter Sensitivity Analysis

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Yasuda, K.; Aritsugi, M.; Takeuchi, Y.; Shibayama, A. Disaster image tagging using generative AI for digital archives. In Proceedings of the 24th ACM Joint Conference on Digital Libraries, Hong Kong, China, 16–20 December 2024; p. 11.
2. Mai Chau, P.P.; Bakkali, S.; Doucet, A. DocSum: Domain-adaptive pre-training for document abstractive summarization. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision Workshops, Tucson, AZ, USA, 28 February–4 March 2025; pp. 1213–1222.
3. Liu, Y.; Wu, J.; Xin, G. Multi-keywords carrier-free text steganography based on part of speech tagging. In Proceedings of the International Conference on Natural Computation, Fuzzy Systems and Knowledge Discovery, Guilin, China, 29–31 July 2017; pp. 2102–2107.
4. Hardoon, D.R.; Szedmak, S.; Shawe-Taylor, J. Canonical correlation analysis: An overview with application to learning methods. Neural Comput. 2004, 16, 2639–2664.
5. Geladi, P.; Kowalski, B.R. Partial least-squares regression: A tutorial. Anal. Chim. Acta 1986, 185, 1–17.
6. Chen, Y.; Wang, L.; Wang, W.; Zhang, Z. Continuum regression for cross-modal multimedia retrieval. In Proceedings of the IEEE International Conference on Image Processing, Orlando, FL, USA, 30 September–3 October 2012; pp. 1949–1952.
7. Jiang, Q.Y.; Li, W.J. Deep cross-modal hashing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3232–3240.
8. Weiss, Y.; Torralba, A.; Fergus, R. Spectral hashing. In Proceedings of the Annual Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–10 December 2008; pp. 1753–1760.
9. Kumar, S.; Udupa, R. Learning hash functions for cross-view similarity search. In Proceedings of the International Joint Conference on Artificial Intelligence, Barcelona, Spain, 16–22 July 2011; pp. 1360–1365.
10. Song, J.; Yang, Y.; Yang, Y.; Huang, Z.; Shen, H.T. Inter-media hashing for large-scale retrieval from heterogeneous data sources. In Proceedings of the ACM International Conference on Management of Data, New York, NY, USA, 22–27 June 2013; pp. 785–796.
11. Graves, A.; Mohamed, A.R.; Hinton, G. Speech recognition with deep recurrent neural networks. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 6645–6649.
12. Jiao, Z.; Gao, X.; Wang, Y.; Li, J.; Xu, H. Deep convolutional neural networks for mental load classification based on EEG data. Pattern Recognit. 2018, 76, 582–595.
13. Feng, F.; Wang, X.; Li, R. Cross-modal retrieval with correspondence autoencoder. In Proceedings of the ACM International Conference on Multimedia, Orlando, FL, USA, 3–7 November 2014; pp. 7–16.
14. Qian, X.; Xue, W.; Zhang, Q.; Tao, R.; Li, H. Deep cross-modal retrieval between spatial image and acoustic speech. IEEE Trans. Multimed. 2024, 26, 4480–4489.
15. Chen, C.; Wang, D. CausMatch: Causal matching learning with counterfactual preference framework for cross-modal retrieval. IEEE Access 2025, 13, 12734–12745.
16. Guo, X.; Zhang, H.; Liu, L.; Liu, D.; Lu, X.; Meng, H. Primary code guided targeted attack against cross-modal hashing retrieval. IEEE Trans. Multimed. 2025, 27, 312.
17. Qi, X.; Zeng, X.; Tang, H. Cross-modal hashing retrieval based on density clustering. IEEE Access 2025, 13, 44577–44589.
18. Cao, Y.; Long, M.; Wang, J.; Liu, S. Collective deep quantization for efficient cross-modal retrieval. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; pp. 3974–3980.
19. Zhu, X.; Huang, Z.; Shen, H.T.; Zhao, X. Linear cross-modal hashing for efficient multimedia search. In Proceedings of the ACM International Conference on Multimedia, Barcelona, Spain, 21–25 October 2013; pp. 143–152.
20. Liu, W.; Mu, C.; Kumar, S.; Chang, S.F. Discrete graph hashing. In Proceedings of the Annual Conference on Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 3419–3427.
21. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.
22. Fei, L.; He, Z.; Wong, W.K.; Zhu, Q.; Zhao, S.; Wen, J. Semantic decomposition and enhancement hashing for deep cross-modal retrieval. Pattern Recognit. 2025, 160, 111225.
23. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the Annual Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.
24. Hu, W.; Fan, Y.; Xing, J.; Sun, L.; Cai, Z.; Maybank, S. Deep constrained siamese hash coding network and load-balanced locality-sensitive hashing for near duplicate image detection. IEEE Trans. Image Process. 2018, 27, 4452–4464.
25. Li, Q.; Sun, Z.; He, R.; Tan, T. A general framework for deep supervised discrete hashing. Int. J. Comput. Vis. 2020, 128, 2204–2222.
26. Yang, H.F.; Lin, K.; Chen, C.S. Supervised learning of semantics-preserving hash via deep convolutional neural networks. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 437–451.
27. Oh, Y.; Park, S.; Ye, J.C. Deep learning COVID-19 features on CXR using limited training data sets. IEEE Trans. Med. Imaging 2020, 39, 2688–2700.
28. Jiang, Z.; Weng, Z.; Li, R.; Zhuang, H.; Lin, Z. Online weighted hashing for cross-modal retrieval. Pattern Recognit. 2025, 161, 111232.
29. Zhang, B.; Zhang, Y.; Li, J.; Chen, J.; Akutsu, T.; Cheung, Y.M.; Cai, H. Unsupervised dual deep hashing with semantic-index and content-code for cross-modal retrieval. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 47, 387–399.
30. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.
31. Jia, Y.; Shelhamer, E.; Donahue, J.; Karayev, S.; Long, J.; Girshick, R.; Guadarrama, S.; Darrell, T. Caffe: Convolutional architecture for fast feature embedding. In Proceedings of the ACM International Conference on Multimedia, Orlando, FL, USA, 3–7 November 2014; pp. 675–678.
32. Raji, C.G.; Chandra, S.V. Long-term forecasting the survival in liver transplantation using multilayer perceptron networks. IEEE Trans. Syst. Man Cybern. Syst. 2017, 47, 2318–2329.
33. Ding, G.; Guo, Y.; Zhou, J.; Gao, Y. Large-scale cross-modality search via collective matrix factorization hashing. IEEE Trans. Image Process. 2016, 25, 5427–5440.
34. Liu, H.; Ji, R.; Wu, Y.; Huang, F.; Zhang, B. Cross-modality binary code learning via fusion similarity hashing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7380–7388.
35. Ding, G.; Guo, Y.; Zhou, J. Collective matrix factorization hashing for multimodal data. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2083–2090.
36. Wang, W.; Shen, Y.; Zhang, H.; Yao, Y.; Liu, L. Set and rebase: Determining the semantic graph connectivity for unsupervised cross modal hashing. In Proceedings of the International Joint Conference on Artificial Intelligence, Yokohama, Japan, 7–15 January 2021; pp. 853–859.
37. Wang, D.; Wang, Q.; Gao, X. Robust and flexible discrete hashing for cross-modal similarity search. IEEE Trans. Circuits Syst. Video Technol. 2018, 28, 2703–2715.
38. Smeulders, A.W.M.; Worring, M.; Santini, S.; Gupta, A.; Jain, R. Content-based image retrieval at the end of the early years. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1349–1380.
39. Tang, J.; Wang, K.; Shao, L. Supervised matrix factorization hashing for cross-modal retrieval. IEEE Trans. Image Process. 2016, 25, 3157–3166.
40. Wang, D.; Gao, X.; Wang, X.; He, L. Label consistent matrix factorization hashing for large-scale cross-modal similarity search. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 2466–2479.
41. Fang, Y.; Ren, Y. Supervised discrete cross-modal hashing based on kernel discriminant analysis. Pattern Recognit. 2020, 98, 107062.
42. Shen, H.T.; Liu, L.; Yang, Y.; Xu, X.; Huang, Z.; Shen, F.; Hong, R. Exploiting subspace relation in semantic labels for cross-modal hashing. IEEE Trans. Knowl. Data Eng. 2020, 33, 3351–3365.
43. Zhang, D.; Li, W.J. Large-scale supervised multimodal hashing with semantic correlation maximization. In Proceedings of the AAAI Conference on Artificial Intelligence, Quebec City, QC, Canada, 27–31 July 2014; pp. 1–7.
44. Mandal, D.; Chaudhury, K.N.; Biswas, S. Generalized semantic preserving hashing for cross-modal retrieval. IEEE Trans. Image Process. 2019, 28, 102–112.
45. Meng, M.; Wang, H.; Yu, J.; Chen, H.; Wu, J. Asymmetric supervised consistent and specific hashing for cross-modal retrieval. IEEE Trans. Image Process. 2021, 30, 986–1000.
46. Song, G.; Su, H.; Huang, K. Deep self-enhancement hashing for robust multi-label cross-modal retrieval. Pattern Recognit. 2024, 147, 110079.
47. Karpathy, A.; Li, F.F. Deep visual-semantic alignments for generating image descriptions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3128–3137.
48. Long, M.; Cao, Y.; Wang, J.; Jordan, M. Learning transferable features with deep adaptation networks. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 97–105.
49. Ma, X.; Zhang, T.; Xu, C. Multi-level correlation adversarial hashing for cross-modal retrieval. IEEE Trans. Multimed. 2020, 22, 3101–3114.
50. Xie, D.; Deng, C.; Li, C.; Liu, X.; Tao, D. Multi-task consistency-preserving adversarial hashing for cross-modal retrieval. IEEE Trans. Image Process. 2020, 29, 3626–3637.
51. Wu, L.; Wang, Y.; Shao, L. Cycle-consistent deep generative hashing for cross-modal retrieval. IEEE Trans. Image Process. 2019, 28, 1602–1612.
52. Yang, E.; Deng, C.; Liu, W.; Liu, X.; Tao, D.; Gao, X. Pairwise relationship guided deep hashing for cross-modal retrieval. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; pp. 1618–1625.
53. Liong, V.E.; Lu, J.; Tan, Y.P.; Zhou, J. Cross-modal deep variational hashing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4077–4085.
54. Deng, C.; Chen, Z.; Liu, X.; Gao, X.; Tao, D. Triplet-based deep hashing network for cross-modal retrieval. IEEE Trans. Image Process. 2018, 27, 3893–3903.
55. Zhao, Z.; Wang, L.; Liu, H. Efficient spectral feature selection with minimum redundancy. In Proceedings of the AAAI Conference on Artificial Intelligence, Atlanta, GA, USA, 11–15 July 2010; pp. 673–678.
56. He, R.; Tan, T.N.; Wang, L.; Zheng, W. ℓ2,1 regularized correntropy for robust feature selection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 2504–2511.
57. Nie, F.; Huang, H.; Cai, X.; Ding, C.H. Efficient and robust feature selection via joint ℓ2,1-norms minimization. In Proceedings of the Annual Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 6–9 December 2010; pp. 1813–1821.
58. Zhen, Y.; Yeung, D.Y. A probabilistic model for multimodal hash function learning. In Proceedings of the ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Beijing, China, 12–16 August 2012; pp. 940–948.
59. Tseng, P. Convergence of a block coordinate descent method for nondifferentiable minimization. J. Optim. Theory Appl. 2001, 109, 475–494.
60. He, R.; Zheng, W.; Hu, B. Maximum correntropy criterion for robust face recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 1561–1576.
61. Nikolova, M.; Ng, M.K. Analysis of half-quadratic minimization methods for signal and image recovery. SIAM J. Sci. Comput. 2005, 27, 937–966.
62. Wang, K.; He, R.; Wang, L.; Wang, W.; Tan, T. Joint feature selection and subspace learning for cross-modal retrieval. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 2010–2023.
63. Shen, F.; Shen, C.; Liu, W.; Shen, H.T. Supervised discrete hashing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 37–45.
64. Sun, R.; Sun, H.; Yao, T. A SVD- and quantization based semi-fragile watermarking technique for image authentication. In Proceedings of the International Conference on Signal Processing, Beijing, China, 26–30 August 2002; pp. 1592–1595.
65. He, K.; Sun, J. Convolutional neural networks at constrained time cost. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 5353–5360.
66. Rasiwasia, N.; Pereira, J.C.; Coviello, E.; Doyle, G.; Lanckriet, G.R.G.; Levy, R.; Vasconcelos, N. A new approach to cross-modal multimedia retrieval. In Proceedings of the ACM International Conference on Multimedia, Firenze, Italy, 25–29 October 2010; pp. 251–260.
67. Huiskes, M.J.; Lew, M.S. The MIR Flickr retrieval evaluation. In Proceedings of the 1st ACM International Conference on Multimedia Information Retrieval, Vancouver, BC, Canada, 30–31 October 2008; pp. 39–43.
68. Chua, T.S.; Tang, J.; Hong, R.; Li, H.; Luo, Z.; Zheng, Y. NUS-WIDE: A real-world web image database from National University of Singapore. In Proceedings of the ACM International Conference on Image and Video Retrieval, Santorini, Greece, 8–10 July 2009; pp. 1–9.
69. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.
70. Peng, Y.; Huang, X.; Qi, J. Cross-media shared representation by hierarchical learning with multiple deep networks. In Proceedings of the International Joint Conference on Artificial Intelligence, New York, NY, USA, 9–15 July 2016; pp. 3846–3853.
71. Qin, Q.; Huo, Y.; Huang, L. Deep neighborhood-preserving hashing with quadratic spherical mutual information for cross-modal retrieval. IEEE Trans. Multimed. 2024, 26, 6361–6374.
72. Kang, C.; Liao, S.; Li, Z.; Cao, Z.; Xiong, G. Learning deep semantic embeddings for cross-modal retrieval. In Proceedings of the Asian Conference on Machine Learning, Seoul, Republic of Korea, 15–17 November 2017; pp. 471–486.

| Symbol | Definition |
|---|---|
| | The n training instances in image (text) modality |
| Y | Class label information of n training instances |
| B | Binary hash codes of n training instances |
| | Deep learning feature matrix of n training instances in image (text) modality |
| | Projection matrix for feature selection in image (text) modality |
| | Binary hash codes of the ith training instance in image (text) modality |
| | Network parameter of the deep neural network for image (text) modality |
| | The ith training instance in image (text) modality |
| | Deep learning feature of one training instance in image (text) modality |

| Name | Type | Input Size | Filter Number/Size/Stride/Pad | Activation Function |
|---|---|---|---|---|
| Conv1 | Convolution | 227 × 227 | 96/11 × 11/4/0 | ReLU |
| Conv2 | Convolution | 27 × 27 | 256/5 × 5/1/2 | ReLU |
| Conv3 | Convolution | 13 × 13 | 384/3 × 3/1/1 | ReLU |
| Conv4 | Convolution | 13 × 13 | 384/3 × 3/1/1 | ReLU |
| Conv5 | Convolution | 13 × 13 | 256/3 × 3/1/1 | ReLU |
| Fc6 | Fully connected | 6 × 6 | N/A | ReLU |
| Fc7 | Fully connected | 4096 × 1 | N/A | TanH |
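
The input sizes in the table above (227 → 27 → 13 → 6) follow from the standard output-size formula ⌊(s + 2p − k)/t⌋ + 1 for side length s, kernel k, stride t and padding p. This holds provided that 3 × 3 max pooling with stride 2 follows Conv1, Conv2 and Conv5, as in AlexNet [23]. The table does not list pooling layers, so that placement is an assumption. The short check below traces the sizes. `conv_out`, `LAYERS` and `trace_sizes` are hypothetical names.

```python
def conv_out(size, kernel, stride, pad):
    """Output side length of a square convolution or pooling window (floor division)."""
    return (size + 2 * pad - kernel) // stride + 1


# (layer, kernel, stride, pad). Conv rows follow the table; the three 3x3/2 max-pooling
# layers are an assumption (AlexNet-style placement), since the table does not list them.
LAYERS = [
    ("Conv1", 11, 4, 0),
    ("Pool1", 3, 2, 0),
    ("Conv2", 5, 1, 2),
    ("Pool2", 3, 2, 0),
    ("Conv3", 3, 1, 1),
    ("Conv4", 3, 1, 1),
    ("Conv5", 3, 1, 1),
    ("Pool5", 3, 2, 0),
]


def trace_sizes(size=227):
    """Return the output side length after each layer, starting from a 227 x 227 input."""
    sizes = {}
    for name, kernel, stride, pad in LAYERS:
        size = conv_out(size, kernel, stride, pad)
        sizes[name] = size
    return sizes


sizes = trace_sizes()
for name, side in sizes.items():
    print(f"{name}: {side} x {side}")
fc6_inputs = sizes["Pool5"] ** 2 * 256  # Conv5 has 256 filters
print("Fc6 input length:", fc6_inputs)
```

Under this assumption, Fc6 sees a 6 × 6 × 256 volume (9216 values). The 4096 × 1 input of Fc7 implies that Fc6 has 4096 nodes.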

| Name | Type | Input Size | Number of Nodes | Activation Function |
|---|---|---|---|---|
| Fc1 | Fully connected | | 4096 | ReLU |
| Fc2 | Fully connected | 4096 × 1 | 4096 | ReLU |
| Fc3 | Fully connected | 4096 × 1 | | TanH |
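
The text network in the table above is a three-layer fully connected stack (ReLU, ReLU, TanH). The table gives neither the text input dimension nor the number of Fc3 nodes, so `d_in` and `d_out` below are hypothetical parameters. The random He-style initialization is likewise an illustrative choice rather than the authors' setting. This minimal forward-pass sketch shows how a bag-of-words vector is mapped to features bounded in [−1, 1].

```python
import numpy as np


def init_text_mlp(d_in, d_out, hidden=4096, seed=0):
    """Random (He-style) weights for an Fc1-Fc2-Fc3 stack; d_in and d_out are hypothetical."""
    rng = np.random.default_rng(seed)
    dims = [d_in, hidden, hidden, d_out]
    return [(rng.normal(scale=np.sqrt(2.0 / a), size=(a, b)), np.zeros(b))
            for a, b in zip(dims[:-1], dims[1:])]


def text_forward(X, params):
    """Map a batch of bag-of-words vectors X (batch, d_in) to features in [-1, 1]."""
    (W1, b1), (W2, b2), (W3, b3) = params
    h = np.maximum(X @ W1 + b1, 0.0)  # Fc1 + ReLU
    h = np.maximum(h @ W2 + b2, 0.0)  # Fc2 + ReLU
    return np.tanh(h @ W3 + b3)       # Fc3 + TanH


# Usage with a reduced hidden width so the example runs quickly.
rng = np.random.default_rng(0)
X = (rng.random((5, 1000)) < 0.01).astype(float)  # sparse binary "bag of words"
params = init_text_mlp(d_in=1000, d_out=32, hidden=256)
G = text_forward(X, params)
print(G.shape)
```

The final TanH keeps the text features in the same range as binary codes in {−1, +1}, which is the usual reason for ending a hashing branch with that activation.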

| Task | Method | Wiki 16 bits | Wiki 32 bits | Wiki 64 bits | Wiki 128 bits | MIRFlickr 16 bits | MIRFlickr 32 bits | MIRFlickr 64 bits | MIRFlickr 128 bits | NUS-WIDE 16 bits | NUS-WIDE 32 bits | NUS-WIDE 64 bits | NUS-WIDE 128 bits | MSCOCO 16 bits | MSCOCO 32 bits | MSCOCO 64 bits | MSCOCO 128 bits |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Img-to-Txt | CMFH [35] | 0.1900 | 0.2059 | 0.2014 | 0.1853 | 0.5883 | 0.5858 | 0.5891 | 0.5899 | 0.4962 | 0.5006 | 0.5325 | 0.5314 | 0.3660 | 0.3690 | 0.3700 | 0.3650 |
| | SCM [43] | 0.2393 | 0.2303 | 0.2332 | 0.2510 | 0.6129 | 0.6345 | 0.6436 | 0.6581 | 0.5097 | 0.5248 | 0.5434 | 0.5670 | 0.5670 | 0.6150 | 0.6430 | 0.6600 |
| | SMFH [39] | 0.2276 | 0.2060 | 0.2129 | 0.2321 | 0.5672 | 0.5645 | 0.5691 | 0.5506 | 0.5524 | 0.5655 | 0.5676 | 0.5744 | 0.6210 | 0.6440 | 0.5250 | 0.5620 |
| | PRDH [52] | 0.2437 | 0.2372 | 0.2464 | 0.2600 | 0.6383 | 0.6633 | 0.6831 | 0.6710 | 0.6104 | 0.6200 | 0.6318 | 0.6377 | - | - | - | - |
| | CDQ [18] | 0.2398 | 0.2495 | 0.2576 | 0.2629 | 0.6477 | 0.6676 | 0.7005 | 0.7052 | 0.6055 | 0.6156 | 0.6251 | 0.6413 | 0.6120 | 0.6070 | 0.6420 | 0.6730 |
| | DCMH [7] | 0.2364 | 0.2559 | 0.2660 | 0.2642 | 0.6728 | 0.6965 | 0.7101 | 0.7220 | 0.6139 | 0.6315 | 0.6439 | 0.6436 | 0.6530 | 0.6678 | 0.6909 | 0.7100 |
| | DNPH [71] | - | - | - | - | 0.7330 | 0.7240 | 0.7450 | 0.7500 | 0.6430 | 0.6620 | 0.6730 | 0.6810 | 0.6727 | 0.6903 | 0.6860 | 0.6989 |
| | RSDDH (Ours) | 0.2556 | 0.2909 | 0.2819 | 0.2801 | 0.6909 | 0.7367 | 0.7503 | 0.7518 | 0.6509 | 0.6992 | 0.7179 | 0.7201 | 0.6954 | 0.7135 | 0.7212 | 0.7210 |
| Txt-to-Img | CMFH [35] | 0.5875 | 0.6135 | 0.6053 | 0.6124 | 0.5876 | 0.5868 | 0.5919 | 0.5906 | 0.5027 | 0.5106 | 0.5311 | 0.5405 | 0.3460 | 0.3460 | 0.3460 | 0.3460 |
| | SCM [43] | 0.3492 | 0.4035 | 0.3945 | 0.4046 | 0.5936 | 0.6081 | 0.6242 | 0.6482 | 0.5188 | 0.5321 | 0.5515 | 0.5749 | 0.6080 | 0.6120 | 0.6330 | 0.6490 |
| | SMFH [39] | 0.6136 | 0.6453 | 0.6531 | 0.6090 | 0.6576 | 0.6503 | 0.6600 | 0.6643 | 0.5749 | 0.5859 | 0.5959 | 0.5901 | 0.6270 | 0.6600 | 0.5520 | 0.5950 |
| | PRDH [52] | 0.6206 | 0.6296 | 0.6374 | 0.6469 | 0.6588 | 0.6672 | 0.6656 | 0.7320 | 0.6102 | 0.6156 | 0.6338 | 0.6481 | - | - | - | - |
| | CDQ [18] | 0.6406 | 0.6485 | 0.6675 | 0.6681 | 0.6968 | 0.7212 | 0.7134 | 0.7194 | 0.6457 | 0.6697 | 0.6319 | 0.6475 | 0.5890 | 0.6480 | 0.6250 | 0.6640 |
| | DCMH [7] | 0.6321 | 0.6338 | 0.6510 | 0.6566 | 0.6860 | 0.7038 | 0.7288 | 0.7482 | 0.6321 | 0.6518 | 0.6625 | 0.6663 | 0.6415 | 0.6789 | 0.7023 | 0.7113 |
| | DNPH [71] | - | - | - | - | 0.7540 | 0.7410 | 0.7910 | 0.8010 | 0.7000 | 0.7100 | 0.7240 | 0.7260 | 0.6562 | 0.6860 | 0.6928 | 0.7100 |
| | RSDDH (Ours) | 0.6346 | 0.6808 | 0.6959 | 0.6976 | 0.7383 | 0.7436 | 0.7827 | 0.8067 | 0.6536 | 0.7264 | 0.7250 | 0.7223 | 0.6864 | 0.7052 | 0.7101 | 0.7222 |

| Task | Method | Wiki 16 bits | Wiki 32 bits | Wiki 64 bits | Wiki 128 bits | MIRFlickr 16 bits | MIRFlickr 32 bits | MIRFlickr 64 bits | MIRFlickr 128 bits | NUS-WIDE 16 bits | NUS-WIDE 32 bits | NUS-WIDE 64 bits | NUS-WIDE 128 bits |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Img-to-Txt | CMFH [35] | 0.2108 | 0.2257 | 0.2267 | 0.2051 | 0.6305 | 0.6368 | 0.6426 | 0.6493 | 0.5476 | 0.5526 | 0.5691 | 0.5708 |
| | SCM [43] | 0.2425 | 0.2359 | 0.2447 | 0.2586 | 0.6505 | 0.6568 | 0.6741 | 0.6771 | 0.5517 | 0.5635 | 0.5792 | 0.5889 |
| | SMFH [39] | 0.2386 | 0.2218 | 0.2354 | 0.2513 | 0.6138 | 0.6227 | 0.6379 | 0.6461 | 0.5869 | 0.6231 | 0.6334 | 0.6474 |
| | RSDDH (Ours) | 0.2556 | 0.2909 | 0.2819 | 0.2801 | 0.6909 | 0.7367 | 0.7503 | 0.7518 | 0.6509 | 0.6992 | 0.7179 | 0.7201 |
| Txt-to-Img | CMFH [35] | 0.5986 | 0.6279 | 0.6197 | 0.6328 | 0.6384 | 0.6419 | 0.6535 | 0.6618 | 0.5565 | 0.5583 | 0.5611 | 0.5769 |
| | SCM [43] | 0.3708 | 0.4362 | 0.4461 | 0.4583 | 0.6523 | 0.6615 | 0.6751 | 0.7212 | 0.5671 | 0.5693 | 0.5907 | 0.5826 |
| | SMFH [39] | 0.6212 | 0.6637 | 0.6629 | 0.6301 | 0.6712 | 0.6883 | 0.7041 | 0.7069 | 0.6115 | 0.6324 | 0.6528 | 0.6662 |
| | RSDDH (Ours) | 0.6346 | 0.6808 | 0.6959 | 0.6976 | 0.7383 | 0.7436 | 0.7827 | 0.8067 | 0.6536 | 0.7264 | 0.7250 | 0.7223 |

| Method | Wiki | MIRFlickr | NUS-WIDE |
|---|---|---|---|
| CMFH [35] | 7.59 | 13.95 | 14.36 |
| SCM [43] | 5.41 | 8.23 | 8.61 |
| SMFH [39] | 10.18 | 20.67 | 21.19 |
| PRDH [52] | | | |
| CDQ [18] | | | |
| DCMH [7] | | | |
| RSDDH (Ours) | | | |

| Task | Training Set Size (MIRFlickr) | MAP (MIRFlickr) | Training Set Size (NUS-WIDE) | MAP (NUS-WIDE) |
|---|---|---|---|---|
| Img-to-Txt | 10,000 | 0.7367 | 10,500 | 0.6992 |
| | 15,000 | 0.7412 | 14,700 | 0.7035 |
| | 20,000 | 0.7528 | 21,000 | 0.7106 |
| Txt-to-Img | 10,000 | 0.7436 | 10,500 | 0.7264 |
| | 15,000 | 0.7505 | 14,700 | 0.7328 |
| | 20,000 | 0.7637 | 21,000 | 0.7413 |

| Training Set Size (MIRFlickr) | Training Time (Seconds) | Training Set Size (NUS-WIDE) | Training Time (Seconds) |
|---|---|---|---|
| 10,000 | | 10,500 | |
| 15,000 | | 14,700 | |
| 20,000 | | 21,000 | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Dong, X.; Wu, F.; Zhai, J.; Ma, F.; Wang, G.; Liu, T.; Dong, X.; Jing, X.-Y. Robust Supervised Deep Discrete Hashing for Cross-Modal Retrieval. Technologies 2025, 13, 383. https://doi.org/10.3390/technologies13090383

Dong X, Wu F, Zhai J, Ma F, Wang G, Liu T, Dong X, Jing X-Y. Robust Supervised Deep Discrete Hashing for Cross-Modal Retrieval. Technologies. 2025; 13(9):383. https://doi.org/10.3390/technologies13090383

Chicago/Turabian Style: Dong, Xiwei, Fei Wu, Junqiu Zhai, Fei Ma, Guangxing Wang, Tao Liu, Xiaogang Dong, and Xiao-Yuan Jing. 2025. "Robust Supervised Deep Discrete Hashing for Cross-Modal Retrieval" Technologies 13, no. 9: 383. https://doi.org/10.3390/technologies13090383

APA Style: Dong, X., Wu, F., Zhai, J., Ma, F., Wang, G., Liu, T., Dong, X., & Jing, X.-Y. (2025). Robust Supervised Deep Discrete Hashing for Cross-Modal Retrieval. Technologies, 13(9), 383. https://doi.org/10.3390/technologies13090383