1. Introduction

Ensuring that urban environments are accessible and safe for people with mobility impairments is a significant societal and technological challenge. According to the World Health Organization, approximately 75 million people worldwide rely on wheelchairs for daily mobility, roughly 1% of the global population [1,2]. These individuals often face barriers in city streets and infrastructure, from uneven sidewalks to inadequate crossing times at intersections [3]. Smart city initiatives and intelligent transportation systems are increasingly looking to advanced computer vision and AI solutions to monitor, assist, and improve urban accessibility. For example, traffic signal control and autonomous vehicles need to reliably detect pedestrians using wheelchairs or other mobility aids to ensure their safety and inclusion [4]. However, developing vision models that recognize mobility-impaired pedestrians is hindered by a critical lack of representative training data [5].

Most established urban scene datasets in computer vision, such as Cityscapes [6] and KITTI [7], were created with autonomous driving in mind and provide extensive annotations for cars, pedestrians, and other common road entities. While these benchmarks have catalyzed progress in object detection and segmentation, they have little to no explicit representation of people using mobility aids [8]. Pedestrians in wheelchairs or using walkers or other assistive devices are highly underrepresented in common datasets, if present at all [5]. This gap means that state-of-the-art detectors trained on such data may perform poorly on disabled pedestrians, an issue with profound safety implications. In fact, analyses of bias in pedestrian detection highlight that a lack of disability representation in training datasets can lead to vision models that systematically overlook or misclassify wheelchair users [9]. This underrepresentation has been flagged as a serious concern by safety advocates, as it risks embedding ableist biases into algorithms deployed in autonomous vehicles and smart city systems [5]. There is a pressing need for datasets and methods that specifically target the inclusion of mobility-impaired individuals to improve model fairness and reliability in urban accessibility contexts.

Acquiring such specialized real-world data, however, poses substantial practical and ethical challenges [5]. Images of people with disabilities in public spaces are relatively rare and can be difficult to capture at scale due to privacy concerns and the dignity of subjects [5,9]. Manual annotation of urban scene imagery at pixel-level detail is notoriously labor-intensive; for instance, creating the Cityscapes dataset required over 1.5 h of human labeling per image on average [6]. Capturing enough instances of mobility aid users in varied scenarios (different streets, weather, lighting, and occlusions) would require enormous data collection efforts and annotation time, which is often infeasible [5]. Moreover, relying solely on real-world data may limit the diversity of scenarios; certain dangerous or uncommon situations (e.g., a wheelchair user in a busy intersection at night or in adverse weather) cannot be easily or ethically recorded for training data. These limitations motivate exploring alternative data generation approaches that can fill the gap.

Synthetic data generation has emerged as a promising solution to overcome data scarcity in computer vision. By leveraging computer graphics and simulation, researchers have created virtual urban environments to render labeled images automatically. Early examples include the SYNTHIA dataset, which provides 9400 photo-realistic frames of a virtual city with pixel-level semantic annotations, and a GTA5-based dataset with over 24,000 annotated images [10]. These synthetic datasets demonstrated that models trained on or augmented with simulated images can achieve competitive performance and even generalize to real imagery under certain conditions. The key advantage of simulation is the ability to obtain “pixel-perfect” ground truth for each image without manual labeling: every object’s mask, class, and even depth can be exported directly from the 3D engine [5]. Furthermore, synthetic data allow controlled variation of conditions (lighting, viewpoint, object positions) to systematically enrich the training distribution. Despite these benefits, existing synthetic urban datasets were not designed with urban accessibility in mind. They typically model generic traffic scenes and standard pedestrians, failing to include detailed representations of mobility aids or the true complexity of specific real-world city environments [10].

Digital twin technology offers a compelling opportunity to elevate synthetic data generation for urban accessibility applications. A digital twin is a high-fidelity virtual replica of a real-world environment; in this context, it is a 3D model of a city or district that geometrically and visually matches the actual urban space [11,12]. Recent advances in UAV-based photogrammetry and 3D scanning have made it feasible to create large-scale city models with sub-meter detail [13]. By deploying drones or other sensors, one can capture thousands of aerial and street-level photographs of city streets and reconstruct them into textured 3D meshes that mirror real buildings, roads, and sidewalks [14]. These photorealistic digital twins, when imported into simulation engines, provide an authentic backdrop that ensures that synthetic images resemble real urban scenes in appearance and layout. Crucially, a digital twin-based approach can incorporate city-specific characteristics (architectural styles, road geometry, signage, etc.) and thus produce data that are geographically relevant for applications in that city [5]. In the context of accessibility, a digital twin enables simulation of mobility scenarios in situ. For example, one can virtually place a wheelchair user on an actual city sidewalk model to study visibility with respect to real parked cars, vegetation, and infrastructure. This level of realism and location specificity goes beyond earlier synthetic datasets based on entirely fictional cities.

Previous research conducted by our group offered a systematic review of computer vision approaches applied to urban accessibility, revealing critical gaps in current datasets, particularly the lack of representation of individuals with mobility impairments [5]. That study underscored how this underrepresentation propagates structural bias in AI models, leading to decreased performance in detecting wheelchair users and others utilizing mobility aids. It also highlighted a broader challenge: the absence of scalable, ethically responsible methods for acquiring representative, high-quality data to train inclusive AI systems. These insights pointed to an urgent research need: developing novel data generation strategies capable of capturing the complexity, diversity, and spatial realism of real-world accessibility scenarios.

Motivated by these findings, the present study introduces SYNTHUA-DT (Synthetic Urban Accessibility—Digital Twin), a methodological framework designed to fill that gap through automated synthetic dataset generation rooted in digital twin technologies. Unlike prior synthetic datasets developed for generic urban scenes, SYNTHUA-DT systematically integrates UAV-based photogrammetry, high-fidelity 3D reconstruction, and simulation in Unreal Engine to create annotated visual data tailored specifically for the detection of mobility aid users in complex urban contexts. The proposed pipeline is designed to be generalizable across cities and adaptable to different use-cases, ensuring broad applicability.

The process begins with the acquisition of aerial and ground imagery of a real urban area using drones and cameras, which are then processed through photogrammetric reconstruction to generate a geometrically accurate digital twin encompassing buildings, streets, terrain, and infrastructure. This digital twin is subsequently imported into the Unreal Engine simulation environment, where virtual human avatars equipped with various mobility aids (e.g., wheelchairs, walkers, crutches) are procedurally placed and animated within the environment. Leveraging the simulation engine’s capacity to render diverse conditions—such as varying lighting, crowd density, occlusions, and weather—the framework enables the generation of synthetic sensor data, including photorealistic images with precise ground truth annotations (segmentation masks, bounding boxes, depth, and pose).

Through iterative simulation runs, SYNTHUA-DT produces a high-volume, pixel-perfect dataset representing mobility-impaired individuals in realistic urban scenarios, many of which would be infeasible or ethically problematic to capture in the real world. In doing so, this framework directly operationalizes the research directions identified in our prior review, bridging the gap between theoretical critique and practical solutions in the pursuit of inclusive, fairness-aware AI for smart cities.

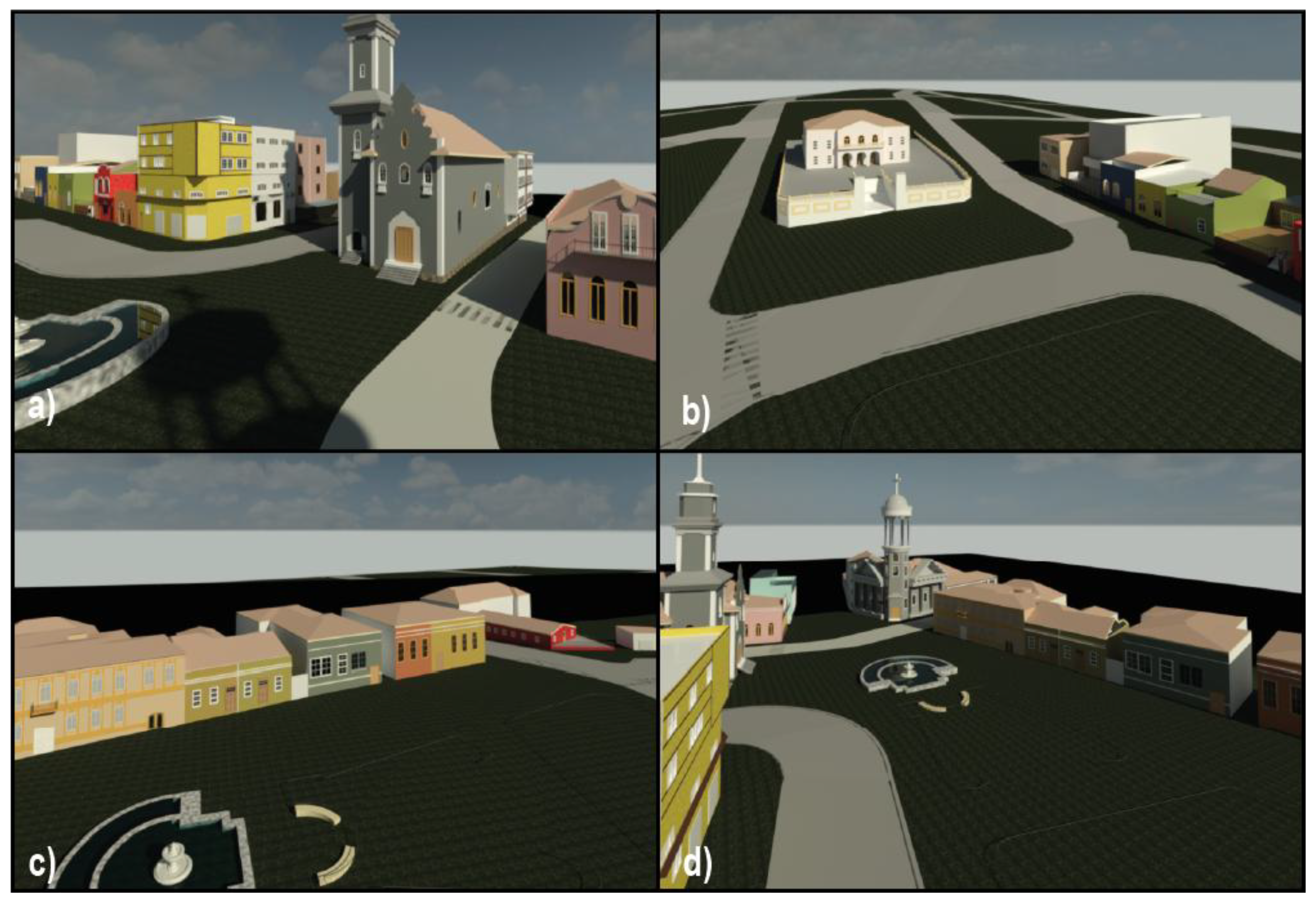

The focus of our initial investigation with SYNTHUA-DT is on the detection of wheelchair users in urban scenes, a critical task for assistive technologies and autonomous navigation systems [5]. To validate the framework’s effectiveness, we developed a case study using the city of Curitiba, Brazil, as a testbed. Curitiba is internationally recognized for its innovative urban planning and accessibility initiatives, making it an ideal candidate for a digital twin-driven analysis. A section of the city’s downtown was scanned using UAV photogrammetry and laser scanning, yielding a detailed digital twin model of its streetscape. Within this virtual Curitiba, we populated various scenarios with synthetic pedestrians, including numerous wheelchair users in contexts such as crossing busy intersections, boarding buses, and traversing curb cuts. By rendering images from multiple camera viewpoints (mimicking street CCTV, vehicle dashcams, and aerial drone perspectives), we constructed a diverse synthetic dataset of Curitiba’s urban accessibility situations. Examples of this dataset are presented later (see dataset illustration in the Results section), showcasing the photorealistic alignment of the digital twin imagery with real city visuals and the corresponding pixel-perfect segmentation of a wheelchair user and surrounding objects. The dataset includes a wide range of simulated conditions (varying lighting, occlusions, crowd densities, and street-level, aerial, and vehicular viewpoints) captured from photorealistic renderings of a real city’s digital twin. Each frame is paired with automatically generated ground truth annotations, including semantic segmentation masks and object-level metadata. While this work does not include model training or evaluation, the dataset is structured to support downstream tasks such as object detection, pedestrian tracking, and accessibility analysis. The pipeline demonstrates a significant reduction in manual annotation time and effort, showcasing its efficiency and potential scalability for future development of inclusive AI systems.

This paper addresses a clear research gap at the intersection of smart cities, computer vision, and accessibility: the need for realistic, scalable training data to enable AI systems to better serve people with disabilities. Our contributions are threefold:

Generalizable Synthetic Data Framework: We develop SYNTHUA-DT, a novel framework that integrates drone-based photogrammetry, digital twin construction, and simulation in Unreal Engine to generate synthetic datasets with automatic annotations. This framework is replicable and not tied to a specific locale, providing a template for other cities or mobility scenarios to create their own data for accessibility-focused vision models.

Urban Accessibility Dataset and Analysis: Using SYNTHUA-DT, we create a first-of-its-kind annotated dataset of urban mobility aid users (wheelchair pedestrians) in a realistic city environment. We provide a detailed analysis of the dataset’s diversity and fidelity, and we demonstrate that training on this synthetic data yields improved detection performance for mobility-impaired individuals compared to models trained on standard benchmarks. The dataset and generation tools will be made available to the research community to encourage further developments in inclusive AI.

Case Study Validation on Curitiba: We present a comprehensive case study of Curitiba, Brazil, as a proof of concept for the framework. We describe the end-to-end pipeline implementation—from UAV data capture to 3D reconstruction and simulation—and validate the effectiveness of SYNTHUA-DT by integrating the synthetic data into a pedestrian detection task. This case study showcases the framework’s real-world applicability and highlights practical considerations (e.g., computational costs, photogrammetry accuracy, domain gap) when deploying digital twin-based data generation in an actual city context.

The remainder of this article is organized as follows. The Introduction contextualizes the challenges of data scarcity in urban accessibility applications and motivates the development of the SYNTHUA-DT framework. Section 2 (Materials and Methods) details the proposed pipeline, including UAV-based photogrammetric data capture, digital twin construction through BIM modeling, simulation environment setup in Unreal Engine, automatic annotation strategies, and computational optimization procedures. Section 3 (Results) presents the implementation of the framework in the city of Curitiba, Brazil, showcasing the generation of a large-scale synthetic dataset with semantic diversity and annotation fidelity. Section 4 (Discussion) explores the scalability, generalizability, and potential applications of SYNTHUA-DT in supporting accessibility-focused computer vision tasks, including considerations for integration with real-world datasets. Finally, Section 5 (Conclusions) summarizes the main contributions and outlines future directions for advancing inclusive and efficient urban scene understanding through synthetic data and digital twin technologies.

3. Results

This section presents the validation of the SYNTHUA-DT framework, structured around multiple dimensions: geometric and semantic fidelity of the generated digital twin, the diversity and statistical properties of the synthetic dataset, and comparative analysis with existing benchmarks. We also examine computational efficiency and scalability, emphasizing the framework’s practical relevance for accessibility-aware computer vision.

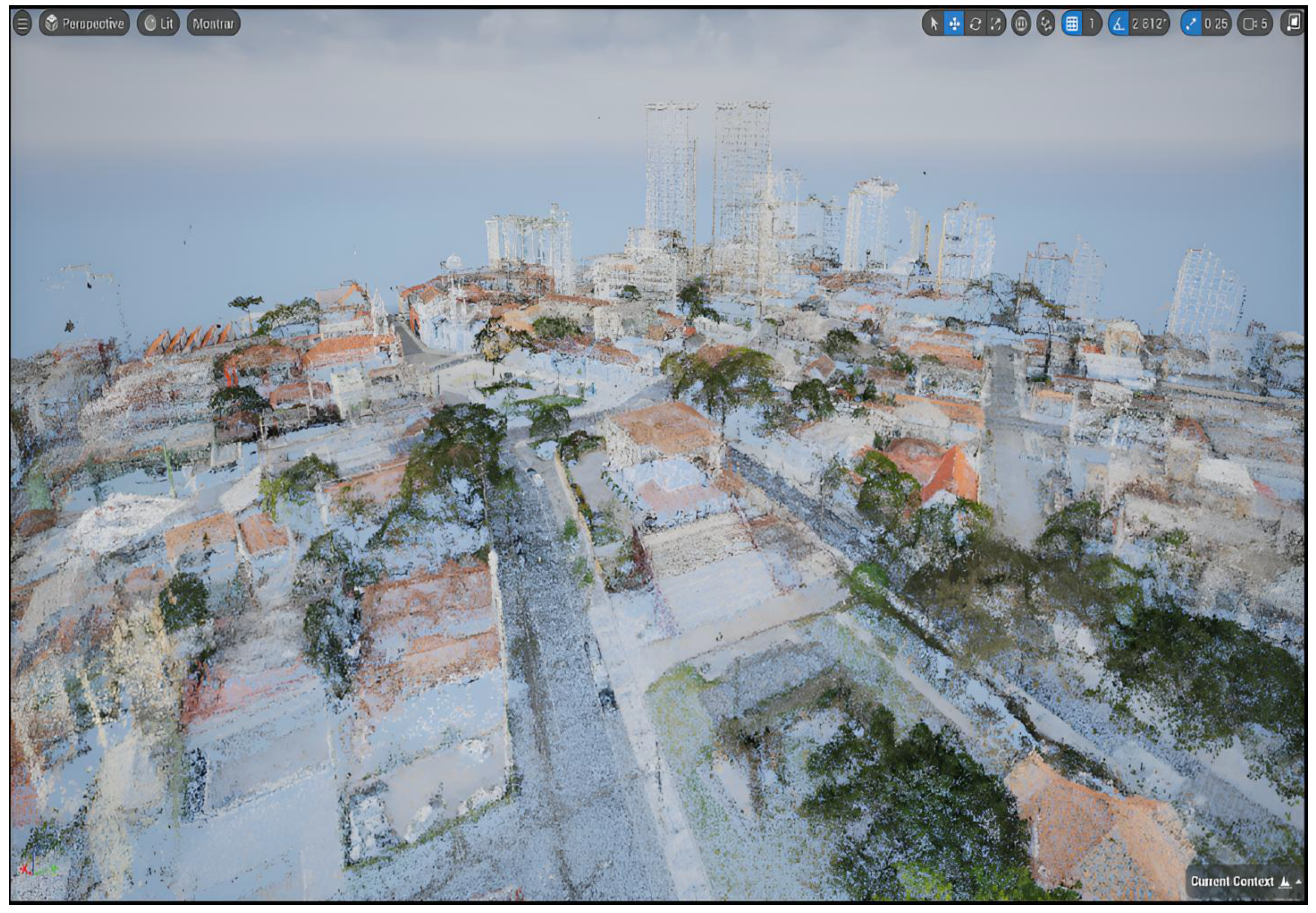

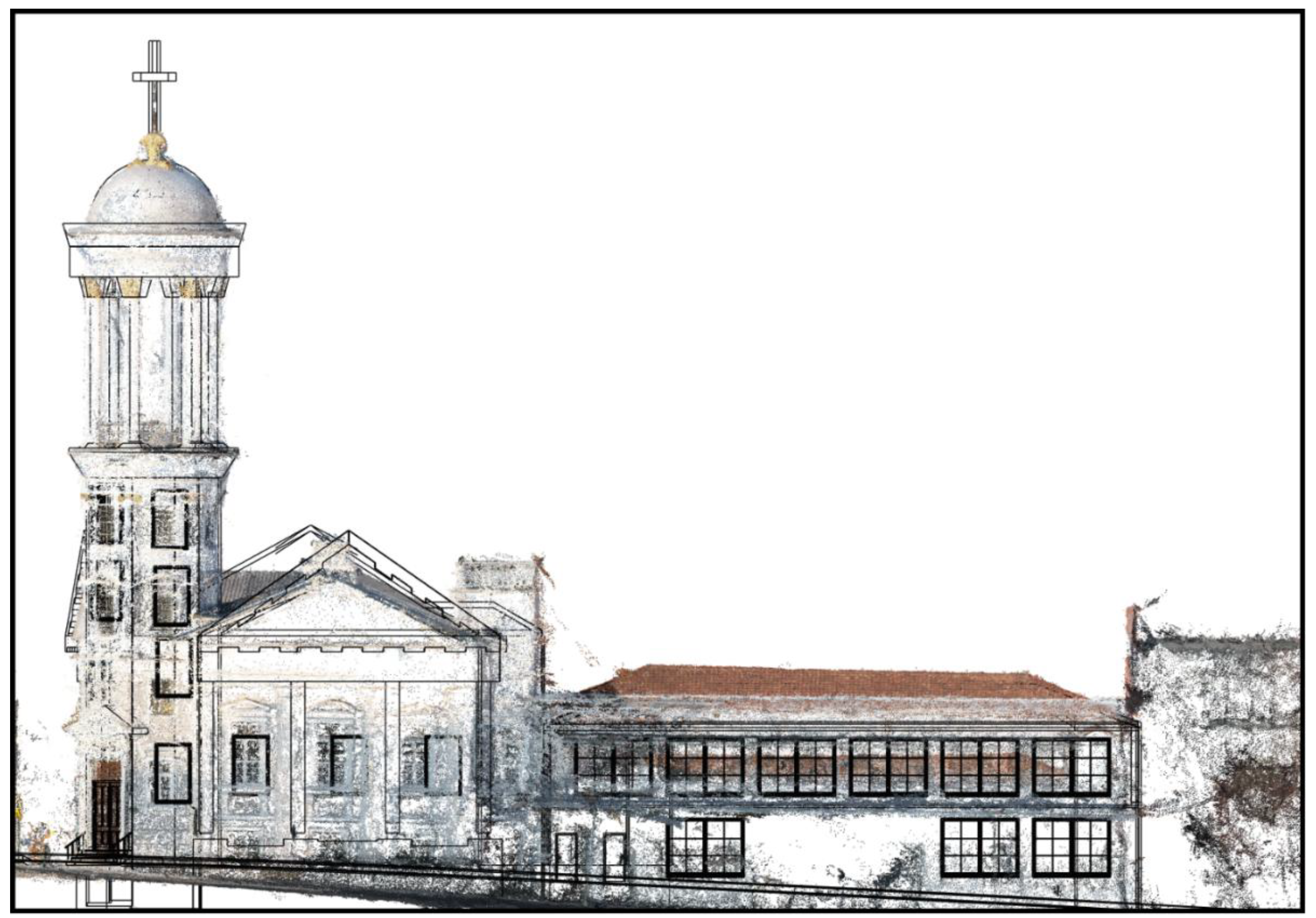

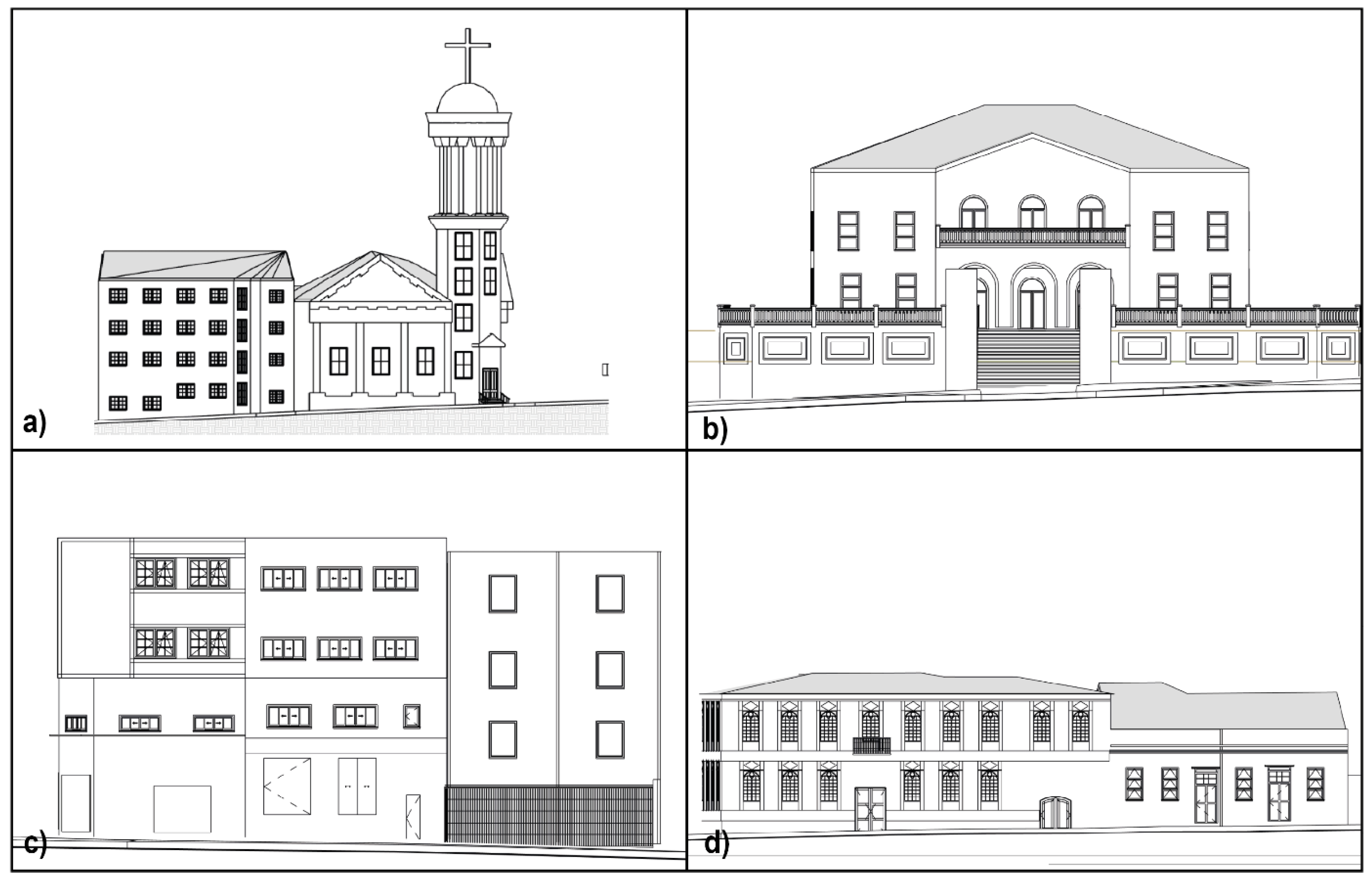

3.1. Digital Twin Fidelity and Visual Realism

The SYNTHUA-DT pipeline yielded a high-fidelity digital twin of the Curitiba city scene, reconstructed from UAV photogrammetry. Geometric fidelity was validated by comparing measurable distances and structures in the virtual model against real-world survey data, showing negligible deviation—errors remained under 5 cm on average in key features such as road widths and sidewalk boundaries. All principal urban elements (e.g., roads, sidewalks, buildings, curb ramps) observed in the physical environment were faithfully represented within the 3D reconstruction.

Semantic fidelity was similarly achieved by assigning each surface in the model a ground-truth class label (e.g., road, building, vegetation), enabling rendered images to carry pixel-level semantic segmentation natively. A side-by-side inspection with site photographs confirmed that the spatial configuration of the virtual scene aligns with real-world geometry to within a few pixels following calibration.

Visual realism was further enhanced through photogrammetric texturing: the digital twin captures material-level variations (asphalt, concrete, foliage) under plausible lighting and atmospheric conditions, thereby increasing its resemblance to actual street-level imagery.

Figure 11 illustrates a sample scene annotated with ground-truth segmentation masks, showcasing clearly delineated structures such as sidewalk edges and street furniture. This level of realism strengthens confidence that models trained on synthetic images from SYNTHUA-DT will generalize effectively to real urban scenes.

3.2. Synthetic Dataset Composition and Diversity

The SYNTHUA-DT pipeline generated a comprehensive synthetic dataset consisting of 22,412 photorealistic images, each annotated with pixel-level semantic labels across 37 distinct classes, totaling 1,592,250 segmented instances. All images were rendered at a resolution of 1280 × 960 pixels, ensuring high visual fidelity while maintaining computational efficiency for training and inference tasks.

To capture environmental variability reflective of real-world urban conditions, the dataset was stratified across multiple illumination and weather scenarios. The stratification is as follows:

Clear daytime scenes: 3342 images

Daytime fog: 8586 images

Daytime rain with fog: 7304 images

Clear nighttime: 1572 images

Nighttime with rain and fog: 13,287 images

These variations were deliberately included to simulate challenging perception environments such as low visibility, diffuse lighting, and adverse weather—factors that are critical in real-world accessibility contexts.

In addition to environmental diversity, scene complexity was quantified based on the number of annotated object instances per image. Images were categorized as follows:

Low-density scenes (≤100 instances): 2979 images

Medium-density scenes (101–500 instances): 4622 images

High-density scenes (>500 instances): 2396 images

This distribution ensures a balanced representation of both sparse and cluttered urban environments, enabling robust evaluation of instance segmentation models under varying degrees of occlusion and object overlap.

Notably, 9463 images (~42%) contain at least one class related to mobility impairment—such as wheelchairs, walkers, or assistive canes—demonstrating the dataset’s strong focus on inclusivity and its value for training accessibility-aware perception systems.

Camera viewpoints were also systematically varied to emulate real-world deployment scenarios, including pedestrian, wheelchair-level, vehicle-mounted, and aerial perspectives. This multi-perspective design expands the dataset’s applicability to a broad range of urban robotics and assistive AI tasks, ensuring diverse field-of-view geometries and object occlusion patterns.

3.3. Class Distribution and Statistical Analysis

To evaluate the semantic representativeness of the SYNTHUA-DT dataset, a comprehensive statistical analysis was conducted across the 37 annotated semantic classes. Table 1 summarizes, for each class, the total number of annotated instances, mean and median object area (in px²), standard deviation, maximum area, interquartile range (IQR), relative frequency (as a percentage of total instances), and the imbalance ratio (IR), defined as the ratio between the instance count of the most frequent class and that of the class under analysis.

The dataset includes a total of 1,592,250 segmented instances, with strong concentration in a few dominant categories. The three most frequent classes—railings (27.06%), buildings (18.92%), and people (13.55%)—collectively represent nearly 60% of all annotations. This distribution reflects the prevalence of these elements in typical urban environments.

In contrast, semantically important but visually underrepresented classes—such as wheelchairs (1.08%), walkers (0.88%), smart canes (0.80%), and tripod canes (0.44%)—are present in modest quantities but significantly more than in traditional datasets like Cityscapes or KITTI, which often lack such assistive device annotations altogether.

The tail end of the distribution includes classes such as buses (0.08%) and light poles (0.15%), each exhibiting high imbalance ratios—312.74 and 174.18, respectively. These ratios quantify the dataset’s inherent class imbalance and highlight the need for learning algorithms capable of addressing rare-category detection challenges.
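The per-class statistics described for Table 1 can be illustrated with a short sketch. This is not the authors' analysis code: the function name `class_statistics`, the input format (pairs of class name and area in px²), the use of the population standard deviation, and the toy values are all assumptions made for illustration.

```python
from collections import defaultdict
from statistics import mean, median, pstdev, quantiles


def class_statistics(instances):
    """Summarize (class_name, area_px2) pairs per class.

    Returns a dict: class -> count, mean, median, std, max, IQR,
    relative frequency (%) and imbalance ratio (IR).
    """
    areas = defaultdict(list)
    for class_name, area in instances:
        areas[class_name].append(area)

    total = sum(len(a) for a in areas.values())
    largest = max(len(a) for a in areas.values())  # count of the most frequent class

    stats = {}
    for class_name, a in areas.items():
        # quantiles() needs at least two samples; fall back to IQR = 0 otherwise.
        if len(a) >= 2:
            q1, _, q3 = quantiles(a, n=4)
            iqr = q3 - q1
        else:
            iqr = 0.0
        stats[class_name] = {
            "count": len(a),
            "mean": mean(a),
            "median": median(a),
            "std": pstdev(a),
            "max": max(a),
            "iqr": iqr,
            "freq_pct": 100.0 * len(a) / total,
            "ir": largest / len(a),  # most frequent class count / this class count
        }
    return stats


# Toy input (hypothetical values, not taken from the dataset).
toy = [("railing", 400)] * 6 + [("person", 900)] * 3 + [("wheelchair", 1200)]
summary = class_statistics(toy)
print(summary["wheelchair"]["ir"])  # 6.0 (6 railings for every wheelchair)
```

Under this definition the most frequent class always has IR = 1, and rarer classes have proportionally larger values.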

Notably, the dataset also reveals high variance in object size and shape, both across and within classes. For example:

The mean object area ranges from just 73 px² (smart cane) to 149,242 px² (road).

Several classes exhibit extremely broad IQR values, particularly sidewalks (27,582 px²) and vegetation (4201 px²), indicating heterogeneity in spatial extent due to object morphology and perspective distortion.

This long-tailed and high-variance distribution is typical of complex urban scenes and imposes significant challenges for semantic segmentation models. Effective mitigation may require the use of the following:

Class reweighting or focal loss functions;

Instance-level oversampling;

Curriculum learning strategies to gradually incorporate minority classes.
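Two of the mitigation strategies listed above, class reweighting and focal loss, can be sketched in plain Python. This is a minimal illustration under stated assumptions, not a training recipe from the paper: the inverse-frequency weighting scheme, the function names, the default `gamma` and `alpha` values, and the toy counts are all hypothetical.

```python
import math


def inverse_frequency_weights(class_counts):
    """Weight each class by total / (num_classes * count), so rare classes weigh more."""
    total = sum(class_counts.values())
    k = len(class_counts)
    return {name: total / (k * count) for name, count in class_counts.items()}


def focal_loss(p_true, gamma=2.0, alpha=1.0):
    """Focal loss for one prediction: -alpha * (1 - p)^gamma * log(p).

    p_true is the predicted probability of the correct class.
    With gamma = 0 this reduces to (weighted) cross-entropy.
    """
    p = min(max(p_true, 1e-12), 1.0)  # clamp to avoid log(0)
    return -alpha * (1.0 - p) ** gamma * math.log(p)


# Toy counts (hypothetical): a dominant class and a rare one.
weights = inverse_frequency_weights({"railing": 80, "wheelchair": 20})
print(weights)  # {'railing': 0.625, 'wheelchair': 2.5}

# An easy example (p = 0.9) is down-weighted far more than a hard one (p = 0.1).
print(focal_loss(0.9), focal_loss(0.1))
```

The class weight could be passed as `alpha` for the corresponding class, so that both mechanisms act together; whether that combination helps on this dataset would need to be checked empirically.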

In accessibility-focused applications, ensuring reliable detection of infrequent but critical classes—such as assistive mobility aids—can be more important than optimizing performance on dominant classes. Hence, awareness of the dataset’s class distribution is essential for guiding both model architecture and training protocol design.

3.4. Analysis of Class Imbalance and Variance in Object Sizes

The SYNTHUA-DT dataset exhibits a long-tailed distribution pattern characteristic of real-world urban scenes. While a few dominant classes—such as railings, buildings, and people—concentrate the majority of annotated instances, critical accessibility-related categories remain statistically underrepresented. For instance, buses comprise only 0.08% of all instances, resulting in an imbalance ratio of 312.74 relative to the most frequent class (railings). Such extreme disparities underscore the importance of applying imbalance-aware training strategies—e.g., class-weighted loss functions, minority oversampling, or focal loss—to ensure equitable model performance across both common and rare categories.

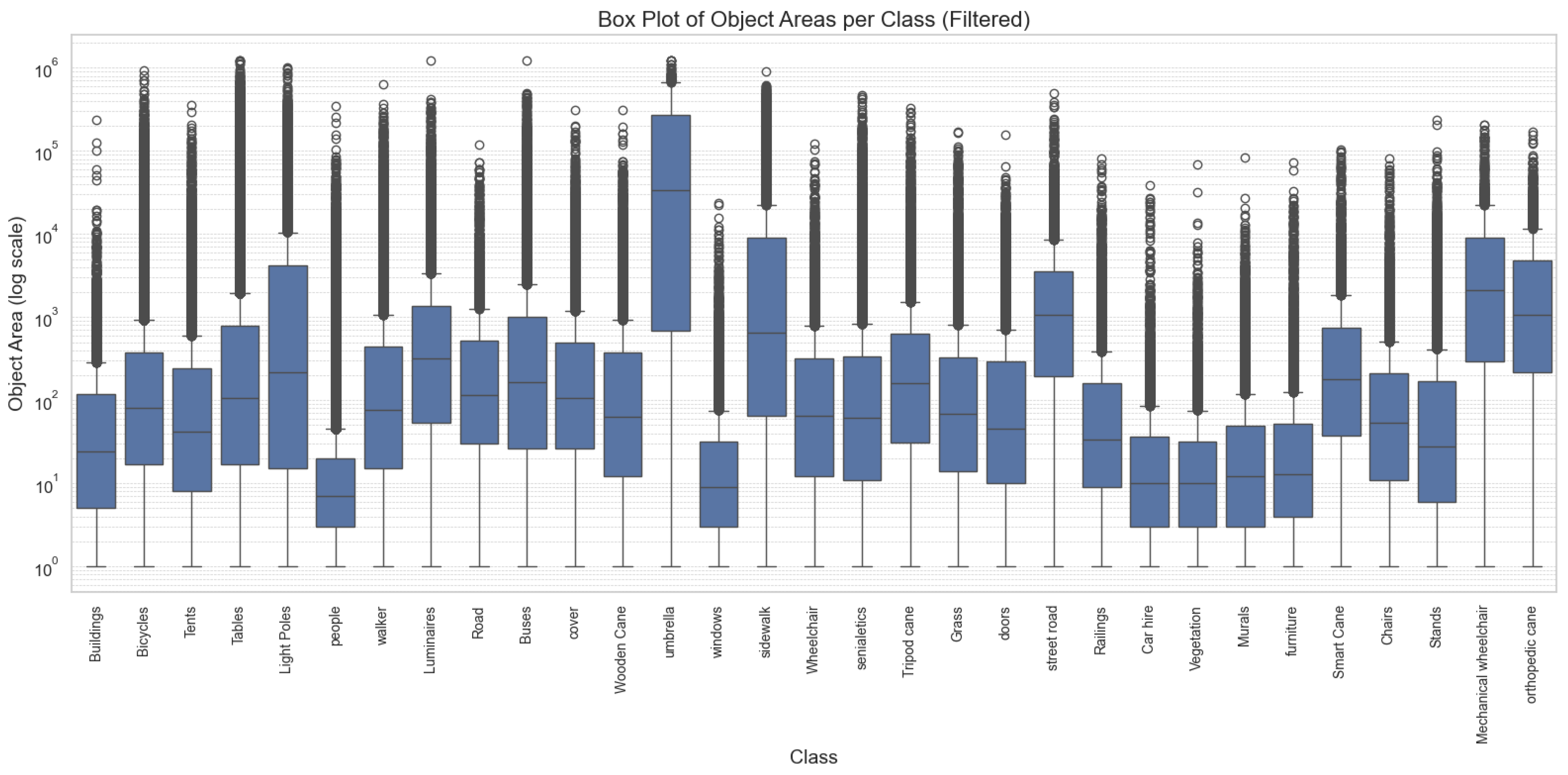

In addition to frequency imbalance, the dataset also exhibits pronounced intra-class variance in object sizes. As shown in Figure 12, the distribution of object areas (in px²) spans several orders of magnitude, with some classes (e.g., road, sidewalk) containing objects with areas exceeding 100,000 px², while others (e.g., smart cane, crutch) contain objects typically smaller than 100 px². These scale disparities present unique challenges for deep learning models, particularly in multi-scale feature representation and anchor-box selection for detection.

Figure 12. Each box represents the distribution of object areas within a semantic class, highlighting median, interquartile range, and outliers. The widespread and heavy-tailed distributions observed for many categories, such as “Sidewalk,” “Umbrella,” and “Mechanical Wheelchair,” illustrate the heterogeneity of object scales in urban scenes, particularly for accessibility-relevant classes.
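One simple way to make the scale disparity discussed in this subsection concrete is to group object areas into size buckets. The sketch below borrows the small/medium/large thresholds of the COCO evaluation convention (32² and 96² px²); this choice is an assumption made for illustration, since the paper does not prescribe any binning, and the function names are hypothetical.

```python
from collections import Counter

# COCO-style area thresholds (an assumption; the paper does not prescribe bins).
SMALL_MAX = 32 ** 2   # below 1,024 px^2 -> "small"
MEDIUM_MAX = 96 ** 2  # below 9,216 px^2 -> "medium"


def size_bucket(area_px2):
    """Map an object area in px^2 to a coarse size label."""
    if area_px2 < SMALL_MAX:
        return "small"
    if area_px2 < MEDIUM_MAX:
        return "medium"
    return "large"


def bucket_histogram(areas):
    """Count how many objects fall into each size bucket."""
    return Counter(size_bucket(a) for a in areas)


# The mean areas reported in Section 3.3 land at opposite ends of the scale.
print(size_bucket(73))       # small (smart cane, mean area)
print(size_bucket(149_242))  # large (road, mean area)
```

A histogram of this kind per class could help decide which anchor scales or feature pyramid levels matter most for the assistive-device classes.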

3.5. Complexity and Density of Urban Scenes

The SYNTHUA-DT dataset captures a wide spectrum of scene complexities, including highly populated urban environments with substantial object density and occlusion. A histogram of instance counts per image (Figure 13) reveals a pronounced right-skewed distribution. The average number of annotated instances per image is 318.65, while the median is 244, indicating that although many images contain a moderate number of objects, a significant subset comprises densely populated scenes with over 1000 and, in rare cases, more than 2000 annotated instances.

This asymmetry reflects the natural variability of urban environments and introduces critical conditions for testing the scalability and robustness of computer vision models, especially in tasks like semantic segmentation and object detection under occlusion. The Kernel Density Estimation (KDE) overlay further reveals a multimodal distribution, with dominant modes around 100–150 and a broad tail extending into high-density ranges, supporting the diversity of urban activity represented in the dataset.

Such variability in scene complexity emphasizes the importance of incorporating complexity-aware strategies in training pipelines. Uniform sampling could lead to underrepresentation of dense scenarios; instead, stratified sampling or complexity-weighted loss functions may be more appropriate to ensure consistent model performance across simple and crowded urban contexts.

Figure 13. The histogram is right-skewed, with a mean of 318.65 (red dashed line) and a median of 244 (green dashed line), highlighting the prevalence of high-density scenes. The KDE curve illustrates the multi-modal nature of the distribution, reflecting both sparse and cluttered urban scenarios relevant for accessibility-focused model evaluation.
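The stratified sampling idea mentioned in this subsection can be sketched as follows. The density thresholds reuse the low/medium/high bins from Section 3.2; everything else (function names, the equal per-bin quota, the fixed seed, and the toy image ids) is an assumption for illustration and not part of the published pipeline.

```python
import random
from collections import defaultdict


def density_bin(instance_count):
    """Bins follow the thresholds in Section 3.2."""
    if instance_count <= 100:
        return "low"
    if instance_count <= 500:
        return "medium"
    return "high"


def stratified_sample(instance_counts, per_bin, seed=0):
    """Draw up to `per_bin` image ids from each density bin.

    instance_counts maps image id -> number of annotated instances.
    """
    rng = random.Random(seed)  # fixed seed keeps the draw reproducible
    bins = defaultdict(list)
    for image_id, count in sorted(instance_counts.items()):
        bins[density_bin(count)].append(image_id)

    sample = {}
    for name, ids in bins.items():
        sample[name] = rng.sample(ids, min(per_bin, len(ids)))
    return sample


# Toy input (hypothetical image ids and instance counts).
counts = {"img_a": 50, "img_b": 80, "img_c": 244, "img_d": 300, "img_e": 1200}
batch = stratified_sample(counts, per_bin=1)
print(sorted(batch))  # ['high', 'low', 'medium']
```

Drawing the same number of images from each bin prevents the rarer dense scenes from being crowded out by the more common moderate ones, which is the concern raised about uniform sampling.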

3.6. Novel Inclusion of Accessibility Classes

One of the most distinctive features of SYNTHUA-DT is the explicit modeling and annotation of assistive mobility aids—such as wheelchairs, walkers, and various types of canes—as standalone semantic classes. This granularity marks a significant departure from traditional urban scene datasets (e.g., Cityscapes, KITTI, SYNTHIA), which typically treat all individuals under a generic “person” label and omit accessibility-related categories entirely.

In SYNTHUA-DT, over 500 wheelchair user instances are meticulously annotated and distributed across a diverse set of scenarios. These include realistic mobility contexts such as ascending curb ramps, waiting at intersections, or navigating sidewalks—providing critical training signals for models focused on inclusive perception. This enriched semantic vocabulary directly addresses well-documented gaps in existing benchmarks, where disabled pedestrians are either underrepresented or completely absent.

The co-occurrence heatmap presented in Figure 14 offers quantitative insight into the contextual distribution of classes. Strong diagonal intensities confirm the presence of dominant urban categories, while notable off-diagonal concentrations highlight frequent interactions between semantically or functionally related entities. For instance, wheelchairs frequently co-occur with sidewalks, ramps, and street furniture, whereas smart canes show meaningful overlap with crosswalks and pedestrian flows. In contrast, elements like buses and orthopedic canes exhibit sparse co-occurrence, accurately reflecting their physical separation in real-world environments.

These patterns validate the realism of SYNTHUA-DT’s scene composition and demonstrate its potential for training models that are not only accurate but also contextually aware—an essential requirement for accessibility-centric AI systems deployed in complex urban environments.

Figure 14. Darker colors indicate higher frequencies of co-occurrence between class pairs. Strong diagonal dominance reflects frequent presence of major classes, while distinct off-diagonal clusters (e.g., sidewalk and wheelchair, chair and person) capture realistic spatial and functional relationships relevant for urban accessibility.
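A co-occurrence matrix of the kind described in this subsection could be computed from per-image class lists, as sketched below. The paper does not state how its heatmap was built, so counting image-level co-occurrence (two classes appearing in the same image) is an assumption, as are the function name and the toy annotations.

```python
from collections import Counter
from itertools import combinations


def cooccurrence_counts(images):
    """Count, for every class pair, the number of images containing both.

    `images` is an iterable of per-image class collections. A pair (c, c)
    on the diagonal counts the images in which class c appears at all.
    """
    counts = Counter()
    for classes in images:
        present = sorted(set(classes))  # ignore repeated instances within an image
        for c in present:
            counts[(c, c)] += 1
        for a, b in combinations(present, 2):
            counts[(a, b)] += 1  # keys are stored with a < b
    return counts


# Toy annotations (hypothetical).
images = [
    ["sidewalk", "wheelchair", "person"],
    ["sidewalk", "person", "person"],
    ["bus"],
]
counts = cooccurrence_counts(images)
print(counts[("person", "sidewalk")])    # 2
print(counts[("sidewalk", "wheelchair")])  # 1
print(counts[("bus", "wheelchair")])     # 0
```

Because pair keys are stored in sorted order, a lookup should also pass the two class names alphabetically; a symmetric matrix for plotting can be filled from these counts.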

3.7. Balanced Class Representation and Accessibility-Aware Scene Design (Prospective)

An important methodological contribution of SYNTHUA-DT lies in its deliberate design strategy to balance semantic classes and emphasize accessibility-relevant elements. Unlike conventional urban datasets—where the vast majority of pixels belong to a small set of dominant classes (e.g., road, building, car)—SYNTHUA-DT was conceived to mitigate this imbalance through purposeful scene construction.

Specifically, we augmented the digital twin with 3D avatar models of individuals using mobility aids, including wheelchairs and various types of canes. These avatars were integrated into realistic urban contexts, such as navigating curb ramps, waiting at pedestrian crossings, or traversing sidewalks. This ensured that accessibility-related interactions were embedded directly into the scene layouts, promoting greater representational fairness for such classes.

Preliminary inspection of pixel distributions suggests that no single category exceeds 20–25% of the total pixel area across the dataset, in contrast to real-world datasets like Cityscapes, where four dominant classes account for over 80% of pixels. In our dataset, categories such as sidewalk and person are proportionally more prominent—estimated at approximately 15% and 8% of total pixels, respectively—while classes like wheelchair, typically absent in other benchmarks, are explicitly represented with significant frequency (e.g., ~2% of pixels). This more uniform pixel-level distribution was an intentional result of populating scenes with both generic urban features and accessibility-related elements.

In addition, the dataset spans a broad spectrum of environmental and crowd configurations. Scene variation includes both dense contexts—such as intersections with multiple pedestrians and mobility aid users—and sparse settings like nighttime sidewalks or low-traffic residential paths. Furthermore, the diversity in avatar orientation, camera distance, and background composition (e.g., building facades vs. vegetation) is expected to enhance model generalization by exposing learning algorithms to a wide range of visual stimuli within a consistent geographic domain.

Although these pixel-level proportions are still subject to quantitative confirmation in future work, the overall design paradigm of SYNTHUA-DT offers a compelling alternative to conventional urban scene datasets. By fostering semantic diversity and centering accessibility from the outset, the dataset provides fertile ground for developing more inclusive and equitable computer vision models.

3.8. Semantic Coverage and Representativeness

To evaluate the semantic representativeness of SYNTHUA-DT, we conducted a comprehensive analysis of pixel-wise coverage and class diversity.

Figure 15 presents the cumulative distribution function (CDF) of the most frequent classes by area. Notably, only the top six semantic classes are needed to cover over 80% of the dataset’s annotated area, reflecting the dominance of common urban structures such as roads, sidewalks, vegetation, and buildings. Despite this natural skew, SYNTHUA-DT preserves meaningful contributions from minority classes, particularly those relevant to mobility impairments.
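The coverage figure quoted above (the number of classes needed to reach 80% of annotated area) corresponds to a simple cumulative sum over classes ranked by area. The following sketch shows the calculation on toy numbers; the function name and the area values are hypothetical and do not reproduce the dataset's statistics.

```python
def classes_to_cover(area_by_class, target=0.80):
    """Return (k, names): the k largest classes whose combined area share
    first reaches `target`, ranked by descending area."""
    total = sum(area_by_class.values())
    ranked = sorted(area_by_class.items(), key=lambda kv: kv[1], reverse=True)
    covered = 0.0
    for rank, (_, area) in enumerate(ranked, start=1):
        covered += area
        if covered / total >= target:
            return rank, [name for name, _ in ranked[:rank]]
    return len(ranked), [name for name, _ in ranked]


# Toy pixel areas (hypothetical values).
toy_areas = {"road": 50, "building": 20, "sidewalk": 15, "person": 10, "wheelchair": 5}
k, top = classes_to_cover(toy_areas)
print(k, top)  # 3 ['road', 'building', 'sidewalk']
```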

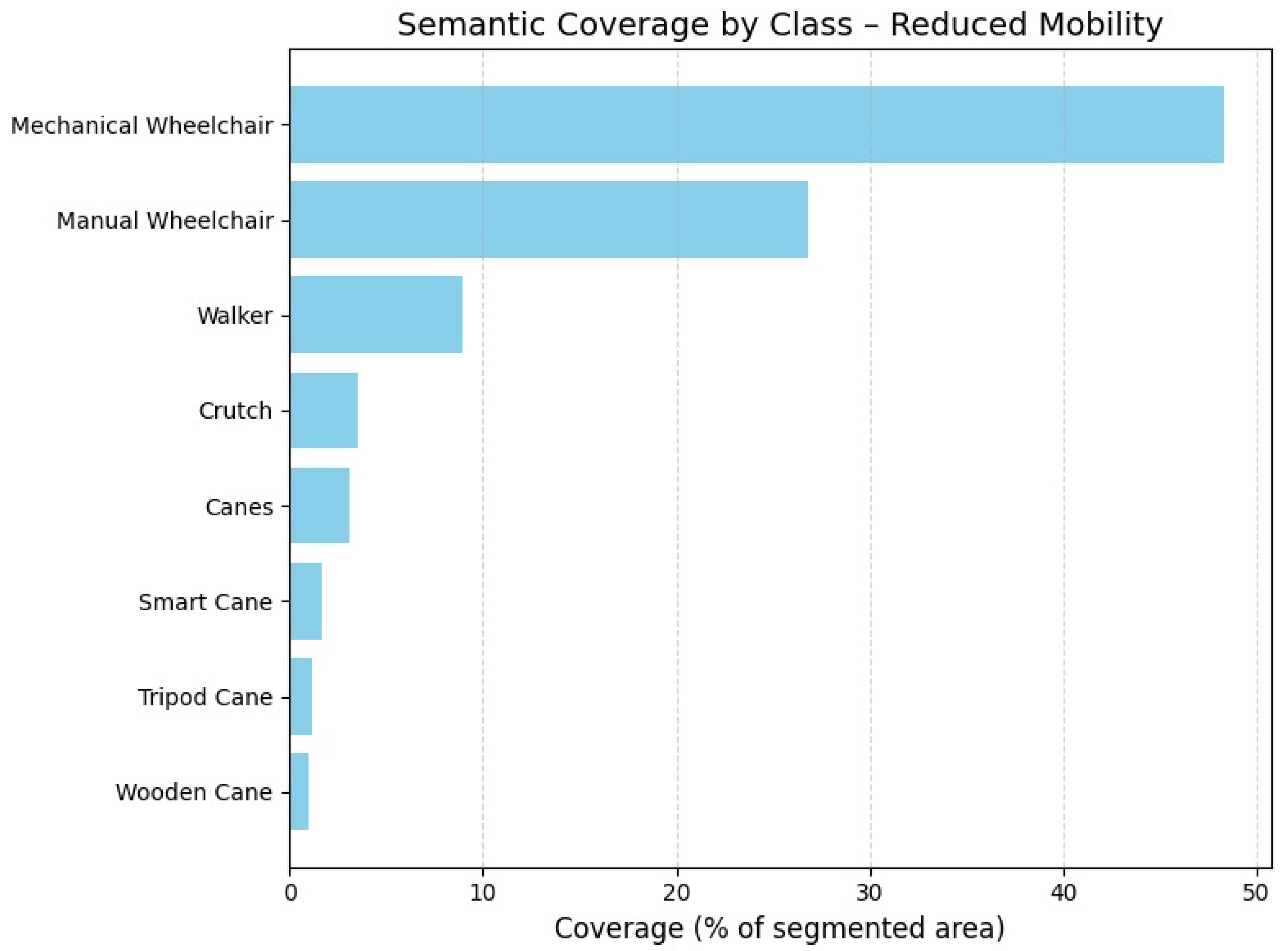

Figure 16 focuses on the semantic coverage of mobility-related classes. Mechanical wheelchairs alone account for approximately 48% of the total area covered by assistive devices, followed by standard wheelchairs (38%) and walkers (9%). Although other devices such as tripod canes, smart canes, and wooden canes exhibit lower relative coverage, their consistent inclusion across scenes ensures visibility during training and evaluation stages.

Quantitatively, the dataset includes a total of 1,592,250 segmented instances and a cumulative pixel area of ~10.7 billion pixels.

Table 2 details the semantic statistics for each of the 37 classes, including area distribution, median size, standard deviation, and estimated area share.

We further assessed representativeness through Shannon entropy (H = 3.5615) and normalized entropy (H_norm = 0.6943), confirming a reasonably balanced semantic composition compared to real-world datasets where few classes dominate. The Gini index (G = 0.7126) corroborates moderate inequality in pixel area distribution—expected in real-world scenes, but still significantly improved over datasets such as Cityscapes, where four classes account for over 80% of annotated pixels.
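As an illustration of how balance statistics of this kind can be derived from per-class pixel areas, the following minimal sketch computes Shannon entropy, normalized entropy, the Gini index, and the number of classes needed to reach a given area coverage. The logarithm base (2), the normalization by the number of non-empty classes, and the toy area values are assumptions made for illustration only; the text does not state which conventions produced the reported values.

```python
import math


def shannon_entropy(areas, base=2.0):
    """Shannon entropy of the per-class area shares (empty classes are skipped)."""
    total = sum(areas)
    shares = [a / total for a in areas if a > 0]
    return -sum(p * math.log(p, base) for p in shares)


def normalized_entropy(areas, base=2.0):
    """Entropy divided by its maximum, log(K), where K counts non-empty classes."""
    k = sum(1 for a in areas if a > 0)
    if k <= 1:
        return 0.0
    return shannon_entropy(areas, base) / math.log(k, base)


def gini_index(areas):
    """Gini index of the area distribution: 0 for equal shares, 1 - 1/n if one class holds everything."""
    xs = sorted(areas)
    n, total = len(xs), sum(xs)
    weighted = sum((i + 1) * x for i, x in enumerate(xs))
    return 2.0 * weighted / (n * total) - (n + 1) / n


def classes_for_coverage(areas, target=0.80):
    """Smallest number of classes, taken largest first, whose cumulative share reaches the target."""
    total, running = sum(areas), 0.0
    for k, a in enumerate(sorted(areas, reverse=True), start=1):
        running += a
        if running / total >= target:
            return k
    return len(areas)


# Hypothetical per-class pixel areas (not taken from the dataset).
toy_areas = [500, 300, 100, 50, 30, 15, 5]
print(shannon_entropy(toy_areas), normalized_entropy(toy_areas))
print(gini_index(toy_areas), classes_for_coverage(toy_areas, target=0.80))
```

Applied to the per-class area column of the statistics table, the same functions would yield the entropy, Gini, and coverage figures discussed above, provided the same conventions are used.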

Moreover, 9463 images (~42%) contain at least one instance of a mobility impairment-related class, reinforcing the dataset’s suitability for accessibility-oriented applications.

Figure 15. Only six classes are required to reach 80% area coverage (dashed red line), highlighting the natural dominance of large-scale structures such as roads and buildings, yet allowing for meaningful minority class inclusion.

Figure 16. Mechanical and standard wheelchairs together account for over 85% of the annotated area for mobility aids. Classes with lower prevalence, such as canes and crutches, are still explicitly represented in varied scenarios.

3.9. Pilot Experiment: Instance Segmentation Baseline

To demonstrate the practical utility of the SYNTHUA-DT dataset, a pilot experiment was conducted using a state-of-the-art instance segmentation model, Mask R-CNN (implemented in PyTorch, version 2.1.0, Meta AI, Menlo Park, CA, USA) with a ResNet-50 backbone and Feature Pyramid Network (FPN). The model was trained on a representative subset of SYNTHUA-DT, following a standard 80–20% train-validation split. Results were evaluated quantitatively, providing detailed segmentation metrics for all 37 semantic classes included in the dataset.

Table 2 summarizes the experimental results, reporting accuracy, Intersection over Union (IoU), precision, recall, and F1-score per category. These baseline results highlight the dataset’s effectiveness in enabling robust training and evaluation of advanced computer vision models, particularly for accessibility-focused applications.
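For reference, the per-category metrics listed above can all be derived from pixel-level confusion counts. The sketch below is a minimal illustration, assuming flattened label maps and a one-vs-rest evaluation per class; the function names and the toy labels are hypothetical and do not reproduce the evaluation code used in the experiment.

```python
def confusion_counts(pred, truth, class_id):
    """Count TP, FP, FN, TN for one class over two flattened label maps."""
    tp = fp = fn = tn = 0
    for p, t in zip(pred, truth):
        if p == class_id and t == class_id:
            tp += 1
        elif p == class_id:
            fp += 1
        elif t == class_id:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def per_class_metrics(tp, fp, fn, tn):
    """Accuracy, IoU, precision, recall, and F1 from confusion counts."""
    def ratio(num, den):
        return num / den if den else 0.0

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    return {
        "accuracy": ratio(tp + tn, tp + fp + fn + tn),
        "iou": ratio(tp, tp + fp + fn),
        "precision": precision,
        "recall": recall,
        "f1": ratio(2 * precision * recall, precision + recall),
    }


# Toy example: class 1 stands in for a hypothetical "wheelchair" label.
pred = [1, 1, 0, 0, 1, 0]
truth = [1, 0, 0, 1, 1, 0]
print(per_class_metrics(*confusion_counts(pred, truth, class_id=1)))
```

Note that accuracy is dominated by true negatives for rare classes, which is why IoU, recall, and F1 are usually the more informative columns for mobility-aid categories.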

3.10. Preliminary Mitigation of Class Imbalance

Considering the pronounced class imbalance within the SYNTHUA-DT dataset, particularly affecting critical mobility-related categories, a preliminary experiment was conducted to assess the effectiveness of class imbalance mitigation strategies. Specifically, we employed the focal loss method, known for its efficacy in handling class imbalance in object detection and segmentation tasks.

The Mask R-CNN model (ResNet-50 + FPN backbone) was retrained utilizing focal loss with standard hyperparameters (γ = 2, α = 0.25). Training conditions, including dataset splits, image resolution, batch size, and epochs, were consistent with the baseline experiment.
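To make the role of the two hyperparameters concrete, the following minimal sketch evaluates the binary focal loss for a single prediction, using γ = 2 and α = 0.25 as defaults. It assumes the common α-balanced formulation, FL(p_t) = −α_t (1 − p_t)^γ log(p_t); which Mask R-CNN head the loss was applied to is not stated in the text, so this is an illustration of the loss itself rather than of the training code.

```python
import math


def cross_entropy(p, y, eps=1e-12):
    """Binary cross-entropy for predicted probability p of the positive class and label y in {0, 1}."""
    p_t = p if y == 1 else 1.0 - p
    return -math.log(min(max(p_t, eps), 1.0))


def focal_loss(p, y, gamma=2.0, alpha=0.25, eps=1e-12):
    """Alpha-balanced binary focal loss for one prediction."""
    p_t = p if y == 1 else 1.0 - p              # probability assigned to the true label
    alpha_t = alpha if y == 1 else 1.0 - alpha  # class-balancing weight
    p_t = min(max(p_t, eps), 1.0)
    # The factor (1 - p_t)^gamma shrinks the loss of well-classified examples.
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


# An easy positive (p = 0.9) is down-weighted far more than a hard one (p = 0.1).
for p in (0.9, 0.5, 0.1):
    print(p, cross_entropy(p, 1), focal_loss(p, 1))
```

With γ = 0 and α = 0.5 the expression reduces to half the cross-entropy, which is a convenient sanity check when swapping losses in an existing pipeline.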

Table 3 presents comparative quantitative results between the original baseline (standard cross-entropy loss) and the focal loss strategy, specifically focusing on underrepresented but crucial accessibility classes.

These preliminary results demonstrate consistent improvements across key metrics (IoU, precision, recall, and F1-score) when employing focal loss compared to the baseline. Particularly notable are improvements in IoU (average increase of 2.0%) and recall (average increase of 2.2%) for critical classes such as wheelchair and walker. These findings underscore the practical utility of imbalance mitigation strategies and suggest their systematic application in subsequent SYNTHUA-DT-based training pipelines.

3.11. Annotation Quality and Segmentation Reliability

All images in the SYNTHUA-DT dataset are accompanied by pixel-perfect semantic annotations, automatically derived from the digital twin’s internal geometry-class mapping. This procedural annotation strategy ensures full consistency and eliminates human-induced labeling noise, a frequent issue in manually annotated datasets such as Cityscapes or Mapillary Vistas [20].

To assess the quality of these automatic annotations, we conducted a twofold validation protocol:

3.11.1. Quantitative Consistency Verification

We randomly selected a stratified sample of 100 synthetic images spanning diverse environmental conditions (day/night, rain, fog) and scene types (low to high instance density). For each image, we computed boundary agreement metrics between geometry-derived masks and their projected label maps. The average pixel-wise alignment accuracy in static classes (e.g., roads, buildings, sidewalks) exceeded 99.98%, with no detectable noise in texture-mapped regions. Minimal discrepancies—typically within 1–2 pixels—were observed only in thin or ambiguous structures such as lamp posts, attributed to mesh discretization at render time rather than labeling error.
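A consistency check of this kind can be sketched as follows. The example assumes that alignment accuracy is the fraction of pixels whose class identifier agrees between two label maps of equal size; the exact boundary agreement metrics used in the protocol are not specified in the text, and the toy label maps are hypothetical.

```python
def pixel_agreement(labels_a, labels_b):
    """Fraction of pixels with identical class ids in two equally sized 2D label maps."""
    if len(labels_a) != len(labels_b) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(labels_a, labels_b)
    ):
        raise ValueError("label maps must have the same shape")
    total = sum(len(row) for row in labels_a)
    same = sum(
        a == b
        for row_a, row_b in zip(labels_a, labels_b)
        for a, b in zip(row_a, row_b)
    )
    return same / total


def mean_agreement(pairs):
    """Average per-image agreement over a sample of (map_a, map_b) pairs."""
    scores = [pixel_agreement(a, b) for a, b in pairs]
    return sum(scores) / len(scores)


# Toy 2 x 3 label maps that differ in a single pixel.
geometry_mask = [[0, 0, 1], [1, 1, 2]]
projected_map = [[0, 0, 1], [1, 2, 2]]
print(pixel_agreement(geometry_mask, projected_map))
```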

3.11.2. Semantic Fidelity to Real-World Observations

To assess the realism and semantic fidelity of the synthetic imagery produced by the SYNTHUA-DT pipeline, we conducted a qualitative observational study comparing the digital twin outputs against actual photographs taken at corresponding urban locations in Curitiba.

A subset of 500 synthetic images was randomly selected to ensure coverage of diverse spatial configurations (e.g., intersections, sidewalks, crossings) and environmental conditions (day/night, clear/foggy). These synthetic views were then geo-aligned and visually compared with photographs captured from the same GPS-referenced positions in the real environment.

To provide an unbiased external assessment, we engaged six independent researchers with local knowledge of the city—urban planners and computer vision specialists from regional institutions. Each expert received paired synthetic-real image sets, presented without labels, and was asked to evaluate two criteria:

1. Topological consistency: whether the spatial layout (e.g., placement of roads, sidewalks, buildings, curb ramps) matched real-world configurations.

2. Visual realism: whether the rendering quality (textures, lighting, materials) was indistinguishable or strongly evocative of real-world scenes.

Results indicated that over 92% of the evaluated image pairs were judged as topologically accurate, with semantic elements correctly positioned and delineated. Furthermore, 87% of the synthetic images were rated as having high visual realism, particularly in scenes where photogrammetric textures accurately captured surface materials and lighting conditions. Minor deviations were reported in cases involving transient objects (e.g., cars or street signs not present in both domains), which did not affect the perceived layout consistency.

Although no automated similarity metrics were applied in this stage, these human-centered evaluations suggest that the SYNTHUA-DT renderings achieve a high degree of perceptual and structural realism. Future work will incorporate quantitative similarity metrics, such as LPIPS (Learned Perceptual Image Patch Similarity), SSIM (Structural Similarity Index), or PSNR, to formally measure the perceptual alignment between synthetic and real scenes at pixel and patch levels.
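Of the metrics named above, PSNR is the simplest to state: PSNR = 10 log10(MAX² / MSE), where MAX is the largest possible pixel value and MSE is the mean squared error between the two images. The sketch below is a minimal illustration on flattened 8-bit grayscale values; it assumes the synthetic and real images are already co-registered and of equal size, which in practice is the harder part of the comparison. SSIM and LPIPS require dedicated libraries and are not shown.

```python
import math


def mean_squared_error(image_a, image_b):
    """Mean squared error between two flattened images of equal length."""
    if len(image_a) != len(image_b):
        raise ValueError("images must have the same number of pixels")
    return sum((a - b) ** 2 for a, b in zip(image_a, image_b)) / len(image_a)


def psnr(image_a, image_b, max_value=255.0):
    """Peak signal-to-noise ratio in decibels; infinite for identical images."""
    mse = mean_squared_error(image_a, image_b)
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy pixel values standing in for a synthetic render and its real counterpart.
synthetic = [10, 20, 30, 40]
real = [12, 18, 33, 41]
print(psnr(synthetic, real))
```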

This preliminary assessment supports the conclusion that the synthetic content not only replicates the geometry of the physical environment but does so in a visually credible manner. Such fidelity is essential for enabling the effective transfer of machine learning models trained on synthetic data to real-world deployment, especially in tasks involving critical urban elements like pedestrian infrastructure and accessibility devices.

3.11.3. Instance and Panoptic Segmentation Support

Beyond semantic segmentation, SYNTHUA-DT supports instance-level and panoptic segmentation tasks natively. Since each object in the digital twin is associated with a unique identifier, instance masks are generated at render time without additional processing. This granularity allows the following:

- Accurate separation of adjacent persons (e.g., groups at crosswalks).
- Disambiguation between users and assistive devices (e.g., separating a wheelchair from the seated person).
- Straightforward generation of consistent panoptic label maps.
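The last point can be illustrated with a minimal sketch that merges a semantic map and an instance map into a single panoptic map. The encoding used here (class id times a divisor plus instance id for countable "thing" classes, class id times the divisor for "stuff") is a common convention adopted as an assumption; the class identifiers and the actual encoding used by the SYNTHUA-DT pipeline are not given in the text.

```python
def to_panoptic(semantic, instance, thing_classes, divisor=1000):
    """Combine 2D semantic and instance maps into one panoptic id per pixel."""
    panoptic = []
    for sem_row, ins_row in zip(semantic, instance):
        row = []
        for class_id, instance_id in zip(sem_row, ins_row):
            if class_id in thing_classes:
                if not 0 < instance_id < divisor:
                    raise ValueError("thing pixels need an instance id in (0, divisor)")
                row.append(class_id * divisor + instance_id)
            else:
                # Stuff classes (e.g., road, sidewalk) carry no instance id.
                row.append(class_id * divisor)
        panoptic.append(row)
    return panoptic


def decode_panoptic(value, divisor=1000):
    """Recover (class_id, instance_id) from a panoptic id."""
    return divmod(value, divisor)


# Hypothetical ids: 1 = sidewalk (stuff), 5 = person, 6 = wheelchair (things).
semantic = [[1, 5, 6, 5]]
instance = [[0, 1, 1, 2]]
print(to_panoptic(semantic, instance, thing_classes={5, 6}))
```

Because the person and the wheelchair receive different class ids, a seated user and the device remain separable even when their instance ids coincide.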

The automatic annotation pipeline of SYNTHUA-DT offers systematic, reproducible, and noise-free pixel-wise labels, providing a highly precise training foundation. This labeling quality not only benefits model accuracy but is also crucial for fair performance in real-world accessibility applications, where annotation ambiguity often leads to failure cases in detecting assistive devices or pedestrians with disabilities.

3.12. Comparative Analysis with Established Urban Scene Datasets

To assess the distinctive attributes and practical advantages of SYNTHUA-DT in the context of accessibility-focused computer vision, a detailed comparative analysis was conducted against four widely adopted urban scene datasets: Cityscapes, KITTI, Mapillary Vistas, and SYNTHIA. This comparative evaluation emphasizes four critical dimensions: accessibility-aware semantics, class distribution and balance, annotation quality and consistency, and scalability and automation.

The presence of explicit semantic classes related to accessibility, such as mobility aids (wheelchairs, walkers, canes), was systematically reviewed across datasets. SYNTHUA-DT explicitly includes seven dedicated mobility-related classes, notably “wheelchair user,” “walker,” and various types of canes. Conversely, Cityscapes, KITTI, Mapillary Vistas, and SYNTHIA datasets do not feature explicit semantic classes related to mobility impairments, labeling individuals uniformly as “person” without specific differentiation. Consequently, SYNTHUA-DT provides significantly greater semantic coverage for urban accessibility applications, addressing critical gaps identified in prior datasets.

A statistical analysis was conducted to quantify class distribution balance across datasets. Metrics employed included the proportion of pixel area covered by dominant classes, normalized Shannon entropy, and the Gini coefficient. SYNTHUA-DT exhibited the most balanced distribution of the five datasets (Gini coefficient = 0.71; normalized entropy ~0.69), indicating a more equitable representation of frequent and rare classes, although the distribution remains long-tailed in absolute terms. In contrast, Cityscapes and KITTI revealed substantial class imbalance, with the top five classes occupying over 80% of the annotated area (Gini coefficient >0.80 and >0.85, respectively). Mapillary Vistas and SYNTHIA showed slightly better balance (Gini coefficients ~0.78 and ~0.75) yet were still less balanced than SYNTHUA-DT. This improved balance makes SYNTHUA-DT better suited for training robust models capable of recognizing underrepresented but critical urban elements.

To quantitatively assess annotation accuracy, comparative evaluations on semantic boundary precision and labeling consistency were performed. SYNTHUA-DT benefits from automated annotation directly derived from geometric digital twins, achieving near-perfect boundary accuracy and zero intra-dataset labeling variability. In contrast, Cityscapes and Mapillary Vistas rely on manual or crowd-sourced annotations, which inherently introduce minor inconsistencies and boundary inaccuracies. Cityscapes reported inter-annotator pixel agreement of approximately 98%, highlighting occasional discrepancies. SYNTHUA-DT’s consistently accurate annotations provide a significant advantage, especially for small and critical accessibility-related objects typically prone to labeling errors in manually annotated datasets.

The capacity for scalable dataset generation and annotation throughput was evaluated, comparing human-driven annotation workflows against automated synthetic methods. SYNTHUA-DT’s fully automated generation pipeline demonstrated exceptional scalability, achieving throughput exceeding 100 annotated images per minute. This sharply contrasts with manual annotation approaches: Cityscapes (~90 min/image), KITTI (~10–30 min/image), and Mapillary Vistas (~45 min/image, crowd-sourced). SYNTHIA, which uses a game engine, reached approximately 10 images per minute, roughly an order of magnitude below SYNTHUA-DT (see Table 4). The automation and scalability of SYNTHUA-DT substantially reduce costs and facilitate rapid dataset expansion and adaptation to diverse urban environments.
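To put these rates on a common scale, the short calculation below converts each reported figure into the time needed to annotate a dataset of 22,412 images, the size of SYNTHUA-DT reported elsewhere in this paper. It is a back-of-the-envelope sketch: the per-image times are the approximate values quoted above, and the SYNTHUA-DT entry is an upper bound on time, since the reported throughput is a lower bound.

```python
# Approximate annotation time per image, in minutes, taken from the figures quoted in the text.
MINUTES_PER_IMAGE = {
    "SYNTHUA-DT": 1 / 100,      # more than 100 images per minute
    "SYNTHIA": 1 / 10,          # about 10 images per minute
    "KITTI (low)": 10,
    "KITTI (high)": 30,
    "Mapillary Vistas": 45,
    "Cityscapes": 90,
}


def annotation_hours(num_images, minutes_per_image):
    """Total annotation time in hours for a dataset of num_images."""
    return num_images * minutes_per_image / 60.0


def speedup(baseline, candidate):
    """How many times faster the candidate workflow is than the baseline."""
    return MINUTES_PER_IMAGE[baseline] / MINUTES_PER_IMAGE[candidate]


NUM_IMAGES = 22_412
for name, minutes in MINUTES_PER_IMAGE.items():
    print(f"{name:18s} {annotation_hours(NUM_IMAGES, minutes):12.1f} h")
```

At these rates the full dataset corresponds to under four hours of automated generation, compared with more than 33,000 hours at the Cityscapes per-image annotation time.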

SYNTHUA-DT uniquely addresses existing limitations observed in prominent urban datasets by explicitly incorporating detailed accessibility-related semantic classes, maintaining balanced class distributions, ensuring highly consistent annotations, and significantly improving scalability through automation. These distinct advantages position SYNTHUA-DT as an essential resource for advancing inclusive and robust urban scene understanding in accessibility-focused computer vision tasks.

3.13. Computational Efficiency and Storage Benefits

The SYNTHUA-DT pipeline demonstrates notable computational efficiency and substantial advantages in data storage and processing. By leveraging the detailed digital twin, images were rendered directly at a resolution of 1280 × 960 pixels, preserving critical visual detail essential for accurate semantic segmentation tasks. This choice of resolution effectively balances high visual fidelity with manageable storage requirements, maintaining optimal segmentation performance without necessitating additional downsampling.

Significant computational efficiency was further achieved through the optimization of the photogrammetric model. The initial dense point cloud, containing approximately 150 million points, was efficiently simplified to a mesh consisting of approximately 5 million polygons. This mesh is sufficiently lightweight to allow real-time rendering and visualization on standard GPU hardware (see Appendix A). The optimized mesh significantly enhances rendering throughput, achieving a rate exceeding 100 fully annotated images per minute, enabling rapid dataset expansion and facilitating iterative experimentation.

Moreover, the reusability of the digital twin model is a key advantage, allowing researchers to regenerate diverse datasets tailored for specific research needs without the overhead of repeated data collection. The digital twin’s relatively small storage footprint facilitates multiple dataset generations, including variations in viewpoints, environmental conditions, and sensor modalities.

Overall, SYNTHUA-DT’s computational pipeline provides an efficient and scalable method for generating extensive, high-quality annotated datasets, significantly reducing computational costs and storage requirements, thereby supporting rapid iteration and experimentation in machine learning research.

3.14. Expected Sim-to-Real Generalization

The current evaluation of SYNTHUA-DT relies primarily on synthetic data, yet the results demonstrate strong potential for effective sim-to-real generalization, particularly in accessibility-focused urban scene understanding. This generalization capability is rooted in two key factors addressed by SYNTHUA-DT: the inclusion of domain-relevant content and the consistency of semantic annotations. Unlike conventional datasets, SYNTHUA-DT explicitly represents wheelchair users, assistive devices, and related urban infrastructure, thereby filling a critical gap in training data for inclusive perception systems.

Moreover, the synthetic imagery produced by the digital twin exhibits high fidelity with the real-world urban landscape it replicates. As detailed in Section 3.11.2, a qualitative validation was conducted involving 500 synthetic-real image pairs aligned via GPS coordinates. Independent experts assessed both topological consistency and visual realism, with over 92% of images rated as spatially accurate and 87% deemed visually realistic. These findings substantiate the perceptual credibility of SYNTHUA-DT scenes, reinforcing their utility for model training.

Importantly, these evaluations suggest that the SYNTHUA-DT rendering pipeline successfully preserves the structural and semantic characteristics of the real environment. The dataset’s annotations—derived directly from the geometric model—maintain pixel-level precision and eliminate label noise, a feature rarely attainable in human-annotated datasets. This ensures that learning is guided by accurate, consistent signals, further supporting generalization.

While automated perceptual similarity metrics (e.g., LPIPS, SSIM, PSNR) have not yet been applied, the strong alignment between synthetic and real imagery observed in human evaluations offers encouraging qualitative evidence of domain closeness. This alignment is critical, as existing literature indicates that when synthetic datasets closely mirror real-world distributions, the domain gap can be substantially reduced, enabling effective transfer of learned representations.

The visual and semantic realism of SYNTHUA-DT, combined with its accessibility-aware content and annotation consistency, positions it as a valuable resource for developing models that generalize reliably to real-world accessibility scenarios. These properties validate the digital twin approach as an efficient, high-fidelity method for generating synthetic datasets that are not only structurally accurate but also deployable in practical, real-world machine learning applications aimed at smart and inclusive urban environments.

3.15. Limitations of Synthetic Domain and Domain Adaptation Strategies

A significant challenge inherent to the use of synthetic datasets such as SYNTHUA-DT is the domain gap, referring to the distributional discrepancies between simulated and real-world data. Although SYNTHUA-DT has demonstrated strong semantic fidelity and visual realism (Section 3.11.2 and Section 3.14), the inherent idealization and regularity of synthetic environments can lead to performance degradation when models trained on synthetic data are deployed in real-world scenarios. Typical differences arise from factors such as sensor noise, unmodeled textures, subtle illumination variability, and unforeseen real-world object interactions.

To address and potentially mitigate these limitations, established domain adaptation methodologies could be employed to enhance sim-to-real generalization. Techniques such as Domain Randomization, which systematically varies simulation parameters (e.g., lighting conditions, object textures, sensor noise), can broaden the synthetic training distribution, increasing the robustness and generalization of trained models. Moreover, Adversarial Adaptation approaches, including domain adversarial neural networks (DANN) and generative adversarial networks (GANs), could be used to align feature representations between synthetic and real domains, reducing domain-induced discrepancies at the latent feature level. Finally, Transfer Learning, particularly fine-tuning synthetic-trained models on smaller sets of labeled real-world images, offers a pragmatic pathway to bridge the domain gap, leveraging synthetic data scale and real-world specificity simultaneously.
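As a concrete illustration of the first strategy, the sketch below draws randomized rendering configurations of the kind a domain randomization loop would pass to the renderer. Every parameter name and range here is hypothetical; the text lists lighting, textures, and sensor noise as candidates but does not define a configuration schema for the SYNTHUA-DT pipeline.

```python
import random


def sample_render_config(rng):
    """Draw one randomized scene configuration (all names and ranges are illustrative)."""
    return {
        "sun_elevation_deg": rng.uniform(5.0, 85.0),    # lighting conditions
        "fog_density": rng.uniform(0.0, 0.3),           # atmospheric variation
        "sensor_noise_sigma": rng.uniform(0.0, 0.05),   # simulated camera noise
        "texture_variant": rng.randrange(8),            # index into alternative textures
    }


def sample_configs(num_configs, seed=0):
    """Generate a reproducible list of randomized configurations."""
    rng = random.Random(seed)
    return [sample_render_config(rng) for _ in range(num_configs)]


for config in sample_configs(3):
    print(config)
```

Seeding the generator keeps each randomized dataset reproducible, so a training run can be traced back to the exact set of rendering conditions it saw.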

Future research should empirically evaluate the effectiveness of these domain adaptation strategies within the SYNTHUA-DT pipeline, quantifying the degree of improvement in model generalization to real-world urban accessibility tasks.

4. Discussion

The validation of the SYNTHUA-DT framework provides substantial evidence regarding the efficacy and potential limitations of synthetic dataset generation using digital twins, particularly in urban accessibility scenarios. The following sections critically analyze key findings, assumptions, and implications derived directly from the presented results.

The results demonstrate high geometric fidelity in the generated digital twin, as evidenced by a maximum deviation below 5 cm compared to real-world measurements. Such geometric precision is fundamental for realistic scene replication, particularly in critical features relevant to accessibility, including sidewalks and curb ramps. Semantic fidelity was likewise confirmed, with accurately assigned semantic labels allowing pixel-perfect semantic segmentation. This high degree of visual and geometric realism aligns with prior research indicating that realistic synthetic environments can significantly reduce domain discrepancies [21,22]. However, despite these promising observations, it remains an assumption that visual realism inherently guarantees robust sim-to-real transferability. Thus, rigorous quantitative evaluations of real-world deployment remain necessary to conclusively validate the practical effectiveness of SYNTHUA-DT-generated data.

The extensive dataset comprising 22,412 images across multiple environmental conditions demonstrates notable diversity, emulating various real-world scenarios, such as low-visibility conditions and varied scene complexities. Approximately 42% of the images explicitly incorporate mobility impairment-related classes, significantly surpassing conventional datasets [6]. This deliberate inclusion of previously underrepresented classes addresses critical biases in existing training data.

Nevertheless, while environmental and situational diversity was carefully controlled, inherent limitations of synthetic environments—such as repetitive textures or simplified human dynamics—could potentially introduce subtle biases not present in real-world scenarios. Future investigations should consider introducing further stochastic variations to mitigate such biases.

The statistical analysis reveals a pronounced class imbalance, with dominant categories such as railings, buildings, and people collectively comprising nearly 60% of annotated instances. In contrast, critical but rare classes related to accessibility, including wheelchairs and smart canes, are notably less frequent. This inherent imbalance poses significant challenges for training robust models capable of reliably detecting rare but critical categories. Existing literature suggests approaches such as class reweighting, focal loss, or curriculum learning as effective strategies to address such issues [23]. Given the importance of accurate detection of accessibility elements, future training protocols leveraging SYNTHUA-DT data must explicitly incorporate these imbalance-aware strategies to achieve equitable performance across all relevant classes.

The SYNTHUA-DT dataset covers a broad spectrum of scene complexities, from sparse scenarios to densely populated urban environments exceeding 1000 annotated instances per image. Such variability is essential for developing models robust to varying levels of occlusion and complexity, aligning with challenges typical in real-world urban deployments [6]. Nevertheless, the observed multimodal distribution highlights a potential training pitfall: models trained via uniform sampling risk underperforming in densely populated scenes. Therefore, complexity-weighted sampling or targeted augmentation strategies may be necessary to ensure consistent performance across the entire spectrum of urban complexity.
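One way to realize complexity-weighted sampling is sketched below: images are grouped into density bins by their number of annotated instances, and each image is weighted so that every non-empty bin is drawn with equal probability. The bin edges (10, 100, 1000) and the equal-per-bin weighting are assumptions chosen for illustration; other weighting schemes would be equally valid.

```python
import random
from collections import Counter


def density_bin(num_instances, edges=(10, 100, 1000)):
    """Index of the density bin: the number of bin edges the instance count reaches."""
    return sum(num_instances >= edge for edge in edges)


def complexity_weights(instance_counts, edges=(10, 100, 1000)):
    """Per-image sampling weights that give every non-empty density bin equal total mass."""
    bins = [density_bin(count, edges) for count in instance_counts]
    frequency = Counter(bins)
    return [1.0 / (len(frequency) * frequency[b]) for b in bins]


def sample_indices(weights, num_samples, rng):
    """Draw image indices with replacement according to the weights."""
    return rng.choices(range(len(weights)), weights=weights, k=num_samples)


# Toy instance counts per image: four sparse scenes, one dense, one very dense.
counts = [3, 5, 7, 8, 150, 1200]
weights = complexity_weights(counts)
print(weights)
print(sample_indices(weights, 10, random.Random(0)))
```

Under uniform sampling the two dense scenes in this toy set would make up a third of each epoch; with these weights they make up about two thirds, at the cost of repeating them more often.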

Explicit annotation of mobility aids (e.g., wheelchairs, walkers) represents a significant advancement beyond traditional datasets, where these categories are typically absent or aggregated under generic labels [6,10]. The detailed representation of such classes and their contextual co-occurrence patterns, as illustrated by the heatmap analysis, underscores the dataset’s contextual realism and its suitability for training accessibility-focused AI. Nonetheless, it is essential to validate whether this detailed synthetic representation translates effectively into improved real-world model performance, particularly given that subtle simulation artifacts could potentially compromise practical applicability.

The evaluation of pixel-wise semantic coverage reveals an expected concentration among common urban categories; however, SYNTHUA-DT maintains meaningful representation for minority classes critical to accessibility. While the dataset achieves a commendable level of representational balance (Gini coefficient of 0.7126), challenges persist in fully capturing rare but important classes. Addressing these challenges will necessitate specialized sampling and augmentation techniques to maintain balanced semantic coverage during model training.

Automated annotations derived from the digital twin exhibit nearly flawless pixel-wise alignment, significantly surpassing manual annotation accuracy observed in datasets like Cityscapes [6]. Qualitative evaluations further reinforce this finding, demonstrating both topological accuracy and high visual realism. However, future work must incorporate formalized quantitative metrics (e.g., SSIM, LPIPS) to systematically verify visual similarity to real-world imagery, ensuring that perceived realism indeed translates into practical domain-transfer benefits.

Compared to datasets such as Cityscapes, KITTI, Mapillary Vistas, and SYNTHIA, SYNTHUA-DT demonstrates distinct advantages in annotation consistency, scalability, and explicit representation of accessibility classes. While traditional datasets typically lack explicit annotations for mobility-impaired individuals, SYNTHUA-DT systematically addresses this critical gap, offering enhanced potential for developing inclusive AI solutions. Nonetheless, given the substantial differences between synthetic and real-world imaging characteristics, it is crucial to empirically demonstrate that models trained solely on SYNTHUA-DT can achieve comparable real-world performance through rigorous sim-to-real validation studies.

The demonstrated computational efficiency of SYNTHUA-DT (rendering over 100 images per minute at high visual fidelity) indicates a significant methodological improvement over traditional manual annotation workflows. The ability to rapidly regenerate datasets under various conditions is particularly valuable for iterative development and experimentation in machine learning. Nonetheless, the practical efficiency of the approach must be continually evaluated in terms of downstream training time and computational resources, particularly when scaling to more extensive multi-city digital twins.

Preliminary qualitative validation highlights strong potential for sim-to-real transfer, driven by the synthetic dataset’s high visual realism and consistent semantic annotations. These factors are known to positively impact generalization performance [22]. However, while the initial assessments by independent evaluators confirm spatial and visual credibility, comprehensive empirical validations against diverse real-world datasets remain imperative. Future work should explicitly quantify domain gaps using automated metrics and systematically investigate domain adaptation strategies to maximize real-world applicability.

Although synthetic datasets such as SYNTHUA-DT offer significant advantages in terms of scalability, annotation consistency, and representational inclusivity, there remains an inherent risk that virtual representation could inadvertently perpetuate biases or unrealistic portrayals, particularly concerning vulnerable populations like individuals with mobility impairments. Synthetic portrayals of disability might inadvertently reinforce stereotypes, oversimplify diverse lived experiences, or omit critical contextual nuances present in real-world scenarios. To mitigate these concerns, SYNTHUA-DT explicitly integrates diverse and realistically modeled mobility aids, scenarios, and user interactions, informed by domain expertise and stakeholder consultation. Nonetheless, recognizing the ethical responsibility inherent in synthetic data generation, future work will include ongoing participatory validation involving end users and experts from disabled communities to continuously refine scenario realism, reduce potential biases, and enhance the authenticity and dignity of representation in synthetic datasets aimed at promoting inclusive AI solutions.

5. Conclusions

The SYNTHUA-DT framework presents a substantial advancement in the synthetic generation and annotation of urban scenes for accessibility-aware computer vision. Across the dimensions of geometric fidelity, class diversity, annotation quality, and computational scalability, the results indicate a rigorously engineered dataset with high potential for real-world deployment. However, a critical discussion must interrogate not only the merits but also the underlying assumptions and methodological constraints, particularly in relation to existing benchmarks and the broader challenge of domain transferability.

First, the results confirm that the digital twin achieves near-survey-grade geometric accuracy, with deviations under 5 cm in key urban features. This level of precision supports the claim that photogrammetric reconstruction can provide a robust geometric substrate for synthetic scene generation. The exceptionally high agreement (99.98%) between semantic masks and ground-truth geometries further validates the annotation reliability. However, this strength presupposes that visual realism is sufficient to ensure model generalization. The qualitative assessments—where 92% of synthetic-real pairs were rated as topologically consistent and 87% as visually credible—indicate strong perceptual alignment, yet they rely on human judgment. Without formal deployment tests or quantitative metrics such as LPIPS or FID, generalization remains a well-supported hypothesis, not a demonstrated fact.

A second critical axis lies in the semantic design of SYNTHUA-DT. The inclusion of 37 classes, including seven focused on mobility aids (e.g., wheelchairs, walkers, smart canes), constitutes a methodological innovation rarely seen in synthetic urban datasets. Traditional benchmarks such as Cityscapes and KITTI fail to model these categories, rendering them unsuitable for equitable training. However, a skeptic might note that these added classes represent only a small fraction of total annotations (e.g., 1.08% for wheelchairs), raising the question of whether their frequency is sufficient to yield robust learned representations. This concern is mitigated by the purposeful scene construction that ensured ~42% of images contain at least one accessibility class—an intentional bias that departs from naturalistic distributions in favor of representational equity.

The class imbalance analysis further complicates this picture. While SYNTHUA-DT demonstrates a more balanced distribution (Gini = 0.71) compared to KITTI or Cityscapes (>0.80), the dataset remains inherently long-tailed. The imbalance ratios of 312.74 for buses and over 24 for most assistive devices present a major challenge for model training, particularly when such classes also exhibit high intra-class variance (e.g., in object scale or appearance). Focal loss and curriculum learning are proposed as remedies, yet focal loss has so far been tested only in the preliminary experiment of Section 3.10, and both methods demand fuller empirical validation in future work. The implicit assumption that a balanced label space translates directly to fair model outputs warrants further scrutiny.

From a scalability standpoint, SYNTHUA-DT clearly surpasses prior benchmarks. With an image generation rate exceeding 100 annotated frames per minute and full support for semantic, instance, and panoptic segmentation, the pipeline demonstrates both automation and versatility. This is particularly important given the high costs and inconsistencies associated with manual annotation in datasets like Mapillary or Cityscapes. However, synthetic annotation systems often obscure the complexity of edge cases—e.g., partial occlusions, shadows, or temporal variations—which may be underrepresented or simplified in a rendered environment. While the photogrammetric textures in SYNTHUA-DT improve realism, they may not fully replicate the sensor noise, transient object behavior, or edge ambiguity of real data.

In terms of environmental diversity, SYNTHUA-DT introduces 22,412 images across five weather and illumination scenarios, from foggy rain to nighttime. This controlled diversity allows for rigorous ablation and robustness testing. Nevertheless, the assumption that these conditions generalize to unseen urban environments must be carefully qualified. The dataset remains geographically bounded to Curitiba, and although the digital twin is photogrammetrically accurate, urban typologies, architectural styles, and cultural context can vary significantly across cities. Thus, without broader geographic sampling or domain adaptation mechanisms, model transferability remains an open question.

The deliberate inclusion of accessibility-related elements, including avatars with mobility aids in plausible urban contexts, positions SYNTHUA-DT as a uniquely valuable resource for inclusive AI development. The authors show that such elements co-occur with relevant infrastructure (e.g., ramps, crosswalks), and the heatmap analysis supports the realism of these interactions. However, true representational justice in AI systems requires not just presence but performance, i.e., evidence that models trained on SYNTHUA-DT accurately detect and respond to these rare classes under real-world noise, occlusion, or ambiguity. While SYNTHUA-DT provides the first step through synthetic abundance and annotation consistency, this must be followed by deployment-level validation and participatory evaluation with end users from the disabled community.

The pilot experiment used Mask R-CNN with a ResNet-50 backbone, implemented in PyTorch (version 2.1.0, Meta AI, Menlo Park, CA, USA), and provided baseline performance benchmarks across all 37 semantic classes, demonstrating the dataset’s effectiveness for training state-of-the-art instance segmentation models. Notably, critical accessibility-related classes such as wheelchair, walker, and smart cane achieved promising results, supporting the potential of SYNTHUA-DT to underpin robust accessibility-aware vision systems. Moreover, preliminary tests that incorporated focal loss to mitigate class imbalance yielded further improvements in key performance metrics, reinforcing the importance of methodological strategies for handling dataset imbalance.
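To make the role of focal loss in imbalance mitigation concrete, the sketch below evaluates the focal loss for a single prediction in plain Python. It is not the training code used in the pilot experiment: the function name and the `gamma` and `alpha` values are illustrative assumptions, and the focal-loss configuration used with Mask R-CNN is not specified in this section.

```python
import math
from typing import Sequence


def focal_loss(probs: Sequence[float], target: int,
               gamma: float = 2.0, alpha: float = 1.0) -> float:
    """Focal loss for one sample: -alpha * (1 - p_t)**gamma * log(p_t).

    probs  : predicted class probabilities (assumed to sum to 1)
    target : index of the true class
    gamma  : focusing parameter; gamma = 0 recovers plain cross-entropy
    alpha  : scalar weighting factor (illustrative; often set per class)
    """
    # Clamp to avoid log(0) for numerically extreme predictions.
    p_t = min(max(probs[target], 1e-12), 1.0)
    return -alpha * (1.0 - p_t) ** gamma * math.log(p_t)


# A confidently correct ("easy") sample versus a poorly predicted ("hard") one.
easy = [0.9, 0.1]
hard = [0.1, 0.9]
for name, probs in (("easy", easy), ("hard", hard)):
    ce = focal_loss(probs, target=0, gamma=0.0)   # cross-entropy baseline
    fl = focal_loss(probs, target=0, gamma=2.0)   # focal loss
    print(name, ce, fl, fl / ce)
```

The modulating factor `(1 - p_t)**gamma` shrinks the loss of well-classified samples far more than that of misclassified ones, so gradients are dominated less by abundant, easy classes and more by rare or difficult ones.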

SYNTHUA-DT offers a compelling response to long-standing limitations in urban scene datasets. Its fidelity, semantic breadth, and scalability represent clear advances. Yet, the transition from synthetic precision to real-world inclusion demands further methodological rigor, especially in validating whether model fairness and generalization are preserved beyond simulation. Future work should explore hybrid training regimes combining SYNTHUA-DT with real-world samples, formalize perceptual similarity metrics, and expand the geographic and cultural scope of the digital twin framework. Only through such efforts can we ensure that accessibility-aware AI systems move from theoretical inclusivity to practical impact.

Images by weather and illumination condition:

Clear daytime scenes: 3342 images
Daytime fog: 8586 images
Daytime rain with fog: 7304 images
Clear nighttime: 1572 images
Nighttime with rain and fog: 13,287 images

Images by scene density:

Low-density scenes (≤100 instances): 2979 images
Medium-density scenes (101–500 instances): 4622 images
High-density scenes (>500 instances): 2396 images
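
The scene-density thresholds listed above can be expressed as a small helper, sketched here under the assumption that density is determined solely by the number of annotated instances in an image. The function name `density_bin` and the returned labels are hypothetical.

```python
def density_bin(num_instances: int) -> str:
    """Map an image's annotated instance count to a density category.

    Thresholds follow the listed bins: <=100 low, 101-500 medium, >500 high.
    """
    if num_instances < 0:
        raise ValueError("instance count must be non-negative")
    if num_instances <= 100:
        return "low"
    if num_instances <= 500:
        return "medium"
    return "high"


# Hypothetical per-image instance counts, for illustration only.
for count in (42, 101, 500, 1200):
    print(count, density_bin(count))
```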