Dual-Channel ADCMix–BiLSTM Model with Attention Mechanisms for Multi-Dimensional Sentiment Analysis of Danmu

Abstract

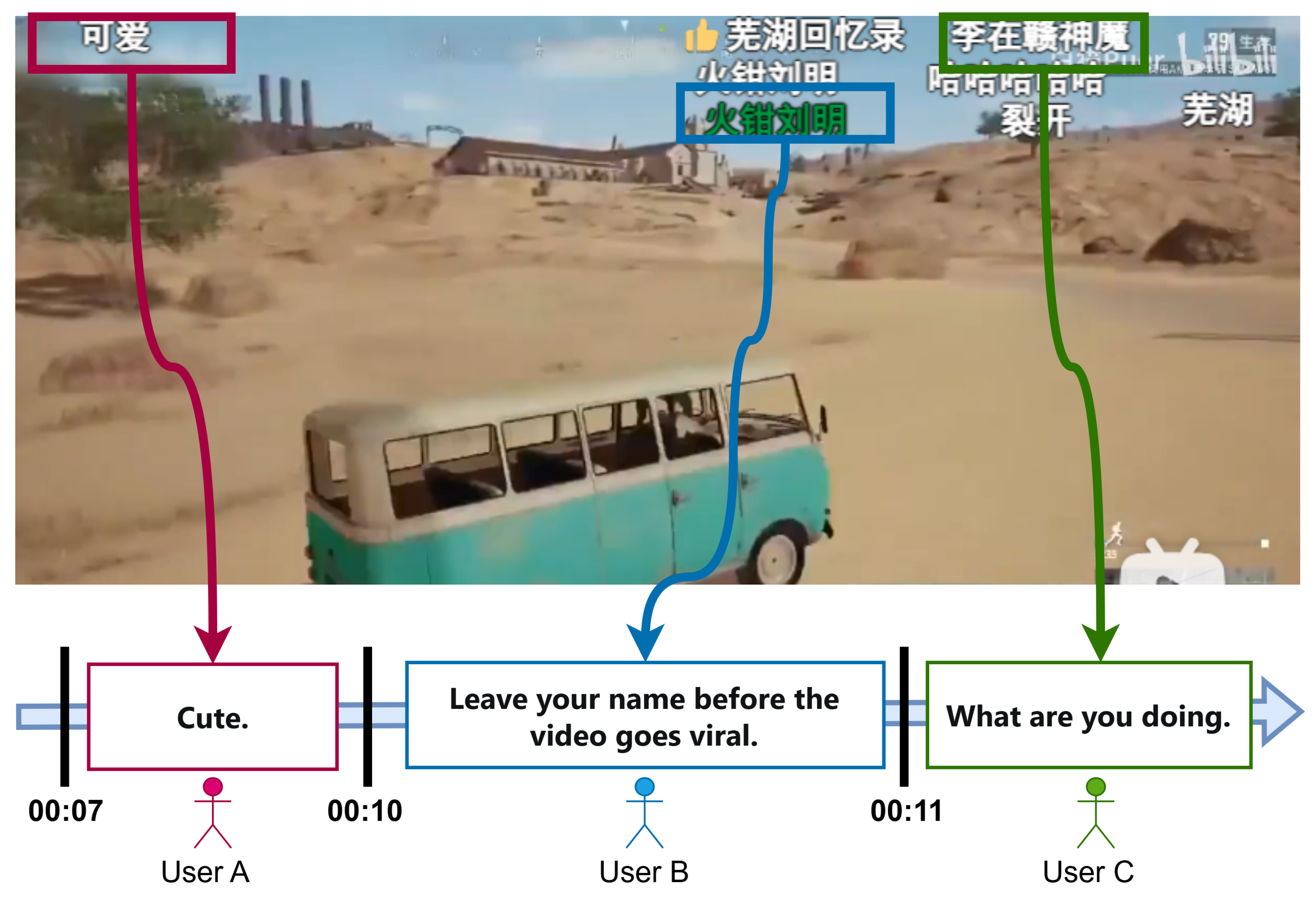

1. Introduction

- (1) This paper presents a dual-channel ADCMix–BiLSTM model integrated with attention mechanisms for multi-dimensional sentiment analysis of Danmu texts. Unlike traditional models, which handle short texts and informal language poorly, the proposed architecture can effectively capture the complex, multi-dimensional sentiment information in Danmu.

- (2)

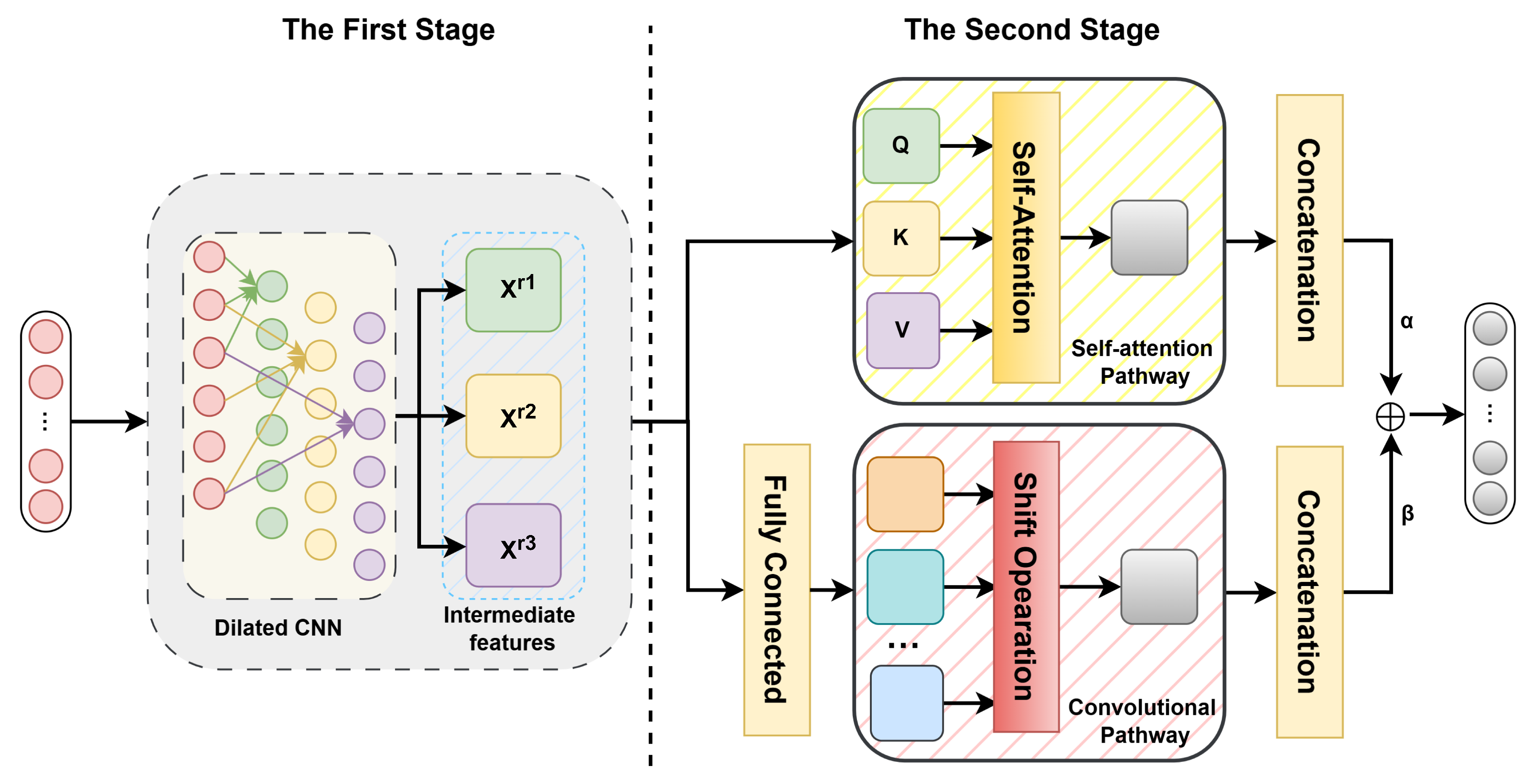

- The ADCMix structure is designed to capture the high-level semantic information of multi-dimensional sentiment features in Danmu. The dilated CNN in the ADCMix component learns the sentiment features from multiple perspectives, and the self-attention mechanism with a convolutional module learns the sentiment features from different perspectives.

- (3) The proposed model is validated through comprehensive experiments on two self-constructed datasets, Romance of the Three Kingdoms and The Truman Show.

2. Related Work

2.1. Danmu Sentiment Analysis

2.2. Short-Text Sentiment Analysis

3. Materials and Methods

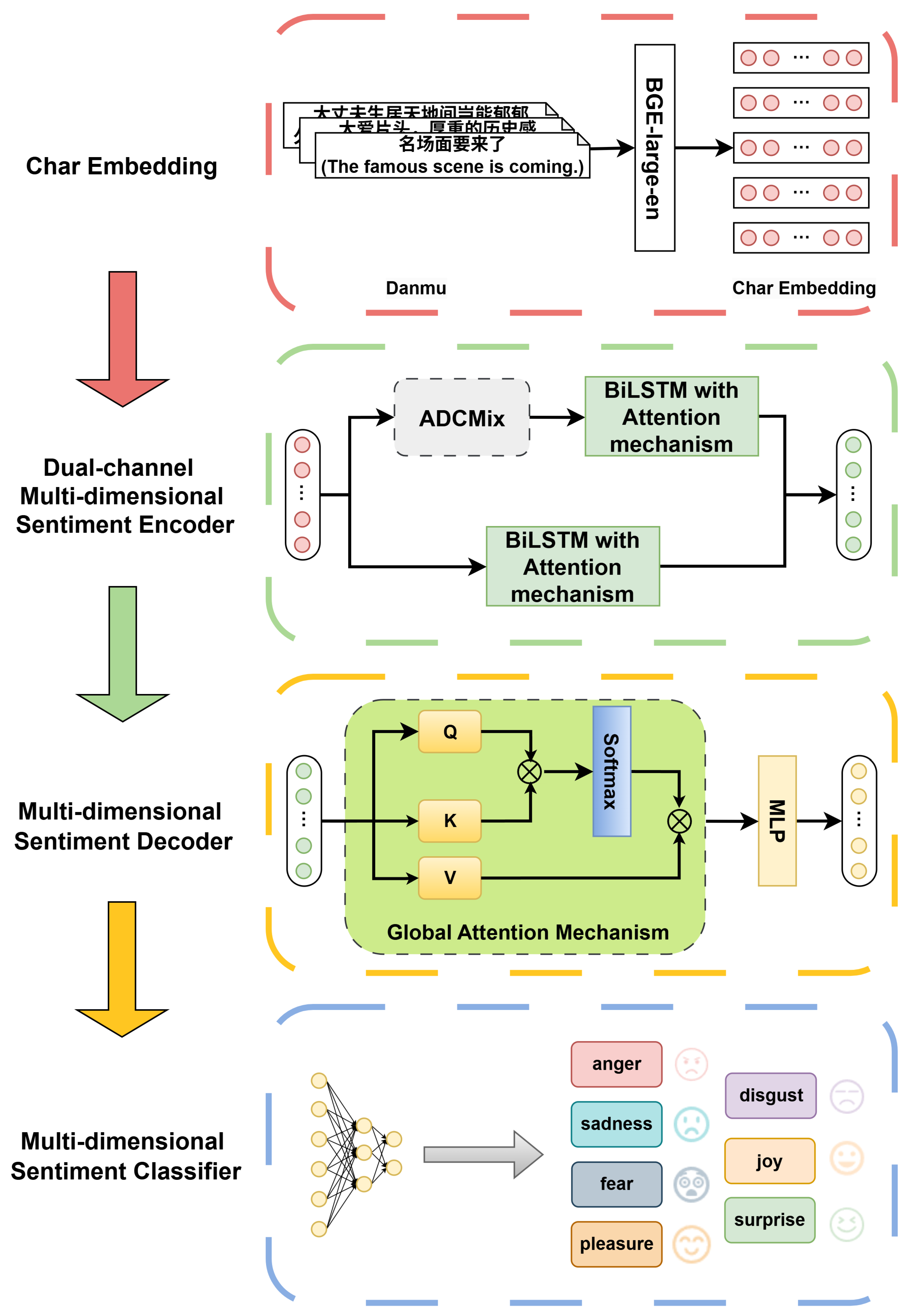

- Char Embedding: Utilizes the BGE-large-en model to encode Danmu at the character level, capturing fine-grained semantics and handling the flexibility of Chinese character composition.

- Dual-Channel Multi-Dimensional Sentiment Encoder:

- -

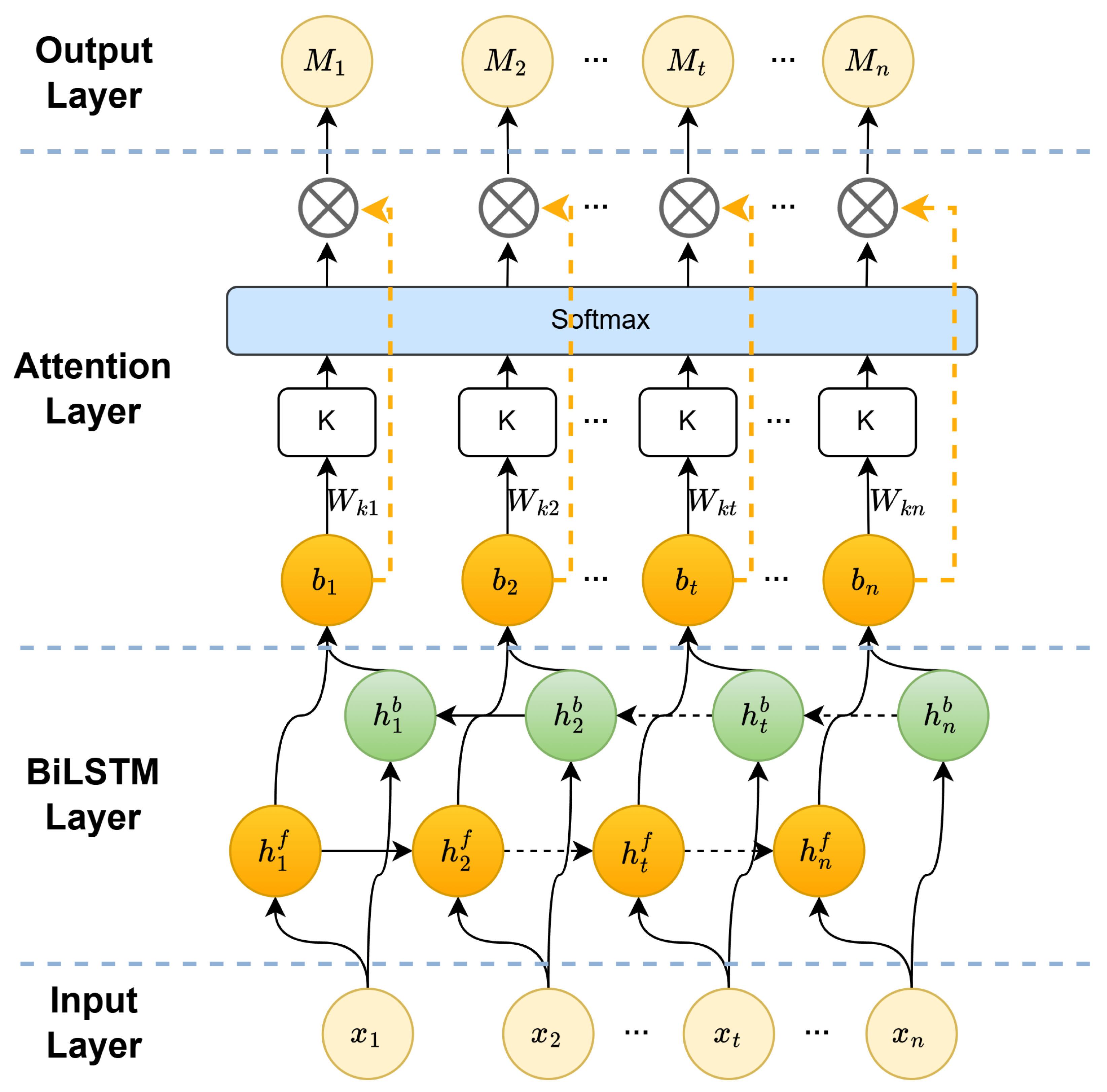

- Channel I: Extracts multi-scale sentiment features using the proposed ADCMix and models long-range dependencies via BiLSTM with attention.

- -

- Channel II: Preserves original contextual information through a parallel BiLSTM with attention path.

- -

- Integration: Fuses both channels to enhance feature richness and multi-dimensional sentiment representation.

- Multi-Dimensional Sentiment Decoder: Combines a global attention module and an MLP to decode subtle sentiment variations and refine sentiment representations.

- Multi-Dimensional Sentiment Classifier: Maps the final feature representation to a sentiment dimension using a fully connected layer and SoftMax activation.
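The last two stages of the pipeline above (channel integration and the fully connected layer with SoftMax) can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: fusion by concatenation is an assumption (the fusion rule is not stated here), the helper names `fuse`, `linear`, `softmax`, and `classify` are hypothetical, and the decoder's global attention and MLP are omitted. The seven labels are taken from the dataset tables of this paper.

```python
import math

# Seven sentiment dimensions, as listed in the dataset tables of this paper.
LABELS = ["Joy", "Anger", "Sadness", "Fear", "Surprise", "Disgust", "Pleasure"]


def fuse(channel_one, channel_two):
    """Fuse two channel feature vectors by concatenation (assumed fusion rule)."""
    return list(channel_one) + list(channel_two)


def linear(features, weights, bias):
    """Fully connected layer: one logit per row of `weights`."""
    return [sum(w * x for w, x in zip(row, features)) + b
            for row, b in zip(weights, bias)]


def softmax(logits):
    """Numerically stable softmax: subtract the max before exponentiating."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(channel_one, channel_two, weights, bias):
    """Return the most probable label and the full probability vector."""
    probs = softmax(linear(fuse(channel_one, channel_two), weights, bias))
    best = max(range(len(probs)), key=probs.__getitem__)
    return LABELS[best], probs


# Toy usage with made-up features and weights (illustrative values only).
toy_weights = [[1.0 if j == i else 0.0 for j in range(4)] for i in range(7)]
toy_bias = [0.0] * 7
label, probs = classify([0.1, 0.2], [0.9, 0.0], toy_weights, toy_bias)
print(label, [round(p, 3) for p in probs])
```

In a trained model the weights and bias would be learned, and the fused vector would come from the decoder rather than directly from the two channels.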

3.1. Char Embedding

3.2. Dual-Channel Multi-Dimensional Sentiment Encoder

3.2.1. Channel I

3.2.2. Channel II

3.2.3. Dual-Channel Integration

3.3. Multi-Dimensional Sentiment Decoder

3.4. Multi-Dimensional Sentiment Classifier

4. Results and Discussion

4.1. Experimental Setup

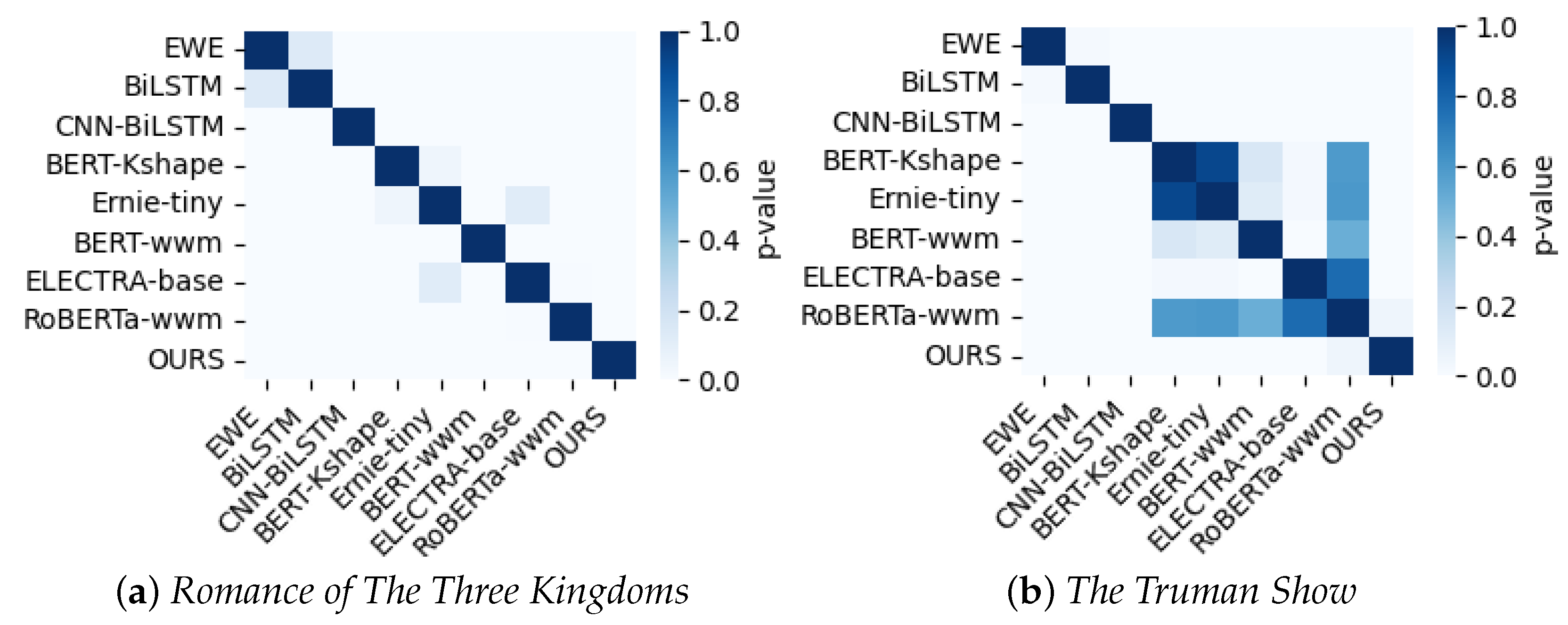

4.2. Comparisons of the ADCMix–BiLSTM and SOTA Models

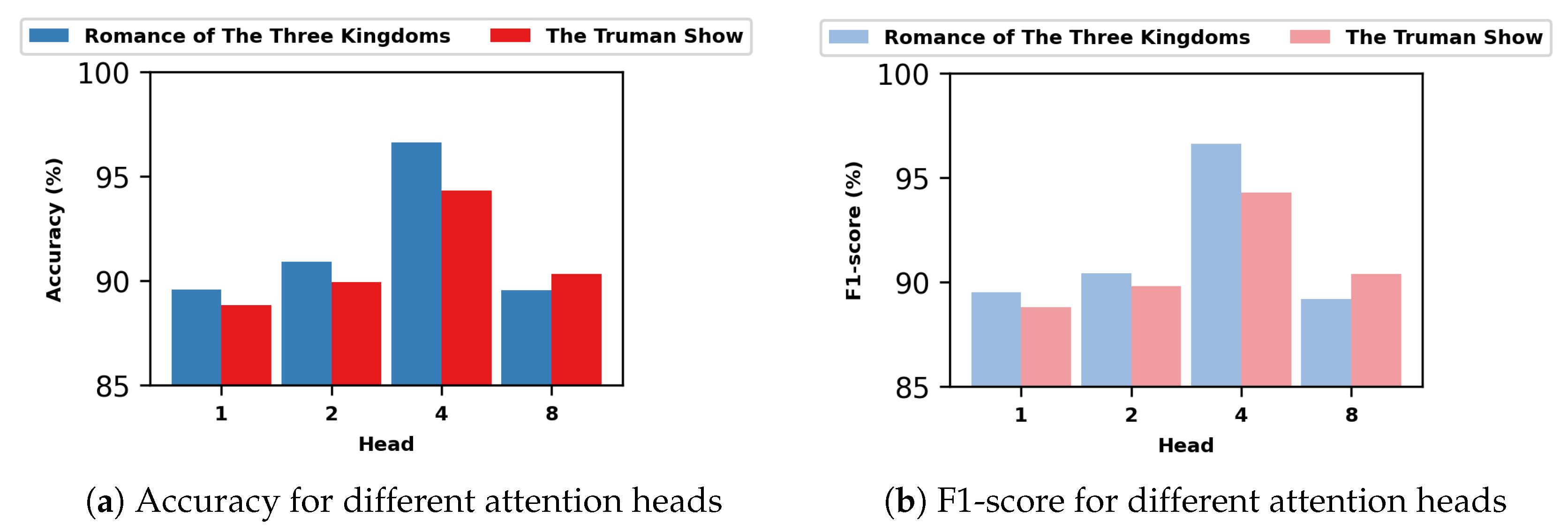

4.3. Analysis of the Factors Influencing Model Performance

4.3.1. Embedding and Channel Analysis

4.3.2. Parameter Analysis in ADCMix

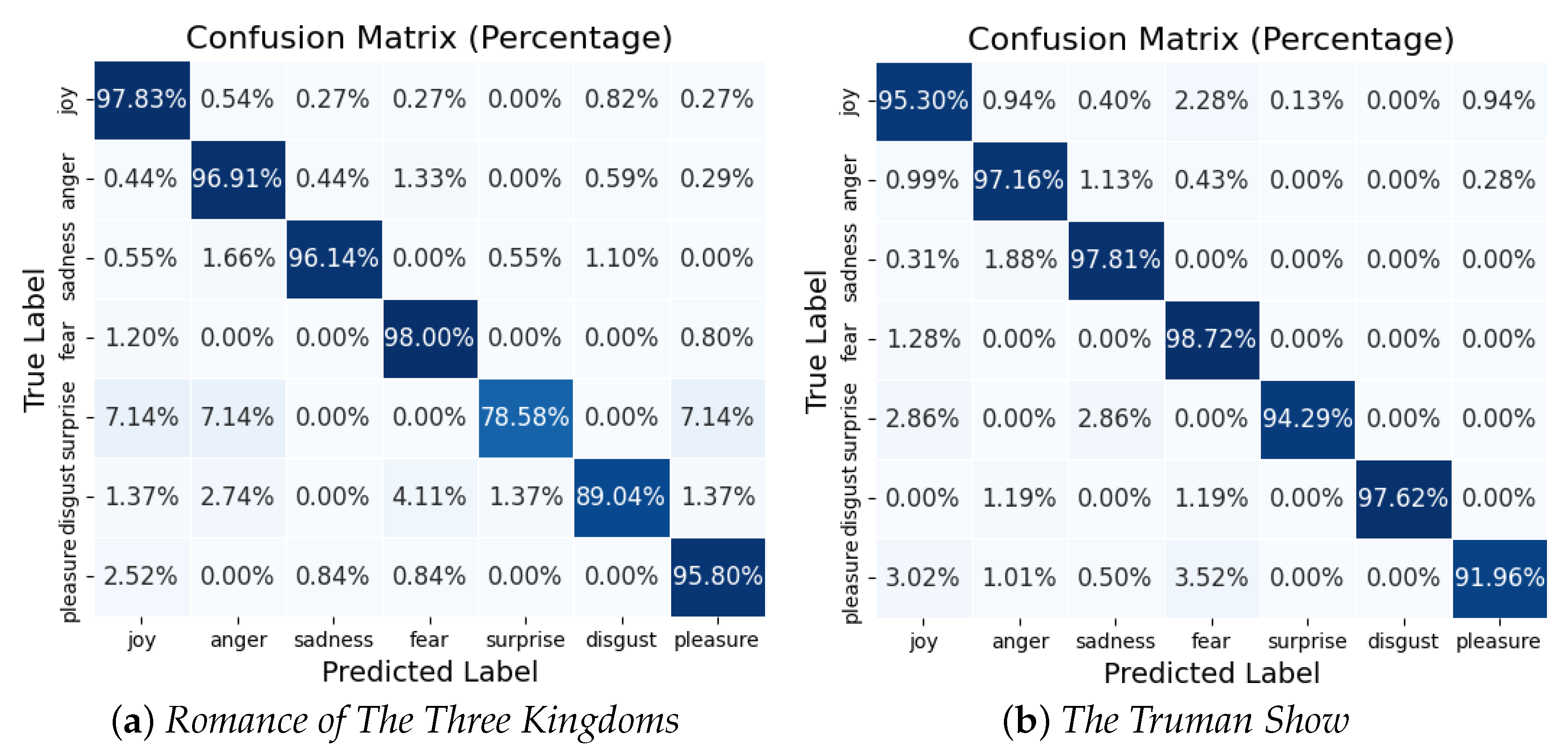

4.4. Analysis of Misclassifications

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Dataset | Number of Danmu | Joy | Anger | Sadness | Fear | Surprise | Disgust | Pleasure |
|---|---|---|---|---|---|---|---|---|
| Romance of the Three Kingdoms | 6736 | 831 | 1024 | 1460 | 42 | 391 | 446 | 2541 |
| The Truman Show | 8858 | 1191 | 1072 | 2432 | 186 | 482 | 719 | 2776 |
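The class counts above are uneven, which is relevant when reading accuracy next to F1-score. The short sketch below computes each class's share and a simple imbalance ratio (largest class divided by smallest) from the listed counts. The helper names are hypothetical. Shares are taken over the sum of the per-class counts, which for the first dataset is 6735, one less than the listed total of 6736.

```python
# Per-class Danmu counts copied from the dataset table.
COUNTS = {
    "Romance of the Three Kingdoms": {
        "Joy": 831, "Anger": 1024, "Sadness": 1460, "Fear": 42,
        "Surprise": 391, "Disgust": 446, "Pleasure": 2541,
    },
    "The Truman Show": {
        "Joy": 1191, "Anger": 1072, "Sadness": 2432, "Fear": 186,
        "Surprise": 482, "Disgust": 719, "Pleasure": 2776,
    },
}


def class_shares(counts):
    """Fraction of samples per class, relative to the sum of the counts."""
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}


def imbalance_ratio(counts):
    """Size of the largest class divided by the size of the smallest."""
    return max(counts.values()) / min(counts.values())


for name, counts in COUNTS.items():
    shares = class_shares(counts)
    rarest = min(shares, key=shares.get)
    print(f"{name}: rarest class {rarest} ({shares[rarest]:.1%}), "
          f"imbalance ratio {imbalance_ratio(counts):.1f}")
```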

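| Module | Component | Parameter | Value |
|---|---|---|---|
| ADCMix | Dilated CNN | Out_Channels | 128 |
| ADCMix | Dilated CNN | Kernel | 3 |
| ADCMix | Dilated CNN | Dilation | (1, 2, 3) |
| ADCMix | Dilated CNN | Padding Formula | |
| ADCMix | Dilated CNN | Activation | ReLU |
| ADCMix | Self-Attention Pathway | Embed_Dim | 128 |
| ADCMix | Self-Attention Pathway | Heads | 4 |
| ADCMix | Self-Attention Pathway | Head_Dim | 32 |
| ADCMix | Convolutional Pathway | Out_Channels | 128 |
| ADCMix | Convolutional Pathway | Kernel | 1 |
| ADCMix | Convolutional Pathway | Groups | 32 |
| BiLSTM with Attention Mechanism | BiLSTM | Num_Layers | 2 |
| BiLSTM with Attention Mechanism | BiLSTM | Dropout | 0.5 |
| BiLSTM with Attention Mechanism | BiLSTM | Hidden_Size | 256 |
| BiLSTM with Attention Mechanism | Attention Mechanism | Activation | tanh |
| BiLSTM with Attention Mechanism | Attention Mechanism | Dropout | 0.3 |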
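To make the dilated CNN settings concrete (kernel 3, dilation rates 1, 2, and 3), the sketch below implements a single-channel dilated 1D convolution in pure Python. The padding formula does not survive in the table, so the rule used here, `dilation * (kernel - 1) // 2` per side, is an assumption: it is the common "same" padding for stride 1 and an odd kernel, not necessarily the one the authors used. The function names are hypothetical, and whether the three dilation rates run in parallel or in sequence is not stated here, so only the per-layer receptive field is shown.

```python
def same_padding(kernel, dilation):
    """Zero padding per side that keeps the output as long as the input.

    Assumed rule for stride 1 and an odd kernel size.
    """
    return dilation * (kernel - 1) // 2


def receptive_field(kernel, dilation):
    """Number of input positions spanned by one dilated kernel."""
    return dilation * (kernel - 1) + 1


def dilated_conv1d(signal, kernel_weights, dilation):
    """Single-channel dilated 1D convolution with zero 'same' padding."""
    k = len(kernel_weights)
    pad = same_padding(k, dilation)
    padded = [0.0] * pad + list(signal) + [0.0] * pad
    # Each output position reads k taps spaced `dilation` apart.
    return [
        sum(w * padded[i + j * dilation] for j, w in enumerate(kernel_weights))
        for i in range(len(signal))
    ]


for d in (1, 2, 3):
    print(f"dilation={d}: padding={same_padding(3, d)}, "
          f"receptive field={receptive_field(3, d)}")
```

With kernel 3, larger dilation widens the span each filter sees without adding weights, which is one way to read the "multi-scale" description of the ADCMix component.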

| Category | Method | Romance of the Three Kingdoms ACC (%) | Romance of the Three Kingdoms F1-Score (%) | The Truman Show ACC (%) | The Truman Show F1-Score (%) |
|---|---|---|---|---|---|
| deep learning | EWE-LSTM | | | | |
| deep learning | BiLSTM | | | | |
| deep learning | Dilated CNN-BiLSTM | | | | |
| pretrained | BERT-Kshape | | | | |
| pretrained | Ernie-tiny | | | | |
| pretrained | Chinese-BERT-wwm | | | | |
| pretrained | Chinese-ELECTRA-base | | | | |
| pretrained | RoBERTa-wwm-ext | | | | |
| | OURS | | | | |

| Embedding Method | Romance of the Three Kingdoms ACC (%) | Romance of the Three Kingdoms F1-Score (%) | The Truman Show ACC (%) | The Truman Show F1-Score (%) |
|---|---|---|---|---|
| word-level | 51.72 | 46.85 | 54.40 | 50.39 |
| char-level | 96.62 | 96.62 | 94.31 | 94.29 |
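For reference, the two reported metrics can be computed as in the sketch below. The averaging scheme behind the F1-scores is not stated here, so the support-weighted average is an assumption, and the function names are hypothetical. The toy labels are made up for illustration and are not drawn from the datasets.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_per_class(y_true, y_pred):
    """Per-class F1 = 2*TP / (2*TP + FP + FN); 0.0 when the class never occurs."""
    scores = {}
    for label in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores[label] = 2 * tp / denom if denom else 0.0
    return scores


def weighted_f1(y_true, y_pred):
    """Average of per-class F1, weighted by each class's true support (assumed scheme)."""
    support = Counter(y_true)
    scores = f1_per_class(y_true, y_pred)
    return sum(scores[label] * n for label, n in support.items()) / len(y_true)


# Toy labels, for illustration only.
truth = ["Joy", "Joy", "Anger", "Fear"]
preds = ["Joy", "Anger", "Anger", "Fear"]
print(accuracy(truth, preds), weighted_f1(truth, preds))
```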

| Architecture | Romance of the Three Kingdoms ACC (%) | Romance of the Three Kingdoms F1-Score (%) | The Truman Show ACC (%) | The Truman Show F1-Score (%) |
|---|---|---|---|---|
| Single Channel I | 88.54 | 88.22 | 86.59 | 86.53 |
| Single Channel II | 81.41 | 81.19 | 77.70 | 77.23 |
| Dual-channel | 96.62 | 96.62 | 94.31 | 94.29 |

| Dilation Rates | Romance of the Three Kingdoms ACC (%) | Romance of the Three Kingdoms F1-Score (%) | The Truman Show ACC (%) | The Truman Show F1-Score (%) |
|---|---|---|---|---|
| (1,1,1) | 96.02 | 96.02 | 92.02 | 92.74 |
| (1,2,3) | 96.62 | 96.62 | 94.31 | 94.29 |
| (2,2,2) | 95.31 | 95.31 | 93.77 | 93.74 |
| (2,3,4) | 95.67 | 95.65 | 93.00 | 93.00 |
| (3,3,3) | 95.37 | 95.33 | 91.96 | 91.96 |
| (4,5,6) | 95.31 | 95.32 | 92.28 | 92.26 |

| Method | | | Romance of the Three Kingdoms ACC (%) | Romance of the Three Kingdoms F1-Score (%) | The Truman Show ACC (%) | The Truman Show F1-Score (%) |
|---|---|---|---|---|---|---|
| Swin-T | 1 | - | 95.13 | 95.12 | 90.88 | 90.87 |
| Conv-Swin-T | - | 1 | 93.17 | 93.20 | 90.70 | 90.70 |
| Swin-ADCMix-T | 1 | 1 | 96.56 | 96.55 | 91.96 | 91.96 |
| | 1 | | 95.79 | 95.78 | 92.87 | 92.86 |
| | 1- | | 95.61 | 95.61 | 92.51 | 92.50 |
| | | | 96.62 | 96.62 | 94.31 | 94.29 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ping, W.; Bai, Z.; Tao, Y. Dual-Channel ADCMix–BiLSTM Model with Attention Mechanisms for Multi-Dimensional Sentiment Analysis of Danmu. Technologies 2025, 13, 353. https://doi.org/10.3390/technologies13080353
