Abstract

Model order reduction (MOR) is crucial for efficiently simulating large-scale RLCk models extracted from modern integrated circuits. Among MOR methods, balanced truncation offers strong theoretical error bounds but is computationally intensive and does not preserve passivity. In contrast, moment matching (MM) techniques are widely adopted in industrial tools due to their computational efficiency and ability to preserve passivity in RLCk models. Typically, MM approaches based on the rational Krylov subspace (RKS) are employed to produce reduced-order models (ROMs). However, the quality of the reduction is influenced by the selection of the number of moments and expansion points, which can be challenging to determine. This underlines the need for advanced strategies and reliable convergence criteria to adaptively control the reduction process and ensure accurate ROMs. This article introduces a frequency-aware multi-point MM (MPMM) method that adaptively constructs an RKS by closely monitoring the ROM transfer function. The proposed approach features automatic expansion point selection, local and global convergence criteria, and efficient implementation techniques. Compared to an established MM technique, MPMM achieves up to 16.3× smaller ROMs for the same accuracy, over 99.18% reduction in large-scale benchmarks, and up to 4× faster runtime. These advantages establish MPMM as a strong candidate for integration into industrial parasitic extraction tools.

1. Introduction

In recent years, advanced integrated circuit technologies, such as 3D packaging, have pushed the limits of performance and integration by overcoming key manufacturing limitations [1]. However, these advancements have introduced significant electromagnetic interactions, including mutual inductive coupling effects arising from densely packed interconnects and multi-layer routing structures. Modeling these interactions using parasitic extraction tools [2] often results in large-scale RLCk models with an extensive number of mutual inductances, rendering traditional SPICE simulation computationally intensive. Model order reduction (MOR) techniques aim to alleviate this issue by constructing reduced-order models (ROMs) that preserve the input–output behavior of the original systems, significantly reducing computational cost and simulation time.

MOR techniques can be classified into two main categories: balanced truncation (BT) and moment matching (MM). BT methods [3,4,5,6,7,8] provide robust approximation error bounds and can handle multi-port circuits effectively. However, BT becomes impractical for large-scale RLCk models as Gramian computation requires the solution of computationally expensive Lyapunov matrix equations and storage of dense matrices. Moreover, BT does not inherently preserve passivity [4] unless special post-processing techniques are applied, which often introduce additional computational overhead [3,9]. In contrast, MM methods [10,11,12,13,14,15,16,17] are better suited for large-scale model reduction due to their computational efficiency. These methods successfully exploit the sparsity of the system matrices and preserve passivity in symmetric RLCk systems by performing the projection through a congruence transformation [10]. However, the accuracy and size of the resulting ROM strongly depend on the quality of the underlying Krylov subspace.

MM techniques typically perform projection using the standard Krylov subspace [10], which is efficient at frequencies near the DC point but often degrades in accuracy at higher frequencies [16]. To overcome this limitation, the rational Krylov subspace (RKS) has been widely adopted in MOR methods [6,8,12,13,14,15,17]. Nevertheless, RKS performance heavily depends on the number and placement of the expansion points, which may be challenging to determine automatically. Although several methods use adaptive strategies or iteratively refine expansion points based on ROM quality, they still require the number of moments or the size of the ROM to be predefined [12,13,14,15,17]. Moreover, while most of them are suitable for $\mathcal{H}_2$-optimal reduction [6,12,13,17], offering globally optimal solutions, they often produce ROMs of unnecessarily high order in frequency-limited applications (e.g., circuit simulation). To improve ROM accuracy within specific frequency windows, the authors of [14,15] propose using initial frequency bounds to guide the RKS construction. However, [14] requires the calculation of the original system transfer function, which is infeasible for large-scale models, while [15] selects expansion points based on the ROM dominant poles, a strategy that can overlook frequency regions with high approximation error. Furthermore, most methods lack robust convergence criteria, which directly affects both ROM quality and reduction performance.

Beyond projection-based MOR techniques, data-driven methods for rational approximation have recently gained attention in the context of frequency-domain modeling. Algorithms such as AAA [18], Modified AAA [19], and AGORA [20] construct reduced-order rational approximations directly from frequency response samples, without access to the original system matrices. While these approaches are well-suited for small-scale or black-box systems, they are not directly applicable to large-scale RLCk models, where the sampling of the system’s frequency response can be computationally intensive.

These limitations highlight the need for frequency-aware MOR methods with reliable convergence criteria, which can adaptively refine the RKS in regions of high approximation error. In this work, we propose a frequency-aware multi-point MM (MPMM) method that iteratively builds an RKS, guided by the ROM transfer function error. The proposed method adaptively selects expansion points and assesses convergence based on local and global convergence criteria, ensuring compact and accurate ROMs over the frequency range of interest. Building upon our prior methodology for low-rank BT via multi-point RKS projection [8], this work extends the concept to MM and introduces the following key contributions:

- Two distinct convergence criteria, entirely based on the ROM’s transfer function, avoiding the calculation of the original model’s response [14]. The local convergence criterion allows the number of moments per expansion point to be chosen automatically, eliminating the need for fixed iteration counts [15], while the global convergence criterion provides a reliable assessment of the overall ROM accuracy.

- An adaptive expansion point selection strategy, where each subsequent expansion point is selected based on the current ROM error profile. This allows the algorithm to target the most critical frequency regions.

- Efficient implementation techniques, such as sparse/dense matrix handling and substitution of matrix inversions by linear solves, enabling fast and scalable reduction of large-scale RLCk models.

An extensive evaluation against an established MM method [15] demonstrates that MPMM produces ROMs that are 2.6× on average and up to 16.3× smaller for the same accuracy, achieving over 99.18% order reduction for large-scale models. Additionally, it exhibits up to 4× faster reduction time, with an average speedup of 2.3×, and up to 2.5× less memory consumption, further highlighting its computational efficiency. Given its effectiveness, the proposed method can be integrated into industrial parasitic extraction tools, such as ANSYS RaptorH™ [2], enabling an efficient reduction of complex RLCk circuit models.

The remainder of this article is organized as follows. Section 2 provides a review of existing MOR techniques, focusing on MM methods. Section 3 outlines the key concepts of MM and introduces the RKS. Section 4 presents our proposed MPMM methodology along with efficient implementation techniques. Section 5 reports the experimental results, and Section 6 concludes this article.

2. Related Work

MOR has been a key enabler for efficiently simulating large-scale RLCk models of integrated circuits. Among various MOR techniques [3,4,5,6,7,8,10,11,12,13,14,15,16,17], two main families have been widely explored: MM and BT. BT methods [3,4,5,6,7,8] provide theoretical error bounds and strong control over the approximation quality. They achieve model reduction by solving large-scale Lyapunov equations and performing a balancing transformation based on the controllability and observability Gramians of the system. However, applying BT directly to large models is often infeasible due to the high computational and memory costs of solving dense matrix equations, even with low-rank approximations. Moreover, BT does not guarantee passivity unless post-reduction passivity enforcement techniques [3,9] are applied, further increasing computational cost. On the other hand, MM approaches [10,11,12,13,14,15,16,17] have been widely used in industrial tools [2] due to their simplicity and robustness. MM techniques generate ROMs by projecting the original system onto Krylov subspaces, effectively matching the moments of the original system’s transfer function around selected expansion points. Such methods inherently preserve passivity for symmetric RLCk systems [10] and are highly scalable for large-scale, sparse descriptor models.

Traditional MM approaches use the standard Krylov subspace, where the basis is generated around the DC expansion point [10,11]. Although such methods have straightforward implementation and are computationally efficient, their accuracy is localized around the DC frequency and degrades rapidly for frequencies farther away. To improve approximation quality, the extended Krylov subspace (EKS) has been proposed [16,17]. EKS combines the standard and inverse Krylov subspaces, expanding the subspace both around DC and infinity, improving spectral coverage from low to high frequencies in a single projection. However, like their predecessors, EKS-based methods struggle to maintain high accuracy in specific frequencies, as they target the entire frequency spectrum [6].

To address this limitation, RKS-based methods [6,12,13,14,15,17] further generalize MM by introducing arbitrary real or complex shifts in the projection process. In the context of circuit simulation, using real shifts is preferred to maintain real-valued ROMs [15]. Several techniques based on RKS aim to achieve $\mathcal{H}_2$-optimality [6,12,13,17]. The well-known IRKA algorithm [12] attempts to minimize the system’s $\mathcal{H}_2$-norm error by approximating the eigenvalues of the original system given a fixed ROM order r. This is performed through an iterative refinement of the shift parameters for the RKS. However, it requires a repeated calculation of the ROM eigenvalues and reconstruction of the RKS, which reduces its applicability to large-scale problems. The authors of [6,13] proposed more flexible versions of IRKA, which initially construct an RKS and then adaptively select subsequent expansion points based on ROM eigenvalues to expand the subspace, aiming to reduce computational cost. More advanced approaches have introduced a combination of EKS and RKS [17], achieving better results. Nevertheless, these methods [6,12,13,17] often produce unnecessarily large ROMs when only a limited frequency band is of interest.

To improve efficiency in frequency-limited applications, multi-point RKS methods have been developed [14,15]. The method proposed in [15] employs an adaptive three-point selection strategy (A3PSA), which selects expansion points at low, mid, and high frequencies based on a user-defined frequency range and the dominant poles of the system, following the IRKA scheme [12]. ROM accuracy is assessed by considering the differences in ROM eigenvalues computed at the previous and current three points (low, mid, and high). However, this technique assumes a fixed number of matched moments per expansion point, which may lead to suboptimal ROM sizes. The fully adaptive scheme presented in [14] dynamically selects expansion points based on the frequency at which the error between the transfer function of the original model and the ROM is the largest. The number of moments is then adjusted by monitoring the error within the interval between neighboring expansion points. Despite being promising, this method relies on a repeated evaluation of the original model’s response, which is practically infeasible for large-scale models.

Contrary to existing multi-point approaches [14,15], the proposed method relies exclusively on ROM-level information to guide both the selection of expansion points and the construction of the RKS. Moreover, the addition of a local convergence criterion allows the number of moments per expansion point to be determined automatically, avoiding unnecessary computations. Finally, our method can benefit from less frequent convergence checks, further improving reduction time.

3. MOR by MM

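Using the modified nodal analysis (MNA) [21], a linear RLCk circuit with n nodes, m (inductive) branches, p inputs, and q outputs can be described in the time domain as

$$
\begin{pmatrix} G_n & E \\ -E^T & 0 \end{pmatrix} \begin{pmatrix} v(t) \\ i(t) \end{pmatrix} + \begin{pmatrix} C_n & 0 \\ 0 & M \end{pmatrix} \begin{pmatrix} \dot{v}(t) \\ \dot{i}(t) \end{pmatrix} = \begin{pmatrix} B_1 \\ 0 \end{pmatrix} u(t), \qquad y(t) = \begin{pmatrix} L_1 & 0 \end{pmatrix} \begin{pmatrix} v(t) \\ i(t) \end{pmatrix} \tag{1}
$$

where $G_n \in \mathbb{R}^{n \times n}$ is the node conductance matrix, $C_n \in \mathbb{R}^{n \times n}$ is the node capacitance matrix, $M \in \mathbb{R}^{m \times m}$ is the branch inductance matrix, $E \in \mathbb{R}^{n \times m}$ is the node-to-branch incidence matrix, $v \in \mathbb{R}^{n}$ is the vector of node voltages, $i \in \mathbb{R}^{m}$ is the vector of inductive branch currents, $u \in \mathbb{R}^{p}$ is the vector of input excitations, $B_1 \in \mathbb{R}^{n \times p}$ is the input-to-node connectivity matrix, $y \in \mathbb{R}^{q}$ is the vector of output measurements, and $L_1 \in \mathbb{R}^{q \times n}$ is the node-to-output connectivity matrix. Moreover, we consider $\dot{v}(t) \equiv dv(t)/dt$ and $\dot{i}(t) \equiv di(t)/dt$. By defining the model order as $N \equiv n + m$, the state vector as $x(t) \equiv \begin{pmatrix} v(t) \\ i(t) \end{pmatrix}$, and also

$$
G \equiv -\begin{pmatrix} G_n & E \\ -E^T & 0 \end{pmatrix}, \qquad C \equiv \begin{pmatrix} C_n & 0 \\ 0 & M \end{pmatrix}, \qquad B \equiv \begin{pmatrix} B_1 \\ 0 \end{pmatrix}, \qquad L \equiv \begin{pmatrix} L_1 & 0 \end{pmatrix} \tag{2}
$$

the descriptor form is obtained:

$$
C \dot{x}(t) = G x(t) + B u(t), \qquad y(t) = L x(t) \tag{3}
$$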

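The primary goal of MOR is to compute a ROM:

$$
\tilde{C} \dot{\tilde{x}}(t) = \tilde{G} \tilde{x}(t) + \tilde{B} u(t), \qquad \tilde{y}(t) = \tilde{L} \tilde{x}(t)
$$

where $\tilde{G}, \tilde{C} \in \mathbb{R}^{r \times r}$, $\tilde{B} \in \mathbb{R}^{r \times p}$, $\tilde{L} \in \mathbb{R}^{q \times r}$, with $r \ll N$, such that the ROM accurately approximates the original system. The approximation quality is measured by the output error, which is bounded in the time domain as

$$
\| y(t) - \tilde{y}(t) \|_2 \le \varepsilon \, \| u(t) \|_2
$$

for any input $u(t)$ and a small tolerance $\varepsilon$. Using Plancherel’s theorem [22], the error bound can be equivalently expressed in the frequency domain as

$$
\| Y(s) - \tilde{Y}(s) \|_2 \le \varepsilon \, \| U(s) \|_2
$$

Let $H(s)$ and $\tilde{H}(s)$ denote the transfer functions of the original model and the ROM, respectively:

$$
H(s) = L (sC - G)^{-1} B, \qquad \tilde{H}(s) = \tilde{L} (s\tilde{C} - \tilde{G})^{-1} \tilde{B}
$$

Then, the output error in the frequency domain satisfies:

$$
\| Y(s) - \tilde{Y}(s) \|_2 \le \| H(s) - \tilde{H}(s) \|_{\infty} \, \| U(s) \|_2
$$

where $\| \cdot \|_{\infty}$ denotes the $\mathcal{H}_{\infty}$ norm of a rational transfer function. Therefore, ensuring that $\| H(s) - \tilde{H}(s) \|_{\infty} \le \varepsilon$ guarantees that the ROM accurately approximates the behavior of the original system for any input excitation.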

MM provides an efficient way of reducing linear systems, being particularly useful for circuit simulation and descriptor models. Starting from the time-domain descriptor system (Equation (3)), the application of the Laplace transform yields the following frequency-domain representation:

$$
sC X(s) - C x(0) = G X(s) + B U(s), \qquad Y(s) = L X(s)
$$

If we now assume zero initial conditions (i.e., $x(0) = 0$ and $u(0) = 0$), then the above system can be written as

$$
(sC - G) X(s) = B U(s), \qquad Y(s) = L X(s)
$$

Expanding the original system’s transfer function as a Taylor series around an expansion point $s_0$ (commonly $s_0 = 0$) gives

$$
H(s) = \sum_{k=0}^{\infty} H_k \, (s - s_0)^k
$$

where the coefficients $H_k$ are the system’s moments at $s_0$. In particular, for circuit models expanded at $s_0 = 0$, $H_0$ corresponds to the DC behavior, where inductors are treated as short circuits and capacitors as open circuits, whereas $H_1$ captures the system’s dominant time constant, characterizing its response to external inputs. In general, these block moments can be calculated using the following relation:

$$
H_k = (-1)^k \, L \left[ (s_0 C - G)^{-1} C \right]^k (s_0 C - G)^{-1} B
$$
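The moment relation follows from a short factorization. The derivation below is a sketch that assumes the transfer function has the form $H(s) = L(sC - G)^{-1}B$, that $s_0 C - G$ is nonsingular, and that $s$ is close enough to $s_0$ for the Neumann series to converge; the shorthand $A_{s_0}$ and the moment symbol $H_k$ are notation chosen here for illustration.

```latex
\begin{aligned}
sC - G &= (s_0 C - G)\,\bigl[\, I + (s - s_0)\,A_{s_0} \bigr],
  \qquad A_{s_0} \equiv (s_0 C - G)^{-1} C, \\
H(s) &= L\,\bigl[\, I + (s - s_0)\,A_{s_0} \bigr]^{-1} (s_0 C - G)^{-1} B \\
     &= \sum_{k=0}^{\infty} \underbrace{(-1)^k\, L\, A_{s_0}^{k}\, (s_0 C - G)^{-1} B}_{H_k}\; (s - s_0)^k .
\end{aligned}
```

Each additional moment therefore costs one more application of $(s_0 C - G)^{-1} C$, which is why Krylov sequences built from this operator carry the moment information.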

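The key idea of MM is to construct a ROM such that its transfer function matches several moments of the original system’s transfer function around selected frequency points. This is achieved via projection onto a lower-dimensional subspace. Let $V, W \in \mathbb{R}^{N \times r}$ denote the right and left projection matrices, respectively. For an expansion around $s_0$, the subspaces that these projection matrices span are

$$
\begin{aligned}
\operatorname{colspan}(V) &= \mathcal{K}_r\bigl( (s_0 C - G)^{-1} C,\; (s_0 C - G)^{-1} B \bigr), \\
\operatorname{colspan}(W) &= \mathcal{K}_r\bigl( (s_0 C - G)^{-T} C^T,\; (s_0 C - G)^{-T} L^T \bigr),
\end{aligned}
$$

with $\mathcal{K}_r(A, R) \equiv \operatorname{span}\{ R, AR, \dots, A^{r-1} R \}$. By projecting the original system matrices, the ROM is formed as

$$
\tilde{G} = W^T G V, \qquad \tilde{C} = W^T C V, \qquad \tilde{B} = W^T B, \qquad \tilde{L} = L V
$$

The resulting ROM transfer function $\tilde{H}(s)$ matches the leading moments of the original transfer function $H(s)$ around $s_0$.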
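The moment-matching property can be checked numerically on a toy model. The following is a minimal sketch, not the authors' implementation: it assumes the descriptor convention $C\dot{x} = Gx + Bu$, $y = Lx$ with $H(s) = L(sC - G)^{-1}B$, uses a hypothetical 3-node RC ladder with made-up element values, and applies a single-sided projection ($W = V$). All helper names are illustrative, and dense Gaussian elimination stands in for the sparse solver a real tool would use.

```python
def dot(x, y):
    return sum(a * b for a, b in zip(x, y))

def matvec(A, x):
    return [dot(row, x) for row in A]

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[piv] = M[piv], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][k] * x[k] for k in range(i + 1, n))) / M[i][i]
    return x

def shifted(C, G, s):
    """Form the matrix s*C - G."""
    n = len(C)
    return [[s * C[i][j] - G[i][j] for j in range(n)] for i in range(n)]

def transfer(G, C, B, L, s):
    """H(s) = L (sC - G)^{-1} B for a single-input, single-output model."""
    return dot(L, solve(shifted(C, G, s), B))

def orthonormalize(vectors):
    """Modified Gram-Schmidt; assumes the input vectors are independent."""
    basis = []
    for v in vectors:
        w = v[:]
        for q in basis:
            h = dot(q, w)
            w = [wi - h * qi for wi, qi in zip(w, q)]
        norm = dot(w, w) ** 0.5
        basis.append([wi / norm for wi in w])
    return basis

def project(A, V):
    """Congruence transformation V^T A V; V is a list of basis vectors."""
    return [[dot(vi, matvec(A, vj)) for vj in V] for vi in V]

# Hypothetical 3-node RC ladder: G = -Gn (conductances), C = diag(capacitances).
Gn = [[2.0, -1.0, 0.0], [-1.0, 2.0, -1.0], [0.0, -1.0, 1.0]]
G = [[-g for g in row] for row in Gn]
C = [[1.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 1.5]]
B = [1.0, 0.0, 0.0]
L = B[:]                      # output taken at the driven node
s0 = 1.0                      # real expansion point

K = shifted(C, G, s0)
r0 = solve(K, B)              # (s0 C - G)^{-1} B
r1 = solve(K, matvec(C, r0))  # (s0 C - G)^{-1} C r0
V = orthonormalize([r0, r1])

Gr, Cr = project(G, V), project(C, V)
Br = [dot(v, B) for v in V]
Lr = [dot(v, L) for v in V]

h_full = transfer(G, C, B, L, s0)
h_rom = transfer(Gr, Cr, Br, Lr, s0)
print(h_full, h_rom)
```

Because $(s_0 C - G)^{-1}B$ lies in the span of the basis, the order-2 ROM reproduces $H(s_0)$ up to rounding; accuracy away from $s_0$ depends on how many moments are matched.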

Typically, the projection subspaces are constructed using Krylov sequences based on the system matrices. In the case of symmetric systems, a single-sided projection can be employed (i.e., $W = V$), enabling efficient reduction of large-scale models while inherently preserving ROM passivity [10]. Typical MM techniques based on the standard Krylov subspaces offer excellent efficiency near the DC point. However, their accuracy degrades at higher frequencies, limiting their applicability to broadband or frequency-selective problems [16].

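To address this issue, RKS-based projection techniques have been developed, incorporating multiple expansion points to improve approximation quality across wider frequency ranges. If we define $A_{s_i} \equiv (s_i C - G)^{-1} C$ and also $R_{s_i} \equiv (s_i C - G)^{-1} B$, $i = 1, \dots, j$, then the RKS of order j associated with the matrix pair ($A_{s_i}$, $R_{s_i}$) and a set of expansion points $S = \{ s_1, \dots, s_j \}$ is defined as

$$
\mathcal{K}_j(A_{s_i}, R_{s_i}; S) = \operatorname{span}\bigl\{ R_{s_1},\; A_{s_2} R_{s_1},\; \dots,\; A_{s_j} \cdots A_{s_2} R_{s_1} \bigr\}
$$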

By appropriately choosing the shifts, the ROM can accurately capture the system dynamics over a targeted frequency band. Nevertheless, the selection of expansion points and the number of matched moments remain critical for ensuring good accuracy. Poor choice of expansion points can lead to reduced accuracy at frequencies that are not well represented by the matched moments.

4. Proposed Methodology

This section presents our multi-point MM (MPMM) framework based on RKS for accurately reducing large-scale RLCk models. The methodology adaptively builds the RKS by selecting frequency points where the ROM approximation error is maximized, ensuring accurate coverage across the frequency range of interest. At each step, the algorithm constructs a ROM via projection, monitors convergence through reliable local and global error indicators, and dynamically selects the expansion points. The proposed MPMM procedure is presented in Algorithm 1.

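Algorithm 1. Proposed multi-point MM (MPMM) method

Inputs: MNA matrices $G$, $C$, $B$, $L$; set of candidate expansion points

Outputs: ROM matrices $\tilde{G}$, $\tilde{C}$, $\tilde{B}$, $\tilde{L}$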

Algorithm 1 receives as input the MNA matrices of the original model ($G$, $C$, $B$, $L$), as defined in Equation (2), along with a predefined set of candidate expansion points covering the desired frequency range. The output consists of the ROM matrices ($\tilde{G}$, $\tilde{C}$, $\tilde{B}$, $\tilde{L}$). The reduction process is adaptive and driven by two convergence criteria, detailed in Section 4.2. The local convergence criterion ensures sufficient moment matching at each selected frequency point, while the global convergence criterion verifies that the ROM achieves the required accuracy across the entire frequency range. Expansion points ($s_k$) are dynamically selected from the candidate set following the strategy described in Section 4.3. For each expansion point $s_k$, the initial basis vectors are computed in step 5 as $(s_k C - G)^{-1} B$. In steps 7–16, subsequent vectors are generated iteratively, forming a local RKS of dimension j:

$$
\mathcal{K}_j(s_k) = \operatorname{span}\Bigl\{ (s_k C - G)^{-1} B,\; \bigl[ (s_k C - G)^{-1} C \bigr] (s_k C - G)^{-1} B,\; \dots,\; \bigl[ (s_k C - G)^{-1} C \bigr]^{j-1} (s_k C - G)^{-1} B \Bigr\}
$$

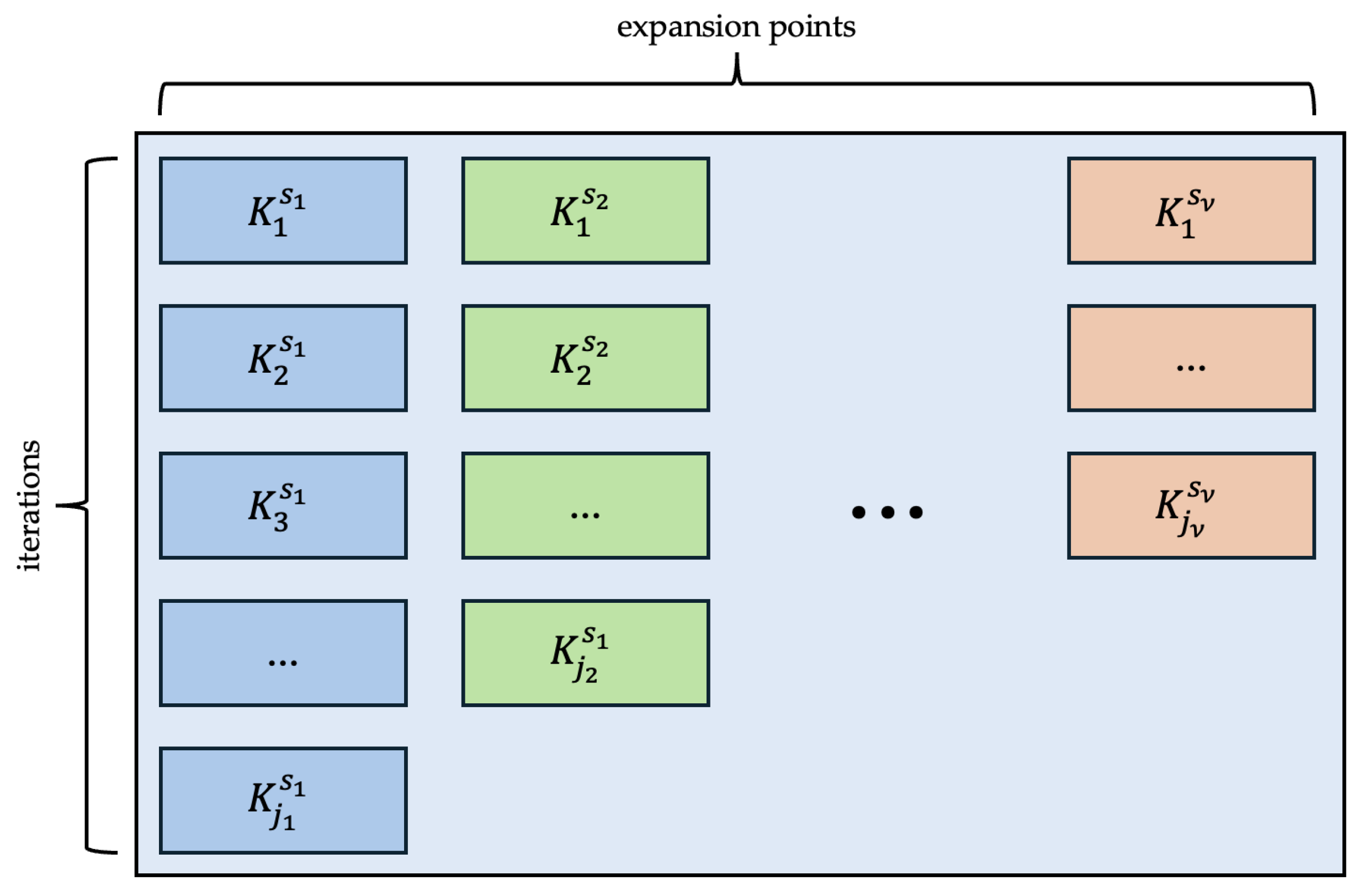

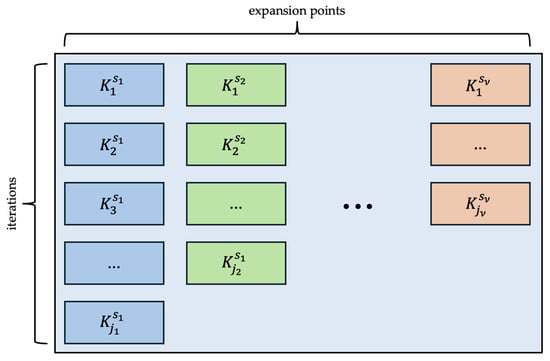

The expansion at $s_k$ continues until the local convergence criterion is satisfied, indicating that adding further moments at this frequency point is unnecessary. Upon reaching local convergence, a new expansion point is selected from the remaining candidates in step 17. This iterative procedure continues until the global convergence criterion is met. The final RKS is the combination of the subspaces constructed around all selected expansion points $s_1, \dots, s_K$, as depicted in Figure 1:

$$
\mathcal{K} = \operatorname{span}\Bigl\{ \bigcup_{k=1}^{K} \mathcal{K}_{j_k}(s_k) \Bigr\}
$$

where $K$ is the total number of used expansion points (at most the number of candidates), $j_k$ denotes the number of iterations performed at each point $s_k$, and $\mathcal{K}_{j_k}(s_k)$ is the local RKS built at $s_k$.

Figure 1. Final rational Krylov subspace generated by the proposed MPMM approach.

4.1. Orthogonalization Process

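The orthogonalization steps in Algorithm 1 are performed using a modified Gram–Schmidt procedure [23]. In step 12, orthogonalization of the newly generated block of p vectors $Z = [z_1, \dots, z_p]$ with respect to the current basis vectors $v_1, \dots, v_d$ follows a block-wise procedure:

for $k = 1, \dots, d$ do
    for $l = 1, \dots, p$ do
        $z_l \leftarrow z_l - (v_k^T z_l)\, v_k$;
    end for
end for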

This ensures that the RKS vectors remain linearly independent and well conditioned.
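A block-wise modified Gram–Schmidt step can be sketched as follows. This is an illustrative version under stated assumptions, not the routine used in Algorithm 1: the function name `mgs_block`, the deflation tolerance `tol`, and the choice to drop nearly dependent vectors are all additions made here for the example.

```python
import math

def dot(x, y):
    return sum(a * b for a, b in zip(x, y))

def mgs_block(basis, block, tol=1e-12):
    """Orthonormalize each vector of `block` against `basis` and against the
    vectors already accepted from the same block (modified Gram-Schmidt).
    Vectors whose remainder has norm <= tol are dropped (assumed deflation)."""
    accepted = []
    for v in block:
        w = list(v)
        for q in basis + accepted:
            h = dot(q, w)                      # projection coefficient
            w = [wi - h * qi for wi, qi in zip(w, q)]
        norm = math.sqrt(dot(w, w))
        if norm > tol:
            accepted.append([wi / norm for wi in w])
    return accepted

# Existing orthonormal basis and a new block of two candidate vectors.
basis = [[1.0, 0.0, 0.0]]
block = [[1.0, 1.0, 0.0], [2.0, 0.0, 0.0]]     # second vector is dependent
new_vectors = mgs_block(basis, block)
print(new_vectors)
```

Subtracting each projection from the running remainder, rather than from the original vector, is what distinguishes the modified variant from classical Gram–Schmidt and gives it better numerical behavior on ill-conditioned bases.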

4.2. Convergence Criteria

Convergence in steps 9–10 is evaluated periodically, once per convergence period, rather than at every iteration, to reduce computational overhead. The ROM transfer function is used to monitor both global and local convergence. The error at iteration j is defined as

where $\tilde{H}_j(s)$ is the ROM transfer function at the j-th iteration (calculated using the expansion point $s_k$) and l is the number of evaluation points.

- Global convergence evaluates the ROM transfer function over the entire candidate set of expansion points to ensure uniform accuracy across the desired frequency range.

- Local convergence focuses on the current expansion point ($s_k$) and its neighboring candidate points to ensure accurate local approximation around $s_k$.
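A ROM-only convergence check of this kind can be sketched as below. The error measure here is an assumption made for illustration: it takes the largest relative change between the ROM responses at two successive checks, which may differ from the error definition used in the article. The function names, the neighbor `width`, and the sample values are likewise hypothetical.

```python
def relative_change(previous, current, floor=1e-30):
    """Largest relative change between two ROM responses sampled at the
    same evaluation points (assumed error indicator)."""
    return max(abs(c - p) / max(abs(c), floor)
               for p, c in zip(previous, current))

def global_converged(previous, current, tol):
    """Check the indicator over every candidate point."""
    return relative_change(previous, current) <= tol

def local_converged(previous, current, k, tol, width=1):
    """Check the indicator only at candidate k and its `width` neighbors."""
    lo, hi = max(0, k - width), min(len(current), k + width + 1)
    return relative_change(previous[lo:hi], current[lo:hi]) <= tol

# ROM response magnitudes at four candidate points, before and after
# adding moments at candidate 0 (made-up numbers).
prev = [1.00, 0.50, 0.20, 0.10]
curr = [1.00, 0.50, 0.25, 0.10]

local_ok = local_converged(prev, curr, k=0, tol=1e-3)
global_ok = global_converged(prev, curr, tol=1e-3)
print(local_ok, global_ok)
```

In this example the response near candidate 0 has stopped changing, so expansion there can end, while the change at candidate 2 shows that the ROM as a whole has not yet settled and another expansion point is needed.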

4.3. Expansion Point Selection

The candidate expansion points are selected based on one of two strategies:

- Uniform distribution: Expansion points are evenly distributed within a user-defined frequency range.

- User-defined frequencies: Expansion points are specified directly by the user according to targeted frequency bands.

The dynamic selection of each expansion point in Algorithm 1 follows these rules:

- The first point is chosen as the lowest frequency in the candidate set (step 1).

- The second point is selected as the highest frequency.

- Subsequent points are chosen based on the maximum global approximation error among unused candidate expansion points.

This strategy prioritizes refining the ROM at the frequencies where the current ROM exhibits the largest error.
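The three selection rules translate directly into a small routine. The sketch below is illustrative only: the function name, the index-based bookkeeping, and the example frequencies and error values are assumptions, and the per-candidate error is taken as given rather than computed.

```python
def next_expansion_point(candidates, used, errors):
    """Return the index of the next expansion point, or None if exhausted.

    candidates: frequencies sorted in ascending order
    used:       set of indices already expanded
    errors:     current ROM error estimate per candidate (same length)
    """
    if not used:
        return 0                               # rule 1: lowest frequency
    last = len(candidates) - 1
    if last not in used:
        return last                            # rule 2: highest frequency
    remaining = [i for i in range(len(candidates)) if i not in used]
    if not remaining:
        return None
    return max(remaining, key=lambda i: errors[i])   # rule 3: largest error

candidates = [1e8, 1e9, 1e10, 5.6e10]          # hypothetical frequencies (Hz)
first = next_expansion_point(candidates, set(), [0.0] * 4)
second = next_expansion_point(candidates, {0}, [0.0] * 4)
third = next_expansion_point(candidates, {0, 3}, [0.0, 0.3, 0.7, 0.0])
print(first, second, third)
```

Starting from the two ends of the band anchors the ROM at the boundaries, so the error-driven choices that follow fill in the interior where the approximation is weakest.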

A critical aspect of Krylov subspace projection is deciding whether to use real, imaginary, or complex expansion points. When constructing the RKS, only real expansion points are employed for the following reasons [24]:

- The MNA matrices of the original model (Equation (2)) are real; using complex shifts would introduce complex arithmetic, increasing computational cost [15].

- Real shifts offer broader convergence across the frequency spectrum, while imaginary shifts, despite their excellent local accuracy, may degrade performance away from the interpolation point [11].

4.4. Efficient Implementation Details

Several efficient implementation strategies are applied to ensure high MPMM performance and scalability.

4.4.1. Matrix Inversions as Linear Solves

Algorithm 1 formally involves the inverse of the matrix $(s_k C - G)$; forming this inverse explicitly is computationally prohibitive and can be avoided. Since the inverse is only needed in products with p vectors in steps 5 and 11, each such product $x = (s_k C - G)^{-1} b$ is computed by equivalently solving the sparse linear system:

$$
(s_k C - G)\, x = b
$$

This approach avoids dense inversions and preserves the sparsity of the system matrices.
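The idea of factorizing once and reusing the factors for all p right-hand sides can be illustrated with a small dense LU routine. This is a sketch for exposition only: a production implementation would rely on a sparse direct solver, and the matrix values and function names below are hypothetical.

```python
def lu_factor(A):
    """LU factorization with partial pivoting of a copy of A.
    Returns (LU, perm): unit-lower L and upper U packed in one matrix,
    and the row permutation applied to A."""
    n = len(A)
    LU = [row[:] for row in A]
    perm = list(range(n))
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(LU[r][c]))
        LU[c], LU[piv] = LU[piv], LU[c]
        perm[c], perm[piv] = perm[piv], perm[c]
        for r in range(c + 1, n):
            LU[r][c] /= LU[c][c]               # multiplier stored in L part
            for k in range(c + 1, n):
                LU[r][k] -= LU[r][c] * LU[c][k]
    return LU, perm

def lu_solve(LU, perm, b):
    """Solve A x = b using the factors from lu_factor."""
    n = len(LU)
    y = [b[p] for p in perm]                   # apply row permutation
    for i in range(n):                         # forward substitution (unit L)
        y[i] -= sum(LU[i][k] * y[k] for k in range(i))
    for i in reversed(range(n)):               # backward substitution (U)
        y[i] = (y[i] - sum(LU[i][k] * y[k] for k in range(i + 1, n))) / LU[i][i]
    return y

# Stand-in for (s_k C - G) and a block of p = 2 right-hand-side vectors.
K = [[3.0, -1.0, 0.0], [-1.0, 4.0, -1.0], [0.0, -1.0, 2.5]]
rhs_columns = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]

LU, perm = lu_factor(K)                        # done once per expansion point
solutions = [lu_solve(LU, perm, b) for b in rhs_columns]
print(solutions)
```

The factorization cost is paid once per expansion point, and every later moment at the same point costs only a pair of triangular solves per vector.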

4.4.2. Handling of Sparse/Dense Sub-Matrices

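The matrix $C$ of Equation (2) contains a sparse block (the node capacitance sub-matrix $C_n$) and a dense block (the branch inductance sub-matrix $M$) due to the mutual inductance couplings. Therefore, for optimal handling, the sparse sub-matrix $C_n$ and the dense sub-matrix $M$ within $C$ are handled separately. With $G_n$ and $E$ denoting the node conductance and node-to-branch incidence matrices, the operation $(s_k C - G)\, x = b$ can be represented as a block system of equations:

$$
\begin{pmatrix} s_k C_n + G_n & E \\ -E^T & s_k M \end{pmatrix} \begin{pmatrix} x_1 \\ x_2 \end{pmatrix} = \begin{pmatrix} b_1 \\ b_2 \end{pmatrix}
$$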

the solution of which is calculated as
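One standard way to solve a 2×2 block system of this shape is a Schur complement on the sparse nodal block. The sketch below is an assumption about how the elimination could be organized, not necessarily the formula used by the authors; it assumes the block system $\begin{pmatrix} s_k C_n + G_n & E \\ -E^T & s_k M \end{pmatrix}\begin{pmatrix} x_1 \\ x_2 \end{pmatrix} = \begin{pmatrix} b_1 \\ b_2 \end{pmatrix}$, and the shorthands $K_{11}$, $K_{22}$, and $S$ are introduced here for illustration.

```latex
\begin{aligned}
K_{11} &\equiv s_k C_n + G_n \quad (\text{sparse}), \qquad
K_{22} \equiv s_k M \quad (\text{dense}), \\
S &\equiv K_{22} + E^{T} K_{11}^{-1} E, \\
x_2 &= S^{-1} \bigl( b_2 + E^{T} K_{11}^{-1} b_1 \bigr), \\
x_1 &= K_{11}^{-1} \bigl( b_1 - E\, x_2 \bigr).
\end{aligned}
```

With this ordering, only the sparse block $K_{11}$ needs a sparse factorization, while the dense work is confined to the $m \times m$ matrix $S$; every $K_{11}^{-1}$ above denotes a linear solve rather than an explicit inverse.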

5. Experimental Evaluation

5.1. Experimental Setup

To evaluate the performance of the proposed MPMM method, we compared it against A3PSA [15]. Both methods were evaluated using a comprehensive set of benchmarks, including SLICOT models [25], IBM power grids [26], and a variety of custom-designed circuits implemented using GlobalFoundries 22 nm FDSOI technology. The custom benchmarks include a phase-locked loop (PLL), an analog mixer, a time-interleaved digital-to-analog converter (TI_DAC), three low-noise amplifiers (LNAs), an injection-locked frequency multiplier (ILFM), and a frequency divider (FDIV). Corresponding RLCk models for these designs were generated using ANSYS RaptorH™ [2]. Table 1 summarizes the characteristics of all evaluated models. For each benchmark, we report the Frobenius norm and the condition number of the system matrix.

Table 1. Detailed characteristics of the examined RLC and RLCk models.

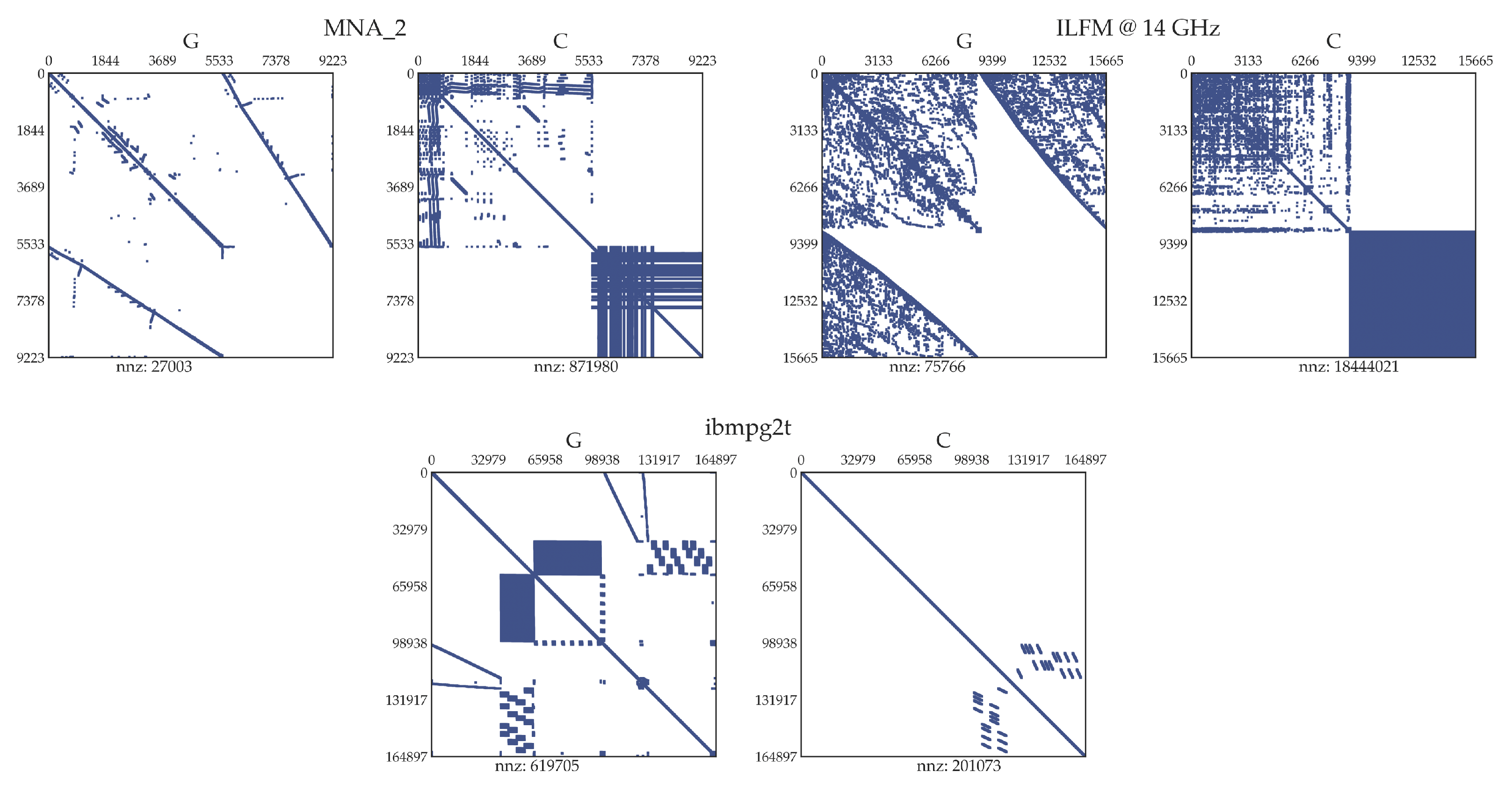

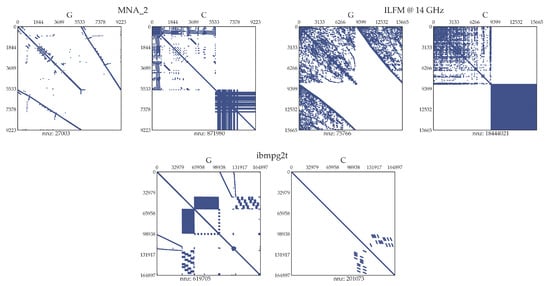

As indicated in Table 1, the majority of the benchmarks feature a significant number of mutual inductances, and their system matrices are often ill-conditioned, which poses challenges to MOR. Figure 2 shows typical sparsity patterns of the matrices $G$ and $C$ for each benchmark category. In RLCk models, the matrix $G$ and the capacitance sub-matrix $C_n$ are sparse, while the mutual inductance sub-matrix $M$ is dense due to the high density of electromagnetic coupling.

Figure 2. Sparsity patterns of matrices $G$ and $C$ of MNA_2, ILFM @ 14 GHz, and ibmpg2t.

The MPMM method was initially configured with the following parameters:

- Tolerance thresholds (, , ) set to ;

- Number of candidate frequency points and maximum local iterations both set to 7;

- Convergence period set to 2 iterations.

All algorithms were implemented in C++ using the Eigen library [27] and the experiments were performed on a Linux workstation equipped with a 2.80 GHz 16-thread Intel® Xeon Silver 4309Y CPU and 64 GB of memory.

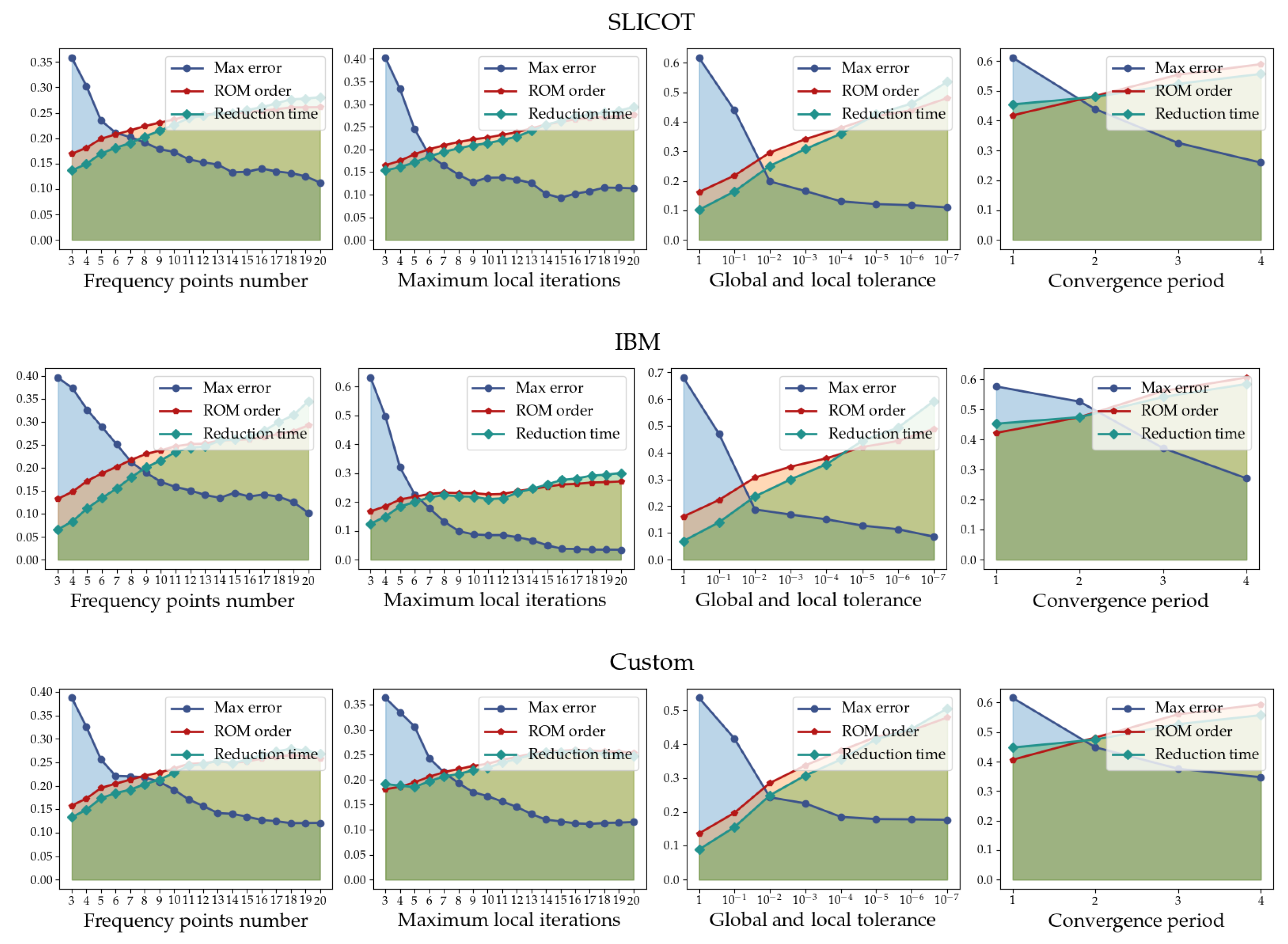

5.2. MPMM Parameter Tuning

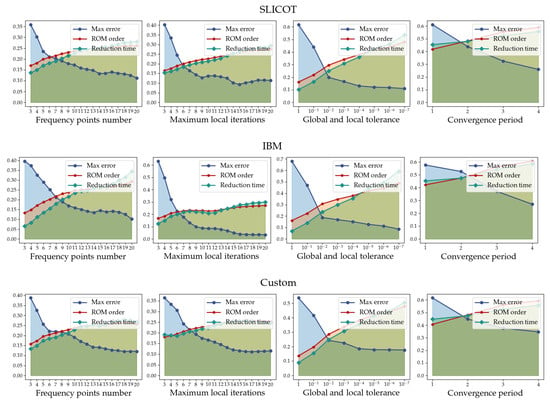

To determine appropriate parameter values for the MPMM method, we conducted an initial analysis by varying one parameter at a time while keeping the rest fixed according to the above configuration. For each parameter, we evaluated the resulting impact on three key metrics: maximum error, ROM order, and reduction time. All metric values were normalized and then averaged across benchmarks of the same category (SLICOT, IBM, and Custom), providing comparative insight into how each parameter affects performance. The aggregated results are presented in Figure 3.

Figure 3. Normalized average ROM order, maximum error, and reduction time across each benchmark category (SLICOT, IBM, Custom) for varying MPMM parameter values.

As demonstrated, increasing the number of frequency points or the maximum local iterations generally improves the ROM accuracy, but at the cost of slightly higher ROM order and runtime. Setting the expansion points to seven and the local iterations to nine was found to provide a good balance between accuracy and efficiency, avoiding unnecessary subspace growth while maintaining precision across all benchmarks. For both global and local convergence tolerances, a value of yielded consistently reliable results. Further reducing the tolerance (e.g., to ) did not lead to meaningful accuracy improvements, but increased computational cost and ROM order. Regarding the convergence period, a value of three strikes an effective balance, minimizing unnecessary convergence checks and ensuring timely termination. Based on this analysis, the selected parameters were used for the subsequent evaluation.

5.3. Performance and Accuracy Evaluation

Following the parameter selection, we conducted a comprehensive evaluation to assess the accuracy, ROM order, and runtime performance of MPMM. The results are presented in Table 2, where the maximum relative error is computed as , evaluated over a set of frequency points. For the SLICOT benchmarks, the evaluation was performed over the frequency range [] = [,], while for the IBM power grid circuits, the range was set to [] = [,]. In the case of custom RLCk designs, we used a base frequency of 100 MHz ( = ), and the upper bound was set to twice the resonance frequency of each circuit. For instance, the PLL operating at 28 GHz was evaluated up to 56 GHz ( = ). Initially, we obtained the MPMM ROMs using the discussed parameters and calculated the corresponding errors. Then, to ensure a fair comparison, we evaluated A3PSA under two scenarios. First, A3PSA ROMs were generated until the resulting errors matched those of the MPMM ROMs, and the corresponding ROM orders were recorded (i.e., ROM order for same error). Second, A3PSA was run to produce ROMs of the same order as the MPMM ROMs, and the resulting maximum errors were measured (i.e., max error for same order). Runtime measurements were also conducted under equal ROM size to reflect algorithmic performance.

Table 2. Comparison of MPMM and A3PSA in terms of ROM accuracy, ROM order, reduction time, and memory requirements across the examined RLC and RLCk models.

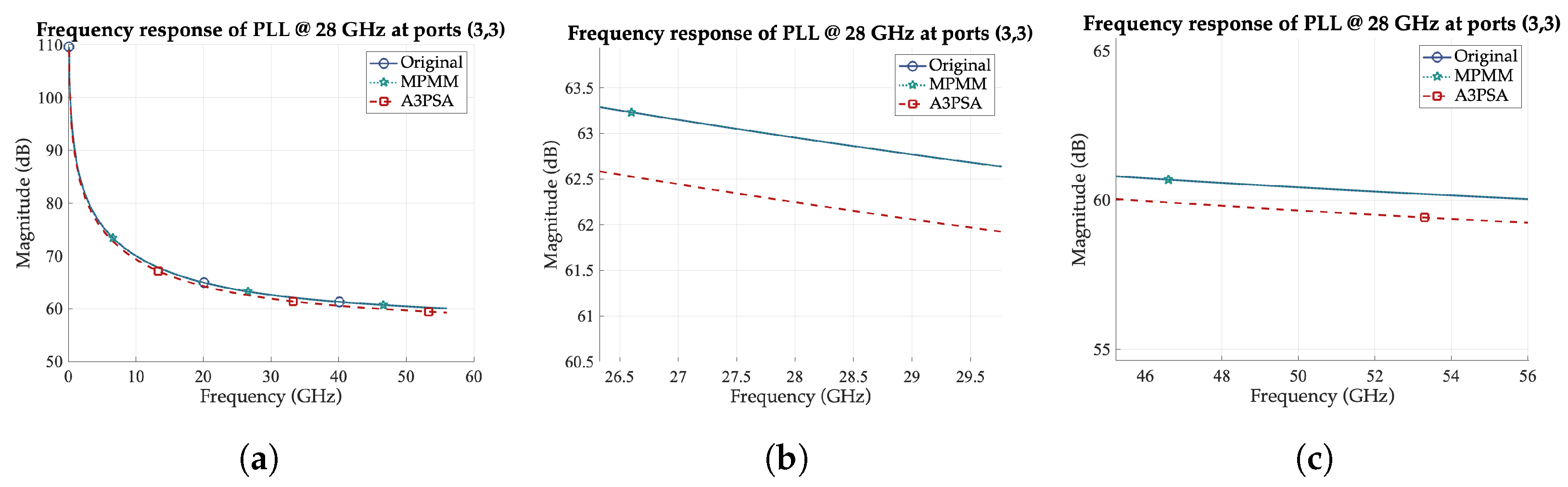

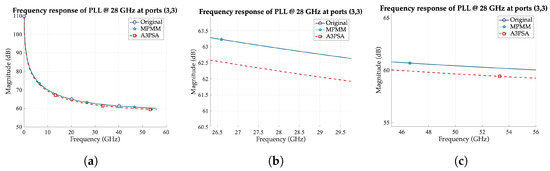

As shown in Table 2, the proposed MPMM method consistently produces either more accurate ROMs for the same order or smaller ROMs for the same error compared to A3PSA. This trend holds across all benchmark categories. For example, in the PLL @ 28 GHz benchmark, MPMM achieves significantly lower error ( vs. ) for the same ROM order. As illustrated in Figure 4, the MPMM ROM closely follows the original transfer function, whereas A3PSA slightly deviates, particularly near the mid and high frequencies. Similarly, in the MNA_2 case, although both methods reduce the model to a few hundred states, A3PSA exhibits a noticeable accuracy drop, with a maximum error of compared to only for MPMM.

Figure 4. Comparison of the original and ROM transfer functions of PLL @ 28 GHz across ports (3,3) for identical MPMM and A3PSA ROM order. The plots demonstrate the frequency responses at (a) the examined frequency range, (b) the resonance frequency, and (c) the high-frequency region.

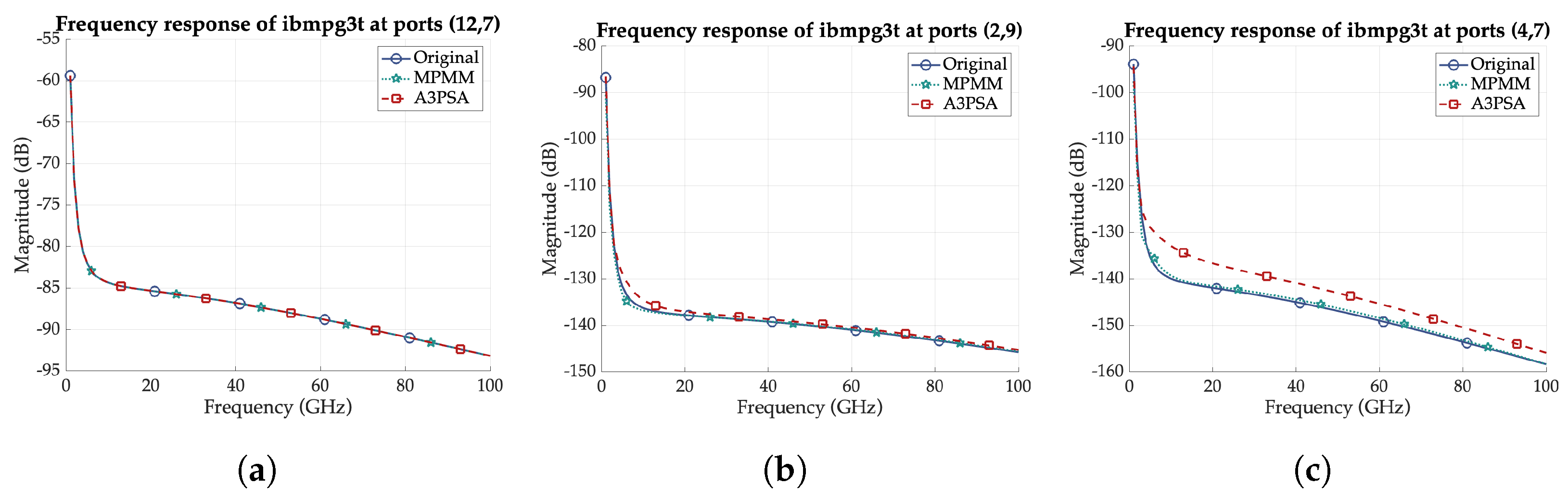

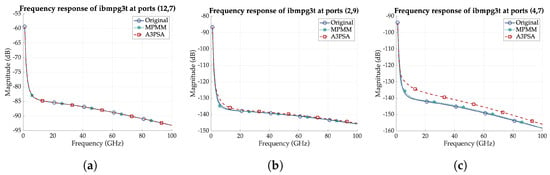

The advantage of MPMM becomes more pronounced in large and complex circuits. For instance, in the TI_DAC @ 28 GHz benchmark, MPMM achieves the same accuracy as A3PSA with a ROM that is 2.25× smaller (640 vs. 1440), while also reducing memory usage by more than half. In addition, in the ibmpg3t case, MPMM delivers a lower maximum error ( vs. ) with a more compact ROM and efficient memory consumption. As depicted in Figure 5, although A3PSA matches the original transfer function at ports (12,7), it exhibits noticeable deviations at ports (2,9), which deteriorate at ports (4,7). In contrast, MPMM consistently preserves accuracy across all examined ports.

Figure 5. Comparison of the original and ROM transfer functions of ibmpg3t at ports (a) (12,7), (b) (2,9), and (c) (4,7) for identical MPMM and A3PSA ROM order.

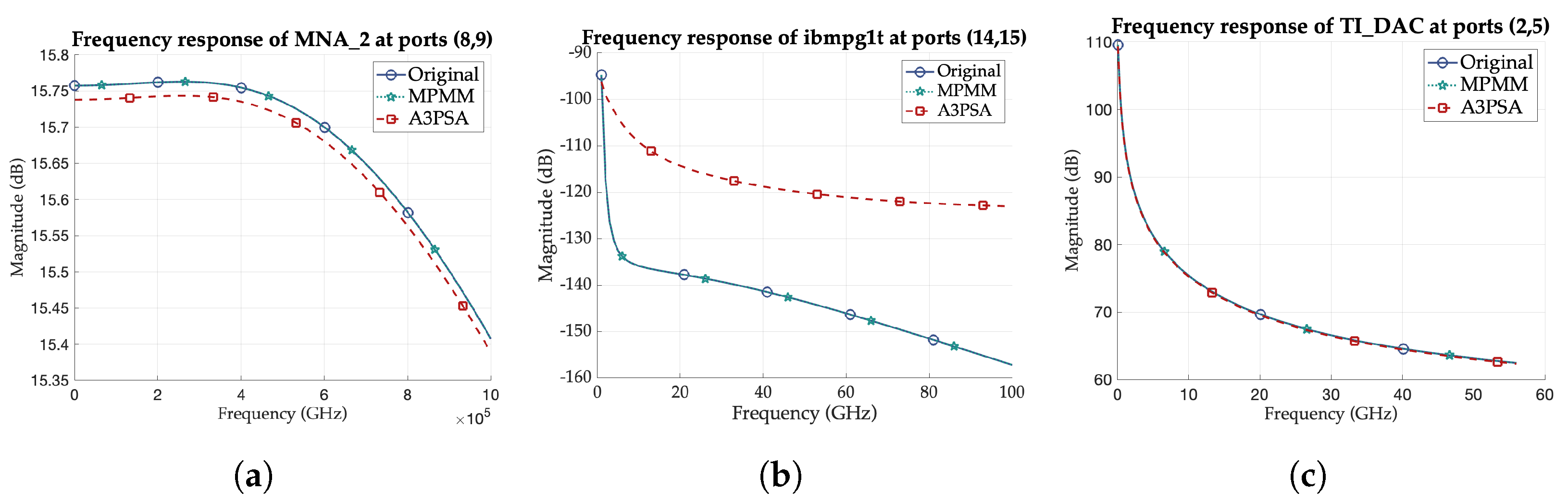

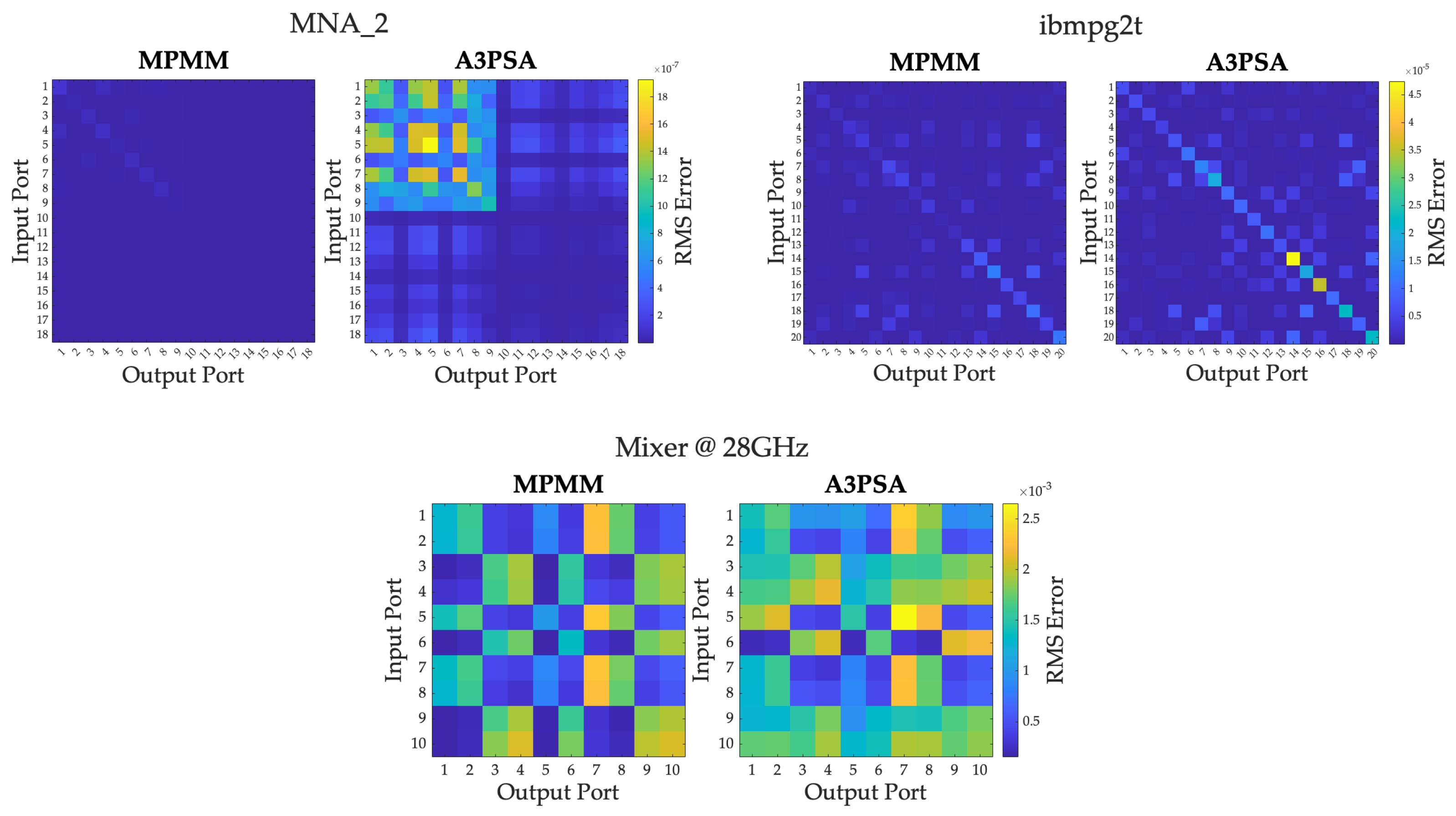

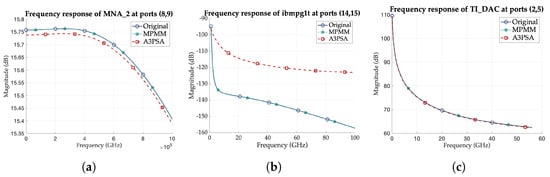

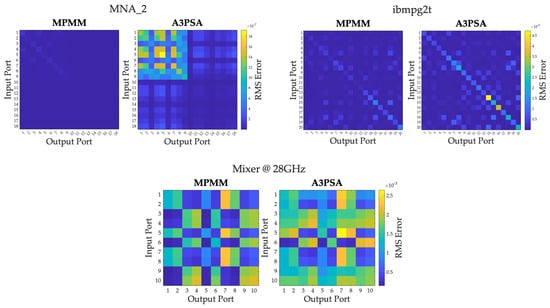

To further validate the accuracy of the proposed approach, Figure 6 shows representative ROM transfer functions for each benchmark category, demonstrating that MPMM consistently results in more accurate ROMs across all cases. To highlight the superiority of MPMM over A3PSA, Figure 7 presents the root mean square (RMS) error between the original and reduced transfer function matrices for different benchmarks. As can be seen, MPMM achieves a lower RMS error for nearly all port combinations, with A3PSA exhibiting visible regions of higher error. This indicates that the proposed approach maintains high ROM accuracy for all input–output ports in the examined frequency range.

Figure 6. Comparison of the original and ROM transfer functions of (a) MNA_2 at ports (8,9), (b) ibmpg1t at ports (14,15), and (c) TI_DAC @ 28 GHz at ports (2,5) for identical MPMM and A3PSA ROM order.

Figure 7. RMS errors of MPMM and A3PSA ROM transfer functions across all input–output port combinations for MNA_2, ibmpg2t, and Mixer @ 28 GHz.

Beyond its accuracy advantages, MPMM is highly effective at producing compact models. Across all evaluated benchmarks, MPMM achieves reductions ranging from 64.18% to 99.97%, with large-scale models (>20 K nodes) typically reduced by more than 99.18%. Furthermore, it yields ROMs that are 2.6× on average and up to 16.3× more compact than A3PSA ROMs for the same level of accuracy. ROM compactness results from the adaptive control over the number of moments matched per frequency, achieved through local convergence checks in MPMM. Unlike A3PSA, which uses a fixed number of moments per expansion point [15], MPMM dynamically adjusts the subspace size, avoiding unnecessary computation while maintaining accuracy.
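The two compactness figures quoted above are simple ratios, and the short sketch below shows how they are computed. The function names are illustrative, the 640 versus 1440 orders are the TI_DAC @ 28 GHz values reported in this section, and the 20,000-state model with a 164-state ROM is a hypothetical example chosen only to show what a 99.18% reduction corresponds to.

```python
def order_reduction_percent(original_order, rom_order):
    """Percentage of states removed by the reduction."""
    return 100.0 * (1.0 - rom_order / original_order)

def compactness_ratio(reference_rom_order, rom_order):
    """How many times smaller one ROM is than a reference ROM."""
    return reference_rom_order / rom_order

# TI_DAC @ 28 GHz: A3PSA ROM of order 1440 vs. MPMM ROM of order 640.
ti_dac_ratio = compactness_ratio(1440, 640)

# Hypothetical model with 20,000 states reduced to 164 states.
example_reduction = order_reduction_percent(20000, 164)

print(ti_dac_ratio, round(example_reduction, 2))
```

Note that the two metrics answer different questions: the reduction percentage compares a ROM with the original model, whereas the compactness ratio compares two ROMs of equal accuracy.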

In terms of reduction performance, MPMM achieves up to 4× speedup over A3PSA, with an average of 2.3× faster execution. This improvement is particularly evident in complex designs such as FDIV @ 28 GHz and ibmpg4t, where A3PSA’s fixed iteration scheme leads to longer runtimes. MPMM avoids such inefficiencies through its less frequent convergence checks and adaptive termination strategy. Even greater speedup could be achieved by configuring MPMM to match the accuracy of A3PSA.

Regarding memory requirements, the memory usage of MPMM is comparable to that of A3PSA on smaller benchmarks, but it is notably lower in large-scale designs. For example, in ibmpg1t and ibmpg3t, MPMM reduces memory consumption by over 400 MB and 8.5 GB, respectively, while achieving superior or similar accuracy. Moreover, for the TI_DAC @ 28 GHz benchmark, MPMM requires 2.5× less memory than A3PSA. Combined with its faster runtime, improved accuracy, and more compact ROMs, MPMM offers a favorable balance between performance and precision. These advantages make MPMM especially suitable for reducing real-world designs encountered in industrial applications.

We also compared our method with several established techniques, including tangential IRKA [12], ISRK [5], RKSM [6], and LR-ADI [28], using implementations from open-source frameworks such as M-M.E.S.S. [29] and sssMOR [30], as well as MATLAB’s reducespec function [31]. However, the results of these comparisons are not reported in this article due to several limitations observed during evaluation. Specifically, these methods either required high ROM orders to reach acceptable accuracy, failed to converge reliably on several benchmarks, or incurred excessive computational demands.

6. Conclusions

This article presents a frequency-aware MM MOR method based on the RKS, tailored for the efficient reduction of large-scale RLCk models. The proposed MPMM technique combines adaptive expansion point selection, local and global convergence criteria, and several implementation strategies to handle large-scale models efficiently. Unlike existing methods, MPMM relies exclusively on the ROM transfer function to drive the subspace construction and adaptively determines the required number of moments per expansion point. Experimental results indicate that MPMM consistently outperforms A3PSA, producing ROMs that are on average 2.6× and up to 16.3× smaller, and achieving up to 99.97% reduction for large-scale benchmarks. Additionally, MPMM achieves an average of 2.3× and up to 4× runtime speedup compared to A3PSA. These improvements demonstrate that the proposed method is well suited for practical deployment in industrial tools such as ANSYS RaptorH™ [2] for passive RLCk model reduction.

Author Contributions

Conceptualization, D.G. and C.G.; Methodology, D.G. and C.G.; Software, D.G. and C.G.; Validation, D.G. and C.G.; Writing—original draft, D.G. and C.G.; Writing—review and editing, D.G. and N.E. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Liu, M. 1.1 Unleashing the Future of Innovation. In Proceedings of the IEEE International Solid-State Circuits Conference (ISSCC), San Francisco, CA, USA, 13–17 February 2021; pp. 9–16.

- Ansys—RaptorH™. Available online: www.ansys.com/products/semiconductors/ansys-raptorh (accessed on 23 June 2025).

- Phillips, J.; Daniel, L.; Silveira, L.M. Guaranteed Passive Balancing Transformations for Model Order Reduction. In Proceedings of the 39th Annual Design Automation Conference (DAC), New Orleans, LA, USA, 10–14 June 2002; pp. 52–57.

- Gugercin, S.; Antoulas, A.C. A Survey of Model Reduction by Balanced Truncation and Some New Results. Int. J. Control 2004, 77, 748–766.

- Gugercin, S. An Iterative SVD-Krylov Based Method for Model Reduction of Large-Scale Dynamical Systems. Linear Algebra Appl. 2008, 428, 1964–1986.

- Druskin, V.; Simoncini, V. Adaptive Rational Krylov Subspaces for Large-Scale Dynamical Systems. Syst. Control Lett. 2011, 60, 546–560.

- Floros, G.; Evmorfopoulos, N.; Stamoulis, G. Frequency-Limited Reduction of Regular and Singular Circuit Models via Extended Krylov Subspace Method. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2020, 28, 1610–1620.

- Giamouzis, C.; Garyfallou, D.; Stamoulis, G.; Evmorfopoulos, N. Low-Rank Balanced Truncation of RLCk Models via Frequency-Aware Rational Krylov-Based Projection. In Proceedings of the 20th International Conference on Synthesis, Modeling, Analysis and Simulation Methods and Applications to Circuit Design (SMACD), Coimbra, Portugal, 1–4 July 2024; pp. 1–4.

- Grivet-Talocia, S.; Ubolli, A. A Comparative Study of Passivity Enforcement Schemes for Linear Lumped Macromodels. IEEE Trans. Adv. Packag. 2008, 31, 673–683.

- Odabasioglu, A.; Celik, M.; Pileggi, L. PRIMA: Passive Reduced-order Interconnect Macromodeling Algorithm. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 1998, 17, 645–654.

- Freund, R.W. SPRIM: Structure-Preserving Reduced-Order Interconnect Macromodeling. In Proceedings of the IEEE/ACM International Conference on Computer-Aided Design (ICCAD), San Jose, CA, USA, 7–11 November 2004; pp. 80–87.

- Gugercin, S.; Antoulas, A.C.; Beattie, C. H2 Model Reduction for Large-Scale Linear Dynamical Systems. SIAM J. Matrix Anal. Appl. 2008, 30, 609–638.

- Panzer, H.K. Model Order Reduction by Krylov Subspace Methods with Global Error Bounds and Automatic Choice of Parameters. Ph.D. Thesis, Technische Universität München, Munich, Germany, 2014.

- Feng, L.; Korvink, J.G.; Benner, P. A Fully Adaptive Scheme for Model Order Reduction Based on Moment Matching. IEEE Trans. Compon. Packag. Manuf. Technol. 2015, 5, 1872–1884.

- Nguyen, T.S.; Le Duc, T.; Tran, T.S.; Guichon, J.M.; Chadebec, O.; Meunier, G. Adaptive Multipoint Model Order Reduction Scheme for Large-Scale Inductive PEEC Circuits. IEEE Trans. Electromagn. Compat. 2017, 59, 1143–1151.

- Chatzigeorgiou, C.; Garyfallou, D.; Floros, G.; Evmorfopoulos, N.; Stamoulis, G. Exploiting Extended Krylov Subspace for the Reduction of Regular and Singular Circuit Models. In Proceedings of the 26th Asia and South Pacific Design Automation Conference (ASPDAC), Tokyo, Japan, 18–21 January 2021; pp. 773–778.

- Hamadi, M.A.; Jbilou, K.; Ratnani, A. A Model Reduction Method in Large-Scale Dynamical Systems Using an Extended-Rational Block Arnoldi Method. J. Appl. Math. Comput. 2022, 68, 271–293.

- Nakatsukasa, Y.; Sète, O.; Trefethen, L.N. The AAA Algorithm for Rational Approximation. SIAM J. Sci. Comput. 2018, 40, A1494–A1522.

- Bradde, T.; Grivet-Talocia, S.; Aumann, Q.; Gosea, I.V. A Modified AAA Algorithm for Learning Stable Reduced-Order Models from Data. J. Sci. Comput. 2025, 103, 14.

- Lemus, A.; Ege Engin, A. AGORA: Adaptive Generation of Orthogonal Rational Approximations for Frequency-Response Data. Int. J. Circuit Theory Appl. 2025.

- Ho, C.W.; Ruehli, A.E.; Brennan, P.A. The Modified Nodal Approach to Network Analysis. IEEE Trans. Circuits Syst. 1975, 22, 504–509.

- Gröchenig, K. Foundations of Time-Frequency Analysis; Birkhäuser: Boston, MA, USA, 2001.

- Golub, G.H.; Van Loan, C.F. Matrix Computations; Johns Hopkins University Press: Baltimore, MD, USA, 1983.

- Grimme, E.J. Krylov Projection Methods for Model Reduction. Ph.D. Thesis, University of Illinois at Urbana-Champaign, Urbana, IL, USA, 1997.

- Chahlaoui, Y.; Van Dooren, P. A Collection of Benchmark Examples for Model Reduction of Linear Time Invariant Dynamical Systems; Technical Report, SLICOT Working Note 2002-2; 2002. Available online: https://eprints.maths.manchester.ac.uk/1040/ (accessed on 23 June 2025).

- Nassif, S.R. Power Grid Analysis Benchmarks. In Proceedings of the 13th Asia and South Pacific Design Automation Conference (ASPDAC), Seoul, Republic of Korea, 21–24 January 2008; pp. 376–381.

- Guennebaud, G.; Jacob, B.; Niesen, J.; Heibel, H.; Gautier, M.; Olivier, S.; Steiner, B.; Riddile, K.; Capricelli, T.; Holoborodko, P.; et al. Eigen v3. Available online: http://eigen.tuxfamily.org (accessed on 23 June 2025).

- Benner, P.; Breiten, T. Low Rank Methods for a Class of Generalized Lyapunov Equations and Related Issues. Numer. Math. 2013, 124, 441–470.

- M-M.E.S.S.-3.0—The Matrix Equations Sparse Solvers Library. Available online: https://www.mpi-magdeburg.mpg.de/projects/mess (accessed on 23 June 2025).

- MORLab—sssMOR Toolbox. Available online: https://www.mathworks.com/matlabcentral/fileexchange/59169-sssmor-toolbox (accessed on 23 June 2025).

- MathWorks—Reducespec. Available online: https://www.mathworks.com/help/matlab/ref/reducespec.html (accessed on 23 June 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).