Abstract

Business Continuity Management (BCM) is critical for organizations to mitigate disruptions and maintain operations, yet many struggle with fragmented and non-standardized self-assessment tools. Existing frameworks often lack holistic integration, focusing narrowly on isolated components like cyber resilience or risk management, which limits their ability to evaluate BCM maturity comprehensively. This research addresses this gap by proposing a structured Self-Assessment System designed to unify BCM components into an adaptable, standards-aligned methodology. Grounded in Design Science Research, the system integrates a BCM Model comprising eight components and 118 activities, each evaluated through weighted questions to quantify organizational preparedness. The methodology enables organizations to conduct rapid as-is assessments using a 0–100 scoring mechanism with visual indicators (red/yellow/green), benchmark progress over time and against peers, and align with international standards (e.g., ISO 22301, ITIL) while accommodating unique organizational constraints. Demonstrated via focus groups and semi-structured interviews with 10 organizations, the system proved effective in enhancing top management commitment, prioritizing resource allocation, and streamlining BCM implementation—particularly for SMEs with limited resources. Key contributions include a reusable self-assessment tool adaptable to any BCM framework, empirical validation of its utility in identifying weaknesses and guiding continuous improvement, and a pathway from initial assessment to advanced measurement via the Plan-Do-Check-Act cycle. By bridging the gap between theoretical standards and practical application, this research offers a scalable solution for organizations to systematically evaluate and improve BCM resilience.

1. Introduction

The effective use of Information and Communication Technologies (ICT) is now essential for organizations, although their level of dependence varies. ICT has become vital to an organization’s competitiveness and agility, particularly in critical business processes. As a result, businesses should prepare for potential disruptions to productivity and competitiveness, especially those affecting ICT-enabled business processes.

According to the Business Continuity Institute [1] and Katsaliaki et al. [2], organizations increasingly face challenges in ensuring business continuity due to the growing complexity of ICT infrastructures and the unpredictability of disruptive events. These disruptions can severely impact business operations, leading to financial losses, reputational damage, and operational inefficiencies. However, despite the critical importance of Business Continuity Management (BCM), organizations often struggle to conduct comprehensive and standardized assessments of their BCM capabilities, which hampers their ability to identify gaps and improve resilience. Therefore, it is crucial to establish robust BCM models that provide resilience and agility in response to unforeseen events.

Business Continuity (BC) is based on the concept that an organization must have the strategic and tactical ability to plan for and respond to incidents and business interruptions [3] to continue operations at a predetermined acceptable level. This ability is critical and must be included in the BCM model for it to be suitable for integration with our Self-Assessment Methodology.

Strategic ability is formalized in BCM, which seeks to guarantee the organization’s preparedness for maintaining the continuity of its services, business processes, or purpose during a disaster, through implementation of a contingency plan [4]. As a result, BCM includes effective planning for resuming short-term business processes. The Business Continuity Plan (BCP) is thus implemented to eliminate or reduce the impact of operational interruptions caused by disruptive events [5]. The BCM model associated with our Self-Assessment Methodology must uphold this principle.

The BCP, on the other hand, is a by-product of BCM. Thus, to develop it, a set of essential BCM components must be addressed to generate a compelling and valid artifact that an organization can utilize. In this perspective, the BCM Model must provide the components and activities that enable the systematization of BCM and are integrated to follow the iterative management approach Plan-Do-Check-Act (PDCA). Still, existing frameworks tend to focus on isolated BCM components rather than offering a holistic perspective, which is a challenge noted by several authors [6,7,8,9,10,11].

Current BCM assessment frameworks are fragmented and lack integration, frequently addressing single components or narrowly focused risks such as cyber resilience, without providing a unified, adaptable methodology that encompasses the full spectrum of BCM activities.

With these considerations and an understanding of each of the BC components and their activities in mind, an assessment system can be defined to evaluate each of the intentions specified in BCM activities. The set of all activity components of the assessment system should strategically lead the organization on what should be considered to achieve the activity objective. However, each organization has unique characteristics, constraints, legal or regulatory frameworks, and partnerships with other organizations that may limit the execution or scope of the goal described in a metric.

Existing frameworks address BC self-assessment, particularly in cyber resilience and third-party risk management [12]. The Cyber Resilience Self-Assessment Tool (CR-SAT) helps SMEs assess and improve cyber resilience, while another study highlights deficiencies in self-assessment tools for risk management and suggests the need for a more robust process [13].

Given this gap, our research seeks to address the problem of fragmented and non-standardized BCM assessments by proposing a comprehensive Self-Assessment methodology. This methodology aims to integrate multiple BCM components, providing organizations with a structured and adaptable evaluation tool that can assess their BC maturity and resilience effectively.

In summary, achieving a multidisciplinary BC assessment is relevant to defining an assessment system supported by a BCM model that includes and relates the BC components and activities and their metrics or assessment questions. Thus, organizations can gain the benefits of having a documented method to conduct an assessment and track the program’s continuous improvement and progress [14].

There are international frameworks and standards, referred to as Standards, that support the design of a BCP. Organizations typically seek support from current and widely recognized Standards for designing their BCP to improve processes, ensure compliance, obtain certification, and enhance their brand image. Selecting the most appropriate standard for the organization should be an agile activity that helps define a BC approach. While the Standards guidance focuses on the methods to be used, when, and by whom, its guidelines indicate that organizations must determine what should be measured and assessed. Some organizations cope with constraints when defining what to assess when designing a BCP. These constraints can be addressed by monitoring and assessing the BC activities of a BCM Model and its performance [15] and defining questions to assess both the design and implementation of the BCP [16].

Previously, measurement concerns were addressed by proposing BCM frameworks based on questionnaires or diagrams [6], but there has been limited progress in this area since 2018. One solution focuses on assessing the effectiveness and maturity of the organization’s BC practices and initiatives in BCP design and execution. It is based on metrics and specific questions that aid in the design or redesign of BCPs and identify gaps and crucial areas to address in the BC area.

The Self-Assessment methodology presented here was designed to be an economical way to identify gaps, improve the BCM program, and raise awareness. The design process considered how a Self-Assessment System could help to prepare the Business Continuity Management System (BCMS). In an environment of limited resources, we also considered how it could enhance BC preparedness and provide an opportunity to obtain a BCM score, which can be tracked over time to capture improvement and identify deficiencies. On its first use, it is intended to capture the as-is state of the organization’s BCMS and, over time, to capture how that state evolves.

Therefore, this research aims to provide a structured Self-Assessment methodology enabling organizations to systematically evaluate their BC readiness, improve their BCM processes, and enhance their resilience against disruptive events.

Some questions emerged, triggering an analysis and comparison of the Standards. This analysis established the need for an integrated set of metrics that allow the measurement and qualitative assessment of the essential components of BCM, as well as the degrees of BC maturity in organizations [17].

The research addressed these issues and adopted a BCM Model, which allows multidisciplinary BC preparedness and implementation. It attempts to assist organizations and streamline their BCP design and implementation processes.

This paper focuses on the Self-Assessment methodology, enabling compliance evaluation with the BCM Model adopted. This Self-Assessment methodology not only facilitates identification of BCM weaknesses and strengths but also supports continuous improvement by enabling organizations to track progress over time through repeatable, standardized evaluations aligned with internationally recognized BCM standards.

This article is structured as follows: Section 1 introduces the research topic, outlining the significance of BC and the necessity for a structured approach to its assessment. Section 2 provides background research, covering fundamental BC concepts, the BCP, and BCM, along with a review of relevant standards and best practice models. Additionally, it discusses measurement challenges within the BCM scope. Section 3 details the methodology applied in the research. Section 4 identifies the problem and its motivation, setting the stage for the proposed solution presented in Section 5. This section defines the objectives and requirements, explains the transition from self-assessment to measurement, and introduces the Self-Assessment System and its components. Section 6 demonstrates the proposed approach, Section 7 presents its evaluation, and Section 8 concludes the paper by summarizing the findings and their implications. Finally, the References section lists all cited sources.

2. Background Research

This section presents the theoretical background for the relevant research topics.

2.1. Business Continuity

BC is described as examining an organization’s critical functions, identifying potential disaster scenarios, and developing procedures to handle these concerns [18]. Businesses are increasingly being required to prepare for risks that threaten the existence of crucial business activities. This results from the increasing frequency of natural disasters, threats from terrorist attacks and other criminal attacks, and changing legislation and regulations [19]. The economy and increased competition impact the organization’s need to improve and devote additional attention to developments that may disrupt critical business operations. Even minor disruptions can seriously affect the organization and its reputation [20].

Despite growing awareness of these risks, many organizations struggle to operationalize BC in a consistent, measurable, and strategic manner. The challenge lies not only in developing continuity procedures but also in ensuring they are effectively implemented, maintained, and aligned with business objectives.

This difficulty is compounded by the growing complexity of threats, limited internal expertise, and the lack of practical tools that support decision-making in business continuity planning.

The ability of an organization to continue its essential activities after or during a disaster, as well as the speed with which it may restore full performance, might be the difference between success and failure [21]. Hence, BC includes an organization’s ability to plan for and respond to disruptions and emergencies, such as keeping things running and recovering to normal [22].

Consequently, BC is a significant challenge for all organizations, regardless of the activity sector in which they operate, concerning competitiveness objectives, profitability, and market position.

While the existing literature and international standards emphasize the importance of BC, they often fall short of offering actionable frameworks that support organizations—particularly SMEs—in evaluating their continuity readiness.

Furthermore, there is a noticeable absence of self-assessment mechanisms that connect BC activities with performance indicators, maturity levels, or business value, limiting strategic engagement from top management.

However, BC relies on the premise that disruptions will occur at some time. As defined by Arduini & Morabito [23], BC is a framework of disciplines, processes, and techniques to provide continuous operation for critical business functions under all conditions. BC specifies how an organization will function following a disruption until its usual facilities are restored [24]. BC’s central focus is on establishing alternative solutions for continuing operations during a disaster.

A successful contingency plan must include disaster recovery and BC components. Disaster recovery generally concerns technical recovery processes, whereas BC concerns logistical processes that temporarily bypass damaged technical elements [24]. Consequently, for BC to be solid, it must be planned.

To address this gap, this study introduces a novel Self-Assessment System grounded in BC maturity models and aligned with international standards. This system provides quantifiable metrics across preparedness, response, and recovery phases and supports organizations in benchmarking their continuity capabilities.

By translating complex standards into structured evaluation criteria, the proposed system empowers organizations to identify weaknesses, prioritize improvements, and communicate the value of BC efforts to decision-makers.

2.2. Business Continuity Plan

BC refers to the specific business plans and actions that allow an organization to respond to a crisis so that functions, sub-functions, and processes are recovered and resumed following a predetermined plan, prioritized by their criticality towards the economic viability of the business [25]. BC also establishes the strategies, procedures, and critical actions required to respond to and manage crises [26]. The authors of [27] state that the overall purpose of BC is to identify, plan, implement, and sustain multiple modes of operation in the event of a crisis.

BC provides a method for organizations to anticipate and overcome disruptions, lowering the risk of loss and allowing business operations to continue [28]. Planning, however, involves understanding potential outcomes and the risks an organization faces. To safeguard the business and the interests of its stakeholders, the authors of [29] emphasize that BC entails anticipating failures, taking planned measures, and testing.

The usefulness of a BCP is debatable [30]. Some authors emphasize organizational BCP awareness or training [31] and preparatory steps for BCP design and implementation [32]. Other works address security issues, focusing on cybersecurity [33] and risk-mitigation procedures [34]. Additionally, security policies are defined to encourage a safety culture and advocate improving ICT professionals’ preparation with training and practice [35]. Instead of being tailored to a specific type of disaster, the BCP should be designed to respond to the impact on the organization [36].

However, the definition of BCPs changes depending on the research objectives mentioned above. ISO 22301:2019 provides a comprehensive definition stating that the BCP is the documented information that guides an organization to respond to disruption and resume, recover, and restore the delivery of products and services consistent with BC objectives [37].

BCPs are documented procedures, from the perspective of preparing ICT for BC, that guide organizations to respond, recover, resume, and restore to a predefined level of operation following an interruption, according to ISO/IEC 27031:2011 [38]. Objectives to plan, establish, implement, operate, monitor, review, maintain, and continually improve a documented management system to protect against and mitigate the effects of disruptions and prepare for and respond to them are examples of how BC planning may be structured [39].

In terms of understanding the organization, one crucial aspect of developing a business contingency plan is accurately identifying the company’s assets and determining which are critical to the business and must be prioritized. The BCP encompasses various aspects, including risk assessment, Business Impact Analysis (BIA), recovery strategies, plan development, documentation, testing, implementing, and maintaining the plan [40]. Before conducting the BIA, it is crucial to gather essential information [41]. This information is instrumental in determining critical processes and establishing the appropriate BCP recovery sequence.

However, despite the growing body of literature on BCP components, risk mitigation strategies, and international standards, organizations continue to face difficulties in translating these guidelines into actionable and measurable practices.

The practical implementation of BCP remains fragmented and inconsistent, especially among small and medium-sized enterprises (SMEs), which often lack the resources and expertise to interpret complex standards or evaluate the effectiveness of their preparedness measures.

The BCP must undergo an annual review and receive approval from the organization’s top management. Furthermore, it should be readily available to all key employees responsible for supporting the business during recovery [42].

This gap highlights the need for a structured, metrics-based approach to assess BCP maturity and guide organizations in implementing effective continuity strategies.

Existing frameworks, while comprehensive in theory, often fall short in offering concrete self-assessment tools that align continuity efforts with measurable business outcomes.

In response, this study proposes a novel Self-Assessment System that operationalizes BCM components through quantifiable metrics. The system enables organizations to evaluate their readiness across all phases—preparedness, response, and recovery—while ensuring alignment with standards like ISO 22301 and ISO/IEC 27031.

2.3. Business Continuity Management

The BCP must be integrated into a comprehensive management process known as BCM to attain its goals effectively. BCM involves the systematic implementation and continuous maintenance of BC [39]. Thus, BCM is a holistic management process that identifies potential threats to an organization and the impacts on business operations which they might cause [38]. To ensure these threats are managed proactively and systematically, international standards emphasize the importance of embedding continuity practices within the organization’s broader management systems.

According to ISO 22300:2021 [39], a BCMS is an integral component of the overall management system, responsible for establishing, implementing, operating, monitoring, reviewing, maintaining, and improving BC. Additionally, a BC Program is an ongoing management and governance process supported by top management and adequately resourced to implement and maintain the BCM. Consequently, implementing an adequate and effective BCMS is challenging, demanding, time-consuming, and holistic [43]. It is necessary to streamline the organizational process of establishing a BCP and support organizations in achieving this goal. This underscores the relevance of exploring existing frameworks and models that can facilitate more structured and efficient BCM implementation.

In this context, exploring the components and requirements of a BCM Model is pertinent in the quest to identify means of mitigating the identified gaps and addressing the identified constraints [17]. The BCM components and activities employed in the Self-Assessment System draw upon the framework for the Multidisciplinary Assessment of Organizational Maturity in Business Continuity Management [44], which pays particular attention to the scientific literature and Standards since 2016 [45]. The framework’s BCM Model incorporates a set of strategic guidelines written and presented in a way that aims to simplify the organizational processes for the BCP design. Organizations can then focus efforts on improving their BCM program and better prepare for business continuity events.

While the theoretical foundations and standardization efforts are well established, significant challenges still hinder the widespread adoption and effective execution of BCM practices, particularly in resource-constrained environments.

2.4. Standards and Models of Best Practices

This research examined strategic guidelines from the Capability Maturity Model Integration (CMMI), which offers a set of best practices enabling companies to improve the performance of their primary business processes [46]. Additionally, it explored the Information Technology Infrastructure Library (ITIL) as a framework designed to align ICT services with business needs [47]. The research also considered the Control Objectives for Information and Related Technology (COBIT), serving as a valuable reference guide for implementing ICT Governance, encompassing the technical part, processes, and people [48].

Furthermore, several relevant Standards for BC were reviewed. ISO 22301:2019 outlines the structure and requirements for implementing and maintaining a BCMS. The NFPA 1600 provides essential criteria for preparedness and resilience [14]. Lastly, NIST 800-34 Rev.1 offers instructions, recommendations, and considerations for information systems contingency planning [49].

Despite the comprehensiveness of existing standards and frameworks, their effective application in organizations—especially SMEs—remains a challenge. Many struggle to operationalize these guidelines in a way that supports decision-making, performance tracking, and alignment with business goals.

In practice, organizations often lack the tools to translate high-level frameworks into actionable procedures and quantifiable metrics that support self-evaluation, continuous improvement, and strategic oversight.

The Standard ISO 22301:2019 states that the organization shall evaluate the performance and effectiveness of the BCMS. Concerning performance evaluation, ISO 22301 [37] states that the organization shall determine what needs to be monitored and measured and the methods to ensure valid results. It must also determine when and by whom the monitoring and measuring shall be performed and the results analyzed and evaluated. Finally, the standard requires the organization to retain appropriate documented information as evidence of the results.

Considering the measurement perspective in ITIL, within the general practice of Continual Improvement management, many techniques can be employed when assessing the current state—such as a balanced scorecard review, internal and external assessments, and audits [47].

To assess where the organization is, services and methods already in place should be measured and/or observed directly to properly understand their current state and what can be reused from them [47]. Decisions on how to proceed should be based on information that is as accurate as possible. Therefore, the road to optimization of practices and services must follow high-level steps, which include executing the improvements iteratively. This comprises the use of metrics and other feedback to check progress, stay on track, and adjust the approach to optimization as needed [47].

In the COBIT 2019 domain Monitor, Evaluate, and Assess, the management objective Managed Performance and Conformance Monitoring defines five practices and their activities [48]. The Management Practice MEA01.04—Analyse and Report Performance suggests that the organization uses a method that provides a succinct view of ICT performance and fits within the enterprise monitoring system [48]. To facilitate effective, timely decision-making, scorecards or traffic light reports are suggested. Regarding the performance measurement policy, COBIT states that a balanced scorecard translates strategy into action to achieve enterprise goals, streamline internal functions, create operational efficiencies, and develop staff skills. This holistic view of operations helps link long-term strategic objectives and short-term actions.

While standards like ISO 22301, COBIT, ITIL, and CMMI encourage performance measurement and continual improvement, they do not explicitly define unified self-assessment models for BC maturity evaluation.

Moreover, there is little integration across these models to support BC-specific maturity assessments that simultaneously address ICT alignment, operational continuity, and governance reporting. This leaves a gap in how organizations can consistently monitor BC preparedness using a single, holistic approach.

NFPA 1600 argues that an internal assessment of the development, implementation, and progress made in a business continuity/continuity of operations program is a vital part of an entity’s growth and success. There are benefits in developing a documented method to conduct an assessment that tracks the program’s continuous improvement and progress [14]. There must be a commitment to monitoring for tracking progress through a defined period. Therefore, NFPA 1600 advises maintaining a documented program with scope, goals, performance, objectives, and metrics for program evaluation [14]. Regardless of the selected approach, a continuous focus on a quantifiable process and its use at all levels of the organization will provide the maximum benefits.

Monitoring the present state can help the organization to set short-term through long-term goals, track progress, and eliminate waste in cost and effort [14]. It can also help justify expenses and substantiate the need for capital, personnel, and other process components that can help improve the implementation of business continuity. Thus, a specific method of applying a self-assessment (and maturity model) must define the key concepts and their elements. It also must define a scoring process method to record its compliance with the model. Finally, it must implement a method to distribute the model, train the participants, gather results, and prepare a summary for all interested parties. Best practices, lessons learned, and other criteria discovered during the assessment can be shared throughout, resulting in process improvement for the organization.
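As an illustration of tracking progress through a defined period, the following minimal Python sketch compares two assessment snapshots and lists the components whose score has dropped. It is not taken from NFPA 1600 or from the proposed system: the function names, the component names, and the score values are hypothetical, and the sketch assumes that each component has already been scored on a 0–100 scale.

```python
def compare_assessments(previous: dict[str, float],
                        current: dict[str, float]) -> dict[str, float]:
    """Return the score change for every component present in both snapshots."""
    return {
        name: round(current[name] - previous[name], 1)
        for name in previous
        if name in current
    }


def regressions(deltas: dict[str, float]) -> list[str]:
    """Return, in alphabetical order, the components whose score decreased."""
    return sorted(name for name, delta in deltas.items() if delta < 0)


# Hypothetical component names and scores, for illustration only.
first_assessment = {"governance": 40.0, "testing": 70.0}
second_assessment = {"governance": 55.0, "testing": 62.5}

deltas = compare_assessments(first_assessment, second_assessment)
print(deltas)               # change per component since the first assessment
print(regressions(deltas))  # components that need attention
```

A summary of this kind could be included in the report prepared for interested parties, alongside the absolute scores.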

To bridge this gap, this study proposes a structured self-assessment model grounded in BC standards and ICT governance frameworks.

The model integrates concepts from ISO 22301, CMMI, COBIT, and ITIL to form a coherent evaluation system that enables organizations to assess their BC maturity, track performance, and guide continuous improvement.

It introduces a scoring methodology and evaluation procedures that transform abstract standards into concrete metrics, thereby supporting operational decision-making, transparency, and strategic alignment.
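To make the idea of turning weighted questions into a 0–100 score with a red/yellow/green indicator more concrete, a minimal Python sketch is given below. It is an assumption-laden illustration rather than the scoring rules of the proposed system: the `Question` structure, the weighted-average formula, and the thresholds of 50 and 80 are hypothetical choices made only for this example.

```python
from dataclasses import dataclass


@dataclass
class Question:
    text: str
    weight: float  # relative importance of the question (hypothetical)
    answer: float  # degree of fulfilment, from 0.0 (not met) to 1.0 (fully met)


def activity_score(questions: list[Question]) -> float:
    """Weighted average of the answers, scaled to the 0-100 range."""
    total_weight = sum(q.weight for q in questions)
    if total_weight <= 0:
        raise ValueError("at least one question must have a positive weight")
    return 100.0 * sum(q.weight * q.answer for q in questions) / total_weight


def indicator(score: float, red_below: float = 50.0,
              green_from: float = 80.0) -> str:
    """Map a 0-100 score to a colour; both thresholds are assumed values."""
    if score < red_below:
        return "red"
    if score < green_from:
        return "yellow"
    return "green"


# Hypothetical questions for a single activity.
questions = [
    Question("Is a BC policy approved by top management?", weight=3, answer=1.0),
    Question("Is the policy reviewed periodically?", weight=1, answer=0.5),
    Question("Is the policy communicated to staff?", weight=1, answer=0.0),
]
score = activity_score(questions)
print(score, indicator(score))
```

In such a scheme, component and overall scores could be obtained by applying the same weighted average to activity scores, although other aggregation rules are equally possible.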

Performance must be managed at all levels of the business and be a key factor for process change. CMMI recognizes the importance of understanding an organization’s current level of performance and the extent to which it aligns with actual business needs and goals [46]. If performance does not correspond to business needs and goals, process improvement is used to raise performance to the necessary level. In this sense, CMMI was built with a focus on performance and continuous improvement, making it easier for organizations to measure performance frequently to assess the impact on the business over time.

From the perspective that measurement is necessary to achieve business results, CMMI emphasizes that “what gets measured gets done” and that measurement requires investment. Therefore, there must be a reason for every measurement collected, and each measurement must provide business value and performance improvement. People must also understand why measurements are collected and how they help the project and the organization. However, measurement directs people’s behavior, which can affect the quality of the measurement system, so more analysis should be prioritized over more measures.

As the measurement is relevant, top management must identify its needs and use the information collected to provide governance and an overview of improving and effectively implementing processes. As an example of an activity, top management supervises the appropriate integration of measurement and analysis activities into the organization’s processes [46]. The use of measurement supports the following:

- Objective planning and estimation;

- Effective monitoring of progress and performance against plans and objectives;

- Identification and resolution of process-related issues;

- Providing a basis for incorporating measurement into additional processes in the future.

2.5. Discussion on Measurement in the BCM Scope

In terms of measurement, we highlight several works within the BCM scope that influenced the proposed research artifacts. Since 2018, a few publications have mentioned metrics, Key Performance Indicators (KPIs), or measurement concepts. Some authors consider measurement only for specific issues, covering just part of BCM [50,51,52].

Despite the acknowledged importance of measurement within BCM, there remains no consensus on a standardized, comprehensive approach to assess the maturity or performance of BCPs across organizations.

In other publications [53,54,55,56,57], measurement is focused on Recovery Time Objective, Recovery Point Objective, and Risk Assessment, not on specific metrics or assessment of the BCMS. For example, some focus on metrics for evaluating Disaster Recovery Plan performance [56].

Other papers focus on understanding the organization’s preparedness and propose metrics or KPIs for ICT systems, although they have yet to take the latest versions of the Standards into account [58,59,60]. Some authors focus on loss prevention, the BCM program, or project justification [61], proposing a risk index based on metrics. For example, they propose metrics for BCM or BCP justification [62], readiness, or financial loss. Therefore, most of the cited studies that address metrics or KPIs for BCM or BCP focus on financial or loss justification to maintain top management support and engagement.

Harding [55] states that the purpose of measurement is to answer whether the organization can recover. Measurement provides top management with information on how the organization will recover from an incident. The BCP is improved by identifying deficiencies and raising awareness for decision-making, so that the organization can choose between accepting a risk and reducing it by applying relevant controls.

The BCP assessment is crucial for developing a supplier management program [50]. If a third-party service provider is affected by an incident or disaster, it is relevant to understand how this will affect the continuity of the organization’s business operations. Thus, Marshall [50] defines test questions for how the organization considers risk. Regarding risk drivers, Marshall [50] considers the following relevant to the supplier score: recovery time frame, unmitigated risks, adequate BCP documentation, supplier BCP testing, BCP program suitability, supplier BCP management, and a signed commitment letter to the BCP. A risk level (low, medium, medium-high, or high) is then assigned according to how the realization or implementation of these risk drivers is interpreted.
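The way risk drivers might translate into a risk level can be sketched as follows. This is only an illustration under stated assumptions and does not reproduce Marshall's scoring rules, which are not detailed here: the identifiers for the seven drivers, the idea of counting the drivers that raise a concern, and the cut-off values are all hypothetical.

```python
from typing import Iterable

# The seven risk drivers named above, as hypothetical identifiers.
RISK_DRIVERS = (
    "recovery_time_frame",
    "unmitigated_risks",
    "bcp_documentation",
    "bcp_testing",
    "bcp_program_suitability",
    "bcp_management",
    "commitment_letter",
)


def supplier_risk_level(concerns: Iterable[str]) -> str:
    """Map the drivers that raise a concern to one of four risk levels.

    The cut-offs (0-1, 2-3, 4-5, 6-7 drivers) are assumed for illustration.
    """
    flagged = set(concerns)
    unknown = flagged - set(RISK_DRIVERS)
    if unknown:
        raise ValueError(f"unknown risk drivers: {sorted(unknown)}")
    count = len(flagged)
    if count <= 1:
        return "low"
    if count <= 3:
        return "medium"
    if count <= 5:
        return "medium-high"
    return "high"


# A supplier with untested plans and no signed commitment letter.
print(supplier_risk_level(["bcp_testing", "commitment_letter"]))
```

In practice, an organization would likely weight the drivers differently, for example by giving the recovery time frame more influence than the commitment letter.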

Tomsic [52] considers that effective mechanisms can be explored as long as they reflect and support organizational management standards, including a relatively informal measurement process focused on the organization’s perception of the program’s key performance indicators. Regarding formal audit approaches such as questionnaires, effective measurement depends on establishing expectations and standards for the purpose, scope, roles and responsibilities, training requirements, and activity for identified individuals or positions. An emergency management standard that establishes these measurable components allows transparent and sustainable improvements in organizational preparedness directly related to its stated objectives. Regular measurement and prioritization of resource allocation promote a cycle of continuous improvement of the Emergency Management Program [52].

However, existing studies and frameworks tend to focus on isolated metrics (e.g., RTO, RPO, risk index), specific organizational contexts (such as ICT systems), or management perceptions, rather than offering a reusable, structured system for self-assessing the overall state and maturity of the BCM program.

Regarding the measurement of a BC program, Stourac [63] suggests that a scorecard can offer benefits beyond periodic measurement by acting as a program hub. Building an effective and efficient program involves adopting a strategic vision of the accomplishments, maintaining consistent communication, providing regular reports to top management, and adhering to a well-defined yet flexible process.

In their proposal, Olson & Anderson [62] introduce a resilience scoring methodology designed to evaluate the BCP by analyzing its alignment with a predefined set of criteria, which can be tailored to suit the organization’s specific requirements. This scoring approach effectively encourages active involvement, enhances reporting capabilities, identifies risks, and assesses the overall organizational resilience. A resilience score assesses how resilient a team would be in performing its critical function if confronted with an interruption event that would require the implementation/activation of the BC plan.

After implementing the resilience score, Olson & Anderson [62] stated that the planner’s involvement significantly improved, as evidenced by the improvement in the plan’s content and greater participation in program requirements and activities. The authors argue that the score encouraged and provided additional responsibilities in that the continuity team improved communication with top management about plan content. The score also provided the ability to identify trends and make informed decisions. The highlighting of risk areas and opportunities leveraged the prioritization of resources and appropriate focus on improvement efforts. The resilience score provided the ability to assess the plan’s quality quickly.

One objective of the BIA process is to establish recovery objectives. The next step is to determine whether ICTs can match those objectives. Ricks & Boswell [56] present a customizable model, suitable for any organization, that takes an ICT-centric approach to assessing resilience capabilities. The model’s output is a composite score for each application (based on an aggregated capability score and a weighting factor), which identifies the ICT services in the portfolio and their most significant capability gaps. The score categories can be interpreted and communicated as follows: Inadequate (red), with a score between 0 and 18; Needs improvement (yellow), with a score between 19 and 25; and Good (green), with a score of 26 or higher. In the last stage of the assessment model, a prioritized list of corrective measures is developed for each application, considering the capability assessment [56]. Moreover, although some authors suggest score-based evaluations or checklists, few offer guidance on integrating such approaches into a scalable, user-friendly self-assessment methodology that aligns with current Standards and supports benchmarking across different organizational sizes and sectors.
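The score categories reported for the model in [56] can be illustrated with a minimal sketch. Only the three bands and their colors come from the text above; the function name, the application names, and the example scores are hypothetical, and the way the composite score is computed from the capability score and the weighting factor is not specified here, so it is taken as an input. Scores that fall between two integer bands are assigned to the lower band, which is an assumption.

```python
def classify_composite_score(score: float) -> tuple[str, str]:
    """Map a composite score to the (category, color) bands cited from [56]."""
    if score < 0:
        raise ValueError("composite score must be non-negative")
    if score >= 26:
        return ("Good", "green")
    if score >= 19:
        return ("Needs improvement", "yellow")
    # 0 to 18 in the source; fractional values below 19 also land here (assumption).
    return ("Inadequate", "red")


# Hypothetical portfolio: application name -> composite score.
portfolio = {"payroll": 17, "email": 22, "web-shop": 30}

# Listing the lowest scores first gives a simple priority order for corrective measures.
for app, score in sorted(portfolio.items(), key=lambda item: item[1]):
    category, color = classify_composite_score(score)
    print(f"{app}: {score} -> {category} ({color})")
```

Sorting by ascending score is only one possible way to prioritize; the source does not state how the corrective-measure list is ordered.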

We identified a set of publications addressing measurement in BC-related programs [64], although few published frameworks or methods address self-assessment. From this set of publications, we identified two methods for self-assessment: a quick self-assessment preparedness questionnaire with a score, and a questionnaire with a score for the BC-related program evolution or the initiatives for compliance.

To address these limitations, we propose a Self-Assessment System that consolidates fragmented approaches into a cohesive, standards-aligned framework. This system enables organizations to rapidly assess their preparedness using a scoring model supported by well-defined guidelines, key success factors, and metrics.

In 2003, Gallagher [58] introduced a rapid method to assess an organization’s preparedness to ensure its continuity after a severe incident. This approach involved a questionnaire to assess whether the organization had reasonable measures to reduce risks and an effective BC program. The set of 20 questions establishes where the organization stands regarding BCM. Each question is scored between 0 and 5, where 0 indicates that the topic was not addressed and 5 indicates that the main issues have been addressed well, giving a maximum total of 100. The total score is evaluated qualitatively. Below 50, there is considerable work to do to reach a satisfactory state. Between 50 and 65, there still needs to be compliance with reasonable governance requirements. Between 65 and 80, BCM requirements are unlikely to be met. Above 80, there is likely an effective and efficient BCM program [58].
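The arithmetic of this questionnaire can be sketched as follows. The 20 questions, the 0 to 5 scale, and the band limits come from the description above; the function names are hypothetical, and the treatment of the shared boundaries (50 and 65 assigned to the higher band, 80 to the 65 to 80 band) is an assumption, since the text does not settle them.

```python
def gallagher_total(answers: list[int]) -> int:
    """Sum 20 answers, each scored from 0 to 5, into a total between 0 and 100."""
    if len(answers) != 20:
        raise ValueError("the questionnaire has exactly 20 questions")
    if any(not 0 <= a <= 5 for a in answers):
        raise ValueError("each answer must be between 0 and 5")
    return sum(answers)


def gallagher_band(total: int) -> str:
    """Return the qualitative band of a total score (boundary handling is assumed)."""
    if not 0 <= total <= 100:
        raise ValueError("total must be between 0 and 100")
    if total < 50:
        return "below 50"
    if total < 65:
        return "50-65"
    if total <= 80:
        return "65-80"
    return "above 80"


# Hypothetical answers: ten topics scored 4 and ten topics scored 2.
answers = [4] * 10 + [2] * 10
total = gallagher_total(answers)
print(total, gallagher_band(total))
```

The function returns only the band label; the qualitative reading of each band is the one given in the paragraph above.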

Despite its usefulness, self-assessment has limitations and does not replace an independent audit of a BC program [51]. However, it can be a cost-effective way to identify gaps, improve the program, and raise awareness. Self-assessment helps prepare the program and team members for an independent audit. In a resource-constrained context, it can provide quantitative outputs about the current state that can be tracked over time to capture improvement or highlight shortcomings. Self-assessment therefore holds significant value within any BC program. Its specific role is determined by the program’s maturity level, the organizational culture, and management expectations, as outlined in [51].

Even when measurement is proposed for specific issues [51], the definition of self-assessment objectives should consider the organization’s mission, vision, values, and culture; the goal or purpose of the self-assessment; the audience; and the scope. The required output must determine the questions, how to ask them, and in what form the answers are required. Questions must be limited to the most relevant topics, and answers must be captured in a format that is easy to manage and can be turned into a repeatable process. A clear and transparent process must be in place for validation and sign-off, identifying who completed the assessment, who is responsible for review and sign-off, and the due date.

NFPA 1600 [14] proposes a specific method for applying a self-assessment, which can include defining the elements of each concept and providing the guidelines and minimum requirements for each element. It also suggests defining a method for the entity to conduct a scoring process to record its compliance with the model [14]. Finally, it suggests implementing a method to distribute the model, train the participants, gather results, and prepare a summary for all interested parties.

The Self-Assessment System should provide an overall view of the BC state in organizations of all sizes. It is also suitable for small businesses that wish to assess their BC preparedness and readiness, even if they do not intend to adopt a BCM framework.

We believe that the Self-Assessment System can boost the initiation of the BC program and simplify its use if questions are easily interpreted and a summary of the purpose to achieve is given using a score and semaphore color. Presenting a small but adequate number of questions in each component can reduce the perception of complexity and allow adoption in organizations of lower capacity. This choice is justified by the diminishing value of each additional question, as later questions add less coverage.

Quantifying BC information is challenging, yet it enhances accessibility compared to lengthy textual reports. The authors agree that organizations need a scoring system that allows benchmarking between organizations to obtain recognition for compliance and trigger the adoption of frameworks, Standards, or solutions to implement BC in the organization. Therefore, when answering the questions, the topic areas can be inferred, guidelines can emerge, and a more precise vision of what the organization should do can be discerned.

Therefore, to narrow the identified gap in BC self-assessment, we gathered a set of contributions from academia and the latest Standards and incorporated this knowledge into the proposed Self-Assessment System. Success factors, guidelines, or best practices may be used to benchmark and streamline BCP design, adequately supported by a set of metrics with defined goals. The guidelines in the Self-Assessment System were carefully selected to capture the essence of the underlying activities’ metrics. By addressing the challenges and incorporating them into the proposed artifact, organizational processes were effectively supported, streamlining the establishment of the BCP.

Our approach bridges the gap between academic proposals and practical applicability, offering a method that is not only grounded in the literature and standards but also tailored for organizations with varying levels of resources and BCM maturity.

3. Methodology

This study follows the problem-centered initiation approach [65] of the Design Science Research (DSR) methodology. DSR is one of the research methods used in the Information Systems (IS) field to solve organizational issues and contribute to the resolution of complex problems [66,67]. DSR is particularly suited for this study because it emphasizes the creation and evaluation of innovative artifacts that address practical organizational problems, which aligns with the goal of developing a Self-Assessment System for BCM that is both rigorously designed and pragmatically useful.

One aspect of the research question is the possibility of supporting an organization and streamlining its organizational processes by defining strategic guidelines for implementing a BCP [45]. Implementing a BCP and developing a self-assessment can be daunting; to succeed, it is necessary to break the task down into smaller, more manageable parts and tackle them gradually [68]. A self-assessment can help an organization identify and prioritize these tasks, making it easier to implement a BCP over time.

The artifact’s ability to solve the problem, together with its utility, efficacy, and quality, was demonstrated and evaluated through Focus Groups with experts in business continuity and semi-structured interviews with ICT and BC professionals and managers [44].

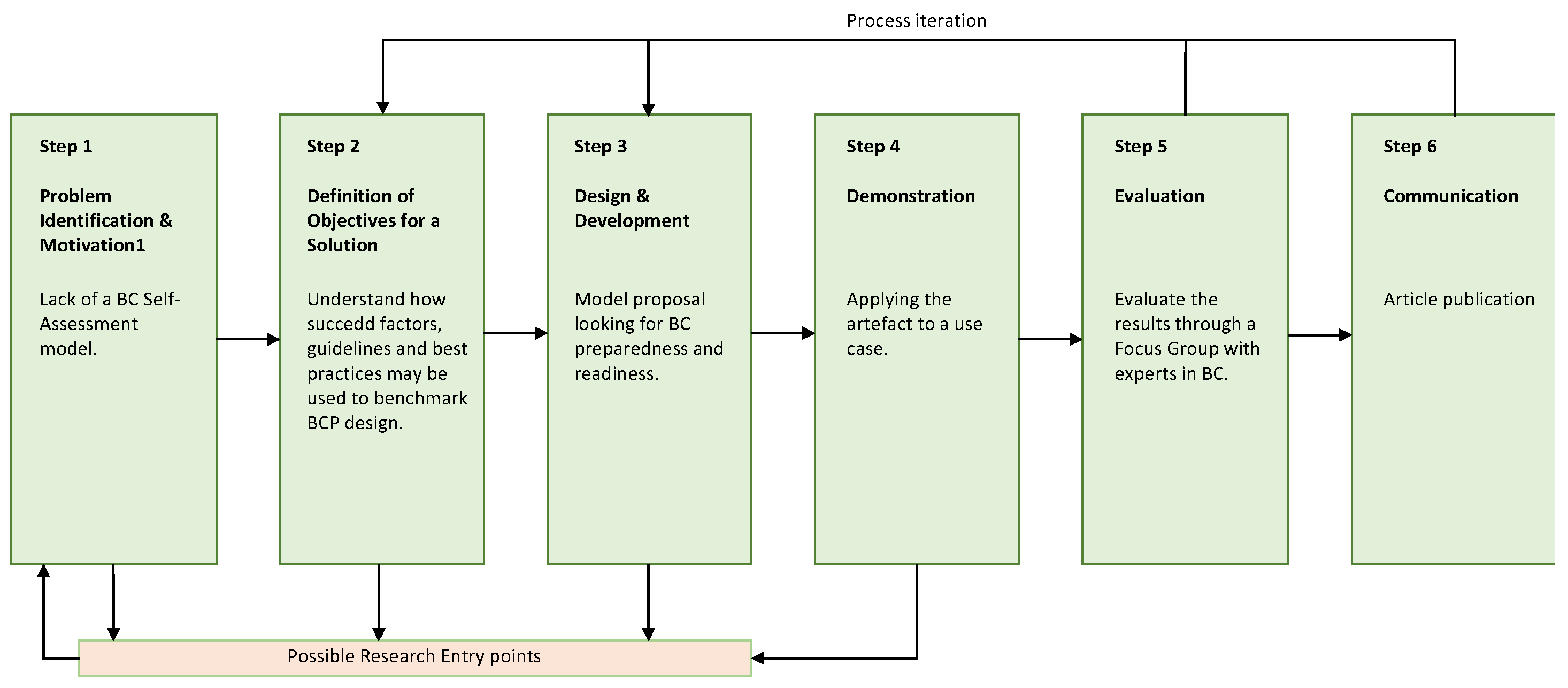

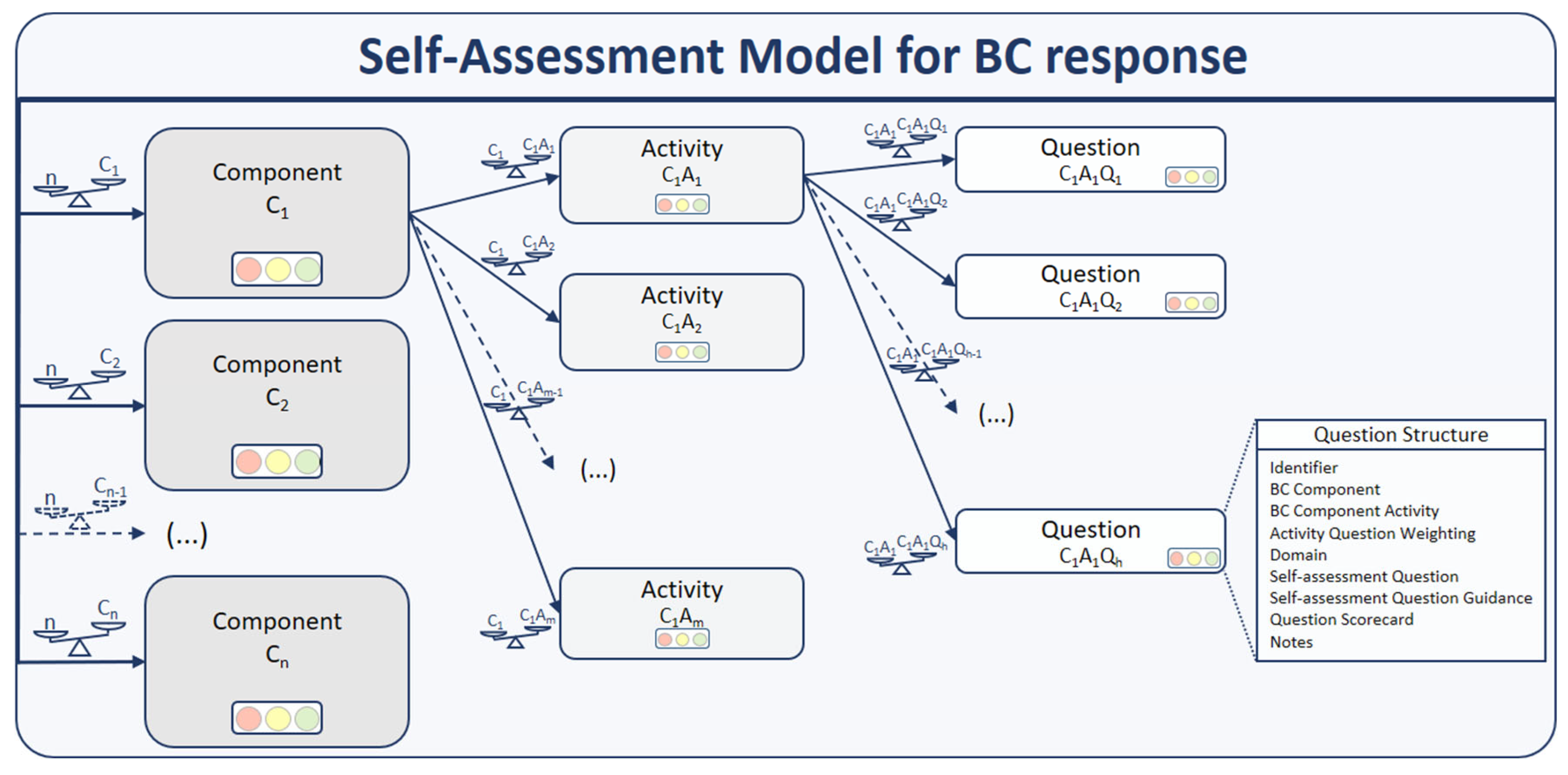

The activities in each stage of the DSR are illustrated in Figure 1, with particular relevance to steps 4 and 5, where the Self-Assessment Methodology was demonstrated and evaluated.

Figure 1. DSR methodology applied to the research (adapted from Hevner et al. [66]).

Figure 1 illustrates the six-step DSR methodology applied in this study to develop and validate the Self-Assessment System for BCM. The details for each step are as follows:

- Step 1—Identification of the problem and motivation: The relevance of the research problem to be tackled was identified, defined, and presented [17]. The research problem was identified as the lack of a standardized and comprehensive self-assessment methodology for BCM. Organizations face challenges in implementing BCM, such as high costs, lack of expertise, and insufficient top management awareness. There is a need for a structured self-assessment tool to evaluate BCM readiness, identify gaps, and improve organizational resilience. The problem was contextualized within the broader scope of BCM frameworks, standards, and best practices, as discussed in the background research.

- Step 2—Definition of the objectives of a solution: We defined what an improved artifact could accomplish and inferred the objectives of a solution from the definition of the problem and the knowledge of what is achievable and feasible [45]. The primary objective was to develop a Self-Assessment System that could help organizations evaluate their BCM readiness, identify gaps, and improve strategic alignment. The solution aimed to provide a structured and adaptable evaluation tool that could be used by organizations of all sizes, regardless of their current BCM adoption level. The Self-Assessment System was designed to integrate with any framework in the BCM scope. During the design phase, careful consideration was given to balancing comprehensiveness with usability to ensure that the Self-Assessment System could be adopted by organizations with varying levels of BCM maturity and resource availability. This balance was a key design decision grounded in DSR’s emphasis on artifact relevance and applicability.

- Step 3—Design and development: The artifact was developed to solve the identified problem following the defined objectives. This step comprises stating the artifact’s desired functionality [69], its architecture, and the design of the prototype. The Self-Assessment System was designed to align with the BCM Model, which includes eight components and 118 activities. The eight components of the BCM Model were derived from a systematic literature review (SLR) of 167 publications, which identified recurring themes in BCM across sectors such as healthcare, finance, and energy. Key components, including Risk Assessment (RA), Business Impact Analysis (BIA), and ICT Strategy, were prioritized based on their frequency in the literature (e.g., RA appeared in 39 publications, BIA in 58) and their alignment with established standards like ISO 22301. Components such as ‘Emergency Response’ and ‘Crisis Management’ were added due to emerging trends in disaster resilience (e.g., 11 publications addressed natural disaster strategies). A structured set of questions was developed for each activity within the BCM Model components. Questions for each activity were formulated using evidence from the SLR, where specific gaps or best practices were highlighted (e.g., 23 publications emphasized cybersecurity measures in ICT Strategy, prompting dedicated questions on incident recovery). To ensure validity, questions were iteratively reviewed by a panel of five experts with >15 years of BCM experience, covering domains like risk management (16 years), ICT governance (21 years), and cybersecurity (22 years). Discrepancies were resolved through Delphi rounds until consensus was reached on clarity and relevance. These questions were designed to assess the organization’s alignment with BCM best practices and standards. The questions were weighted based on their relevance and importance to the overall BCM process.
Weightings were empirically determined through two methods: (1) a frequency analysis of SLR findings (e.g., ‘ICT Strategy’ received higher weights due to representation in 129 publications, versus 11 for supply chain risks), and (2) expert scale scoring (1–5) during the Focus Group session. For instance, metrics tied to data recovery (RTO/RPO) were weighted 30% higher than generic policy checks, reflecting their critical role in 70% of disruption scenarios analyzed in the literature. The Self-Assessment System was formalized as a rapid assessment tool, enabling organizations to quickly evaluate their BCM readiness and identify critical issues. The system was designed to be user-friendly, with clear instructions and visual indicators (e.g., scorecards with color-coded results) to help users interpret their scores and identify areas for improvement. The artifact design followed an iterative development approach, allowing for continuous refinement based on expert feedback and empirical evaluation. This iterative process ensured that the Self-Assessment System was not only theoretically sound but also practically viable and responsive to real organizational contexts. The system underwent an iteration based on feedback from the Focus Group. For example, initial questions about ‘cloud-computing redundancy’ (from 11 SLR publications) were simplified after users noted technical complexity, while ‘crisis communication’ metrics were expanded following participant reports of ambiguity during simulated cyberattacks.

- Step 4—Demonstration: The feasibility of the artifact was demonstrated, allowing for an accurate assessment of its suitability for its purpose. With proof of concept, we demonstrated use of the artifact to address case scenarios through simulation. The resources required for the demonstration include adequate knowledge of how to use the artifact to solve the problem [65]. The Self-Assessment System was demonstrated through a Focus Group session with experts in business continuity, ICT governance, risk management, and cybersecurity. Participants were given a hands-on opportunity to interact with the system and simulate its application within their own organizations.

- Step 5—Evaluation: A design artifact’s utility, quality, and effectiveness must be rigorously demonstrated through well-performed evaluation methods [66]. The business context defines the criteria for artifact evaluation. The assessment involves integrating the artifact into the business’s technical infrastructure. How well the artifact supports a solution to the problem was observed and measured [44]. The evaluation included objective quantitative performance measures, using questionnaires to evaluate the artifact’s characteristics. This quantitative evaluation employed validated scales (1–5) according to the domain of the problem. Participants rated each attribute (for example, clarity, relevance, and usability). Open-ended responses were thematically grouped to contextualize scores. BC experts and professionals in the ICT and BC area were selected from various activity sectors. We evaluated the artifact’s completeness and the quality of the changes resulting from the iterations [44]. The artifact evaluation enabled the development of a valid artifact aimed at reducing the identified problem. The evaluation strategy incorporated both qualitative and quantitative measures to rigorously assess the artifact’s utility, quality, and effectiveness. Participant responses were analyzed using a thematic analysis approach [70], combining deductive coding based on the 16 predefined attributes (e.g., clarity, adaptability) and inductive coding for emergent themes. The authors coded the Focus Group transcripts, with discrepancies resolved through consensus. To ensure validity, coded data were cross-validated against questionnaire responses for usability, relevance, and clarity metrics. By involving diverse stakeholders from different sectors, the study ensured comprehensive validation of the Self-Assessment System’s capability to identify BCM gaps and support continuous improvement.

- Step 6—Communication: This step communicates the problem and its relevance, the Self-Assessment methodology artifact, its usefulness and novelty, the rigor of its design, and its effectiveness to researchers and professionals in the area. The importance and usefulness of the research and the results of each step were communicated to the scientific community, professionals, and interested organizations [44,45,64]. Our objective is to submit further scientific articles to journals to disseminate our findings and contributions widely. This research contributes to the DSR body of knowledge by providing a validated artifact that addresses a recognized gap in BCM assessment, demonstrating how design science principles can be effectively applied to develop tools that enhance organizational resilience and strategic management of BC.
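The relative weighting mentioned in Step 3, where metrics tied to data recovery (RTO/RPO) were weighted 30% higher than generic policy checks, can be illustrated with a short sketch. It assumes a base weight of 1.0 for a generic check and normalizes the weights so that they sum to 1; the question labels and the normalization step are illustrative assumptions, and the way the frequency analysis and the expert scores were actually combined is not modeled.

```python
def normalized_weights(raw: dict[str, float]) -> dict[str, float]:
    """Scale raw weights so that they sum to 1 while keeping their ratios."""
    total = sum(raw.values())
    if total <= 0:
        raise ValueError("raw weights must sum to a positive value")
    return {name: value / total for name, value in raw.items()}


BASE_WEIGHT = 1.0   # assumed weight of a generic policy check
UPLIFT = 1.30       # data-recovery metrics weighted 30% higher, as stated in Step 3

# Hypothetical question labels, one per kind of check.
raw = {
    "generic_policy_check": BASE_WEIGHT,
    "data_recovery_rto_rpo": BASE_WEIGHT * UPLIFT,
}

weights = normalized_weights(raw)
for name, weight in weights.items():
    print(f"{name}: {weight:.4f}")
```

Normalization keeps the 1.3 ratio between the two weights, so the uplift is preserved whatever the number of questions in an activity.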

4. Problem Identification and Motivation

In the previous section, the methodology was described. In this section, the research problem is stated, corresponding to step 1 of the DSR and considering the theoretical background discussed in Section 2.

Due to feasibility concerns and associated costs, there are constraints and limitations throughout the BCMS implementation [43]. There are multiple efforts in the scientific and professional communities to systematize and reduce the complexity of BCMS implementation. The literature also reports various challenges to launching a BCM program, such as the qualified expertise necessary to design and implement a BCP [71,72]. These constraints may be partly explained by the organization’s top managers not being sufficiently aware of the benefits of continuity planning [73]. Furthermore, personnel may lack resilience or recovery skills and need assistance in understanding the importance of implementing a BCMS [74].

Small and medium-sized enterprises are more vulnerable to disasters due to limited financial and human resources and inadequate technological capabilities to recover from disasters [16]. Some organizations delay BCP implementation for various reasons, such as design restrictions driven by technical or financial challenges or different interpretations of requirements [72]. Additionally, restrictive company policies or time constraints to finish the project might justify postponement [28].

Apart from these hurdles, some organizations may lack proactiveness in BC planning and Disaster Recovery [75]. This can lead to severe consequences, including damage to reputation and market share, impaired customer service and business processes, regulatory responsibility, and extended downtime and system recovery times. Thus, more organizations, particularly in the public sector, must implement and be certified in BCM Standards [76].

In his research, Stourac [63] proposes implementing an annual scorecard to ensure program realization and to develop a measurable and successful BC program. Similarly, the author of [77] argued that only a limited number of methods are available to assess and measure how an organization allocates its time and resources to address the essential areas within BCM components. Zeng & Zio [61] propose a framework for quantitative BC evaluation, highlighting the need for more clearly defined business metrics in the models they studied.

With these models, quantitative BC analysis is therefore unfeasible, which restricts their practical use [61]. The framework proposes four quantitative BC metrics based on the potential loss caused by disruptive events.

Although these studies confirm the relevance of measuring under the BC scope, they primarily focus on measuring an established BCM program or one of its components, such as metrics for ICT systems, disaster recovery plans, risk management, and financial loss or justification. We identified the need for a self-assessment system that covers all phases of a BCMS, including awareness and commitment of top management, understanding the organization and its information flows, and business processes. By integrating these components, the organization can identify risks and prepare measures and solutions to avoid or mitigate them. Assessing the BC planning and implementation phases, as well as conducting training, tests, and exercises under the PDCA life cycle, is essential.

5. Proposal

In the previous section, the research problem was defined, highlighting the challenges in BCMS implementation, such as cost, expertise, and management awareness, and emphasizing the need for a comprehensive self-assessment system covering all BCMS phases. In this section, the objectives and requirements of the Self-Assessment System are presented, detailing its integration with a BCM Model to help organizations evaluate their BCM readiness, transition to self-assessment, and establish a solid foundation for BCMS implementation.

5.1. Objectives and Requirements

Self-assessment tools are more likely to be effective when they are appropriate in conceptual scope and assessment content, provide diagnostic guidance, and have high validity [78]. Thus, to inform the developed Self-Assessment Methodology, we integrated it with the Framework for the Multidisciplinary Assessment of Organizational Maturity on Business Continuity Management (FAMMOCN) [44]. FAMMOCN defines a Model with components and activities organized to manage business continuity. Each component is organized into domains of action to make it easier to identify the activities’ context. Each domain describes the activities that detail, among other things, the projects, actions, tasks, intents, initiatives, strategies, or policies that may be addressed, measured, or assessed.

FAMMOCN also provides an Implementation Guide covering the FAMMOCN elements and implementation aspects.

The Self-Assessment System, integrated within FAMMOCN, was designed to assist organizations in assessing their current state of BCM and identifying potential solutions for significant BC concerns. By utilizing the Self-Assessment System, organizations can better understand their BCM’s strengths and weaknesses and develop strategies to improve their overall readiness for business continuity.

A structured set of questions was developed for each activity within the components of the BCM Model. Developing and integrating an assessment system with a model that supports it can be challenging, particularly when preparing an organization to plan a continuity response. This preparation must fulfill the organization’s requirements to make the self-assessment system easy to apply while ensuring a robust and reliable continuity response.

The Self-Assessment System formalizes the rapid assessment process based on the BCM Model and the reviewed literature. The system defines and systematizes questions for each BCM activity, enabling organizations to identify critical issues that must be addressed in their BC activities and determine the appropriate course of action.

The Self-Assessment System was developed by selecting the most relevant questions inferred through analysis of the BCM frameworks, Standards, and reviewed literature to assess multidisciplinary BC preparedness. Therefore, its purpose is to provide a quick overview of the organization’s state of BC readiness. The Self-Assessment System can be used by organizations of all sizes to assess their preparedness and readiness for BC, regardless of whether they plan to adopt a BCM Framework or are currently evaluating it for adoption.

5.2. From Self-Assessment to Measurement

Considering the structure of the BCM Model [44], we outlined a strategy to address the identified constraints across the various stages of organizational business continuity progress. The Implementation Guide supports using the Self-Assessment System in the initial stages of developing a BC response. Once the organization is sufficiently prepared, aware, and disciplined in BC, it can use a measurement system to measure its BC preparedness and response systematically.

As BC awareness increases, it becomes relevant to strengthen organizational discipline and improve underlying BC processes, such as through the PDCA cycle or BCMS implementation.

The Self-Assessment System can set a starting point for the BCMS establishment. Prompt self-assessment enables internal and external benchmarking and visualization of the organization’s present state. Ultimately, the objective is to support raising the commitment and awareness of Top Management to secure the required investment to develop a suitable BCMS for the organization.

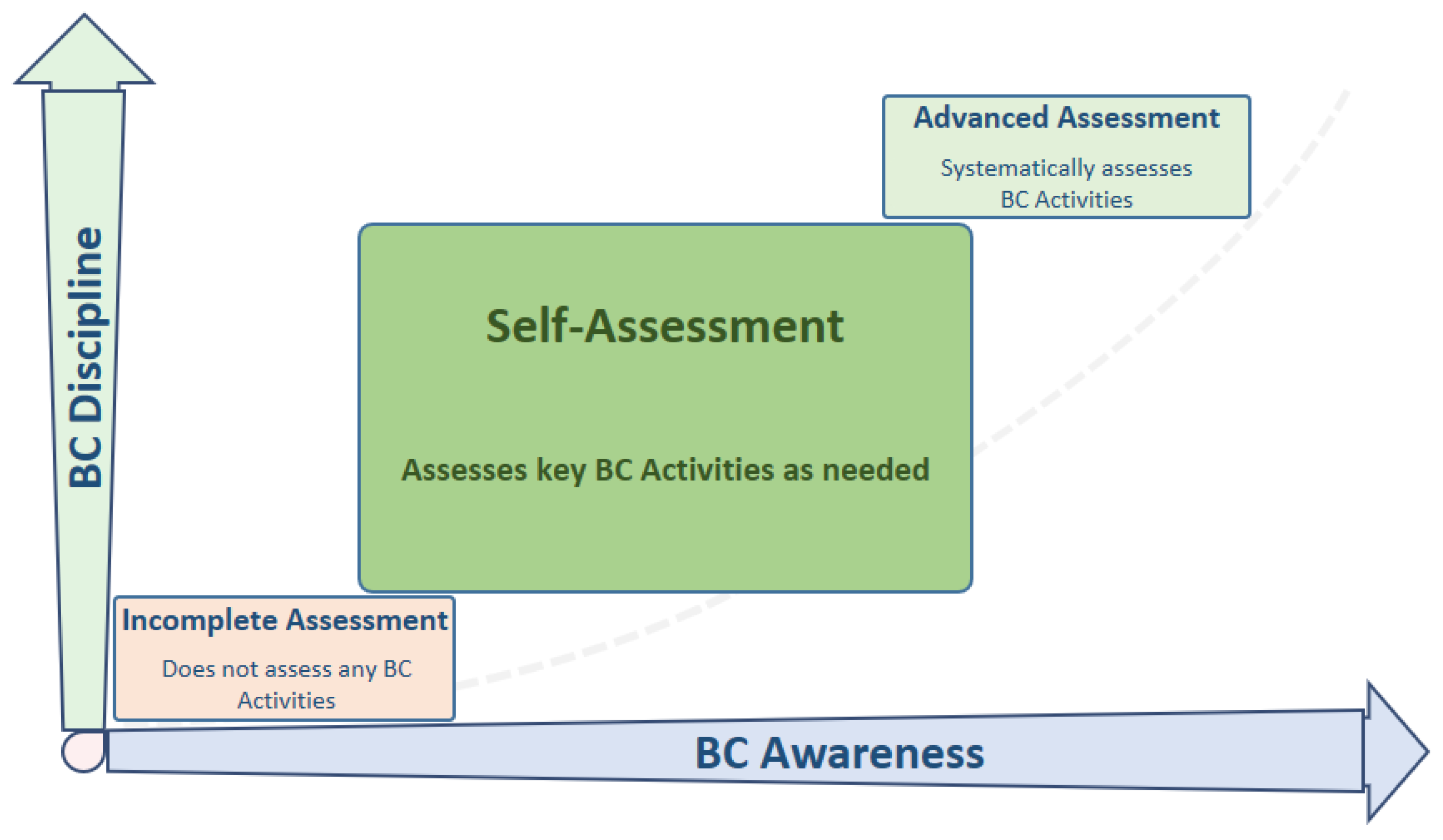

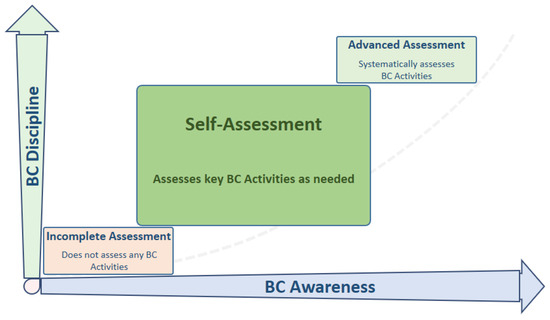

However, as organizations adapt and their BC awareness grows, it is relevant to increase discipline in the processes that ensure the management of the organization’s BCMS. In this context, if an organization decides to establish measurement as a management practice, it may undertake periodic and systematic assessments of its organizational maturity in the BC area, which correspond to advanced assessment, as depicted in Figure 2.

Figure 2. Stages of BC assessment in the organization.

Figure 2 is inspired by the stages of the CMMI process discipline, emphasizing that the foundation of process improvement lies in instilling discipline in the organization’s culture [46]. Therefore, it is recommended that the organization self-assess its BC preparedness during a preparatory stage to quickly obtain an overall perspective. At this stage, the Self-Assessment System is used, considering essential activities within components, with compliance reported by quantitative answers to written questions in a simplified and straightforward manner.

Figure 2 illustrates that as BC awareness grows, there is a need to enhance organizational discipline and improve the underlying BC processes, for example through the PDCA cycle or a BCM System. To support organizations in various stages of BC, valuable assistance is required in initiating, planning, implementing, maintaining, reviewing, and improving the response, recovery, resumption, and restoration of business processes, focusing on ICT.

The Self-Assessment System provides a BC overall view of the organization, which may be a starting point for the BCMS implementation. The quick self-assessment enables the organization to visualize its present state and establish internal and external benchmarking. Thus, the objective is to support increasing the top management’s commitment and awareness to attract the necessary investment to start and establish an appropriate BCMS.

Nonetheless, it is crucial to adapt and monitor the progress of BC awareness and the need for enhanced discipline in the processes that guarantee effective management of the organization’s BCMS. As a result, systematic assessment can transition into systematic measurement, entailing the definition of a scalable array of metrics for each activity. Each metric should evaluate an initiative that aids in achieving a certain level of activity readiness, preparedness, or compliance. Each metric’s purpose is to guide the organization to understand what must be considered to achieve the activity’s objective.

5.3. Self-Assessment System

The organization can self-assess its BC preparedness in a preparatory phase to capture a brief overview. Using the Self-Assessment System support application, it can establish a starting point for implementing a BCP by quickly measuring its current state of multidisciplinary preparedness in the BC area. At this stage, essential activities in each component are considered, reporting their compliance through written questions in a simplified and straightforward manner.

In selected activities for each BCM Model component, the organization directly answers self-assessment questions. A single activity may have several questions. The total score will be the sum of the weighted scores for each component, reporting model compliance.

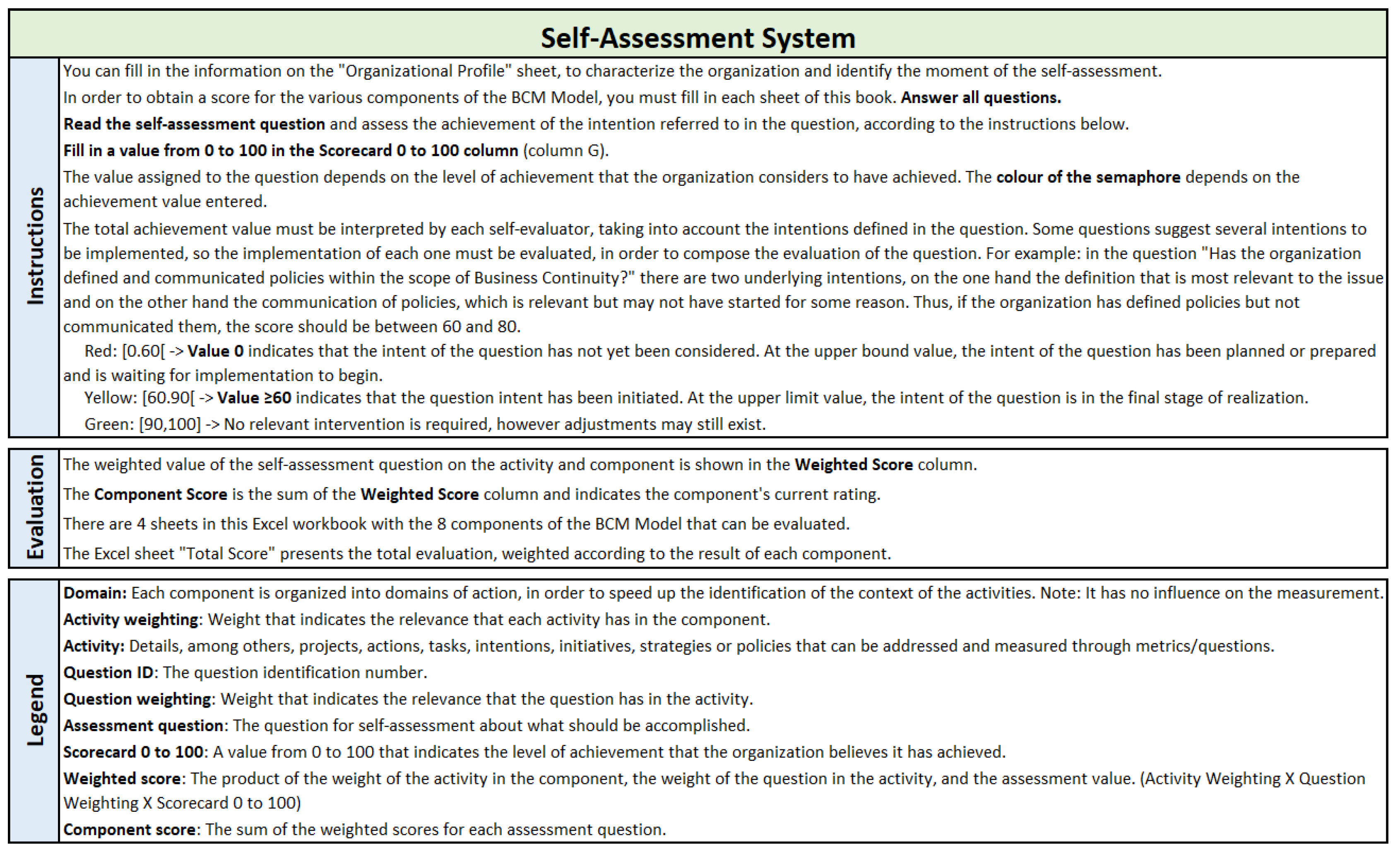

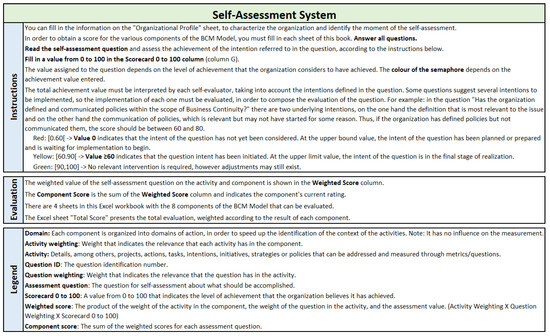

The Self-Assessment System tool provides instructions to assist the evaluator in understanding and interpreting the questions available, as depicted in Figure 3.

Figure 3. Self-Assessment System instructions.

The instructions assist the evaluator in understanding the range of values to use, what they mean, and how the Self-Assessment System is presented in the application tool.

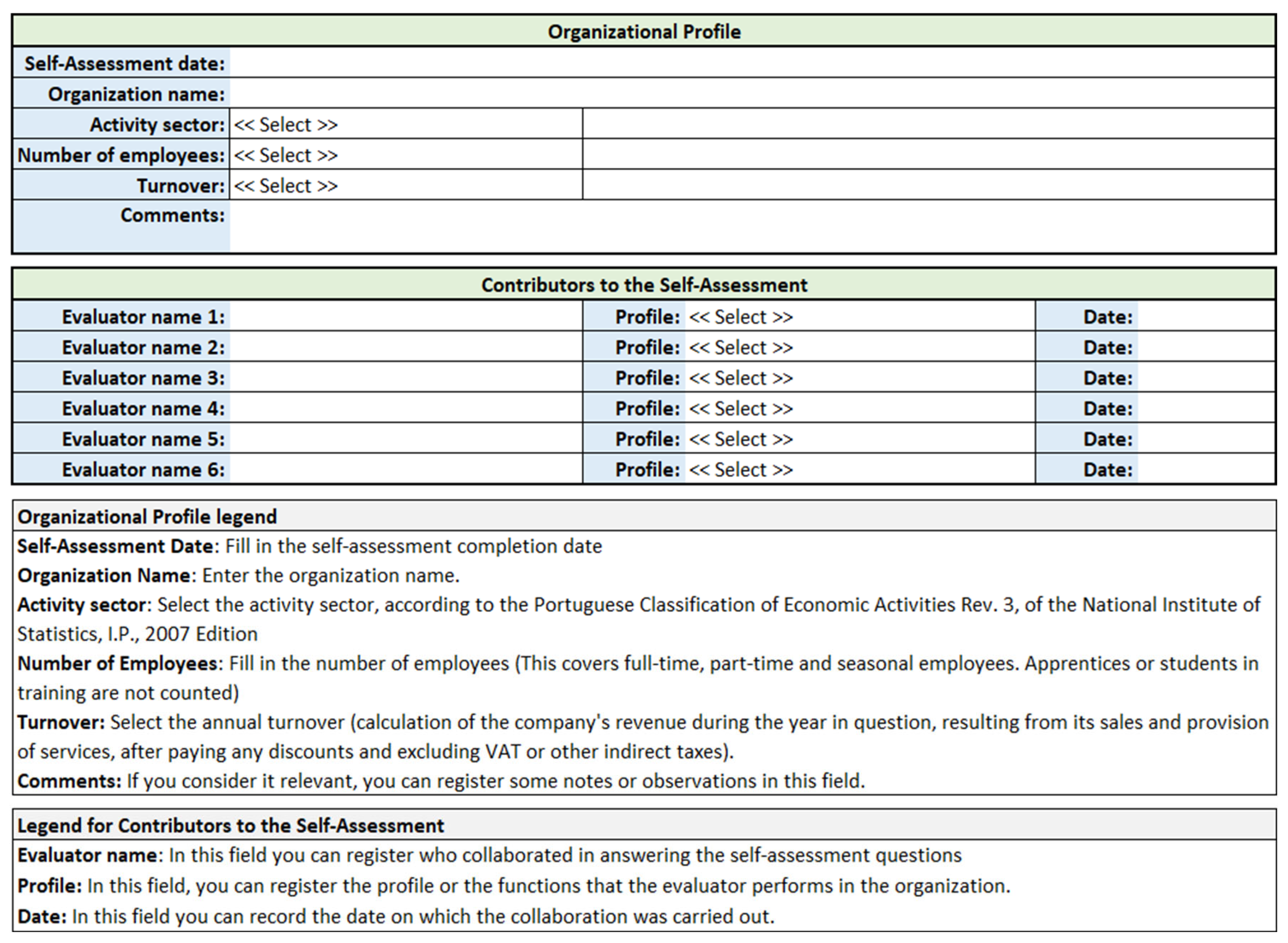

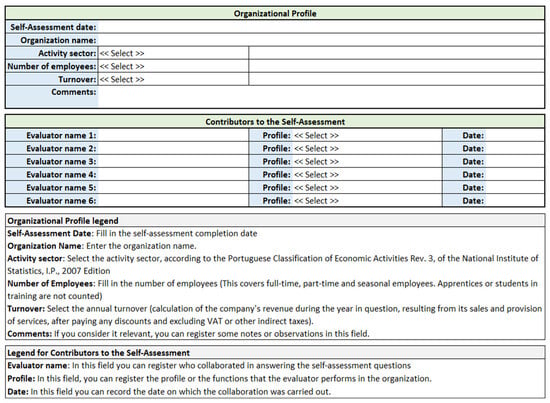

Figure 4 depicts the organization’s profile information and all the evaluators participating in the assessment.

Figure 4.

Self-Assessment System profile.

This information is relevant for describing the organization and maintaining a benchmarking dataset. The benchmark is primarily internal, comparing the current assessment with previous ones, but it can also be used for external benchmarking with other organizations.
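The internal benchmark described above can be sketched as a per-component comparison between two assessments. This is a minimal illustration, not the tool's implementation: the function name `benchmark_delta`, the dictionary layout, and all scores are hypothetical.

```python
def benchmark_delta(previous: dict[str, float], current: dict[str, float]) -> dict[str, float]:
    """Per-component change (current minus previous) for components present in both assessments."""
    return {name: current[name] - previous[name] for name in current if name in previous}


# Illustrative scores only; component names are taken from the BCM Model.
previous = {"Top Management Commitment": 55, "Manage Risk": 70}
current = {"Top Management Commitment": 65, "Manage Risk": 68}

delta = benchmark_delta(previous, current)
for name, change in delta.items():
    print(f"{name}: {change:+}")
```

A positive value indicates improvement since the previous assessment. The same comparison could be applied to another organization's scores for external benchmarking, assuming both used the same components and weightings.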

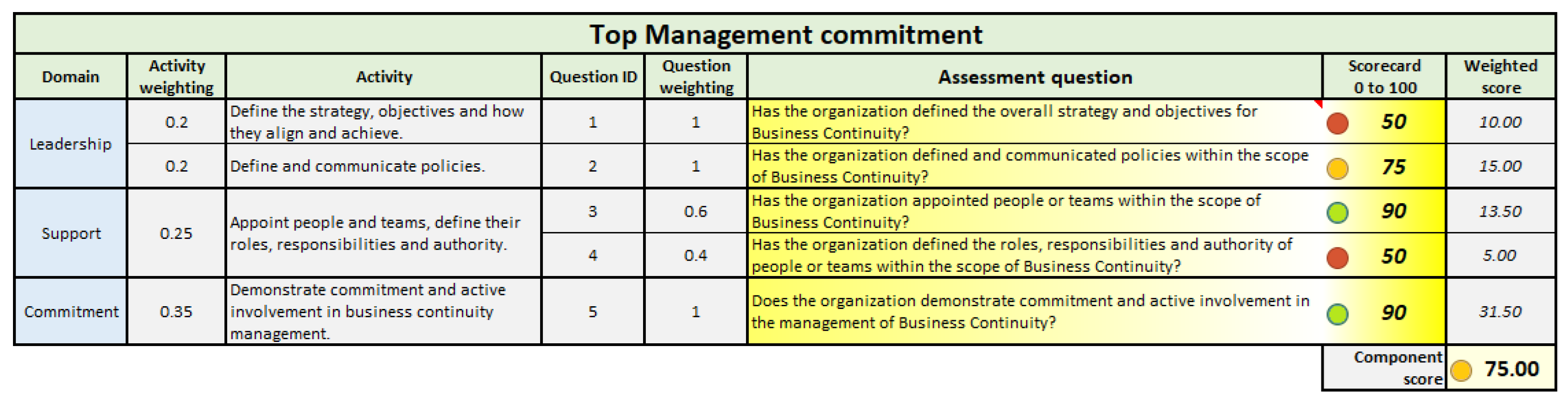

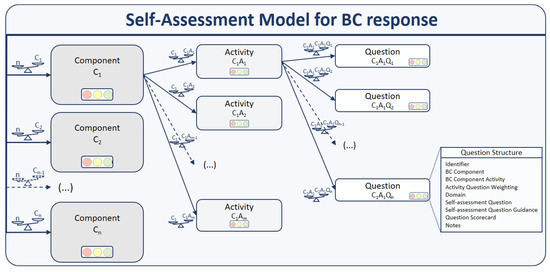

The total score will be the sum of each component’s weighted score, demonstrating compliance with the BCM Model. Figure 5 depicts the Self-assessment Methodology.

Figure 5.

The Self-Assessment Model for BC response.

Figure 5 depicts the question’s structure with nine attributes. Within an activity, each question has a weight. The activities are weighted within each component, which in turn is weighted, so that each level contributes to the organization’s total score. Components, activities, and questions can be added as the model evolves; this extensibility is represented by (…) in the dashed arrows in Figure 5.

Thus, the metrics structure concepts apply to the Self-Assessment Methodology. However, only a subset of the attributes is required for the self-assessment. The attributes considered in the Self-Assessment Methodology are presented in Table 1.

Table 1.

Question structure attributes used in the Self-Assessment Methodology.

The “Self-assessment Question Guidance” attribute in Table 1 indicates what is expected to be accomplished by the system user. Thus, this system assists the organization in understanding what has to be done within the BC scope, which is one of the research objectives.

Table 2 presents the protocol for assessing a component using the Self-assessment Methodology.

Table 2 shows the explanatory notes for each attribute:

Table 2.

Protocol for a BC component self-assessment.

- 1 *—The domain of the activity. It does not influence measurement.

- 2 *—The weighting (p) of activity A is referenced by pA. The sum of the weightings of all the essential activities in the component is 100.

- 3 *—Essential activities for the BCM Model component in the Self-assessment Methodology.

- 4 *—The question identification number.

- 5 *—The weighting (p) of question A1 in activity A. The sum of the question weightings for each activity is 100.

- 6 *—The self-assessment question no. 1 of activity A (A1), defined for domain X1. There may be multiple questions for a single activity.

- 7 *—The scorecard of the self-assessment question A1 (sA1). A value between 0 and 100 is accepted and is represented by the semaphore: red (<60), yellow (≥60 and <90), or green (≥90). The Self-Assessment System adopts the FAMMOCN maturity model, and the resulting score can be interpreted as an indicator of the organization’s relative maturity in BCM preparedness. The semaphore colors offer an intuitive view of performance:

Red (0–59) suggests the organization has significant gaps and should prioritize foundational actions such as raising awareness, allocating roles, and defining policies.

Yellow (60–89) indicates that key activities have been initiated but are not yet systematically integrated; organizations in this range should focus on standardizing processes, formalizing documentation, and increasing training and testing frequency.

Green (90–100) reflects a well-established and integrated BCMS; however, continuous improvement should still be pursued, with efforts toward optimization, performance tracking, and external validation.

These ranges are not rigid levels of maturity but serve as diagnostic indicators to help organizations tailor their next steps according to their current preparedness stage.

- 8 *—The result of the self-assessment question is the product of the activity weighting (pA), the question weighting (pA1), and the entered value (sA1), which expresses how far the intention defined in the self-assessment question has been achieved. The weighted score of the question is referenced by spA1.

- 9 *—The component score is the sum of the weighted results of each self-assessment question. This value assumes values between 0 and 100.
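The component protocol in the notes above can be sketched in a few lines of Python. The sketch assumes that both weightings are read as percentages (each divided by 100), since this is what keeps the component score between 0 and 100 when the weights sum to 100; the source does not state the normalization explicitly. The class and function names, and all numeric values, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Question:
    weight: float  # pA1: question weighting within its activity (sums to 100 per activity)
    score: float   # sA1: value entered by the evaluator, 0 to 100


@dataclass
class Activity:
    weight: float  # pA: activity weighting within the component (sums to 100 per component)
    questions: list[Question]


def semaphore(score: float) -> str:
    """Map a 0-100 score to red (<60), yellow (>=60 and <90), or green (>=90)."""
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    if score < 60:
        return "red"
    if score < 90:
        return "yellow"
    return "green"


def weighted_question_score(activity: Activity, question: Question) -> float:
    """spA1 = pA * pA1 * sA1, with both weights treated as percentages (assumption)."""
    return (activity.weight / 100) * (question.weight / 100) * question.score


def component_score(activities: list[Activity]) -> float:
    """Sum of the weighted results of every question in the component."""
    return sum(weighted_question_score(a, q) for a in activities for q in a.questions)


# Hypothetical component with two activities; all numbers are illustrative.
activities = [
    Activity(weight=60, questions=[Question(50, 80), Question(50, 40)]),
    Activity(weight=40, questions=[Question(100, 90)]),
]
score = component_score(activities)
print(round(score, 2), semaphore(score))
```

In this example the three weighted question scores are 24, 12, and 36, giving a component score of 72, which falls in the yellow range.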

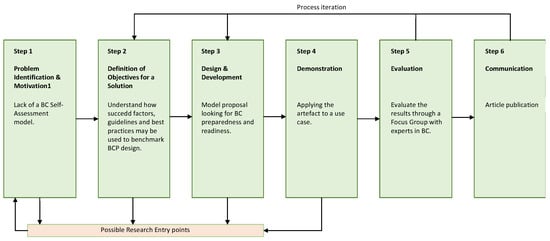

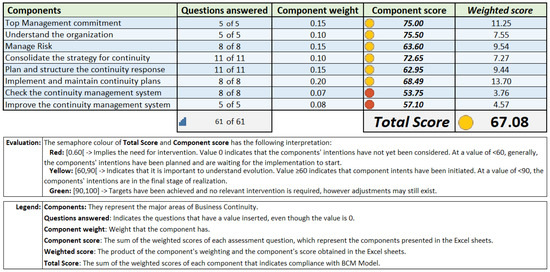

The self-assessment of multidisciplinary preparedness in the BC area follows a defined protocol. The total score achieved will be the sum of the weighted score values of each component, which reflects compliance with the BCM Model, as shown in Table 3.

Table 3.

Protocol for self-assessment of all components.

Table 3 provides explanatory notes on what is considered in order to obtain the total score:

- 1 *—The BCM Model component;

- 2 *—The number of questions answered out of the total of 61 questions defined. A question is considered answered if its value is between 0 and 100;

- 3 *—The weighting (p) of the CA component is referenced by pCA. The sum of the weightings of all components is 100;

- 4 *—The score of the component resulting from its assessment (score) (see Table 2). A value between 0 and 100 is accepted, which is represented by the semaphore: red (≥ 0 and < 60), yellow (≥ 60 and < 90), or green (≥ 90 and ≤ 100);

- 5 *—The result of the component of the self-assessment is the product of the component weighting (pCA) by the value of the component score (score). The weighted score of the component is referenced by spCA;

- 6 *—The total score is the sum of the weighted results of each component. This value assumes values between 0 and 100.
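The protocol for all components can be sketched in the same way. As before, this is an illustration under stated assumptions: the component weighting pCA is read as a percentage, an unanswered question is represented by `None`, and the function names, weights, and scores are hypothetical. Only three of the eight components are shown to keep the example short.

```python
from typing import Optional


def is_answered(value: Optional[float]) -> bool:
    """A question counts as answered if its value is between 0 and 100."""
    return value is not None and 0 <= value <= 100


def answered_count(answers: list[Optional[float]]) -> int:
    """Number of answered questions in a list of entered values."""
    return sum(1 for value in answers if is_answered(value))


def total_score(components: list[tuple[str, float, float]]) -> float:
    """Sum of spCA = pCA * score over all components, with pCA as a percentage (assumption)."""
    return sum((weight / 100) * score for _, weight, score in components)


# (component name, pCA, component score); weights and scores are illustrative.
components = [
    ("Top Management Commitment", 50, 80),
    ("Understand the Organization", 30, 60),
    ("Manage Risk", 20, 95),
]

print(answered_count([80, None, 0, 100]))
print(round(total_score(components), 2))
```

Here the weighted component results are 40, 18, and 19, so the total score is 77. Note that a value of 0 counts as an answer, whereas a missing value does not.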

5.4. Components

The BCM components of the Self-Assessment System were derived from an extensive Systematic Literature Review (SLR) of existing BCM frameworks and models. This comprehensive analysis identified key areas essential for effective BC planning and response. The SLR encompassed various studies, including those that explored trends in BC planning and the development of BCM maturity models [45]. For instance, recent research [79] analyzed the existing literature to identify BCM processes and maturity models, providing valuable insights into the structuring of BCM components.

Additionally, studies such as [80] discussed the need for a BCP framework to handle threats or disasters, highlighting the importance of structured components within BCM. These insights, among others, informed the selection and definition of the eight components and 118 activities within the BCM Model, ensuring a comprehensive approach to organizational preparedness and resilience.

The recent literature continues to emphasize the significance of structured BCM frameworks. Ostadi et al. [81] conducted an SLR to analyze organizational resilience, business continuity, and risk, contributing to the understanding of process resilience and continuity. These ongoing studies reinforce the relevance of the components identified in the BCM Model and support their application in current organizational contexts.

Therefore, the BCM Model encompasses relevant areas in BCM and defines eight components and 118 activities [44]. The strategic guidelines within the model are organized into components that must be addressed in the event of an interruption or disaster.

The demonstration and evaluation of the Self-Assessment Methodology revealed that a quick and efficient assessment, which results in a score and allows benchmarking with other organizations, is crucial to justify BC. It was determined that the initiation of the BC program and the ease of use of a BCM framework could be improved if each component included self-assessment questions. The questions should be clear and concise to interpret and should use a visual indicator to describe the purpose of the activity. Furthermore, a self-assessment was considered necessary for overall recognition of compliance and could increase the adoption of a BCM framework and the implementation of BC within an organization.

Each component is structured into operational domains to facilitate identifying the context of activities. Thus, each domain outlines the activities that detail the projects, actions, tasks, intentions, initiatives, strategies, or policies that can be addressed and assessed.

Hence, the activities with the highest relevance and added value were selected to represent the essential strategic guidelines defined in the model and emphasized by the Standards. This emphasis is reflected in the weight given to each activity for the BC component. Some activities may contain multiple self-assessment questions. In these cases, the relevance of each question is quantitatively distributed through the weighting attribute.
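Because relevance is distributed through weightings that must sum to 100 at each level (activities within a component, questions within an activity), a configuration check can catch inconsistencies before an assessment starts. The following is a hypothetical sketch; the dictionary layout and the name `weight_problems` are assumptions made for illustration.

```python
def weight_problems(component: dict[str, dict]) -> list[str]:
    """Return readable problems; an empty list means the weights are consistent."""
    problems = []
    activity_total = sum(activity["weight"] for activity in component.values())
    if abs(activity_total - 100) > 1e-9:
        problems.append(f"activity weights sum to {activity_total}, expected 100")
    for name, activity in component.items():
        question_total = sum(activity["question_weights"])
        if abs(question_total - 100) > 1e-9:
            problems.append(f"{name}: question weights sum to {question_total}, expected 100")
    return problems


# Illustrative component: Activity B's question weights are deliberately incomplete.
component = {
    "Activity A": {"weight": 70, "question_weights": [60, 40]},
    "Activity B": {"weight": 30, "question_weights": [50, 30]},
}

for problem in weight_problems(component):
    print(problem)
```

In this example the activity weights are consistent, and the only reported problem is that the question weights of Activity B sum to 80 instead of 100.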

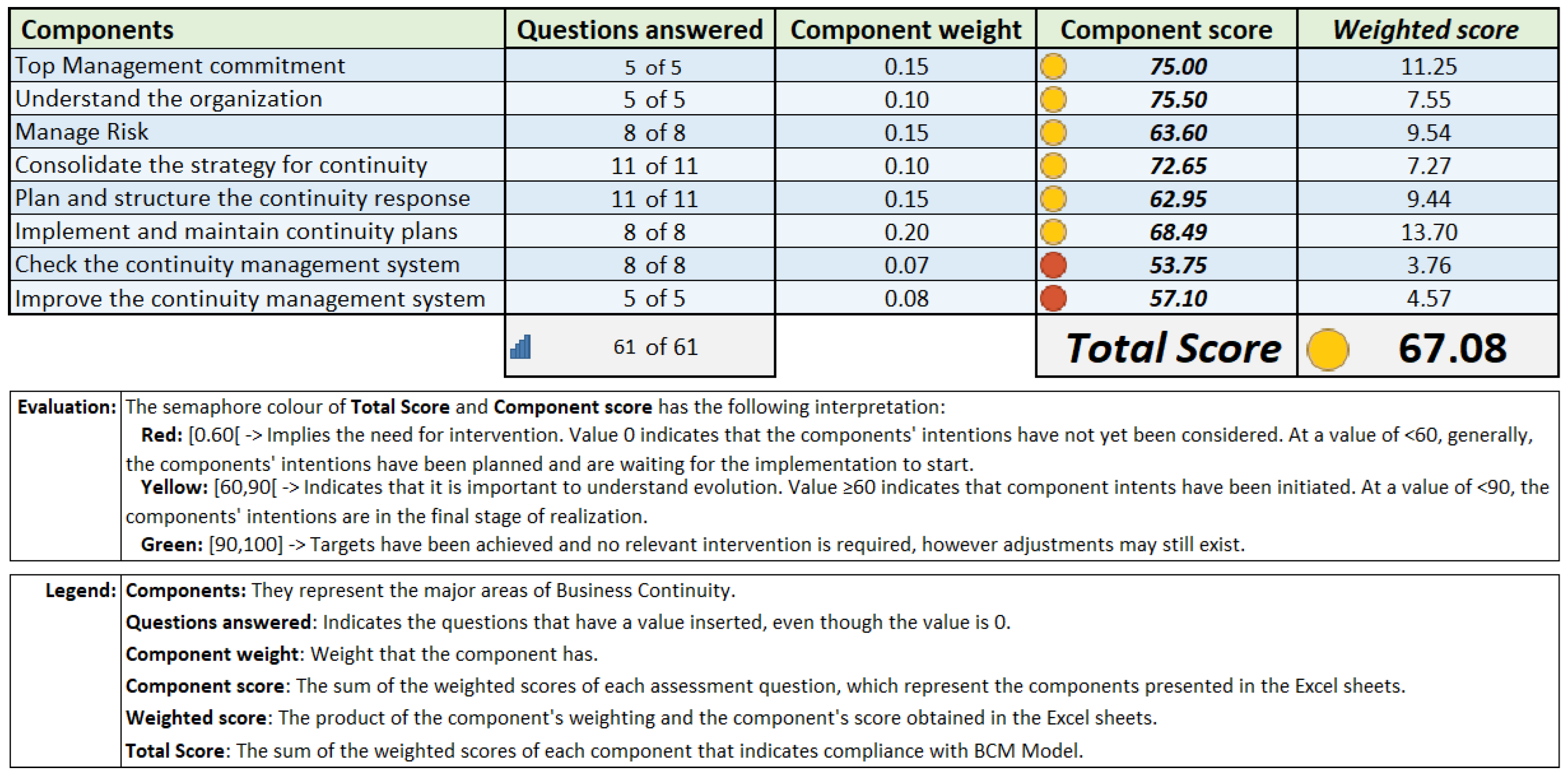

Figures 6 to 13 list the components of the Self-Assessment System and their related activities. In the cases presented, the questions of each component were filled with random values.

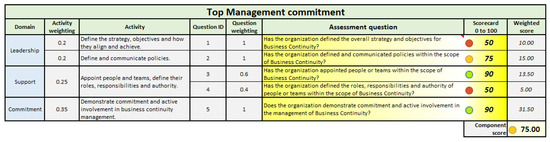

Figure 6.

Self-assessment questions for the Top Management Commitment component.

Figure 6 focuses on the “Top Management Commitment” component and continuous management activities that support the BCM program. Top management must demonstrate leadership and commitment and support BCMS activities.

Figure 6 summarizes Top Management’s responsibilities: define the strategy and objectives, define policies, appoint BC teams, ensure adequate resources, and demonstrate active involvement in the BCM.

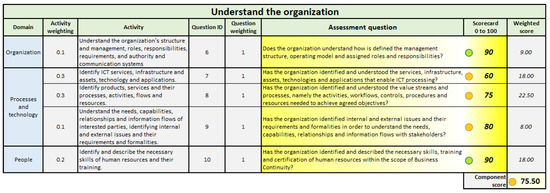

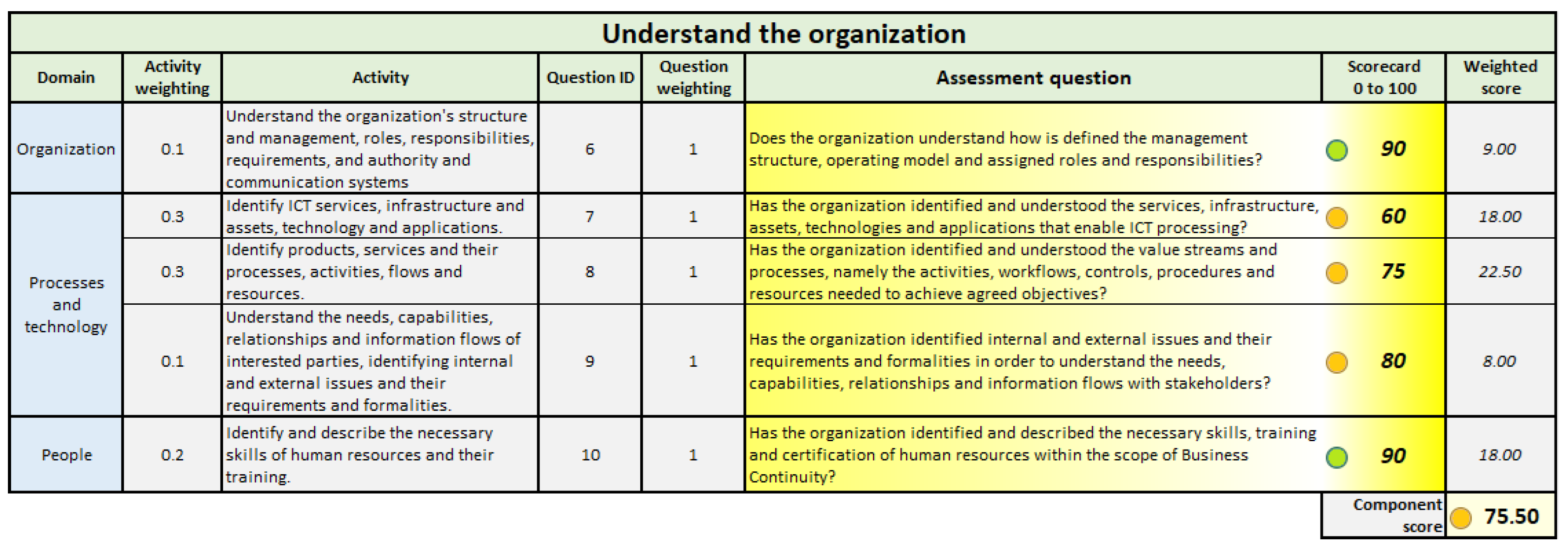

The “Understand the Organization” component in Figure 7 aims to determine which factors are relevant to the organization’s mission of delivering products or services and affect the expected BCMS results.

Figure 7.

Self-assessment questions for the Understand the Organization component.

As a summary of Figure 7, the organization must understand its organizational structure and culture, the products and services delivered and the related business processes, the information flows, and necessary technologies.

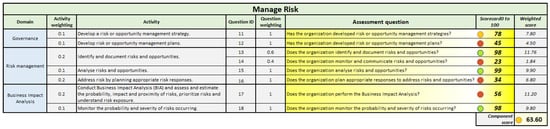

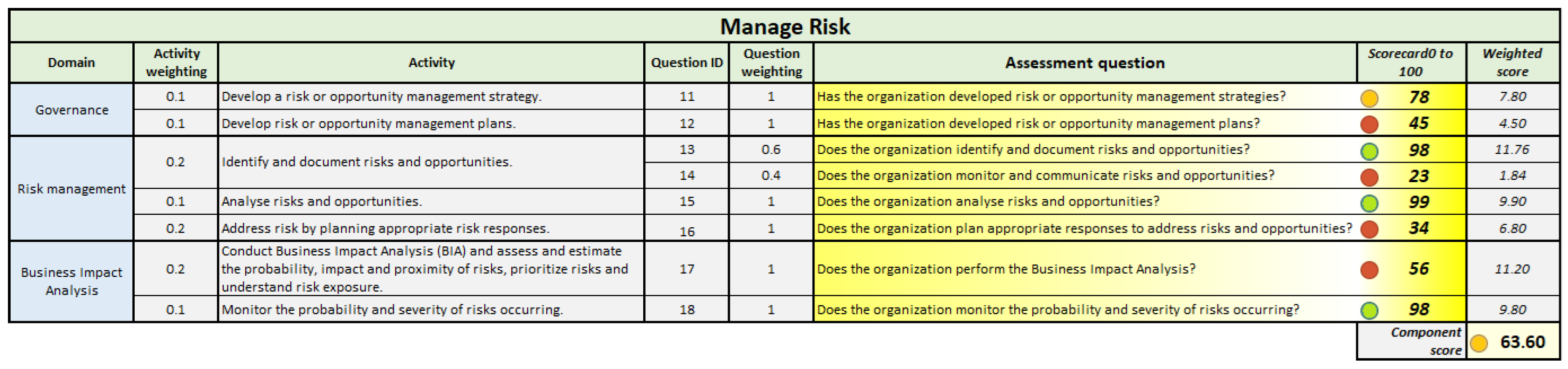

Figure 8 depicts the “Manage Risk” component, which aims to determine risks based on the outcomes of Understanding the Organization, assess the impact of risks and opportunities identified, and plan risk management according to the defined strategy.

Figure 8.

Self-assessment questions for the Manage Risk component.

As a summary of Figure 8, the organization must develop risk management strategies and plan its activity to cope with risks. Risks must be analyzed, evaluated for their business impact, and treated with the appropriate response that ensures continuity and predefined readiness.

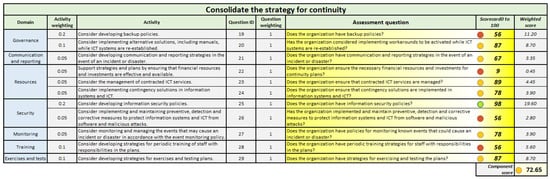

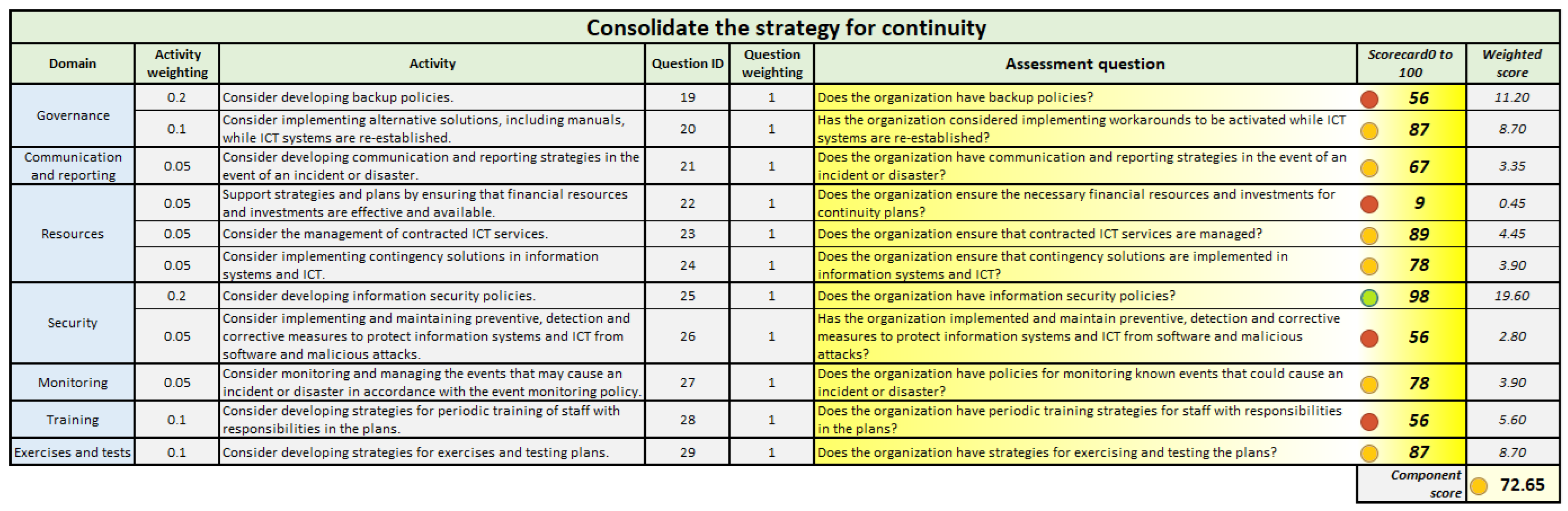

Figure 9 depicts the component “Consolidate the Strategy for Continuity”, which strives to establish strategies that allow the BC objectives to be met, following the continuity requirements and available resources.

Figure 9.

Self-assessment questions for the Consolidate the Strategy for Continuity component.

Figure 9 highlights how the organization should consider the strategy for its BCMS, the communication in case of an incident or disaster, and contingency strategies regarding the delivery of products and services. Staff assistance is relevant, as is facilities safety and maintenance. The ICT strategy and security must be established following the defined recovery times. This set of activities should integrate the development of strategies for training on continuity and plans. The organization should also consider strategies for exercises and tests to ensure the effectiveness and efficiency of preparedness, readiness, and responsiveness to disruptive events impacting the activity.

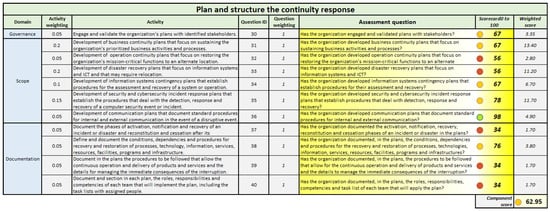

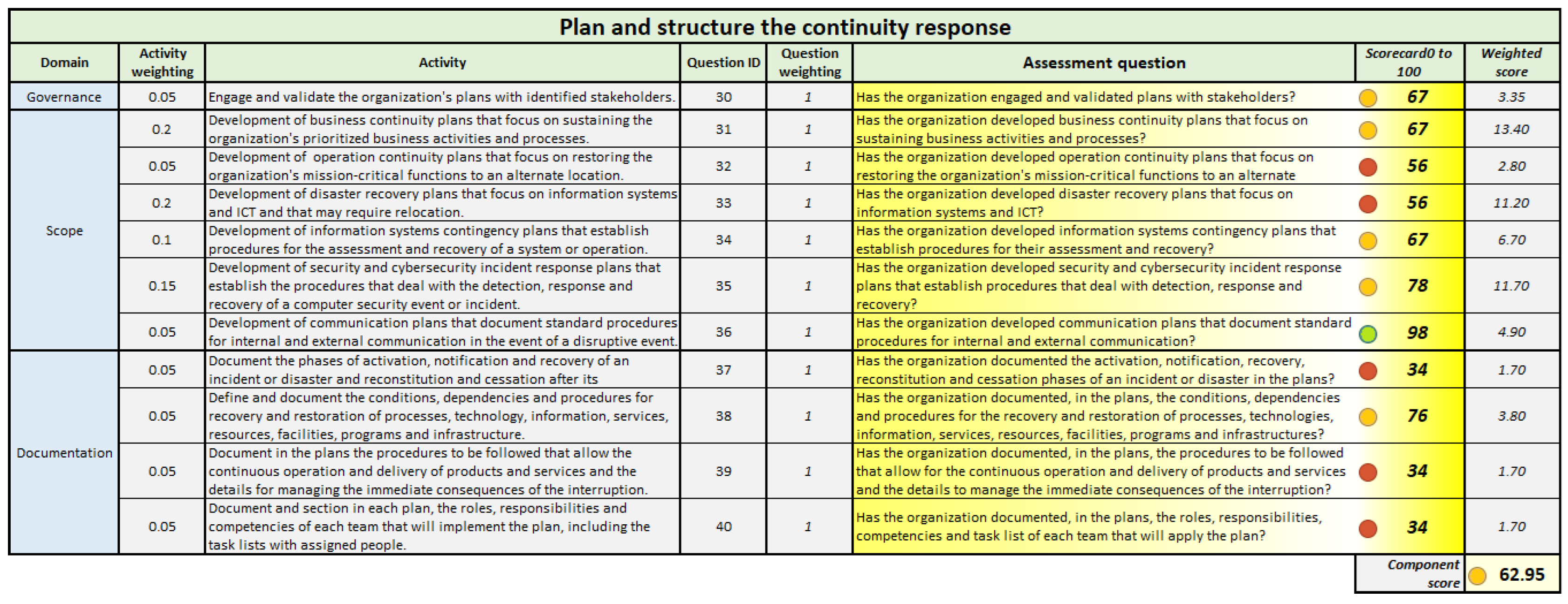

The “Plan and Structure the Continuity Response” component in Figure 10 aims to develop and document the plans and capacity required to execute the stated strategy and the BCM program.

Figure 10.

Self-assessment questions for the Plan and Structure the Continuity Response component.

To summarize Figure 10, the organization should develop and document the plans in the BC scope that it considers appropriate, according to the strategies defined and the BIA. The following procedures must be included in the documentation according to the incident or disaster stages.

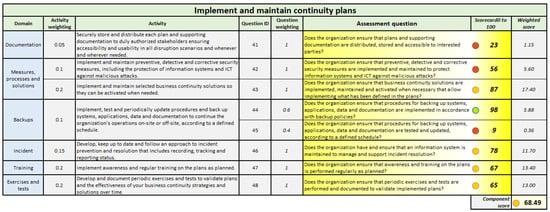

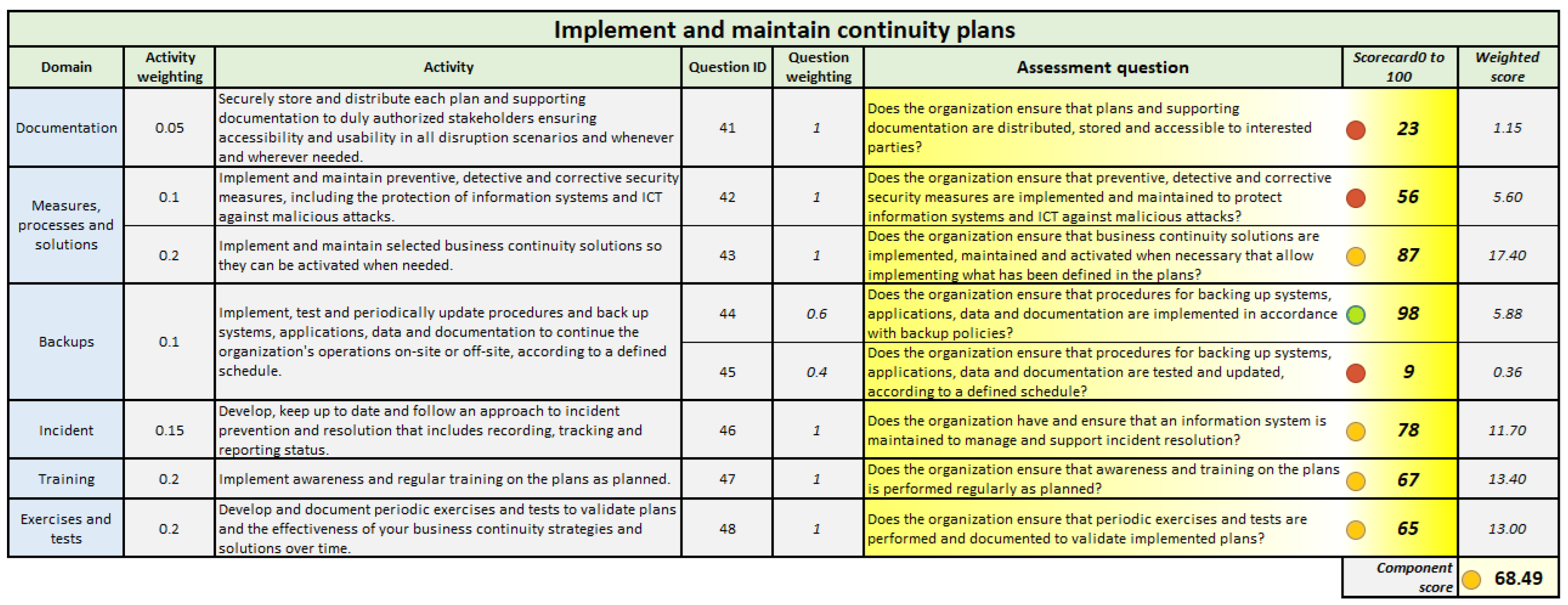

Figure 11 depicts the “Implement and Maintain Continuity Plans” component, aiming to implement the directives, actions, solutions, and processes required to accomplish the continuity objectives as planned.

Figure 11.

Self-assessment questions for the Implement and Maintain Continuity Plans component.

Summarizing Figure 11, the organization must implement continuity solutions that provide security, continuity, and compliance with the defined requirements, implement and maintain training within the BC scope, and perform exercises and tests as planned. Having the required resources available before, during, and after a disruption is essential.

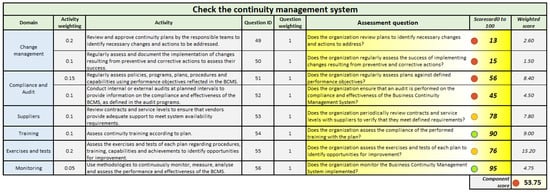

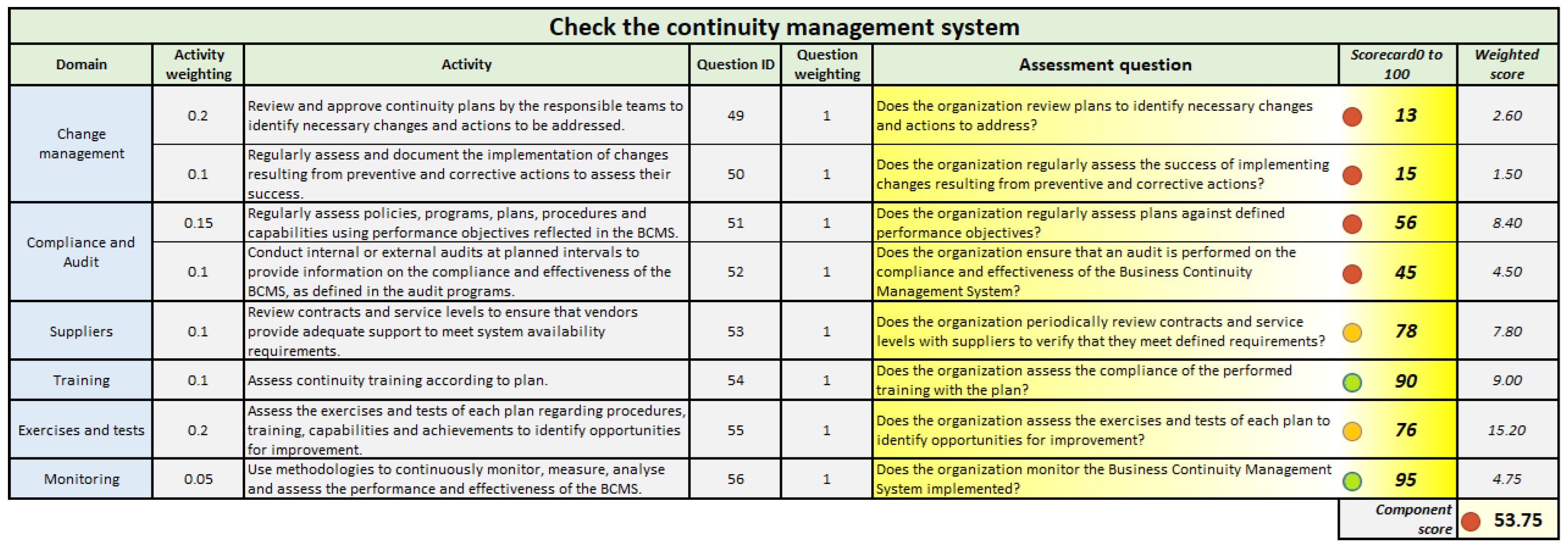

Figure 12 depicts the “Check the Continuity Management System” component that advises the organization to check the BCMS’s adequacy, effectiveness, and requirements.

Figure 12.

Self-assessment questions for the Check the Continuity Management System component.

As a summary of Figure 12, the organization should review the continuity plans to check compliance with the requirements. The changes, the training and its materials, the exercises, and the tests performed should be evaluated to check the adequacy and identify opportunities for improvement.

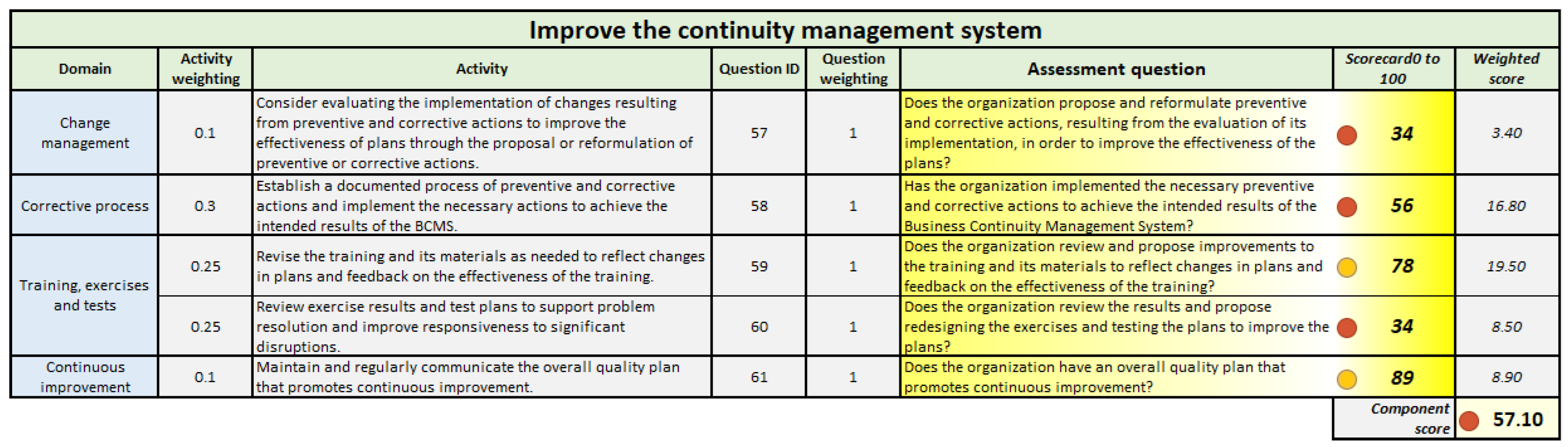

The component “Improve the Continuity Management System” in Figure 13 aims to ensure that the organization discovers opportunities for improvement, according to the checks performed, and implements the necessary actions to achieve the continuity and the BCMS objectives.

Figure 13.

Self-assessment questions for the Improve the Continuity Management System component.
