A New Method for Camera Auto White Balance for Portrait

Abstract

1. Introduction

2. Materials and Methods

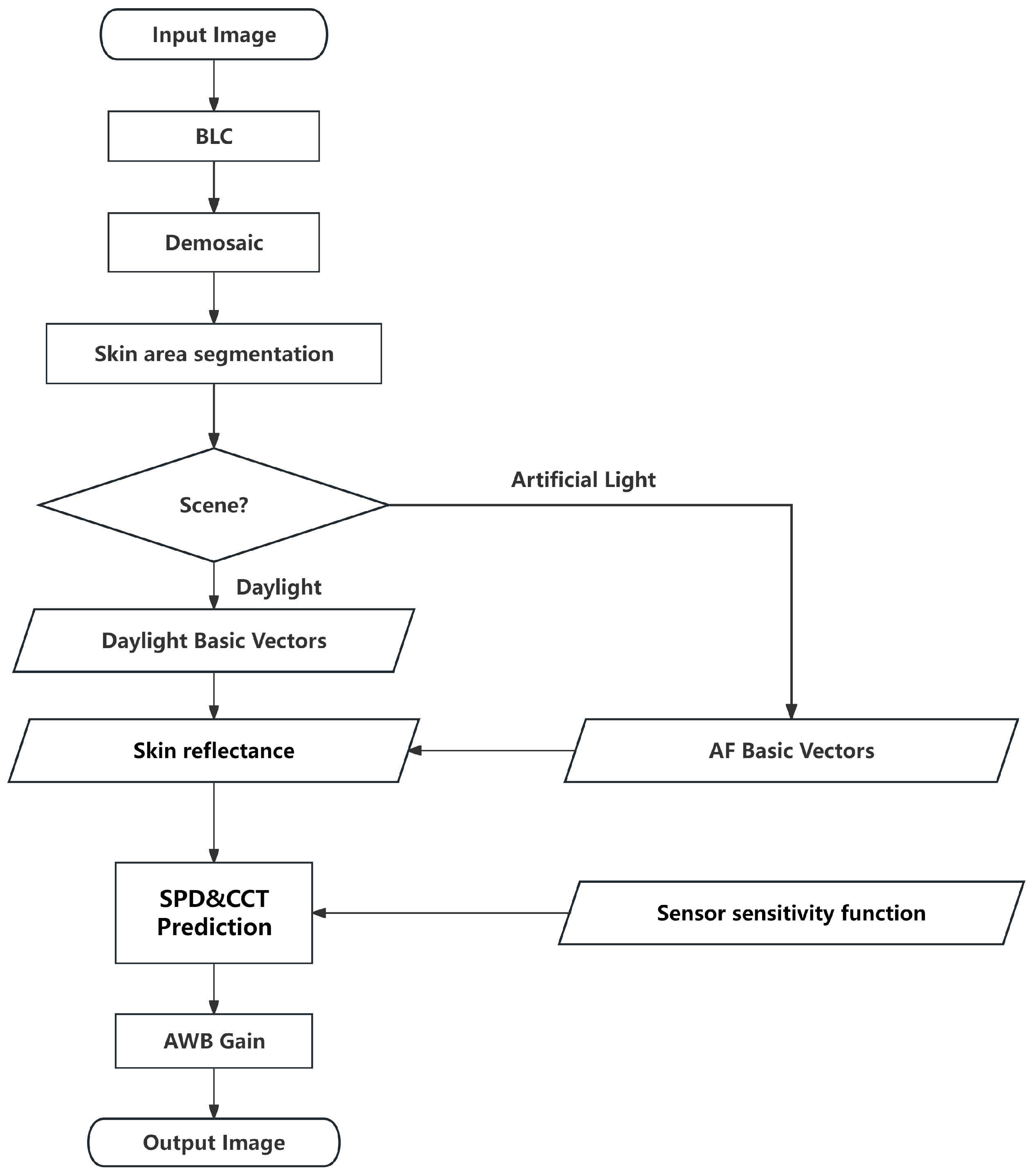

2.1. Theoretical Basis of SCR-AWB Algorithm

2.1.1. Spectral Estimation of Unknown Light Sources

2.1.2. From Predicted Spectra to CCT

2.1.3. From Predicted Spectra to Gain

2.2. Parameter Acquisition

2.2.1. Prior Information Acquisition

2.2.2. Image Information Acquisition

3. Experiments

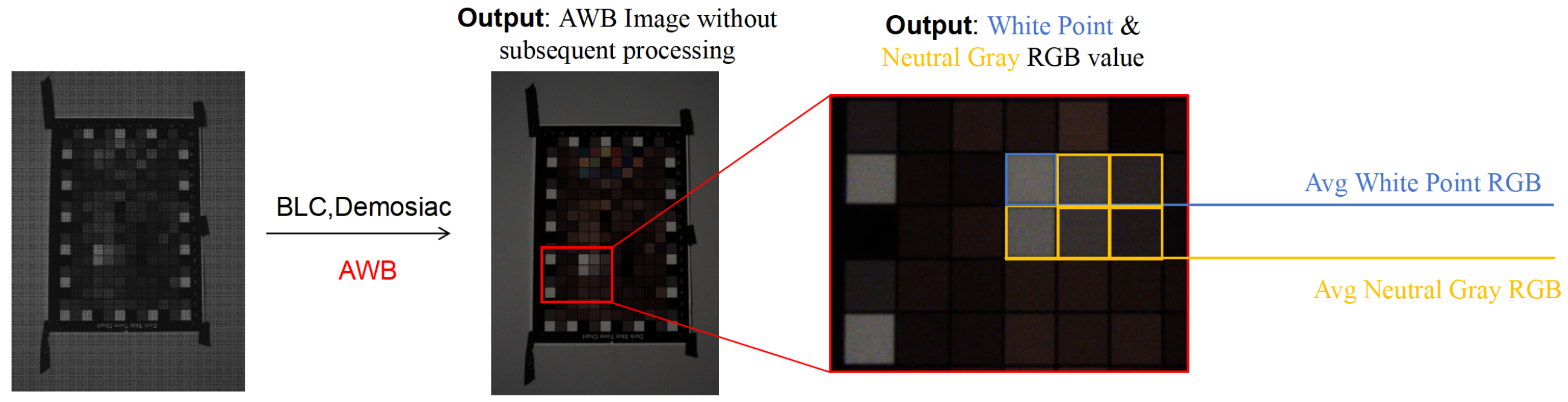

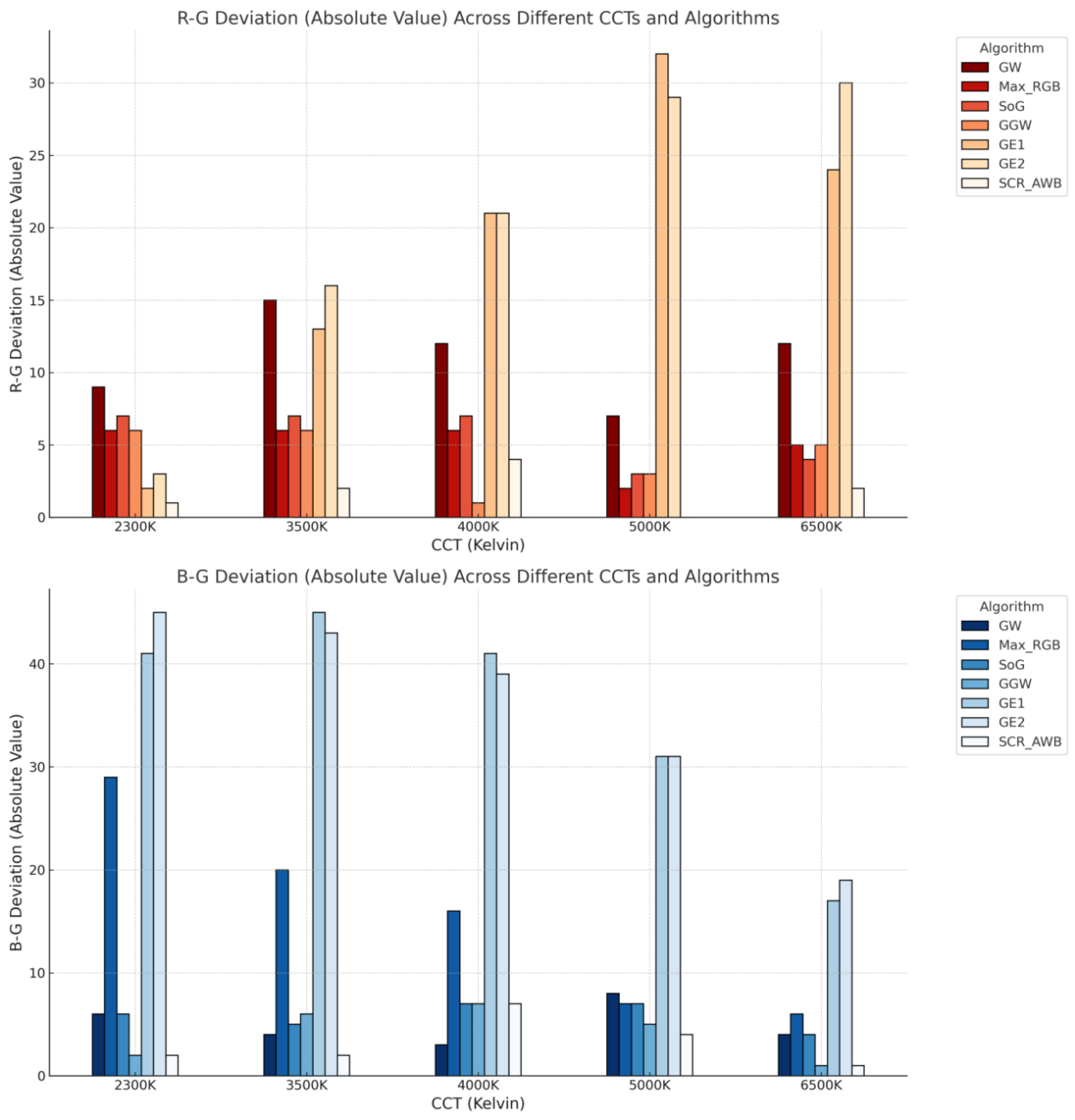

3.1. Experiment 1: Color Chart White Point and Neutral Gray Evaluation Under Different CCT Artificial Light Sources

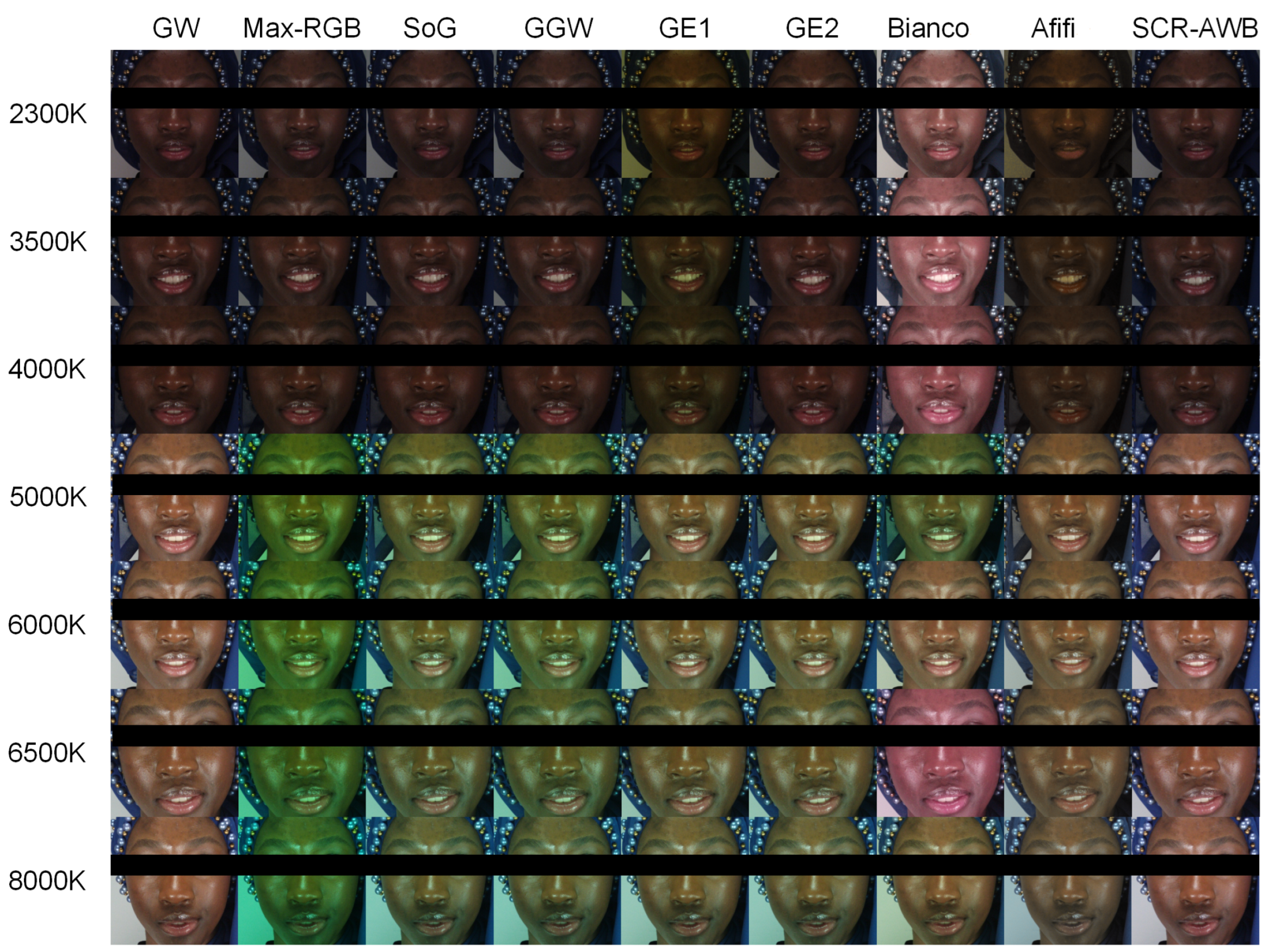

3.2. Experiment 2: Skin Color Reproduction Under Different CCT Artificial Light Sources

1. Comparison of SCR-AWB Algorithm Predicted CCT with Calibrated Laboratory CCT: As outlined in the methodology, the SCR-AWB algorithm predicts the SPD, which is then converted to CCT. White balance accuracy is assessed by calculating the difference between the algorithm-predicted CCT and the actual calibrated CCT, measured using a spectroradiometer (CS-2000, KONICA MINOLTA, Inc., Osaka, Japan). A smaller difference indicates a closer match between the predicted ambient light and the actual lighting conditions, and therefore better white balance performance.

2. Evaluation of AWB Results on sRGB Output for DCI-P3 Display: The SCR-AWB algorithm outputs both the CCT and the gain values for the R and B channels. These gain values are applied in the image processing pipeline to adjust white balance, and the final output is in sRGB format (JPEG). The other AWB algorithms used for comparison likewise modify only the R and B channel gains, so BLC, CCM, gamma correction, and all other processing steps are identical across algorithms and any difference in the output can be attributed to the white balance adjustment.
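The two evaluation steps above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the function names, the pixel layout (linear RGB triples in [0, 1]), the clipping, the use of an absolute difference, and the example numbers are all assumptions. Only two ideas come from the text: R and B are scaled by their gains while G is left untouched, and the predicted CCT is compared with the calibrated one.

```python
def apply_rb_gains(pixels, r_gain, b_gain):
    """Scale R and B of linear RGB pixels; G is left unchanged.

    `pixels` is a list of (r, g, b) tuples in [0, 1] (assumed layout).
    Results are clipped to 1.0 so the gains cannot push values out of range.
    """
    return [(min(r * r_gain, 1.0), g, min(b * b_gain, 1.0)) for r, g, b in pixels]


def cct_difference(predicted_cct, calibrated_cct):
    """Absolute difference in kelvin between predicted and calibrated CCT.

    Taking the absolute value is an assumption; the text only says "difference".
    """
    return abs(predicted_cct - calibrated_cct)


# Hypothetical gray patch with a warm cast (illustrative values only).
patch = [(0.50, 0.40, 0.25)]
balanced = apply_rb_gains(patch, r_gain=0.8, b_gain=1.6)
```

With these made-up gains the three channels of the patch become equal, which is what a correctly white-balanced neutral surface should look like before the remaining pipeline stages are applied.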

4. Results

5. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| MDPI | Multidisciplinary Digital Publishing Institute |
| DOAJ | Directory of open access journals |
| TLA | Three letter acronym |
| LD | Linear dichroism |

References

1. Bar-Haim, Y.; Saidel, T.; Yovel, G. The role of skin colour in face recognition. Perception 2009, 38, 145–148.
2. Henderson, T. Aesthetic photography and its role in perception. J. Vis. Arts 2017, 15, 200–215.
3. Vo, P. Human-computer interaction and color adaptation. Comput. Percept. 2010, 22, 78–89.
4. Zapryanov, R. White balance correction and its impact on color fidelity. Opt. Eng. 2012, 45, 302–310.
5. Zhang, L. Challenges in automatic white balance algorithms for mobile devices. Mob. Imaging J. 2012, 5, 89–102.
6. Chen, G.; Zhang, X. A method to improve robustness of the gray world algorithm. In Proceedings of the 4th International Conference on Computer, Mechatronics, Control and Electronic Engineering, Hangzhou, China, 28–29 September 2015; Atlantis Press: Dordrecht, The Netherlands, 2015; pp. 243–248.
7. Sharma, G.; Trussell, H.J. Digital color imaging. IEEE Trans. Image Process. 1997, 6, 901–932.
8. Finlayson, G.D.; Trezzi, E. Shades of gray and colour constancy. In Proceedings of the 12th Color and Imaging Conference, Scottsdale, AZ, USA, 9–12 November 2004; Society for Imaging Science and Technology: Springfield, VA, USA, 2004; pp. 37–41.
9. Van De Weijer, J.; Gevers, T.; Gijsenij, A. Edge-based color constancy. IEEE Trans. Image Process. 2007, 16, 2207–2214.
10. Funt, B.; Shi, L. The rehabilitation of MaxRGB. In Proceedings of the 18th Color Imaging Conference, San Antonio, TX, USA, 8–12 November 2010; Simon Fraser University: Burnaby, BC, Canada, 2010.
11. Land, E.H. The retinex theory of color vision. Sci. Am. 1977, 237, 108–129.
12. Rotman, D.; Harding, P. DOE Greenbook—Needs and Directions in High-Performance Computing for the Office of Science; Technical Report; Lawrence Livermore National Laboratory (LLNL): Livermore, CA, USA, 2002.
13. Bailey, M.; Hilton, A.; Guillemaut, J.Y. Finite aperture stereo. Int. J. Comput. Vis. 2022, 130, 2858–2884.
14. Gottumukkal, R.; Asari, V. Skin color constancy for illumination invariant skin segmentation. In Proceedings of the Image and Video Communications and Processing 2005, San Jose, CA, USA, 16–20 January 2005; SPIE: Bellingham, WA, USA, 2005; Volume 5685, pp. 969–976.
15. Bianco, S.; Schettini, R. Color constancy using faces. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 65–72.
16. Bianco, S.; Schettini, R. Adaptive color constancy using faces. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1505–1518.
17. Liang, J.; Hu, X.; Zhou, W.; Xiao, K.; Wang, Z. Deep Learning-Based Exposure Asymmetry Multispectral Reconstruction from Digital RGB Images. Symmetry 2025, 17, 286.
18. Xu, W.; Wei, L.; Yi, X.; Lin, Y. Spectral Image Reconstruction Using Recovered Basis Vector Coefficients. Photonics 2023, 10, 1018.
19. Lu, Y.; Xiao, K.; Pointer, M.; He, R.; Zhou, S.; Nasseraldin, A.; Wuerger, S. The International Skin Spectra Archive (ISSA): A multicultural human skin phenotype and colour spectra collection. Sci. Data 2025, 12, 487.
20. Bianco, S.; Cusano, C.; Schettini, R. Single and multiple illuminant estimation using convolutional neural networks. IEEE Trans. Image Process. 2017, 26, 4347–4362.
21. Choi, H.-H. DRANet: Deep Learning-Based Automatic White Balancing Approach to CVCC. IEEE Access 2025, 13, 36714–36722.
22. Ershov, E.; Savchik, A.; Semenkov, I.; Banić, N.; Koščević, K.; Subašić, M.; Belokopytov, A.; Terekhin, A.; Senshina, D.; Nikonorov, A.; et al. Illumination estimation challenge: The experience of the first 2 years. Color Res. Appl. 2021, 46, 705–718.
23. Li, B.; Qin, H.; Xiong, W.; Li, Y.; Feng, S.; Hu, W.; Maybank, S. Ranking-based color constancy with limited training samples. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 12304–12320.
24. Zhang, H.Y.; Fang, Y.; Wu, J.H.; Wang, W.Z.; Zou, N.Y. Deep learning of color constancy based on object recognition. In Proceedings of the 2023 15th International Conference on Computer Research and Development (ICCRD), Hangzhou, China, 10–12 January 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 215–219.
25. Liang, J.; Xiao, K.; Pointer, M.R.; Wan, X.; Li, C. Spectra estimation from raw camera responses based on adaptive local-weighted linear regression. Opt. Express 2019, 27, 5165–5180.
26. Barnard, K.; Finlayson, G.; Funt, B. Color constancy for scenes with varying illumination. Comput. Vis. Image Underst. 1997, 65, 311–321.
27. Li, C.; Cui, G.; Melgosa, M.; Ruan, X.; Zhang, Y.; Ma, L.; Xiao, K.; Luo, M.R. Accurate method for computing correlated color temperature. Opt. Express 2016, 24, 14066–14078.
28. Robertson, A.R. Computation of correlated color temperature and distribution temperature. JOSA 1968, 58, 1528–1535.
29. Zhu, J.; Xie, X.; Liao, N.; Zhang, Z.; Wu, W.; Lv, L. Spectral sensitivity estimation of trichromatic camera based on orthogonal test and window filtering. Opt. Express 2020, 28, 28085–28100.
30. Huynh, C.P.; Robles-Kelly, A. Recovery of spectral sensitivity functions from a colour chart image under unknown spectrally smooth illumination. In Proceedings of the 22nd International Conference on Pattern Recognition, Stockholm, Sweden, 24–28 August 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 708–713.
31. Bianco, S.; Cusano, C.; Schettini, R. Color constancy using CNNs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Boston, MA, USA, 7–12 June 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 81–89.
32. Buzzelli, M.; Zini, S.; Bianco, S.; Ciocca, G.; Schettini, R.; Tchobanou, M.K. Analysis of biases in automatic white balance datasets and methods. Color Res. Appl. 2023, 48, 40–62.
33. Akbarinia, A.; Rodríguez, R.G.; Parraga, C.A. Colour constancy: Biologically-inspired contrast variant pooling mechanism. arXiv 2017, arXiv:1711.10968.
34. Gao, C.; Wang, Z.; Xu, Y.; Melgosa, M.; Xiao, K.; Brill, M.H.; Li, C. The von Kries chromatic adaptation transform and its generalization. Chin. Opt. Lett. 2020, 18, 033301.
35. Afifi, M.; Brown, M.S. Deep white-balance editing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1397–1406.
36. Li, Y.Y.; Lee, H.C. Auto white balance by surface reflection decomposition. JOSA A 2017, 34, 1800–1809.
37. Tan, X.; Lai, S.; Wang, B.; Zhang, M.; Xiong, Z. A simple gray-edge automatic white balance method with FPGA implementation. J. Real-Time Image Process. 2015, 10, 207–217.
38. Houser, K.W.; Wei, M.; David, A.; Krames, M.R.; Shen, X.S. Review of measures for light-source color rendition and considerations for a two-measure system for characterizing color rendition. Opt. Express 2013, 21, 10393–10411.
39. Xiao, K.; Yates, J.M.; Zardawi, F.; Sueeprasan, S.; Liao, N.; Gill, L.; Li, C.; Wuerger, S. Characterising the variations in ethnic skin colours: A new calibrated database for human skin. Skin Res. Technol. 2017, 23, 21–29.
40. He, R.; Xiao, K.; Pointer, M.; Bressler, Y.; Liu, Z.; Lu, Y. Development of an image-based measurement system for human facial skin colour. Color Res. Appl. 2022, 47, 288–300.
41. Wang, M.; Xiao, K.; Wuerger, S.; Cheung, V.; Luo, M.R. Measuring human skin colour. In Proceedings of the 23rd Color and Imaging Conference, Darmstadt, Germany, 19–23 October 2015; Society for Imaging Science and Technology: Springfield, VA, USA, 2015; pp. 230–234.

| Algorithm | Mean | Median | Best 25% | Worst 25% | Maximum |
|---|---|---|---|---|---|
| GW ** | 3.20 ± 1.10 | 3.14 | 2.58 | 3.98 | 4.19 |
| Max-RGB | 4.79 ± 4.07 | 3.75 | 2.70 | 6.14 | 9.82 |
| SoG * | 2.41 ± 1.03 | 2.59 | 2.17 | 3.03 | 3.16 |
| GGW * | 1.96 ± 0.50 | 1.92 | 1.70 | 2.00 | 2.60 |
| GE1 ** | 10.45 ± 2.92 | 10.58 | 9.27 | 12.04 | 13.01 |
| GE2 ** | 10.86 ± 3.15 | 10.87 | 8.95 | 11.36 | 14.99 |
| Bianco’s [20] | 3.45 ± 3.99 | 2.31 | 1.73 | 3.57 | 8.90 |
| SCR-AWB | 0.88 ± 0.55 | 0.87 | 0.50 | 0.98 | 1.57 |

| Algorithm | Recovery Angle Error: Mean | Recovery: Median | Recovery: Best 25% | Recovery: Worst 25% | Recovery: Maximum | Algorithm | Reproduction Angle Error: Mean | Reproduction: Median | Reproduction: Best 25% | Reproduction: Worst 25% | Reproduction: Maximum |
|---|---|---|---|---|---|---|---|---|---|---|---|
| GW ** | 2.76 ± 0.65 | 2.39 | 1.66 | 3.18 | 8.15 | GW | 1.89 ± 0.89 | 1.73 | 1.30 | 2.39 | 2.86 |
| Max-RGB ** | 3.84 ± 1.48 | 2.65 | 0.92 | 5.40 | 11.77 | Max-RGB | 3.81 ± 4.95 | 2.52 | 0.76 | 5.40 | 9.98 |
| SoG ** | 1.97 ± 0.42 | 1.76 | 1.39 | 2.54 | 4.63 | SoG * | 1.62 ± 0.85 | 1.43 | 1.35 | 1.89 | 2.63 |
| GGW ** | 1.98 ± 0.50 | 1.38 | 1.10 | 2.53 | 5.68 | GGW | 1.85 ± 0.50 | 1.86 | 1.27 | 2.48 | 2.48 |
| GE1 ** | 11.07 ± 1.61 | 10.49 | 8.38 | 13.66 | 19.08 | GE1 ** | 9.73 ± 4.62 | 8.52 | 6.61 | 13.66 | 13.71 |
| GE2 ** | 10.94 ± 1.68 | 10.51 | 7.13 | 12.44 | 18.05 | GE2 * | 10.21 ± 5.80 | 10.51 | 6.18 | 11.41 | 17.24 |
| Bianco’s [20] ** | 1.73 ± 0.40 | 1.58 | 1.01 | 2.31 | 4.36 | Bianco’s [20] | 1.93 ± 1.20 | 1.87 | 1.84 | 1.91 | 3.39 |
| Afifi’s [35] ** | 8.13 ± 2.99 | 5.59 | 2.47 | 13.66 | 22.71 | Afifi’s [35] | 8.84 ± 10.68 | 5.19 | 2.47 | 15.23 | 20.52 |
| SCR-AWB | 1.16 ± 0.23 | 1.08 | 0.83 | 1.29 | 3.01 | SCR-AWB | 0.91 ± 0.43 | 0.95 | 0.63 | 1.12 | 1.35 |
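The text preserved here does not spell out how the two error measures in the table above are computed. The sketch below assumes the definitions commonly used in the color constancy literature: the recovery angular error is the angle between the estimated and ground-truth illuminant RGB vectors, and the reproduction angular error is the angle between the reproduced white (ground truth divided channel-wise by the estimate) and the achromatic axis (1, 1, 1). The function names are hypothetical, and the paper may use a different variant.

```python
import math


def _angle_deg(u, v):
    """Angle in degrees between two 3-vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))


def recovery_angular_error(estimate, ground_truth):
    """Angle between estimated and ground-truth illuminant RGB (assumed definition)."""
    return _angle_deg(estimate, ground_truth)


def reproduction_angular_error(estimate, ground_truth):
    """Angle between the reproduced white and the gray axis (assumed definition)."""
    reproduced_white = [g / e for g, e in zip(ground_truth, estimate)]
    return _angle_deg(reproduced_white, (1.0, 1.0, 1.0))
```

Both measures are invariant to the overall brightness of the illuminant vectors, so an estimate that differs from the ground truth only by a scale factor scores an error of zero under either definition.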

| Scene | Mean | Median | Best 25% | Worst 25% | Maximum |
|---|---|---|---|---|---|
| 2300 K | 97 | 93 | 51 | 101 | 252 |
| 3500 K | 63 | 60 | 15 | 109 | 132 |
| 4000 K | 52 | 35 | 24 | 75 | 114 |
| 5000 K | 149 | 147 | 141 | 169 | 215 |
| 6000 K | 231 | 138 | 65 | 383 | 560 |
| 6500 K | 264 | 213 | 170 | 288 | 542 |
| 8000 K | 381 | 299 | 208 | 435 | 1326 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhou, S.; Xiao, K.; Li, C.; Lai, P.; Luo, H.; Sun, W. A New Method for Camera Auto White Balance for Portrait. Technologies 2025, 13, 232. https://doi.org/10.3390/technologies13060232
