Abstract

We present a novel approach to reinforcement learning (RL) specifically designed for fail-operational systems in critical safety applications. Our technique incorporates disentangled skill variables, significantly enhancing the resilience of conventional RL frameworks against mechanical failures and unforeseen environmental changes. This innovation arises from the imperative need for RL mechanisms to sustain uninterrupted and dependable operations, even in the face of abrupt malfunctions. Our research highlights the system’s ability to swiftly adjust and reformulate its strategy in response to sudden disruptions, maintaining operational integrity and ensuring the completion of tasks without compromising safety. The system’s capacity for immediate, secure reactions is vital, especially in scenarios where halting operations could escalate risks. We examine the system’s adaptability in various mechanical failure scenarios, highlighting its effectiveness in maintaining safety and functionality in unpredictable situations. Our research represents a significant advancement in the safety and performance of RL systems, paving the way for their deployment in safety-critical environments.

1. Introduction

In artificial intelligence, reinforcement learning (RL) is a critical technique that allows agents to learn and improve their actions through interaction with their environment [1]. This method is increasingly vital in various robotic areas and crucial for navigating complex real-world challenges [2].

The primary challenge in RL applications is safety assurance. While RL is effective in many cases, it often struggles to foresee or mitigate risks in novel or unpredictable situations [3,4,5]. In particular, when faced with unanticipated test data, the neural network may exhibit excessive confidence in its predictions [6,7]. In critical tasks like autonomous driving, such overconfidence can lead to severe failures [8].

To address this, RL systems have integrated fail-safe mechanisms for handling exceptional circumstances. These mechanisms switch the system to a safe state when an error occurs, preventing further damage or risk and avoiding catastrophic failures under any operating condition [9,10,11]. International standards such as ISO 26262 [12] also mandate this, requiring fail-safe features that provide protective responses to functional failures.

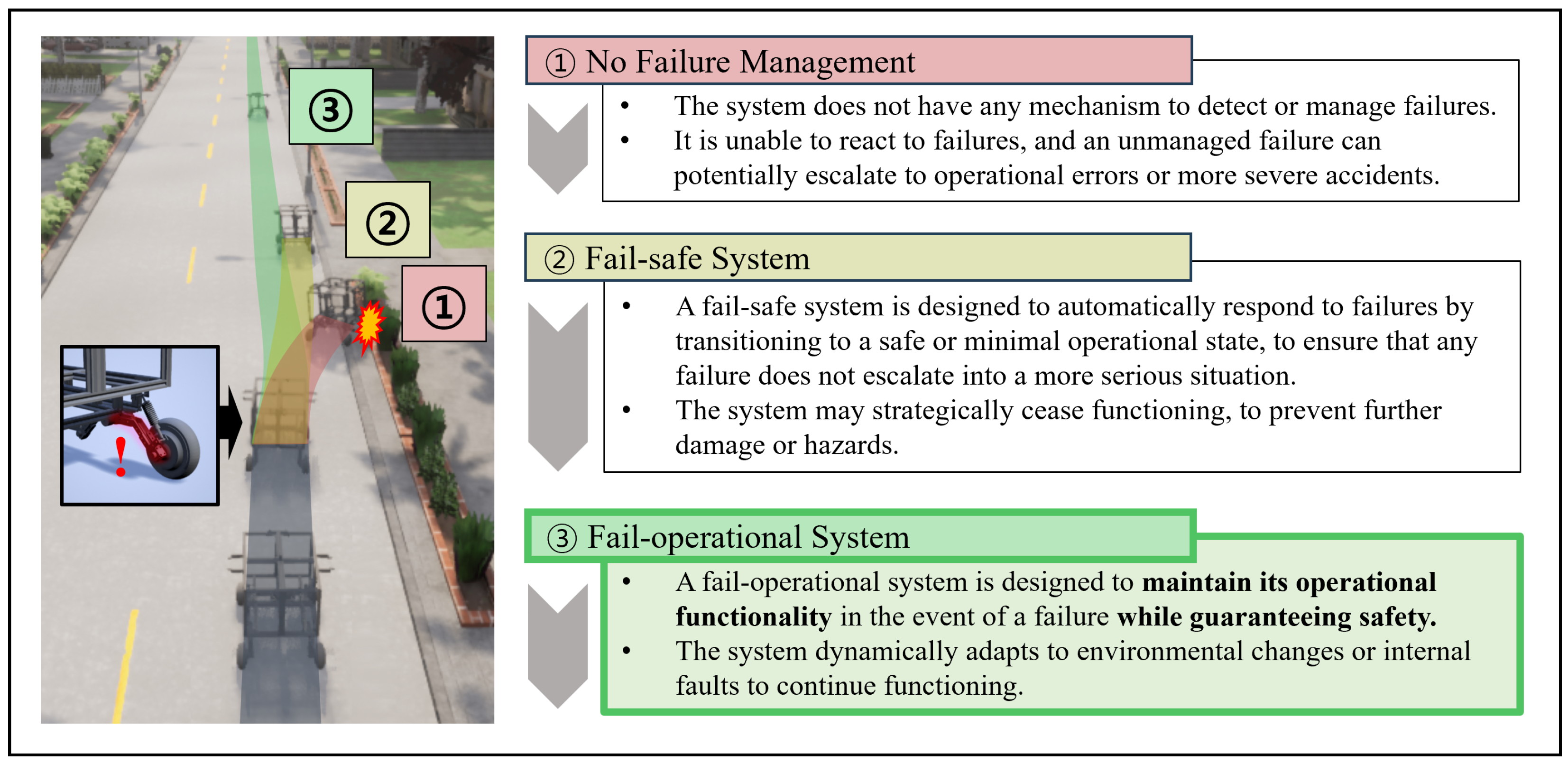

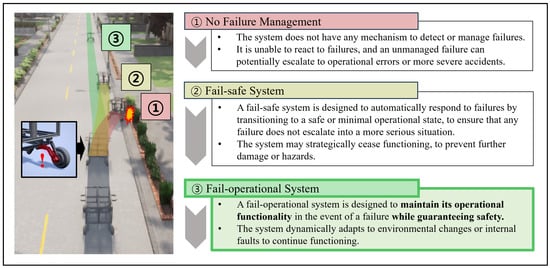

However, for dynamic driving tasks in autonomous vehicles or delivery robots, a simple fail-safe system for functional safety might not be sufficient for overall driving safety. A fail-safe mechanism typically prevents catastrophic failures by transitioning the system into a safe state or using a fallback policy, often halting operations completely upon detecting a fault. Some system failures call for more sophisticated responses. For instance, on a highway, safety measures such as stopping or waiting for human intervention can themselves be hazardous. In contrast, fail-operational systems ensure continued operation with minimal loss of functionality, even during critical failures [11]. Figure 1 gives a comparative overview of failure management systems.

Figure 1.

Comparative overview of failure management systems.

Conventional fail-operational architectures, particularly in safety-critical domains such as aerospace and autonomous driving, often rely on structured Fault Detection, Isolation, and Recovery (FDIR) pipelines. Notably, FDIRO [13] extends this paradigm by incorporating an optimization step after recovery to ensure the reconfigured system remains contextually effective. Adapted for autonomous vehicles, this strategy typically employs low-latency failure detection and isolation, followed by system reconfiguration to ensure safety constraints are met. The subsequent optimization phase adjusts component placement (e.g., computation node assignments) based on runtime context to enhance efficiency.

Additionally, hierarchical fallback architectures, such as those presented in [14], propose tiered control and inference strategies. Depending on system confidence and environmental uncertainty, these systems switch between high-performance and low-risk models. Such approaches emphasize system-level redundancy and architectural fallback mechanisms rather than the policy-level adaptability at the core of our approach.

Moreover, redundancy-based techniques, such as analytical redundancy, are commonly adopted in conventional fail-operational systems. For instance, Simonik et al. [15] demonstrate steering angle estimation for automated driving using analytical redundancy to maintain vehicle functionality in the event of sensor failure. These methods ensure fault tolerance using estimation models to replicate failed sensor outputs and maintain control continuity.

In contrast, our contribution introduces a learning and reasoning model that implements a dual-skills approach, distinguishing between ‘task skill’ and ‘actor skill’. The ‘task skill’ component involves high-level planning, such as determining the vehicle’s intended driving path or destination. The ‘actor skill’ governs low-level control actions based on the vehicle’s current mechanical state and environment. This separation decouples high-level objectives from low-level execution, which keeps the control modular and makes it easier to accommodate system changes or faults.

Our prior work [16] introduced a skill discovery algorithm that learns morphology-aware latent variables without direct compensation. This algorithm adapts to unseen environmental changes, showing zero-shot inference capabilities for morphological alterations. Building on this, we developed a fail-operational system with real-time fault detection and response planning. In case of significant errors due to sensor or system failures, the system updates the actor skill to maintain continuous operation.

The proposed method underscores the significance of skill-based learning in enhancing RL system resilience and reliability. By creating systems that aptly respond to mechanical failures and adapt to changing scenarios, we explore new safety and efficiency avenues in RL for critical systems like autonomous driving, where failure costs are exceptionally high and intolerable.

2. Related Works

2.1. Safe Reinforcement Learning

Safe Reinforcement Learning (SRL) is a specialized branch of RL that goes beyond the traditional goal of maximizing rewards, including adherence to specific safety constraints within its learning objectives. It emphasizes integrating these constraints into the RL framework, with attention to factors like expected return, risk metrics, and potentially hazardous areas within the Markov Decision Process (MDP) framework [17,18].

A common strategy for addressing SRL challenges is to adjust the policy optimization phase of classic RL algorithms. This adaptation aims to balance the pursuit of task-related rewards with observing safety constraints. Techniques like trust region policy optimization [19,20], Lagrangian relaxation [21,22,23], and the construction of Lyapunov functions [24,25] have been investigated for this purpose.

SRL also involves limiting the policy’s exploration to safer zones within the MDP. This is performed via Recovery RL [17,26,27] or Shielding Mechanisms [28,29], which act as preventive measures against the agent’s venture into risky states, thereby maintaining exploration within safe parameters.

Moreover, model-based RL contributes significantly to SRL by facilitating safe exploration and control. It often incorporates Model Predictive Control (MPC) [30], allowing real-time optimization of control tasks with embedded safety constraints, such as state restrictions [31,32,33] or behavioral shields [29,34].

2.2. Fail-Operational Systems

Fail-operational systems are designed to maintain core functionalities even in partial failures—a critical requirement in safety-critical domains such as autonomous driving and aerospace. Traditional fail-operational architectures rely on structured Fault Detection, Isolation, and Recovery (FDIR) pipelines. The extended FDIRO model incorporates an optimization step into the standard FDIR procedure, dynamically reallocating system resources (e.g., computation node assignments) based on contextual information to enhance operational effectiveness after recovery [13].

Hierarchical fallback architectures present another common fail-operational strategy, in which control is tiered according to performance and risk levels. As introduced by Polleti et al. [14], such systems switch between high-performance and conservative models based on system confidence or environmental uncertainty. These approaches offer robustness by relying on predefined control transitions and behavioral backups.

Additionally, analytical redundancy is widely employed to compensate for sensor-level failures. For example, Simonik et al. [15] propose a method for estimating steering angles using redundant models when direct sensor readings are unreliable or unavailable. This approach ensures system continuity by synthesizing missing inputs from other available signals.

While effective, these conventional methods depend heavily on handcrafted redundancy, explicit fallback logic, or architecture-level reconfiguration, which can limit adaptability in highly dynamic or unexpected failure scenarios.

2.3. Skill-Based Reinforcement Learning

Skill learning in the context of RL includes a variety of techniques and approaches aimed at improving the policy’s adaptability and functionality, allowing the agent to adapt and fine-tune for unseen tasks more efficiently [35,36]. Historical algorithms such as SKILLS [37] and PolicyBlocks [38] pioneered the concept of skill learning. These learned skills are expressed in various forms, such as sub-policies, often referred to as options [39,40,41], and sub-goal functions [42,43]. More recent developments include embedding skills in continuous space through stochastic latent variable models [44], which concisely represent a wide range of skills [45,46,47].

Unsupervised skill discovery is an essential aspect of skill learning and is helpful in environments where it is challenging to design explicit rewards or where reward signals are sparse. This approach maximizes the mutual information between skills and states to derive meaningful skills [48,49]. Various algorithms utilize mutual information as an intrinsic reward, optimizing through reinforcement learning [50,51,52], while other methods learn a transition model and use model predictive control over downstream tasks [53,54].

However, mutual information-based approaches have limitations, especially in covering a broader region of the state space [49]. Recognizing these limitations, several alternative methodologies have been proposed. Lipschitz-constrained Skill Discovery [55] stands out in that it focuses on mapping the skill space to the state space and maximizing the Euclidean travel distance within the state space. Unsupervised goal-conditioned RL learns goals corresponding to diverse reaching policies [56,57], and Controllability-Aware Skill Discovery [49] aims to make agents continuously move to hard-to-control states.

In our proposed method, we introduce the new concept of separated skill discovery and specialize each skill space in a specific domain. This specialization is designed to make skills more targeted and effective in specific areas. It is expected to broaden the scope that each skill can effectively cover and improve its adaptability and applicability.

2.4. Disentangled Skill Discovery

In prior research [16], we unveiled an innovative skill discovery algorithm that delineates skill domains to acquire knowledge of morphology-sensitive latent variables without direct adjustments. This algorithm is adept at adjusting to unanticipated morphological changes in the environment, showcasing the ability to perform zero-shot inference for morphological adaptations by seamlessly responding to these variations.

The algorithm’s foundation lies in two key elements: the ‘task skill’ and the ‘actor skill’. The ‘task skill’ pertains to overarching objectives such as charting the vehicle’s desired route or destination. It steers the vehicle toward achieving its objectives. Conversely, the ‘actor skill’ governs precise driving maneuvers based on the vehicle’s instantaneous state and its interaction with the environment. The system divides the agent’s operational expertise into two separate latent variables, each shaped through two specialized variational autoencoders (VAEs) [44].

Drawing from unsupervised skill discovery methodologies [52,53,54], notably the maximization of mutual information, the task VAE is designed to create as varied geometric paths as possible. Meanwhile, the action VAE learns the necessary actions for task execution by analyzing state and action pairings, encapsulating this information into an actor latent variable.

The system includes the ‘sampler’ and the ‘explorer’, both crucial to learning. The sampler, influenced by information-centric strategies [53,54], generates novel trajectories by maximizing the mutual information between the geometric state space and the latent space. The explorer’s role is to enact the sampler’s directives. The training environment employs multiple agents, each with unique physical attributes and an explorer policy custom-fitted to it. This arrangement ensures that the task learners discern and assimilate the behavioral patterns specific to each agent model.

While this algorithm has proven effective in adapting to morphological changes and exhibited zero-shot inference prowess for such modifications, we recognized a critical shortfall: the urgency of detecting and reacting to malfunctions in real time is paramount for ensuring safety. To address this, this study has led to the creation of an exhaustive fail-operational system. This system is meticulously designed to react immediately to abrupt environmental shifts, preserving the continuity of essential functions even amidst component malfunctions.

3. Methodology

3.1. Skill-Based Fail-Operational System

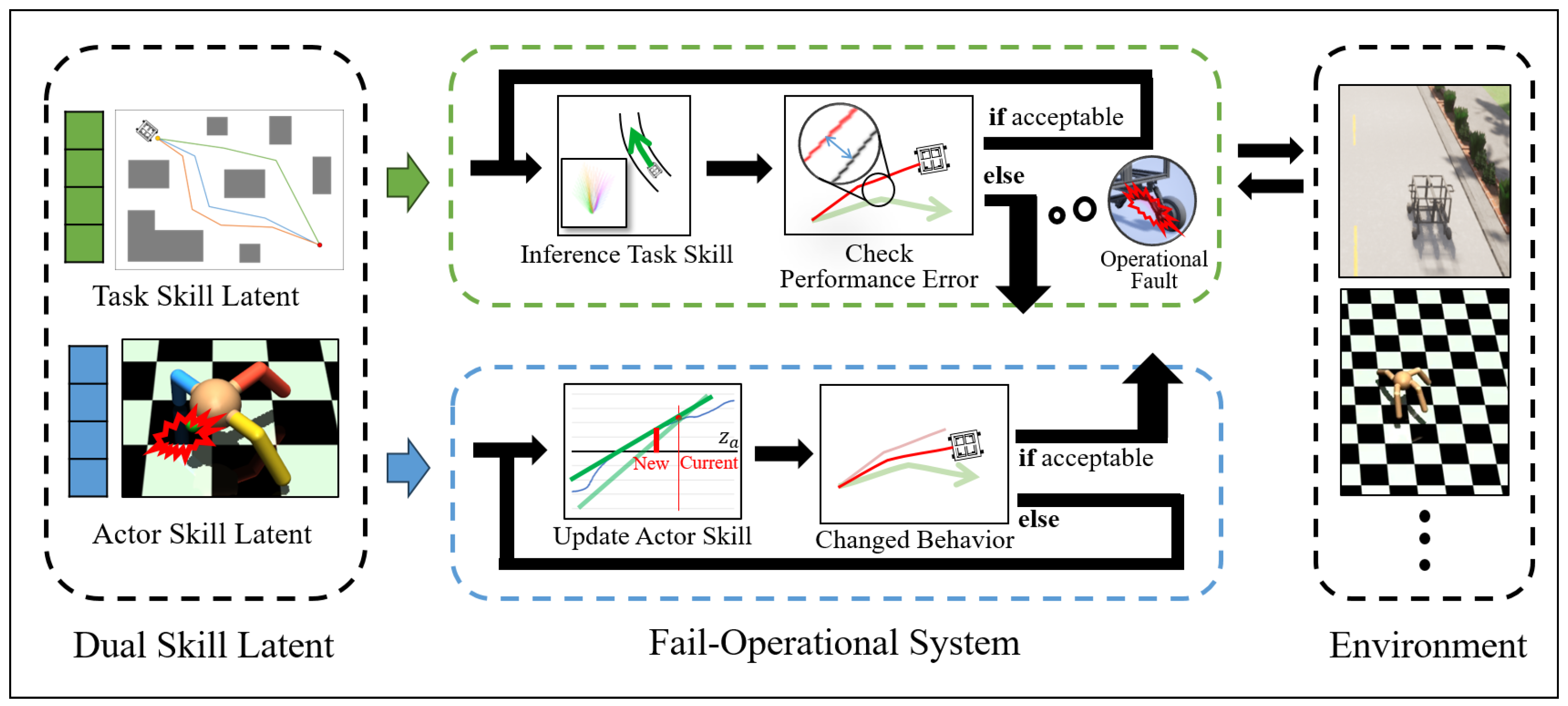

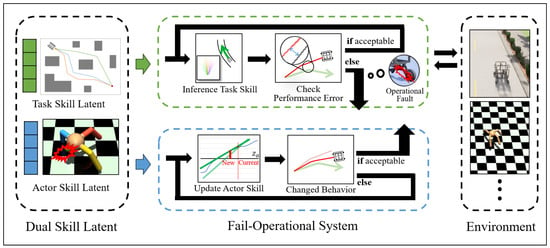

This study proposes a fail-operational system that can cooperate with RL, focusing on safety and adaptability. The system detects errors and adjusts its behavior in real time to maintain stable operation. Figure 2 is a conceptual diagram of the workflow of the proposed system.

Figure 2.

Conceptual diagram of the inference strategy of the proposed system.

We propose a fail-operational system that responds appropriately to mechanical problems when they occur and maintains stable driving. This innovative learning and reasoning model implements a dual-skills approach, distinguishing between the ‘task skill’ and ‘actor skill’.

The ‘task skill’ component is responsible for essential goals such as determining the vehicle’s intended driving path or destination. It gives the vehicle the direction it needs to move toward its goal. On the other hand, the ‘actor skill’ is an element that directs specific driving actions according to the vehicle’s current state and surrounding environment. The actor skill embeds the physical control needed to follow the trajectory generated by the task skill under the current mechanical condition of the system.

During operation, the system monitors the deviations or anomalies from expected performance. If the deviation remains within predefined safety thresholds, the ‘actor skill’ is maintained, ensuring the vehicle continues driving as expected. However, if a sensor malfunction or drive system defect causes a discrepancy, the system actively responds to the failure. Unlike traditional fallback or redundancy mechanisms, the system updates its ‘actor skill’ to re-calibrate its driving method to maintain stability without relying on predefined redundant components.

3.2. Dual Skill Latents

This approach formulates two distinct, disentangled latent variables that encapsulate different aspects of the agent’s operational knowledge. These variables define the partitioned latent space, which is constructed with two dedicated variational autoencoders [44] (the task VAE and the action VAE).

Following the principles of unsupervised skill discovery [52,54,58], particularly the maximization of mutual information, the task VAE learns to generate diverse geometric paths that comprehensively represent the feasible trajectories required for operational flexibility. The action VAE uses state-action pairs to learn the explorer’s actions for performing the task and stores this information as the actor latent.

The following sections explain how each component is trained. Hyperparameters and constant values are given in detail in Appendix A.

3.2.1. Task Skill Learner and Sampler

The task VAE generates an array of coordinates representing the agent’s movements. Both the input and output of the task VAE consist of an array of geometric locations, and each array spans frame length T. The operation system strategically chooses the skill latent to provide the agent’s immediate path, enabling decision-making to achieve the task.

The task encoder is designed to identify valid motion trajectories and encode this knowledge within the task skill latent. At the same time, the task decoder reproduces the trajectories discovered during exploration. The loss function for the task VAE is derived from the Evidence Lower Bound (ELBO) [59], with the prior set to a standard Gaussian distribution. Additionally, to mitigate potential bias and overfitting during training, we apply KL-divergence regularization within the VAE framework.
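As a minimal sketch of an ELBO-style loss of this kind, the snippet below combines a squared reconstruction error over path coordinates with the closed-form KL divergence between a diagonal Gaussian posterior and a standard Gaussian prior. The function names, the squared-error reconstruction term, and the `kl_weight` parameter are assumptions made for illustration; they are not taken from the original implementation.

```python
import math


def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, diag(exp(log_var))) || N(0, I)), summed over latent dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))


def task_vae_loss(path, reconstructed_path, mu, log_var, kl_weight=1.0):
    """Negative ELBO up to constants (assumed form): reconstruction error plus weighted KL.

    path and reconstructed_path are equal-length lists of (x, y) locations.
    """
    reconstruction = sum(
        (x - xr) ** 2 + (y - yr) ** 2
        for (x, y), (xr, yr) in zip(path, reconstructed_path)
    )
    return reconstruction + kl_weight * kl_to_standard_normal(mu, log_var)
```

A perfectly reconstructed path whose posterior equals the prior gives a loss of zero; any mismatch in either term increases it.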

The sampler follows principles inspired by information-based approaches [51,52,53]. It generates new trajectories by maximizing the mutual information between the geometric state space S and the task skill latent space. The loss function for the sampler parameters is designed as follows:

3.2.2. Actor Skill Learner

To encapsulate the actor’s state changes within the actor skill latent space, we employ an action variational autoencoder (VAE), consisting of an action encoder and an action decoder.

The encoder processes the trajectories of actions and states generated through the execution of the explorer, extracting these experiences into the skill latent . Conversely, the action decoder requires access to both and to reconstruct the actor’s driving maneuver. In particular, the construction of the decoder differs from the standard VAE as it incorporates both and as inputs. The objective function is thus defined as follows:

3.3. Skill Inferencing

3.3.1. Task Skill

As described in Section 3.2.1, the task VAE maps between a task skill latent and an array of geometric locations spanning frame length T. At inference time, the operation system chooses the task skill latent that provides the agent’s immediate path, enabling decision-making to achieve the task.

The task latent space contains a diverse range of potential paths originating from the agent’s current location, encompassing the trajectories that the agent may follow in the future. At every moment, the system infers the optimal task latent, that is, the one whose path most closely aligns with a given target path or point. The optimal task latent is defined as follows, where G is the provided or calculated target path and the distance term measures the distance between the path and the point.

To determine the most suitable task latent, the system samples candidates in the latent space and evaluates their alignment with the desired target path. We use a precomputed table that pairs specific latent values with their corresponding paths. The table serves as a lookup mechanism that quickly identifies the optimal latent. This approach is feasible because the task skill is independent of the actor’s attributes, which may change during operation. This independence ensures that the table remains valid regardless of changes in the actor’s state or configuration.
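The table lookup can be sketched as follows. This is an illustrative sketch only: the table layout, the function names, and the particular path-to-target distance (the mean distance from each target point to its nearest path point) are assumptions, since the exact distance definition is given by the paper’s equation rather than by this example.

```python
import math


def path_distance(path, target):
    """Mean distance from each target point to its nearest point on the path (assumed metric)."""
    return sum(min(math.dist(g, p) for p in path) for g in target) / len(target)


def select_task_latent(table, target):
    """Return the latent whose precomputed path lies closest to the target.

    table is a list of (latent, decoded_path) pairs computed offline.
    """
    best_latent, _ = min(table, key=lambda entry: path_distance(entry[1], target))
    return best_latent


# Hypothetical three-entry table: straight, left-curving, and right-curving paths.
table = [
    ((0.0,), [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]),
    ((0.5,), [(0.0, 0.0), (1.0, 0.5), (2.0, 1.5)]),
    ((-0.5,), [(0.0, 0.0), (1.0, -0.5), (2.0, -1.5)]),
]
chosen = select_task_latent(table, target=[(2.0, 1.4)])
```

Because the table depends only on the task skill, it can be built once and reused even after the actor skill changes.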

3.3.2. Actor Skill

The actor skill is designed to improve autonomous agents’ operational capabilities and safety. It reacts to environmental interactions in the context of an agent’s mechanical properties and their impact on performance.

The system continuously monitors the actual path of the agent and compares it with the intended path. Mathematically, the performance error is defined as follows:

Here, the distance is the Euclidean distance between two points: the output of the task decoder and s, the state observed after the system executes the action produced by the action decoder.

In the absence of mechanical errors, the agent’s existing actor skill latent should be preserved. It represents the agent’s optimal response in the current state and is initialized through a latent sampling process. The initial value of the actor skill latent is precomputed as follows:

Whether to maintain or update the actor skill is determined by a predefined safety threshold. If the observed error of the executed skill is within the threshold, the current actor skill is considered acceptable and is maintained.
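The keep-or-update decision can be illustrated with a short sketch. The function names and the use of 2D points are assumptions for illustration; the threshold value itself is a design parameter that this example does not specify.

```python
import math


def performance_error(planned_point, observed_point):
    """Euclidean distance between the planned location and the observed location."""
    return math.dist(planned_point, observed_point)


def should_update_actor_skill(planned_point, observed_point, safety_threshold):
    """True when the tracking error exceeds the safety threshold."""
    return performance_error(planned_point, observed_point) > safety_threshold
```

For example, a planned point of (0, 0) and an observed point of (3, 4) give an error of 5, which would trigger an update for any threshold below 5.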

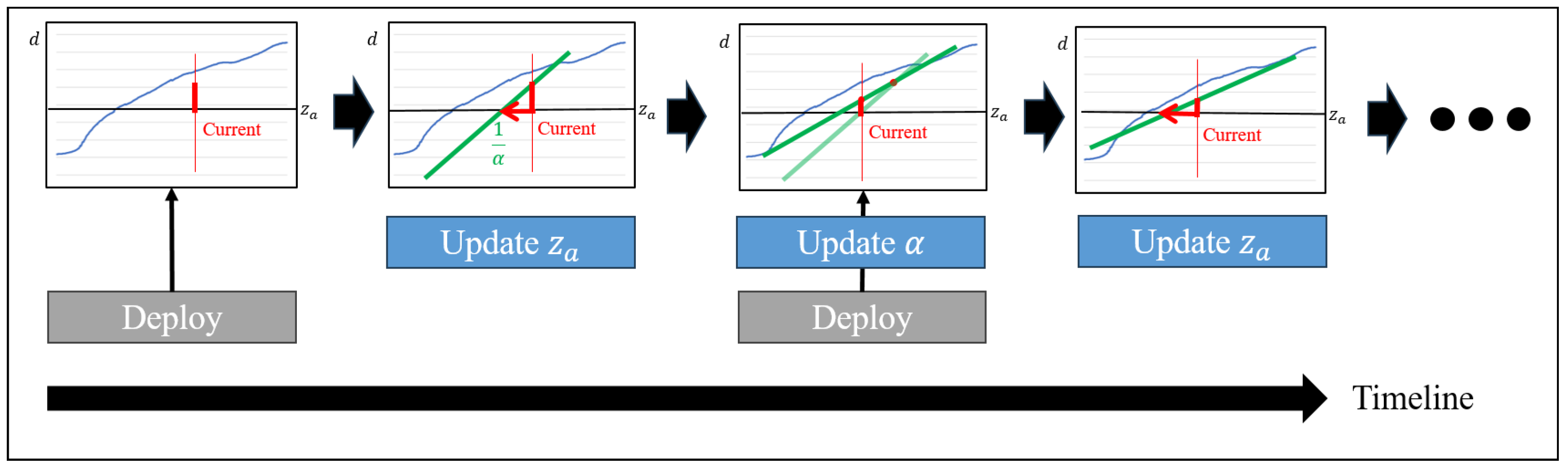

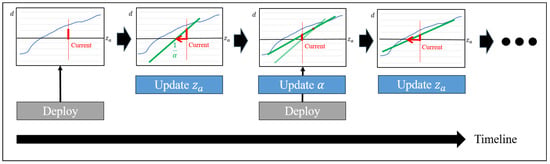

However, if the deviation exceeds the safety threshold, the system initiates an update of the actor skill. To infer the actor skill latent, we adjust it dynamically in response to the observed performance error d. This process uses a scaling factor α, which is initially set to an empirically chosen value.

First, the actor skill latent is updated by adding the product of the performance error d and α. The parameter α is then adjusted using an exponential moving average, so that it accurately reflects the relationship between changes in the actor skill and changes in the performance error. Mathematically, this is represented as follows:

Here, the smoothing factor of the moving average is a hyperparameter. Because d is an actual observation and is noisy, the smoothing factor stabilizes the update by reducing the reactivity of α. To improve the robustness of this process, especially when the search risks stalling in a local minimum, we set several pivot points as search origins based on previously learned actor settings. If the performance error does not fall below a threshold after searching around the initial actor skill latent, the search is extended to the other pivots. The search process is structured as follows.

First, determine the set of pivot points for the actor skill latent. These can be inferred from the task performance results of pre-learned actors or chosen to divide the latent space uniformly. If α becomes too large, it is no longer possible to find a point with a low error value even if the actor skill latent is updated (see Figure 3). To detect this, we set an upper threshold on α. If α exceeds this threshold even though the error is less than the , the actor skill latent jumps to one of the nearby pivots. The entire process of inferring the skills is described in Algorithm 1.

Algorithm 1.

Skill inference algorithm.

Figure 3.

A diagram showing the process of updating the actor skill. The actor skill latent is updated based on the observed error, and the parameter α is adjusted according to the change in error during the update. If α becomes larger than its threshold, the actor skill latent jumps to one of the nearby pivots.
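The update-and-pivot search of Section 3.3.2 can be sketched in a few lines. This is a hedged illustration, not the authors’ Algorithm 1: it assumes a scalar actor latent and a signed tracking error (the paper defines the error as a Euclidean distance), uses a secant-style estimate smoothed by an exponential moving average as one plausible rule for adapting α, and visits pivots nearest-first. The callback `measure_error` and all parameter names and default values are hypothetical.

```python
def infer_actor_skill(measure_error, z0, pivots, alpha0=0.1, beta=0.2,
                      error_threshold=0.05, alpha_max=5.0, max_steps=200):
    """Search for an actor latent whose signed error is within the threshold.

    measure_error(z) stands in for running the agent with latent z and
    observing the signed tracking error d (hypothetical callback).
    """
    z, alpha = z0, alpha0
    prev_z = prev_d = None
    # Untried pivots, ordered nearest to the starting latent first (assumed order).
    remaining = sorted((p for p in pivots if p != z0), key=lambda p: abs(p - z0))
    for _ in range(max_steps):
        d = measure_error(z)
        if abs(d) <= error_threshold:
            return z  # error acceptable: keep this actor skill
        if prev_z is not None and abs(d - prev_d) > 1e-9:
            # Secant-style gain estimate (assumed rule), smoothed by an EMA.
            alpha_est = -(z - prev_z) / (d - prev_d)
            alpha = (1.0 - beta) * alpha + beta * alpha_est
        if abs(alpha) > alpha_max and remaining:
            # The error barely responds to z here, so restart from a pivot.
            z, alpha = remaining.pop(0), alpha0
            prev_z = prev_d = None
            continue
        # With no pivots left, clamp the gain instead of jumping.
        alpha = max(-alpha_max, min(alpha_max, alpha))
        prev_z, prev_d = z, d
        z = z + alpha * d  # latent update: add error times scaling factor
    return z


def toy_error(z):
    """Toy plant: the error responds to z only above 0.2; the best latent is 0.5."""
    return 2.0 * (0.5 - z) if z > 0.2 else 0.6 - 0.01 * z


z_direct = infer_actor_skill(lambda z: 2.0 * (0.5 - z), 0.0, [-1.0, 0.0, 1.0])
z_pivot = infer_actor_skill(toy_error, -1.0, [-1.0, 0.0, 1.0])
```

In the first call the error responds linearly to the latent, so the search converges without leaving its starting region. In the second, the error is almost flat around the first two starting points, α grows past `alpha_max`, and the search moves on to the pivot at 1.0 before converging.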

4. Experiments

4.1. Skill Discovery from Predefined Models

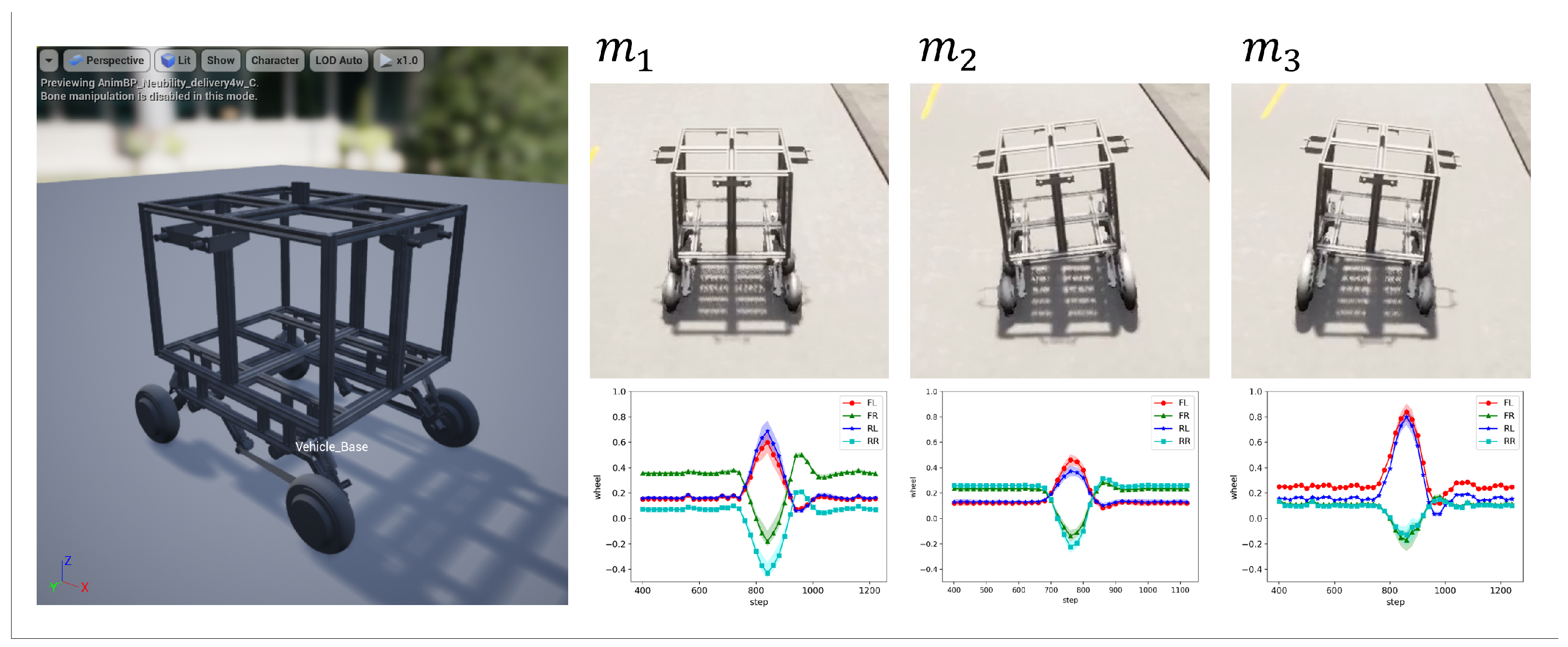

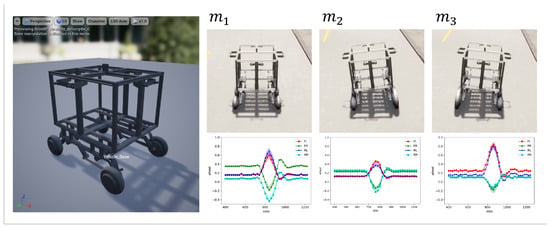

We evaluated the effectiveness of the proposed algorithm in adapting to unseen changes in mechanical state through experiments conducted in the CARLA vehicle simulator [60]. We used a four-wheeled delivery robot model. To enable the system to respond to a variety of mechanical conditions, we designed three models: a standard model, a model with an enlarged left wheel, and a model with an enlarged right wheel (see Figure 4). These variations are intended to simulate problems caused by asymmetric wheel configurations.

Figure 4.

(Left) The design of the four-wheeled robot used in the experiment. (Top Right) Graphical representation of the shapes of three predefined robot models. (Bottom Right) Optimal input values for each wheel of the corresponding model to make an ideal left turn.

For additional details and to access the source code and results of the experiments, visit our repository https://github.com/boratw/sd4m_carla (accessed on 8 March 2025).

4.2. Visualization of Skill Latent Space

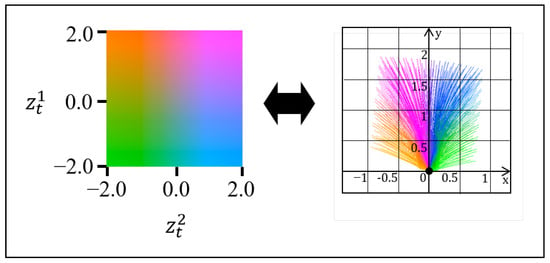

4.2.1. Task Skill Latent

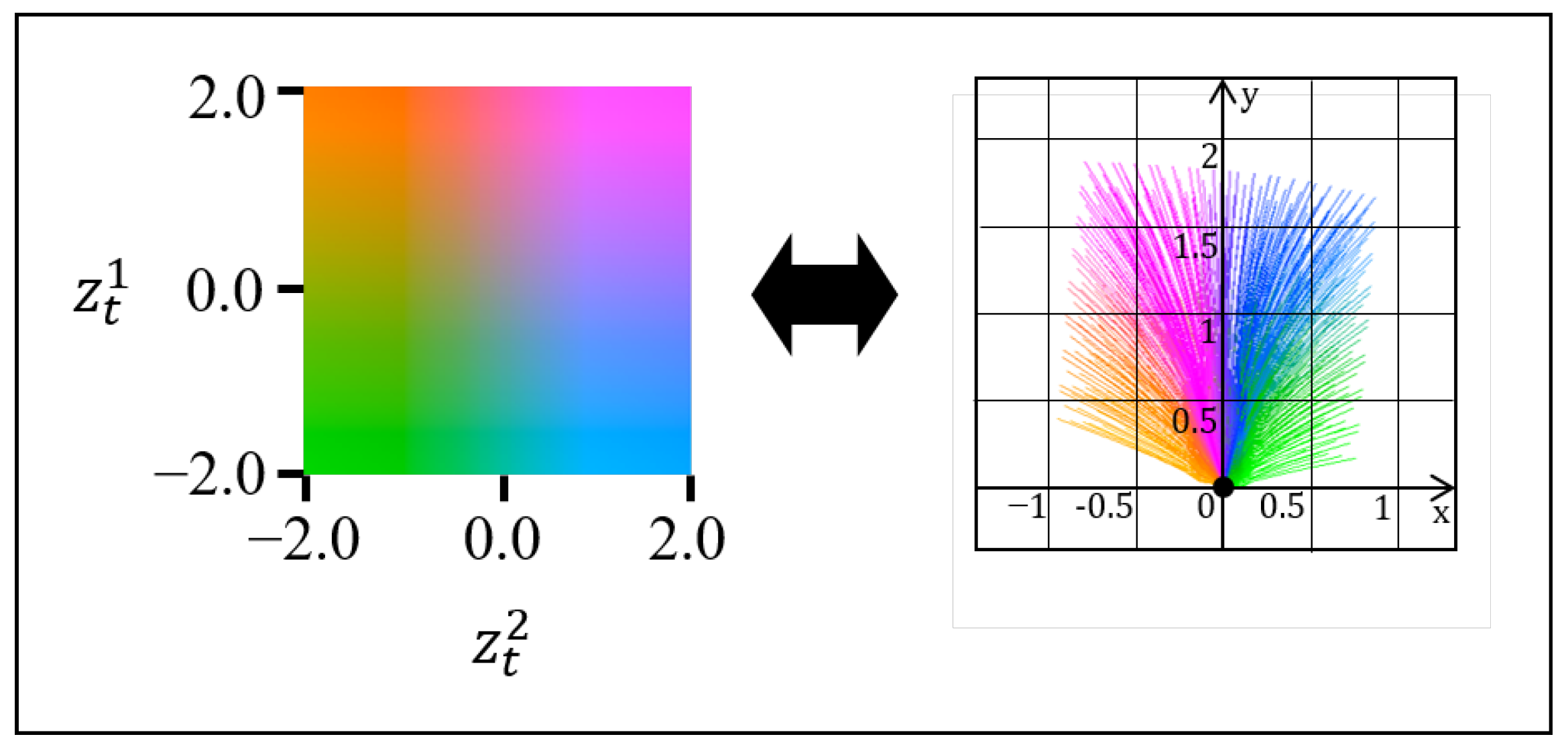

The main learning goal for the task latent variable was to enable the system to learn all possible paths a vehicle can take autonomously. Figure 5 shows the visualization of the embedded task skills. After training, the task latent space becomes a repository of potential routes, containing the navigation choices available to the vehicle from its current location. We confirmed that the possible paths in the forward direction of the robot were well embedded. In the experimental configuration, the trajectory length T was set to 500 ms.

Figure 5.

Visualization of embedded task skills. The left side of the figure shows the relationship between latent value and color, and the right side demonstrates the corresponding target paths decoded from the latent.

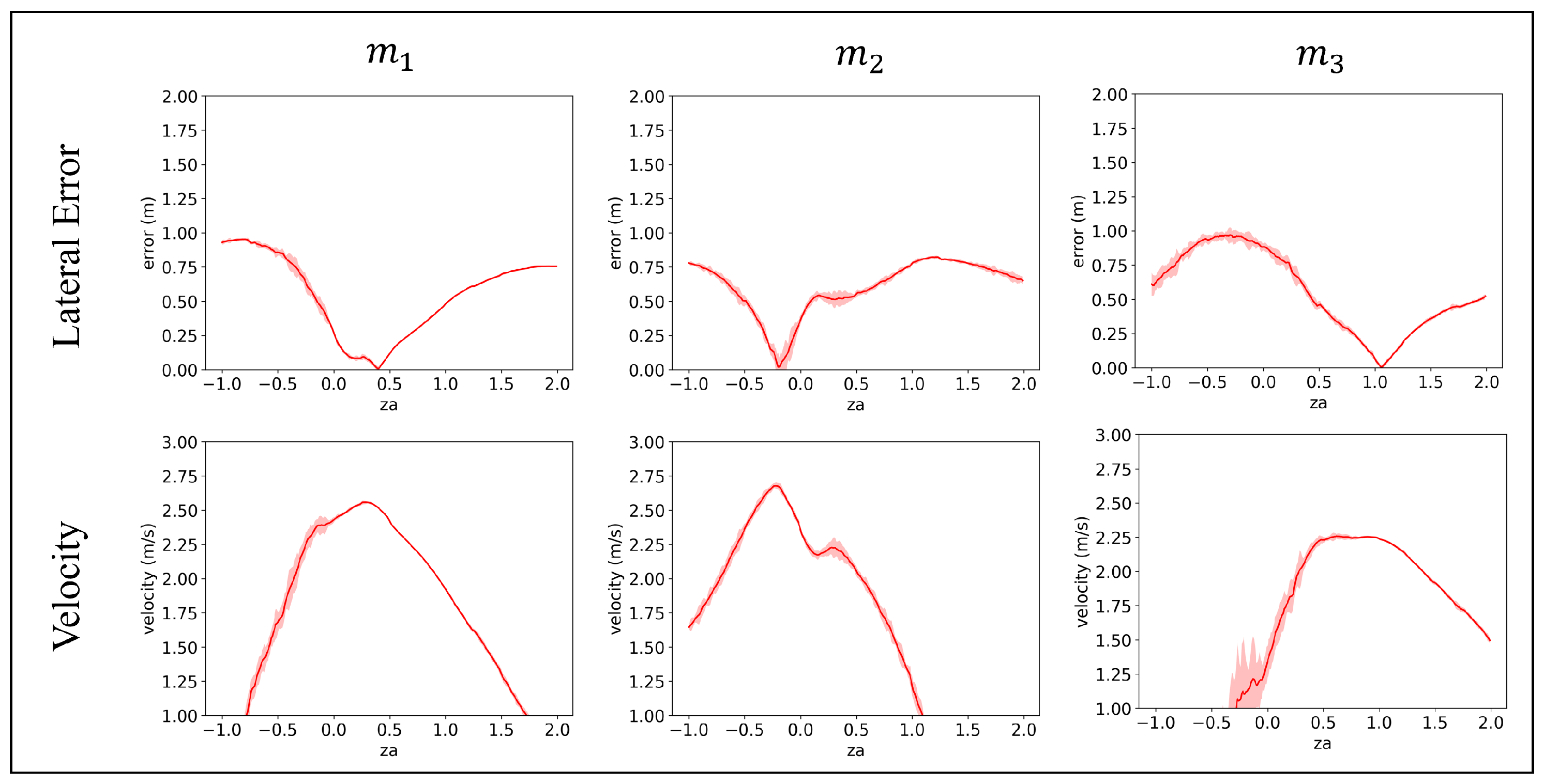

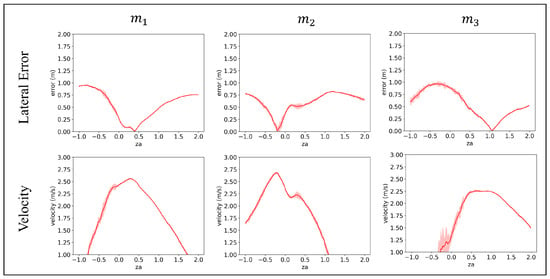

4.2.2. Actor Skill Latent

In contrast, the learning objective for the actor latent variable focuses on the vehicle’s shape. Specifically, the goal is to ensure that each distinct physical characteristic of the vehicle is appropriately mapped to an optimal distance within the latent space. This mapping ensures that changes in the vehicle’s physical characteristics, such as different wheel sizes or asymmetric configurations, are accurately represented and distinguishable within the actor latent space.

Figure 6 and Table 1 show the performance of the proposed algorithm and the SAC [61] agent for each predefined shape. The actor latent value shown is the optimal value obtained through Equation (7), and the velocity and error values are averages from the lane keeping scenario, which is described later.

Figure 6.

Relationship between the actor latent variable and task performance metrics across the shape of the vehicle.

Table 1.

Comparison of performance between the proposed algorithm and SAC agent for predefined shapes.

We confirmed that the trained learner operates at a level similar to SAC policies that were each trained on a single shape. As expected, the actor latent values for the different shapes were also mapped separately from each other.

4.3. Evaluation of Robustness in Static Environment Changes

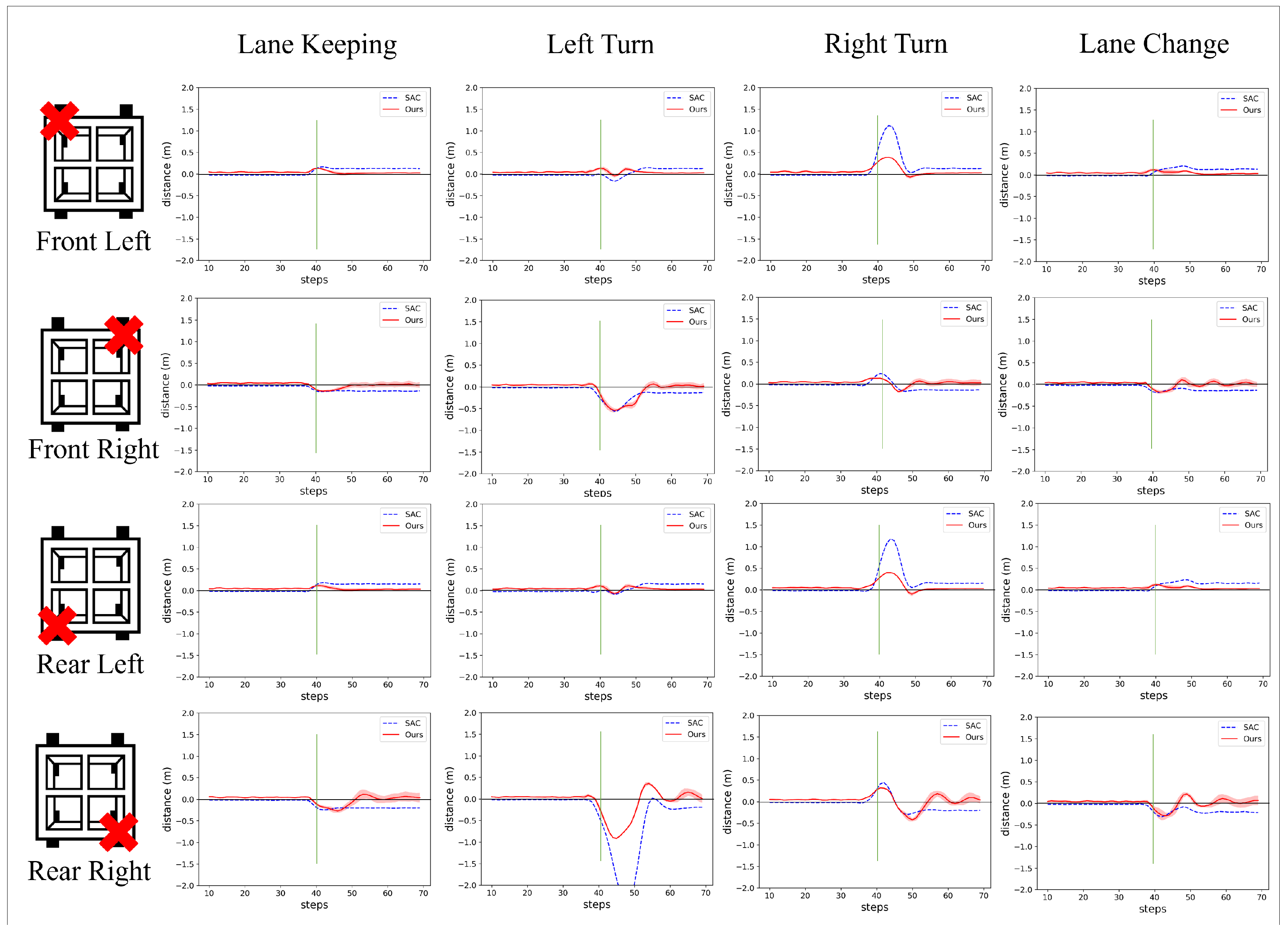

To evaluate the adaptability of the learned model in response to failure scenarios, we designed experiments focusing on a four-wheeled robot under various compromised conditions. The goal was to evaluate the system’s capability when specific mechanical failures occur and to compare its performance with that of existing reinforcement learning models.

We prepared four separate models of a four-wheeled robot (Figure 4), each representing a unique failure state. In these models, each corresponding robot wheel was individually disconnected from the linked motor, which means that the input value is not sent to the wheel.

For each model, we determined the optimal actor latent value, as described in Equation (7). With the value that best compensated for the specific model, the robot could effectively perform its intended task despite the damaged wheel.

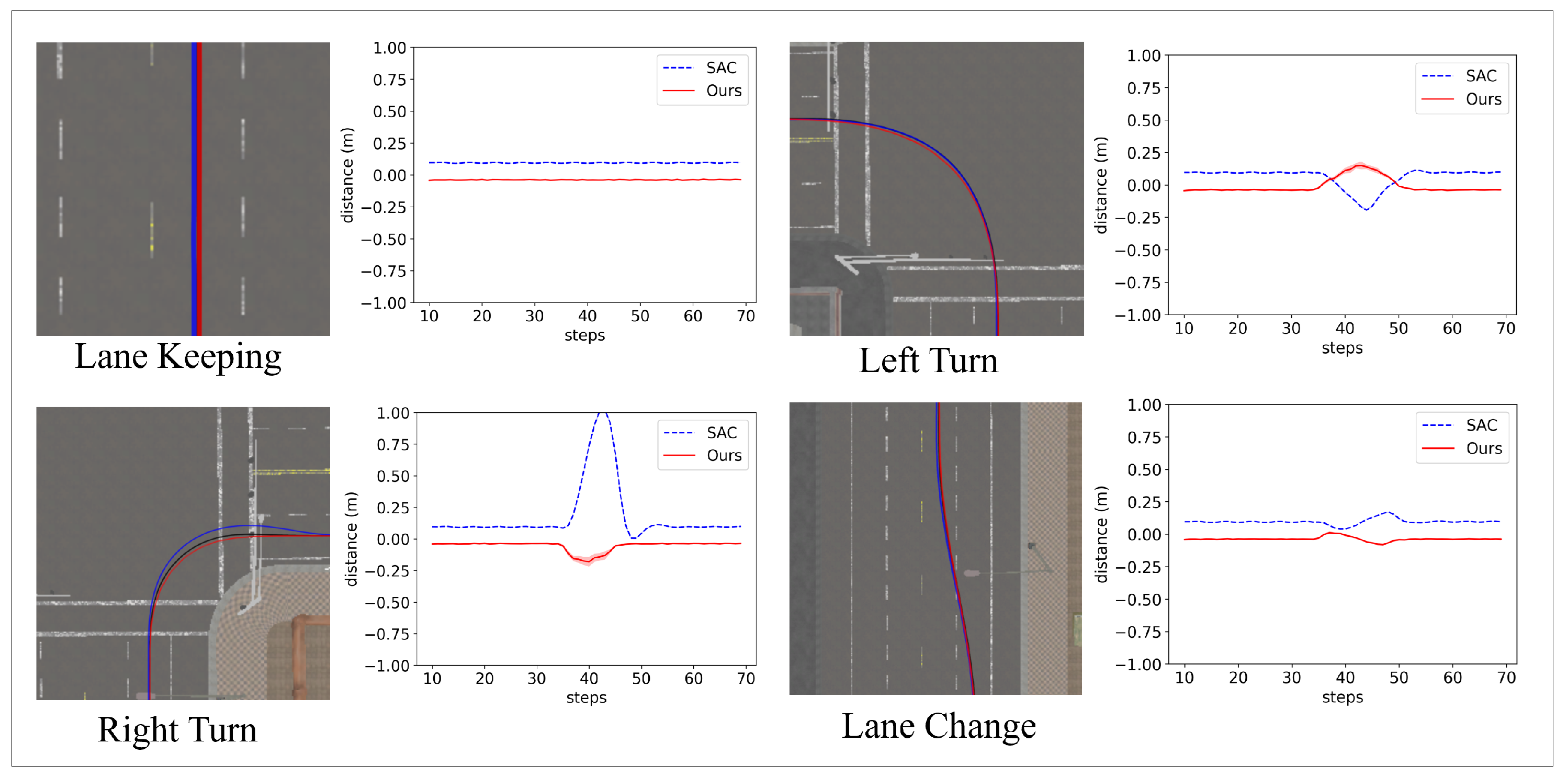

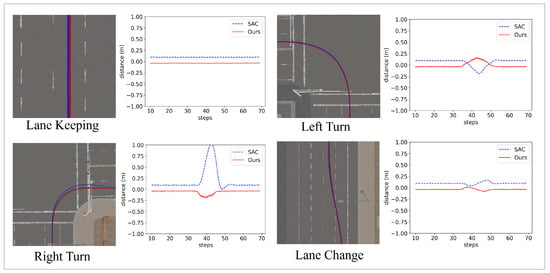

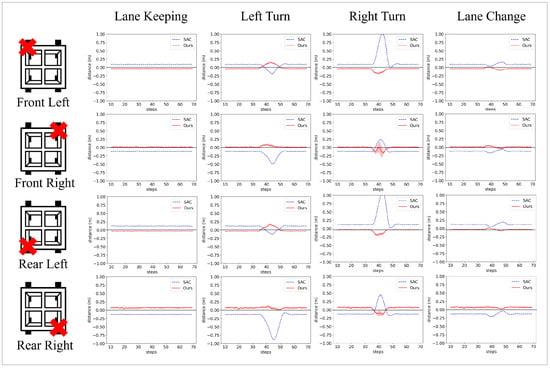

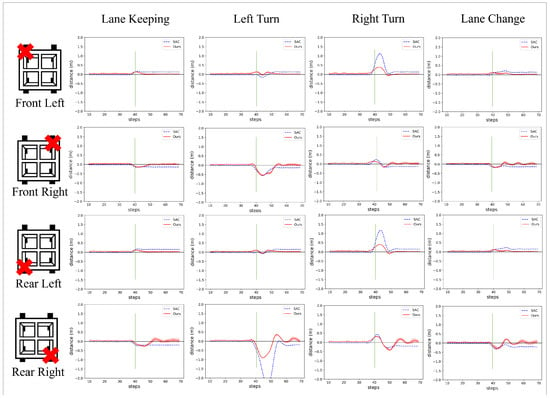

The driving capabilities of the system were compared with those of standard reinforcement learning models across four driving scenarios: Lane Keeping, Left Turn, Right Turn, and Lane Change. The primary evaluation metric is lateral distance, measured as the deviation between the robot’s actual position and the optimal path provided to the system. Figure 7 shows a representative example from the experiments, using a model with a disconnected “Front Left” wheel. Figure 8 and Table 2 present a detailed comparison of the results for each scenario across the different models.

Figure 7.

Visualizations of the driving scenarios that compare the paths driven by the SAC model (blue line) and our algorithm (red line), using a model with a broken ‘Front Left’ wheel. The black line represents the ideal trajectory generated by the Task VAE. The right side of each visualization displays the observed lateral distance values during the experiment.

Figure 8.

The lateral deviation from the reference trajectory for each model. The data were averaged across different scenarios based on 25 experiments, and the shaded area in the graph indicates the 95% confidence interval for the value.
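A shaded 95% interval of this kind can be computed from repeated runs as sketched below. The sketch assumes a normal approximation (1.96 standard errors around the sample mean); the paper does not state which interval construction was used, and the function name is hypothetical.

```python
import math
import statistics


def mean_with_ci95(samples):
    """Return (mean, lower, upper) using a normal-approximation 95% interval."""
    mean = statistics.fmean(samples)
    standard_error = statistics.stdev(samples) / math.sqrt(len(samples))
    half_width = 1.96 * standard_error
    return mean, mean - half_width, mean + half_width
```

With 25 runs per setting, a Student-t multiplier (about 2.06) would give a slightly wider interval than the 1.96 used here.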

Table 2.

Comparative analysis of performance between the proposed algorithm and SAC, using the optimal actor latent for each kinematic modification of wheel size.

Although no scenario with a disconnected wheel was presented during the training phase, the system inferred a suitable actor latent value for this completely new failure situation. Using the inferred values, the system demonstrated resilience by performing at a level similar to that of a robot with intact wheels.

4.4. Evaluation of Fail-Operational System

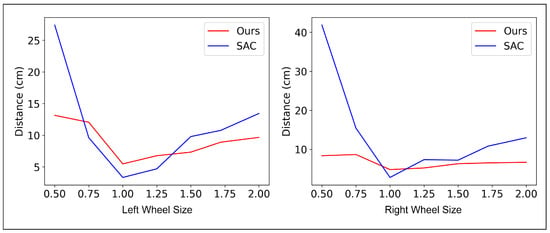

Building on the previous experiment, which evaluated the adaptability of models under static failure scenarios, we extended the investigation to include more dynamic and unpredictable conditions. Specifically, we assessed the system’s responsiveness to unexpected mechanical failures during operation.

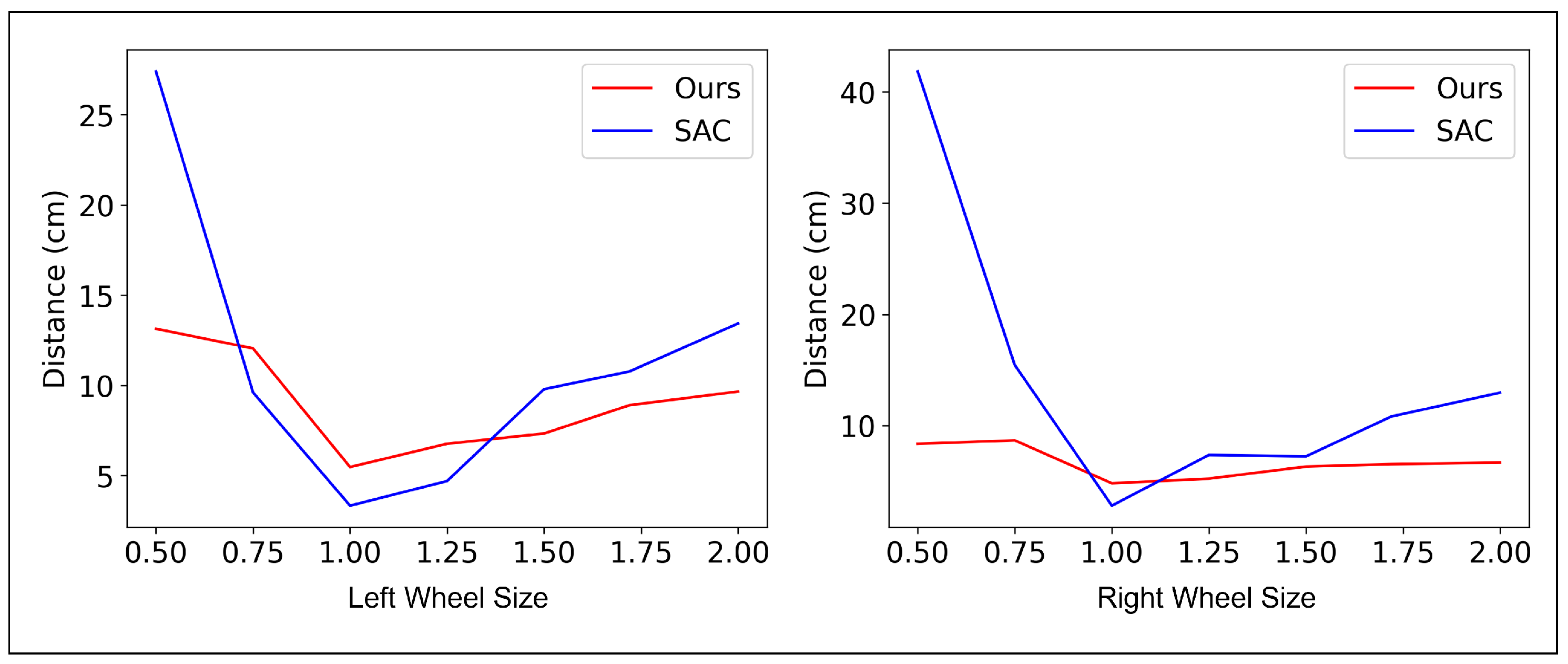

First, we examined the vehicle’s reaction to a sudden change in wheel size. The left and right wheels were each altered in separate experiments, so that different inputs were required to maintain a straight trajectory. Initially, the delivery robot was driven straight, with all wheels at 100% of their original size. Subsequently, the size of one wheel was changed by a given percentage. For both the Soft Actor–Critic (SAC) algorithm and the proposed algorithm, we measured the maximum deviation from the center of the lane and the time required to return to parallel driving. The results are presented in Figure 9, Table 3, and Table 4. As the tables indicate, the proposed method shows significantly lower lateral deviation and faster recovery times under the tested failure scenarios.

Figure 9.

Comparative performance of the proposed algorithm and SAC in observing the deviation from a lane’s center when the robot is driven straight.

Table 3.

Analysis of the performance between algorithms in the left wheel size variation experiment.

Table 4.

Analysis of the performance between algorithms in the right wheel size variation experiment.
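The two metrics reported for the wheel size experiments, maximum deviation and recovery time, can be computed from a logged deviation trace as sketched below. The recovery criterion used here (the deviation stays within a tolerance for the rest of the run) is an assumption standing in for "returning to parallel driving", and the function names, `tolerance`, and `dt` are hypothetical.

```python
def max_deviation(deviations, fault_step):
    """Largest absolute lateral deviation from the fault onward."""
    return max(abs(d) for d in deviations[fault_step:])


def recovery_time(deviations, fault_step, tolerance, dt=1.0):
    """Time from the fault until the deviation stays within tolerance.

    Returns 0.0 if the tolerance is never exceeded and None if the run
    ends while still outside the tolerance.
    """
    last_bad = None
    for i in range(fault_step, len(deviations)):
        if abs(deviations[i]) > tolerance:
            last_bad = i
    if last_bad is None:
        return 0.0
    if last_bad == len(deviations) - 1:
        return None
    return (last_bad + 1 - fault_step) * dt


# Hypothetical trace: the fault occurs at step 2 and the robot settles by step 5.
trace = [0.0, 0.0, 0.5, 0.8, 0.3, 0.05, 0.02]
peak = max_deviation(trace, fault_step=2)
settle = recovery_time(trace, fault_step=2, tolerance=0.1)
```

For this trace the peak deviation is 0.8 and the recovery time is three steps.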

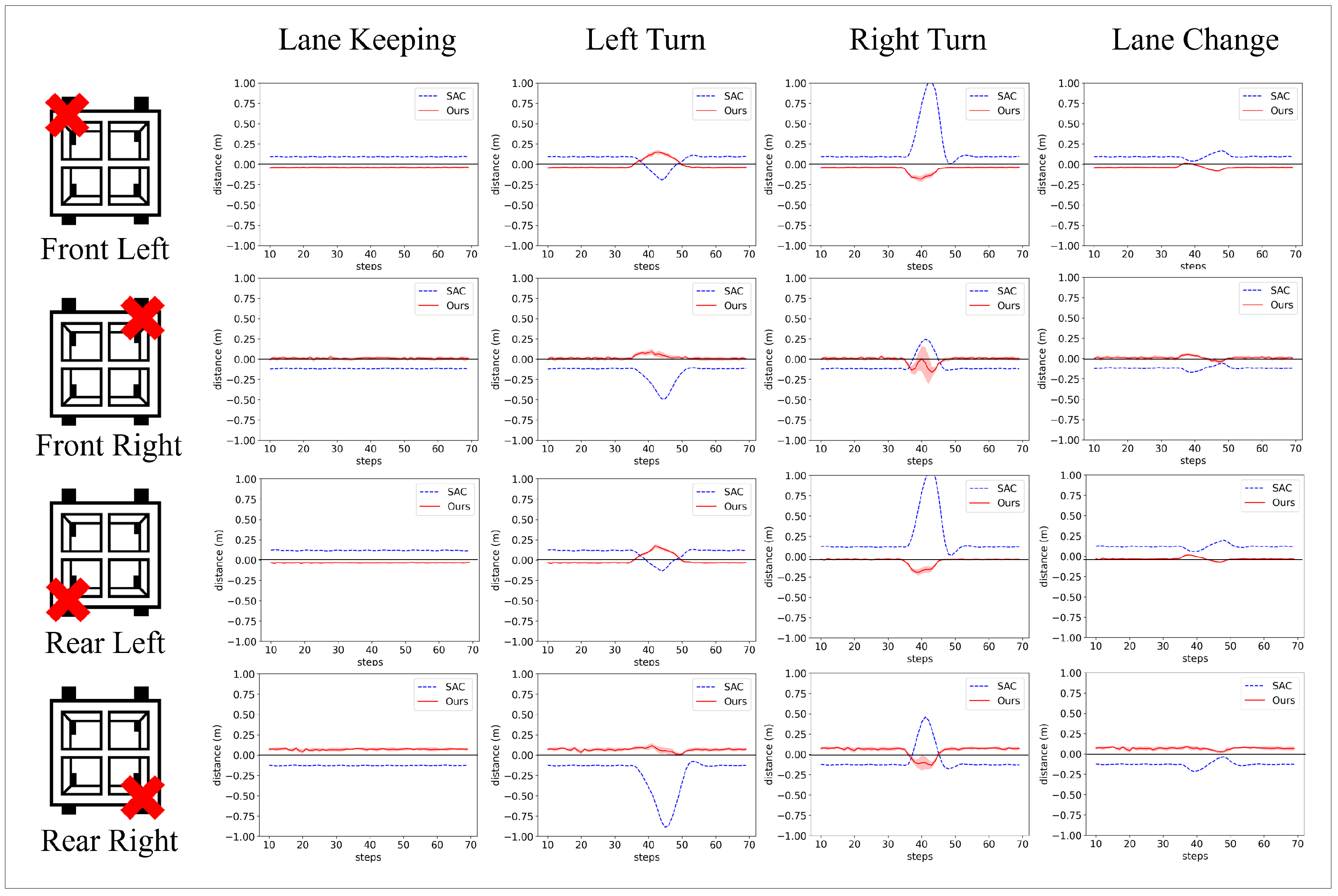

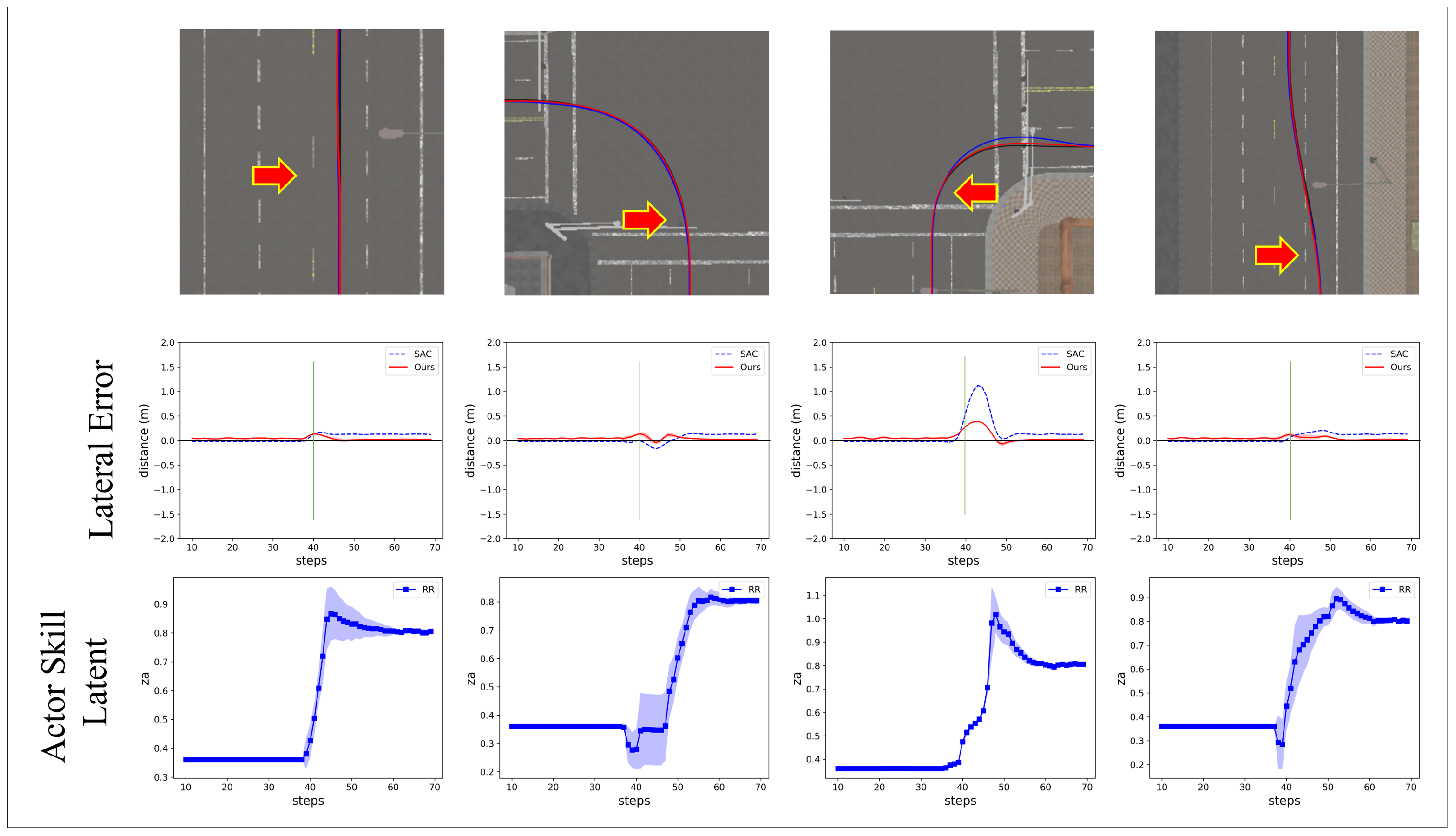

Next, we conducted an experiment similar to the one described in Section 4.3, in which the delivery robot navigated through predefined scenarios. This time, one wheel suddenly disconnected during each scenario. The experimental setup used the same four scenarios: Lane Keeping, Left Turn, Right Turn, and Lane Change. Initially, all four wheels of the robot functioned normally. As in the previous experiments, the primary evaluation metric was the lateral distance between the robot’s actual trajectory and the optimal path. Wheel disconnections were triggered 40 steps into each scenario.

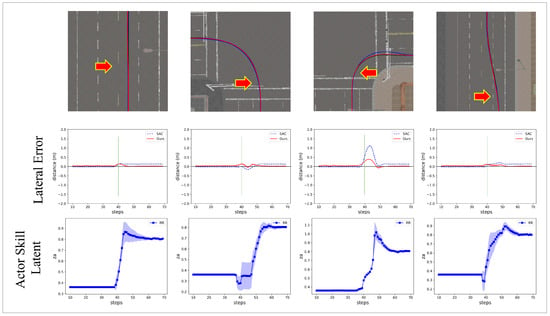

Figure 10 shows a representative example from the experiments, using a model with a disconnected “Front Left” wheel. The figure shows the trajectory of each algorithm on the map, the lateral errors, and the inferred actor latent value. Figure 11 and Table 5 present a detailed comparison of the results for each scenario across the different models.

Figure 10.

Results of the ‘Front Left’ wheel disconnection experiment. (Top) Aerial snapshots of the agent’s trajectory before and after the wheel disconnection; the red arrow indicates the point at which the disconnection occurs. (Middle) Lateral distance for the proposed algorithm versus the SAC model; the green vertical line indicates the step at which the wheel disconnection occurs. (Bottom) The skill value inferred by our approach.

Figure 11.

The lateral distance for each model across different scenarios. Shading indicates the 95% confidence interval for the value. A green vertical line indicates the step at which the wheel disconnection occurs.

Table 5.

Comparison of performance between the proposed algorithm and SAC agent.

Through this experiment, we observed the system’s ability to initiate inference of the agent’s skill variable when a malfunction occurs and to converge on a value. In the “Front Left” case, the inferred value converges to around 0.8, indicating that the system settles on a stable response to the mechanical failure. This convergence demonstrates the system’s ability to readjust its strategy in response to sudden changes and maintain task performance.

5. Discussion

The first experiment was designed around a four-wheeled robot model subjected to various failure states. The test aimed to understand the system’s response to specific mechanical failures and to benchmark its performance against conventional reinforcement learning models. With this setup, we showed that, by using the optimal actor skill variable for each failure state, the robot could continue its task effectively despite sudden, unexpected damage to a wheel motor.

In the second experiment, the robot started with all wheels operational, but one wheel disconnected while the robot was running various driving scenarios. This unpredictability tested the system’s ability to readjust its strategy rapidly. The system quickly inferred and adapted the skill variable in response to these sudden faults in the actuation system and continued performing its task without compromising safety.

A critical insight from the experiments is the system’s ability to complete tasks safely during unexpected mechanical failures. This finding highlights the importance of fail-operational systems when human intervention is impractical but an immediate response is essential. For example, in the setting of the second experiment, a sudden malfunction at an intersection could cause significant traffic disruption and danger. In such cases, the capacity to continue safe operation takes precedence over maintaining high performance levels.

Although the proposed system demonstrates robustness to mechanical failures, it does not directly address cases involving advanced sensor degradation or severe observation noise. The current framework assumes a minimally sufficient level of perceptual input and focuses on motor-level adaptability. Incorporating observation uncertainty modeling, sensor fusion with redundancy, and robust representation learning could enable the system to reason under imperfect observations. These extensions would further enhance the system’s applicability in real-world safety-critical domains.

6. Conclusions

In this study, we developed a separable skill-learning architecture and a comprehensive system for real-time fault situation inference and response planning. This study aimed to address the limitations of existing RL methods in predicting and preventing risks in unseen or unpredictable situations in real-world applications.

We evaluated the proposed system in various scenarios involving mechanical faults. The system demonstrated robustness in the experiments by rapidly adapting its behavioral strategy in response to sudden mechanical faults during operation. We showed the system’s ability to maintain task performance through improved lateral stability and faster recovery under unexpected fault conditions. This highlights its effectiveness in scenarios demanding an immediate and safe response, where the ability to continue operation safely is critical.

In conclusion, this study demonstrates a novel approach to enhancing the safety and adaptability of RL systems. The ability to handle unexpected scenarios and maintain operational integrity represents a significant step toward the practical application of RL agents in safety-critical environments. The proposed method is suitable for real-world deployments, including medical robots and autonomous vehicles, where unpredictability and safety issues are prevalent.

Author Contributions

Conceptualization, S.K.; funding acquisition, S.K.; methodology, T.K. and S.K.; project administration, S.K.; resources, S.K.; supervision, S.K.; validation, T.K.; visualization, T.K.; writing—original draft, T.K.; writing—review and editing, S.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Institute for Information and Communications Technology Promotion grant number 2022-0-00966.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original data presented in this study are openly available on GitHub at https://github.com/boratw/sd4m_carla (accessed on 8 March 2025).

Acknowledgments

This work was supported by the Institute of Information & communications Technology Planning & Evaluation (IITP) grant funded by the Korean government (MSIT) (No. 2022-0-00966, Development of AI processor with a Deep Reinforcement Learning Accelerator adaptable to Dynamic Environment).

Conflicts of Interest

The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Appendix A. Technical Detail

Appendix A.1. Environment Setting

We trained and validated the proposed system within the CARLA [60] simulation environment. To pursue a more detailed control approach suitable for our research purposes, we designed a custom vehicle controller for the simulation. This controller bypasses CARLA’s default vehicle control mechanisms, which typically rely on a steering wheel and engine model. Instead, it directly controls the motor of each individual wheel.

During the training and evaluation process, the CARLA simulator provides the state vector of each vehicle, and the system calculates and outputs the optimal action for each state encountered.

- State Vector: The state vector consists of a total of 10 elements. All values are given as coordinates relative to the geometric state at the starting point of the trajectory.

  - Position (x, y): the relative coordinates of the vehicle’s current position (m).

  - Speed (x, y): instantaneous speed along the x and y axes (m/s).

  - Acceleration (x, y): acceleration components of the vehicle (m/s²).

  - Vehicle orientation (unit vector x, y): this unit vector represents the orientation of the vehicle in a two-dimensional plane.

  - Vehicle pose (roll and pitch): these quantify the angular orientation of the vehicle in terms of roll and pitch (degrees).

- Action Vector: The action vector consists of four values ranging from −1 to 1, each corresponding to the control input provided to one wheel of the vehicle. Positive values indicate acceleration, and negative values represent deceleration.

Interactions between the environment and the system occur every 1/20th of a second. This interval constitutes a single time unit within the experimental setup.
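The interface described above can be written down as a small data structure. The sketch below is illustrative only: the class and function names are hypothetical, and the ordering of the 10 state elements is an assumption, since the text lists the components but not their positions in the vector.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

CONTROL_HZ = 20          # one interaction every 1/20th of a second
DT = 1.0 / CONTROL_HZ    # length of a single time unit in seconds


@dataclass(frozen=True)
class VehicleState:
    """State relative to the starting point of the trajectory."""
    position: Tuple[float, float]      # m
    speed: Tuple[float, float]         # m/s
    acceleration: Tuple[float, float]  # m/s^2
    orientation: Tuple[float, float]   # unit vector in the 2D plane
    roll: float                        # degrees
    pitch: float                       # degrees

    def to_vector(self) -> List[float]:
        """Flatten into the 10-element state vector (assumed ordering)."""
        return [
            *self.position,
            *self.speed,
            *self.acceleration,
            *self.orientation,
            self.roll,
            self.pitch,
        ]


def clip_action(action: Sequence[float]) -> List[float]:
    """Clamp the four per-wheel commands to the valid range [-1, 1]."""
    if len(action) != 4:
        raise ValueError("the action vector has one value per wheel (4 total)")
    return [max(-1.0, min(1.0, float(a))) for a in action]
```

For example, `VehicleState((0.0, 0.0), (1.0, 0.0), (0.0, 0.0), (1.0, 0.0), 0.0, 0.0).to_vector()` yields a list of length 10, and an 80-step scenario at this rate lasts `80 * DT` seconds.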

Appendix A.2. Implementation Details

The main hyperparameters of the learning network are listed in Tables A1–A3. The network architecture and hyperparameters follow the structure described in SD4M [16]; as a result, the mathematical expressions of our network are broadly consistent with those described in SD4M [16].

Table A1.

Parameters of the task and action VAE.

| Parameter | Value |
|---|---|
| Encoder ¹ | [256, 256] |
| Decoder ¹ | [256, 256] |
| ² | 0.001 |
| Learning rate | 0.0001 |
| ² | 0.02 |
| ² | 0.01 |
| ³ | 0.25 |
| ³ | 0.9 |

¹ The number of neurons present in each layer of the MLP. ² This constant is introduced in [16]. ³ This constant is described in Section 3.3.

Table A2.

Parameters of the sampler.

| Parameter | Value |
|---|---|
| Decoder ¹ | [256, 256] |
| ² | 0.001 |
| Learning rate | 0.0001 |
| ² | 0.02 |
| ² | 0.01 |

¹ The number of neurons present in each layer of the MLP. ² This constant is introduced in [16].

Table A3.

Parameters of the explorer.

| Parameter | Value |
|---|---|
| Value Network ¹ | [256, 256] |
| Policy Network ¹ | [256, 256] |
| ² | 0.95 |
| Initial Entropy ² | 0.1 |
| Target Entropy ² | |
| Learning rate | 0.0001 |
| Policy Update rate ² | 0.05 |

¹ The number of neurons present in each layer of the MLP. ² This constant is introduced in SAC [61].

Appendix B. Experiment Settings

For the experiment, we prepared a delivery robot model. The model has four wheels, but unlike a regular vehicle, each wheel can be controlled separately. To achieve this, we modified the power transmission structure inside CARLA to send inputs to each wheel individually. All training and experimental code can be found at https://github.com/boratw/sd4m_carla (accessed on 8 March 2025).

Appendix B.1. Training

Training was conducted by running 25 vehicles simultaneously in an empty lot, collecting the results, and learning from them. The training was performed without any reward, utilizing three models: , , and . We ran 1000 experiments of 2000 frames each, collecting a total of 2 million frames. These frames were divided into 50-frame segments, each used as a single path. The network was trained for 350 epochs, each consisting of 32 batches of 64 paths.
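The data budget implied by these numbers can be checked with a few lines of arithmetic. The sketch below assumes that paths are consecutive, non-overlapping 50-frame windows cut from each run; the text does not say whether windows overlap, and the helper name is hypothetical.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")

NUM_EXPERIMENTS = 1000
FRAMES_PER_EXPERIMENT = 2000
PATH_LENGTH = 50
PATHS_PER_BATCH = 64
BATCHES_PER_EPOCH = 32
EPOCHS = 350


def split_into_paths(frames: Sequence[T], path_length: int = PATH_LENGTH) -> List[Sequence[T]]:
    """Cut one run into consecutive, non-overlapping fixed-length paths.

    Trailing frames that do not fill a whole path are dropped.
    """
    usable = len(frames) - len(frames) % path_length
    return [frames[i:i + path_length] for i in range(0, usable, path_length)]


total_frames = NUM_EXPERIMENTS * FRAMES_PER_EXPERIMENT       # 2,000,000 frames
paths_per_experiment = FRAMES_PER_EXPERIMENT // PATH_LENGTH  # 40 paths per run
total_paths = NUM_EXPERIMENTS * paths_per_experiment         # 40,000 paths
paths_per_epoch = PATHS_PER_BATCH * BATCHES_PER_EPOCH        # 2,048 paths per epoch
paths_seen_in_training = paths_per_epoch * EPOCHS            # 716,800 path samples
```

Under this assumption each epoch draws 2048 of the 40,000 available paths, so an epoch here is a fixed number of sampled batches rather than a full pass over the data.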

Appendix B.2. Scenario Experiments

This section details the scenario experiments described in Sections 4.3 and 4.4. Each experiment was conducted at a single intersection in the CARLA built-in map Town05. Results are reported as the mean and standard deviation over 25 repetitions. In each experiment, the delivery robot followed 80 waypoints through the intersection, with the disconnection scheduled at the 40th waypoint.
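Summary statistics of this kind, together with the 95% confidence band shaded in Figure 11, can be computed per time step as sketched below. The exact procedure used for the figure is not stated; this sketch assumes a normal approximation with the sample standard deviation, and the function name is hypothetical.

```python
import math
import statistics
from typing import Sequence, Tuple


def summarize(values: Sequence[float], z: float = 1.96) -> Tuple[float, float, Tuple[float, float]]:
    """Mean, sample standard deviation, and an approximate 95% interval.

    The interval is mean +/- z * std / sqrt(n). z = 1.96 is the normal
    approximation; for n = 25 a Student-t interval would use about 2.064.
    """
    if len(values) < 2:
        raise ValueError("need at least two repetitions")
    mean = statistics.fmean(values)
    std = statistics.stdev(values)  # sample standard deviation (n - 1)
    half_width = z * std / math.sqrt(len(values))
    return mean, std, (mean - half_width, mean + half_width)
```

Applied to the lateral distance at one step across the 25 repetitions, the returned interval gives the lower and upper edges of the shaded band at that step.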

Initially, the skill variable was set to 0.37, a default value obtained from a previous experiment. The alpha parameter was set to 1.5, and the pivots were −0.18, 0.37, and 1.01. However, the pivots were not used, because no local minimum was encountered during the experiment. The error value was calculated as the perpendicular distance between the vehicle’s position and an imaginary line connecting the waypoints. The velocity was calculated as the average value until the end of the episode.
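The error and velocity measures can be sketched as follows. This is one plausible reading, not the authors' code: the waypoint line is treated as a polyline, the distance to each segment is clamped at the segment ends, and the speed is taken as the magnitude of the (x, y) speed components in the state vector. All function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def distance_to_segment(p: Point, a: Point, b: Point) -> float:
    """Shortest distance from point p to the segment from a to b."""
    ax, ay = a
    bx, by = b
    px, py = p
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return math.hypot(px - ax, py - ay)
    # Projection parameter of p onto the segment, clamped to its ends.
    t = ((px - ax) * dx + (py - ay) * dy) / length_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def lateral_error(position: Point, waypoints: Sequence[Point]) -> float:
    """Distance from the vehicle to the line connecting the waypoints."""
    if len(waypoints) < 2:
        raise ValueError("need at least two waypoints")
    return min(
        distance_to_segment(position, a, b)
        for a, b in zip(waypoints, waypoints[1:])
    )


def mean_speed(speeds_xy: Sequence[Point]) -> float:
    """Average speed magnitude over the recorded steps of an episode."""
    return sum(math.hypot(vx, vy) for vx, vy in speeds_xy) / len(speeds_xy)
```

Taking the minimum over all segments keeps the measure well defined on turns, where the nearest segment changes as the vehicle passes a waypoint.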

References

1. Arulkumaran, K.; Deisenroth, M.P.; Brundage, M.; Bharath, A.A. Deep reinforcement learning: A brief survey. IEEE Signal Process. Mag. 2017, 34, 26–38.
2. Aradi, S. Survey of deep reinforcement learning for motion planning of autonomous vehicles. IEEE Trans. Intell. Transp. Syst. 2020, 23, 740–759.
3. Coraluppi, S.P.; Marcus, S.I. Risk-sensitive and minimax control of discrete-time, finite-state Markov decision processes. Automatica 1999, 35, 301–309.
4. Amodei, D.; Olah, C.; Steinhardt, J.; Christiano, P.; Schulman, J.; Mané, D. Concrete problems in AI safety. arXiv 2016, arXiv:1606.06565.
5. García, J.; Fernández, F. A comprehensive survey on safe reinforcement learning. J. Mach. Learn. Res. 2015, 16, 1437–1480.
6. Nguyen, A.; Yosinski, J.; Clune, J. Deep neural networks are easily fooled: High confidence predictions for unrecognizable images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 427–436.
7. Hendrycks, D.; Gimpel, K. A baseline for detecting misclassified and out-of-distribution examples in neural networks. arXiv 2016, arXiv:1610.02136.
8. The Tesla Team. A Tragic Loss; The Tesla Team: Austin, TX, USA, 2016.
9. Wanner, D.; Trigell, A.S.; Drugge, L.; Jerrelind, J. Survey on fault-tolerant vehicle design. World Electr. Veh. J. 2012, 5, 598–609.
10. Wood, M.; Robbel, P.; Maass, M.; Tebbens, R.D.; Meijs, M.; Harb, M.; Reach, J.; Robinson, K.; Wittmann, D.; Srivastava, T. Safety first for automated driving. Aptiv, Audi, BMW, Baidu, Continental Teves, Daimler, FCA, HERE, Infineon Technologies, Intel, Volkswagen 2019. Available online: https://group.mercedes-benz.com/documents/innovation/other/safety-first-for-automated-driving.pdf (accessed on 11 October 2024).
11. Stolte, T.; Ackermann, S.; Graubohm, R.; Jatzkowski, I.; Klamann, B.; Winner, H.; Maurer, M. Taxonomy to unify fault tolerance regimes for automotive systems: Defining fail-operational, fail-degraded, and fail-safe. IEEE Trans. Intell. Veh. 2021, 7, 251–262.
12. ISO 26262-1:2018; Road Vehicles–Functional Safety. International Organization for Standardization: Geneva, Switzerland, 2018.
13. Kain, T.; Tompits, H.; Müller, J.S.; Mundhenk, P.; Wesche, M.; Decke, H. FDIRO: A general approach for a fail-operational system design. In Proceedings of the 30th European Safety and Reliability Conference and 15th Probabilistic Safety Assessment and Management Conference, Venice, Italy, 1–5 November 2020; Research Publishing Services: Singapore, 2020.
14. Polleti, G.; Santana, M.; Del Sant, F.S.; Fontes, E. Hierarchical Fallback Architecture for High Risk Online Machine Learning Inference. arXiv 2025, arXiv:2501.17834.
15. Simonik, P.; Snasel, V.; Ojha, V.; Platoš, J.; Mrovec, T.; Klein, T.; Suganthan, P.N.; Ligori, J.J.; Gao, R.; Gruenwaldt, M. Steering Angle Estimation for Automated Driving on Approach to Analytical Redundancy for Fail-Operational Mode. 2024. Available online: https://ssrn.com/abstract=4938200 (accessed on 11 October 2024).
16. Kim, T.; Yadav, P.; Suk, H.; Kim, S. Learning unsupervised disentangled skill latents to adapt unseen task and morphological modifications. Eng. Appl. Artif. Intell. 2022, 116, 105367.
17. Thananjeyan, B.; Balakrishna, A.; Nair, S.; Luo, M.; Srinivasan, K.; Hwang, M.; Gonzalez, J.E.; Ibarz, J.; Finn, C.; Goldberg, K. Recovery rl: Safe reinforcement learning with learned recovery zones. IEEE Robot. Autom. Lett. 2021, 6, 4915–4922.
18. Gu, S.; Yang, L.; Du, Y.; Chen, G.; Walter, F.; Wang, J.; Yang, Y.; Knoll, A. A review of safe reinforcement learning: Methods, theory and applications. arXiv 2022, arXiv:2205.10330.
19. Achiam, J.; Held, D.; Tamar, A.; Abbeel, P. Constrained policy optimization. In Proceedings of the International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 22–31.
20. Kim, D.; Oh, S. TRC: Trust region conditional value at risk for safe reinforcement learning. IEEE Robot. Autom. Lett. 2022, 7, 2621–2628.
21. Geibel, P.; Wysotzki, F. Risk-sensitive reinforcement learning applied to control under constraints. J. Artif. Intell. Res. 2005, 24, 81–108.
22. Tessler, C.; Mankowitz, D.J.; Mannor, S. Reward constrained policy optimization. arXiv 2018, arXiv:1805.11074.
23. Srinivasan, K.; Eysenbach, B.; Ha, S.; Tan, J.; Finn, C. Learning to be safe: Deep rl with a safety critic. arXiv 2020, arXiv:2010.14603.
24. Chow, Y.; Nachum, O.; Duenez-Guzman, E.; Ghavamzadeh, M. A lyapunov-based approach to safe reinforcement learning. In Proceedings of the Advances in Neural Information Processing Systems, Montréal, QC, Canada, 3–8 December 2018; Volume 31.
25. Chow, Y.; Nachum, O.; Faust, A.; Duenez-Guzman, E.; Ghavamzadeh, M. Lyapunov-based safe policy optimization for continuous control. arXiv 2019, arXiv:1901.10031.
26. Lee, J.; Hwangbo, J.; Hutter, M. Robust recovery controller for a quadrupedal robot using deep reinforcement learning. arXiv 2019, arXiv:1901.07517.
27. Fisac, J.F.; Akametalu, A.K.; Zeilinger, M.N.; Kaynama, S.; Gillula, J.; Tomlin, C.J. A general safety framework for learning-based control in uncertain robotic systems. IEEE Trans. Autom. Control 2018, 64, 2737–2752.
28. Gillula, J.H.; Tomlin, C.J. Guaranteed safe online learning via reachability: Tracking a ground target using a quadrotor. In Proceedings of the 2012 IEEE International Conference on Robotics and Automation, St. Paul, MN, USA, 14–18 May 2012; pp. 2723–2730.
29. Li, S.; Bastani, O. Robust model predictive shielding for safe reinforcement learning with stochastic dynamics. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 7166–7172.
30. Garcia, C.E.; Prett, D.M.; Morari, M. Model predictive control: Theory and practice—A survey. Automatica 1989, 25, 335–348.
31. Sadigh, D.; Kapoor, A. Safe control under uncertainty with probabilistic signal temporal logic. In Proceedings of the Robotics: Science and Systems XII, Ann Arbor, MI, USA, 12–14 July 2016.
32. Berkenkamp, F.; Turchetta, M.; Schoellig, A.; Krause, A. Safe Model-based Reinforcement Learning with Stability Guarantees. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30.
33. Rosolia, U.; Borrelli, F. Learning model predictive control for iterative tasks. a data-driven control framework. IEEE Trans. Autom. Control 2017, 63, 1883–1896.
34. Bastani, O.; Li, S.; Xu, A. Safe Reinforcement Learning via Statistical Model Predictive Shielding. In Proceedings of the Robotics: Science and Systems, Virtually, 12–16 July 2021; pp. 1–13.
35. Pertsch, K.; Lee, Y.; Lim, J. Accelerating reinforcement learning with learned skill priors. In Proceedings of the Conference on Robot Learning, London, UK, 8–11 November 2021; pp. 188–204.
36. Nam, T.; Sun, S.H.; Pertsch, K.; Hwang, S.J.; Lim, J.J. Skill-based meta-reinforcement learning. arXiv 2022, arXiv:2204.11828.
37. Thrun, S.; Schwartz, A. Finding structure in reinforcement learning. In Proceedings of the Advances in Neural Information Processing Systems, Denver, CO, USA, 28 November–1 December 1994; Volume 7.
38. Pickett, M.; Barto, A.G. Policyblocks: An algorithm for creating useful macro-actions in reinforcement learning. In Proceedings of the ICML, Sydney, Australia, 8–12 July 2002; Volume 19, pp. 506–513.
39. Li, A.C.; Florensa, C.; Clavera, I.; Abbeel, P. Sub-policy adaptation for hierarchical reinforcement learning. arXiv 2019, arXiv:1906.05862.
40. Sutton, R.S.; Precup, D.; Singh, S. Between MDPs and semi-MDPs: A framework for temporal abstraction in reinforcement learning. Artif. Intell. 1999, 112, 181–211.
41. Bacon, P.L.; Harb, J.; Precup, D. The option-critic architecture. In Proceedings of the AAAI Conference on Artificial Intelligence 2017, San Francisco, CA, USA, 4–9 February 2017; Volume 31.
42. Plappert, M.; Andrychowicz, M.; Ray, A.; McGrew, B.; Baker, B.; Powell, G.; Schneider, J.; Tobin, J.; Chociej, M.; Welinder, P.; et al. Multi-goal reinforcement learning: Challenging robotics environments and request for research. arXiv 2018, arXiv:1802.09464.
43. Mandlekar, A.; Ramos, F.; Boots, B.; Savarese, S.; Fei-Fei, L.; Garg, A.; Fox, D. Iris: Implicit reinforcement without interaction at scale for learning control from offline robot manipulation data. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 4414–4420.
44. Kingma, D.P.; Welling, M. Auto-encoding variational bayes. arXiv 2013, arXiv:1312.6114.
45. Hausman, K.; Springenberg, J.T.; Wang, Z.; Heess, N.; Riedmiller, M. Learning an embedding space for transferable robot skills. In Proceedings of the International Conference on Learning Representation, Vancouver, BC, Canada, 30 April–3 May 2018.
46. Lynch, C.; Khansari, M.; Xiao, T.; Kumar, V.; Tompson, J.; Levine, S.; Sermanet, P. Learning latent plans from play. In Proceedings of the Conference on Robot Learning, Virtual, 16–18 November 2020; pp. 1113–1132.
47. Zhang, L.; Xiang, T.; Gong, S. Learning a deep embedding model for zero-shot learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2021–2030.
48. Jiang, Z.; Gao, J.; Chen, J. Unsupervised skill discovery via recurrent skill training. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 28 November–9 December 2022; Volume 35, pp. 39034–39046.
49. Park, S.; Lee, K.; Lee, Y.; Abbeel, P. Controllability-Aware Unsupervised Skill Discovery. arXiv 2023, arXiv:2302.05103.
50. Gregor, K.; Rezende, D.J.; Wierstra, D. Variational intrinsic control. arXiv 2016, arXiv:1611.07507.
51. Achiam, J.; Edwards, H.; Amodei, D.; Abbeel, P. Variational option discovery algorithms. arXiv 2018, arXiv:1807.10299.
52. Eysenbach, B.; Gupta, A.; Ibarz, J.; Levine, S. Diversity is all you need: Learning skills without a reward function. arXiv 2018, arXiv:1802.06070.
53. Kim, J.; Park, S.; Kim, G. Unsupervised skill discovery with bottleneck option learning. arXiv 2021, arXiv:2106.14305.
54. Sharma, A.; Gu, S.; Levine, S.; Kumar, V.; Hausman, K. Dynamics-aware unsupervised discovery of skills. arXiv 2019, arXiv:1907.01657.
55. Park, S.; Choi, J.; Kim, J.; Lee, H.; Kim, G. Lipschitz-constrained unsupervised skill discovery. In Proceedings of the International Conference on Learning Representations, Virtual, 25–29 April 2022.
56. Warde-Farley, D.; de Wiele, T.; Kulkarni, T.; Ionescu, C.; Hansen, S.; Mnih, V. Unsupervised control through non-parametric discriminative rewards. arXiv 2018, arXiv:1811.11359.
57. Pitis, S.; Chan, H.; Zhao, S.; Stadie, B.; Ba, J. Maximum entropy gain exploration for long horizon multi-goal reinforcement learning. In Proceedings of the 37th International Conference on Machine Learning, Virtual, 13–18 July 2020; pp. 7750–7761.
58. Kim, H.; Kim, J.; Jeong, Y.; Levine, S.; Song, H.O. Emi: Exploration with mutual information. arXiv 2018, arXiv:1810.01176.
59. Alemi, A.A.; Fischer, I.; Dillon, J.V.; Murphy, K. Deep variational information bottleneck. arXiv 2016, arXiv:1612.00410.
60. Dosovitskiy, A.; Ros, G.; Codevilla, F.; Lopez, A.; Koltun, V. CARLA: An Open Urban Driving Simulator. In Proceedings of the 1st Annual Conference on Robot Learning, Mountain View, CA, USA, 13–15 November 2017; pp. 1–16.
61. Haarnoja, T.; Zhou, A.; Abbeel, P.; Levine, S. Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 1861–1870.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).