Turn-Taking Modelling in Conversational Systems: A Review of Recent Advances

Abstract

1. Introduction

- What signals are involved in the coordination of turn-taking in dialogue?

- How can the system identify appropriate places and times to generate a backchannel?

- How can real-time turn-taking be optimised to adapt to human–agent interaction scenarios and evaluated through a user study involving real-world interactions?

- The handling of multi-party and situated interactions, including scenarios with multiple potential addressees or the manipulation of physical objects, will also be addressed.

- Key Terms

- Transition-Relevance Place (TRP) refers to a moment in conversation where a speaker change can appropriately occur.

- Predictive turn-taking describes the use of cues that precede a TRP to anticipate turn completion and plan response timing.

- Voice Activity Projection (VAP) denotes models that forecast near-future speech activity to coordinate responses or backchannels in real time.

2. Background

Speaker A: “Hey, did you hear about the concert next weekend?”

Speaker B: “Oh, yeah! I got my tickets yesterday.”

Speaker C: “Same here, can’t wait!”

(Later in the conversation)

Speaker A: “So, this reminds me of when I attended my first concert years ago. It was raining, and we were waiting outside for hours…” (Speaker A continues narrating uninterrupted for several minutes while others listen attentively.)

2.1. Temporal Dynamics in Turn-Taking

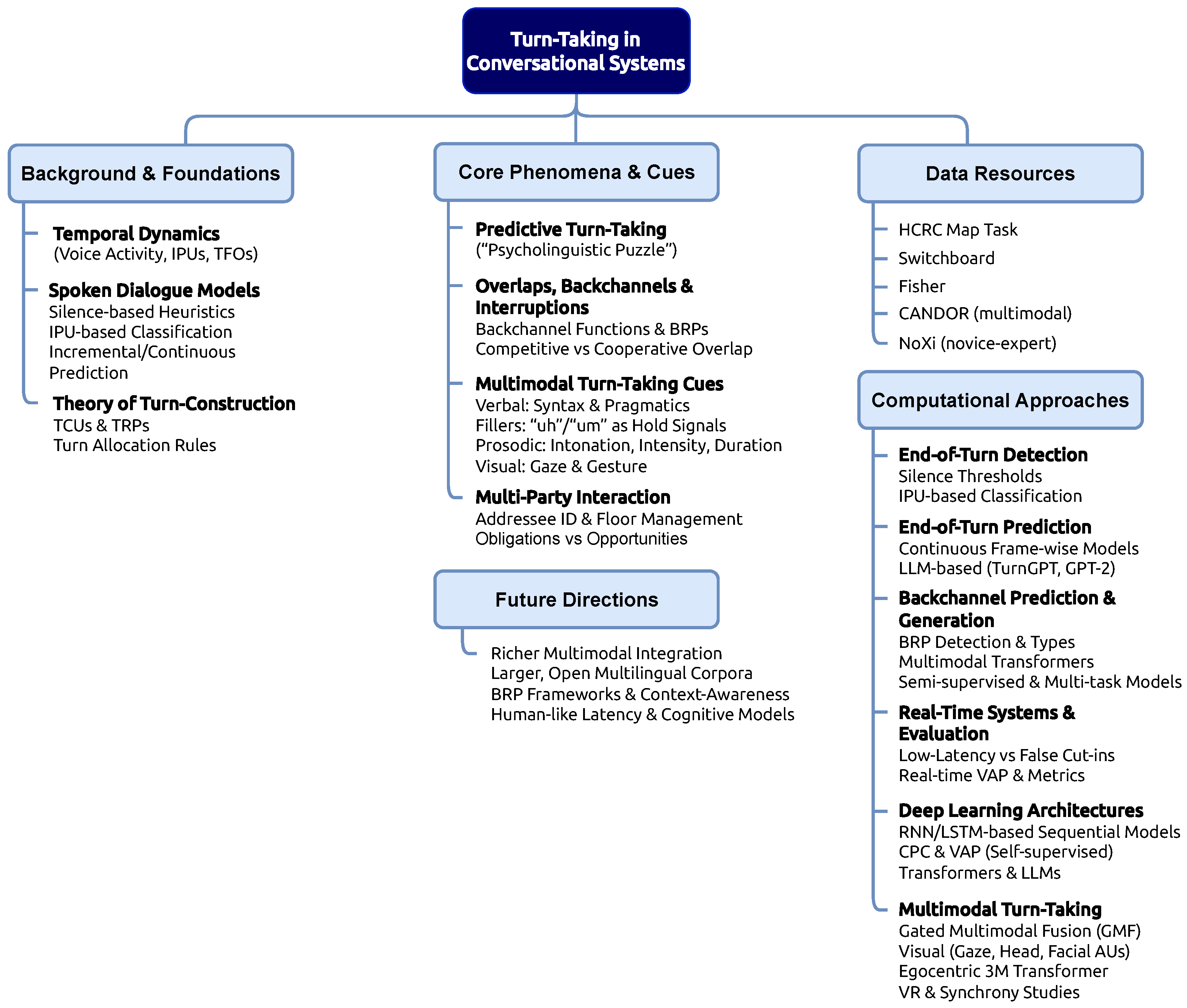

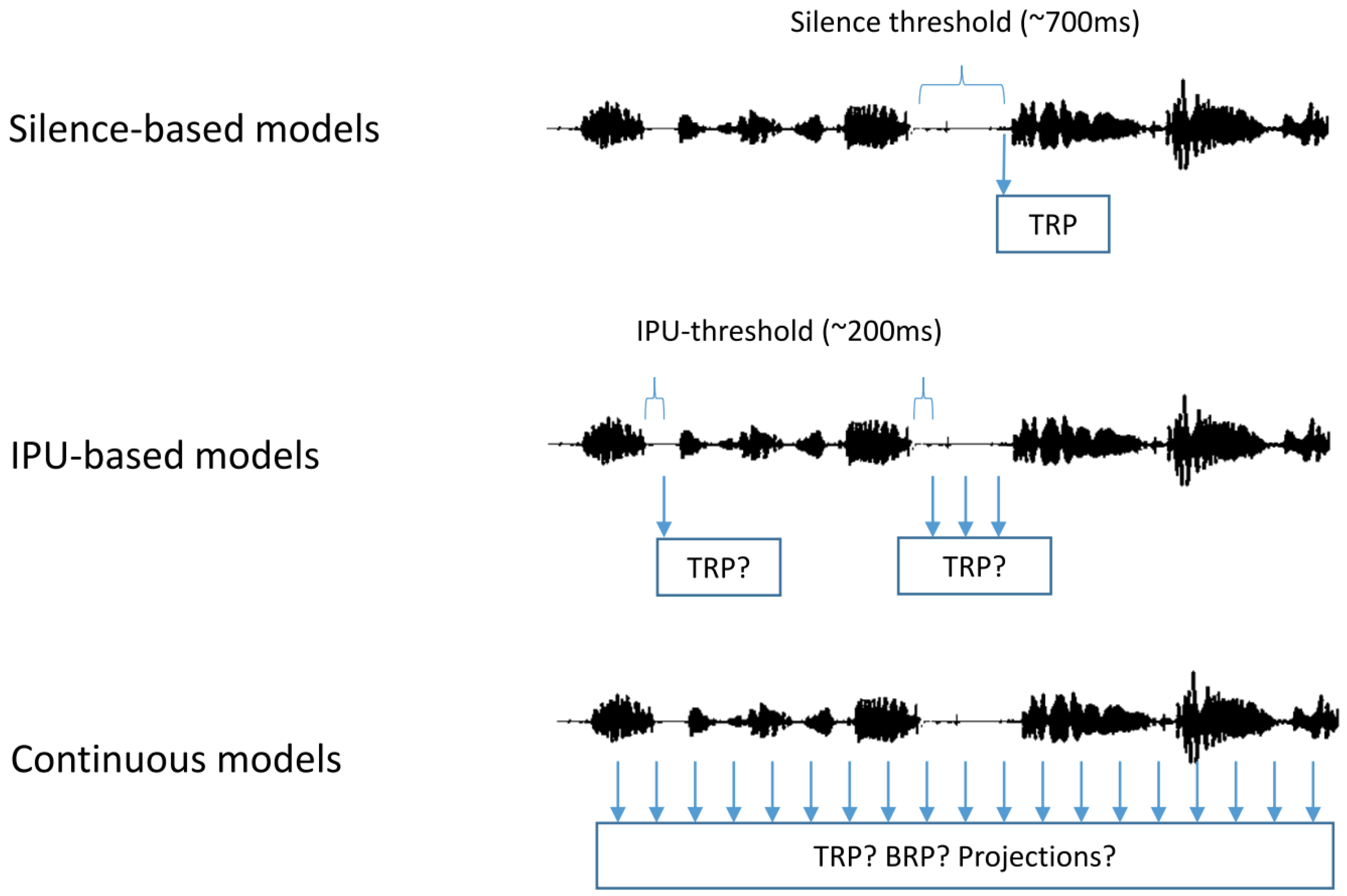

2.2. Implementing Turn-Taking in Spoken Dialogue Systems

2.3. Predictive Turn-Taking

2.4. Overlaps, Backchannels and Interruptions

2.5. Multi-Party Interaction

Obligations vs. Opportunities in Multi-Party Turn-Taking

2.6. Datasets

2.6.1. HCRC Map Task Corpus

2.6.2. Switchboard Corpus

2.6.3. Fisher Corpus

2.6.4. CANDOR Corpus

2.6.5. NoXi Database

3. Analysing Dialogue Content: Cues for Effective Turn-Taking

3.1. Text and Verbal Cues: Syntax, Semantics, and Pragmatics

3.1.1. Syntactic & Pragmatic Completeness

- A: I booked/the tickets/

- B: Oh/for/which event/

- A: The concert/

3.1.2. Projectability and Turn Coordination

3.2. Fillers or Filled Pauses

3.3. Speech and the Acoustic Domain

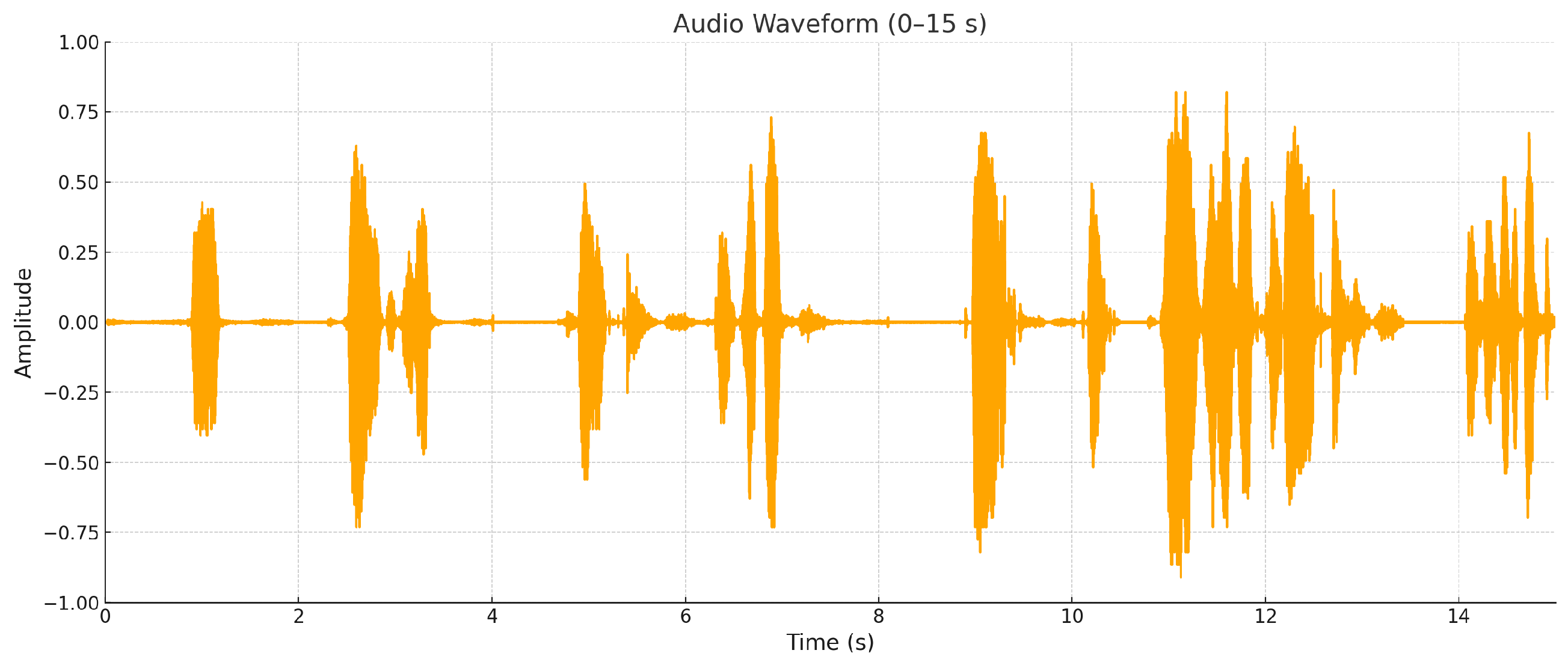

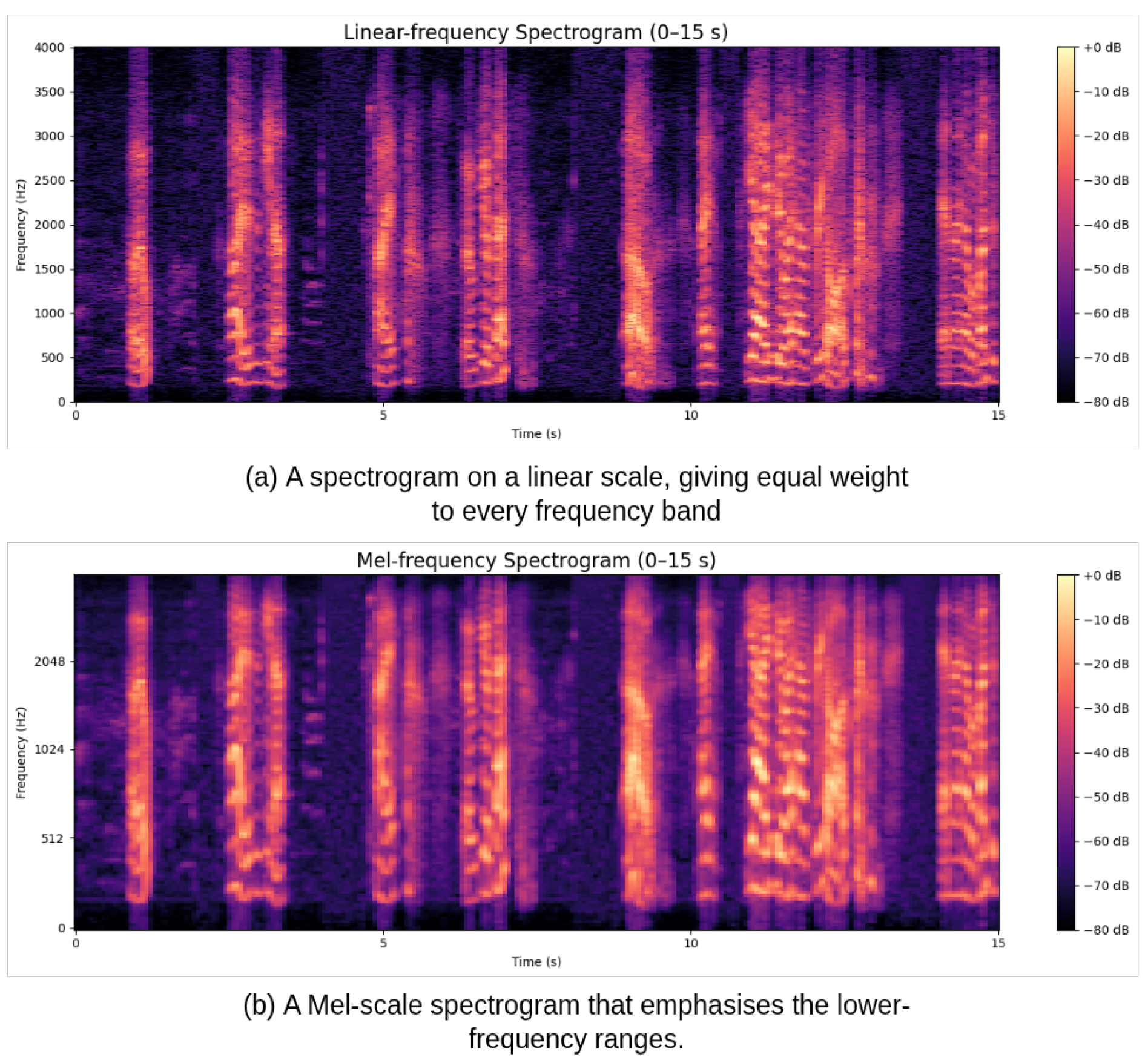

Spectrogram and Mel-Spectrogram

3.4. Prosody

3.4.1. Intonation and Turn-Taking

3.4.2. Intensity and Turn Regulation

3.4.3. Speech Rate and Duration as Turn-Taking Cues

3.4.4. Prosody in Computational Models of Turn-Taking

3.5. Visual Cues

3.5.1. Gaze

3.5.2. Gesture

- Hand Gestures and Predictive Turn-taking

- Gesture Timing and Turn Coordination

4. Computational Models for Natural Turn-Taking in Human–Robot Interaction

4.1. Turn-Taking Detection and Prediction Models

4.1.1. End-of-Turn Detection

4.1.2. End-of-Turn Prediction

4.2. Backchannel Prediction and Generation

4.3. Real-Time Turn-Taking Systems

4.4. Modelling Turn-Taking in Multi-Party Interaction

4.5. Multimodal Turn-Taking Approaches

5. Deep Learning (Network Architectures) for Turn-Taking Prediction

5.1. Contrastive Predictive Coding

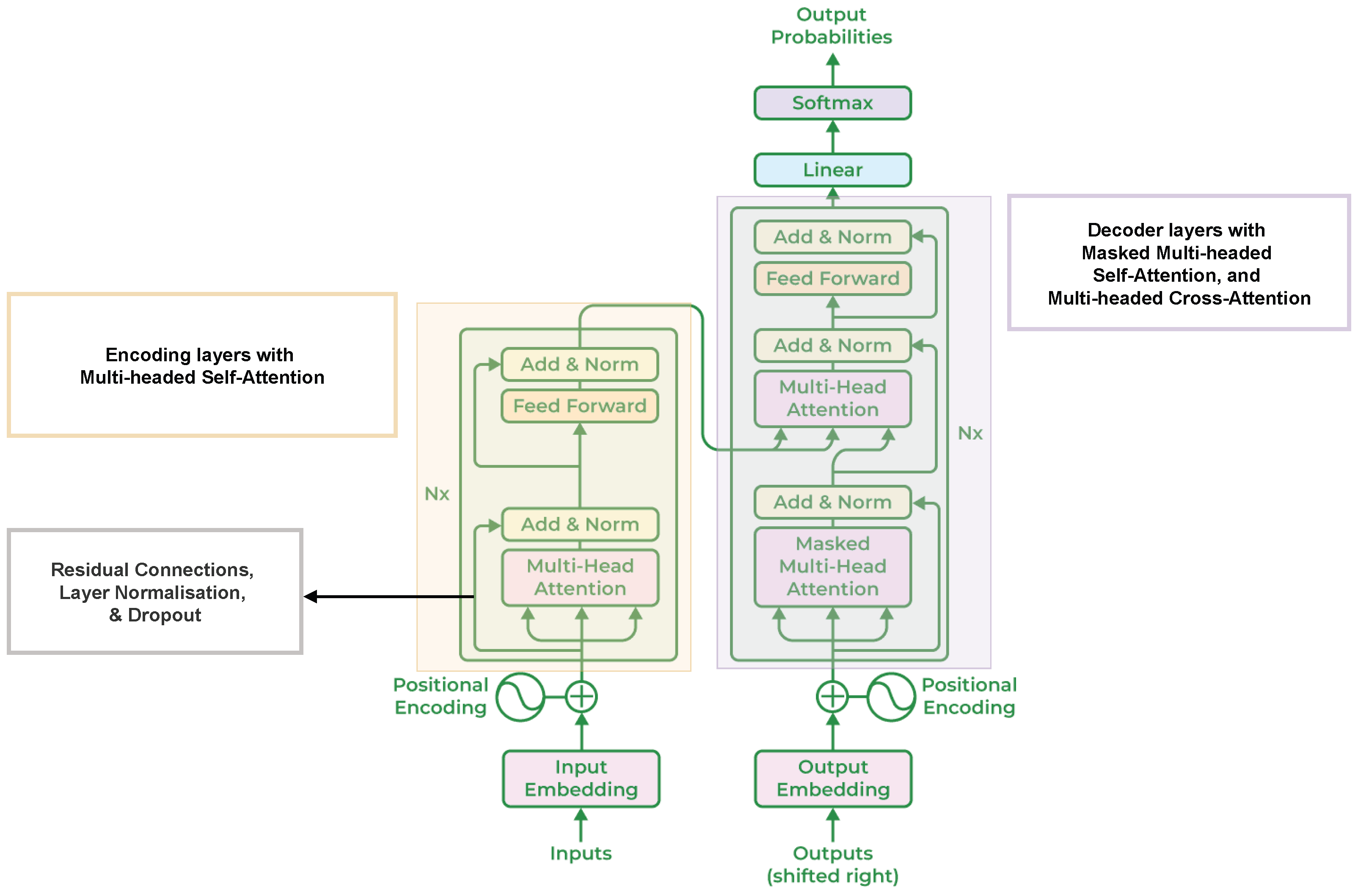

5.2. Transformers

5.3. Input Features and Data Flow

6. Conclusions and Future Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Moore, R.K. A comparison of the data requirements of automatic speech recognition systems and human listeners. In Proceedings of the 8th European Conference on Speech Communication and Technology (Eurospeech 2003), Geneva, Switzerland, 1–4 September 2003; pp. 2581–2584. [Google Scholar] [CrossRef]

- Mehl, M.R.; Vazire, S.; Ramírez-Esparza, N.; Slatcher, R.B.; Pennebaker, J.W. Are Women Really More Talkative Than Men? Science 2007, 317, 82. [Google Scholar] [CrossRef] [PubMed]

- Abreu, F.; Pika, S. Turn-taking skills in mammals: A systematic review into development and acquisition. Front. Ecol. Evol. 2022, 10, 987253. [Google Scholar] [CrossRef]

- Cartmill, E.A. Overcoming bias in the comparison of human language and animal communication. Proc. Natl. Acad. Sci. USA 2023, 120, e2218799120. [Google Scholar] [CrossRef]

- Nguyen, T.; Zimmer, L.; Hoehl, S. Your turn, my turn. Neural synchrony in mother-infant proto-conversation. Philos. Trans. R. Soc. Biol. Sci. 2022, 378, 20210488. [Google Scholar] [CrossRef]

- Endevelt-Shapira, Y.; Bosseler, A.N.; Mizrahi, J.C.; Meltzoff, A.N.; Kuhl, P.K. Mother-infant social and language interactions at 3 months are associated with infants’ productive language development in the third year of life. Infant Behav. Dev. 2024, 75, 101929. [Google Scholar] [CrossRef]

- Heldner, M.; Edlund, J. Pauses, gaps and overlaps in conversations. J. Phon. 2010, 38, 555–568. [Google Scholar] [CrossRef]

- Sacks, H.; Schegloff, E.A.; Jefferson, G.D. A simplest systematics for the organization of turn-taking for conversation. Language 1974, 50, 696–735. [Google Scholar] [CrossRef]

- Skantze, G. Turn-taking in Conversational Systems and Human-Robot Interaction: A Review. Comput. Speech Lang. 2021, 67, 101178. [Google Scholar] [CrossRef]

- Levinson, S.C.; Torreira, F. Timing in turn-taking and its implications for processing models of language. Front. Psychol. 2015, 6, 731. [Google Scholar] [CrossRef] [PubMed]

- Garrod, S.; Pickering, M.J. The use of content and timing to predict turn transitions. Front. Psychol. 2015, 6, 751. [Google Scholar] [CrossRef] [PubMed]

- Achiam, O.J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; Anadkat, S.; et al. GPT-4 Technical Report. arXiv 2024, arXiv:2303.08774. [Google Scholar]

- Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. LLaMA: Open and Efficient Foundation Language Models. arXiv 2023, arXiv:2302.13971. [Google Scholar] [CrossRef]

- Holler, J.; Kendrick, K.H.; Casillas, M.; Levinson, S.C. Turn-Taking in Human Communicative Interaction; Frontiers Media SA: Lausanne, Switzerland, 2016. [Google Scholar]

- Levinson, S.C. Turn-taking in Human Communication—Origins and Implications for Language Processing. Trends Cogn. Sci. 2016, 20, 6–14. [Google Scholar] [CrossRef]

- Pika, S.; Wilkinson, R.; Kendrick, K.H.; Vernes, S.C. Taking turns: Bridging the gap between human and animal communication. Proc. R. Soc. Biol. Sci. 2018, 285, 20180598. [Google Scholar] [CrossRef]

- Ford, C.E.; Thompson, S.A. Interactional Units in Conversation: Syntactic, Intonational, and Pragmatic Resources for the Management of Turns. In Interaction And Grammar; Cambridge University Press: Cambridge, UK, 1996; pp. 134–184. [Google Scholar]

- Local, J.; Walker, G. How phonetic features project more talk. J. Int. Phon. Assoc. 2012, 42, 255–280. [Google Scholar] [CrossRef]

- Bögels, S.; Torreira, F. Listeners use intonational phrase boundaries to project turn ends in spoken interaction. J. Phon. 2015, 52, 46–57. [Google Scholar] [CrossRef]

- Bögels, S.; Torreira, F. Turn-end Estimation in Conversational Turn-taking: The Roles of Context and Prosody. Discourse Processes 2021, 58, 903–924. [Google Scholar] [CrossRef]

- Magyari, L.; de Ruiter, J.P. Prediction of Turn-Ends Based on Anticipation of Upcoming Words. Front. Psychol. 2012, 3, 376. [Google Scholar] [CrossRef]

- Riest, C.; Jorschick, A.B.; de Ruiter, J.P. Anticipation in turn-taking: Mechanisms and information sources. Front. Psychol. 2015, 6, 89. [Google Scholar] [CrossRef] [PubMed]

- Selting, M. The construction of units in conversational talk. Lang. Soc. 2000, 29, 477–517. [Google Scholar] [CrossRef]

- de Ruiter, J.P.; Mitterer, H.; Enfield, N.J. Projecting the End of a Speaker’s Turn: A Cognitive Cornerstone of Conversation. Language 2006, 82, 515–535. [Google Scholar] [CrossRef]

- Goodwin, C.; Heritage, J. Conversation analysis. Annu. Rev. Anthropol. 1990, 19, 283–307. [Google Scholar] [CrossRef]

- Edmondson, W. Spoken Discourse: A Model for Analysis; A Longman paperback; Longman: London, UK, 1981. [Google Scholar]

- Brady, P.T. A technique for investigating on-off patterns of speech. Bell Syst. Tech. J. 1965, 44, 1–22. [Google Scholar] [CrossRef]

- Welford, W.; Welford, A.; Brebner, J.; Brebner, J.; Kirby, N. Reaction Times; Academic Press: Cambridge, MA, USA, 1980. [Google Scholar]

- Wilson, M.; Wilson, T.P. An oscillator model of the timing of turn-taking. Psychon. Bull. Rev. 2005, 12, 957–968. [Google Scholar] [CrossRef]

- Stivers, T.; Enfield, N.J.; Brown, P.; Englert, C.; Hayashi, M.; Heinemann, T.; Hoymann, G.; Rossano, F.; Ruiter, J.P.D.; Yoon, K.E.; et al. Universals and cultural variation in turn-taking in conversation. Proc. Natl. Acad. Sci. USA 2009, 106, 10587–10592. [Google Scholar] [CrossRef] [PubMed]

- Pouw, W.; Holler, J. Timing in conversation is dynamically adjusted turn by turn in dyadic telephone conversations. Cognition 2022, 222, 105015. [Google Scholar] [CrossRef] [PubMed]

- Ferrer, L.; Shriberg, E.; Stolcke, A. Is the speaker done yet? faster and more accurate end-of-utterance detection using prosody. In Proceedings of the Interspeech, Denver, CO, USA, 16–20 September 2002. [Google Scholar]

- Ward, N.G. Prosodic Patterns in English Conversation; Cambridge University Press: Cambridge, UK, 2019. [Google Scholar]

- Barthel, M.; Meyer, A.S.; Levinson, S.C. Next Speakers Plan Their Turn Early and Speak after Turn-Final “Go-Signals”. Front. Psychol. 2017, 8, 393. [Google Scholar] [CrossRef]

- Pickering, M.J.; Garrod, S. An integrated theory of language production and comprehension. Behav. Brain Sci. 2013, 36, 329–347. [Google Scholar] [CrossRef]

- Holler, J.; Kendrick, K.H. Unaddressed participants’ gaze in multi-person interaction: Optimizing recipiency. Front. Psychol. 2015, 6, 98. [Google Scholar] [CrossRef]

- Yngve, V.H. On getting a word in edgewise. In Papers from the Sixth Regional Meeting Chicago Linguistic Society, April 16–18, 1970; Chicago Linguistic Society; Department of Linguistics, University of Chicago: Chicago, IL, USA, 1970; pp. 567–578. [Google Scholar]

- Clark, H.H. Using Language; “Using” Linguistic Books; Cambridge University Press: Cambridge, UK, 1996. [Google Scholar]

- Knudsen, B.; Creemers, A.; Meyer, A.S. Forgotten Little Words: How Backchannels and Particles May Facilitate Speech Planning in Conversation? Front. Psychol. 2020, 11, 593671. [Google Scholar] [CrossRef] [PubMed]

- Coates, J. No gap, lots of overlap: Turn-taking patterns in the talk of women friends. In Researching Language and Literacy in Social Context: A Reader; Multilingual Matters; Multilingual Matters Ltd.: Bristol, UK, 1994; pp. 177–192. [Google Scholar]

- Schegloff, E.A. Overlapping talk and the organization of turn-taking for conversation. Lang. Soc. 2000, 29, 1–63. [Google Scholar] [CrossRef]

- Poesio, M.; Rieser, H. Completions, Coordination, and Alignment in Dialogue. Dialogue Discourse 2010, 1, 1–89. [Google Scholar] [CrossRef]

- French, P.; Local, J. Turn-competitive incomings. J. Pragmat. 1983, 7, 17–38. [Google Scholar] [CrossRef]

- Bennett, A. Interruptions and the interpretation of conversation. In Proceedings of the Annual Meeting of the Berkeley Linguistics Society, Berkeley, CA, USA, 18–20 February 1978; pp. 557–575. [Google Scholar]

- Gravano, A.; Hirschberg, J. A Corpus-Based Study of Interruptions in Spoken Dialogue. In Proceedings of the Interspeech, Portland, OR, USA, 9–13 September 2012. [Google Scholar]

- Heldner, M.; Hjalmarsson, A.; Edlund, J. Backchannel relevance spaces. In Proceedings of the Nordic Prosody XI, Tartu, Estonia, 15–17 August 2012; Peter Lang Publishing Group: Lausanne, Switzerland, 2013; pp. 137–146. [Google Scholar]

- Ward, N.G. Using prosodic clues to decide when to produce back-channel utterances. In Proceedings of the Fourth International Conference on Spoken Language Processing, ICSLP ’96, Philadelphia, PA, USA, 3–6 October 1996; Volume 3, pp. 1728–1731. [Google Scholar]

- Gravano, A.; Hirschberg, J. Turn-taking cues in task-oriented dialogue. Comput. Speech Lang. 2011, 25, 601–634. [Google Scholar] [CrossRef]

- Kurata, F.; Saeki, M.; Fujie, S.; Matsuyama, Y. Multimodal Turn-Taking Model Using Visual Cues for End-of-Utterance Prediction in Spoken Dialogue Systems. In Proceedings of the Interspeech, Dublin, Ireland, 20–24 August 2023. [Google Scholar]

- Kendrick, K.H.; Holler, J.; Levinson, S.C. Turn-taking in human face-to-face interaction is multimodal: Gaze direction and manual gestures aid the coordination of turn transitions. Philos. Trans. R. Soc. 2023, 378, 20210473. [Google Scholar] [CrossRef]

- Onishi, K.; Tanaka, H.; Nakamura, S. Multimodal Voice Activity Prediction: Turn-taking Events Detection in Expert-Novice Conversation. In Proceedings of the 11th International Conference on Human-Agent Interaction, Gothenburg, Sweden, 4–7 December 2023. [Google Scholar]

- Lai, C. What do you mean, you’re uncertain?: The interpretation of cue words and rising intonation in dialogue. In Proceedings of the Interspeech, Chiba, Japan, 26–30 September 2010. [Google Scholar]

- Gravano, A.; Benus, S.; Chavez, H.; Hirschberg, J.; Wilcox, L. On the role of context and prosody in the interpretation of ‘okay’. In Proceedings of the Annual Meeting of the Association for Computational Linguistics, Prague, Czech Republic, 23–30 June 2007. [Google Scholar]

- Bavelas, J.B.; Coates, L.; Johnson, T. Listener Responses as a Collaborative Process: The Role of Gaze. J. Commun. 2002, 52, 566–580. [Google Scholar] [CrossRef]

- Wang, K.; Cheung, M.M.; Zhang, Y.; Yang, C.; Chen, P.Q.; Fu, E.Y.; Ngai, G. Unveiling Subtle Cues: Backchannel Detection Using Temporal Multimodal Attention Networks. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023. [Google Scholar]

- Johansson, M.; Skantze, G. Opportunities and Obligations to Take Turns in Collaborative Multi-Party Human-Robot Interaction. In Proceedings of the SIGDIAL Conference, Prague, Czech Republic, 2–4 September 2015. [Google Scholar]

- Skantze, G.; Johansson, M.; Beskow, J. Exploring Turn-taking Cues in Multi-party Human-robot Discussions about Objects. In Proceedings of the 2015 ACM on International Conference on Multimodal Interaction, Seattle, WA, USA, 9–13 November 2015; pp. 67–74. [Google Scholar] [CrossRef]

- Traum, D.R.; Rickel, J. Embodied agents for multi-party dialogue in immersive virtual worlds. In Proceedings of the Adaptive Agents and Multi-Agent Systems, Bologna, Italy, 15–19 July 2002. [Google Scholar]

- Vertegaal, R.; Slagter, R.J.; van der Veer, G.C.; Nijholt, A. Eye gaze patterns in conversations: There is more to conversational agents than meets the eyes. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Seattle, WA, USA, 31 March–5 April 2001. [Google Scholar]

- Katzenmaier, M.; Stiefelhagen, R.; Schultz, T. Identifying the addressee in human-human-robot interactions based on head pose and speech. In Proceedings of the 6th International Conference on Multimodal Interfaces, State College, PA, USA, 13–15 October 2004; pp. 144–151. [Google Scholar]

- Ba, S.O.; Odobez, J.M. Recognizing Visual Focus of Attention From Head Pose in Natural Meetings. IEEE Trans. Syst. Man, Cybern. Part (Cybern.) 2009, 39, 16–33. [Google Scholar] [CrossRef]

- Stiefelhagen, R.; Zhu, J. Head orientation and gaze direction in meetings. In Proceedings of the CHI ’02 Extended Abstracts on Human Factors in Computing Systems, Minneapolis, MN, USA, 20–25 April 2002. [Google Scholar]

- Anderson, A.H.; Bader, M.; Bard, E.G.; Boyle, E.; Doherty, G.; Garrod, S.; Isard, S.D.; Kowtko, J.C.; McAllister, J.; Miller, J.; et al. The Hcrc Map Task Corpus. Lang. Speech 1991, 34, 351–366. [Google Scholar] [CrossRef]

- Godfrey, J.; Holliman, E.; McDaniel, J. SWITCHBOARD: Telephone speech corpus for research and development. In Proceedings of the [Proceedings] ICASSP-92: 1992 IEEE International Conference on Acoustics, Speech, and Signal Processing, San Francisco, CA, USA, 23–26 March 1992; Volume 1, pp. 517–520. [Google Scholar] [CrossRef]

- Cieri, C.; Miller, D.; Walker, K. The Fisher Corpus: A Resource for the Next Generations of Speech-to-Text. In Proceedings of the International Conference on Language Resources and Evaluation, Lisbon, Portugal, 26–28 May 2004. [Google Scholar]

- Reece, A.; Cooney, G.; Bull, P.; Chung, C.; Dawson, B.; Fitzpatrick, C.A.; Glazer, T.; Knox, D.; Liebscher, A.; Marin, S. The CANDOR corpus: Insights from a large multimodal dataset of naturalistic conversation. Sci. Adv. 2023, 9, eadf3197. [Google Scholar] [CrossRef]

- Cafaro, A.; Wagner, J.; Baur, T.; Dermouche, S.; Torres, M.T.; Pelachaud, C.; André, E.; Valstar, M.F. The NoXi database: Multimodal recordings of mediated novice-expert interactions. In Proceedings of the 19th ACM International Conference on Multimodal Interaction, Glasgow, UK, 13–17 November 2017. [Google Scholar]

- Duncan, S.; Niederehe, G. On signalling that it’s your turn to speak. J. Exp. Soc. Psychol. 1974, 10, 234–247. [Google Scholar] [CrossRef]

- Razavi, S.Z.; Kane, B.; Schubert, L.K. Investigating Linguistic and Semantic Features for Turn-Taking Prediction in Open-Domain Human-Computer Conversation. In Proceedings of the Interspeech, Graz, Austria, 15–19 September 2019. [Google Scholar]

- Skantze, G. Towards a General, Continuous Model of Turn-taking in Spoken Dialogue using LSTM Recurrent Neural Networks. In Proceedings of the 18th Annual SIGdial Meeting on Discourse and Dialogue; Jokinen, K., Stede, M., DeVault, D., Louis, A., Eds.; Association for Computational Linguistics: Saarbrücken, Germany, 2017; pp. 220–230. [Google Scholar] [CrossRef]

- Ekstedt, E.; Skantze, G. TurnGPT: A Transformer-based Language Model for Predicting Turn-taking in Spoken Dialog. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2020, Online, 16–20 November 2020; Cohn, T., He, Y., Liu, Y., Eds.; pp. 2981–2990. [Google Scholar] [CrossRef]

- da Silva Morais, E.; Damasceno, M.; Aronowitz, H.; Satt, A.; Hoory, R. Modeling Turn-Taking in Human-To-Human Spoken Dialogue Datasets Using Self-Supervised Features. In Proceedings of the ICASSP 2023–2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5. [Google Scholar]

- Jung, D.; Cho, Y.S. Automatic Conversation Turn-Taking Segmentation in Semantic Facet. In Proceedings of the 2023 International Conference on Electronics, Information, and Communication (ICEIC), Singapore, 5–8 February 2023; pp. 1–4. [Google Scholar]

- Tree, J.E.F. Interpreting Pauses and Ums at Turn Exchanges. Discourse Process. 2002, 34, 37–55. [Google Scholar] [CrossRef]

- Rose, R.L. Um and uh as differential delay markers: The role of contextual factors. In Proceedings of the Disfluency in Spontaneous Speech (DiSS), the 7th Workshop on Disfluency in Spontaneous Speech, Edinburgh, UK, 8–9 August 2015; pp. 73–76. [Google Scholar]

- Rose, R.L. Filled Pauses in Language Teaching: Why and How. Ph.D. Thesis, Waseda University, Shinjuku, Japan, 2008. [Google Scholar]

- Clark, H.H.; Tree, J.E.F. Using uh and um in spontaneous speaking. Cognition 2002, 84, 73–111. [Google Scholar] [CrossRef] [PubMed]

- O’connell, D.C.; Kowal, S.H. Uh and Um Revisited: Are They Interjections for Signaling Delay? J. Psycholinguist. Res. 2005, 34, 555–576. [Google Scholar] [CrossRef]

- Kirjavainen, M.; Crible, L.; Beeching, K. Can filled pauses be represented as linguistic items? Investigating the effect of exposure on the perception and production of um. Lang. Speech 2021, 65, 263–289. [Google Scholar] [CrossRef]

- Corley, M.; Stewart, O.W. Hesitation Disfluencies in Spontaneous Speech: The Meaning of um. Lang. Linguist. Compass 2008, 2, 589–602. [Google Scholar] [CrossRef]

- Greenwood, D.D. The Mel Scale’s disqualifying bias and a consistency of pitch-difference equisections in 1956 with equal cochlear distances and equal frequency ratios. Hear. Res. 1997, 103, 199–224. [Google Scholar] [CrossRef]

- Local, J.; Kelly, J.D.; Wells, W.H. Towards a phonology of conversation: Turn-taking in Tyneside English. J. Linguist. 1986, 22, 411–437. [Google Scholar] [CrossRef]

- Koiso, H.; Horiuchi, Y.; Tutiya, S.; Ichikawa, A.; Den, Y. An Analysis of Turn-Taking and Backchannels Based on Prosodic and Syntactic Features in Japanese Map Task Dialogs. Lang. Speech 1998, 41, 295–321. [Google Scholar] [CrossRef]

- Duncan, S. Some Signals and Rules for Taking Speaking Turns in Conversations. J. Personal. Soc. Psychol. 1972, 23, 283–292. [Google Scholar] [CrossRef]

- Edlund, J.; Heldner, M. Exploring Prosody in Interaction Control. Phonetica 2005, 62, 215–226. [Google Scholar] [CrossRef]

- Hjalmarsson, A. The additive effect of turn-taking cues in human and synthetic voice. Speech Commun. 2011, 53, 23–35. [Google Scholar] [CrossRef]

- Selting, M. On the Interplay of Syntax and Prosody in the Constitution of Turn-Constructional Units and Turns in Conversation. Pragmatics 1996, 6, 371–388. [Google Scholar] [CrossRef]

- Boersma, P. Praat: Doing Phonetics by Computer [Computer Program]. 2011. Available online: http://www.praat.org/ (accessed on 17 March 2025).

- Kendon, A. Some functions of gaze-direction in social interaction. Acta Psychol. 1967, 26 1, 22–63. [Google Scholar] [CrossRef]

- Jokinen, K.; Nishida, M.; Yamamoto, S. On eye-gaze and turn-taking. In Proceedings of the EGIHMI ’10, Hong Kong, China, 7 February 2010. [Google Scholar]

- Oertel, C.; Wlodarczak, M.; Edlund, J.; Wagner, P.; Gustafson, J. Gaze Patterns in Turn-Taking. In Proceedings of the Interspeech, Portland, OR, USA, 9–13 September 2012. [Google Scholar]

- Vertegaal, R.; Weevers, I.; Sohn, C.; Cheung, C. GAZE-2: Conveying eye contact in group video conferencing using eye-controlled camera direction. In Proceedings of the International Conference on Human Factors in Computing Systems, Ft. Lauderdale, FL, USA, 5–10 April 2003. [Google Scholar]

- Nakano, Y.I.; Nishida, T. Attentional Behaviors as Nonverbal Communicative Signals in Situated Interactions with Conversational Agents. In Conversational Informatics: An Engineering Approach; Wiley Online Library: Hoboken, NJ, USA, 2007; pp. 85–102. [Google Scholar]

- Lee, J.; Marsella, S.; Traum, D.R.; Gratch, J.; Lance, B. The Rickel Gaze Model: A Window on the Mind of a Virtual Human. In Proceedings of the International Conference on Intelligent Virtual Agents, Paris, France, 17–19 September 2007; Springer: Berlin/Heidelberg, Germany, 2007. [Google Scholar]

- Zellers, M.; House, D.; Alexanderson, S. Prosody and hand gesture at turn boundaries in Swedish. Speech Prosody 2016, 2016, 831–835. [Google Scholar]

- Holler, J.; Kendrick, K.H.; Levinson, S.C. Processing language in face-to-face conversation: Questions with gestures get faster responses. Psychon. Bull. Rev. 2017, 25, 1900–1908. [Google Scholar] [CrossRef] [PubMed]

- Ter Bekke, M.; Drijvers, L.; Holler, J. The predictive potential of hand gestures during conversation: An investigation of the timing of gestures in relation to speech. In Proceedings of the Gesture and Speech in Interaction Conference; KTH Royal Institute of Technology: Stockholm, Sweden, 2020. [Google Scholar]

- Guntakandla, N.; Nielsen, R.D. Modelling Turn-Taking in Human Conversations. In Proceedings of the AAAI Spring Symposia, Palo Alto, CA, USA, 23–25 March 2015. [Google Scholar]

- Johansson, M.; Hori, T.; Skantze, G.; Höthker, A.; Gustafson, J. Making Turn-Taking Decisions for an Active Listening Robot for Memory Training. In Proceedings of the International Conference on Software Reuse, Limassol, Cyprus, 5–7 June 2016. [Google Scholar]

- Maier, A.; Hough, J.; Schlangen, D. Towards Deep End-of-Turn Prediction for Situated Spoken Dialogue Systems. In Proceedings of the Interspeech, Stockholm, Sweden, 20–24 August 2017. [Google Scholar]

- Masumura, R.; Tanaka, T.; Ando, A.; Ishii, R.; Higashinaka, R.; Aono, Y. Neural Dialogue Context Online End-of-Turn Detection. In Proceedings of the SIGDIAL Conference, Melbourne, Australia, 12–14 July 2018. [Google Scholar]

- Lala, D.; Inoue, K.; Kawahara, T. Smooth Turn-taking by a Robot Using an Online Continuous Model to Generate Turn-taking Cues. In Proceedings of the International Conference on Multimodal Interaction, ICMI 2019, Suzhou, China, 14–18 October 2019; pp. 226–234. [Google Scholar] [CrossRef]

- Meena, R.; Skantze, G.; Gustafson, J. Data-driven models for timing feedback responses in a Map Task dialogue system. Comput. Speech Lang. 2014, 28, 903–922. [Google Scholar] [CrossRef]

- yiin Chang, S.; Li, B.; Sainath, T.N.; Zhang, C.; Strohman, T.; Liang, Q.; He, Y. Turn-Taking Prediction for Natural Conversational Speech. arXiv 2022, arXiv:2208.13321. [Google Scholar] [CrossRef]

- Liu, C.; Ishi, C.T.; Ishiguro, H. A Neural Turn-Taking Model without RNN. In Proceedings of the Interspeech, Graz, Austria, 15–19 September 2019. [Google Scholar]

- Aylett, M.P.; Carmantini, A.; Pidcock, C.; Nichols, E.; Gomez, R.; Siskind, S.R. Haru He’s Here to Help!: A Demonstration of Implementing Comedic Rapid Turn-taking for a Social Robot. In Proceedings of the Companion of the 2023 ACM/IEEE International Conference on Human-Robot Interaction, Stockholm, Sweden, 13–16 March 2023. [Google Scholar]

- DeVault, D.; Sagae, K.; Traum, D.R. Can I Finish? Learning When to Respond to Incremental Interpretation Results in Interactive Dialogue. In Proceedings of the SIGDIAL Conference, London, UK, 11–12 September 2009. [Google Scholar]

- Schlangen, D. From Reaction To Prediction Experiments with Computational Models of Turn-Taking. In Proceedings of the INTERSPEECH 2006—ICSLP, Ninth International Conference on Spoken Language Processing, Pittsburgh, PA, USA, 17–21 September 2006. [Google Scholar]

- Ward, N.G.; DeVault, D. Ten Challenges in Highly-Interactive Dialog System. In Proceedings of the AAAI Spring Symposia, Palo Alto, CA, USA, 23–25 March 2015. [Google Scholar]

- Hariharan, R.; Häkkinen, J.; Laurila, K. Robust end-of-utterance detection for real-time speech recognition applications. In Proceedings of the 2001 IEEE International Conference on Acoustics, Speech, and Signal Processing. Proceedings (Cat. No.01CH37221), Salt Lake City, UT, USA, 7–11 May 2001; Volume 1, pp. 249–252. [Google Scholar]

- Atterer, M.; Baumann, T.; Schlangen, D. Towards Incremental End-of-Utterance Detection in Dialogue Systems. In COLING 2008, 22nd International Conference On Computational Linguistics, Posters Proceedings, 18–22 August 2008; Scott, D., Uszkoreit, H., Eds.; Coling 2008 Organizing Committee: Manchester, UK, 2008; pp. 11–14. [Google Scholar]

- Uro, R.; Tahon, M.; Wottawa, J.; Doukhan, D.; Rilliard, A.; Laurent, A. Annotation of Transition-Relevance Places and Interruptions for the Description of Turn-Taking in Conversations in French Media Content. In Proceedings of the International Conference on Language Resources and Evaluation, Torino, Italy, 20–25 May 2024. [Google Scholar]

- Uro, R.; Tahon, M.; Doukhan, D.; Laurent, A.; Rilliard, A. Detecting the terminality of speech-turn boundary for spoken interactions in French TV and Radio content. arXiv 2024, arXiv:2406.10073. [Google Scholar] [CrossRef]

- Threlkeld, C.; de Ruiter, J. The Duration of a Turn Cannot be Used to Predict When It Ends. In Proceedings of the 23rd Annual Meeting of the Special Interest Group on Discourse and Dialogue, Edinburgh, UK, 7–9 September 2022; Lemon, O., Hakkani-Tur, D., Li, J.J., Ashrafzadeh, A., Garcia, D.H., Alikhani, M., Vandyke, D., Dušek, O., Eds.; 2022; pp. 361–367. [Google Scholar] [CrossRef]

- Fujie, S.; Katayama, H.; Sakuma, J.; Kobayashi, T. Timing Generating Networks: Neural Network Based Precise Turn-Taking Timing Prediction in Multiparty Conversation. In Proceedings of the Interspeech 2021, Brno, Czechia, 30 August–3 September 2021; pp. 3226–3230. [Google Scholar] [CrossRef]

- Torreira, F.; Bögels, S. Vocal reaction times to speech offsets: Implications for processing models of conversational turn-taking. J. Phon. 2022, 94, 101175. [Google Scholar] [CrossRef]

- Threlkeld, C.; Umair, M.; de Ruiter, J. Using Transition Duration to Improve Turn-taking in Conversational Agents. In Proceedings of the 23rd Annual Meeting of the Special Interest Group on Discourse and Dialogue, Edinburgh, UK, 7–9 September 2022; Lemon, O., Hakkani-Tur, D., Li, J.J., Ashrafzadeh, A., Garcia, D.H., Alikhani, M., Vandyke, D., Dušek, O., Eds.; pp. 193–203. [Google Scholar] [CrossRef]

- Schlangen, D.; Skantze, G. A General, Abstract Model of Incremental Dialogue Processing. In Proceedings of the 12th Conference of the European Chapter of the ACL (EACL 2009), Athens, Greece, 1 March 2009; Lascarides, A., Gardent, C., Nivre, J., Eds.; pp. 710–718. [Google Scholar]

- Skantze, G.; Hjalmarsson, A. Towards incremental speech generation in conversational systems. Comput. Speech Lang. 2013, 27, 243–262. [Google Scholar] [CrossRef]

- Ward, N.G.; Fuentes, O.; Vega, A. Dialog prediction for a general model of turn-taking. In Proceedings of the Interspeech 2010, Chiba, Japan, 26–30 September 2010; pp. 2662–2665. [Google Scholar] [CrossRef]

- Sakuma, J.; Fujie, S.; Zhao, H.; Kobayashi, T. Improving the response timing estimation for spoken dialogue systems by reducing the effect of speech recognition delay. In Proceedings of the Interspeech 2023, Dublin, Ireland, 20–24 August 2023; pp. 2668–2672. [Google Scholar] [CrossRef]

- Inoue, K.; Jiang, B.; Ekstedt, E.; Kawahara, T.; Skantze, G. Multilingual Turn-taking Prediction Using Voice Activity Projection. In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), Torino, Italy, 20–25 May 2024; Calzolari, N., Kan, M.Y., Hoste, V., Lenci, A., Sakti, S., Xue, N., Eds.; pp. 11873–11883. [Google Scholar]

- Ekstedt, E.; Skantze, G. Voice Activity Projection: Self-supervised Learning of Turn-taking Events. In Proceedings of the Interspeech 2022, Incheon, Republic of Korea, 18–22 September 2022; pp. 5190–5194. [Google Scholar] [CrossRef]

- Park, C.; Lim, Y.; suk Choi, J.; Sung, J.E. Changes in linguistic behaviors based on smart speaker task performance and pragmatic skills in multiple turn-taking interactions. Intell. Serv. Robot. 2021, 14, 357–372. [Google Scholar] [CrossRef]

- Chen, K.; Li, Z.; Dai, S.; Zhou, W.; Chen, H. Human-to-Human Conversation Dataset for Learning Fine-Grained Turn-Taking Action. In Proceedings of the Interspeech 2021, Brno, Czechia, 30 August–3 September 2021; pp. 3231–3235. [Google Scholar] [CrossRef]

- Inaishi, T.; Enoki, M.; Noguchi, H. A Voice Dialog System without Interfering with Human Speech Based on Turn-taking Detection. In Proceedings of the 2021 30th IEEE International Conference on Robot & Human Interactive Communication (RO-MAN), Vancouver, BC, Canada, 8–12 August 2021; pp. 820–825. [Google Scholar]

- Ekstedt, E.; Skantze, G. Projection of Turn Completion in Incremental Spoken Dialogue Systems. In Proceedings of the 22nd Annual Meeting of the Special Interest Group on Discourse and Dialogue, Singapore and Online, 29–31 July 2021; Li, H., Levow, G.A., Yu, Z., Gupta, C., Sisman, B., Cai, S., Vandyke, D., Dethlefs, N., Wu, Y., Li, J.J., Eds.; pp. 431–437. [Google Scholar] [CrossRef]

- Bîrlădeanu, A.; Minnis, H.; Vinciarelli, A. Automatic Detection of Reactive Attachment Disorder Through Turn-Taking Analysis in Clinical Child-Caregiver Sessions. In Proceedings of the Interspeech 2022, Incheon, Republic of Korea, 18–22 September 2022; pp. 1407–1410. [Google Scholar] [CrossRef]

- O’Bryan, L.; Segarra, S.; Paoletti, J.; Zajac, S.; Beier, M.E.; Sabharwal, A.; Wettergreen, M.A.; Salas, E. Conversational Turn-taking as a Stochastic Process on Networks. In Proceedings of the 2022 56th Asilomar Conference on Signals, Systems, and Computers, Pacific Grove, CA, USA, 31 October–2 November 2022; pp. 1243–1247. [Google Scholar]

- Feng, S.; Xu, W.; Yao, B.; Liu, Z.; Ji, Z. Early prediction of turn-taking based on spiking neuron network to facilitate human-robot collaborative assembly. In Proceedings of the 2022 IEEE 18th International Conference on Automation Science and Engineering (CASE), Mexico City, Mexico, 20–24 August 2022; pp. 123–129. [Google Scholar]

- Shahverdi, P.; Tyshka, A.; Trombly, M.; Louie, W.Y.G. Learning Turn-Taking Behavior from Human Demonstrations for Social Human-Robot Interactions. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; pp. 7643–7649. [Google Scholar]

- Ekstedt, E.; Skantze, G. How Much Does Prosody Help Turn-taking? Investigations using Voice Activity Projection Models. In Proceedings of the SIGDIAL Conferences, Edinburgh, UK, 7–9 September 2022. [Google Scholar]

- Ekstedt, E.; Skantze, G. Show & Tell: Voice Activity Projection and Turn-taking. In Proceedings of the Interspeech, Dublin, Ireland, 20–24 August 2023. [Google Scholar]

- Ekstedt, E.; Wang, S.; Székely, É.; Gustafson, J.; Skantze, G. Automatic Evaluation of Turn-taking Cues in Conversational Speech Synthesis. In Proceedings of the Interspeech, Dublin, Ireland, 20–24 August 2023. [Google Scholar]

- Sato, Y.; Chiba, Y.; Higashinaka, R. Effects of Multiple Japanese Datasets for Training Voice Activity Projection Models. In Proceedings of the 2024 27th Conference of the Oriental COCOSDA International Committee for the Co-Ordination and Standardisation of Speech Databases and Assessment Techniques (O-COCOSDA), Hsinchu, Taiwan, 17–19 October 2024; pp. 1–6. [Google Scholar] [CrossRef]

- Sato, Y.; Chiba, Y.; Higashinaka, R. Investigating the Language Independence of Voice Activity Projection Models through Standardization of Speech Segmentation Labels. In Proceedings of the 2024 Asia Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Macau, China, 3–6 December 2024; pp. 1–6. [Google Scholar] [CrossRef]

- Chen, T.; Wang, Q.; Wu, B.; Itani, M.; Eskimez, S.E.; Yoshioka, T.; Gollakota, S. Target conversation extraction: Source separation using turn-taking dynamics. arXiv 2024, arXiv:2407.11277. [Google Scholar] [CrossRef]

- Kanai, T.; Wakabayashi, Y.; Nishimura, R.; Kitaoka, N. Predicting Utterance-final Timing Considering Linguistic Features Using Wav2vec 2.0. In Proceedings of the 2024 11th International Conference on Advanced Informatics: Concept, Theory and Application (ICAICTA), Singapore, 28–30 September 2024; pp. 1–5. [Google Scholar] [CrossRef]

- Umair, M.; Sarathy, V.; Ruiter, J. Large Language Models Know What To Say But Not When To Speak. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2024; Miami, FL, USA, 12–16 November 2024, Al-Onaizan, Y., Bansal, M., Chen, Y.N., Eds.; pp. 15503–15514. [CrossRef]

- Wang, J.; Chen, L.; Khare, A.; Raju, A.; Dheram, P.; He, D.; Wu, M.; Stolcke, A.; Ravichandran, V. Turn-Taking and Backchannel Prediction with Acoustic and Large Language Model Fusion. In Proceedings of the ICASSP 2024–2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 12121–12125. [Google Scholar]

- Jeon, H.; Guintu, F.; Sahni, R. Lla-VAP: LSTM Ensemble of Llama and VAP for Turn-Taking Prediction. arXiv 2024, arXiv:2412.18061. [Google Scholar]

- Pinto, M.J.; Belpaeme, T. Predictive Turn-Taking: Leveraging Language Models to Anticipate Turn Transitions in Human-Robot Dialogue. In Proceedings of the 2024 33rd IEEE International Conference on Robot and Human Interactive Communication (ROMAN), Pasadena, CA, USA, 26–30 August 2024; pp. 1733–1738. [Google Scholar] [CrossRef]

- Lucarini, V.; Grice, M.; Wehrle, S.; Cangemi, F.; Giustozzi, F.; Amorosi, S.; Rasmi, F.; Fascendini, N.; Magnani, F.; Marchesi, C.; et al. Language in interaction: Turn-taking patterns in conversations involving individuals with schizophrenia. Psychiatry Res. 2024, 339, 116102. [Google Scholar] [CrossRef] [PubMed]

- Amer, A.Y.A.; Bhuvaneshwara, C.; Addluri, G.K.; Shaik, M.M.; Bonde, V.; Muller, P. Backchannel Detection and Agreement Estimation from Video with Transformer Networks. In Proceedings of the 2023 International Joint Conference on Neural Networks (IJCNN), Gold Coast, Australia, 18–23 June 2023; pp. 1–8. [Google Scholar] [CrossRef]

- Jain, V.; Leekha, M.; Shah, R.R.; Shukla, J. Exploring Semi-Supervised Learning for Predicting Listener Backchannels. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Virtual, 8–13 May 2021. [Google Scholar]

- Ortega, D.; Meyer, S.; Schweitzer, A.; Vu, N.T. Modeling Speaker-Listener Interaction for Backchannel Prediction. arXiv 2023, arXiv:2304.04472. [Google Scholar] [CrossRef]

- Inoue, K.; Lala, D.; Skantze, G.; Kawahara, T. Yeah, Un, Oh: Continuous and Real-time Backchannel Prediction with Fine-tuning of Voice Activity Projection. arXiv 2024, arXiv:2410.15929. [Google Scholar]

- Sharma, G.; Stefanov, K.; Dhall, A.; Cai, J. Graph-based Group Modelling for Backchannel Detection. In Proceedings of the 30th ACM International Conference on Multimedia, Lisboa, Portugal, 10–14 October 2022. [Google Scholar]

- Morikawa, A.; Ishii, R.; Noto, H.; Fukayama, A.; Nakamura, T. Determining most suitable listener backchannel type for speaker’s utterance. In Proceedings of the 22nd ACM International Conference on Intelligent Virtual Agents, Faro, Portugal, 6–9 September 2022. [Google Scholar]

- Ishii, R.; Ren, X.; Muszynski, M.; Morency, L.P. Multimodal and Multitask Approach to Listener’s Backchannel Prediction: Can Prediction of Turn-changing and Turn-management Willingness Improve Backchannel Modeling? In Proceedings of the 21st ACM International Conference on Intelligent Virtual Agents, Virtual, 14–17 September 2021. [Google Scholar]

- Onishi, T.; Azuma, N.; Kinoshita, S.; Ishii, R.; Fukayama, A.; Nakamura, T.; Miyata, A. Prediction of Various Backchannel Utterances Based on Multimodal Information. In Proceedings of the 23rd ACM International Conference on Intelligent Virtual Agents, Würzburg, Germany, 19–22 September 2023. [Google Scholar]

- Lala, D.; Inoue, K.; Kawahara, T.; Sawada, K. Backchannel Generation Model for a Third Party Listener Agent. In Proceedings of the International Conference On Human-Agent Interaction, HAI 2022, Christchurch, New Zealand, 5–8 December 2022; pp. 114–122. [Google Scholar] [CrossRef]

- Kim, S.; Seok, S.; Choi, J.; Lim, Y.; Kwak, S.S. Effects of Conversational Contexts and Forms of Non-lexical Backchannel on User Perception of Robots. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 3042–3047. [Google Scholar] [CrossRef]

- Shahverdi, P.; Rousso, K.; Klotz, J.; Bakhoda, I.; Zribi, M.; Louie, W.Y.G. Emotionally Specific Backchanneling in Social Human-Robot Interaction and Human-Human Interaction. In Proceedings of the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Detroit, MI, USA, 1–5 October 2023; pp. 4059–4064. [Google Scholar] [CrossRef]

- Seering, J.; Khadka, M.; Haghighi, N.; Yang, T.; Xi, Z.; Bernstein, M.S. Chillbot: Content Moderation in the Backchannel. Proc. ACM Hum. Comput. Interact. 2024, 8, 1–26. [Google Scholar] [CrossRef]

- Lala, D.; Inoue, K.; Kawahara, T. Evaluation of Real-time Deep Learning Turn-taking Models for Multiple Dialogue Scenarios. In Proceedings of the 2018 On International Conference On Multimodal Interaction, ICMI 2018, Boulder, CO, USA, 16–20 October 2018; pp. 78–86. [Google Scholar] [CrossRef]

- Bae, Y.H.; Bennett, C.C. Real-Time Multimodal Turn-taking Prediction to Enhance Cooperative Dialogue during Human-Agent Interaction. In Proceedings of the 32nd IEEE International Conference On Robot And Human Interactive Communication, RO-MAN 2023, Busan, Republic Of Korea, 28–31 August 2023; pp. 2037–2044. [Google Scholar] [CrossRef]

- Inoue, K.; Jiang, B.; Ekstedt, E.; Kawahara, T.; Skantze, G. Real-time and Continuous Turn-taking Prediction Using Voice Activity Projection. arXiv 2024, arXiv:2401.04868. [Google Scholar]

- Hosseini, S.; Deng, X.; Miyake, Y.; Nozawa, T. Encouragement of Turn-Taking by Real-Time Feedback Impacts Creative Idea Generation in Dyads. IEEE Access 2021, 9, 57976–57988. [Google Scholar] [CrossRef]

- Tian, L. Improved Gazing Transition Patterns for Predicting Turn-Taking in Multiparty Conversation. In Proceedings of the 2021 5th International Conference on Video and Image Processing, Kumamoto, Japan, July 23–25 July 2021. [Google Scholar]

- Hadley, L.V.; Culling, J.F. Timing of head turns to upcoming talkers in triadic conversation: Evidence for prediction of turn ends and interruptions. Front. Psychol. 2022, 13, 1061582. [Google Scholar] [CrossRef] [PubMed]

- Paetzel-Prüsmann, M.; Kennedy, J. Improving a Robot’s Turn-Taking Behavior in Dynamic Multiparty Interactions. In Proceedings of the Companion of the 2023 ACM/IEEE International Conference on Human-Robot Interaction, Stockholm, Sweden, 13–16 March 2023. [Google Scholar]

- Moujahid, M.; Hastie, H.F.; Lemon, O. Multi-party Interaction with a Robot Receptionist. In Proceedings of the 2022 17th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Sapporo, Japan, 7–10 March 2022; pp. 927–931. [Google Scholar]

- Iitsuka, R.; Kawaguchi, I.; Shizuki, B.; Takahashi, S. Multi-party Video Conferencing System with Gaze Cues Representation for Turn-Taking. In Proceedings of the International Conference on Collaboration Technologies and Social Computing, Virtual, 31 August–3 September 2021. [Google Scholar]

- Wang, P.; Han, E.; Queiroz, A.C.M.; DeVeaux, C.; Bailenson, J.N. Predicting and Understanding Turn-Taking Behavior in Open-Ended Group Activities in Virtual Reality. arXiv 2024, arXiv:2407.02896. [Google Scholar] [CrossRef]

- Deadman, J.; Barker, J.L. Modelling Turn-taking in Multispeaker Parties for Realistic Data Simulation. In Proceedings of the Interspeech, Incheon, Republic of Korea, 18–22 September 2022. [Google Scholar]

- Yang, J.; Wang, P.H.; Zhu, Y.; Feng, M.; Chen, M.; He, X. Gated Multimodal Fusion with Contrastive Learning for Turn-Taking Prediction in Human-Robot Dialogue. In Proceedings of the ICASSP 2022–2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 7–13 May 2022; pp. 7747–7751. [Google Scholar]

- Eyben, F.; Wöllmer, M.; Schuller, B. Opensmile: The munich versatile and fast open-source audio feature extractor. In Proceedings of the 18th ACM International Conference on Multimedia, Firenze, Italy, 25–29 October 2010; pp. 1459–1462. [Google Scholar]

- Fatan, M.; Mincato, E.; Pintzou, D.; Dimiccoli, M. 3M-Transformer: A Multi-Stage Multi-Stream Multimodal Transformer for Embodied Turn-Taking Prediction. In Proceedings of the ICASSP 2024–2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2023; pp. 8050–8054. [Google Scholar]

- Lee, M.C.; Deng, Z. Online Multimodal End-of-Turn Prediction for Three-party Conversations. In Proceedings of the International Conference on Multimodal Interaction, San Jose, CA, USA, 4–8 November 2024. [Google Scholar]

- Fauviaux, T.; Marin, L.; Parisi, M.; Schmidt, R.; Mostafaoui, G. From unimodal to multimodal dynamics of verbal and nonverbal cues during unstructured conversation. PLoS ONE 2024, 19, e0309831. [Google Scholar] [CrossRef]

- Jiang, B.; Ekstedt, E.; Skantze, G. What makes a good pause? Investigating the turn-holding effects of fillers. arXiv 2023, arXiv:2305.02101. [Google Scholar] [CrossRef]

- Umair, M.; Mertens, J.B.; Warnke, L.; de Ruiter, J.P. Can Language Models Trained on Written Monologue Learn to Predict Spoken Dialogue? Cogn. Sci. 2024, 48, e70013. [Google Scholar] [CrossRef]

- Yoshikawa, S. Timing Sensitive Turn-Taking in Spoken Dialogue Systems Based on User Satisfaction. In Proceedings of the 20th Workshop of Young Researchers’ Roundtable on Spoken Dialogue Systems; Kyoto, Japan, 16–17 September 2024, Inoue, K., Fu, Y., Axelsson, A., Ohashi, A., Madureira, B., Zenimoto, Y., Mohapatra, B., Stricker, A., Khosla, S., Eds.; pp. 32–34.

- Liermann, W.; Park, Y.H.; Choi, Y.S.; Lee, K. Dialogue Act-Aided Backchannel Prediction Using Multi-Task Learning. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2023; Singapore, 6–10 December 2023, Bouamor, H., Pino, J., Bali, K., Eds.; pp. 15073–15079. [CrossRef]

- Bilalpur, M.; Inan, M.; Zeinali, D.; Cohn, J.F.; Alikhani, M. Learning to generate context-sensitive backchannel smiles for embodied ai agents with applications in mental health dialogues. In Proceedings of the CEUR Workshop Proceedings, Enschede, The Netherlands, 15–19 July 2024; Volume 3649, p. 12. [Google Scholar]

- van den Oord, A.; Li, Y.; Vinyals, O. Representation Learning with Contrastive Predictive Coding. arXiv 2018, arXiv:1807.03748. [Google Scholar]

- LeCun, Y.; Boser, B.E.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.E.; Jackel, L.D. Backpropagation Applied to Handwritten Zip Code Recognition. Neural Comput. 1989, 1, 541–551. [Google Scholar] [CrossRef]

- Waibel, A.; Hanazawa, T.; Hinton, G.; Shikano, K.; Lang, K. Phoneme recognition using time-delay neural networks. IEEE Trans. Acoust. Speech, Signal Process. 1989, 37, 328–339. [Google Scholar] [CrossRef]

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780. [Google Scholar] [CrossRef]

- Rivière, M.; Joulin, A.; Mazaré, P.E.; Dupoux, E. Unsupervised pretraining transfers well across languages. In Proceedings of the ICASSP 2020–2020 IEEE International Conference on Acoustics, Speech and Signal Processing, Barcelona, Spain, 4–8 May 2020; pp. 7414–7418. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2017, arXiv:1706.03762. [Google Scholar]

- D’Costa, P.R.; Rowbotham, E.; Hu, X.E. What you say or how you say it? Predicting Conflict Outcomes in Real and LLM-Generated Conversations. arXiv 2024, arXiv:2409.09338. [Google Scholar] [CrossRef]

- D’efossez, A.; Mazar’e, L.; Orsini, M.; Royer, A.; P’erez, P.; J’egou, H.; Grave, E.; Zeghidour, N. Moshi: A speech-text foundation model for real-time dialogue. arXiv 2024, arXiv:2410.00037. [Google Scholar]

- Arora, S.; Lu, Z.; Chiu, C.C.; Pang, R.; Watanabe, S. Talking Turns: Benchmarking Audio Foundation Models on Turn-Taking Dynamics. arXiv 2025, arXiv:2503.01174. [Google Scholar] [CrossRef]

- Barbierato, E.; Gatti, A.; Incremona, A.; Pozzi, A.; Toti, D. Breaking Away From AI: The Ontological and Ethical Evolution of Machine Learning. IEEE Access 2025, 13, 55627–55647. [Google Scholar] [CrossRef]

| Dataset | Year of Release | Data Types | Sample Size | Use Cases & Link |

|---|---|---|---|---|

| HCRC Map Task [63] | 1991 | Text, Audio (Video recorded) | 128 dialogues (~15 h), 64 participants | Spontaneous speech, turn-taking analysis |

| https://groups.inf.ed.ac.uk/maptask/ | ||||

| HCRC Corpus (17 April 2025) | ||||

| Switchboard [64] | 1992 | Text, Audio | ~2500 conversations, ~500 speakers, (~250 h), ~ 3 M words text | ASR, speaker authentication |

| https://catalog.ldc.upenn.edu/LDC97S62 | ||||

| Switchboard Corpus (17 April 2025) | ||||

| Fisher [65] | 2004 | Text, Audio | 16,000 conversations, 2000 h | ASR, conversational speech processing |

| https://catalog.ldc.upenn.edu/LDC2004S13 | ||||

| Fisher Corpus (17 April 2025) | ||||

| CANDOR [66] | 2023 | Text, Audio, Video, Facial Data | 1656 conversations, 1456 participants, 850+ h, 7 M+ words | Multimodal interaction, turn-taking, rapport, backchanneling |

| https://guscooney.com/candor-dataset/ | ||||

| CANDOR Corpus (17 April 2025) | ||||

| NoXi [67] | 2017 | Text, Audio, Video, Depth, Sensor Data | 25+ h, dyadic interactions, 7 languages, 58 topics | Novice-expert dialogues, turn-taking, conversational interruptions |

| https://multimediate-challenge.org/datasets/Dataset_NoXi/ | ||||

| NoXi Database (17 April 2025) |

| Area of Focus | Articles/Paper | Architecture | Modality | Key Findings | Datasets Used | Limitations |

|---|---|---|---|---|---|---|

| Turn-taking Prediction | [134] | Voice Activity Projection (VAP) | Audio | Commercial TTS systems often produce ambiguous turn-taking cues. TTS systems trained on reading or spontaneous speech exhibit strong turn-holding but weak turn-yielding cues. | MultiWoZ | Ambiguity in synthesized cues affects consistent and accurate interpretation by users. |

| [132] | Voice Activity Projection (VAP) | Audio | Prosodic cues, particularly phonetic information is crucial for turn-taking prediction. F0 information is less important for general turn-taking but plays a significant role in distinguishing between turn-shifts and turn-holds. | Switchboard, Fisher | Models not trained on perturbed data; Relied on TTS for ambiguous phrases; Limited linguistic context. | |

| [139] | Large Language Models (LLMs) | Text | LLMs effectively predict what to say but struggle with accurately predicting turn-taking timing (TRPs) | In Conversation Corpus (ICC) – Novel TRP dataset | Inability to accurately predict withinturn TRPs, highlighting limitations in timing cues. | |

| [72] | E2E Self-supervised (Wav2Vec 2.0, roBERTa) | Audio, Text | A simple Wav2Vec 2.0 model with a linear classifier outperformed other models on datasets with lower signal-to-noise ratios and greater acoustic and language complexity. | Harper Valley, Switchboard, IBM datasets | Did not incorporate dialogue context and only addressed end-of-turn detection. It focused on binary turn-shift tasks and lacks continuous frame prediction. | |

| [122] | VAP with cross-attention Transformers | Audio | Monolingual VAP models do not generalize well across languages. Multilingual VAP performs similarly to monolingual models for English, Mandarin, and Japanese. | Switchboard (English), HKUST (Mandarin), Travel Agency Task Dialogues (Japanese) | Monolingual models have difficulty when applied directly across languages without retraining, especially with low-resourced languages and limited pitch variability | |

| [133] | Voice Activity Projection (VAP) | Audio | VAP effectively predicts voice activity events and models conversational dynamics accurately. (Effectively resolves overlaps.) | Switchboard, Fisher | Vulnerability to acoustic artifacts affecting predictive reliability. (struggles in noisy environments.) | |

| [137] | Neural Source Separation Network | Audio | Leveraging turn-taking dynamics effectively extracts target conversations from audio mixtures containing interfering speakers and noise. | LibriSpeech, AISHELL-3, RAMC, AMI Meeting Corpus | Performance deteriorates significantly when natural turn-taking timing is disrupted | |

| [172] | Voice Activity Projection (VAP) | Audio | Fillers have strong turn-holding effects, especially in yielding contexts. Prosodic features of fillers (pitch, intensity, duration) contribute significantly. | Fisher, Switchboard | Fillers have limited impact due to redundancy with other turnholding cues. | |

| [123] | Voice Activity Projection (VAP) | Audio | Modelling-dependent voice activity events improves accuracy for predicting future conversational events. | Switchboard | Requires precise modeling of voice activity dependencies to maintain accuracy. | |

| [173] | GPT-2, TurnGPT (GPT-2 augmented with explicit speaker embeddings), Transformer | Text | Fine-tuned LLMs find incongruent speaker transitions more surprising than congruent ones but do not exhibit the same tendency during holds. These models do not consider speaker identity as humans do, and further fine-tuning tends to yield diminishing returns. | In Conversation Corpus (ICC) | LLMs fail to replicate normative interactive structure of natural conversation adequately | |

| [174] | LSTM, syntactic completeness prediction | Audio | Improved response timing significantly enhances user satisfaction in spoken dialogue systems. | InteLLA conversational dataset | Difficulty confirming how timing errors affect user perception clearly. | |

| Backchannel Prediction | [175] | Multi-Task Learning Framework | Audio, Text | Dialogue act information enhances backchannel prediction performance, particularly for assessments. | Switchboard | High dependency on specific task labels; generalizability untested across languages. |

| [146] | Neural-Based Acoustic Classifier | Audio | Encoding both speaker and listener behaviour improves backchannel prediction performance, with speakerlistener interaction modelling leading to the best results. | Switchboard, GECO | The analysis did not consider the relationship between listener characteristics and the F1-score. It is limited to minimal responses and lacks a comprehensive interaction analysis. | |

| [140] | LLM + Acoustic (HuBERT + GPT-2/RedPajama) | Audio, Text | Fusing LLM and acoustic models improves turn-taking and backchannel prediction. Multi-task instruction finetuning enhances the LLM’s ability to understand task descriptions and dialogue history. | Switchboard | Future research should explore the effects of using automatic transcriptions in place of ground-truth transcriptions. High-quality ASR output is reliant, and the current approach lacks real-time implementation and adaptation to various conversational contexts. | |

| [147] | Fine-tuned Voice Activity Projection (VAP) | Audio | Fine-tuning the Voice Activity Projection (VAP) model enables continuous and realtime backchannel prediction, including both timing and type. Intensity dynamics play a significant role in backchannel prediction. | LibriSpeech, Switchboard – (Kyoto Backchannel Corpus) | Requires careful tuning of threshold values, sensitivity to dataset imbalance and real-time operational constraints | |

| [176] | Attention-Based Generative Model | Visual, Audio | Context-sensitive smiles significantly enhance human–agent rapport in mental health dialogues. | RealTalk Dataset (YouTube-based face-to-face interactions) | Results specific to mental health dialogues; limited generalization to broader interactions. | |

| Real-time Turn-taking | [158] | Voice Activity Projection (VAP) with Real-Time Optimization | Audio | The Voice Activity Projection (VAP) model offers high accuracy and minimal inference time for real-time turn-taking prediction. Real-time VAP functions effectively with little performance degradation. | Japanese Travel Agency Task Dialogue dataset, Switchboard, HKUST | The study concentrated on a single modality (audio) and did not account for the effects of other modalities on real-time turn-taking prediction, which is suboptimal with shorter context windows |

| [121] | Multi-Look-Ahead ASR with Estimates of Syntactic Completeness (ESC) | Audio | Extremely low latency ASR (Multi-Look-Ahead ASR) enables near-full utterance use for real-time response timing estimation, improving accuracy. | Simulated Japanese dialogue database | Latency in streaming ASR limits the available linguistic context for timing estimation. | |

| Multimodal Turn-taking | [51] | Voice Activity Projection (VAP) with Non-Verbal Feature Integration | Audio, Visual | Incorporating nonverbal features such as action units and gaze direction enhance the accuracy of turn-taking event prediction in expert novice conversations. Additionally, including gaze and gesture features increase the accuracy of voice activity prediction. | NoXi database (Expert-Novice Conversation Dataset) | There is limited exploration of the specific action units that are most effective for prediction. Additionally, accurate predictions are highly dependent on the quality and completeness of multimodal inputs. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Patamia, R.A.; Dinh, H.P.T.; Liu, M.; Cosgun, A. Turn-Taking Modelling in Conversational Systems: A Review of Recent Advances. Technologies 2025, 13, 591. https://doi.org/10.3390/technologies13120591

Patamia RA, Dinh HPT, Liu M, Cosgun A. Turn-Taking Modelling in Conversational Systems: A Review of Recent Advances. Technologies. 2025; 13(12):591. https://doi.org/10.3390/technologies13120591

Chicago/Turabian StylePatamia, Rutherford Agbeshi, Ha Pham Thien Dinh, Ming Liu, and Akansel Cosgun. 2025. "Turn-Taking Modelling in Conversational Systems: A Review of Recent Advances" Technologies 13, no. 12: 591. https://doi.org/10.3390/technologies13120591

APA StylePatamia, R. A., Dinh, H. P. T., Liu, M., & Cosgun, A. (2025). Turn-Taking Modelling in Conversational Systems: A Review of Recent Advances. Technologies, 13(12), 591. https://doi.org/10.3390/technologies13120591