SHEAB: A Novel Automated Benchmarking Framework for Edge AI

Abstract

1. Introduction

1.1. Cloud Computing and Edge Computing

1.2. Trends on Edge Computing with AI

1.3. Internet of Things (IoT) with Edge Computing

1.4. Use of 5G Networks and Edge Computing Architectures

1.5. Security in Edge Computing

In summary, the technological landscape described above, spanning cloud-edge synergies, Edge AI, IoT integration, 5G networking, and security considerations, underscores the need for effective ways to evaluate and benchmark Edge AI systems. The rapid evolution of edge computing capabilities and use cases has outpaced traditional performance assessment methods. Edge computing environments are highly heterogeneous and dynamic, so it is vital to characterize how different hardware, network conditions, and AI workloads perform at the edge. However, edge performance benchmarking remains an emerging research area, with efforts gaining momentum only in the past few years. There is a clear need for standardized, automated benchmarking frameworks that help researchers and practitioners measure system behavior, compare solutions, and identify bottlenecks in Edge AI deployments. This need motivates the proposed SHEAB framework, a novel automated benchmarking framework for Edge AI. By providing a structured way to test edge computing platforms and AI models under realistic conditions, SHEAB aims to fill the tooling gap in this domain. Ultimately, such a framework is crucial for guiding the design and optimization of future edge computing infrastructures and for ensuring that the promise of Edge AI can be fully realized in practice.

1.6. Edge AI in the Healthcare Sector

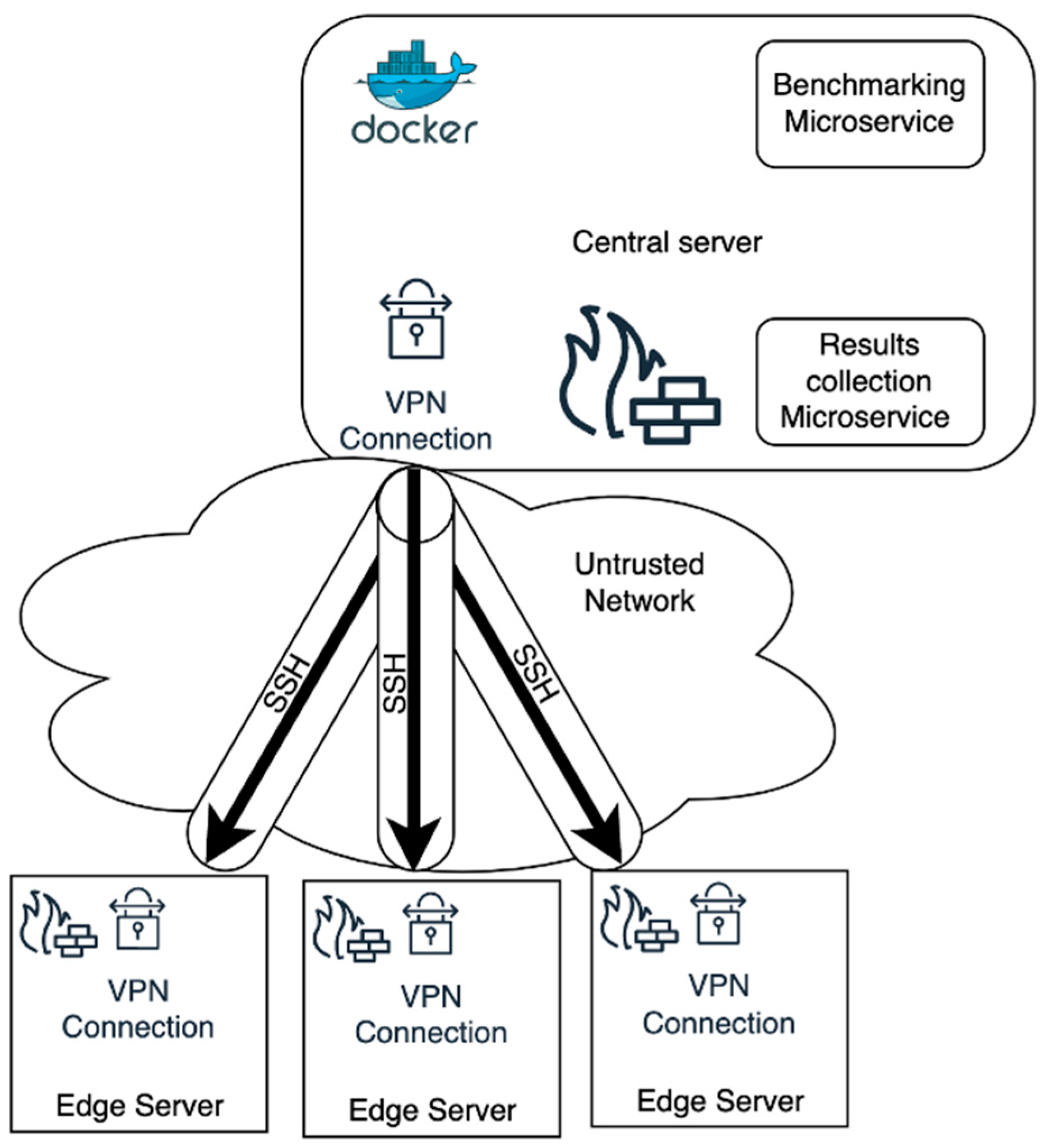

- Secure, Automated Benchmarking Framework: We design an architecture for automating the execution of benchmarks on multiple edge devices concurrently. Our framework emphasizes security through a multi-layer approach (firewalls, VPN, and SSH) for communications, enabling safe operation even across public or untrusted networks. To our knowledge, this is the first edge benchmarking framework to integrate such comprehensive security measures into the automation process.

- Scalability and Heterogeneity: SHEAB’s orchestration mechanism (implemented as a containerized microservice) can handle a group of heterogeneous edge devices simultaneously. We demonstrate the ability to launch benchmarking workloads on all devices “at once”, which significantly reduces the overall benchmarking time compared with sequential execution. The framework is hardware-agnostic, accommodating devices ranging from powerful single-board computers to lower-end IoT nodes. It is also agentless: apart from the benchmarking tool itself, no agent software is installed on the edge nodes.

- Time-to-Deploy Metric and Analysis: We introduce a new performance metric, Time To Deploy (TTD), which we define as the time taken to complete a given benchmarking routine on an edge device. Using this metric, we formulate an analytic comparison between individual sequential benchmarking versus automated parallel benchmarking. We derive expressions for Latency Reduction Gain (percentage decrease in total benchmarking time) and system speedup achieved by SHEAB, and we provide empirical measurements of these for a real testbed of devices.

- Implementation and Real-world Validation: We develop a working implementation of the SHEAB framework using readily available technologies (Docker containers, OpenVPN, Ansible, etc.). The implementation is tested on a network of eleven heterogeneous edge devices (including Raspberry Pi 1/2/3/4/5, Orange Pi boards, Jetson and Odroid), orchestrated by a central server. We report detailed results from running CPU, memory, network, ML inference, and GPU benchmarking workloads across all devices via the framework, including the measured speedups and resource utilization. The results demonstrate the practicality of SHEAB for improving benchmarking efficiency and highlight the overheads introduced by the automation.
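The Time-to-Deploy contribution above names two derived metrics. As a minimal sketch, assuming the common definitions (the sequential baseline is the sum of per-device TTD values, Latency Reduction Gain is the percentage reduction of the automated wall-clock time t_a relative to that baseline, and speedup is their ratio), both can be computed as follows. The function names and timings are illustrative, and the paper's own expressions in Section 7 may differ in detail.

```python
from typing import Sequence


def sequential_time(ttd_per_device: Sequence[float]) -> float:
    """Baseline: devices benchmarked one after another, so the TTDs add up."""
    return float(sum(ttd_per_device))


def latency_reduction_gain(t_seq: float, t_a: float) -> float:
    """Percentage decrease of the automated time t_a relative to the sequential time."""
    if t_seq <= 0:
        raise ValueError("sequential time must be positive")
    return 100.0 * (t_seq - t_a) / t_seq


def speedup(t_seq: float, t_a: float) -> float:
    """How many times faster the automated run is than the sequential baseline."""
    if t_a <= 0:
        raise ValueError("automated time must be positive")
    return t_seq / t_a


if __name__ == "__main__":
    # Hypothetical timings (seconds): four devices at 40 s each when run alone,
    # and an automated parallel run that completes in 64 s.
    t_seq = sequential_time([40.0, 40.0, 40.0, 40.0])
    t_a = 64.0
    print(f"LRG = {latency_reduction_gain(t_seq, t_a):.1f}%")  # LRG = 60.0%
    print(f"speedup = {speedup(t_seq, t_a):.2f}x")            # speedup = 2.50x
```

Under these definitions the two metrics are linked by LRG = 100·(1 − 1/speedup), so reporting one determines the other.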

2. Background

2.1. Containers and Edge Computing

2.2. Automation in the Era of the Edge

2.3. AI and Machine Learning Automation at the Edge

2.4. Comparative Analysis and Efficiency Gains

2.5. Microservices on the Edge

2.6. Security Aspect of Containerization on the Edge

Container Challenges

3. Literature Survey

3.1. Challenges in Edge AI Benchmarking

3.2. Limitations of Existing Approaches

- (i) Concurrent benchmarking of multiple distributed edge nodes;

- (ii) Integrated security mechanisms for safe operation over untrusted networks;

- (iii) A modular microservice design to facilitate easy deployment and result collection.

These are precisely the contributions we target with our proposed framework.

4. Methodology and System Design

4.1. System Architecture

- Benchmarking Microservice: This container contains the automation logic for deploying and executing benchmarks on edge devices. It includes scripts or an orchestration engine (we use an Ansible playbook as part of this service in our implementation) which can initiate SSH sessions to each edge device and run the desired benchmarking commands. The containerization of this service means it can be easily started/stopped and configured without affecting the host OS, and it could potentially be scaled or moved to another host if needed.

- Results Collection Microservice: This container is responsible for aggregating the results returned by the edge devices. In practice, we run a lightweight database (MariaDB) or data collection server here. When benchmarks complete on the edge nodes, their output (performance metrics) is sent back to this service, which stores it for analysis. Decoupling this into a separate microservice ensures that result handling (which might involve disk I/O or data processing) does not slow down the core orchestration logic. It also provides persistence: results can be queried or visualized after the benchmarking run.

- These two microservices interact with each other in a producer–consumer fashion: the Benchmarking service produces data (by triggering benchmarks and retrieving outputs), and the Results service consumes and stores that data.

- Edge Servers: Each edge server is a device being tested. We do not assume any special software is pre-installed on the edge aside from basic OS capabilities to run the benchmark and an SSH server to receive commands. To minimize intrusion, we do not install a permanent agent or daemon on the edge (though one could, to optimize repeated use; in our case we opted for an agentless approach via SSH). The edge devices in our design are behind their own local firewalls (or at least have host-based firewall rules) to only allow incoming connections from trusted sources (e.g., SSH from the central server). They also each run a VPN client that connects to a central VPN server (more on the VPN below).
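The producer–consumer interaction between the Benchmarking and Results services can be illustrated in-process with a thread-safe queue. This is a minimal sketch, not SHEAB's actual inter-container transport (which is not specified here): the queue stands in for whatever channel connects the two containers, a Python list stands in for MariaDB, and the device names and execution times are hypothetical placeholders.

```python
import queue
import threading
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class BenchmarkResult:
    device_id: str
    test_name: str
    execution_time_s: Optional[float]  # None would mark a failed run


_SENTINEL = None  # tells the consumer that no more results will arrive


def benchmarking_service(channel: "queue.Queue[Optional[BenchmarkResult]]",
                         devices: List[str]) -> None:
    """Producer: emits one (placeholder) result per device, then the sentinel."""
    for i, device in enumerate(devices):
        channel.put(BenchmarkResult(device, "cpu", 10.0 + i))
    channel.put(_SENTINEL)


def results_service(channel: "queue.Queue[Optional[BenchmarkResult]]",
                    store: List[BenchmarkResult]) -> None:
    """Consumer: drains the queue and persists each result until the sentinel."""
    while True:
        item = channel.get()
        if item is _SENTINEL:
            break
        store.append(item)


def run_pipeline(devices: List[str]) -> List[BenchmarkResult]:
    channel: "queue.Queue[Optional[BenchmarkResult]]" = queue.Queue()
    stored: List[BenchmarkResult] = []
    consumer = threading.Thread(target=results_service, args=(channel, stored))
    consumer.start()
    benchmarking_service(channel, devices)
    consumer.join()
    return stored


if __name__ == "__main__":
    for result in run_pipeline(["rpi4", "rpi5", "jetson-nano"]):
        print(result)
```

Because the consumer runs independently, slow storage only delays the queue drain rather than the producer's dispatch loop, which mirrors the motivation for splitting the two services.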

4.2. Benchmarking Workflow

- Stage 1: Initialization. In this stage, the central server sets up the environment required for the rest of the process. This involves launching the Automation container (Benchmarking microservice) and the Storage container (Results microservice). In practice, this could be done via Docker commands or a script. Once these containers are up, the automation code inside the Benchmarking service takes over. A configuration check is performed to ensure the list of target edge servers is loaded and that the central server has the necessary credentials (e.g., SSH keys) and network configuration to reach them. An initial log is created for the session in the results database (for traceability). The flowchart includes an error check here: if either container fails to launch or if configuration is invalid, an error is flagged. The system can attempt to fix simple issues (for example, if the database container was not ready, it might retry after a delay) or else abort and log the failure for the user to resolve.

- Stage 2: Secure Communication Setup. This stage establishes and verifies the VPN connections and any other secure channels. Depending on the deployment, the VPN may already be in place (e.g., a persistent VPN link) or may need to be initiated on the fly. In our implementation, we set up the VPN out-of-band (the VPN server on the central server and the clients on the edges run as services), so the automation’s job was to verify that each edge is reachable via the VPN. The Benchmarking service pings each edge’s VPN IP or attempts a quick SSH handshake with it. Any unreachable edge is flagged as an error (the “Error?” diamond after Stage 2 in the flowchart). If an error is detected (for instance, an edge device is offline or its VPN tunnel did not come up), the framework logs it in an error log file associated with that device. Depending on configuration, the framework then either fixes or skips the device: “fix” could mean restarting the VPN connection or using an alternate route, while “skip” means marking the device as unavailable and continuing with the others (the user later sees in the results which devices failed). Security setup also includes ensuring that the SSH service on each device is running and that the credentials work, essentially a dry-run login. The workflow proceeds only after every target device has either passed this connectivity check or been marked as skipped. This stage is crucial because a failure here usually cannot be compensated for during execution (a device that cannot be reached securely cannot be benchmarked), so we isolate these issues early.

- Stage 3: Execution. This is the core benchmarking phase. Here, the automation service distributes the workload to all edge servers; in practical terms, the orchestrator triggers the benchmark on each device. The key difference between SHEAB and manual testing is that these triggers are issued in parallel (to the extent possible). In our implementation, we leverage Ansible’s ability to execute tasks on multiple hosts concurrently, so all edge nodes receive the command to start the benchmark nearly simultaneously. For example, we used the sysbench CPU benchmark as the workload in our test: the playbook invoked sysbench --test=cpu --time=10 run (a CPU prime-number test lasting 10 s) on each of the 8 devices. By default, Ansible runs a task on at most five hosts in parallel (the configurable forks setting); we raised this limit so that all 8 devices could start together. As a result, the benchmarking tasks run at roughly the same time on all devices, drastically shortening the wall-clock time needed to complete all tests compared with running them serially.

- Stage 4: Results Collection. Assuming the benchmarks run to completion, each edge will produce an output (it could be a simple text output from a tool, or a structured result). In our case, sysbench outputs a summary of the test, including number of events, execution time, etc. The automation service captures these outputs. The Results Collection stage then involves transferring the results into the central storage. This can happen in a couple of ways. We implemented it by having the Ansible playbook gather the command output and then issue a database insert via a local script. Alternatively, one could have each edge directly send a result to an API endpoint on the central server. The approach will depend on network permissions and convenience. Regardless, at the end of this stage, all data is centralized. The results in our example include each device’s ID, the test name, and key performance metrics (like execution time). Stage 4 in Figure 2 shows “all results stored in data collection microservice.” If an edge’s result was missing due to earlier failure, that entry would simply be absent or marked as failed.

- Stage 5: Termination and Cleanup. After results are collected, the framework enters the cleanup phase. This is often overlooked in simpler scripts, but for a long-running service, it is important to release resources and not leave processes hanging on devices. In this stage, the central server will gracefully close each SSH session (some orchestration tools do this automatically). It will also send a command to each edge to terminate any lingering benchmarking processes, in case a process did not exit properly. For instance, if a user accidentally set a very long benchmark duration and wants to abort, the framework could issue a kill signal. Cleanup also involves shutting down the containers on the central server if they are not needed to persist. In our design, the Results microservice (database) might remain running if one plans to run multiple benchmarks and accumulate results. But the Automation microservice can terminate or go idle after printing a summary of the operation. The VPN connections can either remain for future use or be closed. In a dynamic scenario, one might tear down the VPN if it was launched just for this test (to free network and ensure security).
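The effect of Ansible's fork limit on Stage 3 can be estimated with a simple batch model. As a rough sketch, assuming every device needs the same benchmark duration d and ignoring connection setup and other per-host overhead, N hosts processed with f forks finish in about ceil(N/f) rounds. Ansible actually refills worker slots as hosts finish, but with equal durations that behaves like whole batches. The function name and numbers are illustrative.

```python
import math


def parallel_wall_time(n_devices: int, forks: int, duration_s: float) -> float:
    """Approximate wall-clock time of one task when at most `forks` hosts run at once.

    Assumes identical per-device durations and no orchestration overhead.
    """
    if n_devices < 0 or forks < 1:
        raise ValueError("need n_devices >= 0 and forks >= 1")
    rounds = math.ceil(n_devices / forks)
    return rounds * duration_s


if __name__ == "__main__":
    # 8 devices running a 10 s sysbench test, as in the Stage 3 example.
    print(parallel_wall_time(8, 5, 10.0))  # Ansible's default of 5 forks: 20.0
    print(parallel_wall_time(8, 8, 10.0))  # forks raised to 8: 10.0
    print(parallel_wall_time(8, 1, 10.0))  # fully sequential: 80.0
```

The model shows why raising forks to at least the number of devices matters: with the default of five, eight devices need two rounds, doubling the execution phase.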

5. Algorithm Design of SHEAB

5.1. Algorithm Implementation

| Algorithm 1: SHEAB Automated Edge Benchmarking |

| Input: EdgeDevices = {E1, E2, …, EN} // list of edge device identifiers or addresses |

| BenchmarkTask (script/command to run on each device) |

| Output: ResultsList = {R1, R2, …, RN} // collected results for each device (or error markers) |

| 1. // Stage 1: Initialization |

| 2. Launch Container_A (Automation Service) on Central Server |

| 3. Launch Container_R (Results Collection Service) on Central Server |

| 4. if either Container_A or Container_R fails to start: |

| 5. log “Initialization error: container launch failed” |

| 6. return (abort the process) |

| 7. Load device list EdgeDevices and credentials |

| 8. Log “Benchmark session started” with timestamp in Results DB |

| 9. // Stage 2: Secure Communication Setup |

| 10. for each device Ei in EdgeDevices: |

| 11. status = check_connectivity(Ei) // ping or SSH test via VPN |

| 12. if status == failure: |

| 13. log “Device Ei unreachable” in error log |

| 14. Mark Ei as skipped (will not execute BenchmarkTask) |

| 15. else: |

| 16. log “Device Ei is online and secure” |

| 17. end for |

| 18. if all devices failed connectivity: |

| 19. log “Error: No devices reachable. Aborting.” |

| 20. return (abort process) |

| 21. // Proceed with reachable devices |

| 22. // Stage 3: Execution (parallel dispatch) |

| 23. parallel for each device Ej in EdgeDevices that is reachable: |

| 24. try: |

| 25. send BenchmarkTask to Ej via SSH |

| 26. except error: |

| 27. log “Dispatch failed on Ej” in error log |

| 28. mark Ej as failed |

| 29. end parallel |

| 30. // Now, all reachable devices should be running the benchmark concurrently |

| 31. Wait until all launched benchmark tasks complete or timeout |

| 32. for each device Ek that was running BenchmarkTask: |

| 33. if task on Ek completed successfully: |

| 34. Retrieve result output from Ek (via SSH/SCP) |

| 35. Store result output in ResultsList (corresponding to Ek) |

| 36. else: |

| 37. log “Benchmark failed on Ek” in error log |

| 38. Store a failure marker in ResultsList for Ek |

| 39. end for |

| 40. // Stage 4: Results Collection |

| 41. for each result Ri in ResultsList: |

| 42. if Ri is available: |

| 43. Insert Ri into Results DB (Container_R) with device ID and timestamp |

| 44. else: |

| 45. Insert an entry into Results DB indicating failure for that device |

| 46. end for |

| 47. // Stage 5: Termination and Cleanup |

| 48. for each device Em in EdgeDevices that was contacted: |

| 49. if Em had an active SSH session, close it |

| 50. ensure no BenchmarkTask process is still running on Em (send termination signal if needed) |

| 51. end for |

| 52. Shut down Container_A (Automation) on Central (optional) |

| 53. (Optionally keep Container_R running if results need to persist for query) |

| 54. Close VPN connections if they were opened solely for this session |

| 55. log “Benchmark session completed” with summary (counts of successes/failures) |

| 56. return ResultsList (for any further processing or analysis) |
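For experimenting with the control flow of Algorithm 1, the following is a minimal Python sketch. It is not the SHEAB implementation (which uses an Ansible playbook): container launch, VPN handling, and SSH are abstracted behind hypothetical callables (check_connectivity, run_benchmark), a thread pool stands in for Ansible's parallel dispatch, and an in-memory SQLite database stands in for the MariaDB results service.

```python
import sqlite3
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Optional

FAILED: Optional[str] = None  # failure marker stored in the results mapping


def sheab_run(devices: List[str],
              check_connectivity: Callable[[str], bool],
              run_benchmark: Callable[[str], str],
              db: sqlite3.Connection,
              timeout_s: float = 60.0) -> Dict[str, Optional[str]]:
    """Sketch of Algorithm 1: check, dispatch in parallel, collect, store."""
    # Stage 1 (abridged): the results table stands in for the Results container.
    db.execute("CREATE TABLE IF NOT EXISTS results "
               "(device TEXT, output TEXT, ok INTEGER, ts REAL)")

    # Stage 2: connectivity check; unreachable devices are skipped.
    reachable = [d for d in devices if check_connectivity(d)]
    if not reachable:
        raise RuntimeError("No devices reachable. Aborting.")

    # Every device starts with a failure marker; successes overwrite it.
    results: Dict[str, Optional[str]] = {d: FAILED for d in devices}

    # Stage 3: parallel dispatch, one worker thread per reachable device.
    with ThreadPoolExecutor(max_workers=len(reachable)) as pool:
        futures = {d: pool.submit(run_benchmark, d) for d in reachable}
        deadline = time.monotonic() + timeout_s
        for device, fut in futures.items():
            try:
                remaining = max(0.0, deadline - time.monotonic())
                results[device] = fut.result(timeout=remaining)
            except Exception:
                # Dispatch error, benchmark error, or timeout: keep the failure marker.
                results[device] = FAILED
        # Note: leaving this block waits for still-running tasks; a real
        # implementation would terminate remote processes here (Stage 5).

    # Stage 4: store every outcome, including failure markers.
    now = time.time()
    for device, output in results.items():
        db.execute("INSERT INTO results VALUES (?, ?, ?, ?)",
                   (device, output, int(output is not FAILED), now))
    db.commit()

    # Stage 5 (abridged): the thread pool has already been shut down.
    return results


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    online = {"rpi4", "rpi5"}  # hypothetical reachable devices
    outputs = sheab_run(["rpi4", "rpi5", "opi-pc"],
                        check_connectivity=lambda d: d in online,
                        run_benchmark=lambda d: f"sysbench finished on {d}",
                        db=conn)
    print(outputs)
    print(conn.execute("SELECT device, ok FROM results ORDER BY device").fetchall())
```

Injecting the connectivity and benchmark functions keeps the stage logic testable without real devices; swapping in SSH-backed callables would not change the control flow.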

5.2. Explanation of the Algorithm

5.3. Computational Complexity

6. Implementation

6.1. Edge Nodes Hardware

6.2. Software Stack

- VPN: We used OpenVPN version 3.4 to create the secure network overlay. An OpenVPN server was configured on the central server, and each edge device ran an OpenVPN client that starts automatically on boot and connects to it. The VPN used UDP transport, TLS certificates for server and client authentication together with a pre-shared key, and AES-256 encryption for tunnel traffic, ensuring strong security. Each client was assigned a fixed IP address via DHCP, and the orchestrator used these IPs for SSH connections. The OpenVPN setup was tested independently to verify throughput and latency; the overhead was negligible for our purposes (added latency below 1 ms within our local network and throughput of up to tens of Mbps, more than enough for sending text-based results).

- Containers: We created two Docker images, one for the Benchmarking service and one for the Results service. The Benchmarking service image was based on a lightweight Python/Ansible environment. It included:

  - Python 3 and the Ansible automation tool;

  - SSH client utilities;

  - The sysbench tool (for generating CPU load), so that it could run locally if needed (in practice, sysbench had to run on the edges, so we also installed it on every edge device);

  - An Ansible inventory file listing the edge hosts by their VPN IP addresses;

  - The playbook described below.

- Automation Script (Ansible Playbook): The core logic of stages 2–4 from Algorithm 1 was implemented in an Ansible playbook (YAML format). The playbook tasks in summary:

- Ping all devices: Using Ansible’s built-in ping module to ensure connectivity (this corresponds to check_connectivity in the pseudocode). Ansible reports unreachable hosts if any—those we capture and mark.

- Execute benchmark on all devices: We wrote an Ansible task to run the shell command sysbench cpu --threads=1 --time=10 run on each host. We chose a single-thread CPU test running for 10 s to simulate a moderate load. (Threads = 1 so that we measure roughly single-core performance on each device; one could also test multi-thread by setting threads equal to number of cores, but that might saturate some devices differently—in this experiment we wanted comparable workloads.) Ansible will execute this on each host and gather the stdout.

- Register results: The playbook captures the stdout of the sysbench command in a variable for each host.

- Store results into DB: We added a step that runs on the central server (using Ansible’s local connection), which takes the results and inserts them into MariaDB. This could be done with Ansible’s MySQL modules or by calling a Python script; for simplicity, we used a short Python snippet within the playbook that pushed the data through MySQL Connector (version 8).

- Error handling: The playbook is written to not fail on one host’s failure. Instead, we use ignore_errors: yes on the benchmark task so that if a device fails to run sysbench, the playbook continues for others. We then check Ansible’s hostvars to see which hosts have no result and handle those accordingly in the DB insert step (inserting an error marker).

- Cleanup: In our test scenario, since sysbench runs for a fixed duration and exits, there were no lingering processes to kill. However, to simulate cleanup, we included a task that ensures no sysbench process is running after completion (e.g., using a killall sysbench command, which would effectively do nothing if all ended normally). Also, Ansible by design closes SSH connections after it is done.

- Edge Device Preparation: Each edge device was prepared with:

  - An OpenVPN client configured to connect at boot;

  - A running SSH server with the central server’s public key authorized (for passwordless access);

  - Sysbench installed via apt (on Debian/Ubuntu). This matters because our method runs sysbench on the device itself; alternatively, we could have copied a binary with scp or run everything remotely, but installing via the package manager was straightforward;

  - Sufficient power and cooling to run reliably for the duration of the tests (the Raspberry Pi 4 in particular can throttle if not cooled; our tests were short, so heat was not an issue);

  - Firewalls (ufw or iptables) configured to allow OpenVPN traffic (port 1194/UDP) and to accept SSH (port 22) only on the VPN interface. For example, we required SSH connections to originate from the central server’s VPN IP, so even someone who knew a device’s real IP could not log in via SSH without VPN credentials. We also kept each device’s default route on its normal Internet uplink (so it could reach the VPN server), while all traffic to and from the central server went through the VPN.
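The “Register results” and “Store results into DB” steps of the playbook can be approximated in Python. This sketch assumes the plain-text summary printed by sysbench 1.0’s cpu test (lines such as “events per second:” and “total time:”); the sample output is illustrative with made-up numbers, not a measurement from our testbed. SQLite stands in for MariaDB so the sketch runs without a database server; with MySQL Connector/Python, the same INSERT would use %s placeholders instead of ?. The table and column names are hypothetical.

```python
import re
import sqlite3
from typing import Dict, Optional

# Regexes for fields printed by sysbench 1.0's cpu test (assumed format; check your version).
PATTERNS = {
    "events_per_second": r"events per second:\s*([\d.]+)",
    "total_time_s": r"total time:\s*([\d.]+)s",
    "total_events": r"total number of events:\s*(\d+)",
}


def parse_sysbench_cpu(stdout: str) -> Dict[str, Optional[float]]:
    """Extract key metrics from sysbench stdout; fields that are absent become None."""
    metrics: Dict[str, Optional[float]] = {}
    for name, pattern in PATTERNS.items():
        match = re.search(pattern, stdout)
        metrics[name] = float(match.group(1)) if match else None
    return metrics


def store_result(db: sqlite3.Connection, device_id: str, stdout: Optional[str]) -> None:
    """Insert parsed metrics, or an error marker when a host produced no output."""
    db.execute("CREATE TABLE IF NOT EXISTS cpu_results (device TEXT, status TEXT, "
               "events_per_second REAL, total_time_s REAL, total_events REAL)")
    if not stdout:
        db.execute("INSERT INTO cpu_results (device, status) VALUES (?, 'error')",
                   (device_id,))
    else:
        m = parse_sysbench_cpu(stdout)
        db.execute("INSERT INTO cpu_results VALUES (?, 'ok', ?, ?, ?)",
                   (device_id, m["events_per_second"], m["total_time_s"],
                    m["total_events"]))
    db.commit()


# Illustrative output excerpt (made-up numbers, not a measurement from the testbed).
SAMPLE = """CPU speed:
    events per second:  1234.56

General statistics:
    total time:                          10.0012s
    total number of events:              12350
"""

if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    store_result(conn, "rpi5", SAMPLE)
    store_result(conn, "rpi1", None)  # e.g., a host with no registered stdout
    for row in conn.execute("SELECT * FROM cpu_results ORDER BY device"):
        print(row)
```

Recording an explicit error row, rather than omitting the device, mirrors the playbook's approach of inserting an error marker for hosts without a result.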

6.3. Challenges

- Initially, two of the older devices had incorrect system time, which could have affected VPN certificate validation and our timestamp comparisons. We synchronized all device clocks via NTP.

- One device (Orange Pi PC) used older SSH key algorithms because of its outdated software; we initially had to configure our central SSH client to accept them, and the issue was ultimately resolved by updating the device.

- We tuned the OpenVPN configuration to avoid reconnects; a short disconnection occurred at first because one Pi had a flapping network link. We solved this by giving every edge device either a wired connection or a stable Wi-Fi link.

- Ansible’s gather_facts step (which runs by default) can take a couple of seconds per device; we disabled fact gathering to speed up the process, since we did not need it for this simple task. This saved a significant amount of time in the parallel run: by default, Ansible gathers facts on every host as a separate phase before the first task, whereas with fact gathering disabled the playbook proceeds straight to execution.

7. Statistical and Performance Analysis

7.1. Time to Deploy

7.2. Comparative Discussion

- Had we benchmarked each device manually, the human-induced overhead would have been even larger (logging in and running commands could take minutes per device). SHEAB therefore not only saves raw execution time but would also save substantial operator time when scaling to many devices.

- With a single device, t_a would be slightly larger than TTD, because the overhead of setting up the containers and orchestration can make automation slower than running one benchmark manually. For a single device, automation therefore does not pay off, but beyond a threshold number of devices it clearly does. In our case this threshold appears to be low: given how well the tasks ran in parallel, automation is likely beneficial even with two devices.
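The break-even argument can be made concrete with a simple model. Assuming each device takes TTD seconds when benchmarked on its own and the automated run costs a fixed orchestration overhead C plus one TTD (all devices running in parallel), sequential benchmarking of N devices takes N·TTD while automation takes about C + TTD, so automation wins once N > 1 + C/TTD. The model ignores any growth of orchestration cost with N, and the values of C and TTD below are hypothetical.

```python
import math


def automation_pays_off(n_devices: int, overhead_s: float, ttd_s: float) -> bool:
    """True when the sequential time N*TTD exceeds the automated time C + TTD."""
    return n_devices * ttd_s > overhead_s + ttd_s


def break_even_devices(overhead_s: float, ttd_s: float) -> int:
    """Smallest N with N*TTD > C + TTD, i.e. the smallest integer N > 1 + C/TTD."""
    if ttd_s <= 0 or overhead_s < 0:
        raise ValueError("need ttd_s > 0 and overhead_s >= 0")
    return math.floor(1 + overhead_s / ttd_s) + 1


if __name__ == "__main__":
    # Hypothetical values: 35 s fixed orchestration overhead; per-device TTD of
    # 10 s (benchmark only) versus 60 s (benchmark plus manual login and setup).
    for ttd in (10.0, 60.0):
        n = break_even_devices(35.0, ttd)
        print(f"TTD = {ttd:.0f} s -> automation pays off from N = {n} devices")
```

The comparison shows why counting manual login and setup time in TTD lowers the threshold: the larger TTD is relative to C, the sooner automation pays off.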

7.3. Statistical Variability

7.4. Validation of Speedup

- Active benchmarking phase: ~10 s, concurrency = 8 (all devices busy).

- Orchestration phase: ~35 s, concurrency = 0 (devices idle while the central server performs orchestration or waits).
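The phase breakdown above can be condensed into a time-weighted average concurrency. As a small sketch, assuming the approximate durations listed (about 10 s with all 8 devices busy and about 35 s with none busy), the average number of busy devices and the fraction of available device-time spent benchmarking follow directly. The helper names are illustrative.

```python
from typing import Sequence, Tuple


def average_concurrency(phases: Sequence[Tuple[float, int]]) -> float:
    """Time-weighted mean number of busy devices over (duration_s, concurrency) phases."""
    total = sum(duration for duration, _ in phases)
    if total <= 0:
        raise ValueError("total duration must be positive")
    return sum(duration * busy for duration, busy in phases) / total


def device_utilization(phases: Sequence[Tuple[float, int]], n_devices: int) -> float:
    """Fraction of available device-time actually spent benchmarking."""
    return average_concurrency(phases) / n_devices


if __name__ == "__main__":
    # Approximate phase breakdown listed above.
    phases = [(10.0, 8), (35.0, 0)]
    print(f"average concurrency: {average_concurrency(phases):.2f}")   # 1.78
    print(f"device utilization: {device_utilization(phases, 8):.1%}")  # 22.2%
```

With these figures the devices are busy for only about a fifth of the run, which quantifies how much of the wall-clock time goes to orchestration rather than benchmarking.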

7.5. Statistical Reliability

7.6. Impact of Network Scale

7.7. Edge Resource Utilization

8. Experiments and Evaluations

8.1. CPU Benchmarking

8.2. Memory Benchmarking

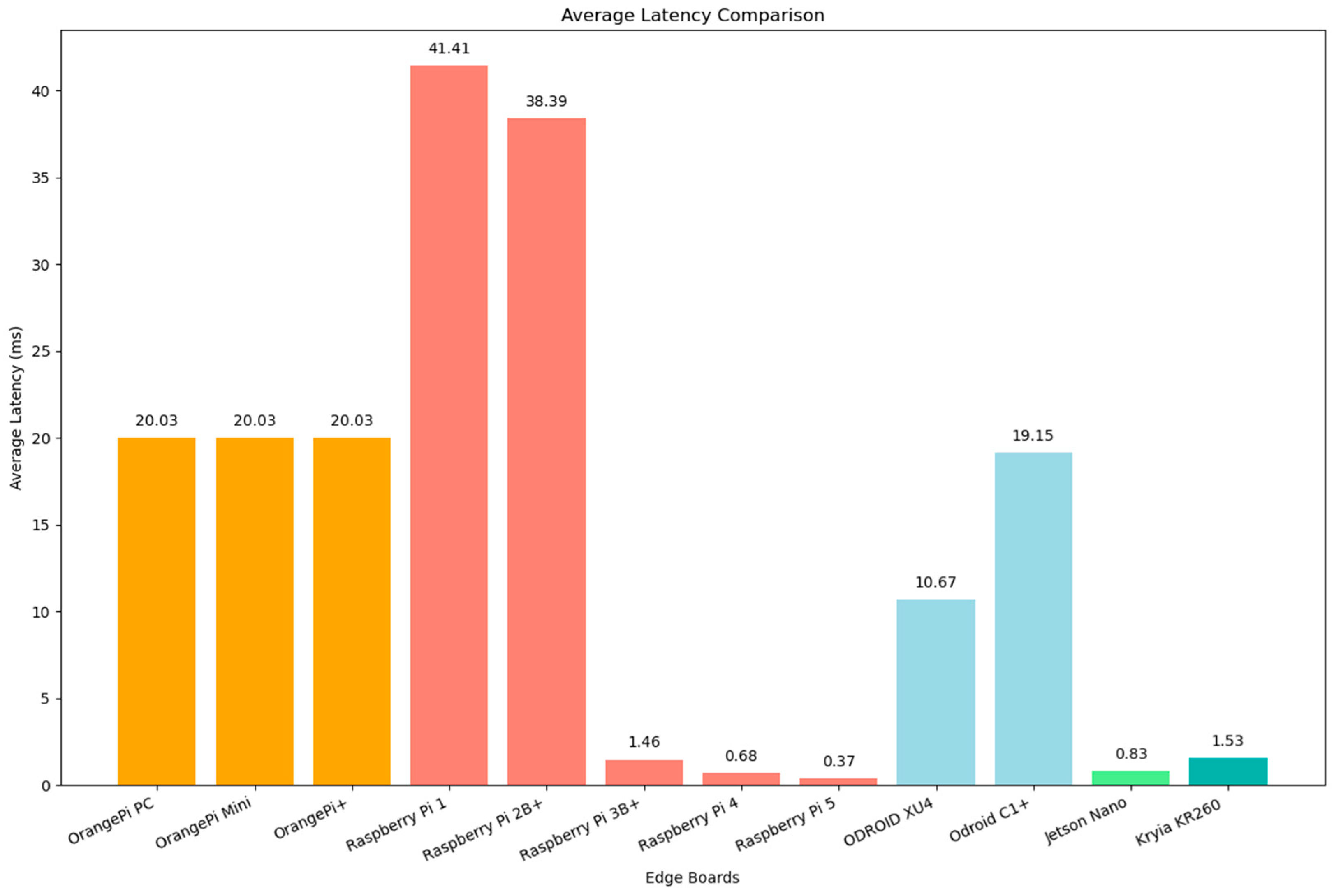

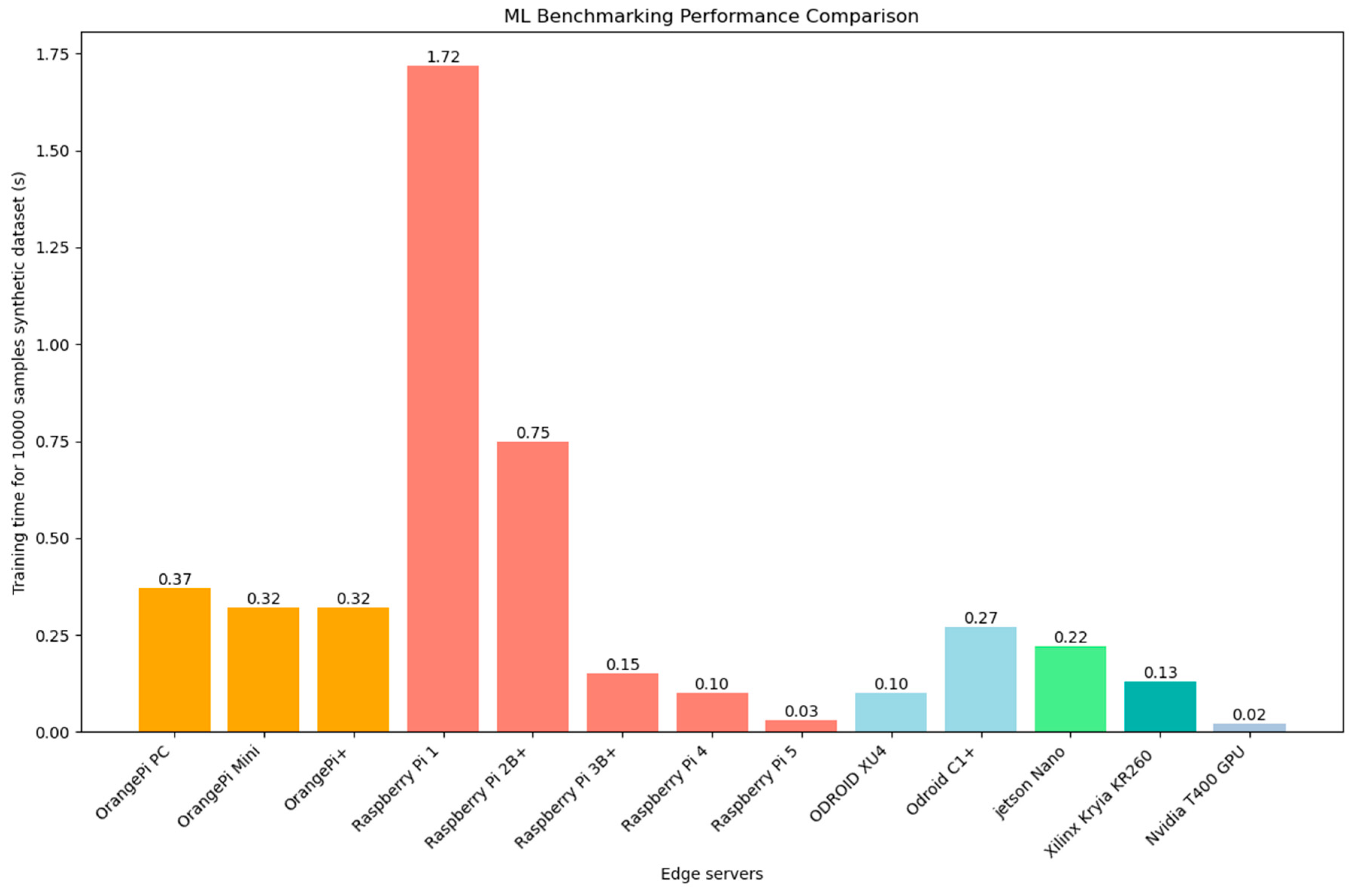

8.3. Machine Learning Inference Benchmarking

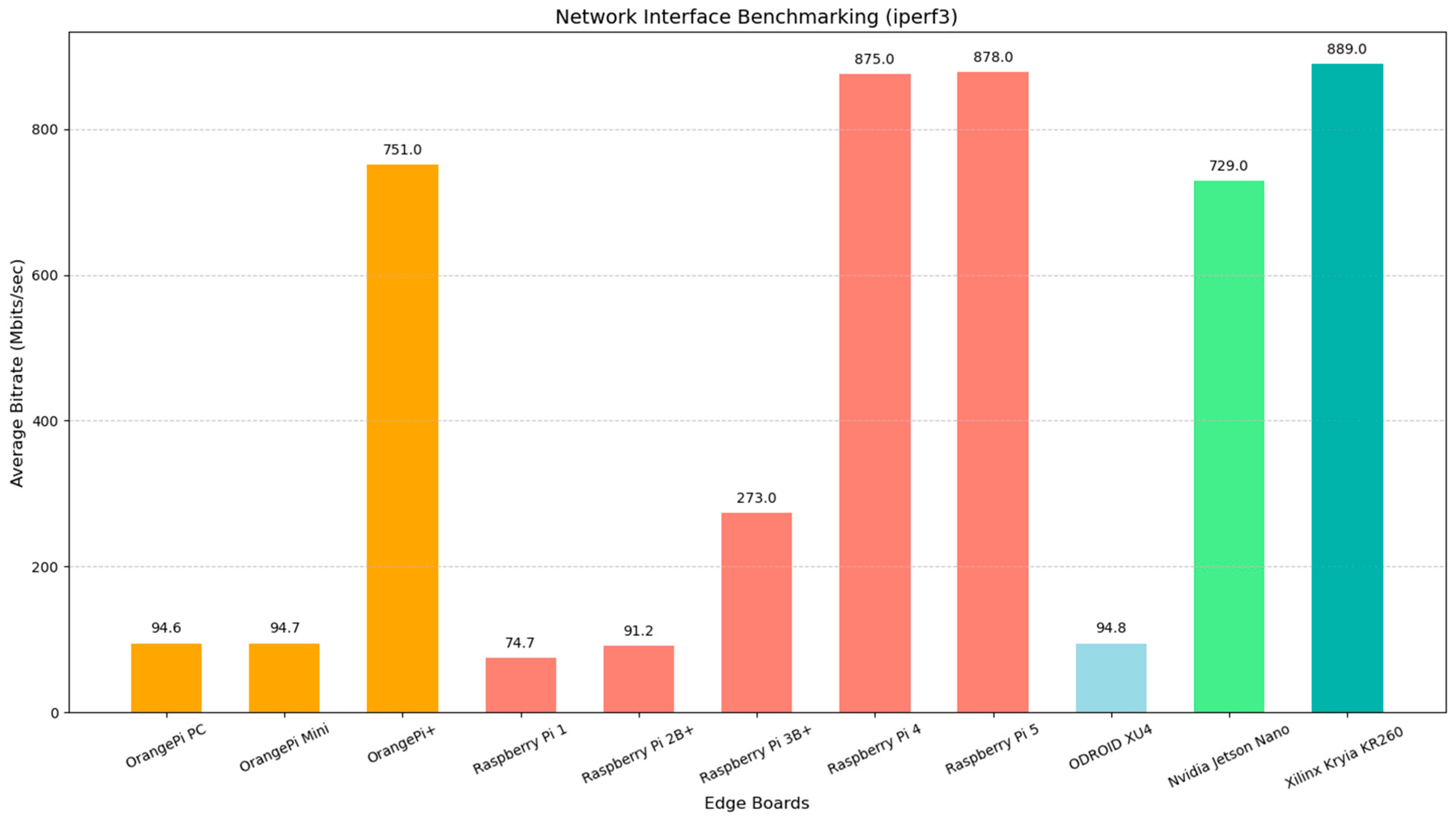

8.4. Network Benchmarking

8.5. GPU Versus CPU Benchmarking

8.6. Discussion

8.7. Summary of Findings

- Raspberry Pi 5 delivered the most balanced and superior performance across most benchmarks.

- Older devices like Raspberry Pi 1 and 2B+ are significantly less suitable for demanding edge applications due to latency and performance bottlenecks.

- GPU-enabled platforms like Jetson Nano and NVIDIA T400 significantly outperform traditional CPUs in computational workloads, emphasizing their value in edge deployments.

8.8. Future Work Considerations

- Reducing the orchestration overhead (perhaps by using persistent connections or a custom orchestrator instead of general-purpose Ansible) [51].

- Expanding to more diverse benchmarks (e.g., incorporating ML model inference tests which might use GPU accelerators on some devices, to see how the framework handles that).

- Integrating energy measurements: At the edge, power consumption is a key concern. We could equip devices with power meters and have the framework collect energy data during benchmarks. Automating this (e.g., via networked power measurement units) would give a more holistic performance picture, covering not just speed but also energy efficiency.

- Benchmarking in the presence of background load: In real deployments, devices may be running other workloads while being benchmarked (or the benchmark may itself serve as a stress test). Running many devices concurrently can also test the resilience of the shared network; in a future test, we might intentionally saturate network links to check whether the VPN tunnels remain stable and results can still be collected correctly.

8.9. Implications for Edge AI and Systems Research

9. Conclusions and Future Work

9.1. Conclusions

9.2. Future Work

- Enhanced Orchestration Efficiency: As analyzed, our current implementation has room for optimization in terms of reducing orchestration overhead. Future development could involve moving from Ansible to a more streamlined concurrency model, possibly using Golang or Python asyncio for SSH management. Alternatively, container orchestration frameworks such as Kubernetes could be explored, with each device’s job running as a pod (using node affinity). However, edge devices that cannot run a full Kubernetes distribution need a lighter approach. Another idea is to maintain persistent SSH connections to devices (via a daemon) so that repeated benchmarks incur minimal reconnection cost.

- Scalability Testing: We plan to test SHEAB with a larger pool of devices, potentially using virtual devices to simulate up to 50 or 100 nodes if physical ones are not available. This will help uncover any bottlenecks in the central coordinator and ensure the framework scales. If needed, a hierarchical approach could be used: e.g., have multiple orchestrators each handling a subset of devices (perhaps grouped by network location), coordinated by a higher-level script.

- Integration of Diverse Benchmarks: We intend to incorporate a library of benchmark workloads into SHEAB. This might include:

  - Microbenchmarks: CPU, memory bandwidth, storage I/O, and network throughput between devices (to test device-to-device communication via the central server or a mesh).

  - AI Benchmarks: Running a set of TinyML inference tests (such as the MLPerf Tiny benchmark) on all devices to compare AI inference latency and accuracy across hardware.

  - Application-level Benchmarks: E.g., deploying a simple edge application (such as an MQTT broker test or an image recognition service) on all devices to simulate how they would perform as edge servers.

  By doing so, SHEAB can become a one-stop solution where a user selects which tests to run and receives a full report per device.

- Dynamic Workload and Continuous Benchmarking: We consider adding features to run benchmarks continuously or on a schedule, monitoring device performance over time. This could detect performance drift (e.g., a device slowing down due to wear-out or software changes). Combined with environmental sensors (temperature, power), one could correlate performance with external factors.

- Energy and Thermal Measurements: To cater to edge AI’s concern for energy efficiency, we will look at integrating energy measurement. If devices have onboard power sensors or if we use smart power outlets, the framework could read those via API and include energy per task in the results. Similarly, reading CPU temperature before/after can indicate thermal throttling issues.

- Security and Fault-Tolerance Enhancements: While our security model is robust, future work could explore a zero-trust approach in which even the central server does not fully trust devices. For instance, results could be signed by the devices to ensure authenticity. Support for remote attestation (checking that a device is running a known software stack before benchmarking, so that compromised devices cannot report skewed results) could also be valuable in high-assurance scenarios. Fault tolerance could be improved by queueing benchmarks for a later retry when a device is temporarily unreachable, making the framework self-healing with respect to network blips.

- User Interface and Visualization: A potential extension is a web-based dashboard for SHEAB. This UI could allow users to configure benchmark runs (select devices, select tests, schedule time) and then visualize results (graphs comparing devices, historical trends, etc.). This would make SHEAB more accessible to less technical users (e.g., a QA engineer who is not comfortable with command lines but needs performance data).

- Deployment as a Service: We envision packaging SHEAB such that it could be offered “as a service” in an organization. For example, an edge computing test lab could have SHEAB running, and whenever a new device is added to the lab network, an admin could trigger benchmarks. Or a device manufacturer could integrate SHEAB into their CI pipeline to automatically run performance tests on physical hardware prototypes whenever firmware changes [53].

- Comparative Studies Using SHEAB: Using the framework, we want to perform a broad comparative study of various edge devices (old and new) on a consistent set of benchmarks. This would produce a valuable dataset and possibly a journal publication on edge device performance trends. It would also serve as a proof-of-concept for how SHEAB simplifies such a study that might have been prohibitively tedious otherwise.

- Extending to Edge-Cloud Collaborative Benchmarks: Currently, SHEAB treats devices individually, but many edge scenarios involve client-edge-cloud interplay. We could extend the framework to coordinate not only per-device benchmarks but also end-to-end scenario tests, for instance by adopting Edge AIBench’s notion of client-edge-cloud tasks. The central server, being comparatively powerful, could also simulate a cloud component, so that an edge device’s interaction with it becomes part of a test.
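Two ideas from this list, a lighter asyncio-based concurrency model (Enhanced Orchestration Efficiency) and retrying temporarily unreachable devices (Security and Fault-Tolerance Enhancements), can be sketched together. This is a hypothetical design, not part of the current SHEAB implementation: run_on_device stands in for an asynchronous SSH call (for example, one built on a library such as asyncssh), DeviceUnreachable is an illustrative exception type, and a semaphore caps simultaneous sessions much like Ansible’s forks setting.

```python
import asyncio
from typing import Awaitable, Callable, Dict, List, Optional


class DeviceUnreachable(Exception):
    """Raised by the (hypothetical) transport when a device cannot be contacted."""


async def benchmark_all(devices: List[str],
                        run_on_device: Callable[[str], Awaitable[str]],
                        max_sessions: int = 8,
                        retries: int = 2,
                        retry_delay_s: float = 1.0) -> Dict[str, Optional[str]]:
    """Run the benchmark on all devices concurrently, retrying unreachable ones.

    Other exceptions are not caught and propagate out of asyncio.gather.
    """
    sem = asyncio.Semaphore(max_sessions)

    async def one(device: str) -> Optional[str]:
        for attempt in range(retries + 1):
            try:
                async with sem:  # cap the number of simultaneous sessions
                    return await run_on_device(device)
            except DeviceUnreachable:
                if attempt < retries:
                    await asyncio.sleep(retry_delay_s)  # back off outside the semaphore
        return None  # failure marker after exhausting retries

    outputs = await asyncio.gather(*(one(d) for d in devices))
    return dict(zip(devices, outputs))


async def _demo() -> None:
    attempts: Dict[str, int] = {}

    async def flaky(device: str) -> str:
        # Simulated transport: "opi" fails once, then succeeds; "rpi1" is always down.
        attempts[device] = attempts.get(device, 0) + 1
        await asyncio.sleep(0.01)
        if device == "rpi1" or (device == "opi" and attempts[device] == 1):
            raise DeviceUnreachable(device)
        return f"done on {device}"

    print(await benchmark_all(["rpi5", "opi", "rpi1"], flaky, retry_delay_s=0.01))


if __name__ == "__main__":
    asyncio.run(_demo())
```

Releasing the semaphore before backing off lets other devices use the freed session slot while a flaky device waits for its retry.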

9.3. Concluding Remarks

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Wang, Y.; Wang, H.; Chen, S.; Xia, Y. A Survey on Mainstream Dimensions of Edge Computing. In Proceedings of the 2021 5th International Conference on Information System and Data Mining (ICISDM), Silicon Valley, CA, USA, 21–23 May 2021; ACM: New York, NY, USA, 2021; pp. 46–54.

2. Kong, L.; Tan, J.; Huang, J.; Chen, G.; Wang, S.; Jin, X.; Zeng, P.; Khan, M.; Das, S. Edge-Computing-Driven Internet of Things: A Survey. ACM Comput. Surv. 2023, 55, 1–41.

3. Meuser, T.; Lovén, L.; Bhuyan, M.; Patil, S.G.; Dustdar, S.; Aral, A.; Welzl, M. Revisiting Edge AI: Opportunities and Challenges. IEEE Internet Comput. 2024, 28, 49–59.

4. Gill, S.S.; Golec, M.; Hu, J.; Xu, M.; Du, J.; Wu, H.; Walia, G.K.; Murugesan, S.S.; Ali, B.; Kumar, M.; et al. Edge AI: A Taxonomy, Systematic Review and Future Directions. Cluster Comput. 2025, 28, 18.

5. Hamdan, S.; Ayyash, M.; Almajali, S. Edge-Computing Architectures for Internet of Things Applications: A Survey. Sensors 2020, 20, 6441.

6. Andriulo, F.C.; Fiore, M.; Mongiello, M.; Traversa, E.; Zizzo, V. Edge Computing and Cloud Computing for Internet of Things: A Review. Informatics 2024, 11, 71.

7. Nencioni, G.; Garroppo, R.; Olimid, R. 5G Multi-Access Edge Computing: A Survey on Security, Dependability, and Performance. IEEE Access 2023, 11, 63496–63533.

8. Xavier, R.; Silva, R.S.; Ribeiro, M.; Moreira, W.; Freitas, L.; Oliveira-Jr, A. Integrating Multi-Access Edge Computing (MEC) into Open 5G Core. Telecom 2024, 5, 2.

9. Sheikh, A.M.; Islam, M.R.; Habaebi, M.H.; Zabidi, S.A.; Bin Najeeb, A.R.; Kabbani, A. A Survey on Edge Computing (EC) Security Challenges: Classification, Threats, and Mitigation Strategies. Future Internet 2025, 17, 175.

10. Kolevski, D. Edge Computing and IoT Data Breaches: Security, Privacy, Trust, and Regulation. IEEE Technology and Society. Available online: https://technologyandsociety.org/edge-computing-and-iot-data-breaches-security-privacy-trust-and-regulation/ (accessed on 26 April 2025).

11. Rocha, A.; Monteiro, M.; Mattos, C.; Dias, M.; Soares, J.; Magalhaes, R.; Macedo, J. Edge AI for Internet of Medical Things: A Literature Review. Comput. Electr. Eng. 2024, 116, 109202.

12. Rancea, A.; Anghel, I.; Cioara, T. Edge Computing in Healthcare: Innovations, Opportunities, and Challenges. Future Internet 2024, 16, 329.

13. Bevilacqua, R.; Barbarossa, F.; Fantechi, L.; Fornarelli, D.; Paci, E.; Bolognini, S.; Maranesi, E. Radiomics and Artificial Intelligence for the Diagnosis and Monitoring of Alzheimer’s Disease: A Systematic Review of Studies in the Field. J. Clin. Med. 2023, 12, 5432.

14. Akila, K.; Gopinathan, R.; Arunkumar, J.; Malar, B.S.B. The Role of Artificial Intelligence in Modern Healthcare: Advances, Challenges, and Future Prospects. Eur. J. Cardiovasc. Med. 2025, 15, 615–624.

15. Chen, P.; Huang, X. A Brief Discussion on Edge Computing and Smart Medical Care. In Proceedings of the 2024 3rd International Conference on Public Health and Data Science (ICPHDS ’24), New York, NY, USA, 22–24 March 2025; pp. 193–197.

16. Usman, M.; Ferlin, S.; Brunstrom, A.; Taheri, J. A Survey on Observability of Distributed Edge & Container-Based Microservices. IEEE Access 2022, 10, 86904–86919.

17. Chen, C.-C.; Chiang, Y.; Lee, Y.-C.; Wei, H. Edge Computing Management with Collaborative Lazy Pulling for Accelerated Container Startup. IEEE Trans. Netw. Serv. Manag. 2024, 12, 6437–6450.

18. Hebbar, A.; Thavarekere, S.; Kabber, A.; Subramaniam, K.V. Performance Comparison of Virtualization Methods. In Proceedings of the 2022 IEEE International Conference on Cloud Computing in Emerging Markets (CCEM), Bangalore, India, 7–10 December 2022; pp. 43–47.

19. Haq, M.S.; Tosun, A.; Korkmaz, T. Security Analysis of Docker Containers for ARM Architecture. In Proceedings of the 2022 IEEE/ACM 7th Symposium on Edge Computing (SEC), Seattle, WA, USA, 5–8 December 2022; p. 236.

20. Sami, H.; Otrok, H.; Bentahar, J.; Mourad, A. AI-Based Resource Provisioning of IoE Services in 6G: A Deep Reinforcement Learning Approach. IEEE Trans. Netw. Serv. Manag. 2021, 18, 3527–3540.

21. Detti, A. Microservices from Cloud to Edge: An Analytical Discussion on Risks, Opportunities and Enablers. IEEE Access 2023, 11, 49924–49942.

22. Abdullah, O.; Adelusi, J. Edge Computing vs. Cloud Computing: A Comparative Study of Performance, Scalability, and Latency. Preprint 2025.

23. Banbury, C.; Reddi, V.J.; Torelli, P.; Holleman, J.; Jeffries, N.; Kiraly, C.; Xuesong, X. MLPerf Tiny Benchmark. arXiv 2021, arXiv:2106.07597.

24. Communications Security Establishment (Canada). Security Considerations for Edge Devices (ITSM.80.101). Canadian Centre for Cyber Security. Available online: https://www.cyber.gc.ca/en/guidance/security-considerations-edge-devices-itsm80101 (accessed on 25 April 2025).

25. Kruger, C.P.; Hancke, G.P. Benchmarking Internet of Things Devices. In Proceedings of the 2014 12th IEEE International Conference on Industrial Informatics (INDIN), Porto Alegre, Brazil, 27–30 July 2014; pp. 611–616.

26. Shukla, A.; Chaturvedi, S.; Simmhan, Y. RIoTBench: An IoT Benchmark for Distributed Stream Processing Systems. Concurr. Comput. Pract. Exp. 2017, 29, e4257.

27. Lee, C.-I.; Lin, M.-Y.; Yang, C.-L.; Chen, Y.-K. IoTBench: A Benchmark Suite for Intelligent Internet of Things Edge Devices. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 170–174.

28. Edge AIBench: Towards Comprehensive End-to-End Edge Computing Benchmarking. Available online: https://ar5iv.labs.arxiv.org/html/1908.01924 (accessed on 25 April 2025).

29. Naman, O.; Qadi, H.; Karsten, M.; Al-Kiswany, S. MECBench: A Framework for Benchmarking Multi-Access Edge Computing Platforms. In Proceedings of the 2023 IEEE International Conference on Edge Computing and Communications (EDGE), Chicago, IL, USA, 2–8 July 2023; pp. 85–95.

30. Bäurle, S.; Mohan, N. ComB: A Flexible, Application-Oriented Benchmark for Edge Computing. In Proceedings of the 5th International Workshop on Edge Systems, Analytics and Networking (EdgeSys ’22), Rennes, France, 5 April 2022; pp. 19–24.

31. Rajput, K.R.; Kulkarni, C.D.; Cho, B.; Wang, W.; Kim, I.K. EdgeFaaSBench: Benchmarking Edge Devices Using Serverless Computing. In Proceedings of the 2022 IEEE International Conference on Edge Computing and Communications (EDGE), Chicago, IL, USA, 10–16 July 2022; pp. 93–103.

32. Hasanpour, M.A.; Kirkegaard, M.; Fafoutis, X. EdgeMark: An Automation and Benchmarking System for Embedded Artificial Intelligence Tools. arXiv 2025, arXiv:2502.01700.

33. Anwar, A.; Nadeem, S.; Tanvir, A. Edge-AI Based Face Recognition System: Benchmarks and Analysis. In Proceedings of the 2022 19th International Bhurban Conference on Applied Sciences and Technology (IBCAST), Islamabad, Pakistan, 16–20 August 2022; pp. 302–307.

34. Arroba, P.; Buyya, R.; Cárdenas, R.; Risco-Martín, J.L.; Moya, J.M. Sustainable Edge Computing: Challenges and Future Directions. Softw. Pract. Exp. 2024, 54, 2272–2296.

35. Bozkaya, E. A Digital Twin Framework for Edge Server Placement in Mobile Edge Computing. In Proceedings of the 2023 4th International Informatics and Software Engineering Conference (IISEC), Ankara, Türkiye, 21–22 December 2023; pp. 1–6.

36. Carpio, F.; Michalke, M.; Jukan, A. BenchFaaS: Benchmarking Serverless Functions in an Edge Computing Network Testbed. IEEE Netw. 2023, 37, 81–88.

37. Sodiya, E.O.; Umoga, U.J.; Obaigbena, A.; Jacks, B.S.; Ugwuanyi, E.D.; Daraojimba, A.I.; Lottu, O.A. Current State and Prospects of Edge Computing within the Internet of Things (IoT) Ecosystem. Int. J. Sci. Res. Arch. 2024, 11, 1863–1873.

38. Garcia-Perez, A.; Miñón, R.; Torre-Bastida, A.I.; Diaz-de-Arcaya, J.; Zulueta-Guerrero, E. Conceptualising a Benchmarking Platform for Embedded Devices. In Proceedings of the 2024 9th International Conference on Smart and Sustainable Technologies (SpliTech), Split, Croatia, 18–21 June 2024; pp. 1–4.

39. Goethals, T.; Sebrechts, M.; Al-Naday, M.; Volckaert, B.; De Turck, F. A Functional and Performance Benchmark of Lightweight Virtualization Platforms for Edge Computing. In Proceedings of the 2022 IEEE International Conference on Edge Computing and Communications (EDGE), Chicago, IL, USA, 10–16 July 2022; pp. 60–68.

40. Hao, T.; Hwang, K.; Zhan, J.; Li, Y.; Cao, Y. Scenario-Based AI Benchmark Evaluation of Distributed Cloud/Edge Computing Systems. IEEE Trans. Comput. 2023, 72, 719–731.

41. Kaiser, S.; Tosun, A.Ş.; Korkmaz, T. Benchmarking Container Technologies on ARM-Based Edge Devices. IEEE Access 2023, 11, 107331–107347.

42. Kasioulis, M.; Symeonides, M.; Ioannou, G.; Pallis, G.; Dikaiakos, M.D. Energy Modeling of Inference Workloads with AI Accelerators at the Edge: A Benchmarking Study. In Proceedings of the 2024 IEEE International Conference on Cloud Engineering (IC2E), Pafos, Cyprus, 16–19 September 2024; pp. 189–196.

43. Schneider, M.; Prokscha, R.; Saadani, S.; Höß, A. ECBA-MLI: Edge Computing Benchmark Architecture for Machine Learning Inference. In Proceedings of the 2022 IEEE International Conference on Edge Computing and Communications (EDGE), Chicago, IL, USA, 10–16 July 2022; pp. 23–32.

44. Zhang, Q.; Han, R.; Liu, C.H.; Wang, G.; Chen, L.Y. EdgeVisionBench: A Benchmark of Evolving Input Domains for Vision Applications at Edge. In Proceedings of the 2023 IEEE 39th International Conference on Data Engineering (ICDE), Anaheim, CA, USA, 3–7 April 2023; pp. 3643–3646.

45. Jo, J.; Jeong, S.; Kang, P. Benchmarking GPU-Accelerated Edge Devices. In Proceedings of the 2020 IEEE International Conference on Big Data and Smart Computing (BigComp), Busan, Republic of Korea, 19–22 February 2020; pp. 117–120.

46. Toczé, K.; Schmitt, N.; Kargén, U.; Aral, A.; Brandić, I. Edge Workload Trace Gathering and Analysis for Benchmarking. In Proceedings of the 2022 IEEE 6th International Conference on Fog and Edge Computing (ICFEC), Turin, Italy, 16–19 May 2022; pp. 34–41.

47. Wen, S.; Deng, H.; Qiu, K.; Han, R. EdgeCloudBenchmark: A Benchmark Driven by Real Trace to Generate Cloud-Edge Workloads. In Proceedings of the 2022 IEEE International Conference on Sensing, Diagnostics, Prognostics, and Control (SDPC), Chongqing, China, 5–7 August 2022; pp. 377–382.

48. Ganguli, M.; Ranganath, S.; Ravisundar, S.; Layek, A.; Ilangovan, D.; Verplanke, E. Challenges and Opportunities in Performance Benchmarking of Service Mesh for the Edge. In Proceedings of the 2021 IEEE International Conference on Edge Computing (EDGE), Chicago, IL, USA, 18–23 September 2021; pp. 78–85.

49. Brochu, O.; Spatharakis, D.; Dechouniotis, D.; Leivadeas, A.; Papavassiliou, S. Benchmarking Real-Time Image Processing for Offloading at the Edge. In Proceedings of the 2022 IEEE International Mediterranean Conference on Communications and Networking (MeditCom), Athens, Greece, 5–8 September 2022; pp. 90–93.

50. Ferraz, O.; Araujo, H.; Silva, V.; Falcao, G. Benchmarking Convolutional Neural Network Inference on Low-Power Edge Devices. In Proceedings of the ICASSP 2023—IEEE International Conference on Acoustics, Speech and Signal Processing, Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

51. Yang, S.; Ren, Y.; Zhang, J.; Guan, J.; Li, B. KubeHICE: Performance-Aware Container Orchestration on Heterogeneous-ISA Architectures in Cloud-Edge Platforms. In Proceedings of the 2021 IEEE ISPA/BDCloud/SocialCom/SustainCom, New York, NY, USA, 30 September–3 October 2021; pp. 81–91.

52. Fernando, D.; Rodriguez, M.A.; Buyya, R. iAnomaly: A Toolkit for Generating Performance Anomaly Datasets in Edge-Cloud Integrated Computing Environments. In Proceedings of the 2024 IEEE/ACM 17th International Conference on Utility and Cloud Computing (UCC), Shanghai, China, 16–19 December 2024; pp. 236–245.

53. Joint Shareability and Interference for Multiple Edge Application Deployment in Mobile-Edge Computing Environment. IEEE Xplore. Available online: https://ieeexplore.ieee.org/document/9452122 (accessed on 2 May 2025).

| Tool | Platform (Controller → Targets/Protocol) | Performance (Fan-Out and Overhead) |
|---|---|---|
| Ansible (+AWX UI) | Linux/macOS control node (AWX in a container/VM) | Parallelism via forks |
| Salt SSH | Salt controller → hosts over SSH | Works, but substantially slower than minion/ZeroMQ mode |
| Puppet Bolt | Any-OS controller → Linux | Parallel task execution with a light control plane |
| Rundeck (Community) | Server app orchestrating jobs | Controller-side overhead only |
| StackStorm | Event-driven engine | Concurrency via action runners |
| Fabric (Python 3.12) | Python app → hosts over SSH | Fast and flexible; thread-based parallelism |
| parallel-ssh (pssh) | Python lib/CLI → async parallel SSH | Very high scale: non-blocking I/O |
| ClusterShell (clush) | CLI/lib → parallel SSH (OpenSSH) | Very high scalability: optimized fan-out and gather |
| Nornir + NAPALM/Netmiko | Python framework (multi-threaded) | High throughput on network gear |
| OpenTofu (Terraform-compatible) | Controller IaC → cloud/infrastructure APIs (agentless) | Not a remote executor; scales infrastructure provisioning well |
| cdist | Controller → POSIX targets via SSH | Very light footprint; good parallel push to tiny devices |
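Several tools in this table (for example Fabric with its thread-based parallelism, or Ansible with its forks) fan a command out to many targets by giving each SSH session its own worker. The following is a minimal sketch of that general pattern using only the Python standard library and the system `ssh` client; it is not how SHEAB or any listed tool is implemented, and `fan_out`, `run_over_ssh`, and any host aliases are hypothetical.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor


def run_over_ssh(host, command, timeout=120):
    """Run `command` on `host` with the system OpenSSH client.

    BatchMode=yes makes ssh fail rather than prompt for a password, which
    suits unattended, key-based benchmark runs. Returns (exit_code, stdout).
    """
    result = subprocess.run(
        ["ssh", "-o", "BatchMode=yes", host, command],
        capture_output=True,
        text=True,
        timeout=timeout,
    )
    return result.returncode, result.stdout


def fan_out(hosts, command, runner=run_over_ssh, max_workers=8):
    """Send `command` to every host concurrently, one worker thread per session.

    Returns {host: runner_result}. Exceptions raised by the runner (e.g.
    subprocess.TimeoutExpired) propagate when that host's result is collected.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {host: pool.submit(runner, host, command) for host in hosts}
        return {host: future.result() for host, future in futures.items()}
```

For example, `fan_out(["rpi4", "rpi5"], "sysbench cpu run")` would return a dictionary keyed by those (hypothetical) SSH host aliases. A thread pool is adequate here because each worker spends most of its time waiting on the network; the parallel-ssh entry points to the alternative of non-blocking I/O when the number of targets grows very large.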

| Paper | Technology Scope | Topic Focus | Automation in Benchmarking | Heterogeneous Edge Server Support | Containerization | Security |
|---|---|---|---|---|---|---|
| [33] | Edge-AI-based face recognition on NVIDIA Jetson boards | Analysis and benchmarking of a real-time face recognition system on only two edge computing boards | X | X | X | X |
| [34] | Sustainable edge computing | Challenges and future directions in achieving sustainability in edge computing | X | X | X | X |
| [35] | Positioning of edge nodes in mobile edge computing | A digital twin framework for optimizing edge server placement and workload balancing | X | X | X | X |
| [36] | Serverless functions in a network testbed for edge computing | Benchmarking serverless functions under different hardware and network conditions | Yes | X | Docker | X |
| [37] | Edge computing in IoT ecosystems | Current state and prospects of edge computing in IoT, including challenges and future potential | X | X | X | X |
| [38] | Benchmarking platform for embedded devices | Conceptualizing a modular and extensible platform for benchmarking embedded devices | Automatic deployment of battery tests only | X | X | X |
| [39] | Lightweight virtualization platforms for edge computing | Functional and performance benchmarking of microVMs and containers for edge microservices | X | X | Docker | X |
| [40] | Evaluation of AI benchmarking in distributed cloud-edge computing systems | Scenario-based AI benchmark evaluation of distributed cloud/edge computing systems | X | X | X | X |
| [41] | Container technologies on ARM edge servers | Benchmarking Docker, Podman, and Singularity containers on ARM-based edge devices | X | X | Docker/Podman | X |
| [42] | Energy modeling of inference workloads for AI accelerators at the edge | Benchmarking study on energy modeling of inference workloads for AI accelerators at the edge | X | X | X | X |
| [29] | Introduces MECBench, a new configurable and extensible benchmarking framework for multi-access edge computing | Demonstrates the framework's capabilities through two scenarios: object detection for drone navigation and natural language processing | Could be extended with automation scripts, but these are not part of the original framework | X | X | X |
| [43] | Presents a benchmark architecture for machine learning inference on edge devices | Evaluates six object detection models on twenty-one platform configurations, focusing on latency and energy consumption | X | X | X | X |
| [44] | Introduces EdgeVisionBench, a benchmark for evaluating domain-specific methods on edge nodes under evolving input conditions | Focuses on generating domains with diverse types of feature and label shifts for vision applications | Workload-generation automation only | X | X | X |
| [45] | Benchmarking GPU-accelerated edge devices using deep learning benchmarks | GPU-accelerated edge devices; measures CNN performance and inference time | X | X | X | X |
| [31] | Benchmarking serverless edge computing | EdgeFaaSBench; measures response time, resource usage, concurrency, and cold/warm starts | X | X | Serverless | X |
| [46] | Gathering and analyzing edge workload traces for benchmarking | Edge workload traces for workload characterization and resource usage patterns | X | X | X | X |
| [47] | EdgeCloudBenchmark, a benchmark generation system driven by real Alibaba cluster traces | Performance of real-trace cloud-edge workloads and cloud-edge integration | X | X | X | X |
| [48] | Benchmarking the performance of AI in service meshes for the edge | Edge devices with service mesh architectures; measures network overhead, latency, and throughput | X | X | X | X |
| [49] | Benchmarking real-time image processing for offloading at the edge | Offloading of image-processing tasks at the edge; measures image-processing performance and latency | X | X | X | X |
| [50] | Benchmarking CNN inference on low-power edge devices | Low-power edge devices (Jetson AGX Xavier, Movidius Neural Compute Stick); measures CNN inference rate and power efficiency | X | X | X | X |

| Board Name | SoC | CPU | Memory | Storage | Networking | USB Ports | GPU/Accelerator |
|---|---|---|---|---|---|---|---|
| Odroid-XU4 | Samsung Exynos5422 | Octa-core (4 × Cortex-A15 @2.0 GHz, 4 × Cortex-A7 @1.4 GHz) | 2 GB LPDDR3 | MicroSD, eMMC | Gigabit Ethernet | 1 × USB 2.0, 2 × USB 3.0 | ARM Mali-T628 MP6 |
| Odroid C1+ | Amlogic S805 | Quad-core ARM Cortex-A5 @1.5 GHz | 1 GB DDR3 | MicroSD, eMMC | Gigabit Ethernet | 4 × USB 2.0 | ARM Mali-450 MP2 |
| Orange Pi Plus | Allwinner H3 | Quad-core ARM Cortex-A7 @1.6 GHz | 1 GB DDR3 | MicroSD, eMMC | Gigabit Ethernet, Wi-Fi | 4 × USB 2.0 | ARM Mali-400 MP2 |
| Orange Pi Mini | Allwinner A20 | Dual-core ARM Cortex-A7 @1.0 GHz | 1 GB DDR3 | MicroSD | 10/100 Ethernet | 2 × USB 2.0, 1 × USB OTG | ARM Mali-400 MP2 |
| Orange Pi PC | Allwinner H3 | Quad-core ARM Cortex-A7 @1.6 GHz | 1 GB DDR3 | MicroSD | 10/100 Ethernet | 3 × USB 2.0, 1 × USB OTG | ARM Mali-400 MP2 |
| Raspberry Pi 1 | Broadcom BCM2835 | Single-core ARM1176JZF-S @700 MHz | 512 MB SDRAM | MicroSD | 10/100 Ethernet | 2 × USB 2.0 | Broadcom VideoCore IV |
| Raspberry Pi 2B+ | Broadcom BCM2836 | Quad-core ARM Cortex-A7 @900 MHz | 1 GB LPDDR2 | MicroSD | 10/100 Ethernet | 4 × USB 2.0 | Broadcom VideoCore IV |
| Raspberry Pi 3B+ | Broadcom BCM2837B0 | Quad-core ARM Cortex-A53 @1.4 GHz | 1 GB LPDDR2 | MicroSD | Gigabit Ethernet, Wi-Fi, Bluetooth | 4 × USB 2.0 | Broadcom VideoCore IV |
| Raspberry Pi 4 | Broadcom BCM2711 | Quad-core ARM Cortex-A72 @1.5 GHz | 1, 2, 4, or 8 GB LPDDR4 | MicroSD | Gigabit Ethernet, Wi-Fi, Bluetooth | 2 × USB 3.0, 2 × USB 2.0 | Broadcom VideoCore VI |
| Raspberry Pi 5 | Broadcom BCM2712 | Quad-core ARM Cortex-A76 @2.4 GHz | 4 or 8 GB LPDDR4X | MicroSD | Gigabit Ethernet, Wi-Fi, Bluetooth | 2 × USB 3.0, 2 × USB 2.0 | Broadcom VideoCore VII |
| Jetson Nano | NVIDIA Tegra X1 | Quad-core ARM Cortex-A57 @1.43 GHz | 2 or 4 GB LPDDR4 | MicroSD, eMMC | Gigabit Ethernet | 4 × USB 3.0 | 128-core NVIDIA Maxwell GPU |
| Kria KR260 | Xilinx Zynq UltraScale+ MPSoC (Zynq XCZU5EV) | Quad-core ARM Cortex-A53 @1.3 GHz | 4 GB DDR4 | MicroSD, eMMC, SSD (via expansion) | Gigabit Ethernet | 4 × USB 3.0 | FPGA-based accelerator (Zynq FPGA fabric) |
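The boards in this table span three ARM instruction-set generations (ARMv6 on the Raspberry Pi 1; ARMv7-A on the Cortex-A5/A7/A15 boards; ARMv8-A on the Cortex-A53/A57/A72/A76 boards), which matters whenever benchmarks are shipped as prebuilt binaries or container images. The sketch below is a hypothetical helper, not part of SHEAB as described here, that groups the boards by Docker platform string (`linux/arm/v6`, `linux/arm/v7`, `linux/arm64`) so that one image build per group would suffice. It assumes the ARMv8 boards run a 64-bit operating system; `CORE_ISA`, `BOARD_CORES`, `container_platform`, and `group_by_platform` are illustrative names.

```python
"""Hypothetical sketch: group the evaluated boards by container image platform."""

# Instruction-set generation of each CPU core type listed in the board table.
CORE_ISA = {
    "ARM1176JZF-S": "armv6",
    "Cortex-A5": "armv7",
    "Cortex-A7": "armv7",
    "Cortex-A15": "armv7",
    "Cortex-A53": "armv8",
    "Cortex-A57": "armv8",
    "Cortex-A72": "armv8",
    "Cortex-A76": "armv8",
}

# CPU cores per board, transcribed from the board table.
BOARD_CORES = {
    "Odroid-XU4": ["Cortex-A15", "Cortex-A7"],  # big.LITTLE, both ARMv7-A
    "Odroid C1+": ["Cortex-A5"],
    "Orange Pi Plus": ["Cortex-A7"],
    "Orange Pi Mini": ["Cortex-A7"],
    "Orange Pi PC": ["Cortex-A7"],
    "Raspberry Pi 1": ["ARM1176JZF-S"],
    "Raspberry Pi 2B+": ["Cortex-A7"],
    "Raspberry Pi 3B+": ["Cortex-A53"],
    "Raspberry Pi 4": ["Cortex-A72"],
    "Raspberry Pi 5": ["Cortex-A76"],
    "Jetson Nano": ["Cortex-A57"],
    "Kria KR260": ["Cortex-A53"],
}


def container_platform(board, os_64bit=True):
    """Return the Docker/OCI platform string an image for `board` should target.

    ARMv8 boards map to linux/arm64 only when they run a 64-bit OS (assumed
    by default); under a 32-bit OS they need linux/arm/v7 images instead.
    """
    isas = {CORE_ISA[core] for core in BOARD_CORES[board]}
    if len(isas) != 1:
        raise ValueError(f"{board} mixes instruction sets: {sorted(isas)}")
    isa = isas.pop()
    if isa == "armv8":
        return "linux/arm64" if os_64bit else "linux/arm/v7"
    return {"armv6": "linux/arm/v6", "armv7": "linux/arm/v7"}[isa]


def group_by_platform(boards, os_64bit=True):
    """Group boards so that one image build per platform covers the whole group."""
    groups = {}
    for board in boards:
        groups.setdefault(container_platform(board, os_64bit), []).append(board)
    return groups


if __name__ == "__main__":
    for platform, boards in group_by_platform(BOARD_CORES).items():
        print(f"{platform}: {', '.join(boards)}")
```

Run as a script, it prints one line per platform: six boards land on `linux/arm/v7`, five on `linux/arm64`, and only the Raspberry Pi 1 needs `linux/arm/v6`.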

| Edge Server Hardware | Sysbench Execution Time (s) |
|---|---|
| Odroid | 10.106 |
| Orange Pi Plus | 10.073 |
| Orange Pi Mini | 10.114 |
| Orange Pi PC | 10.125 |
| Raspberry Pi 3B+ | 10.050 |
| Raspberry Pi 2B+ | 10.129 |
| Raspberry Pi 4 | 10.031 |
| Raspberry Pi 5 | 10.015 |
| Total time taken by all edge servers (sum) | 80.643 |
| Total time using the SHEAB framework | 45.362 |
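The two summary rows can be cross-checked with a few lines of arithmetic. The sketch assumes, as the matching figures suggest, that the total time taken by all edge servers is simply the sum of the per-board times, i.e., the cost of benchmarking the boards one after another. It also reports the longest single run, which would be the ideal wall-clock time if all eight runs overlapped perfectly with no orchestration cost; that bound is an idealization, not a measured value. (All per-board times sit just above 10 s, which is consistent with sysbench's default 10-second time limit for its tests.)

```python
# Per-board sysbench execution times in seconds, transcribed from the table.
SYSBENCH_S = {
    "Odroid": 10.106,
    "Orange Pi Plus": 10.073,
    "Orange Pi Mini": 10.114,
    "Orange Pi PC": 10.125,
    "Raspberry Pi 3B+": 10.050,
    "Raspberry Pi 2B+": 10.129,
    "Raspberry Pi 4": 10.031,
    "Raspberry Pi 5": 10.015,
}
SHEAB_S = 45.362  # reported wall-clock time for the same runs under SHEAB

sequential = sum(SYSBENCH_S.values())  # boards benchmarked one after another
speedup = sequential / SHEAB_S
saved = sequential - SHEAB_S
longest = max(SYSBENCH_S.values())  # idealized bound for perfectly overlapped runs

print(f"sequential sum: {sequential:.3f} s")
print(f"speedup with SHEAB: {speedup:.2f}x")
print(f"time saved: {saved:.3f} s ({saved / sequential:.1%})")
print(f"longest single run: {longest:.3f} s")
```

This reproduces the table's 80.643 s total and gives a speedup of roughly 1.78× (about 35.3 s, or 43.7%, saved). The remaining gap between the 10.129 s bound and the measured 45.362 s is where any dispatch, connection setup, result collection, or limited concurrency in the run would show up.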

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Abdulkadhim, M.; Repas, S.R. SHEAB: A Novel Automated Benchmarking Framework for Edge AI. Technologies 2025, 13, 515. https://doi.org/10.3390/technologies13110515


Chicago/Turabian Style: Abdulkadhim, Mustafa, and Sandor R. Repas. 2025. "SHEAB: A Novel Automated Benchmarking Framework for Edge AI" Technologies 13, no. 11: 515. https://doi.org/10.3390/technologies13110515
