Decoding Temporally Encoded 3D Objects from Low-Cost Wearable Electroencephalography

Abstract

1. Introduction

1.1. Overview

1.2. Background

1.2.1. Summarizing Prior Work

1.2.2. EEG to Image

1.2.3. EEG to Video

1.2.4. EEG to Object

1.2.5. Summary of Prior Work

2. Materials and Methods

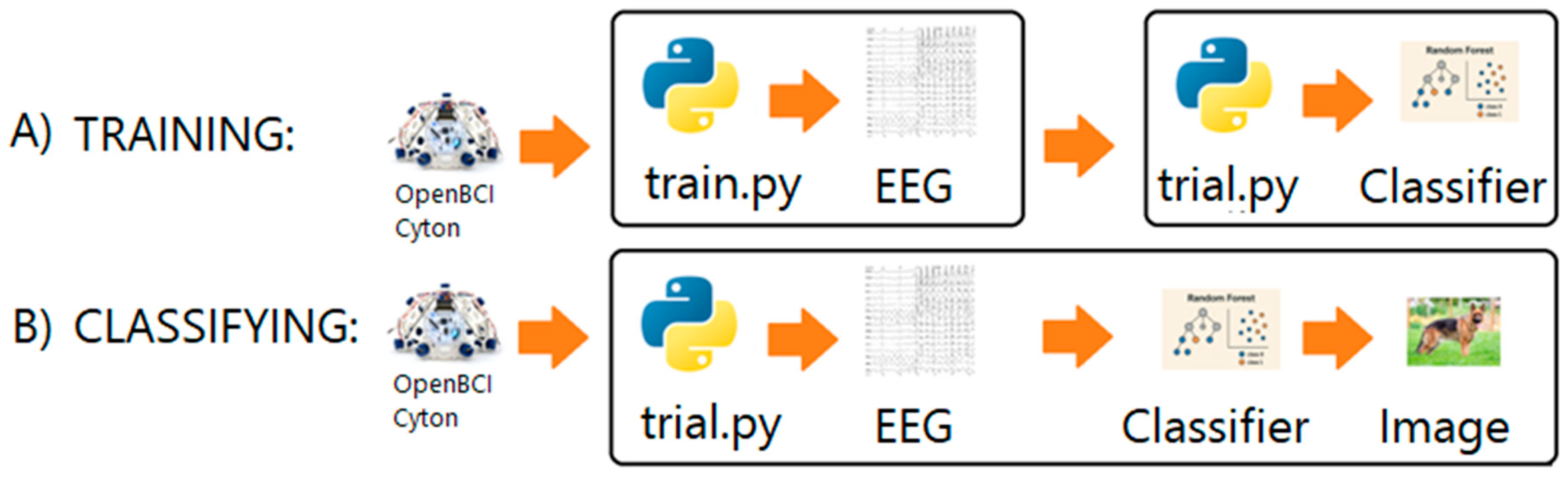

2.1. Summary

2.2. Participants

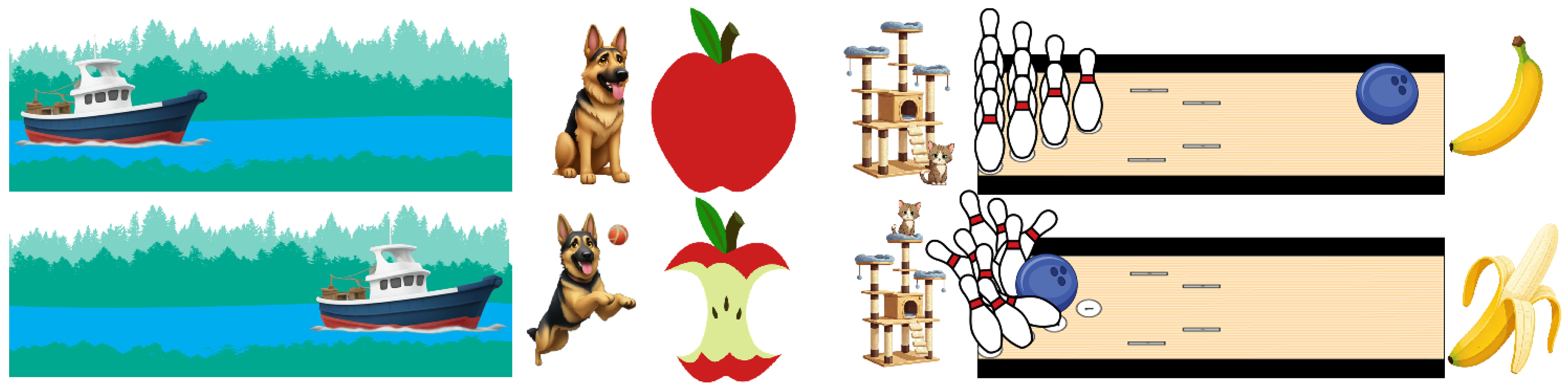

2.3. Stimulus Presentation

2.4. Image Processing

2.5. Design Requirements

2.6. Preprocessing and Feature Extraction

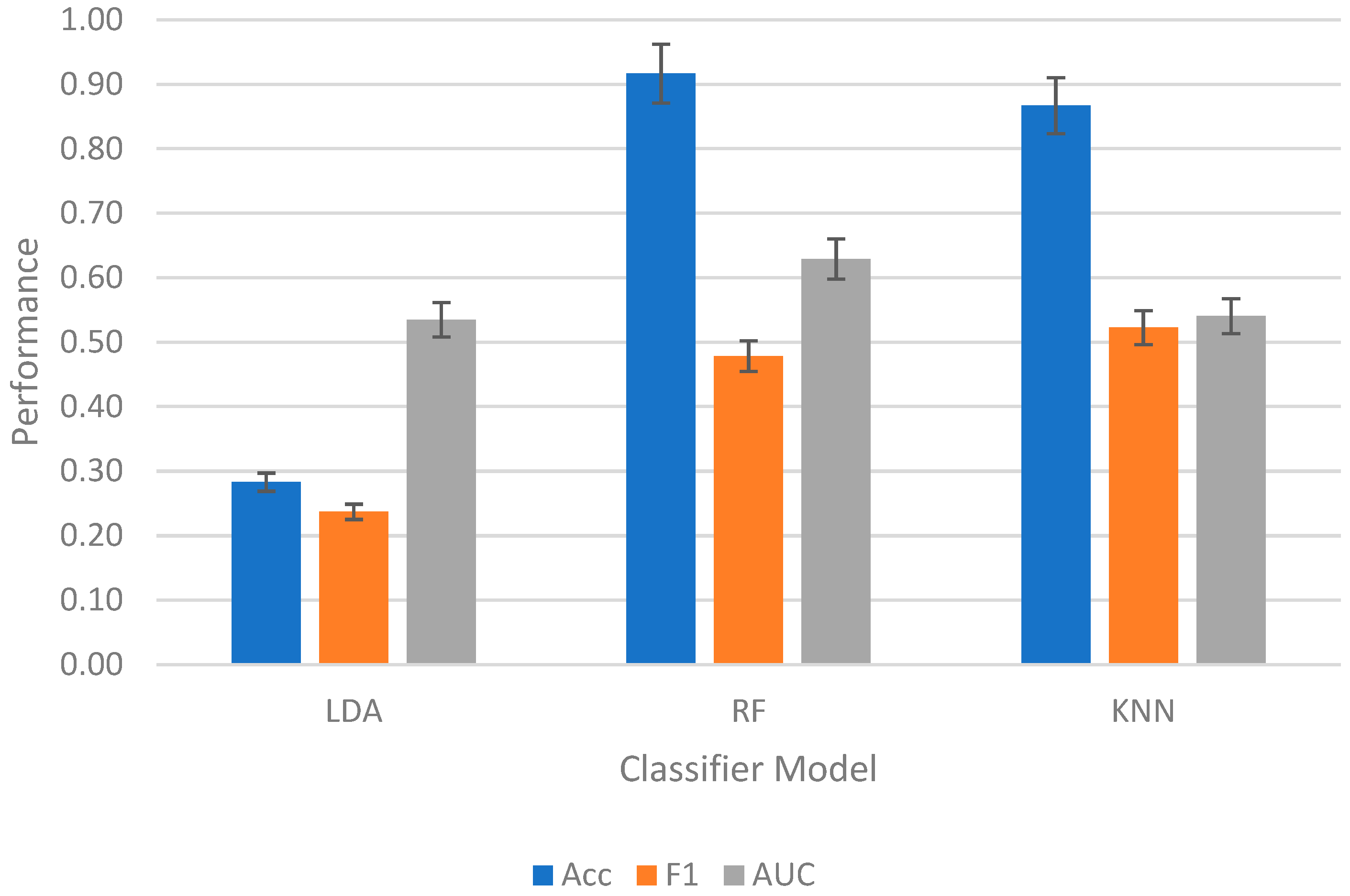

2.7. Data Classification

2.8. Performance Metrics

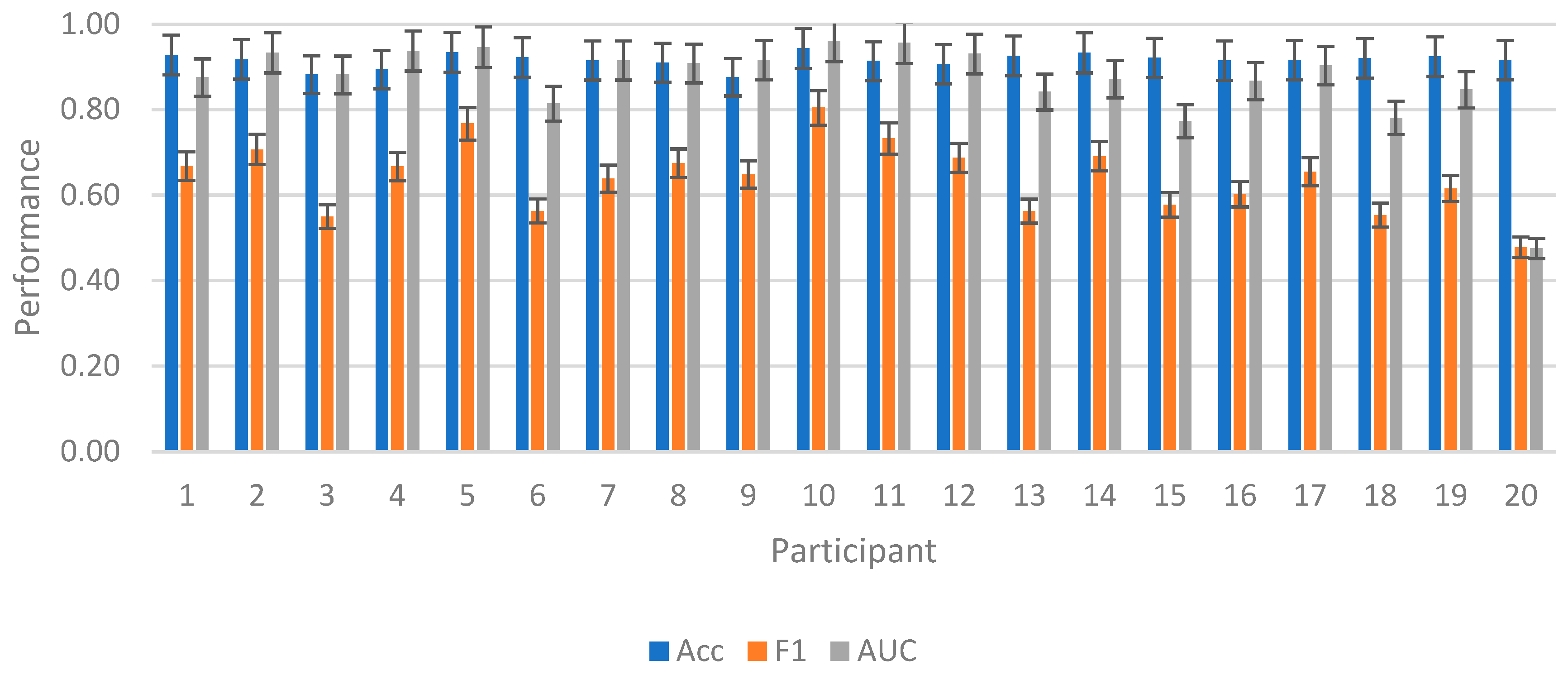

3. Results

3.1. Summarizing Results

3.2. Intrasubject Competition

3.3. Intersubject Competition

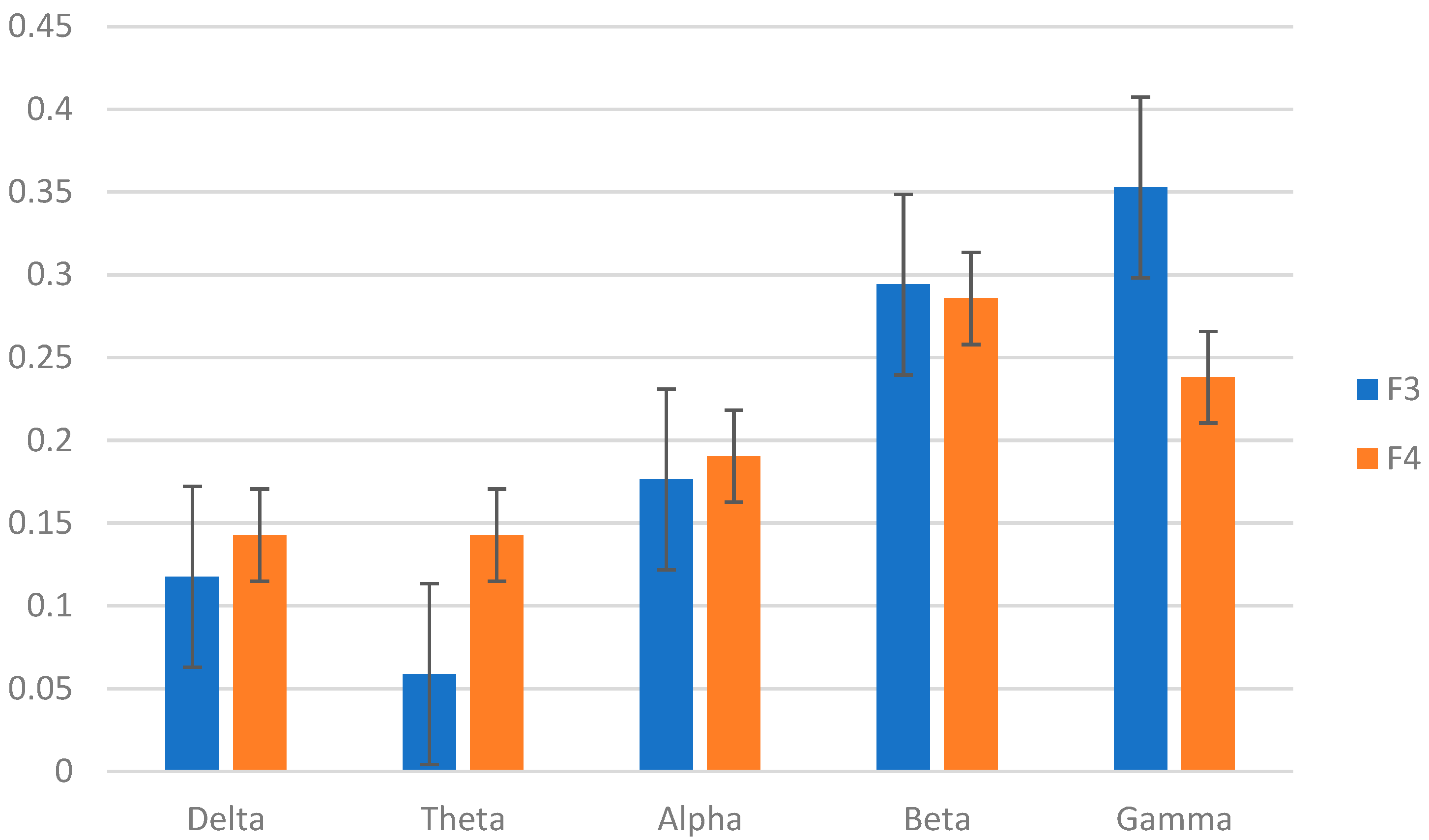

3.4. Top Features

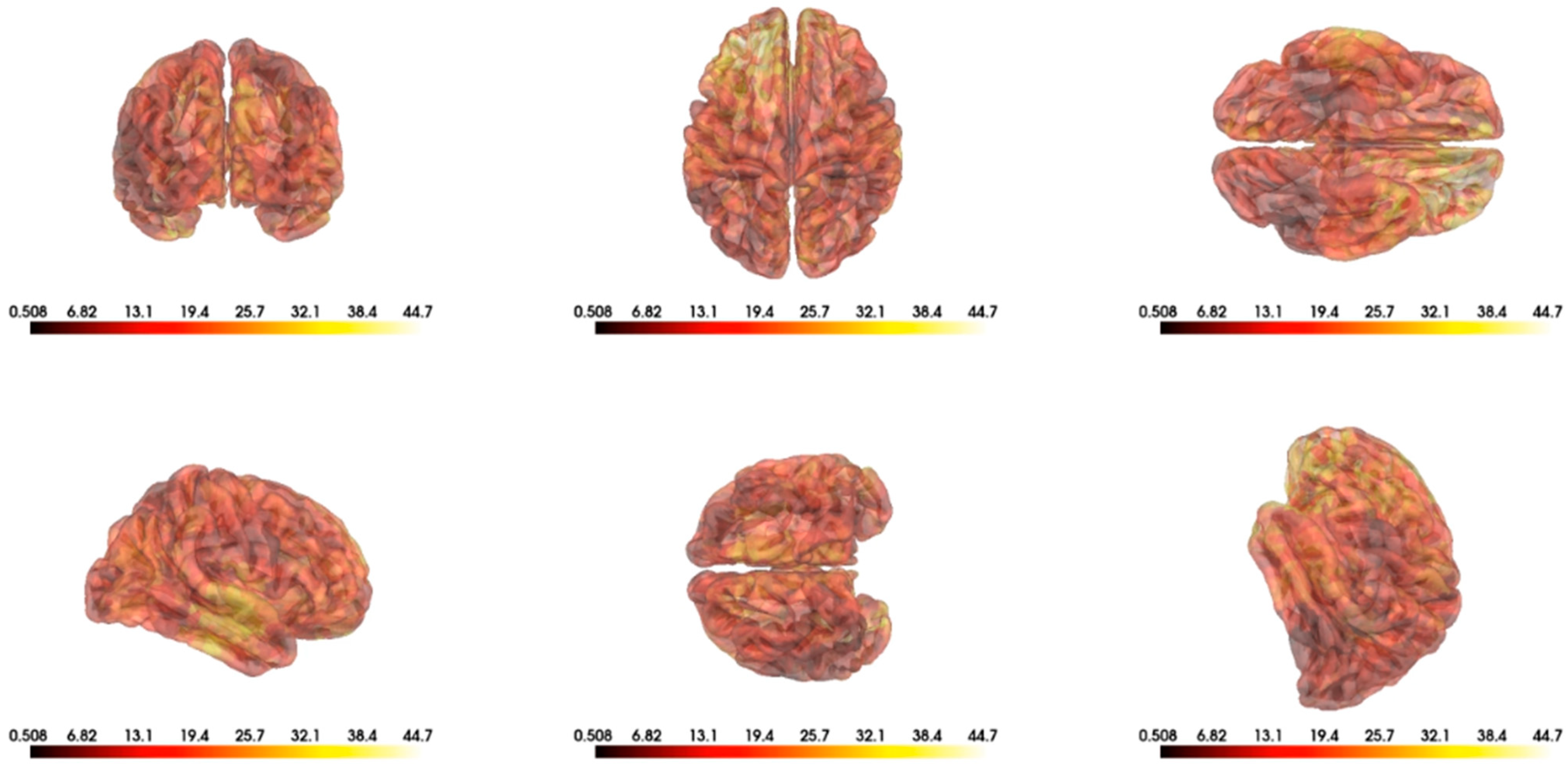

3.5. Image to Object

4. Discussion

4.1. Summary

4.2. Limitations

4.3. Future Work

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Image | SSIM |
|---|---|
| Apple1 | 0.58 |
| Apple2 | 0.65 |
| Banana1 | 0.69 |
| Banana2 | 0.67 |
| Boat1 | 0.59 |
| Boat2 | 0.53 |
| Bowling1 | 0.51 |
| Bowling2 | 0.50 |
| Cat1 | 0.55 |
| Cat2 | 0.57 |
| Dog1 | 0.60 |
| Dog2 | 0.63 |
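
For readers unfamiliar with the metric in the table above, the structural similarity index (SSIM) compares two images through their means, variances, and covariance. The following is a minimal sketch of a single-window (global) SSIM in NumPy, using the conventional constants K1 = 0.01 and K2 = 0.03. It is an illustration only and is not necessarily the procedure used to produce the reported values, which may have relied on a windowed implementation. The function name `global_ssim` and the toy arrays are hypothetical.

```python
import numpy as np


def global_ssim(x, y, data_range=255.0, k1=0.01, k2=0.03):
    """Single-window SSIM between two equally sized grayscale images.

    Assumption: statistics are taken over the whole image rather than
    over sliding local windows, so this is a simplified variant.
    """
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    if x.shape != y.shape:
        raise ValueError("images must have the same shape")

    # Stabilizing constants from the conventional SSIM definition.
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2

    mu_x, mu_y = x.mean(), y.mean()
    var_x = ((x - mu_x) ** 2).mean()
    var_y = ((y - mu_y) ** 2).mean()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()

    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)


# Toy example (hypothetical data): a gradient image and its inversion.
reference = np.arange(64, dtype=np.float64).reshape(8, 8) * 4.0
inverted = 252.0 - reference

print(global_ssim(reference, reference))  # identical images
print(global_ssim(reference, inverted))   # structurally opposite images
```

Under this definition, two identical images score 1, and lower scores reflect weaker agreement in luminance, contrast, and structure. The tabulated values, which range from 0.50 to 0.69, can be read against that scale.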

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

LaRocco, J.; Tahmina, Q.; Zia, S.; Merchant, S.; Forrester, J.; He, E.; Lin, Y. Decoding Temporally Encoded 3D Objects from Low-Cost Wearable Electroencephalography. Technologies 2025, 13, 501. https://doi.org/10.3390/technologies13110501
