Abstract

This study examines the interaction between cognitive demands and generative artificial intelligence (GenAI) technologies in shaping the quality and influence of academic research. While GenAI tools such as ChatGPT and Elicit are increasingly adopted to ease information processing and automate repetitive tasks, their broader impact on researchers’ cognitive performance remains underexplored. Using data from 998 researchers and applying structural equation modeling (SEM-PLS), we examined the effects of cognitive load, task fatigue, and resilience on research outcomes, with GenAI immersion as a higher-order moderator. Results reveal that both cognitive load and fatigue negatively affect research quality, while engagement and resilience offer partial protection. Unexpectedly, high immersion in GenAI intensified the negative impact of cognitive strain, suggesting that over-reliance on AI can amplify mental burden rather than reduce it. These results inform the design and responsible integration of AI technologies in academic environments by showing that sustainable adoption requires a balance between efficiency and human creativity and resilience. The study provides evidence-based insights for researchers, institutions, and policymakers seeking to optimize AI-supported workflows without compromising research integrity or well-being.

1. Introduction

GenAI systems like ChatGPT, Jasper AI, and Grammarly are quickly changing how academic research is performed. These technologies promise to make academics’ work easier and more productive by automating tasks such as writing, summarizing, and evaluating [1]. Alongside these benefits, an essential question arises: how does the growing use of GenAI change the cognitive and emotional demands of research work? Cognitive load (the mental effort needed to absorb and combine information [2]) and task fatigue (the strain that comes from prolonged or repetitive work [3]) are two of the most important factors researchers must manage when confronting complexity and sustaining performance. To create sustainable, human-centered technologies in institutions, it is important to know how these factors interact with GenAI. This study is grounded in Cognitive Load Theory (CLT), which examines how mental effort affects learning and performance, and extends it by investigating how GenAI changes cognitive dynamics in research. Prior studies have associated cognitive load and fatigue with research productivity, originality, and impact [4]. However, recent findings indicate that although GenAI might reduce stress by streamlining tasks, overdependence may compromise creativity, critical analysis, and academic integrity [5,6]. This tension makes GenAI more than a productivity tool; it is also a technological environment that may benefit or harm cognitive processes, depending on how it is used.

Recent academic research has investigated the strategic and pedagogical prospects of Generative AI, highlighting its transformative influence on learning environments and decision-making frameworks. A previous study [7] used evolutionary game analysis of Generative Pre-Trained Transformer technologies to demonstrate how cooperative and competitive dynamics affect institutional behavior and policy toward AI adoption in education. Although such studies yield significant insights into the technological progress of AI, they predominantly focus on systemic or policy-level ramifications. In contrast, our study extends this debate by focusing on the cognitive and behavioral side of AI use in research. It specifically examines the interplay between Generative AI immersion, cognitive load, task fatigue, and resilience in influencing research quality and academic impact, thereby extending Cognitive Load Theory into an AI-mediated academic context.

Building on this perspective, the study investigates four key questions:

RQ1: How does cognitive load impact academic scholars’ research quality and influence?

RQ2: How do task fatigue and mental exhaustion affect research quality and academic influence?

RQ3: To what extent does GenAI immersion influence cognitive load and fatigue, and in turn affect research quality and academic influence?

RQ4: What are the implications of integrating GenAI in research workflows for improving both academic outcomes and psychological well-being?

This study uses SEM-PLS modeling and importance-performance map analysis (IPMA) to demonstrate how cognitive load and GenAI immersion interact in research settings. The results add to the field of human–technology interaction by illustrating how digital tools change the way individuals think and affect how well they perform research. The study offers direction for educational institutions, policymakers, and technology developers on balancing efficiency with integrity by establishing clear parameters for GenAI use, promoting AI literacy, and cultivating settings that enhance resilience and participation. While previous studies have investigated how AI may help with writing and teaching [8,9], relatively few have focused on how it might affect the relationship between cognitive load and research effectiveness. Most research so far has examined AI as a direct tool rather than as an environmental factor that affects cognition, and GenAI as a moderator has not yet been explored.

The present study provides practical insights for the development of academic communities that include AI deliberately and sustainably. It stresses how important it is to set explicit limits on how GenAI may be used—limits that let researchers take advantage of its efficiency without hurting engagement, originality, or critical thinking. These findings are pertinent for both governmental directives for the responsible integration of AI in research and the formulation of training programs aimed at equipping scholars with the skills to utilize AI tools proficiently. Simultaneously, it is important to recognize certain constraints. The study depends on self-reported data, which may contain bias and subjectivity, and its cross-sectional design complicates the determination of causal relationships. Longitudinal methods and behavioral assessments that can confirm and build on the patterns seen here should be used in future research to make these findings stronger.

2. Literature Review

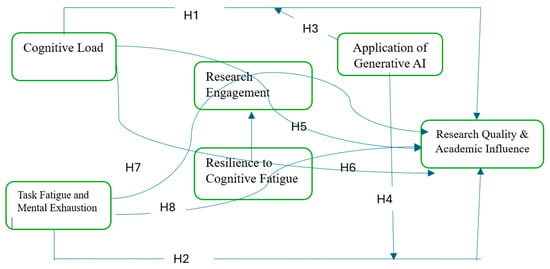

The literature review (LR) investigates the relationship between cognitive load and research efficacy, with a particular emphasis on the impact of cognitive demands on the performance and decision-making of researchers. The conceptual framework of the study, shown in Figure 1, is developed to understand how cognitive load and task fatigue, together with the application of Generative AI, relate to Research Quality and Academic Influence.

Figure 1. Formative Conceptual Framework of the Study.

2.1. Cognitive Load

Cognitive load is the cognitive effort needed to analyze and comprehend information, and it is commonly divided into intrinsic, extraneous, and germane components. Intrinsic load originates from material complexity, whereas extraneous load comes from how information is presented. Poorly constructed materials can add unneeded strain and complicate learning and decision-making [10]. Well-structured information balances cognitive demands and aids retention, while excessive unnecessary load can overload working memory, reducing performance and blocking learning [2,11]. In high-stress situations, cognitive load affects efficiency and trust in decision-support systems, impairing judgment and performance [12,13]. Adaptive systems that monitor and modify task difficulty in real time enhance learning and decision-making results by keeping mental effort within acceptable limits [14,15]. Several factors, such as instructional design, fatigue, prior experience, and the learning environment, further shape how cognitive load is experienced and whether its consequences for performance are positive or negative [16,17]. Learners’ management of cognitive load also depends on individual differences, including emotional factors and cognitive capacity [18]. Research productivity, spanning efficiency, quality, creativity, completion rate, and decision-making speed, is strongly affected by cognitive load, that is, the overall mental effort exerted in working memory. High cognitive load causes errors and distractions, which degrades work quality, task duration, and processing efficiency [19,20]. While an optimal cognitive load promotes greater engagement [21,22], excessive load limits cognitive flexibility, restricting creativity and innovation. Furthermore, a heavy load hinders task completion, particularly under time limits, and strains cognitive resources [23], thereby affecting decision-making speed and accuracy [24].

In addition to Cognitive Load Theory, recent perspectives emphasize the ethical and motivational aspects of AI integration in knowledge work. The distributed cognition perspective holds that human–AI interaction redistributes mental effort across systems, affecting autonomy and decision quality [25]. Ethical evaluations of AI integration highlight concerns regarding transparency, accountability, and user agency, especially when automation affects cognitive effort and creativity [26]. Self-Determination Theory and other motivational theories explain how autonomy and competence affect how people use GenAI products. Together, these frameworks connect the cognitive, ethical, and motivational mechanisms that affect research performance, strengthening this study’s theoretical base.

2.2. Task Fatigue and Mental Exhaustion

Mental fatigue is a psychobiological condition produced by extended cognitive work, resulting in diminished cognitive skills such as memory and attention. It arises from insufficient sleep, extended concentration, or repetitive tasks [27,28]. Effective countermeasures include rest intervals and optimal task design [29]. Task fatigue and mental exhaustion strongly affect cognitive and physical performance, output, and well-being [29]. Mental fatigue reduces memory, attention, and physical endurance [30], increasing mistakes and lowering productivity [31]. Measuring it calls for both objective (performance declines, physiological reactions) and subjective (self-report) approaches [32]. Recovery from mental fatigue is slow, and fatigue is related to burnout, stress, and mental health problems that influence personal and professional life [33]. Task fatigue also strongly influences cognitive function during research activities. It produces more errors and slower reaction times in tasks needing sustained attention [34], so performance declines over time. Cognitive fatigue reduces attentional control and influences decision-making, so task switching becomes more challenging and attention is compromised [35,36]. Neurophysiological changes also lower motivation and cognitive flexibility [37].

2.3. Research Engagement

Engagement is the level of involvement, enthusiasm, and commitment a person has toward their work, studies, or activities. When mental well-being is high, engagement improves, leading to better focus, productivity, and overall life satisfaction [38,39]. Greater prior academic experience lowers cognitive load, which improves engagement, whereas researchers with less prior knowledge struggle more, experience higher cognitive load, and participate less. Because high cognitive load can lower involvement, a motivating design is necessary to sustain learning [40]. Research engagement thus depends heavily on cognitive load [23]. Design and task complexity also matter, since activities with high intrinsic or extraneous load can impede engagement [41]. Biases in self-reported cognitive load may influence perceptions and engagement ratings, which underscores the need for precise evaluation and task design to maximize engagement [42,43]. GenAI’s predictive analytics and data-driven assistance reduce cognitive load, especially in complex situations with high information demands [44,45,46]. AI reduces exhaustion and effort in academic settings [47,48]. In research, GenAI speeds up project cycles and enhances repeatability, streamlining the process and making it more inclusive [49]. OpenAI GPT, among other tools, improves evaluation and learning in the classroom [50,51]. However, issues such as over-reliance and bias have to be managed through transparency and accountability [44].

2.4. Resilience to Cognitive Fatigue

Resilience to cognitive fatigue is the capacity to remain cognitively engaged and productive even when exhausted. It enables individuals to handle challenging or lengthy tasks without a noticeable decline in performance [52]. Resilience to cognitive fatigue depends largely on cognitive load; high cognitive demands increase fatigue and, over time, lower mental function [53]. Particularly in sedentary activities [54], a high cognitive load can also lead to physical discomfort. Even when tired, the brain may adjust by raising attentional effort [35]. Maintaining resilience and preserving performance under stressful conditions depends on efficient management of cognitive load. Generative AI (GenAI) improves efficiency and reduces cognitive burden, thereby increasing resilience to cognitive fatigue in academic research. It frees researchers to concentrate on difficult problems by automating tedious tasks, such as data analysis and literature summarization. Tools like CatAlyst generate context-aware continuations [6], which helps improve task engagement [55] and reduce stress in academic research. AI chatbots support executive functions such as remembering and planning. Still, issues like plagiarism and misinformation have to be controlled to ensure ethical conduct and preserve cognitive advantages [56]. Adapting AI to match task types improves cognitive fit and user satisfaction [57].

2.5. Research Production and Efficiency

Research productivity is a multifaceted concept encompassing quality, creativity, acceptance, time efficiency, and decision-making. Measured by citations and publications in esteemed journals, high-quality research strengthens institutional credibility and is associated with improved teaching results [58,59,60,61]. Acceptance, reflected in citations and academic collaborations, shapes the wider influence and dissemination of research [62,63]. Maintaining high productivity requires effective time management and institutional support for balancing teaching and research. Research output is largely influenced by strategic decisions such as prioritizing high-impact research and supporting multidisciplinary cooperation [61,64]. Effective resource allocation and the creation of supportive research environments by universities help to develop more significant work. Policymakers and academic leaders must base their plans on empirical evidence to optimize faculty research contributions and institutional performance.

2.6. Generative AI and Research Productivity and Efficiency

AI-powered technologies help researchers write and refine research papers by increasing writing efficiency [65,66,67,68]. Generative AI (GenAI) improves research productivity by automating tasks such as drafting research papers, literature reviews, and data analysis summaries. It helps create research questions, analyze big data, and summarize outcomes [6,15]. In the classroom, it tailors instruction and generates instructional materials, increasing student involvement. However, issues with creativity, transparency, and bias call for human supervision [6,69]. By optimizing procedures and encouraging innovation, GenAI also increases productivity in software development, business, and healthcare [6,67]. Notwithstanding these benefits, careful application of artificial intelligence is essential to maintain research integrity. Natural language processing (NLP) driven by artificial intelligence simplifies content synthesis and literature searches [70]. Research integrity depends on AI transparency and bias minimization, so researchers should act ethically and verify AI-generated content. Multidisciplinary cooperation may optimize AI’s research contribution, improving efficiency and creativity [71]. In both academic and commercial research environments, responsible application of artificial intelligence supports accuracy while optimizing output. It increases repeatability, automates tedious tasks, and speeds data analysis and insight generation [72]. AI technologies increase creativity and efficiency by helping to prepare papers, explore literature, and create prototypes. Still, issues including ethical questions, bias, and the need for skill development call for cautious supervision [73]. Researchers can customize GenAI tools to enhance output and efficiency. GenAI also helps researchers use tools efficiently, boosting learning and adaptation. To ensure study integrity, ethical considerations, including transparency and bias avoidance, are essential.

The following hypotheses are proposed:

H1. Cognitive Load has a significant impact on Research Quality & Academic Influence.

H2. Task Fatigue & Mental Exhaustion significantly influence Research Quality & Academic Influence.

H3. Generative AI Immersion significantly moderates the relationship between Cognitive Load and Research Quality & Academic Influence.

H4. Generative AI Immersion significantly moderates the relationship between Task Fatigue & Mental Exhaustion and Research Quality & Academic Influence.

H5. Research Engagement mediates the relationship between Cognitive Load and Research Quality & Academic Influence.

H6. Resilience to Cognitive Fatigue mediates the relationship between Cognitive Load and Research Quality & Academic Influence.

H7. Research Engagement mediates the relationship between Task Fatigue & Mental Exhaustion and Research Quality & Academic Influence.

H8. Resilience to Cognitive Fatigue mediates the relationship between Task Fatigue & Mental Exhaustion and Research Quality & Academic Influence.

3. Methodology

3.1. Research Design

Cognitive constructs (Cognitive Load and Task Fatigue & Mental Exhaustion), mediators (Research Engagement and Resilience to Cognitive Fatigue), and the outcome variable (Research Quality & Academic Influence) were examined using Structural Equation Modeling–Partial Least Squares (SEM-PLS). SEM-PLS is well suited to complex models and provides a robust framework for the study [74]. It analyzes complex relationships among factors while evaluating direct and indirect effects in one model, and it performs reliably with medium to large samples. Including generative AI (GenAI) immersion as a moderator makes possible a dual-path analysis of cognitive-behavioral dynamics with and without AI.

We chose Partial Least Squares Structural Equation Modeling (PLS-SEM) over Covariance-Based SEM (CB-SEM) because it works better with complex prediction models that have many moderation and mediation effects and can handle non-normal data distributions. For exploratory research that extends theory rather than validates it, PLS-SEM is advised [75,76]. For methodological transparency, the sampling process is described in detail. We used a stratified procedure based on each respondent’s level of GenAI use to make sure that all academic contexts were represented. Anonymous participation, randomized item sequencing, and survey validation checks reduced social desirability bias.

3.2. Theoretical Foundation

The model is based on Cognitive Load Theory [2] and includes ideas from research on fatigue [27], engagement [77], and resilience [52]. The modern AI ethics and cognitive ergonomics literature [44,56] informs GenAI’s position as a moderator, which is discussed in detail later in the study. Modeling GenAI immersion as a moderator addresses a gap in previous studies and is the main novelty of this work.

3.3. Constructs and Measurement Model

In this study, six validated constructs were operationalized through 24 measurement items adapted from prior literature. These items capture key dimensions such as Cognitive Load (3), Task Fatigue & Mental Exhaustion (3), Generative AI Immersion (4), Research Engagement (3), Resilience to Cognitive Fatigue (3), and Research Quality & Academic Influence (8), ensuring both theoretical grounding and empirical reliability as shown in Table 1.

Table 1. Constructs and Measurement.

3.4. Sample and Data Collection

Data were obtained from 998 academic researchers, including PhD students and faculty members from a variety of fields, all of whom were actively involved in research. Respondents were drawn from universities in the global top 500 rankings across diverse fields. Data were collected through personal contacts via social media and a structured communication channel (see Appendix E), and responses were filtered to retain participants at master’s level or above. Using a stratified random sampling approach, respondents were grouped by how often they reported using Generative AI tools such as ChatGPT, Elicit, or Scite. Anonymous online questionnaires were used to collect the data over three months, and all participation was voluntary. The study received ethical approval from the institutional review board, protecting the rights and privacy of all participants.

3.5. Data Analysis Procedure

Data analysis using PLS-SEM (Partial Least Squares Structural Equation Modeling) was carried out in SmartPLS 4.0. This approach works well for complex models with several mediators and moderators, as well as for exploratory research. First, we examined the measurement model’s reliability and validity using Cronbach’s alpha, composite reliability, and average variance extracted (AVE) [88]. To establish discriminant validity, we used the HTMT ratio and the Fornell–Larcker criterion. Next, we evaluated the structural model using bootstrapping with 5000 subsamples to obtain the path coefficients, t-values, and p-values (significance). The moderating effect was examined using interaction terms, whereas the mediation effect was examined using indirect effect estimates. To assess how well the proposed model explains and predicts the outcomes, we further examined the coefficient of determination (R²), predictive relevance (Q²), and effect sizes (f²).
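As a rough illustration of the reliability and convergent validity checks named above, the following Python sketch computes composite reliability and AVE from standardized outer loadings, and Cronbach’s alpha from raw item scores. The loadings and item scores are hypothetical, not taken from the study’s data, and the function names are illustrative only; SmartPLS reports these statistics directly.

```python
from statistics import pvariance


def composite_reliability(loadings):
    """Composite reliability (rho_c) from standardized outer loadings."""
    squared_sum = sum(loadings) ** 2
    error_variance = sum(1 - l ** 2 for l in loadings)  # indicator error variances
    return squared_sum / (squared_sum + error_variance)


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def cronbach_alpha(items):
    """Cronbach's alpha; `items` holds one list of scores per item (same respondents)."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # per-respondent sum score
    item_variance = sum(pvariance(scores) for scores in items)
    return k / (k - 1) * (1 - item_variance / pvariance(totals))


# Hypothetical three-item construct (values invented for illustration)
loadings = [0.86, 0.91, 0.88]
items = [[4, 5, 3, 4, 2, 5], [4, 4, 3, 5, 2, 5], [5, 5, 2, 4, 3, 4]]

print(f"CR    = {composite_reliability(loadings):.3f}")
print(f"AVE   = {average_variance_extracted(loadings):.3f}")
print(f"alpha = {cronbach_alpha(items):.3f}")
```

A construct is usually read as convergent-valid when AVE exceeds 0.50, which is the threshold the study applies.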

3.6. Moderation and Mediation Strategy

The moderation analysis examined how GenAI changed the effects of Cognitive Load and Task Fatigue on research results. We did this by utilizing interaction terms and simple slope graphs to demonstrate how the effect varies at low, medium, and high degrees of GenAI immersion.
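The simple slope logic can be sketched in a few lines of Python. The example assumes standardized variables and a mean-centered moderator, and it takes low and high immersion to mean one standard deviation below and above the mean; these are common conventions, not details confirmed by the study. The two coefficients are the H1 and H3 path coefficients reported in the results (Table 5).

```python
def simple_slope(b_predictor, b_interaction, moderator_z):
    """Conditional effect of the predictor at a given standardized moderator value."""
    return b_predictor + b_interaction * moderator_z


# Path coefficients reported for H1 (direct) and H3 (interaction) in this study
B_COGNITIVE_LOAD = -0.175
B_INTERACTION = -0.067

# Assumed moderator levels: -1 SD, mean, +1 SD
for label, z in [("low (-1 SD)", -1.0), ("mean", 0.0), ("high (+1 SD)", 1.0)]:
    slope = simple_slope(B_COGNITIVE_LOAD, B_INTERACTION, z)
    print(f"GenAI immersion {label}: slope of cognitive load = {slope:.3f}")
```

Under these assumptions the negative slope of cognitive load steepens as immersion rises, which is the pattern a simple slope plot would display.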

The mediation analysis examined indirect effects through Research Engagement and Resilience to Cognitive Fatigue, and bootstrapped confidence intervals were used to test whether these indirect effects were significant.
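A minimal sketch of a percentile bootstrap for an indirect effect follows. It is a simplification under stated assumptions: it uses ordinary least squares on observed scores rather than PLS latent variable scores, the data are simulated, and the function names are hypothetical. It is meant only to show the resampling idea, not to reproduce the SmartPLS procedure.

```python
import random


def _cov(u, v):
    """Sample covariance of two equal-length lists."""
    mean_u, mean_v = sum(u) / len(u), sum(v) / len(v)
    return sum((a - mean_u) * (b - mean_v) for a, b in zip(u, v)) / (len(u) - 1)


def indirect_effect(x, m, y):
    """a*b: a is the slope of M on X, b is the partial slope of Y on M given X."""
    s_xx, s_mm, s_xm = _cov(x, x), _cov(m, m), _cov(x, m)
    s_xy, s_my = _cov(x, y), _cov(m, y)
    a = s_xm / s_xx
    b = (s_my * s_xx - s_xy * s_xm) / (s_mm * s_xx - s_xm ** 2)
    return a * b


def bootstrap_ci(x, m, y, n_boot=5000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the indirect effect."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample cases with replacement
        estimates.append(indirect_effect([x[i] for i in idx],
                                         [m[i] for i in idx],
                                         [y[i] for i in idx]))
    estimates.sort()
    low = estimates[max(int(round(alpha / 2 * n_boot)), 0)]
    high = estimates[min(int(round((1 - alpha / 2) * n_boot)) - 1, n_boot - 1)]
    return low, high


# Simulated data (not the study's): X lowers M, M raises Y
rng = random.Random(42)
x = [rng.gauss(0, 1) for _ in range(400)]
m = [-0.5 * xi + rng.gauss(0, 1) for xi in x]
y = [0.4 * mi - 0.2 * xi + rng.gauss(0, 1) for xi, mi in zip(x, m)]

point = indirect_effect(x, m, y)
low, high = bootstrap_ci(x, m, y, n_boot=1000)  # fewer resamples to keep the demo fast
print(f"indirect effect = {point:.3f}, 95% CI = [{low:.3f}, {high:.3f}]")
```

An indirect effect is treated as significant when the interval excludes zero.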

3.7. Ethical Considerations

Participants were informed about the study’s objectives, confidentiality measures, and the voluntary nature of their participation. No personal identifiers were collected. All procedures complied with the ethical standards of research involving human subjects.

4. Data Analysis and Interpretation

4.1. Measurement Model

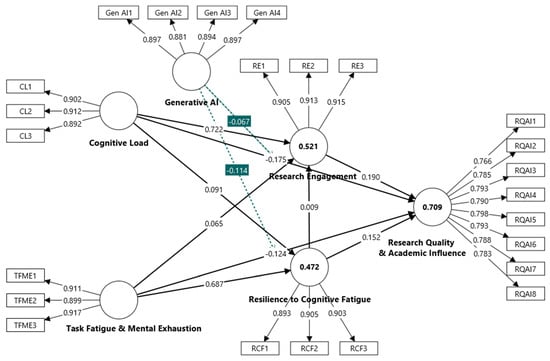

The measurement model in SEM-PLS establishes the robustness of the study by assessing whether all items and constructs meet the required reliability and validity criteria. Figure 2 and Tables 2–4 present these assessments.

Figure 2. Measurement Model.

Table 2. Convergent Validity.

Table 3. Discriminant Validity: HTMT.

Table 4. Model Fit and Prediction Metrics.

All constructs show strong reliability and validity. Each construct has high internal consistency: Cronbach’s alpha and composite reliability scores are all above 0.88, indicating consistent measurement. The Average Variance Extracted (AVE) values are likewise well above the 0.50 threshold, indicating high convergent validity. The indicators for each construct therefore reflect their intended measurement. Constructs such as Cognitive Load, Resilience to Cognitive Fatigue, and Research Engagement in particular show strong validity and reliability, supporting the robustness of the measurement approach in this study on Cognitive Fatigue and Research Efficiency, as shown in Figure 2 and Table 2.

The HTMT (Heterotrait-Monotrait) ratios in Table 3 are all well below the accepted threshold of 0.85, indicating good discriminant validity. Each construct in the study, such as Cognitive Load, Generative AI, and Research Engagement, is therefore distinct from the others and measures a unique concept. Low HTMT values (e.g., 0.021 between Cognitive Load and Generative AI) confirm that there is no redundancy among constructs. Overall, the constructs in the model are not only reliable but also conceptually distinct, supporting the integrity of the structural model.
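For readers unfamiliar with the statistic, the HTMT ratio for two constructs is the mean correlation between items of different constructs divided by the geometric mean of the average within-construct item correlations. The sketch below illustrates this with a hypothetical item correlation matrix; the values and the helper name are invented for illustration and are not the study’s data.

```python
from itertools import combinations, product
from math import sqrt


def htmt(corr, items_a, items_b):
    """HTMT ratio for two constructs from an item correlation matrix.

    corr     -- square matrix (list of lists) of item correlations
    items_a  -- row/column indices of construct A's items
    items_b  -- row/column indices of construct B's items
    """
    def mean(values):
        return sum(values) / len(values)

    hetero = [corr[i][j] for i, j in product(items_a, items_b)]   # between constructs
    mono_a = [corr[i][j] for i, j in combinations(items_a, 2)]    # within construct A
    mono_b = [corr[i][j] for i, j in combinations(items_b, 2)]    # within construct B
    return mean(hetero) / sqrt(mean(mono_a) * mean(mono_b))


# Hypothetical correlations: items 0-2 belong to construct A, items 3-5 to construct B
corr = [
    [1.00, 0.72, 0.68, 0.10, 0.12, 0.08],
    [0.72, 1.00, 0.75, 0.09, 0.11, 0.13],
    [0.68, 0.75, 1.00, 0.07, 0.10, 0.12],
    [0.10, 0.09, 0.07, 1.00, 0.66, 0.70],
    [0.12, 0.11, 0.10, 0.66, 1.00, 0.64],
    [0.08, 0.13, 0.12, 0.70, 0.64, 1.00],
]

ratio = htmt(corr, [0, 1, 2], [3, 4, 5])
print(f"HTMT = {ratio:.3f} (discriminant validity supported if below 0.85)")
```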

Table 4 reports the R-squared values of the model, which indicate how well the predictors account for the variance in each dependent variable. Research Engagement’s R-squared of 0.521 suggests that about 52% of its variance is accounted for by the independent variables, a moderate level of explanatory power. With an R-squared of 0.709, the model explains about 71% of the variance in Research Quality & Academic Influence, indicating substantial explanatory power. Resilience to Cognitive Fatigue has an R-squared of 0.472, which also suggests a reasonable level of explained variance. The adjusted R-squared values are slightly lower, reflecting the penalty for model complexity.

The Q²predict values indicate the predictive relevance of the model for each outcome variable. A Q² value above 0 indicates predictive relevance. All three values (0.516, 0.674, and 0.468) indicate that the model has meaningful predictive accuracy for the constructs. MAE (Mean Absolute Error) and RMSE (Root Mean Square Error) gauge the prediction error, and lower values indicate better predictive performance. Of the three, the second row (Q² = 0.674, RMSE = 0.573, MAE = 0.344) shows the highest prediction accuracy with the smallest errors, suggesting that the model predicts this particular outcome well, as shown in Table 4.
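The relationship between these three metrics can be sketched as follows. The example assumes that predictions are made for held-out cases and that the naive benchmark is the mean of the training sample, which is how Q²predict is commonly defined; the numbers are invented for illustration and the function name is hypothetical.

```python
from math import sqrt


def prediction_metrics(actual, predicted, training_mean):
    """Return Q2_predict, RMSE, and MAE for held-out predictions.

    Q2_predict compares the model's squared error with that of a naive
    benchmark that always predicts the training-sample mean.
    """
    errors = [a - p for a, p in zip(actual, predicted)]
    n = len(errors)
    sse_model = sum(e ** 2 for e in errors)
    sse_naive = sum((a - training_mean) ** 2 for a in actual)
    return {
        "q2_predict": 1 - sse_model / sse_naive,
        "rmse": sqrt(sse_model / n),
        "mae": sum(abs(e) for e in errors) / n,
    }


# Hypothetical held-out scores and predictions (illustration only)
actual = [3.2, 4.1, 2.8, 4.6, 3.9]
predicted = [3.0, 4.3, 3.1, 4.2, 3.8]
metrics = prediction_metrics(actual, predicted, training_mean=3.7)
for name, value in metrics.items():
    print(f"{name} = {value:.3f}")
```

A Q²predict above zero means the model’s held-out error is smaller than that of the mean-only benchmark.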

The structural model has a reasonable overall fit, as indicated by the model fit statistics. The SRMR (Standardised Root Mean Square Residual) values for both the saturated model (0.054) and the estimated model (0.057) fall below the acceptable threshold of 0.08, suggesting a satisfactory fit. The d_ULS and d_G values of the two models are close, which indicates consistency in model estimates. The Chi-square values (2824.476 and 2850.245) are also close, indicating little variation across the models. Although slightly below the commonly used 0.90 benchmark, the NFI (Normed Fit Index) values of 0.845 and 0.843 indicate a reasonable fit. Overall, the model fits the data adequately, as shown in Table 4.

4.2. Structural Model

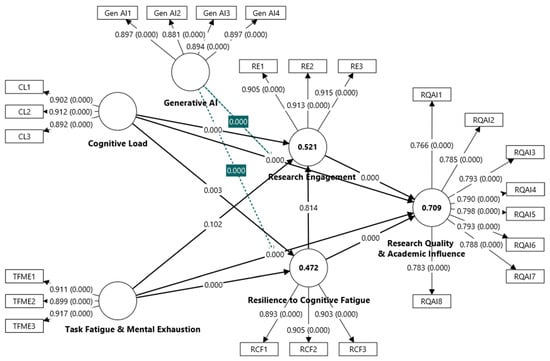

The structural model was tested using SEM-PLS to evaluate the relationships between cognitive load, task fatigue, resilience, engagement, GenAI immersion, and research outcomes, as shown in Figure 3 and Table 5.

Figure 3. Structural Model.

Table 5. Hypothesis Testing.

The structural model illustrates the impact of cognitive load and task fatigue on research outcomes, both directly and indirectly. It also shows how research engagement and resilience to cognitive fatigue may mediate this process. Immersion in generative AI can further shape and intensify these relationships, as shown in Figure 3.

Table 5 presents the findings of the structural model, showing the strength and significance of the relationships between the study’s constructs. It reports both direct and indirect effects, indicating which paths significantly affect research outcomes. The details of Table 5 are discussed below.

H1: Cognitive Load → Research Quality & Academic Influence. Path coefficient = −0.175, T-statistic = 5.371, p < 0.001, f² = 0.049. Cognitive load has a significant negative impact on research quality and academic influence. The high T-value and very low p-value show that this effect is statistically significant, while the effect size (f² = 0.049) is small by conventional benchmarks. The finding is consistent with [2,19], which report that cognitive load can decrease research and task performance. Maintaining a manageable cognitive load is therefore essential for upholding high research standards and achieving meaningful academic influence.

H2: Task Fatigue & Mental Exhaustion → Research Quality & Academic Influence. Path coefficient = −0.124, T-statistic = 3.722, p < 0.001, f² = 0.027. This result shows a statistically significant negative effect, although it is somewhat weaker than that of H1. Mental fatigue impairs research performance, but the magnitude of the effect is small. Refs. [27,34] report that long-term mental fatigue makes it harder to pay attention, increases mistakes, and impairs memory. These effects help explain why fatigued researchers may have trouble maintaining quality, attention, and productivity. Ref. [81] likewise found that task fatigue lowers productivity and increases the likelihood of mistakes.

H3: GenAI Immersion × Cognitive Load → Research Quality & Academic Influence. Path coefficient = −0.067, T-statistic = 3.966, p < 0.001, f² = 0.025. This statistically significant negative moderation effect means that greater GenAI immersion strengthens the detrimental effect of cognitive load on research outcomes, although the effect size is small. If cognitive load is already high, adding GenAI without sufficient management might make things more complex, perhaps because people rely too much on it or because it fits poorly with their cognitive processes. Previous studies [44,51] noted that GenAI can reduce cognitive effort, but the results here suggest that it should not be adopted uncritically. This is in line with [56,57], which argue that using AI without critical examination might lead to bias, reliance, or inefficiency, especially if task complexity is not addressed.

H4: GenAI Immersion × Task Fatigue & Mental Exhaustion → Research Quality & Academic Influence. Path coefficient = −0.114, T-statistic = 6.261, p < 0.001, f² = 0.082. This is the strongest moderating effect in the model, which means that GenAI immersion makes the adverse impact of fatigue on research quality considerably worse. The effect size falls between the small and medium benchmarks. A possible explanation is that exhausted individuals find it harder to understand AI outputs or simply accept AI suggestions without scrutiny. Refs. [47,55] discussed how GenAI can help with workload, but the literature also warns that it could make things worse if it is not properly monitored, especially when people are tired [35,56]. This shows how important it is to have adaptable AI systems and to use them in a way that supports people rather than adding to their burden when they are under emotional stress.
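The effect sizes and test statistics reported for H1 to H4 can be cross-checked with a short Python sketch. It classifies each f² against Cohen’s conventional benchmarks (0.02 small, 0.15 medium, 0.35 large) and recovers the bootstrap standard error implied by each path coefficient and T-statistic (SE = |β| / t). The helper names are hypothetical; the numeric inputs are the values reported above.

```python
def classify_f2(f2):
    """Label an f-squared effect size using Cohen's conventional benchmarks."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"


def implied_standard_error(beta, t_stat):
    """Standard error implied by a path coefficient and its T-statistic."""
    return abs(beta) / t_stat


# (path coefficient, T-statistic, f-squared) as reported for H1 to H4
reported = {
    "H1": (-0.175, 5.371, 0.049),
    "H2": (-0.124, 3.722, 0.027),
    "H3": (-0.067, 3.966, 0.025),
    "H4": (-0.114, 6.261, 0.082),
}

for name, (beta, t_stat, f2) in reported.items():
    se = implied_standard_error(beta, t_stat)
    print(f"{name}: beta = {beta:+.3f}, implied SE = {se:.3f}, f2 = {f2:.3f} ({classify_f2(f2)})")
```

Read this way, all four effects are statistically reliable yet modest in size, so statistical significance here should not be equated with practical magnitude.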

The H5 result (β = 0.137, p < 0.001) shows that research engagement has a statistically significant mediating influence on the relationship between cognitive load and research quality. Engaged researchers may therefore be able to turn cognitive demands into useful academic results. This supports Cognitive Load Theory [2], which holds that mental effort can be helpful when combined with motivation. However, the benefit is only modest, which means that engagement alone may not be enough in high-stress or long-term research situations. Ref. [77] also stresses engagement as a buffer, but this study suggests that it may not always work, depending on factors such as the person’s ability, the difficulty of the activity, and the support available in the environment. Engagement is thus a useful mediator, but to sustain performance when cognitive strain is high, it has to be supported by structural and cognitive systems as well.

The results for H6 (β = 0.014, p = 0.017) suggest that resilience has a statistically significant but very small mediating effect on the relationship between cognitive load and research quality. Resilience helps researchers keep working under stress, but its overall impact is limited. Even resilient researchers may struggle to offset the negative effects of a high cognitive load, especially during lengthy or complicated research tasks. This is consistent with prior work [35,52], which showed that resilience is critical for staying focused and making decisions under pressure. The small effect size, however, implies that resilience alone is not sufficient: if cognitive demands arising from structure or tasks are not addressed, its protective effect may fade. Ref. [18] also notes that resilience varies considerably from person to person. Strengthening it as a mediator therefore requires attention to personal qualities, emotional regulation, and environmental support. Resilience is important, but it should be treated as one component of a broader strategy for managing information overload in academic research.

The result for H7 (β = 0.012, p = 0.123) reveals that research engagement does not significantly mediate the association between task fatigue and research quality. This is an important limitation: once mental exhaustion sets in, simply trying to stay engaged is not enough to sustain performance. The finding challenges the widely held belief that motivation can always compensate for fatigue. As shown by [27,34], prolonged cognitive strain undermines engagement by impairing attention, memory, and motivation. The result implies that mental exhaustion may outweigh motivational resources, and that cognitive recovery (such as rest and workload management) matters more than engagement tactics in these situations. Interventions therefore need to do more than raise engagement; they also need to interrupt or ease cognitive fatigue.

The result for H8 (β = 0.104, p < 0.001) shows that resilience is a significant mediator between task fatigue and research performance. Researchers who can handle stress are better able to stay productive. Resilience is a stronger buffer than engagement, which breaks down under fatigue, and it lets individuals push through fatigue without a significant loss in quality. Refs. [36,54] also found that resilience sustains cognitive function during prolonged activities. Ref. [55] further noted that technologies such as AI can support resilience by handling routine activities, which lowers stress. This matters because it suggests that resilience-strengthening measures, including cognitive training, breaks, or supportive technology, are more effective than motivation alone at countering mental fatigue and sustaining research productivity.

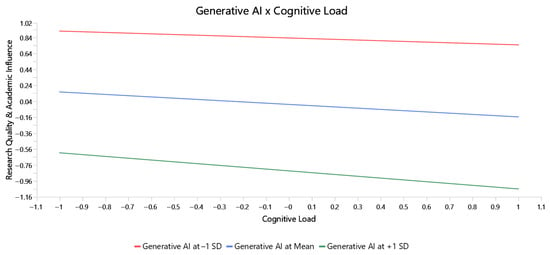

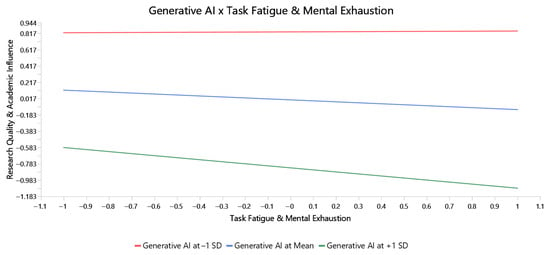

The moderation effect shown in the graph reveals a notable result: instead of buffering the negative effects of cognitive load, greater Generative AI (GenAI) immersion appears to intensify them, as seen in the steeper negative slope at +1 SD. When individuals are under heavy mental strain, relying more on GenAI can therefore lower the quality of their research. Possible reasons include over-reliance, insufficient critical thinking, or cognitive offloading. GenAI is widely praised for making work more efficient, but this result shows how important it is not to become overly reliant on AI tools at the expense of deep thinking. Refs. [44,56] caution against over-reliance on AI, since it may make work easier but can introduce bias or obscure the reasoning behind an analysis if it is not used carefully. This moderating effect thus challenges the simple idea that GenAI is a universal cognitive aid and underlines the need for balanced and deliberate use, especially in high-workload research settings, as shown in Figure 4.

Figure 4.

Generative AI as a Higher-Order Moderator in Cognitive Load.

The interaction plot shows a surprising moderating effect: as task fatigue and mental exhaustion rise, research quality declines more quickly when Generative AI (GenAI) immersion is high. It is often assumed that GenAI relieves cognitive strain, but the steep drop in performance at higher levels of AI use implies that heavy use of these tools while fatigued may make matters worse. Possible reasons are that people are less intellectually engaged, rely more on AI outputs, or find it harder to manage AI tools when they are mentally tired. Lower GenAI use, by contrast, seems to protect against the effects of fatigue, perhaps by prompting more careful thinking. This conclusion is in line with [44,56]: GenAI can be helpful, but it should be used carefully, especially under mental or physical strain. Ultimately, this points to the need for critical collaboration with AI rather than passive reliance on it, especially when mental resources are low, as shown in Figure 5.

Figure 5.

Moderating Effect of Generative AI on Task Fatigue–Research Outcomes Relationship.
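
The interaction plots in Figures 4 and 5 can be read as simple slopes evaluated at low (−1 SD) and high (+1 SD) GenAI immersion. The sketch below is illustrative only. It assumes standardized variables, so that the slope of the predictor at moderator level z equals the direct path plus the interaction coefficient times z. The interaction coefficients are those reported for H3 and H4 (−0.067 and −0.114); the direct path is left as a parameter because its value is not restated here, and it cancels when the two slopes are compared.

```python
def simple_slope(direct: float, interaction: float, moderator_z: float) -> float:
    """Slope of the predictor at a given standardized moderator level."""
    return direct + interaction * moderator_z


def slope_gap(interaction: float, z: float = 1.0) -> float:
    """Slope at +z SD minus slope at -z SD; the direct effect cancels out."""
    return simple_slope(0.0, interaction, z) - simple_slope(0.0, interaction, -z)


# Interaction (moderation) path coefficients reported for H3 and H4.
interactions = {
    "GenAI x Cognitive Load (H3)": -0.067,
    "GenAI x Task Fatigue (H4)": -0.114,
}

for label, coefficient in interactions.items():
    gap = slope_gap(coefficient)
    print(f"{label}: slope at +1 SD minus slope at -1 SD = {gap:+.3f}")
```

Under these assumptions, a negative gap means the line for high GenAI immersion falls more steeply than the line for low immersion, which is the pattern described for both figures.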

5. Discussion

The research demonstrates how human cognitive interactions with GenAI tools influence both the quality of research and its impact. Although cognitive load and fatigue were expected to affect performance negatively, the effects were less pronounced than predicted, implying that scholars rely on resilience, engagement, and online solutions to mitigate mental pressure. Conversely, the effect of GenAI immersion was significantly negative, and excessive dependence on tools such as ChatGPT was associated with less originality and critical thinking. This aligns with previous research [89,90,91]. These findings highlight a paradoxical aspect of Generative AI in academic work: higher immersion can increase cognitive strain even though AI simplifies research tasks. This phenomenon may be ascribed to automation bias, wherein researchers experiencing cognitive overload place excessive trust in AI-generated content without sufficient critical evaluation [46]. AI also shifts cognitive demands from generating content to evaluating it, increasing cognitive switching costs and perceived workload [92]. Over time, this might weaken cognitive resilience and intrinsic motivation, as researchers depend on external automation instead of addressing problems on their own. This corresponds with growing concerns that overreliance on AI could stifle creativity and diminish academic integrity [20,39,93]. Responsible AI integration in academia therefore calls for critical understanding, reflective engagement, and resilience-building to balance technological efficiency with human adaptation. Technology in itself does not deliver productivity; adaptive, human-centered design is required to make AI beneficial rather than detrimental to research performance.
By placing GenAI in the context of educational and assistive technologies, this paper supports the journal’s mission of promoting sustainable, ethically balanced, motivation-driven, and well-being-oriented innovation in AI and ICT [94]. Educating users and improving their understanding of AI are also essential to achieving this balance. Researchers who can critically analyze, ethically apply, and adapt GenAI techniques ensure that technical efficiency is matched by informed human judgment and creative responsibility.

These findings complement previous work on strategic AI integration in education and research [7], which used evolutionary game analysis to show how Generative AI might change institutional dynamics and cooperation in academia. That study focused on systemic evolution and adoption patterns, whereas this study shows the cognitive and psychological effects of GenAI immersion on individual researchers. Together, these viewpoints demonstrate the need for multi-level approaches that link institutional policy, technology design, and human cognition, so that AI integration supports both efficiency and well-being in the research ecosystem. This study aimed to examine how cognitive factors, including cognitive load, task fatigue, and individual resilience, interact with Generative AI (GenAI) immersion to affect research quality and academic influence.

Using SEM-PLS modeling and IPMA analysis, the results provide a deeper understanding of how the psychological and technological aspects of current research processes operate together. Notably, although GenAI was expected to relieve mental strain, the results show that greater GenAI immersion may worsen cognitive overload, possibly because of over-reliance or insufficient critical thinking. The mediating roles of research engagement and resilience support the view that human motivation and cognitive flexibility matter more than tools. These results align with prior work on Cognitive Load Theory [2,95,96] and on the emotional and cognitive aspects of fatigue [37,97]. However, they also show some major differences, especially regarding the unexpected consequences of GenAI immersion. This discussion highlights an important gap in present understanding: the mere availability of technology does not guarantee productivity or quality. Future studies need to examine not only how AI is employed, but also how academics think and feel about these technologies in high-stakes academic contexts. Further details are given below:

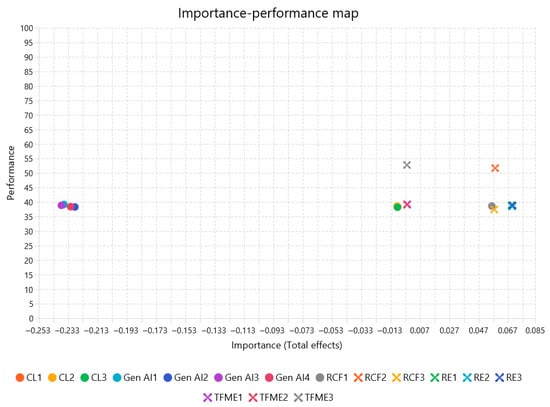

IPMA data for Cognitive Load (CL1–CL3) show a consistently small negative effect on Research Quality and Academic Influence, with effect values of −0.009 for all three items. Despite this small effect, performance levels stay around 38, indicating moderate cognitive strain in research activities. CL1 (“I often feel mentally overloaded…,” −0.009 | 38.339), CL2 (“The complexity of my research tasks makes it hard to concentrate…,” −0.009 | 38.566), and CL3 (“I find it mentally challenging to organize and analyze large volumes of information…,” −0.009 | 38.203) all indicate that respondents perceive cognitive load, but that it is not strongly detrimental to their outcomes. Cognitive Load Theory [2] holds that this kind of mental strain usually impairs performance by overloading working memory. The low effect values here, however, imply that participants may have developed ways to manage it, such as breaking tasks into smaller parts, managing their time, or using digital tools [18]. This difference between theoretical expectation and observed results points to the adaptability of academic professionals in demanding situations. Cognitive load is a known obstacle to productivity, but in this study its real-world effect appears reduced, possibly because traits such as resilience or research engagement act as buffers. This is crucial because it shows that cognitive complexity alone does not determine the quality of the output. What may matter more is how researchers deal with or interpret that complexity. Gap Identified: More studies should look at how cognitive load interacts with motivational or emotional moderators, such as gamification, perceived autonomy, or digital tool competency. Longitudinal research might examine threshold effects, in which cognitive load becomes harmful only beyond a certain point or when no support mechanisms are in place. This would help make interventions more successful at managing the effects of load instead of trying to eliminate it.
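
The item-level IPMA values quoted in this section pair an importance value (the effect on Research Quality & Academic Influence) with a performance score on a 0–100 scale. The sketch below shows the rescaling conventionally used for the performance axis and a ranking by absolute importance. The 1–5 response scale and the raw mean in the usage note are assumptions for illustration, since the scale anchors are not stated here; the importance values are taken from the item-level results reported in this article.

```python
def rescale_to_100(mean_score: float, scale_min: float, scale_max: float) -> float:
    """Rescale a raw mean item score to the 0-100 performance range used in IPMA."""
    if scale_max <= scale_min:
        raise ValueError("scale_max must be greater than scale_min")
    return (mean_score - scale_min) / (scale_max - scale_min) * 100.0


def rank_by_importance(effects: dict) -> list:
    """Order items from largest to smallest absolute effect on the target construct."""
    return sorted(effects, key=lambda item: abs(effects[item]), reverse=True)


# Importance values for one item per construct, as reported in the article.
importance = {
    "CL1": -0.009,
    "GenAI3": -0.238,
    "RCF2": 0.058,
    "RE3": 0.070,
    "TFME3": -0.002,
}

print(rank_by_importance(importance))
```

For example, under the assumed 1–5 scale, `rescale_to_100(3.0, 1, 5)` returns 50.0, the scale midpoint, so performance scores near 38 sit below the midpoint of the response scale.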

The IPMA results for Generative AI Immersion (GenAI1–GenAI4) show a substantial negative influence on Research Quality & Academic Influence, with effect sizes between −0.229 and −0.238 and performance scores between approximately 38 and 39. GenAI1 (“I regularly use tools like ChatGPT or Elicit for literature review and writing support”) has a score of 39.156 and an effect of −0.236. GenAI2 (“Generative AI helps me simplify complex tasks during my research”) has a score of 38.294 and an effect of −0.229. GenAI3 (“I rely heavily on AI tools to assist in data analysis or content generation”) has a score of 38.838 and an effect of −0.238. GenAI4 (“AI integration has become a central part of my research workflow”) has a score of 38.385 and an effect of −0.232. These numbers indicate that, although researchers use AI extensively and perceive it as helpful, greater dependence on it is associated with lower research quality. Refs. [44,56,98] address these concerns, which may stem from reduced critical thinking, automation complacency, and a possible drop in creativity. In other words, tools such as ChatGPT may speed up some research tasks, but over-reliance on them might harm the quality and depth of the work. These results are important because they suggest that the problem lies not with GenAI itself, but with the need to integrate it in a structured and critical way, for example through targeted AI literacy training, ethical guidelines, and strong feedback systems that encourage sound collaboration between humans and AI. This leaves a significant gap for future research: identifying the points at which AI use shifts from helpful to harmful, and developing integration guidelines that protect academic integrity and originality.

The modest positive path coefficients (0.056–0.058) and the notably high performance score of RCF2 (51.664) imply that resilience is an important but frequently overlooked factor in maintaining research quality under high cognitive strain. This is similar to the findings of [52,99], which showed that resilience helps people stay focused and attentive even when they are tired. RCF3 (“stay productive under prolonged strain”) has the lowest performance score (37.432), yet its effect size is essentially unchanged. This suggests that even resilience dimensions that respondents rate lower still have a favorable influence on research outcomes. The main limitation is that the effect size is small. Resilience is helpful, but its effect is less direct than that of engagement (RE1–RE3); it may therefore work more as a stabilizer than as a performance driver. Resilient researchers cope better, but they do not necessarily perform better unless they are also highly engaged or motivated. In addition, as GenAI technologies become more common, there is a concern that the natural development of resilience will slow down. Previous studies [18,97,100] noted that relying on AI to bypass cognitively taxing activities might lead to “resilience atrophy,” in which people gradually lose the capacity to push through mental fatigue on their own. The results therefore challenge the idea that technology always makes work easier and more productive. They call for a balanced approach in which AI supports, rather than replaces, the cognitive endurance developed through traditional academic practice. Future research needs to examine how resilience changes when AI tools are used: does AI relieve fatigue, or does it make resilience harder to build? Longitudinal studies might examine whether prolonged exposure to AI-supported research environments strengthens or weakens this cognitive trait over time.

Research Engagement (RE1–RE3) is the strongest positive predictor of research quality and academic influence, with effect sizes between 0.069 and 0.070, even though performance levels are only modest (approximately 38.5–38.9). Statements such as “I feel deeply involved and interested in my research activities” (RE1) and “I feel mentally energized when working on research projects” (RE3) indicate that engagement boosts mental stamina and cognitive output. These results are in line with [77,99,101], which show that intrinsic motivation and cognitive enthusiasm greatly improve academic performance [101,102]. The key implication is that even small increases in engagement, for example through improved workspaces, rewards, or meaningful work, may yield gains in research performance. As colleges and universities adopt AI technologies more widely, these results show that technology alone is not enough; it is also important to foster human-centered qualities such as curiosity and purpose. There is a substantial gap in our knowledge of how AI tools affect engagement. Do they make people more curious by easing access to information, or less curious by automating thinking? This calls for a close look at the interaction between AI and engagement.

On the other hand, Task Fatigue & Mental Exhaustion (TFME1–TFME3) had virtually no direct influence (−0.002) on research outcomes, even though the performance score for TFME3 (“Even small research tasks feel overwhelming when I am tired”) was comparatively high (52.753). At first glance this result seems counterintuitive, but it suggests that fatigue affects research output indirectly. Rather than acting directly, fatigue frequently works through mediating variables such as engagement and resilience, as shown by [27,103]. Researchers can still perform well when they are fatigued, as long as they are resilient or engaged. Academic fatigue is therefore common but not necessarily severe; it is a warning sign, not a breaking point. This is important because it shows that institutions need to do more than change how they assign work; they also need to help people build psychosocial coping skills. Future research should use longitudinal designs to follow the fatigue–engagement–resilience pathway, to find out how mental fatigue turns into burnout and how it affects research quality over time (see Appendix A, Appendix B, Appendix C, and Appendix D).

Concise Summary of Results

Cognitive load and task fatigue have slight but consistent negative effects on research quality and academic influence, indicating moderate cognitive strain among researchers. Resilience and research engagement, which mediate performance under stress, mitigate these effects. Research engagement had the greatest positive effect, indicating that mental involvement and motivation are essential for academic success.

Generative AI immersion, on the other hand, increases cognitive strain and decreases creativity and critical thinking. Cognitive complacency and automation bias may negate AI’s efficiency benefits. Task fatigue affects research outcomes indirectly, through engagement and resilience. The findings show that cognitive effort, adaptive qualities, and AI use interact in complex ways, and that balanced engagement and intentional GenAI integration improve research success.

To translate these results into practice, academic and research institutions should prioritize training scholars to use AI effectively and ethically. Structured workshops can explain the benefits and cognitive risks of Generative AI and help academics use tools such as ChatGPT ethically and critically. Institutions can also set explicit rules on AI use that stress transparency, data security, and originality in research. Additionally, resilience-building and reflective learning programs can help academics combine automation with autonomous thinking, ensuring that AI encourages creativity rather than eliminating it.

6. Conclusions

This study demonstrates that technology alone does not ensure improved research outcomes. Researchers are affected by cognitive load and fatigue, but they can frequently handle these effects by being resilient and engaged. The unanticipated conclusion is that excessive dependence on generative AI (GenAI) techniques might exacerbate cognitive stress and diminish research quality. This means that AI systems should be made in better, more flexible ways that help people think instead of taking over their jobs. The findings show how important it is to develop AI and digital technologies that are not just effective but also sustainable, responsible, and focused on individuals. Future studies ought to investigate the circumstances under which AI is beneficial or detrimental, as well as how design and policy may transform GenAI into a genuine collaborator in creativity and education. In this manner, AI may be used in ways that support inventiveness, motivation, and well-being, which are all important goals of technological progress. For details, refer to Annexure III. Although this study concentrates on academic researchers, its implications apply to other knowledge-intensive domains, including education, management, and healthcare, where GenAI adoption similarly influences cognitive effort, performance, and creativity.

6.1. Future Avenues of Research

The results of this study show that AI may be useful in academic research in both practical and theoretical ways. The results indicate that while GenAI might enhance productivity, excessive dependence may compromise originality and critical thinking, necessitating the development of adaptive and human-centered technology. For educational institutions, this involves spending money on imparting AI skills, setting ethical standards, and creating environments that make individuals more resilient and involved. From a research standpoint, it is imperative to explore the thresholds at which AI transitions from beneficial to detrimental, and to develop adaptive systems that safeguard well-being while fostering innovation. The tables below show the practical uses of such concepts and where further research might be needed to make them more understandable (refer to Table 6 and Table 7 for details).

Table 6.

Gap Analysis.

Table 7.

Implication and Relevance of Study.

Limitations of the Study

The research relied on self-reported online survey data, which may have introduced social desirability and self-selection biases. Several controls were applied to reduce these risks. Voluntary and anonymous participation reduced social pressure to respond positively. Informing respondents that there were no right or wrong answers encouraged honest reporting. To improve representativeness, data were collected from 998 researchers across various fields and institutions. Unreliable entries were identified via outlier filtering and response consistency tests. Although these measures reduce bias, the authors recognize that self-reported perceptions may differ from actual behavior. Mixed-method or longitudinal studies that supplement survey responses with behavioral and observational data could improve validity.

6.2. Implications of the Study

Generative AI (GenAI) in academic and institutional ecosystems affects technology, human engagement, governance, and future research. The following Table 7 shows how responsible AI adoption improves human capacities, supports research integrity, and promotes sustainable innovation.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/technologies13110486/s1.

Author Contributions

Conceptualization, S.M.F.A.K.; Methodology, S.S.; Software, S.M.F.A.K.; Validation, S.M.F.A.K. and S.S.; Formal analysis, S.M.F.A.K.; Investigation, S.S.; Resources, S.S.; Data curation, S.S.; Writing—original draft, S.M.F.A.K.; Writing—review & editing, S.M.F.A.K. and S.S.; Visualization, S.M.F.A.K.; Supervision, S.S.; Project administration, S.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article/Supplementary Materials. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no financial or personal relationships that could have influenced the work reported in this paper.

Appendix A

Table A1.

Effect Size.

| Relationship | f-Square |
|---|---|
| Cognitive Load → Research Engagement | 1.017 |
| Cognitive Load → Research Quality & Academic Influence | 0.043 |
| Cognitive Load → Resilience to Cognitive Fatigue | 0.021 |
| Generative AI → Research Quality & Academic Influence | 2.5 |
| Generative AI × Cognitive Load → Research Quality & Academic Influence | 0.017 |
| Generative AI × Task Fatigue & Mental Exhaustion → Research Quality & Academic Influence | 0.057 |
| Research Engagement → Research Quality & Academic Influence | 0.049 |
| Resilience to Cognitive Fatigue → Research Engagement | 0.001 |
| Resilience to Cognitive Fatigue → Research Quality & Academic Influence | 0.038 |
| Task Fatigue & Mental Exhaustion → Research Engagement | 0.003 |
| Task Fatigue & Mental Exhaustion → Research Quality & Academic Influence | 0.026 |
| Task Fatigue & Mental Exhaustion → Resilience to Cognitive Fatigue | 0.955 |
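
The f-square values in Table A1 measure how much the explained variance of an endogenous construct changes when one predictor is removed. The sketch below shows the standard formula and one common way to label the result. The cut-offs of 0.02, 0.15, and 0.35 are Cohen’s conventional thresholds and are an assumption here; verbal labels used elsewhere in this article may follow a different scheme. The R² values in the test of the formula are hypothetical, while the f-square values passed to the labeling function come from Table A1.

```python
def f_squared(r2_included: float, r2_excluded: float) -> float:
    """Effect size f2 = (R2_included - R2_excluded) / (1 - R2_included)."""
    if r2_included >= 1.0:
        raise ValueError("r2_included must be below 1")
    return (r2_included - r2_excluded) / (1.0 - r2_included)


def cohen_label(f2: float) -> str:
    """Label an f2 value using Cohen's conventional cut-offs (assumed here)."""
    if f2 < 0.02:
        return "negligible"
    if f2 < 0.15:
        return "small"
    if f2 < 0.35:
        return "medium"
    return "large"


# Selected f-square values from Table A1.
table_a1 = {
    "Cognitive Load -> Research Engagement": 1.017,
    "Cognitive Load -> Research Quality & Academic Influence": 0.043,
    "Resilience to Cognitive Fatigue -> Research Engagement": 0.001,
    "Task Fatigue & Mental Exhaustion -> Resilience to Cognitive Fatigue": 0.955,
}

for relationship, value in table_a1.items():
    print(f"{relationship}: f2 = {value} ({cohen_label(value)})")
```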

Appendix B

Table A2.

Future Avenues of Research.

| Pathway | Effect Size (f2) | Gap Identified |
|---|---|---|
| Cognitive Load → Engagement | 1.017 (Very Large) | Investigate optimal cognitive stimulation levels and task design per field/discipline. |
| GenAI → Research Quality | 2.5 (Very Large) | Explore how task-AI alignment or user training impacts responsible GenAI usage. |
| Task Fatigue → Resilience | 0.955 (Very Large) | Develop resilience-building interventions tailored for high-stakes research roles. |
| Engagement → Research Quality | 0.049 (Moderate) | Decompose engagement into cognitive, emotional, and behavioral dimensions. |
| Task Fatigue → Engagement | 0.003 (Negligible) | Explore alternative mediators (e.g., emotional regulation, digital breaks). |
| Cognitive Load → Research Quality | 0.043 (Small) | Understand why some high-load tasks still yield high research outcomes (e.g., flow states). |

Appendix C

Table A3.

IPMA.

| Items | Code | Effect on Research Quality & Academic Influence (Importance) | MV Performance |
|---|---|---|---|
| I often feel mentally overloaded when managing multiple research activities. | CL1 | −0.009 | 38.339 |
| The complexity of my research tasks makes it hard to concentrate. | CL2 | −0.009 | 38.566 |
| I find it mentally challenging to organize and analyze large volumes of information. | CL3 | −0.009 | 38.203 |
| I regularly use tools like ChatGPT or Elicit for literature review and writing support. | GenAI1 | −0.236 | 39.156 |
| Generative AI helps me simplify complex tasks during my research. | GenAI2 | −0.229 | 38.294 |
| I rely heavily on AI tools to assist in data analysis or content generation. | GenAI3 | −0.238 | 38.838 |
| AI integration has become a central part of my research workflow. | GenAI4 | −0.232 | 38.385 |
| Even when I feel mentally tired, I can still focus on research tasks. | RCF1 | 0.056 | 38.612 |
| I am able to bounce back quickly after mentally exhausting research work. | RCF2 | 0.058 | 51.664 |
| I can stay productive in research even under prolonged mental strain. | RCF3 | 0.057 | 37.432 |
| I feel deeply involved and interested in my research activities. | RE1 | 0.069 | 38.884 |
| I am enthusiastic and motivated about conducting research. | RE2 | 0.069 | 38.475 |
| I feel mentally energized when working on research projects. | RE3 | 0.070 | 38.884 |
| I feel mentally exhausted after prolonged research work. | TFME1 | −0.002 | 39.247 |
| I find it difficult to stay focused when working on research for extended hours. | TFME2 | −0.002 | 39.065 |
| Even small research tasks feel overwhelming when I am tired. | TFME3 | −0.002 | 52.753 |

Appendix D

Figure A1.

IPMA—Item wise.

Appendix E

Table A4.

Demographic Profile.

| Education Level | Number of Respondents | Percentage (%) |
|---|---|---|
| Master’s Degree | 507 | 50.80% |
| Doctoral Degree (PhD) | 358 | 35.87% |
| Postdoctoral/Faculty | 133 | 13.33% |
| Total | 998 | 100% |
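
The percentages in Table A4 follow directly from the respondent counts. The short check below recomputes them from the counts in the table; the function name is illustrative.

```python
def percentage_shares(counts: dict) -> dict:
    """Return each group's share of the total, as a percentage rounded to two decimals."""
    total = sum(counts.values())
    return {group: round(100.0 * n / total, 2) for group, n in counts.items()}


# Respondent counts from Table A4.
respondents = {
    "Master's Degree": 507,
    "Doctoral Degree (PhD)": 358,
    "Postdoctoral/Faculty": 133,
}

shares = percentage_shares(respondents)
for group, share in shares.items():
    print(f"{group}: {respondents[group]} respondents, {share:.2f}%")
print(f"Total: {sum(respondents.values())} respondents")
```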

References

- Fernández Cerero, J.; Montenegro Rueda, M.; Román Graván, P.; Fernández Batanero, J.M. ChatGPT as a Digital Tool in the Transformation of Digital Teaching Competence: A Systematic Review. Technologies 2025, 13, 205. [Google Scholar] [CrossRef]

- Sweller, J. Measuring cognitive load. Perspect. Med. Educ. 2018, 7, 1–2. [Google Scholar] [CrossRef]

- Meijman, T.F.; Mulder, G. Psychological aspects of workload. In A Handbook of Work and Organizational Psychology, 2nd ed.; Psychology Press: Hove, UK, 1998; Volume 2, pp. 5–33. [Google Scholar]

- Lee, S.; Bozeman, B. The impact of research collaboration on scientific productivity. Soc. Stud. Sci. 2005, 35, 673–702. [Google Scholar] [CrossRef]

- Suzuki, K.; Hiraoka, N. Transactional Distance Theory and Scaffolding Removal Design for Nurturing Students’ Autonomy. J. Educ. Technol. Dev. Exch. 2022, 15, 1–5. [Google Scholar] [CrossRef]

- Wang, Y.; Zhao, L. Toward the Transparent Use of Generative Artificial Intelligence in Academic Articles. J. Sch. Publ. 2024, 55, 467–484. [Google Scholar] [CrossRef]

- You, Y.; Chen, Y.; You, Y.; Zhang, Q.; Cao, Q. Evolutionary Game Analysis of Artificial Intelligence Such as the Generative Pre-Trained Transformer in Future Education. Sustainability 2023, 15, 9355. [Google Scholar] [CrossRef]

- Denes, G. A case study of using AI for General Certificate of Secondary Education (GCSE) grade prediction in a selective independent school in England. Comput. Educ. Artif. Intell. 2023, 4, 100129. [Google Scholar] [CrossRef]

- Gkanatsiou, M.A.; Triantari, S.; Tzartzas, G.; Kotopoulos, T.; Gkanatsios, S. Rewired Leadership: Integrating AI-Powered Mediation and Decision-Making in Higher Education Institutions. Technologies 2025, 13, 396. [Google Scholar] [CrossRef]

- Young, J.Q.; Van Merrienboer, J.; Durning, S.; Ten Cate, O. Cognitive Load Theory: Implications for medical education: AMEE Guide No. 86. Med. Teach. 2014, 36, 371–384. [Google Scholar] [CrossRef]

- Miwa, K.; Terai, H.; Kojima, K. Empirical investigation of cognitive load theory in problem solving domain. In Intelligent Tutoring Systems; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2018; Volume 10858. [Google Scholar]

- Zhou, J.; Arshad, S.Z.; Luo, S.; Chen, F. Effects of uncertainty and cognitive load on user trust in predictive decision making. In Intelligent Tutoring Systems; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2017; Volume 10516. [Google Scholar]

- Patac, L.P.; Patac, A. V Using ChatGPT for academic support: Managing cognitive load and enhancing learning efficiency—A phenomenological approach. Soc. Sci. Humanit. Open 2025, 11, 101301. [Google Scholar] [CrossRef]

- Pan, J.; Shachat, J.; Wei, S. Cognitive Stress and Learning Economic Order Quantity Inventory Management: An Experimental Investigation. Decis. Anal. 2022, 19, 189–254. [Google Scholar] [CrossRef]

- Suhluli, S.A.; Ali Khan, S.M.F. Determinants of user acceptance of wearable IoT devices. Cogent Eng. 2022, 9, 2087456. [Google Scholar] [CrossRef]

- Gupta, U.; Zheng, R.Z. Cognitive Load in Solving Mathematics Problems: Validating the Role of Motivation and the Interaction Among Prior Knowledge, Worked Examples, and Task Difficulty. Eur. J. STEM Educ. 2020, 5, 1–14. [Google Scholar] [CrossRef]

- Wirzberger, M.; Beege, M.; Schneider, S.; Nebel, S.; Rey, G.D. One for all?! Simultaneous examination of load-inducing factors for advancing media-related instructional research. Comput. Educ. 2016, 100, 18–31. [Google Scholar] [CrossRef]

- Le Cunff, A.-L.; Giampietro, V.; Dommett, E. Neurodiversity and cognitive load in online learning: A focus group study. PLoS ONE 2024, 19, e0301932. [Google Scholar] [CrossRef] [PubMed]

- Jeffri, N.F.S.; Awang Rambli, D.R. A review of augmented reality systems and their effects on mental workload and task performance. Heliyon 2021, 7, e06277.

- Abdullah, R.S.; Masmali, F.H.; Alhazemi, A.; Onn, C.W.; Ali Khan, S.M.F. Enhancing institutional readiness: A Multi-Stakeholder approach to learning analytics policy with the SHEILA-UTAUT framework using PLS-SEM. Educ. Inf. Technol. 2025, 30, 22315–22342.

- Eesee, A.K.; Jasko, S.; Eigner, G.; Abonyi, J.; Ruppert, T. Extension of HAAS for the Management of Cognitive Load. IEEE Access 2024, 12, 16559–16572.

- de Bruin, A.B.H.; Janssen, E.M.; Waldeyer, J.; Stebner, F. Cognitive Load and Challenges in Self-regulation: An Introduction and Reflection on the Topical Collection. Educ. Psychol. Rev. 2025, 37, 65.

- Dong, A.; Jong, M.S.Y.; King, R.B. How Does Prior Knowledge Influence Learning Engagement? The Mediating Roles of Cognitive Load and Help-Seeking. Front. Psychol. 2020, 11, 591203.

- Ball, S.; Katz, B.; Li, F.; Smith, A. The effect of cognitive load on economic decision-making: A replication attempt. J. Econ. Behav. Organ. 2023, 210, 226–242.

- Yang, H.; Zhang, S. Social media affordances and fatigue: The role of privacy concerns, impression management concerns, and self-esteem. Technol. Soc. 2022, 71, 102142.

- Wartenberg, G.; Aldrup, K.; Grund, S.; Klusmann, U. Satisfied and High Performing? A Meta-Analysis and Systematic Review of the Correlates of Teachers’ Job Satisfaction. Educ. Psychol. Rev. 2023, 35, 114.

- Mozuraityte, K.; Stanyte, A.; Fineberg, N.A.; Serretti, A.; Gecaite-Stonciene, J.; Burkauskas, J. Mental fatigue in individuals with psychiatric disorders: A scoping review. Int. J. Psychiatry Clin. Pract. 2023, 27, 186–195.

- Souchet, A.D.; Lourdeaux, D.; Pagani, A.; Rebenitsch, L. A narrative review of immersive virtual reality’s ergonomics and risks at the workplace: Cybersickness, visual fatigue, muscular fatigue, acute stress, and mental overload. Virtual Real. 2023, 27, 19–50.

- Hassan, E.K.; Jones, A.M.; Buckingham, G. A novel protocol to induce mental fatigue. Behav. Res. Methods 2024, 56, 3995–4008.

- Yuan, R.; Sun, H.; Soh, K.G.; Mohammadi, A.; Toumi, Z.; Zhang, Z. The effects of mental fatigue on sport-specific motor performance among team sport athletes: A systematic scoping review. Front. Psychol. 2023, 14, 1143618.

- Barker, L.M.; Nussbaum, M.A. The effects of fatigue on performance in simulated nursing work. Ergonomics 2011, 54, 815–829.

- Kar, G.; Hedge, A. Effects of a sit-stand-walk intervention on musculoskeletal discomfort, productivity, and perceived physical and mental fatigue, for computer-based work. Int. J. Ind. Ergon. 2020, 78, 102983.

- Dobešová Cakirpaloglu, S.; Cakirpaloglu, P.; Skopal, O.; Kvapilová, B.; Schovánková, T.; Vévodová, Š.; Greaves, J.P.; Steven, A. Strain and serenity: Exploring the interplay of stress, burnout, and well-being among healthcare professionals. Front. Psychol. 2024, 15, 1415996.

- Sun, H.; Soh, K.G.; Xu, X. Nature Scenes Counter Mental Fatigue-Induced Performance Decrements in Soccer Decision-Making. Front. Psychol. 2022, 13, 877844.

- Takács, E.; Barkaszi, I.; Altbäcker, A.; Czigler, I.; Balázs, L. Cognitive resilience after prolonged task performance: An ERP investigation. Exp. Brain Res. 2019, 237, 377–388.

- Borragán, G.; Guerrero-Mosquera, C.; Guillaume, C.; Slama, H.; Peigneux, P. Decreased prefrontal connectivity parallels cognitive fatigue-related performance decline after sleep deprivation. An optical imaging study. Biol. Psychol. 2019, 144, 115–124.

- Coccia, M. Destructive Creation of New Invasive Technologies: Generative Artificial Intelligence Behaviour. Technologies 2025, 13, 261.

- Huang, S.Y.B.; Huang, C.H.; Chang, T.W. A New Concept of Work Engagement Theory in Cognitive Engagement, Emotional Engagement, and Physical Engagement. Front. Psychol. 2022, 12, 663440.

- Islam, Q.; Khan, S.M.F.A. Understanding deep learning across academic domains: A structural equation modelling approach with a partial least squares approach. Int. J. Innov. Res. Sci. Stud. 2024, 7, 1389–1407.

- Feldon, D.F.; Callan, G.; Juth, S.; Jeong, S. Cognitive Load as Motivational Cost. Educ. Psychol. Rev. 2019, 31, 319–337.

- Skulmowski, A. Learners Emphasize Their Intrinsic Load if Asked About It First: Communicative Aspects of Cognitive Load Measurement. Mind Brain Educ. 2023, 17, 165–169.

- Scheiter, K.; Ackerman, R.; Hoogerheide, V. Looking at Mental Effort Appraisals through a Metacognitive Lens: Are they Biased? Educ. Psychol. Rev. 2020, 32, 1003–1027.

- Máté, D.; Kiss, J.T.; Csernoch, M. Cognitive biases in user experience and spreadsheet programming. Educ. Inf. Technol. 2025, 30, 14821–14851.

- Hao, X.; Demir, E.; Eyers, D. Exploring collaborative decision-making: A quasi-experimental study of human and Generative AI interaction. Technol. Soc. 2024, 78, 102662.

- Chen, J.; Mokmin, N.A.M.; Shen, Q.; Su, H. Leveraging AI in design education: Exploring virtual instructors and conversational techniques in flipped classroom models. Educ. Inf. Technol. 2025, 30, 16441–16461.

- Khan, S.M.; Shehawy, Y.M. Perceived AI Consumer-Driven Decision Integrity: Assessing Mediating Effect of Cognitive Load and Response Bias. Technologies 2025, 13, 374.

- Gandhi, T.K.; Classen, D.; Sinsky, C.A.; Rhew, D.C.; Vande Garde, N.; Roberts, A.; Federico, F. How can artificial intelligence decrease cognitive and work burden for front line practitioners? JAMIA Open 2023, 6, ooad079.

- Shi, L. Assessing teachers’ generative artificial intelligence competencies: Instrument development and validation. Educ. Inf. Technol. 2025, 1–20.

- Sætra, H.S. Generative AI: Here to stay, but for good? Technol. Soc. 2023, 75, 102372.

- Kim, S.D. Application and Challenges of the Technology Acceptance Model in Elderly Healthcare: Insights from ChatGPT. Technologies 2024, 12, 68.

- Dwivedi, Y.K.; Kshetri, N.; Hughes, L.; Slade, E.L.; Jeyaraj, A.; Kar, A.K.; Baabdullah, A.M.; Koohang, A.; Raghavan, V.; Ahuja, M.; et al. “So what if ChatGPT wrote it?” Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy. Int. J. Inf. Manag. 2023, 71, 102642.

- Morse, L.; Paul, S.M.; Cooper, B.A.; Oppegaard, K.; Shin, J.; Calvo-Schimmel, A.; Harris, C.; Hammer, M.; Conley, Y.; Wright, F.; et al. Higher Stress in Oncology Patients is Associated With Cognitive and Evening Physical Fatigue Severity. J. Pain Symptom Manag. 2023, 65, 203–215.

- Borragán, G.; Slama, H.; Bartolomei, M.; Peigneux, P. Cognitive fatigue: A Time-based Resource-sharing account. Cortex 2017, 89, 71–84.

- Terentjeviene, A.; Maciuleviciene, E.; Vadopalas, K.; Mickeviciene, D.; Karanauskiene, D.; Valanciene, D.; Solianik, R.; Emeljanovas, A.; Kamandulis, S.; Skurvydas, A. Prefrontal cortex activity predicts mental fatigue in young and elderly men during a 2 h “Go/NoGo” task. Front. Neurosci. 2018, 12, 620.

- Andreatta, P.; Smith, C.S.; Graybill, J.C.; Bowyer, M.; Elster, E. Challenges and opportunities for artificial intelligence in surgery. J. Def. Model. Simul. 2022, 19, 219–227.

- Wang, S. Challenges and responses: ChatGPT and academic misconduct governance. Stud. Sci. Sci. 2024, 42, 1361–1368.

- Thompson, K.; Corrin, L.; Lodge, J.M. AI in tertiary education: Progress on research and practice. Australas. J. Educ. Technol. 2023, 39, 1–7.

- Cadez, S.; Dimovski, V.; Zaman Groff, M. Research, teaching and performance evaluation in academia: The salience of quality. Stud. High. Educ. 2017, 42, 1455–1473.

- Torres-Hernández, M.A.; Ibarra-Pérez, T.; García-Sánchez, E.; Guerrero-Osuna, H.A.; Solís-Sánchez, L.O.; Martínez-Blanco, M.D. Web System for Solving the Inverse Kinematics of 6DoF Robotic Arm Using Deep Learning Models: CNN and LSTM. Technologies 2025, 13, 405.

- Shi, Y.; Wang, D.; Zhang, Z. Categorical Evaluation of Scientific Research Efficiency in Chinese Universities: Basic and Applied Research. Sustainability 2022, 14, 4402.

- Ryazanova, O.; Jaskiene, J. Managing individual research productivity in academic organizations: A review of the evidence and a path forward. Res. Policy 2022, 51, 104448.

- Aulawi, H. The impact of knowledge sharing towards higher education performance in research productivity. Int. J. Sociotechnol. Knowl. Dev. 2021, 13, 121–132.

- Abramo, G.; D’Angelo, C.A.; Solazzi, M. The relationship between scientists’ research performance and the degree of internationalization of their research. Scientometrics 2011, 86, 629–643.

- Shehawy, Y.M.; Khan, S.M.F.A.; Alshammakhi, Q.M. The Knowledgeable Nexus Between Metaverse Startups and SDGs: The Role of Innovations in Community Building and Socio-Cultural Exchange. J. Knowl. Econ. 2025, 1–36.

- Noy, S.; Zhang, W. Experimental evidence on the productivity effects of generative artificial intelligence. Science 2023, 381, 187–192.

- Shehawy, Y.M.; Khan, S.M.F.A.; Khalufi, N.A.M.; Abdullah, R.S. Customer adoption of robot: Synergizing customer acceptance of robot-assisted retail technologies. J. Retail. Consum. Serv. 2025, 82, 104062.

- Doron, G.; Genway, S.; Roberts, M.; Jasti, S. Generative AI: Driving productivity and scientific breakthroughs in pharmaceutical R&D. Drug Discov. Today 2025, 30, 104272.

- Rana, D.; Khan, S.M.F.A.; Arahant, A.; Chaudhary, J.K. Beyond Bitcoin: Green Cryptocurrencies as a Sustainable Alternative. In Green Economics and Strategies for Business Sustainability; Yıldırım, S., Demirtaş, I., Malik, F.A., Eds.; IGI Global: Hershey, PA, USA, 2025; pp. 235–260. ISBN 9798369389492.

- Shehawy, Y.M.; Ali Khan, S.M.F. Consumer readiness for green consumption: The role of green awareness as a moderator of the relationship between green attitudes and purchase intentions. J. Retail. Consum. Serv. 2024, 78, 103739.

- Thapa, S.; Adhikari, S. ChatGPT, Bard, and Large Language Models for Biomedical Research: Opportunities and Pitfalls. Ann. Biomed. Eng. 2023, 51, 2647–2651.

- Lorenz, F.; Lorenzen, S.; Franco, M.; Velz, J.; Clauß, T. Generative artificial intelligence in management research: A practical guide on mistakes to avoid. Manag. Rev. Q. 2024, 1–21.

- Abdallah, M.; Abu Talib, M.; Feroz, S.; Nasir, Q.; Abdalla, H.; Mahfood, B. Artificial intelligence applications in solid waste management: A systematic research review. Waste Manag. 2020, 109, 231–246.

- Kaul, V.; Enslin, S.; Gross, S.A. History of artificial intelligence in medicine. Gastrointest. Endosc. 2020, 92, 807–812.

- Memon, M.A.; Ramayah, T.; Cheah, J.H.; Ting, H.; Chuah, F.; Cham, T.H. PLS-SEM Statistical Programs: A Review. J. Appl. Struct. Equ. Model. 2021, 5, 1–14.

- Hair, J.; Alamer, A. Partial Least Squares Structural Equation Modeling (PLS-SEM) in second language and education research: Guidelines using an applied example. Res. Methods Appl. Linguist. 2022, 1, 100027.

- Becker, J.M.; Cheah, J.H.; Gholamzade, R.; Ringle, C.M.; Sarstedt, M. PLS-SEM’s most wanted guidance. Int. J. Contemp. Hosp. Manag. 2023, 35, 321–346.

- Huang, A.; Chao, Y.; de la Mora Velasco, E.; Bilgihan, A.; Wei, W. When artificial intelligence meets the hospitality and tourism industry: An assessment framework to inform theory and management. J. Hosp. Tour. Insights 2022, 5, 1080–1100.

- Leppink, J.; Paas, F.; Van der Vleuten, C.P.M.; Van Gog, T.; Van Merriënboer, J.J.G. Development of an instrument for measuring different types of cognitive load. Behav. Res. Methods 2013, 45, 1058–1072.

- Sweller, J. Cognitive load theory and educational technology. Educ. Technol. Res. Dev. 2020, 68, 1–16.

- Zhang, J.; Meng, J.; Wen, X. The relationship between stress and academic burnout in college students: Evidence from longitudinal data on indirect effects. Front. Psychol. 2025, 16, 1517920.

- Barker, L.M.; Nussbaum, M.A. Fatigue, performance and the work environment: A survey of registered nurses. J. Adv. Nurs. 2011, 67, 1370–1382.