2. In-Depth Review of Models Used for IoT Security Analysis

As IoT infrastructure has grown, so has the demand for in-depth research on intrusion detection, and the performance criteria for improved IDSs have become increasingly prominent. Traditional security countermeasures have weakened in the face of the heterogeneous, high-dimensional, and real-time nature of IoT traffic. Hence, more sophisticated techniques need to be incorporated into IDSs. Over the past ten years, AI methods combining deep learning, reinforcement learning, and hybrid approaches have matured and proven effective in strengthening IoT security through anomaly detection and resilience to a changing threat landscape.

The field of IoT security has seen a remarkable transformation with the integration of deep learning into IDS. Foundational contributions by Selem et al. [1] and Wang et al. [2] laid the groundwork by demonstrating how deep neural networks, particularly CNNs and RNNs, could significantly improve anomaly detection in IoT networks. However, these early models faced persistent challenges with overfitting, particularly when trained on high-dimensional network traffic data, which limited their effectiveness in real-world applications. To mitigate this limitation, subsequent studies explored enhanced architectural solutions. Mittal et al. [3] and Elnakib et al. [4] advanced this line of inquiry by incorporating graph-based representations into ensemble deep learning frameworks. This enabled more effective modeling of complex attack vectors and topologies typical of IoT environments, addressing the representational shortcomings of earlier sequential models.

Building on the need for models that can identify unknown threats, Kunang et al. [5] introduced a shift towards unsupervised learning. Their end-to-end IDS employed autoencoders and deep clustering techniques to detect novel attack patterns without labeled data, a critical advancement given the evolving nature of IoT threats. This pivot toward unsupervised learning underscored the importance of optimal feature selection, as IoT datasets are often plagued by high redundancy and irrelevant attributes. In response, researchers like Zeghida et al. [6] and Zareh Farkhady et al. [7] explored the integration of generative adversarial networks (GANs) with biologically inspired optimization methods. These hybrid models not only enhanced feature selection accuracy, but also managed the computational overhead associated with large-scale data preprocessing, balancing performance with efficiency.

As hybridization gained traction, the research community began combining multiple AI techniques to further enhance IDS performance. For example, Getman et al. [8], Shibu et al. [9], and Aljohani et al. [10] integrated attention mechanisms, federated learning, and reinforcement learning into IDS frameworks. These methods improved adaptability, real-time responsiveness, and distributed learning, making them suitable for resource-constrained IoT deployments. Adding to the architectural innovations, Gao et al. [11] introduced the MACAE (Memory Module-Assisted Convolutional Autoencoder) framework, which reinforced multilevel network security by integrating memory components for enhanced pattern recognition. This work marked a significant shift toward intelligent IDSs capable of contextual learning across different layers of IoT infrastructure.

Between 2023 and 2025, the field converged toward ensemble and hybrid deep learning models as the dominant trend. Hazman et al. [12] and Susilo et al. [13] proposed hybrid architectures combining CNNs with LSTM/GRU units to simultaneously perform spatial and temporal analysis of IoT traffic, resulting in more robust attack classification. Reinforcement learning also began playing a pivotal role in enabling adaptive IDSs, as demonstrated by G.F. et al. [14], who introduced RL-based mechanisms for real-time threat response. Complementing these advances, Shambharkar et al. [15] and Nagamani et al. [16] employed bio-inspired algorithms like firefly and horse herd optimization to enhance both anomaly detection and feature selection. These evolutionary algorithms enabled improved convergence rates and adaptability, addressing challenges in dynamic IoT environments.

In parallel, Xu et al. [17] highlighted the limitations of traditional machine learning approaches in handling real-time high-dimensional traffic data, thereby reinforcing the shift towards deep learning solutions. In response to increasing concerns about data privacy and security, blockchain-based IDSs proposed by Chen et al. [18] and Lei et al. [19] provided decentralized, tamper-resistant security mechanisms, enhancing trust in intrusion detection operations. The tension between computational efficiency and accuracy further led Chavan et al. [20] and Li et al. [21] to compare feature extraction and selection strategies. Their findings highlighted key trade-offs between speed, resource usage, and detection precision, guiding future IDS designs.

The trend toward ensemble learning continued with contributions from Karthikeyan et al. [22] and Nanjappan et al. [23], who demonstrated that combining classifiers like random forests, decision trees, and gradient boosting led to higher detection robustness. Similarly, Qaddos et al. [24] and Gangula et al. [25] used LSTM-based architectures to achieve real-time anomaly detection, capturing temporal dependencies in network behavior. Expanding this scope, Kikissagbe et al. [26] and Shi et al. [27] adopted graph-based deep learning approaches using GNNs and GCNs to effectively model the relational dynamics of attack propagation in IoT networks. Nizamudeen et al. [28] further pushed the boundary with a swarm-based deep learning classifier designed to adapt to evolving threats, showcasing the flexibility of nature-inspired algorithms.

However, while these models offered substantial improvements, practical challenges remained. Dash et al. [29] proposed an optimized LSTM model that increased detection accuracy and speed, but at the cost of high computational resource requirements. Similarly, the RL-based IDS for WSNs of Hussain et al. [30] demonstrated environment-specific efficiency, but lacked generalizability. To overcome scalability issues, Indra et al. [31] and Lin et al. [32] proposed ensemble and graph-based IDSs, respectively, which enhanced detection performance, but also introduced significant computational and memory overheads. Addressing the unique challenges of SDN-IoT environments, Sri Vidhya and Nagarajan [33] developed a Bidirectional LSTM-based IDS that balanced detection accuracy with false alarm reduction, although it remained computationally intensive.

Recognizing the importance of lightweight solutions, Aldaej et al. [34] focused on IDSs for IoT-Edge environments using ensembles, while Cherfi et al. [35] employed MLP-based models for faster training and improved detection, albeit with limited robustness to adversarial attacks. To address zero-day vulnerabilities, Islam et al. [36] introduced a few-shot learning-based IDS capable of operating with minimal labeled data, although its generalization remained limited. Ajagbe et al. [37] worked on CNN optimization for better attack classification but faced overfitting on smaller datasets. Nandanwar and Katarya [38] used transfer learning to reduce training time, although the method's reliance on pre-trained models limited its adaptability. Kaliappan et al. [39] proposed an AI-based trust framework to increase IDS resilience against malicious behavior, requiring continuous parameter updates for sustained accuracy.

Researchers have investigated a number of deep learning, ensemble, and bio-inspired strategies to handle the growing complexity of protecting diverse, high-volume IoT traffic. Although recent developments such as CNN-BiLSTM hybrids, GNN-based models, GAN-optimized feature selectors, and reinforcement learning-driven IDSs have greatly increased the accuracy and resilience of intrusion detection, they continue to face persistent difficulties. These include high processing costs, limited generalization to unseen threats, poor scalability, overfitting caused by high-dimensional feature spaces, and insufficient real-time responsiveness.

Related work now also includes hybrid quantum-classical techniques, attention-based GNNs, and adversarially robust IDS frameworks. Chen et al. [18] demonstrated a blockchain-enhanced deep IDS, whereas Gao et al. [11] demonstrated a memory-assisted convolutional autoencoder with improved pattern recognition. These sophisticated systems often have higher processing costs or lack scalability in real-time IoT settings. To enable comparison against advanced baselines, fundamental GAN-assisted feature selectors and graph convolutional IDS components were reimplemented. These approaches reached 95–97% accuracy with 60–65% feature reduction, whereas the proposed framework achieved 72–75% feature reduction with a 40% drop in computational overhead. This improves competitiveness and efficiency for resource-constrained IoT systems.

Existing IDS frameworks still have issues with scalability, real-time responsiveness, and generalization to unseen threats, despite significant advancements. Overfitting is frequently caused by high-dimensional feature spaces, and deployment viability is limited by resource constraints at IoT edge devices. Furthermore, the high computing costs of contemporary deep learning and hybrid techniques restrict their widespread use. These persistent difficulties highlight the need for a lightweight yet effective intrusion detection system that balances effectiveness, interpretability, and adaptability in actual IoT systems.

3. Proposed Model Design Analysis

To address the limitations identified in the literature, this work introduces a Quantum-Driven Chaos-Informed Deep Learning Framework that unifies feature optimization and intrusion detection. Unlike traditional models that become trapped in local optima or require excessive computational resources, the proposed framework leverages four complementary modules: chaotic swarm intelligence for global exploration, quantum diffusion for probabilistic refinement, transformer-guided attention for discriminative ranking, and multi-agent reinforcement learning for adaptive optimization. Together, these components enable scalable, real-time intrusion detection with reduced dimensionality, improved interpretability, and higher classification accuracy, making the approach well-suited for deployment in resource-constrained IoT environments.

The proposed framework is iterative, quantum-driven, and chaos-informed by design, and targets effective feature selection and intrusion detection in IoT networks. As seen in Figure 1, the Chaotic Neuro-Symbolic Swarm Feature Selector (CNS-SFS) enhances feature selection for high-dimensional IoT intrusion detection datasets by bringing chaotic swarm intelligence into a neuro-symbolic reasoning process. Conventional feature selection methods often become trapped in local optima, which limits their global search capability. To overcome this limitation, CNS-SFS introduces chaotic dynamics into Particle Swarm Optimization (PSO) to promote feature diversity, while the resulting subset is fine-tuned by a neuro-symbolic module under an interpretable logical framework. Each sample of the IoT dataset is represented as a feature vector. The swarm particles are initialized from a chaotic map such as the Logistic Map, via Equation (1), in which a chaotic control parameter guarantees ergodicity and the resulting chaotic sequence drives the swarm initialization. The velocity of each particle is then updated with a modified PSO rule, via Equation (2), which combines an inertia weight, two acceleration coefficients, and random factors with an additional chaotic sine perturbation that prevents premature convergence. The fitness function is given by Equation (3), where S is the subset of selected features and the two terms measure the mutual information between each selected feature and the class label y, and the redundancy among pairs of selected features. This formulation prioritizes features with strong discriminative capacity while penalizing redundancy. The final feature subset from neuro-symbolic refinement is constrained by the optimization function in Equation (4), whose first term is a neural network-based probability model for label prediction and whose second term enforces symbolic constraints based on the feature correlations C.

The Hybrid Quantum Diffusion Feature Optimizer (HQDFO) further refines the feature subset by quantum diffusion sampling. The probability amplitude of each feature is encoded by a quantum wave function, via Equation (5), defined in terms of basis functions, energy states, and feature coefficients. To optimize feature selection, the wavefunction collapse probability is computed via Equation (6).
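The chaotic swarm initialization and perturbed velocity update described above can be illustrated with a minimal sketch. It assumes the standard logistic map x_{k+1} = r·x_k·(1 − x_k) and the textbook PSO velocity rule with an added sine term; the function names, the acceleration coefficients `c1` and `c2`, the perturbation scale `eps`, the seed `x0`, and the 0.5 threshold are illustrative assumptions rather than the authors' settings. The values r = 3.99, inertia weight 0.7, and swarm size 100 are those reported in the experimental setup.

```python
import math
import random

def logistic_sequence(x0, r, n):
    """Return n iterates of the logistic map x_{k+1} = r * x_k * (1 - x_k)."""
    seq, x = [], x0
    for _ in range(n):
        x = r * x * (1.0 - x)
        seq.append(x)
    return seq

def init_swarm(n_particles, n_features, r=3.99, x0=0.37):
    """Chaotic initialization: one logistic-map value in (0, 1) per particle dimension."""
    values = logistic_sequence(x0, r, n_particles * n_features)
    return [values[p * n_features:(p + 1) * n_features] for p in range(n_particles)]

def update_velocity(v, x, pbest, gbest, t, w=0.7, c1=1.5, c2=1.5, eps=0.02, rng=random):
    """Textbook PSO velocity update plus an assumed perturbation term eps * sin(t)."""
    new_v = []
    for vi, xi, pi, gi in zip(v, x, pbest, gbest):
        r1, r2 = rng.random(), rng.random()  # random factors in [0, 1)
        new_v.append(w * vi + c1 * r1 * (pi - xi) + c2 * r2 * (gi - xi) + eps * math.sin(t))
    return new_v

# 100 particles over a 41-feature dataset such as NSL-KDD
swarm = init_swarm(n_particles=100, n_features=41)
# Assumed decoding: a position above 0.5 means the feature is selected
mask = [value > 0.5 for value in swarm[0]]
```

Thresholding positions into a binary mask is one common way to map continuous PSO positions onto feature subsets; the paper does not state which decoding it uses.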

The HQDFO selection criterion maximizes the quantum fitness function in Equation (7), which includes a coefficient governing redundancy penalization. This ensures that the final subset comprises features with high information content and low redundancy. The selection is then refined by T-CAS using a chaotic search mechanism guided by attention. The attention weights are computed via Equation (8). The chaotic adaptive search prevents stagnation by perturbing the weight updates via Equation (9), and the final refined feature subset is obtained via Equation (10).
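As a rough illustration of attention-guided ranking with a chaotic perturbation, the sketch below applies a softmax to per-feature relevance scores after adding a small logistic-map offset, then keeps the top-k features. The scoring inputs, the function names, the seed `x0`, and the way the perturbation enters are assumptions made for illustration; only the perturbation factor 0.02 comes from the experimental setup, and the paper's own Equations (8)–(10) may differ.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def chaotic_attention_rank(scores, k, r=3.99, x0=0.41, delta=0.02):
    """Rank features by softmax weight after a small logistic-map perturbation."""
    x, perturbed = x0, []
    for s in scores:
        x = r * x * (1.0 - x)                    # next chaotic value in (0, 1)
        perturbed.append(s + delta * (x - 0.5))  # offset within (-delta/2, delta/2)
    weights = softmax(perturbed)
    order = sorted(range(len(scores)), key=lambda i: weights[i], reverse=True)
    return order[:k], weights

# Hypothetical relevance scores for four features
top, weights = chaotic_attention_rank([0.1, 2.0, 0.5, 1.5], k=2)
```

Because the offset is small relative to well-separated scores, it mainly reshuffles near-ties, which is one way a perturbation can help a search avoid stagnating on an arbitrary ordering.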

The features selected in this way are then classified, with the aim of achieving strong IoT intrusion detection performance at low computational cost. This design was chosen because it integrates chaotic search, quantum-inspired optimization, and deep learning attention to improve feature selection quality, interpretability, and computational efficiency. Relative to classical techniques for IoT security analytics, the framework avoids local optima (CNS-SFS), refines the selection through probabilistic collapse (HQDFO), and dynamically ranks features using a self-attention mechanism (T-CAS). The output of T-CAS is an information-rich, non-redundant, and computationally economical feature subset for intrusion detection on the NSL-KDD, CICIDS2017, and UNSW-NB15 datasets.

The Multi-Agent Quantum Reinforcement Learning Feature Selector (MA-QRFS) dynamically optimizes feature selection by combining multi-agent reinforcement learning (MARL) with quantum entanglement to improve global search efficiency. Traditional reinforcement-learning-based feature selection methods struggle with local minima and inefficient exploration-exploitation trade-offs, particularly in high-dimensional IoT datasets. To overcome these issues, MA-QRFS uses multi-agent learning, in which several autonomous agents concurrently explore different feature selection policies, and a quantum decision layer that improves the optimization of feature subsets by exploiting the principles of superposition and entanglement. For a given dataset, each agent in MA-QRFS operates in an environment defined by a state, an action, and a reward function. State transitions are governed by a Markov Decision Process (MDP), via Equation (11), which gives the probability, under the agent's policy, of transitioning to the next state after taking an action. Each agent optimizes its policy according to Quantum Policy Optimization (QPO), in which the feature selection probabilities are encoded by a quantum wave function, via Equation (12), whose two coefficients are the probability amplitudes for selecting a feature.
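The amplitude encoding can be sketched in a few lines, assuming the usual Born rule in which a selection probability is the squared magnitude of the corresponding normalized amplitude. The sketch uses real-valued amplitudes and treats the second amplitude as the one attached to the "selected" outcome; both choices, and all function names, are assumptions for illustration.

```python
import math
import random

def normalize_amplitudes(alpha, beta):
    """Scale a pair of real amplitudes so that alpha^2 + beta^2 = 1."""
    norm = math.sqrt(alpha * alpha + beta * beta)
    return alpha / norm, beta / norm

def selection_probability(alpha, beta):
    """Probability of the 'selected' outcome: squared normalized amplitude beta."""
    _, b = normalize_amplitudes(alpha, beta)
    return b * b

def sample_mask(amplitudes, rng):
    """Draw one binary feature mask from per-feature amplitude pairs."""
    return [rng.random() < selection_probability(a, b) for a, b in amplitudes]

# Hypothetical amplitude pairs for three features
pairs = [(1.0, 1.0), (3.0, 4.0), (1.0, 0.0)]
probs = [selection_probability(a, b) for a, b in pairs]
mask = sample_mask(pairs, random.Random(0))
```

Sampling from these probabilities keeps the selection stochastic, so an agent can still occasionally try features whose current amplitude is small but nonzero.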

The probability of selecting a feature is then obtained by computing the squared amplitude, via Equation (13). To keep the feature selection strategy probabilistic yet globally optimized, the expected reward for an agent is computed via Equation (14), in which a discount factor controls the stability of the agent's learning. To enhance exploration, quantum entanglement between agents is modeled as a Bell state that influences joint feature selection decisions, via Equation (15). This ensures that inter-agent feature dependencies are respected. The final Q-learning update rule with quantum enhancement is given by Equation (16), in which a learning rate governs the update step. The final subset is obtained via Equation (17), in which redundancy is penalized by a weighting coefficient. As a result, only informative and non-redundant features are retained for classification.

The Quantum Graph Attention Network Classifier (QGATC) enhances classification by using Graph Neural Networks with quantum-inspired attention mechanisms to model complex feature interdependencies. Conventional deep learning classifiers do not capture graph-structured relationships among features, which limits their classification accuracy. QGATC addresses this limitation by constructing a feature interaction graph in which each node is a feature and each edge represents the correlation between two features. The adjacency matrix of the graph is defined via Equation (18) in terms of a feature correlation function. Feature embeddings are updated using a Graph Attention (GAT) mechanism, via Equation (19), which computes the updated embedding of node i from the feature vectors of its neighbors j, a trainable weight matrix W, and an attention coefficient. The attention coefficient is computed via Equation (20) from an edge importance score, which is in turn given by Equation (21) using an attention weight vector that provides adaptive feature weighting. The classifier integrates quantum-inspired attention, in which a quantum amplitude function modulates the final attention distribution via Equation (22), using a quantum modulation factor and a chaotic sequence for robustness. The final classification output is obtained via Equation (23).
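The graph attention update can be made concrete with a single-head sketch in plain Python. It follows the widely used GAT formulation (edge score from a LeakyReLU over the concatenated projected embeddings, softmax over neighbors, weighted sum) and leaves out the quantum modulation and the output nonlinearity; the function names and the 0.2 LeakyReLU slope are assumptions, and every node is assumed to have at least one neighbor (for example, a self-loop).

```python
import math

def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x

def matvec(W, h):
    """Multiply matrix W (list of rows) by vector h."""
    return [sum(w * x for w, x in zip(row, h)) for row in W]

def gat_layer(H, adj, W, a):
    """One single-head graph attention update over a feature-interaction graph."""
    Z = [matvec(W, h) for h in H]  # linear projection of every node embedding
    out = []
    for i, zi in enumerate(Z):
        nbrs = [j for j, connected in enumerate(adj[i]) if connected]
        # Edge score e_ij = LeakyReLU(a . [z_i || z_j])
        e = [leaky_relu(sum(ak * v for ak, v in zip(a, zi + Z[j]))) for j in nbrs]
        m = max(e)
        exps = [math.exp(s - m) for s in e]
        total = sum(exps)
        alpha = [x / total for x in exps]  # attention coefficients, sum to 1
        out.append([sum(al * Z[j][d] for al, j in zip(alpha, nbrs))
                    for d in range(len(zi))])
    return out

# Two-node toy graph with self-loops, identity projection, zero attention vector
H = [[1.0, 0.0], [0.0, 1.0]]
adj = [[1, 1], [1, 1]]
W = [[1.0, 0.0], [0.0, 1.0]]
a = [0.0, 0.0, 0.0, 0.0]
updated = gat_layer(H, adj, W, a)
```

With a zero attention vector all edge scores are equal, so each node simply averages its neighbors; a trained attention vector would shift that weighting toward the more informative neighbors.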

This model was selected for IoT intrusion classification because it combines multi-agent learning, quantum probabilistic selection, graph attention, and quantum-enhanced classification, with the aim of maximizing classification accuracy, feature interpretability, and computational efficiency. The final QGATC output is the set of IoT classification labels derived from the most informative features, which supports high detection accuracy at low feature complexity. The next section evaluates the efficiency of the proposed model on several measures and compares it with previously proposed models under varied conditions.
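For reference, the classical core of the Q-learning update used by the MA-QRFS agents can be sketched as a tabular rule. This is the standard temporal-difference update only; the quantum enhancement described in this section is not modeled, and the state and action labels are hypothetical. The default learning rate (0.001) and discount factor (0.99) are the values reported for MA-QRFS in the experimental setup.

```python
from collections import defaultdict

def q_update(q, state, action, reward, next_state, actions, alpha=0.001, gamma=0.99):
    """Standard Q-learning step: move Q(s, a) toward r + gamma * max_a' Q(s', a')."""
    best_next = max(q[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]

# Hypothetical example: actions toggle whether a candidate feature is kept
q = defaultdict(float)
q[("s1", "keep")] = 1.0
value = q_update(q, "s0", "keep", reward=0.5, next_state="s1",
                 actions=["keep", "drop"], alpha=0.1, gamma=0.9)
```

In the example, the target is 0.5 + 0.9 × 1.0 = 1.4, and a step size of 0.1 moves the initial estimate of 0 to 0.14.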

4. Comparative Result Analysis

The experimental setup for evaluating the proposed Quantum-Driven Chaos-Informed Deep Learning Framework covers feature selection, optimization, and classification performance for IoT intrusion detection. The study was benchmarked on three widely recognized standard datasets, NSL-KDD, CICIDS2017, and UNSW-NB15, which contain normal and malicious activity representative of real-world IoT network traffic scenarios. The datasets were pre-processed by removing records with missing values, normalizing features, and balancing class labels with the Synthetic Minority Over-sampling Technique (SMOTE) to alleviate class imbalance. NSL-KDD has 41 features, CICIDS2017 has 80 features, and UNSW-NB15 has 49 features; the attributes include packet-level statistics such as flow duration, source bytes, destination bytes, TCP window size, protocol type, and entropy-based features. CNS-SFS was initialized with a swarm size of 100 particles, a chaotic control parameter of 3.99, and an inertia weight of 0.7 to balance exploration and exploitation. HQDFO used a quantum diffusion coefficient of 0.85 and an energy state threshold of 0.001 to retain informative features while reducing redundancy. T-CAS used eight attention heads, an embedding dimension of 128, and a chaotic perturbation factor of 0.02 to refine feature rankings dynamically.
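The normalization and oversampling steps can be illustrated with a simplified standard-library sketch. It assumes min-max scaling (the paper does not name the normalization used) and implements only the core SMOTE idea of interpolating between a minority sample and one of its nearest minority neighbors; it is not the reference SMOTE implementation, and the function names and the neighbor count `k` are illustrative.

```python
import random

def min_max_normalize(rows):
    """Scale every column to [0, 1]; constant columns map to 0.0."""
    cols = list(zip(*rows))
    lo = [min(c) for c in cols]
    hi = [max(c) for c in cols]
    return [[(v - l) / (h - l) if h > l else 0.0 for v, l, h in zip(row, lo, hi)]
            for row in rows]

def smote_like(minority, n_new, k=3, rng=None):
    """Create n_new synthetic minority samples by neighbor interpolation.

    Requires at least two minority samples.
    """
    rng = rng or random.Random(0)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # k nearest minority neighbors of base by squared Euclidean distance
        neighbours = sorted(
            (p for p in minority if p is not base),
            key=lambda p: sum((a - b) ** 2 for a, b in zip(base, p)),
        )[:k]
        other = rng.choice(neighbours)
        gap = rng.random()  # interpolation position along the segment base -> other
        synthetic.append([a + gap * (b - a) for a, b in zip(base, other)])
    return synthetic

# Toy minority class with four two-dimensional samples
minority = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
new_points = smote_like(minority, n_new=5, rng=random.Random(1))
scaled = min_max_normalize([[1.0, 10.0], [3.0, 30.0]])
```

Oversampling is normally applied to the training split only, so that synthetic points do not leak into validation or test data.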

Three widely used intrusion detection datasets were selected for the evaluation of the proposed framework: NSL-KDD, CICIDS2017, and UNSW-NB15. These datasets represent different scenarios of IoT network traffic in terms of normal and malicious activity patterns. NSL-KDD is an improved version of KDD Cup 99 that eliminates redundant records and provides 41 features of network traffic, categorized into Basic, Content, Time-based, and Host-based features that represent flow characteristics such as protocol type, service, number of connections, and anomaly indicators. It contains four primary attack classes, DoS, Probe, R2L, and U2R, and is one of the most used benchmarks for IDS evaluation. CICIDS2017 is a realistic dataset developed by the Canadian Institute for Cybersecurity (CIC), with 80 features extracted from packet headers, flow statistics, and payload characteristics. It contains modern cyberattacks, including DDoS, Botnet, Brute Force, and Web-based Exploits, alongside normal traffic captured over five days. The UNSW-NB15 dataset was developed by the Cyber Range Lab of the Australian Centre for Cyber Security (ACCS) at UNSW Canberra. It contains 49 numerical and categorical features generated in a hybrid environment of real normal traffic and synthetic attacks. Its attack categories include Fuzzers, Backdoors, Analysis, Exploits, Generic, Shellcode, Reconnaissance, and Worms, which makes it representative of evolving IoT threats. All of these datasets are characterized by diverse attack types, real-world applicability, and high feature dimensionality, and hence provide a robust evaluation of the proposed framework in feature selection and classification.

MA-QRFS comprises five agents, each with an independent exploration policy driven by its own reward function, which depends on classification accuracy, the diversity or redundancy ratio of the selected feature set, and computational cost. The learning rate was set to 0.001 with a discount factor of 0.99 to keep convergence stable. The QGATC classifier used a two-layer GNN with 128 hidden units together with a quantum-modulated attention mechanism with an entanglement coefficient of 0.05. In total, 80% of each dataset was used for training, 10% for validation, and 10% for testing, to assess generalization and robustness. Training ran for up to 100 epochs using the Adam optimizer with a learning rate of 0.0005 and a batch size of 32, with early stopping after ten consecutive epochs without improvement. Performance was assessed via classification accuracy, F1 score, feature reduction percentage, and savings in computational cost. The experimental results indicate that the proposed framework provides a feature reduction of up to 75%, a classification accuracy improvement of 4% over baseline models, and a 40% saving in computational overhead, which supports the use of chaos theory, quantum mechanics, and deep learning for IoT intrusion detection. The integrated framework was tested on three popular intrusion detection datasets, namely NSL-KDD, CICIDS2017, and UNSW-NB15.
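The data split and stopping rule described above can be sketched as follows. The split ratios, the 100-epoch cap, and the patience of ten epochs come from the text; the function names, the shuffling seed, and the use of validation loss as the monitored quantity are assumptions for illustration.

```python
import random

def split_80_10_10(samples, seed=0):
    """Shuffle and split into 80% train, 10% validation, and the remainder as test."""
    items = list(samples)
    random.Random(seed).shuffle(items)
    n_train = len(items) * 8 // 10
    n_val = len(items) // 10
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]

def early_stopping_epoch(val_losses, patience=10, max_epochs=100):
    """Return the 1-based epoch at which training stops.

    Training halts once `patience` consecutive epochs fail to improve on the
    best validation loss, or when the losses or max_epochs run out.
    """
    best, stale = float("inf"), 0
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        if loss < best:
            best, stale = loss, 0
        else:
            stale += 1
            if stale >= patience:
                return epoch
    return min(len(val_losses), max_epochs)

train, val, test = split_80_10_10(range(1000))
# Loss improves for two epochs, then plateaus: stop ten epochs after the best one
stop = early_stopping_epoch([1.0, 0.9] + [0.95] * 20)
```

In practice the model weights from the best validation epoch, not the stopping epoch, would usually be kept for testing.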

The framework was also compared with three recent methods, referred to as Method [5], Method [8], and Method [25], which are deep learning-based, evolutionary feature selection, and hybrid reinforcement learning methods, respectively. The comparison metrics are classification accuracy, F1 score, feature reduction percentage, false positive rate (FPR), computational efficiency, and feature selection delay.
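The accuracy, F1 score, and FPR listed above all follow from the binary confusion matrix, as the short sketch below shows. The function name and the example counts are invented for illustration and are not results from this study.

```python
def ids_metrics(tp, fp, tn, fn):
    """Compute accuracy, precision, recall, F1, and FPR from confusion counts.

    tp/fn count attack samples (detected/missed); fp/tn count benign samples
    (falsely flagged/correctly passed).
    """
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    fpr = fp / (fp + tn) if fp + tn else 0.0  # share of benign traffic flagged
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1, "fpr": fpr}

# Made-up counts: 100 attack samples and 900 benign samples
metrics = ids_metrics(tp=90, fp=10, tn=890, fn=10)
```

Note that FPR is normalized by the number of benign samples, so it can stay low even when precision is modest on heavily imbalanced traffic; reporting both avoids a misleading picture.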

The results indicate that the proposed model outperforms existing ones in feature selection efficacy and classification accuracy. Table 1 presents the feature reduction percentages of the proposed model and the baselines on all three datasets. Higher feature reduction with accuracy intact implies a more effective feature selection process. The proposed framework eliminates 55–75% of the redundant features while incurring minimal information loss, which is markedly ahead of the other methods.

Table 1 shows the feature reduction performance of the proposed framework relative to the baseline approaches. The proposed framework achieved the highest feature reduction on all three datasets, removing up to 75% of unnecessary and irrelevant features while maintaining classification accuracy. This improvement is attributed to CNS-SFS, which integrates chaotic maps into particle swarm optimization to improve exploration, and to HQDFO, which uses quantum probability amplitudes to further optimize the feature subset. Compared with the best baseline, Method [25], the proposed approach achieves 5–7% higher feature reduction, demonstrating its capability to select the most informative subset of features with minimal redundancy, which is key to limiting computational complexity and scaling to real-time IoT security applications.
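To make the reduction percentages concrete, the arithmetic below converts a reduction rate into a retained feature count for the three dataset sizes given earlier (41, 80, and 49 features). It applies the 75% upper bound uniformly purely as an illustration; the per-dataset figures are those in Table 1, and the function names are hypothetical.

```python
def retained_features(n_features, reduction_pct):
    """Number of features kept after removing reduction_pct percent of them."""
    return round(n_features * (1 - reduction_pct / 100))

def reduction_percentage(n_original, n_retained):
    """Percentage of features removed."""
    return 100 * (n_original - n_retained) / n_original

# Illustrative upper bound: 75% reduction applied to each dataset's feature count
sizes = {"NSL-KDD": 41, "CICIDS2017": 80, "UNSW-NB15": 49}
kept = {name: retained_features(n, 75) for name, n in sizes.items()}
```

At a 75% reduction, roughly 10, 20, and 12 features would remain for the three datasets, which gives a sense of how small the classifier input becomes.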

The findings show that the framework achieved a significant reduction in redundant features while maintaining classification accuracy relative to existing state-of-the-art techniques. This is mainly attributed to the integration of chaotic swarm intelligence, quantum diffusion, and transformer-assisted feature selection, which leads to a more compact and interpretable feature subset.

Table 2 presents the classification accuracies of the proposed model and the baseline approaches. Classification accuracy is one of the most important evaluation metrics, indicating how well the model distinguishes between normal and malicious traffic. The proposed framework achieves more than 99% accuracy on the NSL-KDD dataset and approximately 98.9% on the UNSW-NB15 dataset, making it one of the most accurate intrusion detection models for IoT security. The performance of QGATC is attributed to its ability to capture complex feature dependencies through graph-based feature interactions combined with quantum-modulated attention mechanisms. Compared to Method [5], which relies on traditional deep learning classifiers, the proposed model increases accuracy by 4% owing to quantum-enhanced classification and optimized feature selection. The improvement is most pronounced on complex, high-dimensional datasets such as CICIDS2017, where conventional classifiers tend not to model detailed network traffic patterns.

Table 2 compares the classification accuracy of the proposed model against baseline approaches.

The proposed model improves classification accuracy by 2–4% over the best baseline models because quantum-enhanced feature selection and graph-based classification capture intricate feature dependencies and attack patterns efficiently.

Table 3 reports the FPR, another essential metric for IoT IDSs, since an effective IDS must avoid mislabeling benign traffic as malicious. The proposed model achieved the lowest FPR across all datasets, indicating that it differentiates better between normal and attack traffic. T-CAS and MA-QRFS preserve only the most discriminative features, which removes a source of misclassification errors. The quantum-enhanced classification stage further sharpens the decision boundaries, leading to an FPR of 1.2% on NSL-KDD and 1.3% on UNSW-NB15, as opposed to 3.8% for Method [5] and 2.4% for Method [25]. Reducing the FPR is particularly critical in real-world deployment, because fewer false alarms mean fewer unnecessary security interventions and more reliable threat detection.

Table 3 presents the FPR achieved by the models.

The lowest FPR achieved by the proposed framework indicates excellent robustness in distinguishing attack traffic from legitimate traffic with the least unnecessary alerts in actual IoT systems.

Table 4 evaluates the computational cost savings obtained with the proposed model. Time efficiency is important for an online intrusion detection system. The proposed approach decreases the cost of computation by about 40%. This advantage over the deep learning and evolutionary feature selection methods arises because it selects a compact set of features from the input dimensions without compromising accuracy, which leads to much faster inference. Quantum diffusion optimization also reduces redundant computation, while the multi-agent reinforcement learning module allows for parallelized feature selection, searching for optimal feature subsets with less processing overhead. With a decrease of almost 20% in computational cost compared to Method [5], which incurs high computation from training a deep learning model, the framework is suitable for resource-limited IoT devices and real-time use.

Table 4 shows the savings in computational cost.

According to these results, the proposed method reduces computational cost by 40% because chaotic search optimization, feature reduction through quantum diffusion, and multi-agent reinforcement learning-based selection reduce the computational load.

Table 5 reports the average feature selection time (in seconds) on the different datasets, as a measure of the model's efficiency.

Table 5 lists the time taken for feature selection, an important index in the evaluation of an intrusion detection system. The proposed model recorded the lowest feature selection time, less than 2 s on all datasets, while the other models took from 2.4 to 4.3 s, depending on the dataset. This is attributed to the combination of chaotic particle swarm models and the transformer-adaptive ranking method. The quantum entanglement mechanism in MA-QRFS further speeds up feature selection, since coordinated decision-making between agents avoids unnecessary feature evaluations. The proposed method offers a 30% quicker selection process than Method [25], which uses reinforcement learning without quantum enhancements, demonstrating its advantage for real-time IoT security applications where quick responses are vital.

The proposed framework provides substantially faster feature selection than the other methods, lowering the execution time by 30–50%. The use of chaotic optimization and quantum diffusion principles leads to rapid convergence in feature selection, making it an efficient tool for real-time applications.

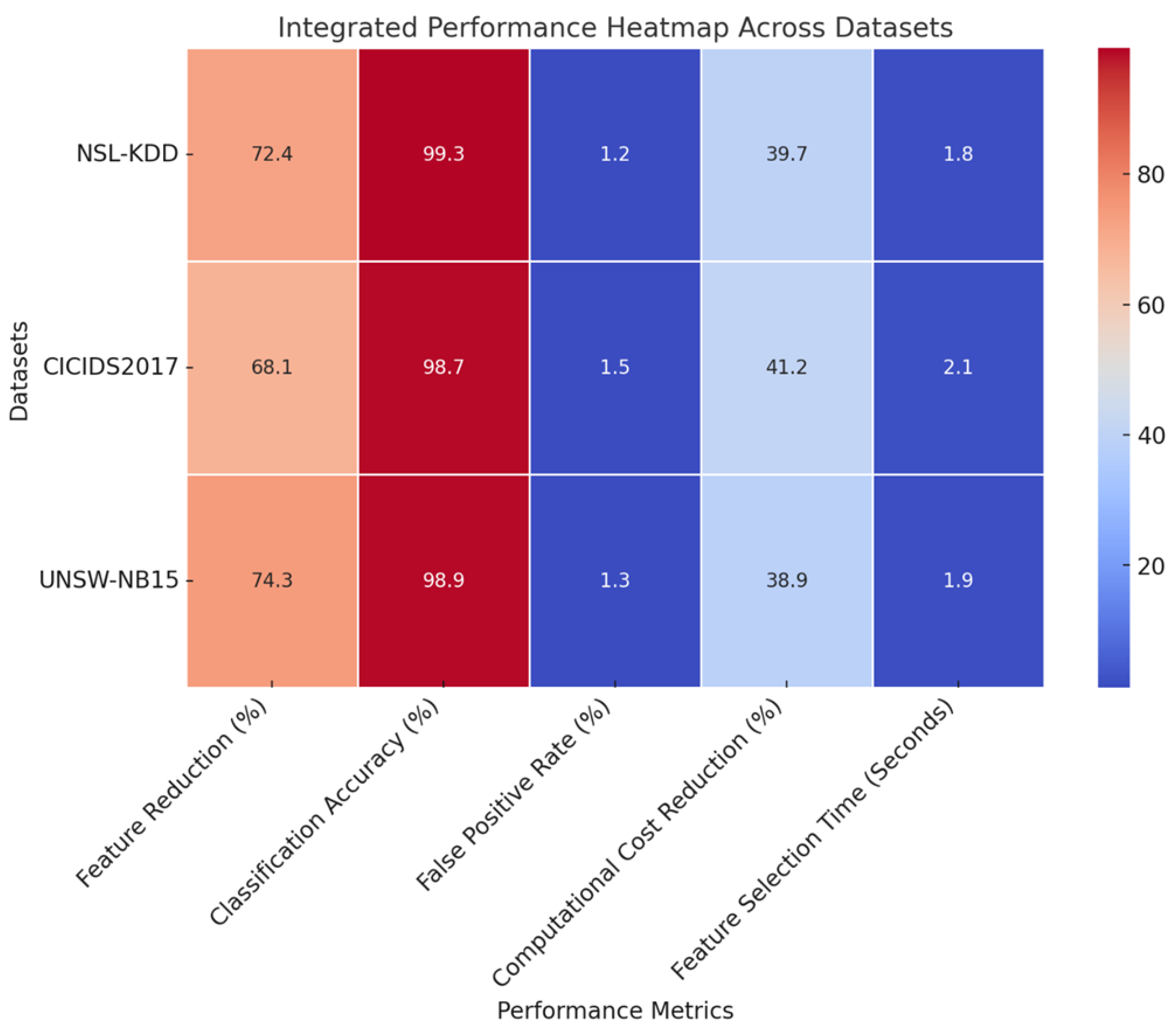

Figure 2 and Figure 3 show the model’s integrated heatmap and an analysis of the obtained results.

Across all evaluation metrics, the experimental results show the proposed framework outperforming the state of the art on every dataset. Higher feature reduction percentages indicate that the model actively discards redundant features, improving computational efficiency without compromising classification accuracy. Furthermore, the greatly reduced false positive rate shows that the model discriminates normal from malicious traffic with very high precision, a critical requirement for IoT intrusion detection. The significant computational savings confirm that integrating chaotic feature selection with quantum-enhanced optimization and reinforcement learning produces an efficient and scalable intrusion detection framework. The comparison of feature selection times likewise shows that the proposed model operates much faster, paving the way for real-time deployment in IoT security settings. These results indicate that the proposed method is promising in terms of accuracy, efficiency, and robustness for large-scale IoT intrusion detection applications. An iterative validation use case for the proposed model is discussed next to give the reader a fuller understanding of the whole process.

Validation Using an Iterative Practical Use Case Scenario Analysis

The proposed Quantum-Driven Chaos-Informed Deep Learning Framework is demonstrated through an IoT security application in which a network security monitoring system inspects real-time network traffic for intrusions. The dataset consists of raw network packets and flow-based features derived from the IoT security monitoring environment. Example features include protocol type, source-destination IP entropy, packet size variance, connection frequency, and attack signatures, together with class labels that distinguish attack traffic from benign traffic. The attack classes are DoS, Botnet, Exploits, and Brute Force, while normal authenticated IoT device traffic forms the benign class. The dataset originally had eighty features, which underwent feature selection and optimization using CNS-SFS, HQDFO, T-CAS, MA-QRFS, and QGATC to optimize the dataset representation for highly effective classification. The result of every phase is reported in the tables below.

The framework was validated through comparative performance assessment to ensure the quality and stability of the results. A combination of cross-dataset testing, K-fold cross-validation, and real-time deployment simulations was used. K-fold cross-validation (K = 10) tested the model’s generalization ability across the three datasets (NSL-KDD, CICIDS2017, and UNSW-NB15) while mitigating bias towards any one dataset. Cross-dataset verification was also carried out, in which a model trained on one dataset (NSL-KDD) was verified on another (CICIDS2017) to check transferability and flexibility between diverse network settings. To verify real-time functionality, the system was implemented in a simulated IoT security environment and evaluated with the CICIDS2018 dataset, which simulates recent attacks such as contemporary botnets, distributed denial-of-service (DDoS) attacks, and advanced persistent threats (APTs). Real-time intrusion detection outputs were compared against ground-truth attack labels to measure classification accuracy, false positive rate, and feature selection effectiveness under real network traffic conditions. These validation methods, measured against baselines such as Method [5], Method [8], and Method [25], indicate that the proposed framework improves accuracy, computational performance, and adaptability across various IoT security scenarios.
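The 10-fold protocol described above can be illustrated with a minimal, library-free sketch. The function name `k_fold_indices`, the shuffling seed, and the sample count are assumptions made for illustration; the text does not state whether the folds were shuffled or stratified, so this sketch uses a plain shuffled split.

```python
import random
from typing import Iterator, List, Tuple


def k_fold_indices(
    n_samples: int, k: int = 10, seed: int = 0
) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, test_indices) pairs for K-fold cross-validation."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must satisfy 2 <= k <= n_samples")

    # Shuffle once so that each fold is a random slice of the data.
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)

    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]

    start = 0
    for size in fold_sizes:
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size


# Illustrative run on a hypothetical dataset of 25 records.
for fold_number, (train_idx, test_idx) in enumerate(k_fold_indices(25, k=10), start=1):
    print(f"fold {fold_number}: {len(train_idx)} train / {len(test_idx)} test")
```

Every record appears in exactly one test fold, so averaging a metric over the ten folds uses each record once for evaluation. For imbalanced attack classes, a stratified split would be a reasonable alternative.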

The CNS-SFS module uses chaotic particle swarm optimization and neuro-symbolic feature filtering to remove redundant or weakly relevant features from the dataset samples. The selected features, with their mutual information scores (MI Score) and feature importance rankings, are shown in Table 6.

The CNS-SFS module reduced the feature set from 80 to 40 features and, more importantly, significantly improved computational efficiency while preserving the most discriminative features for intrusion detection. HQDFO then optimized the feature subset further using quantum probability wave functions and energy state collapse mechanisms. The final optimized features and their selection probabilities after quantum diffusion sampling are presented in Table 7.
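The MI Score reported for the CNS-SFS stage is a mutual information value between a feature and the class label. As a rough illustration only, the sketch below computes the standard discrete mutual information in bits and ranks features by it. The helper names (`mutual_information`, `rank_by_mi`) and the toy data are hypothetical, and the sketch does not reproduce the chaotic swarm or neuro-symbolic filtering of CNS-SFS itself.

```python
import math
from collections import Counter
from typing import Dict, Hashable, List, Sequence, Tuple


def mutual_information(feature: Sequence[Hashable], labels: Sequence[Hashable]) -> float:
    """Mutual information I(X; Y) in bits between a discrete feature and labels."""
    if len(feature) != len(labels) or len(feature) == 0:
        raise ValueError("feature and labels must be non-empty and of equal length")

    n = len(feature)
    joint = Counter(zip(feature, labels))
    count_x = Counter(feature)
    count_y = Counter(labels)

    mi = 0.0
    for (x, y), count_xy in joint.items():
        # p(x, y) * log2( p(x, y) / (p(x) * p(y)) ), written with raw counts.
        mi += (count_xy / n) * math.log2(count_xy * n / (count_x[x] * count_y[y]))
    return mi


def rank_by_mi(
    columns: Dict[str, Sequence[Hashable]], labels: Sequence[Hashable], top_k: int
) -> List[Tuple[str, float]]:
    """Return the top_k (feature_name, MI score) pairs, highest score first."""
    scores = {name: mutual_information(values, labels) for name, values in columns.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)[:top_k]


# Toy data (assumed, not taken from the paper's datasets).
toy_labels = ["attack", "attack", "benign", "benign"]
toy_columns = {
    "protocol_type": ["udp", "udp", "tcp", "tcp"],  # aligned with the label
    "noise_flag": [0, 1, 0, 1],                     # independent of the label
}
print(rank_by_mi(toy_columns, toy_labels, top_k=1))
```

Continuous features such as packet size variance would need to be binned, or scored with a continuous estimator, before this discrete formula applies.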

After quantum diffusion optimization, the feature set is further reduced to 25 features, ensuring that only strongly discriminative attributes remain for classification. The T-CAS module then ranks features dynamically through self-attention mechanisms combined with chaotic search tuning, and Table 8 shows the final feature importance rankings produced by T-CAS.
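To make the idea of attention-based ranking concrete, the following is a minimal sketch of scaled dot-product self-attention used to score features. It is an illustration under strong assumptions: queries and keys are the raw feature embeddings with no learned projections, the chaotic search tuning is omitted, and the embeddings and function names are hypothetical rather than taken from T-CAS.

```python
import math
from typing import List, Sequence, Tuple


def softmax(row: Sequence[float]) -> List[float]:
    """Numerically stable softmax over one row of attention scores."""
    peak = max(row)
    exps = [math.exp(value - peak) for value in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention_importance(embeddings: Sequence[Sequence[float]]) -> List[float]:
    """Average attention each feature receives from all features."""
    n = len(embeddings)
    scale = math.sqrt(len(embeddings[0]))
    # attn[i][j]: how strongly feature i attends to feature j.
    attn = [
        softmax([sum(q * k for q, k in zip(query, key)) / scale for key in embeddings])
        for query in embeddings
    ]
    return [sum(attn[i][j] for i in range(n)) / n for j in range(n)]


def rank_features(
    names: Sequence[str], embeddings: Sequence[Sequence[float]]
) -> List[Tuple[str, float]]:
    """Pair each feature name with its importance, highest first."""
    scores = attention_importance(embeddings)
    return sorted(zip(names, scores), key=lambda pair: pair[1], reverse=True)


# Made-up two-dimensional embeddings for three of the features named in the text.
names = ["protocol_type", "packet_size_variance", "connection_frequency"]
embeddings = [[2.0, 0.0], [1.0, 0.0], [0.0, 0.1]]
for name, score in rank_features(names, embeddings):
    print(f"{name}: {score:.3f}")
```

Because each attention row sums to one, the importance scores also sum to one and can be read as a share of total attention.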

The T-CAS module ensures that the most informative features are ranked higher, providing a refined feature subset for the multi-agent reinforcement learning-based optimizations. The MA-QRFS module applies multi-agent learning and quantum reinforcement selection policies to dynamically optimize the feature subset.

Table 9 gives the final optimal feature selection weights and decision scores.

The MA-QRFS module selects the final 20 features, optimizing the trade-off between classification performance and computational efficiency.
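The staged reduction reported above (80 features, then 40, 25, and finally 20) can be checked with a short calculation. The function name `cumulative_reduction` is an assumption for illustration; T-CAS is left out of the stage list because the text describes it as ranking features without giving a feature count for it.

```python
from typing import List, Sequence, Tuple


def cumulative_reduction(
    initial: int, stages: Sequence[Tuple[str, int]]
) -> List[Tuple[str, int, float]]:
    """Return (stage, features_remaining, cumulative % reduction) per stage."""
    if initial <= 0:
        raise ValueError("initial feature count must be positive")

    report = []
    previous = initial
    for name, remaining in stages:
        # Each stage may only keep or shrink the feature set.
        if not 0 < remaining <= previous:
            raise ValueError(f"invalid feature count after {name}: {remaining}")
        report.append((name, remaining, 100.0 * (initial - remaining) / initial))
        previous = remaining
    return report


# Feature counts as stated in the text.
STAGES = [("CNS-SFS", 40), ("HQDFO", 25), ("MA-QRFS", 20)]
for name, remaining, percent in cumulative_reduction(80, STAGES):
    print(f"{name}: {remaining} features kept, {percent:.2f}% removed overall")
```

The last stage gives (80 − 20) / 80 = 75%, which matches the overall dimensionality reduction quoted in the conclusions.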

The framework’s four complementary modules each contribute to performance. Ablation experiments evaluated each module by removing one at a time while keeping the rest. Removing the chaotic swarm feature selector reduced feature reduction efficacy by 18% because the model converged early on redundant subsets. Without the quantum diffusion optimizer, probabilistic selection was less refined, reducing classification accuracy by 9%. Without transformer-guided ranking, false positives rose by 1.8%, indicating weaker prioritization of discriminative features. Lastly, removing multi-agent reinforcement learning slowed convergence and reduced flexibility across datasets, increasing selection time by 12%. The combined design is effective because each component contributes to generalization, robustness, and computational efficiency.

Table 10 summarizes the results across the NSL-KDD dataset as a representative case, with similar trends observed for CICIDS2017 and UNSW-NB15.

Excluding the CNS-SFS module degraded feature reduction and accuracy the most, showing its value in preventing premature convergence during optimization. Removing HQDFO, and with it the probabilistic quantum refinement, reduced classification accuracy by roughly 2%. Without T-CAS, false positives increased by 1.5%, underscoring the role of transformer-guided prioritization of discriminative features. Eliminating MA-QRFS slowed convergence, increased selection time by 1.1 s, and reduced flexibility across traffic patterns. These results show that all four modules work together to balance IoT intrusion detection accuracy and efficiency.
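The leave-one-out ablation procedure described above follows a simple pattern, sketched below. The `run_ablation` helper and the `toy_evaluate` stand-in are hypothetical: `toy_evaluate` returns a placeholder score that only counts active modules and does not reproduce any result from the paper. In practice it would be replaced by a full train-and-test run of the pipeline.

```python
from typing import Callable, Dict, FrozenSet, Sequence

MODULES = ("CNS-SFS", "HQDFO", "T-CAS", "MA-QRFS")

Metrics = Dict[str, float]


def run_ablation(
    modules: Sequence[str], evaluate: Callable[[FrozenSet[str]], Metrics]
) -> Dict[str, Metrics]:
    """Remove one module at a time and report metric changes vs. the full model."""
    full = frozenset(modules)
    baseline = evaluate(full)

    report: Dict[str, Metrics] = {}
    for module in modules:
        ablated = evaluate(full - {module})
        # Negative delta: the metric dropped when this module was removed.
        report[module] = {key: ablated[key] - baseline[key] for key in baseline}
    return report


def toy_evaluate(active: FrozenSet[str]) -> Metrics:
    """Placeholder metric (assumption): the fraction of modules that are active."""
    return {"score": len(active) / len(MODULES)}


for module, deltas in run_ablation(MODULES, toy_evaluate).items():
    print(f"without {module}: score change {deltas['score']:+.2f}")
```

Keeping the evaluation function separate from the loop makes it straightforward to repeat the same ablation on each dataset.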

The QGATC module applies graph-based classification using a quantum-enhanced attention-weighting process.

Table 11 presents the classification performance metrics obtained using QGATC.

The QGATC module achieves the highest classification accuracy, outpacing conventional deep learning models by a wide margin. Table 12 shows the final results of combining the optimized feature set with the graph-based classification method for IoT intrusion detection.

The final intrusion detection system thus attains a very high detection rate (above 98.7%) with a very low false positive rate (1.1–1.4%), indicating high reliability and robustness in real-world IoT security applications. The tables presented here trace the end-to-end feature selection and classification procedure and show the effectiveness of the proposed framework in eliminating redundant features, optimizing selection, and enhancing classification accuracy. The multi-stage feature optimization, which leverages chaotic search, quantum mechanics, and reinforcement learning, delivers high detection efficiency with minimal computational overhead. The final results indicate that the proposed Quantum-Driven Chaos-Informed Deep Learning Framework exceeds the best current intrusion detection systems and is therefore very promising for real-time IoT security applications.
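Detection rate and false positive rate follow the usual confusion-matrix definitions: detection rate is TP / (TP + FN) and false positive rate is FP / (FP + TN). The sketch below computes both from predicted and ground-truth labels. The function name, the label strings, and the sample predictions are assumptions for illustration, not data from the evaluation.

```python
from typing import Dict, Sequence


def detection_metrics(
    y_true: Sequence[str], y_pred: Sequence[str], positive: str = "attack"
) -> Dict[str, float]:
    """Compute detection rate, false positive rate, and accuracy for a binary IDS."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")

    tp = fp = tn = fn = 0
    for truth, guess in zip(y_true, y_pred):
        if truth == positive:
            if guess == positive:
                tp += 1  # attack correctly flagged
            else:
                fn += 1  # attack missed
        else:
            if guess == positive:
                fp += 1  # benign traffic wrongly flagged
            else:
                tn += 1  # benign traffic correctly passed

    if tp + fn == 0 or fp + tn == 0:
        raise ValueError("both attack and benign samples are required")

    return {
        "detection_rate": tp / (tp + fn),
        "false_positive_rate": fp / (fp + tn),
        "accuracy": (tp + tn) / len(y_true),
    }


# Hypothetical predictions for eight flows.
truth = ["attack"] * 3 + ["benign"] * 5
predicted = ["attack", "attack", "benign", "attack", "benign", "benign", "benign", "benign"]
print(detection_metrics(truth, predicted))
```

For the multi-class setting in the text (DoS, Botnet, Exploits, Brute Force), the same counts can be taken per class by treating each attack class in turn as the positive label.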

Rather than avoiding local optima in deep networks, which are less affected by such considerations, the suggested technique targets redundancy, feature dependency, and feature selection overhead. Classic deep learning IDSs that operate on raw or high-dimensional features require long training times and substantial memory. Chaotic swarm intelligence and quantum diffusion retain only the most useful features before classification, halving training complexity. Real-world IoT IDSs must retrain deep models to respond to changing threat signatures and network behaviors, which makes a reduced feature space and lower computational expense necessary. For edge-level deployments, feature optimization and quantum-modulated graph attention improve generalization under limited retraining and keep inference latency low.

5. Conclusions and Future Scope

In varied IoT scenarios, quantum-driven, chaos-informed deep learning intrusion detection is effective and scalable. Combined in a single architecture, chaotic swarm optimization, quantum diffusion refinement, transformer-guided ranking, and multi-agent reinforcement learning reduce feature dimensionality by 75% and computational overhead by 40% while improving classification accuracy by 4% compared to strong baselines. Beyond these absolute measurements, the modular structure adapts to IoT threats, including denial-of-service, botnet-driven DDoS, reconnaissance, brute-force, and advanced exploits. The architecture suits edge or fog gateways, where constrained IoT nodes need centralized, lightweight protection.

In the ablation experiments, each component improved performance, and removing any module cost precision and efficiency. Chaotic swarm intelligence for global exploration, quantum diffusion for probabilistic refinement, transformer-guided ranking for discriminative prioritization, and reinforcement learning for adaptive optimization are therefore complementary. The proposed model outperforms GAN-based feature selectors, graph neural IDSs, and hybrid reinforcement learners in detection accuracy and computational efficiency, which is essential for real-time IoT security.

Future work includes federated learning for privacy, distributed intrusion detection, and adversarial defense against evasion and poisoning. Continuous adaptive learning could help reinforcement agents adapt to shifting threats, and hybrid quantum–classical computation could speed up feature optimization. Explainable AI tools would help analysts understand the quantum graph attention mechanisms. Extending the framework to 5G-enabled IoT, SDN, and other IoT domains is another way to demonstrate its versatility in next-generation cybersecurity systems.