Edge or Cloud Architecture: The Applicability of New Data Processing Methods in Large-Scale Poultry Farming

Abstract

1. Introduction

2. Related Works

3. Materials and Methods

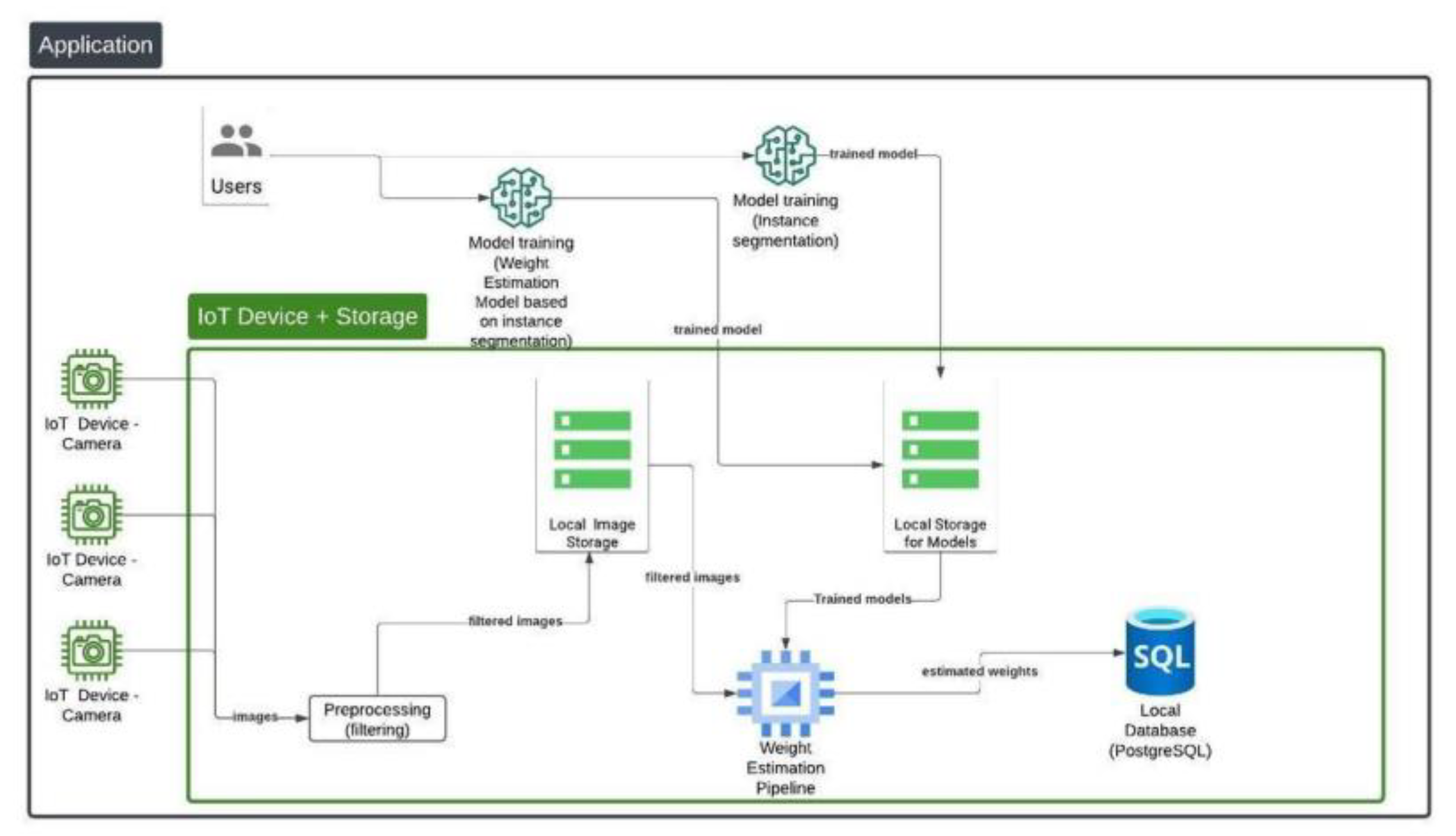

3.1. Edge-Based Infrastructure

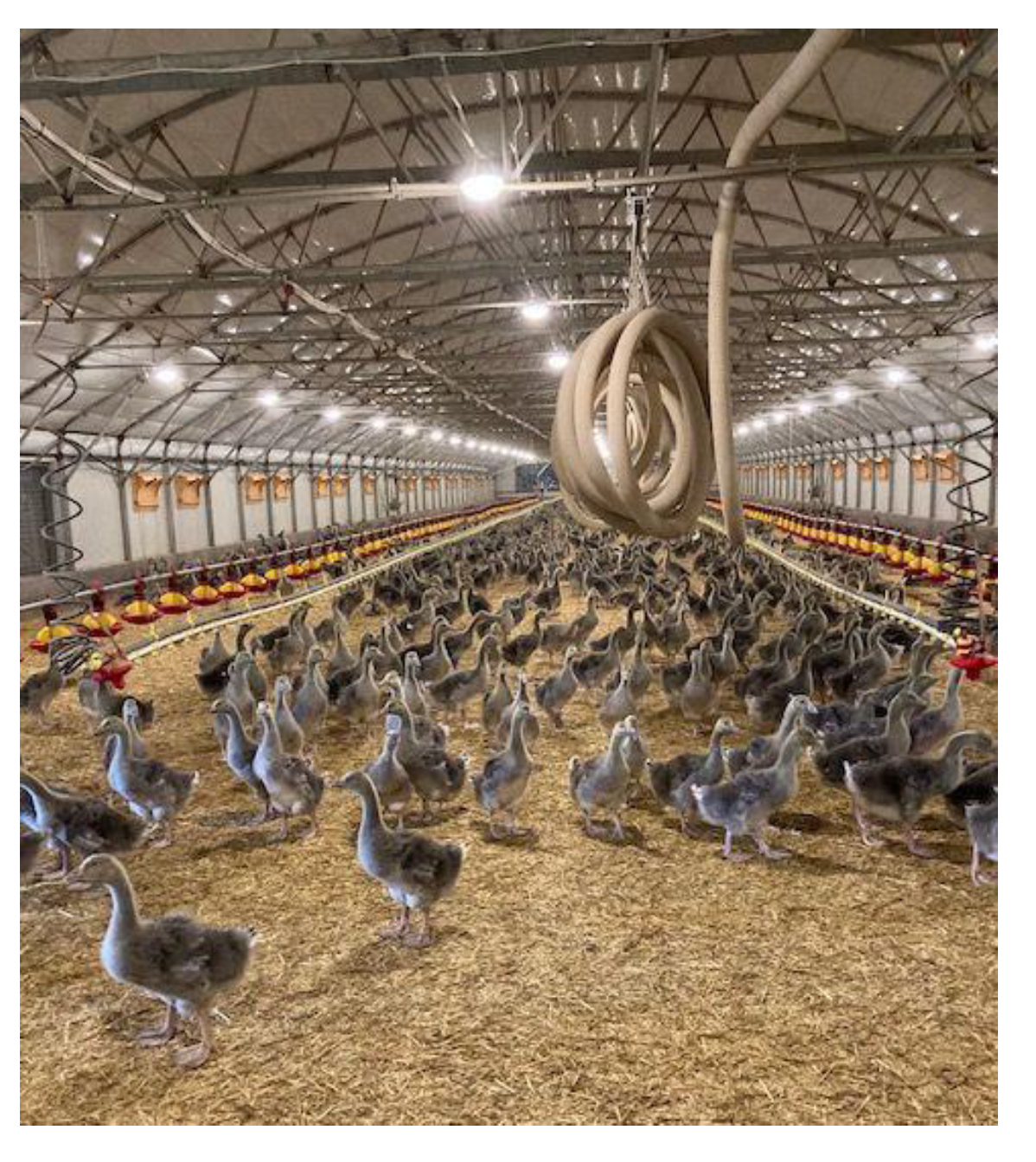

3.1.1. Dataset

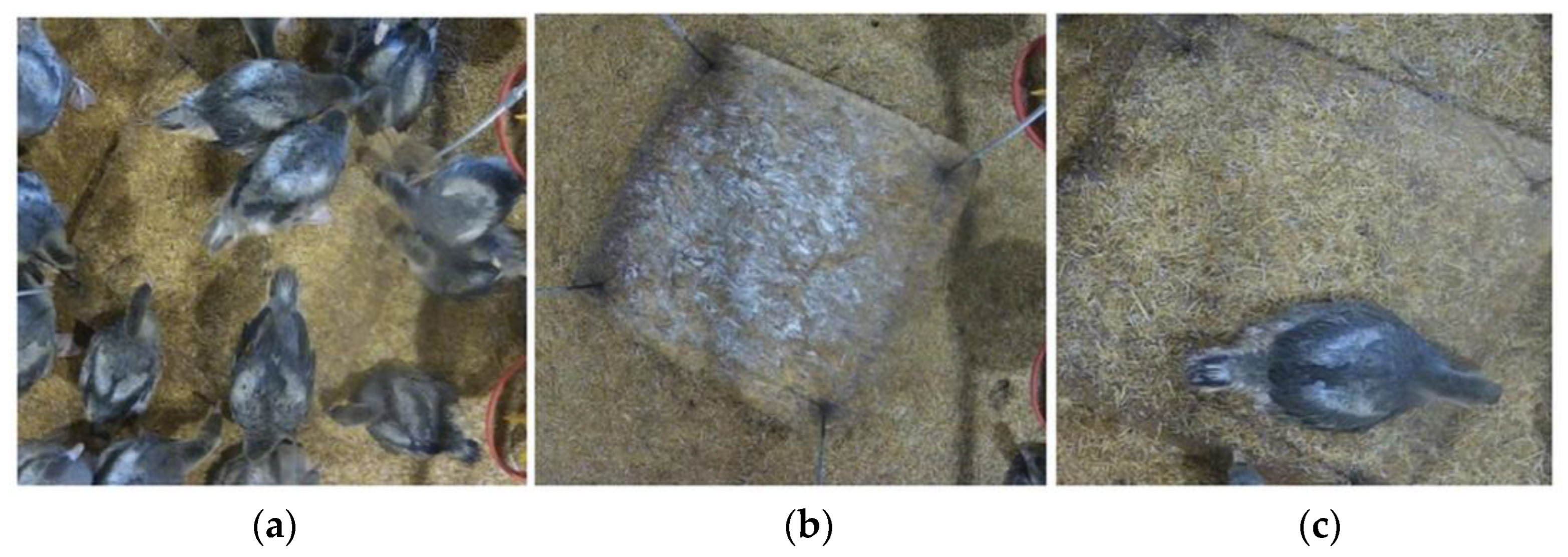

3.1.2. Preparation of Mask Dataset

3.1.3. Preparation of Key Point Dataset

3.1.4. Feature Extraction

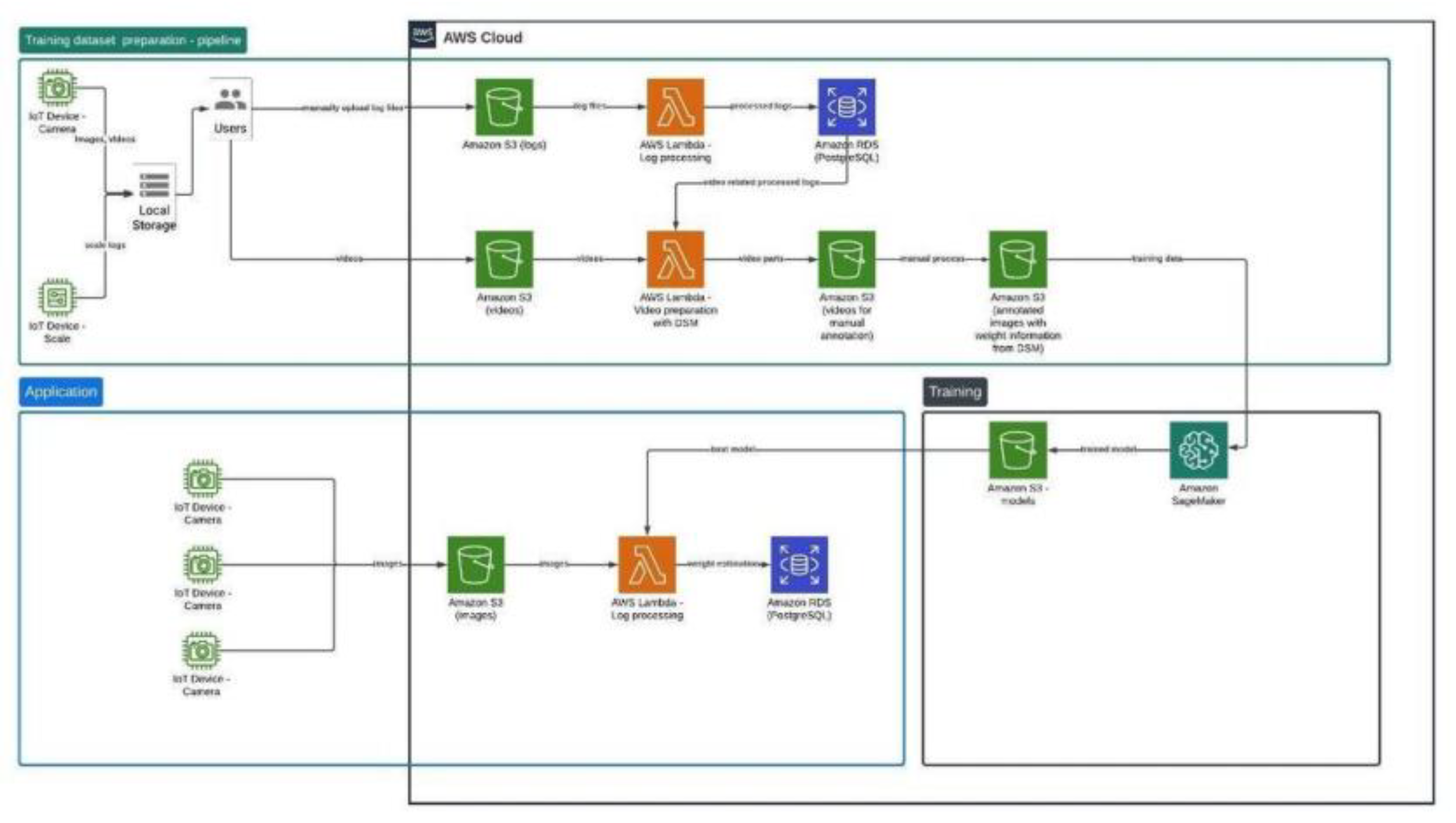

3.2. Cloud-Based Solution

3.2.1. AWS Infrastructure

3.2.2. Dataset

4. Results

4.1. Edge-Based Results

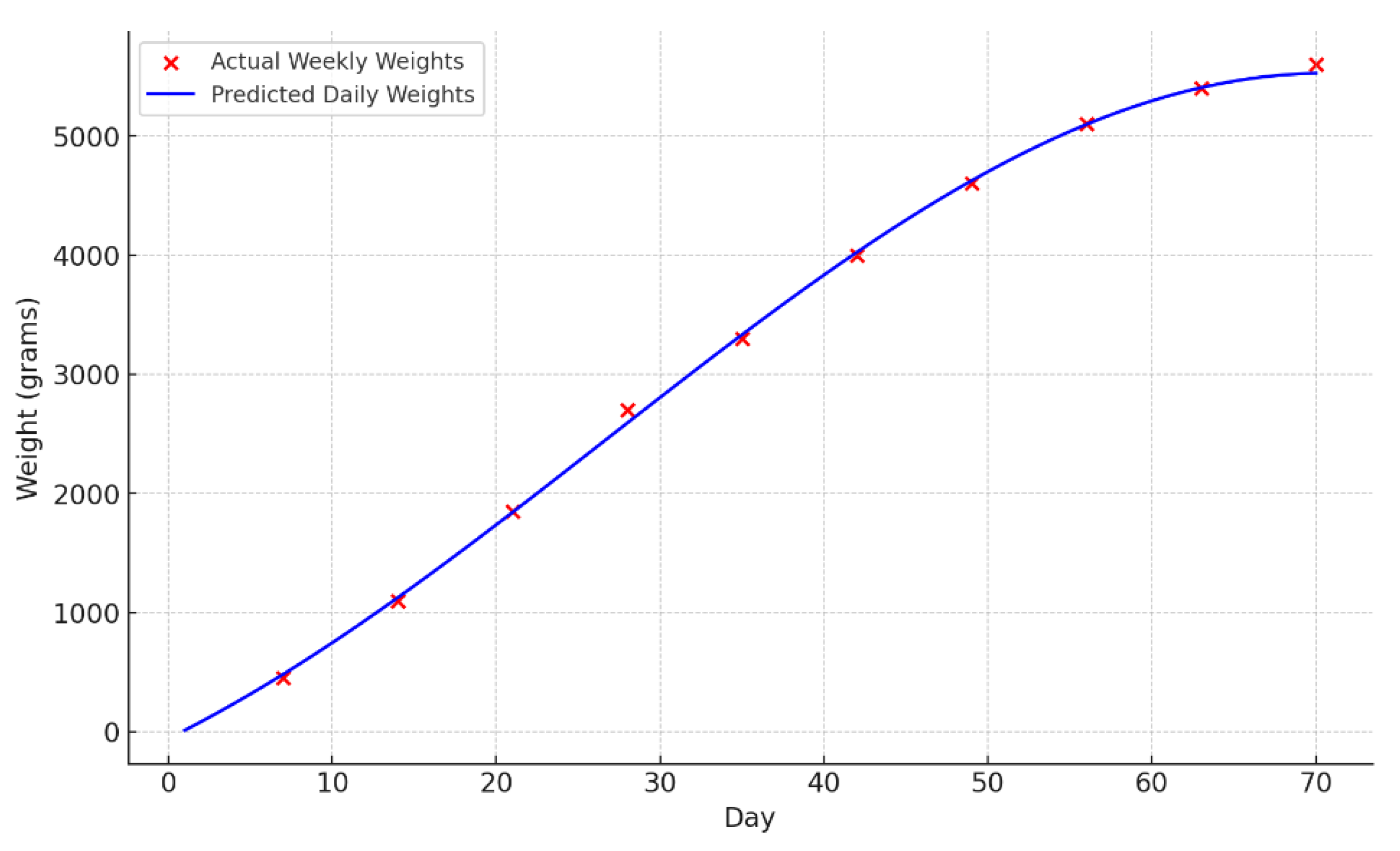

4.2. Cloud-Based Solution

5. Discussion

6. Future Works

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest



© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Toth, G.; Szabo, S.; Haidegger, T.; Alexy, M. Edge or Cloud Architecture: The Applicability of New Data Processing Methods in Large-Scale Poultry Farming. Technologies 2025, 13, 17. https://doi.org/10.3390/technologies13010017
